Featured article of the week: December 19–25: "Fueling clinical and translational research in Appalachia: Informatics platform approach"

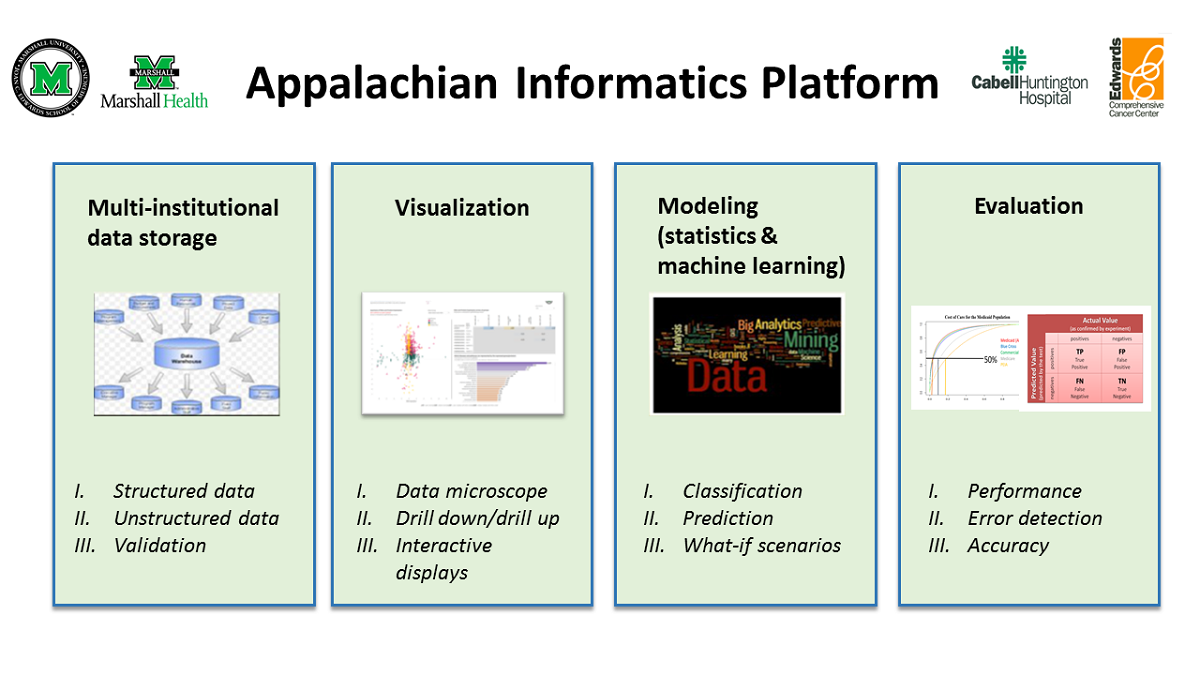

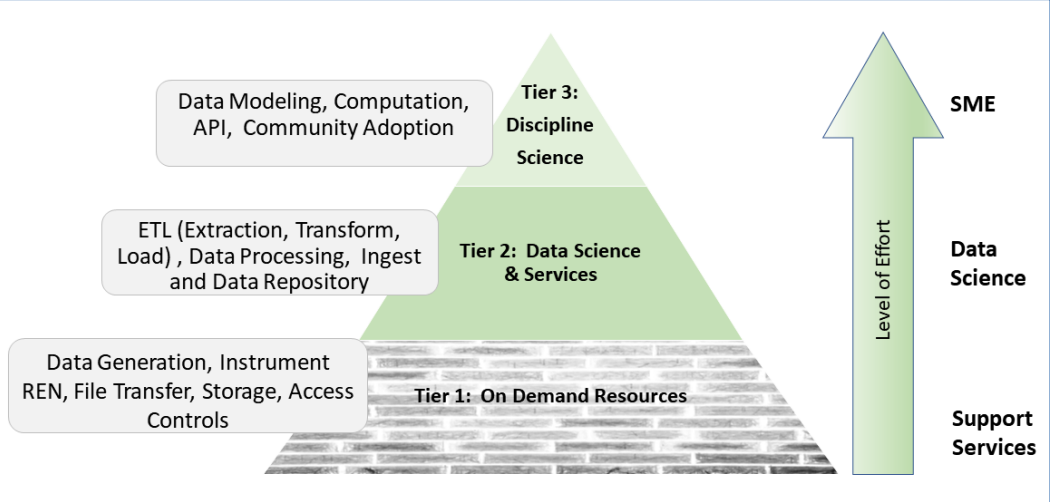

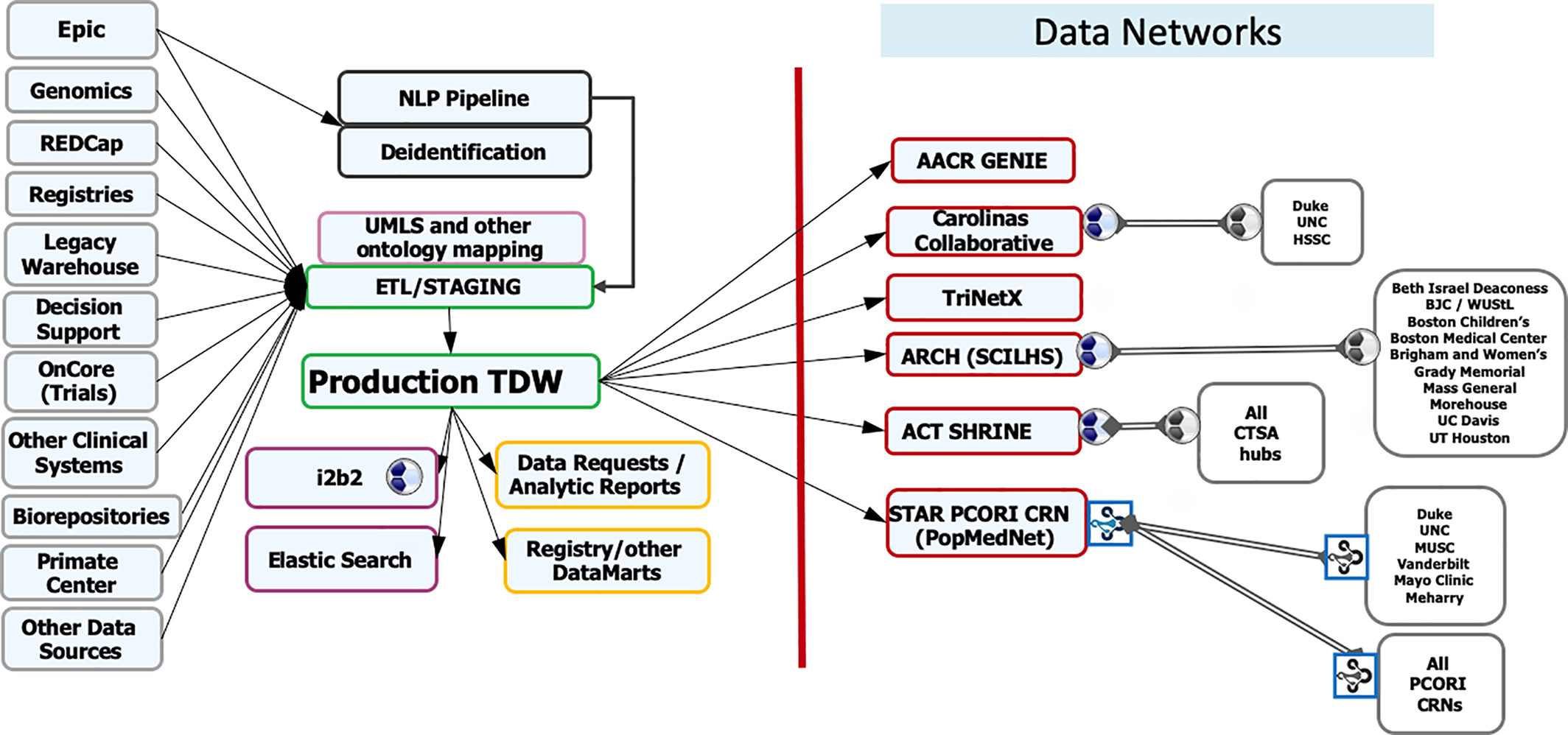

The Appalachian population is distinct, not just culturally and geographically but also in its healthcare needs, facing the most health care disparities in the United States. To meet these unique demands, Appalachian medical centers need an arsenal of analytics and data science tools with the foundation of a centralized data warehouse to transform healthcare data into actionable clinical interventions. However, this is an especially challenging task given the fragmented state of medical data within Appalachia and the need for integration of other types of data such as environmental, social, and economic with medical data. This paper aims to present the structure and process of the development of an integrated platform at a midlevel Appalachian academic medical center, along with its initial uses ... (Full article...)

Featured article of the week: December 12–18:

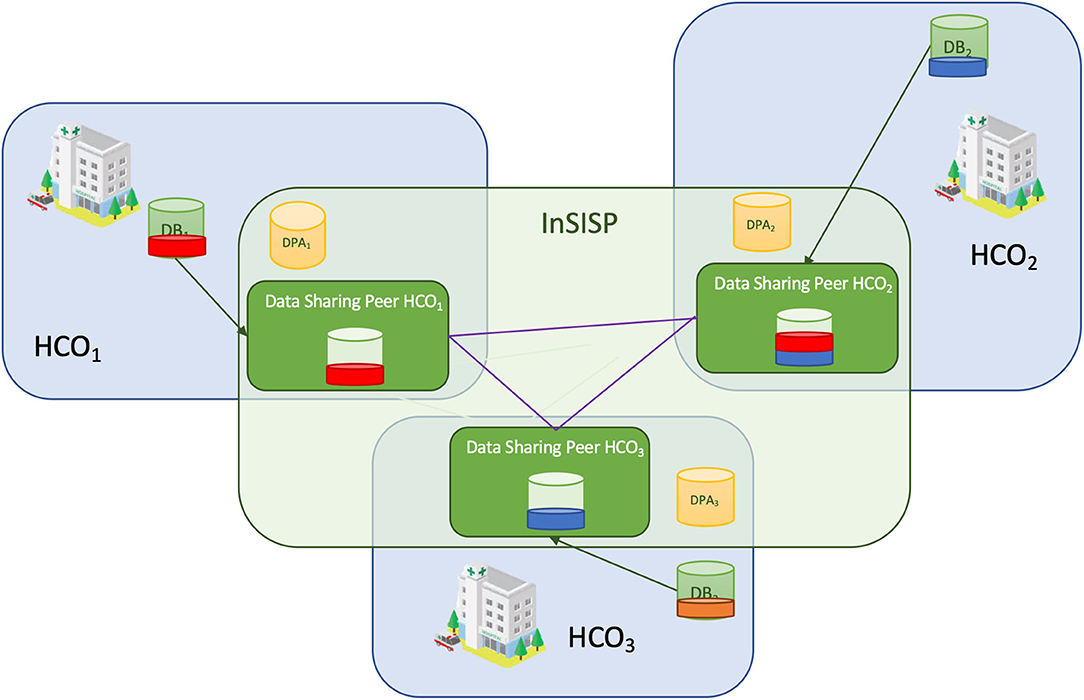

"Using knowledge graph structures for semantic interoperability in electronic health records data exchanges"

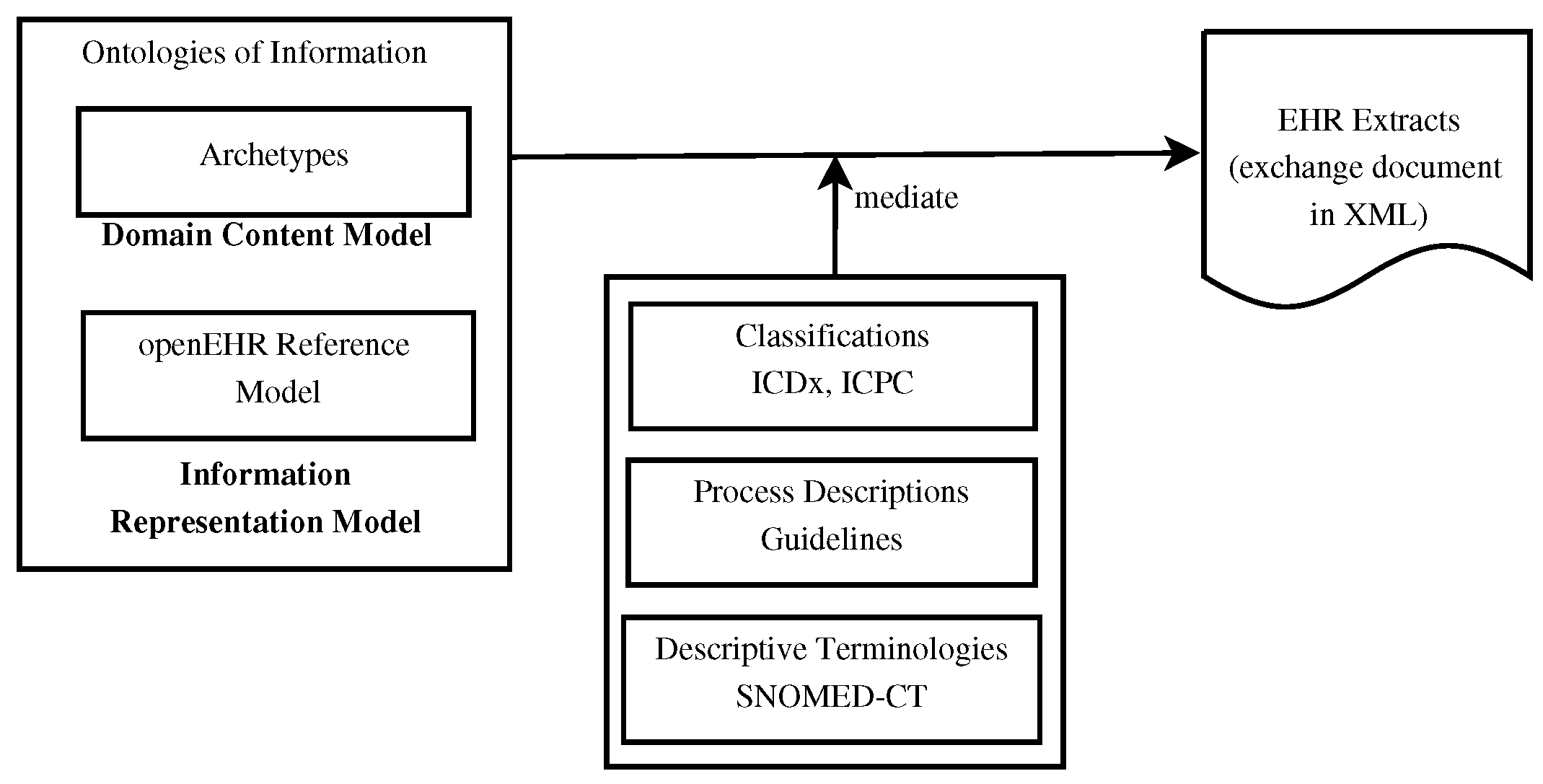

Information sharing across medical institutions is restricted to information exchange between specific partners. The lifelong electronic health record (EHR) structure and content require standardization efforts. Existing standards such as openEHR, Health Level 7 (HL7), and ISO/EN 13606 aim to achieve data independence along with semantic interoperability. This study aims to discover knowledge representation to achieve semantic health data exchange. openEHR and ISO/EN 13606 use archetype-based technology for semantic interoperability. The HL7 Clinical Document Architecture is on its way to adopting this through HL7 templates. Archetypes are the basis for knowledge-based systems, as these are means to define clinical knowledge. The paper examines a set of formalisms for the suitability of describing, representing, and reasoning about archetypes. Each of the information exchange technologies—such as XML, Web Ontology Language (OWL), Object Constraint Language (OCL), and Knowledge Interchange Format (KIF)—is evaluated as a part of the knowledge representation experiment. These examine the representation of archetypes as described by Archetype Definition Language (ADL) ... (Full article...)


Featured article of the week: December 05–11:

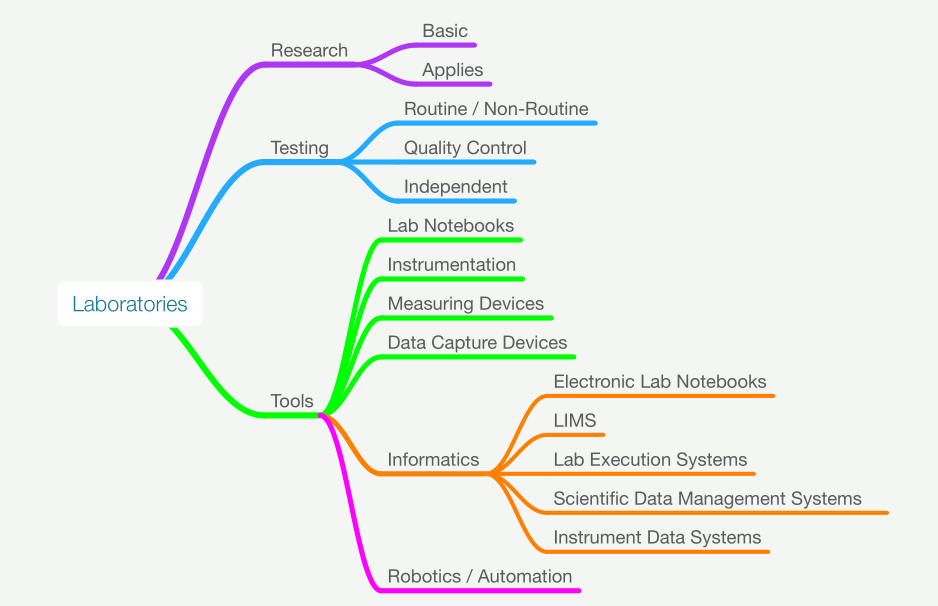

"Development of a smart laboratory information management system: A case study of NM-AIST Arusha of Tanzania"

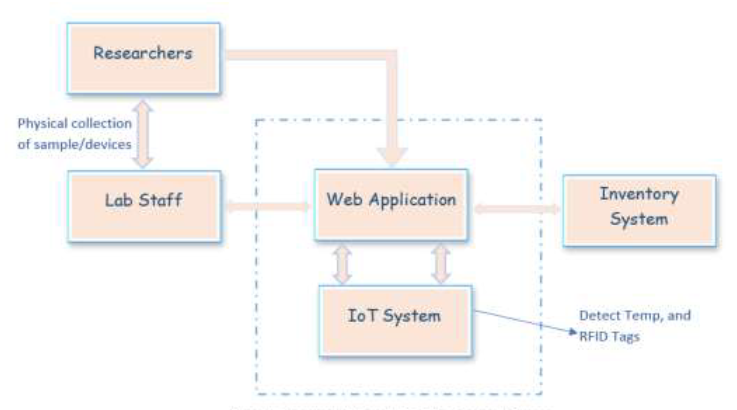

Testing laboratories in higher learning institutions of science, technology, and engineering are used by institutional staff, researchers, and external stakeholders in conducting research experiments, sample analysis, and result dissemination. However, there exists a challenge in the management of laboratory operations and processing of laboratory-based data. Operations carried out in the laboratory at Nelson Mandela African Institution of Science and Technology (NM-AIST), in Arusha, Tanzania—where this case study was carried out—are paper-based. There is no automated way of sample registration and identification, and researchers are prone to making errors when handling sensitive reagents. Users have to physically visit the laboratory to enquire about available equipment or reagents before borrowing or reserving those resources. Additionally, paper-based forms have to be filled out and handed to the laboratory manager for approval ... (Full article...)


Featured article of the week: November 28–December 04:

"Health informatics: Engaging modern healthcare units: A brief overview"

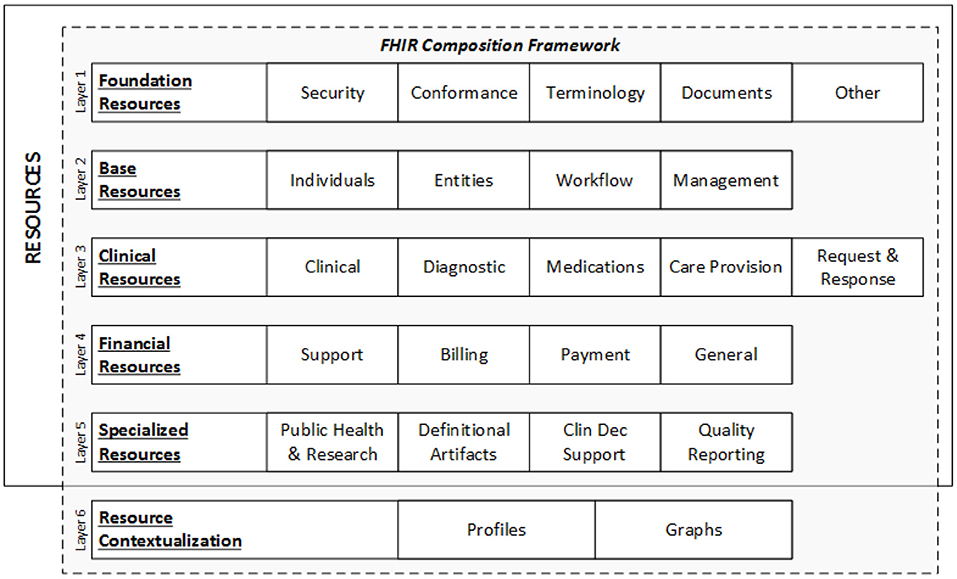

With a large amount of unstructured data finding its way into health systems, health informatics implementations are currently gaining traction, allowing healthcare units to leverage and make meaningful insights for doctors and decision makers using relevant information to scale operations and predict the future view of treatments via information systems communication. Now, around the world, massive amounts of data are being collected and analyzed for better patient diagnosis and treatment, improving public health systems and assisting government agencies in designing and implementing public health policies, while also instilling confidence in future generations who want to use better public health systems. This article provides an overview of the Health Level 7 FHIR architecture ... (Full article...)


Featured article of the week: November 21–27:

"A roadmap for LIMS at NIST Material Measurement Laboratory"

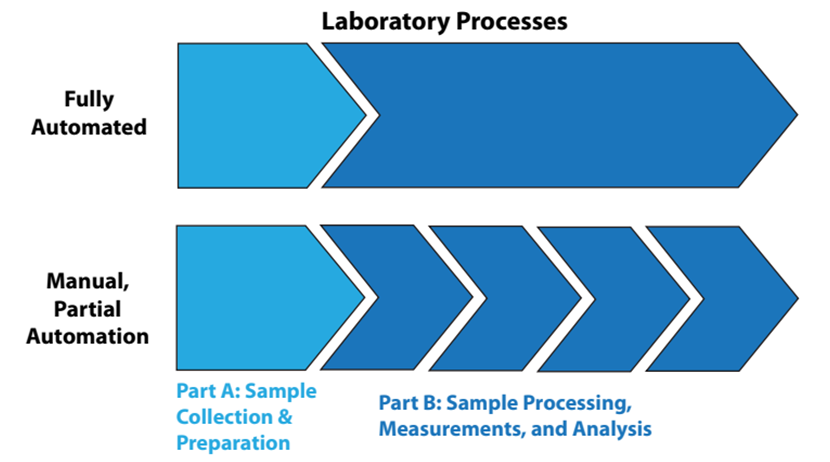

Over the past decade, emerging technology in laboratory and computational science has changed the landscape for research by accelerating the production, processing, and exchange of data. The NIST Material Measurement Laboratory community recognizes that to keep pace with the transformation of measurement science to a digital paradigm, it is essential to implement laboratory information management systems (LIMS). Effective introduction of LIMS early in the research life cycle provides direct support for planning and execution of experiments and accelerating research productivity. From this perspective, LIMS are not passive entities with isolated interaction, but rather key resources supporting collaboration, scientific integrity, and transfer of knowledge over time. They serve as a delivery system for organizational contributions to the broader federated data community, supporting both controlled and open access, determined by the sensitivity of the research ... (Full article...)


Featured article of the week: November 14–20:

"A model for design and implementation of a laboratory information management system specific to molecular pathology laboratory operations"

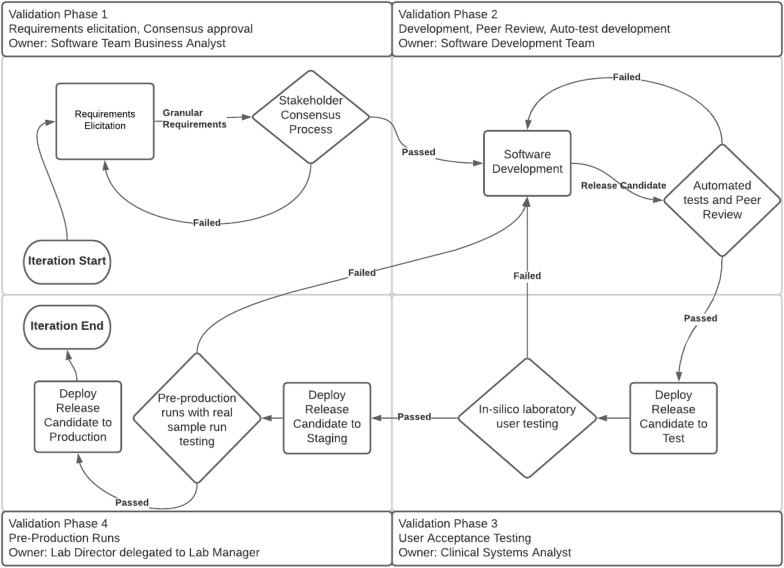

The Molecular Pathology Section of Cleveland Clinic (Cleveland, OH) has undergone enhancement of its testing portfolio and processes. An electronic- and paper-based data management system was replaced with a commercially available laboratory information management system (LIMS) solution, a separate bioinformatics platform, customized test-interpretation applications, a dedicated accessioning service, and a results-releasing solution. The LIMS solution manages complex workflows, large-scale data packets, and process automation. However, a customized approach was required for the LIMS since a survey of commercially available off-the-shelf (COTS) software solutions revealed none met the diverse and complex needs of Cleveland Clinic's molecular pathology service ... (Full article...)


Featured article of the week: November 7–13:

"DigiPatICS: Digital pathology transformation of the Catalan Health Institute network of eight hospitals - Planning, implementation, and preliminary results"

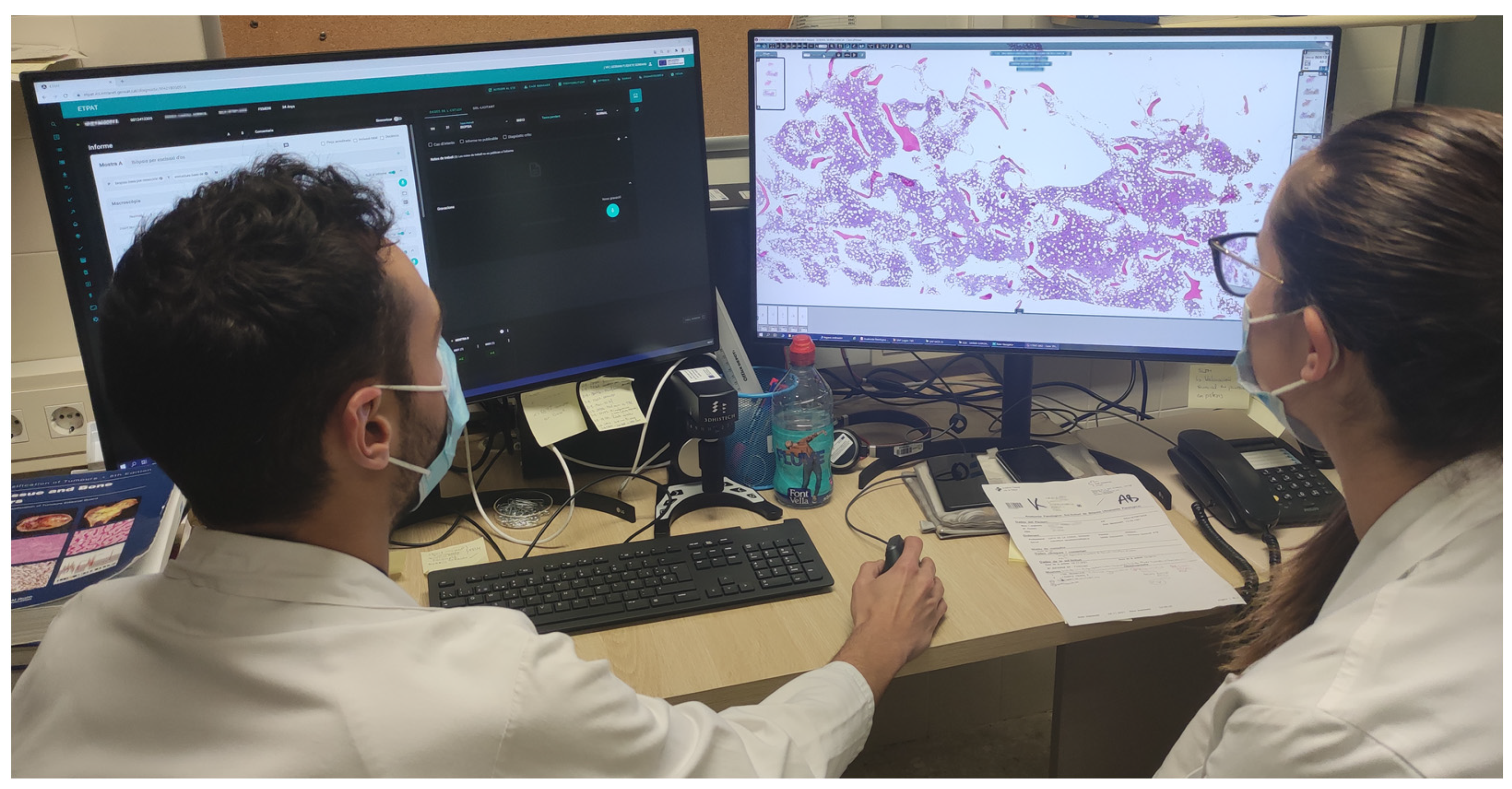

Complete digital pathology transformation for primary histopathological diagnosis is a challenging yet rewarding endeavor. Its advantages are clear with more efficient workflows, but there are many technical and functional difficulties to be faced. The Catalan Health Institute (Institut Català de la Salut or ICS) has started its DigiPatICS project, aiming to deploy digital pathology in an integrative, holistic, and comprehensive way within a network of eight hospitals, over 168 pathologists, and over one million slides each year. We describe the bidding process and the careful planning that was required, followed by swift implementation in stages. The purpose of the DigiPatICS project is to increase patient safety and quality of care, improving diagnosis and the efficiency of processes in the pathological anatomy departments of the ICS through process improvement, digital pathology, and artificial intelligence (AI) tools ... (Full article...)


Featured article of the week: October 31–November 6:

"Structure-based knowledge acquisition from electronic lab notebooks for research data provenance documentation"

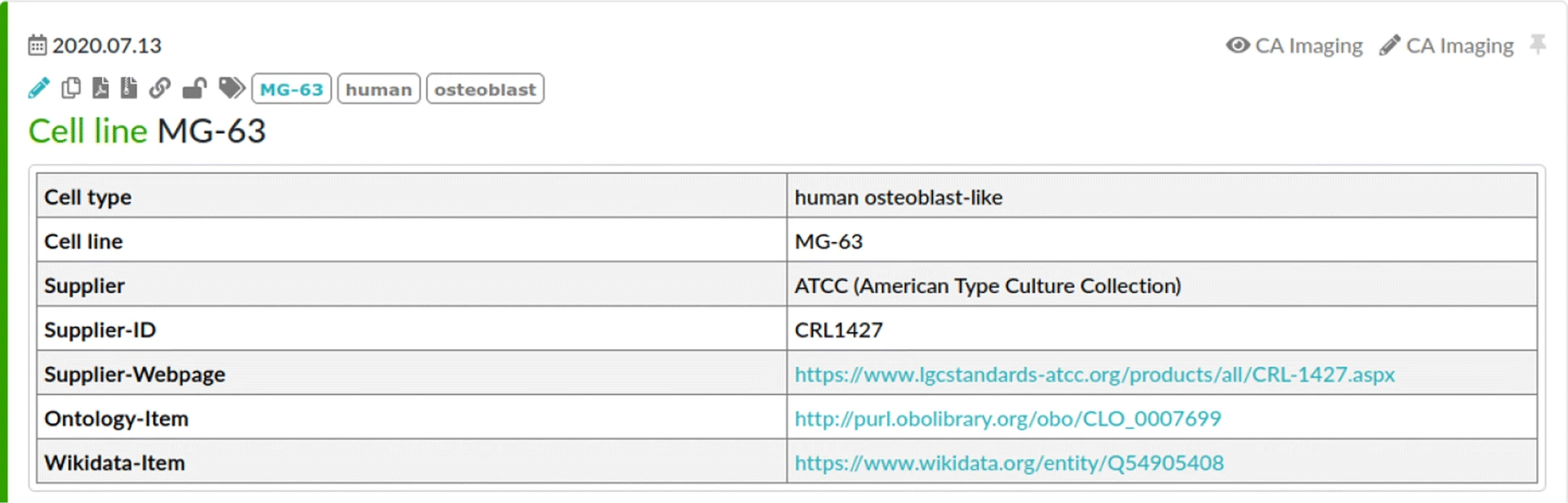

Electronic laboratory notebooks (ELNs) are used to document experiments and investigations in the wet lab. Protocols in ELNs contain a detailed description of the conducted steps, including the necessary information to understand the procedure and the raised research data, as well as to reproduce the research investigation. The purpose of this study is to investigate whether such ELN protocols can be used to create semantic documentation of the provenance of research data by the use of ontologies and linked data methodologies. Based on an ELN protocol of a biomedical wet lab experiment, a retrospective provenance model of the raised research data describing the details of the experiment in a machine-interpretable way is manually engineered. Furthermore, an automated approach for knowledge acquisition from ELN protocols is derived from these results. This structure-based approach exploits the structure in the experiment’s description—such as headings, tables, and links—to translate the ELN protocol into a semantic knowledge representation ... (Full article...)


Featured article of the week: October 24–30:

"Food informatics: Review of the current state-of-the-art, revised definition, and classification into the research landscape"

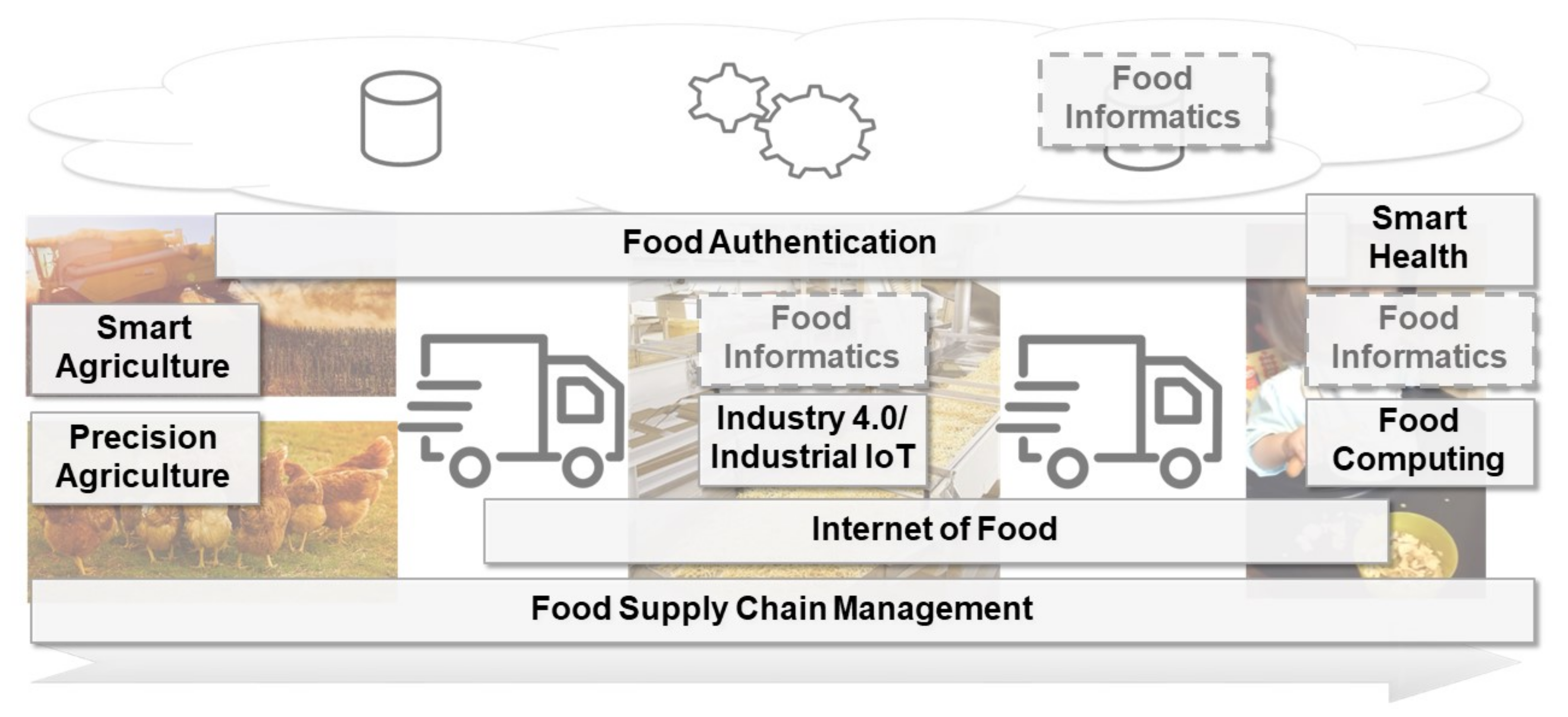

The increasing population of humans and their changing food consumption behavior, as well as the recent developments in the awareness for food sustainability, lead to new challenges for the production of food. Advances in the internet of things (IoT) and artificial intelligence (AI) technology, including machine learning and data analytics, might help to account for these challenges. Several research perspectives—among them precision agriculture, industrial IoT, internet of food, and smart health—already provide new opportunities through digitalization. In this paper, we review the current state-of-the-art of the mentioned concepts. An additional concept to address is food informatics, which so far is mostly recognized as a mainly data-driven approach to support the production of food. In this review paper, we propose and discuss a new perspective for the concept of food informatics as a supportive discipline ... (Full article...)


Featured article of the week: October 17–23:

"Creating learning health systems and the emerging role of biomedical informatics"

The nature of information used in medicine has changed. In the past, we were limited to routine clinical data and published clinical trials. Today, we deal with massive, multiple data streams and easy access to new tests, ideas, and capabilities to process them. Whereas in the past getting information for decision-making was a challenge, today's clinicians have readily available access to information and data through the multitude of data-collecting devices, though it remains a challenge at times to analyze, evaluate, and prioritize it. As such, clinicians must become adept with the tools needed to deal with the era of big data, requiring a major change in how we learn to make decisions. Major change is often met with resistance and questions about value. A "learning health system" (LHS) is an enabler to encourage the development of such tools and demonstrate value in improved decision-making ... (Full article...)


Featured article of the week: October 10–16:

"Planning for Disruptions in Laboratory Operations"

A high level of productivity is something laboratory management wants and those working for them strive to achieve. However, what happens when reality trips us up? We found out when COVID-19 appeared. This work from laboratory informatics veteran Joe Liscouski examines how laboratory operations can be organized to meet that disruption, as well as other disruptions we may have to face. Many of these changes, including the introduction of new technologies and changing attitudes about work, were in the making already but at a much slower pace. Over the years, productivity has had many measures, from 40- to 60-hour work weeks and piece-work to pounds of material processed to samples run, all of which come from a manufacturing mindset. People went to work in an office, lab, or production site, did their work, put in their time, and went home. That was in the timeframe leading up to the 1950s and '60s. Today, in 2022, things have changed ... (Full article...)


Featured article of the week: October 3–9:

"The current state of knowledge on imaging informatics: A survey among Spanish radiologists"

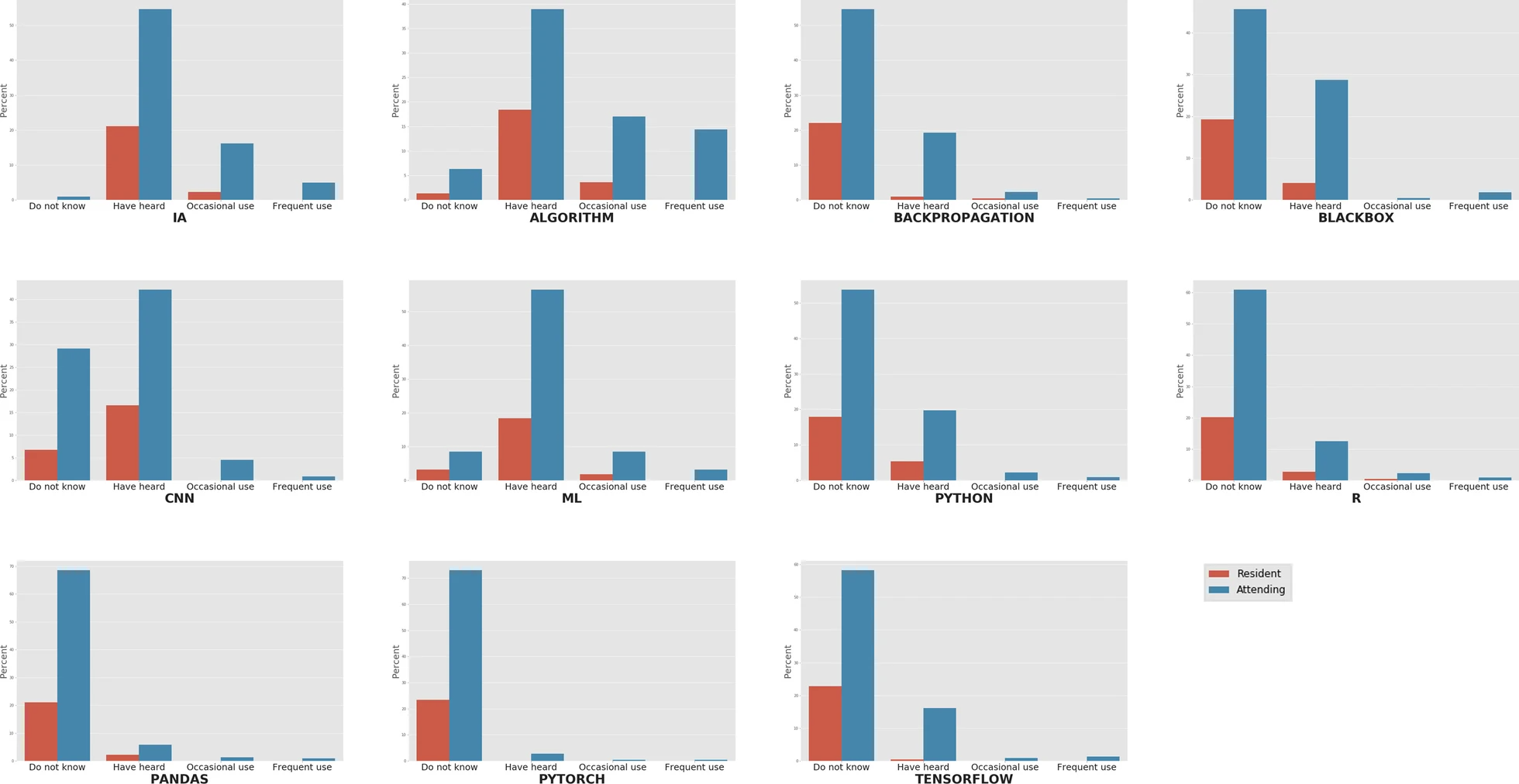

There is growing concern about the impact of artificial intelligence (AI) on radiology and the future of the profession. The aim of this study is to evaluate general knowledge and concerns about trends on imaging informatics among radiologists working in Spain (residents and attending physicians). For this purpose, an online survey among radiologists working in Spain was conducted with questions related to knowledge about terminology and technologies, need for a regulated academic training on AI, and concerns about the implications of the use of these technologies. A total of 223 radiologists answered the survey, of whom 23.3% were residents and 76.7% were attending physicians. General terms such as "AI" and "algorithm" had been heard of or read in at least 75.8% and 57.4% of the cases, respectively, while more specific terms were scarcely known. All the respondents considered that they should pursue academic training in medical informatics and new technologies, and 92.9% of them reckoned this preparation should be incorporated in the training program of the specialty ... (Full article...)


Featured article of the week: September 26–October 02:

"Emerging cybersecurity threats in radiation oncology"

Modern image-guided radiation therapy is dependent on information technology and data storage applications that, like any other digital technology, are at risk from cyberattacks. Owing to a recent escalation in cyberattacks affecting radiation therapy treatments, the American Society for Radiation Oncology's Advances in Radiation Oncology is inaugurating a new special manuscript category devoted to cybersecurity issues. We conducted a review of emerging cybersecurity threats and a literature review of cyberattacks that affected radiation oncology practices. In the last 10 years, numerous attacks have led to an interruption of radiation therapy for thousands of patients, and some of these catastrophic incidents have been described as being worse than coronavirus disease 2019's impact on healthcare centers in New Zealand ... (Full article...)


Featured article of the week: September 19–25:

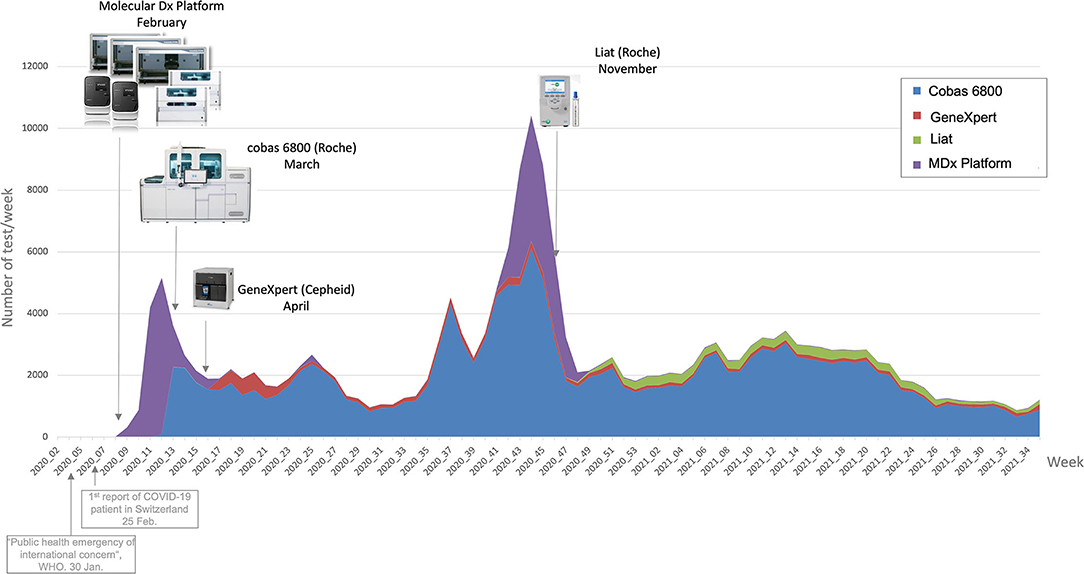

"An automated dashboard to improve laboratory COVID-19 diagnostics management"

In response to the COVID-19 pandemic, our microbial diagnostic laboratory located in a university hospital has implemented several distinct SARS-CoV-2 reverse transcription polymerase chain reaction (RT-PCR) systems in a very short time. More than 148,000 tests have been performed over 12 months, which represents about 405 tests per day, with peaks of more than 1,500 tests per day during the second wave. This was only possible thanks to automation and digitalization, which allowed high throughput and acceptable time to results while maintaining test reliability. An automated dashboard was developed to give access to key performance indicators (KPIs) to improve laboratory operational management. RT-PCR data extraction of four respiratory viruses—SARS-CoV-2, influenza A and B, and RSV—from our laboratory information system (LIS) was automated. This included ... (Full article...)


Featured article of the week: September 12–18:

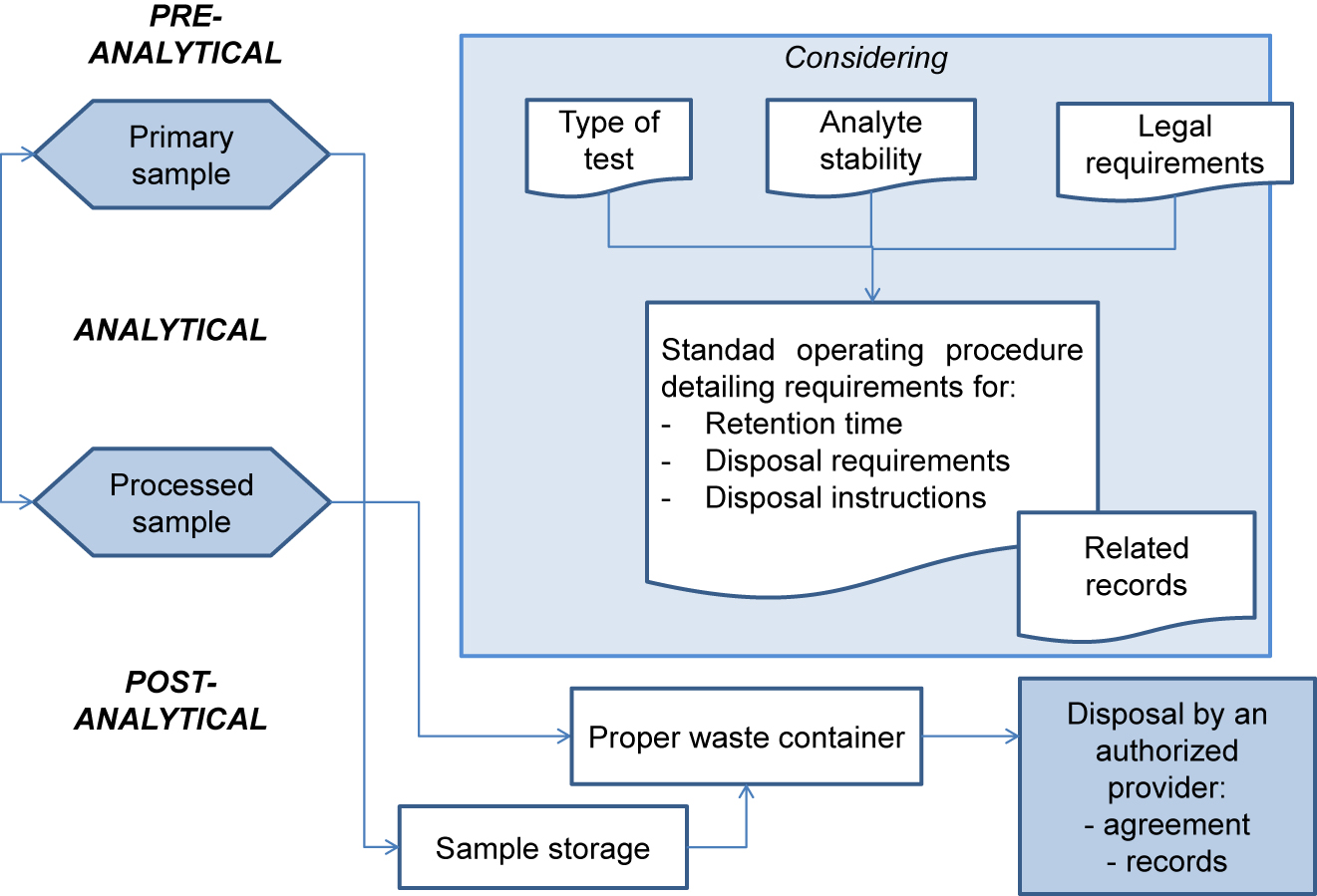

"Management of post-analytical processes in the clinical laboratory according to ISO 15189:2012: Considerations about the management of clinical samples, ensuring quality of post-analytical processes and laboratory information management"

ISO 15189:2012 Medical laboratories — Requirements for quality and competence establishes the requirements for clinical specimen management, ensuring the quality of processes and laboratory information management. ENAC (Entidad Nacional de Acreditación), the sole accreditation authority in Spain, established the requirements for the authorized use of the ISO 15189 accreditation label in reports issued by accredited laboratories. These recommendations are applicable to the lab's post-analytical processes and the professionals involved. The standard requires laboratories to define and document the duration and conditions of specimen retention. Laboratories are also required to design an internal quality control scheme to verify whether post-analytical activities attain the expected standards. Information management requirements are also established, and laboratories are required to design a contingency plan to ensure the communication of laboratory results ... (Full article...)


Featured article of the week: September 05–11:

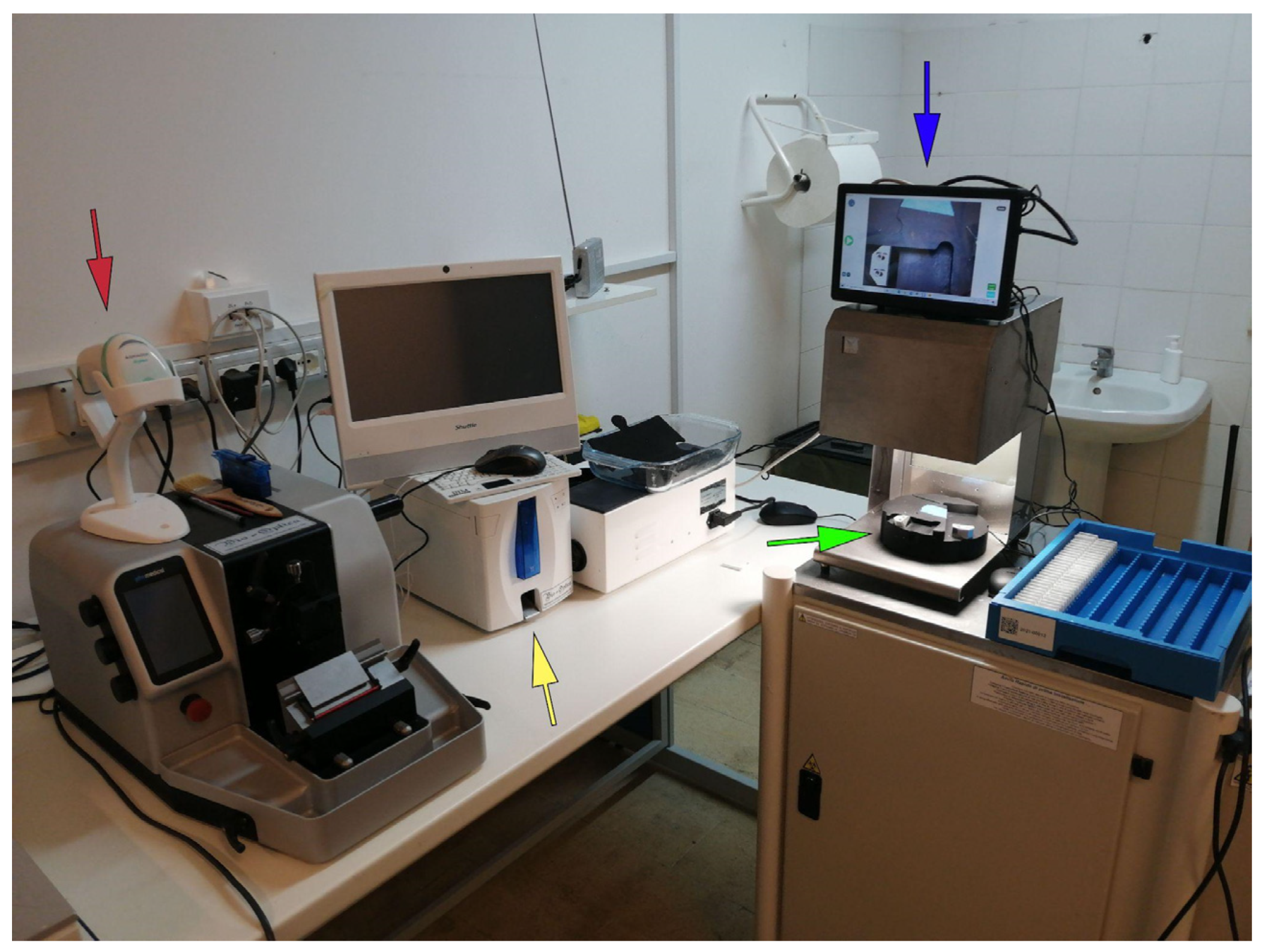

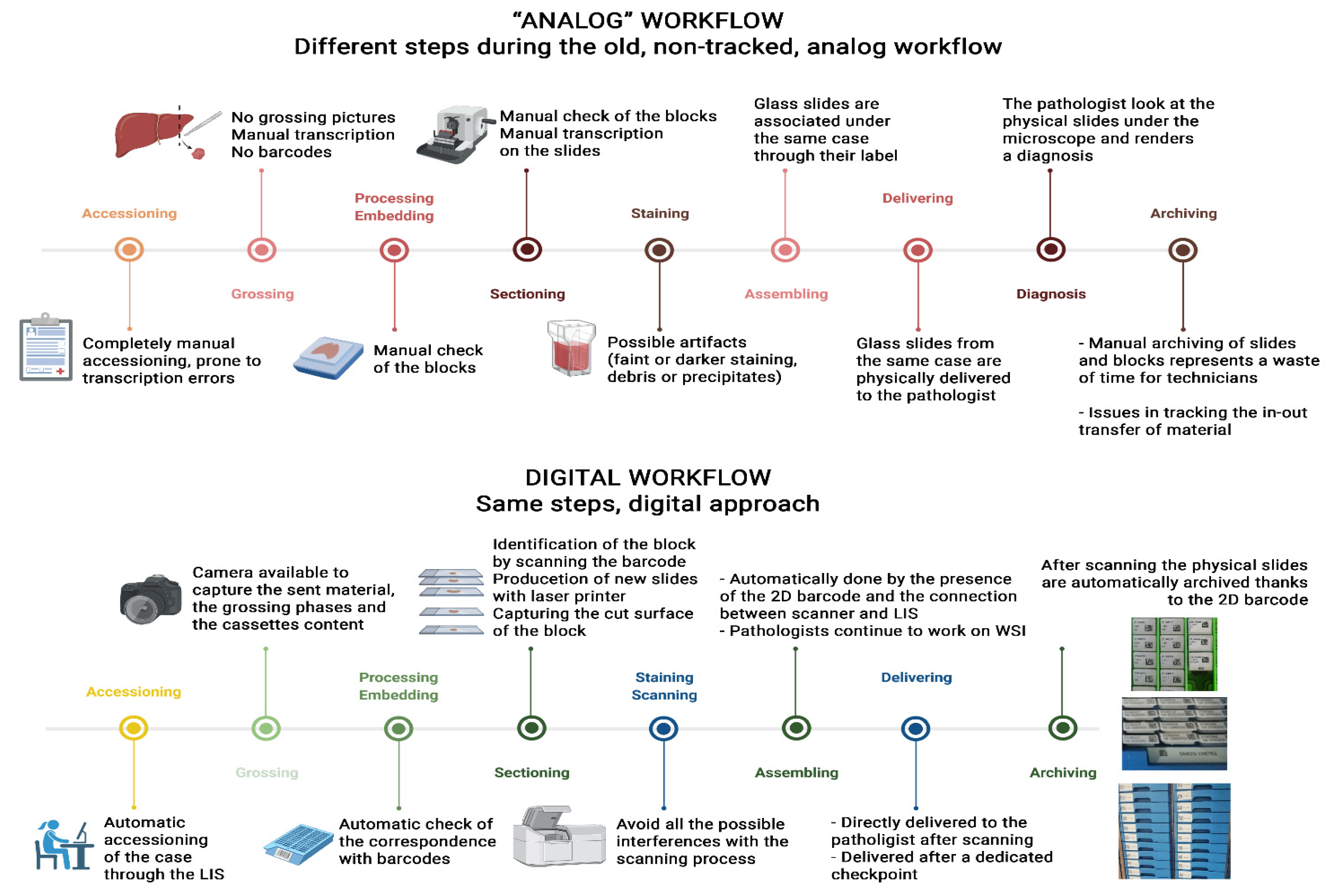

"A survival guide for the rapid transition to a fully digital workflow: The Caltagirone example"

Digital pathology for the routine assessment of cases for primary diagnosis has been implemented by few laboratories worldwide. The Gravina Hospital in Caltagirone (Sicily, Italy), which collects cases from seven different hospitals distributed in the Catania area, converted its entire workflow to digital starting from 2019. Before the transition, the Caltagirone pathology laboratory was characterized by a non-tracked workflow, based on paper requests, and hand-written blocks and slides, as well as manual assembling and delivering of the cases and glass slides to the pathologists. Moreover, the arrangement of the spaces and offices in the department was illogical and under-productive for the linearity of the workflow. For these reasons, an adequate 2D barcode system for tracking purposes, the redistribution of laboratory spaces, and the implementation of whole-slide imaging (WSI) technology based on a laboratory information system (LIS)-centric approach ... (Full article...)


Featured article of the week: August 29–September 04:

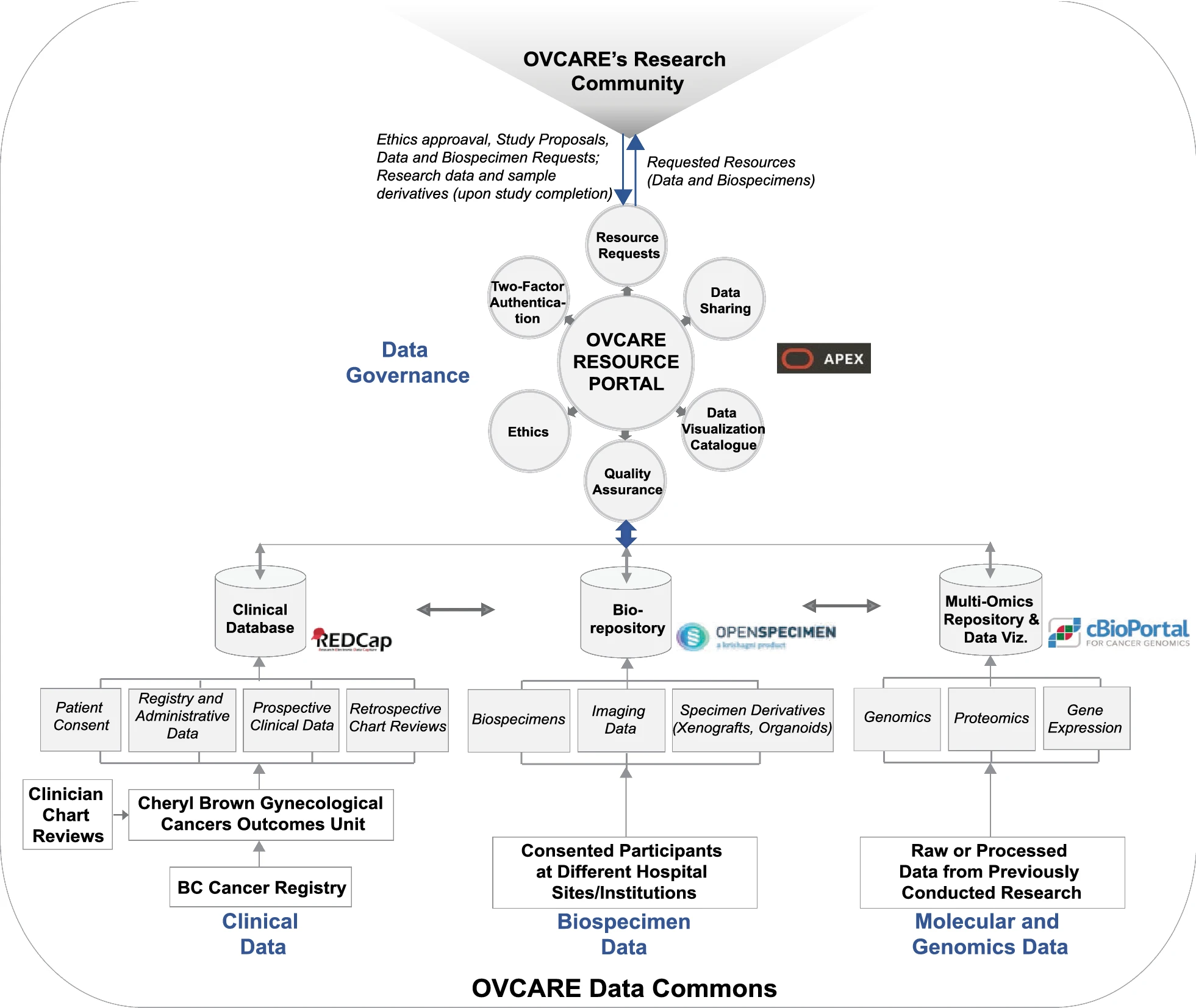

"From biobank and data silos into a data commons: Convergence to support translational medicine"

To drive translational medicine, modern day biobanks need to integrate with other sources of data (e.g., clinical, genomics) to support novel data-intensive research. Currently, vast amounts of research and clinical data remain in silos, held and managed by individual researchers, operating under different standards and governance structures; such a framework impedes sharing and effective use of data. In this article, we describe the journey of British Columbia’s Gynecological Cancer Research Program (OVCARE) in moving a traditional tumor biobank, outcomes unit, and a collection of data silos into an integrated data commons to support data standardization and resource sharing under collaborative governance, as a means of providing the gynecologic cancer research community in British Columbia access to tissue samples and associated clinical and molecular data from thousands of patients ... (Full article...)


Featured article of the week: August 15–21:

"Quality management system implementation in human and animal laboratories"

The ability to rapidly detect emerging and re-emerging threats relies on a strong network of laboratories providing high-quality testing services. Improving laboratory quality management systems (QMS) to ensure that these laboratories effectively play their critical role using a tailored stepwise approach can assist them to comply with World Health Organization's (WHO) International Health Regulations (IHRs), as well as the World Organization for Animal Health's (OIE) guidelines. Fifteen (15) laboratories in Armenia's human and veterinary laboratory networks were enrolled into a QMS strengthening program from 2017 to 2020. Training was provided for key staff, resulting in an implementation plan developed to address gaps. Routine mentorship visits were conducted. Audits were undertaken at baseline and post-implementation using standardized checklists to assess laboratory improvements ... (Full article...)


Featured article of the week: August 08–14:

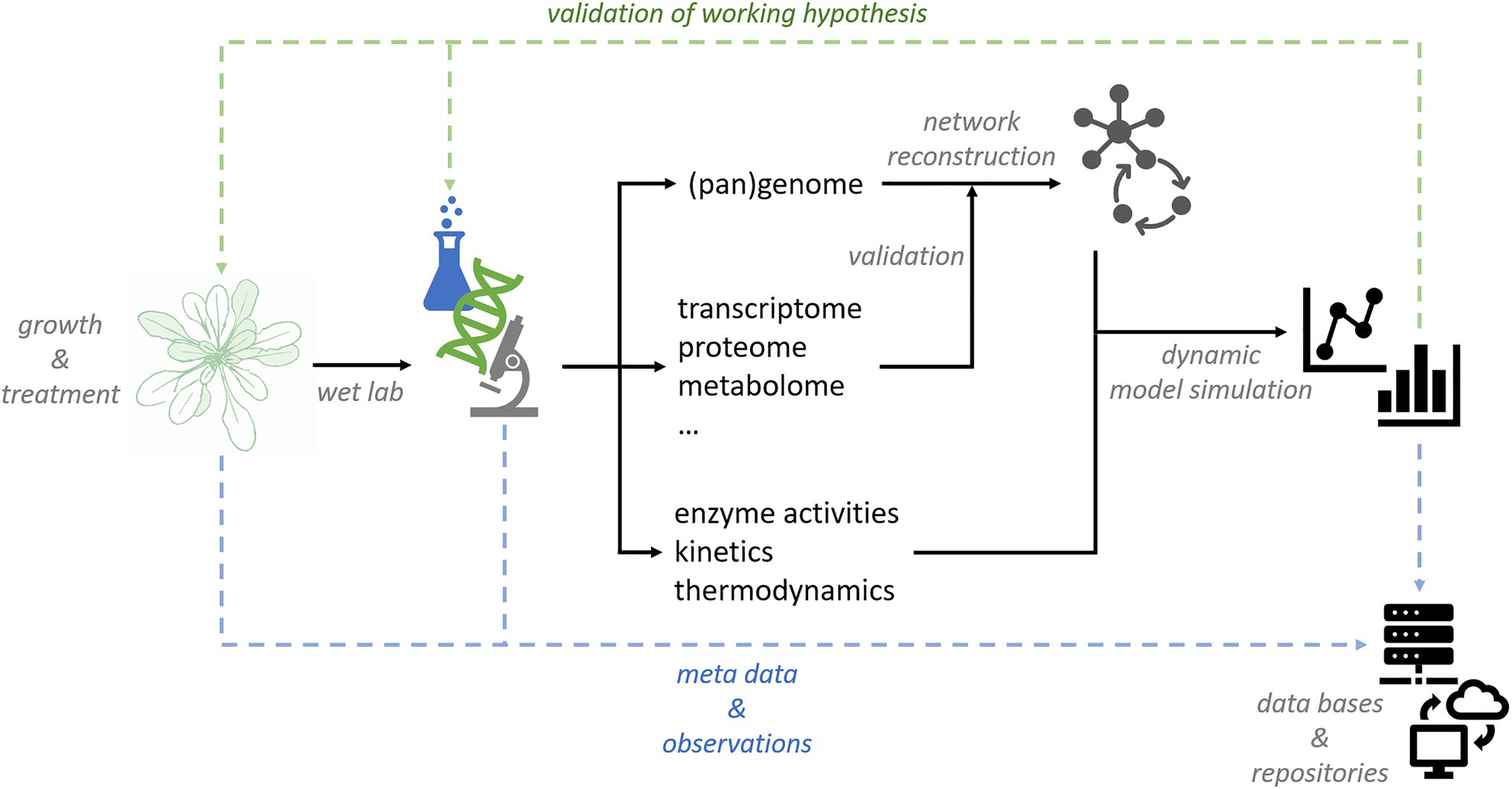

"Data management and modeling in plant biology"

The study of plant-environment interactions is a multidisciplinary research field. With the emergence of quantitative large-scale and high-throughput techniques, the amount and dimensionality of experimental data have strongly increased. Appropriate strategies for data storage, management, and evaluation are needed to make efficient use of experimental findings. Computational approaches to data mining are essential for deriving statistical trends and signatures contained in data matrices. Although current biology is challenged by high data dimensionality in general, this is particularly true for plant biology. As sessile organisms, plants have to cope with environmental fluctuations ... (Full article...)


Featured article of the week: August 01–07:

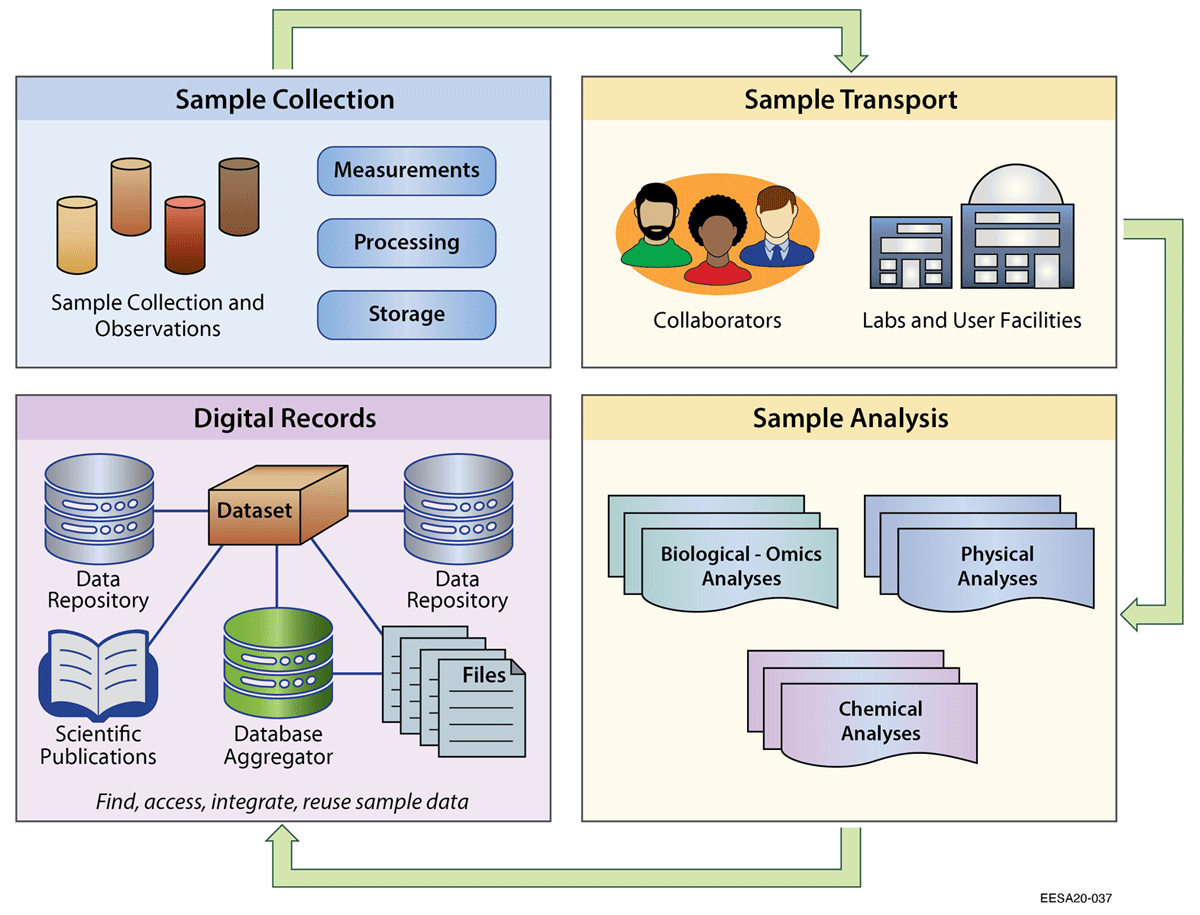

"Sample identifiers and metadata to support data management and reuse in multidisciplinary ecosystem sciences"

Physical samples are foundational entities for research across the biological, Earth, and environmental sciences. Data generated from sample-based analyses are not only the basis of individual studies, but can also be integrated with other data to answer new and broader-scale questions. Ecosystem studies increasingly rely on multidisciplinary team-based science to study climate and environmental changes. While there are widely adopted conventions within certain domains to describe sample data, these have gaps when applied in a multidisciplinary context. In this study, we reviewed existing practices for identifying, characterizing, and linking related environmental samples. We then tested practicalities of assigning persistent identifiers to samples, with standardized metadata, in a pilot field test involving eight United States Department of Energy projects. ... (Full article...)


Featured article of the week: July 25–31:

"Best practice recommendations for the implementation of a digital pathology workflow in the anatomic pathology laboratory by the European Society of Digital and Integrative Pathology (ESDIP)"

The interest in implementing digital pathology (DP) workflows to obtain whole slide image (WSI) files for diagnostic purposes has increased in the last few years. The increasing performance of technical components and the Food and Drug Administration (FDA) approval of systems for primary diagnosis led to increased interest in applying DP workflows. However, despite this revolutionary transition, real-world data suggest that a fully digital approach to histological workflow has been implemented in only a minority of pathology laboratories. The objective of this study is to facilitate the implementation of DP workflows in pathology laboratories, helping those involved in this process of transformation with: (a) how to identify the scope and the boundaries of the DP transformation; (b) how to introduce automation to reduce errors; (c) how to introduce appropriate quality control to guarantee the safety of the process; and (d) addressing the hardware and software needed to implement DP systems inside the pathology laboratory. ... (Full article...)


Featured article of the week: July 18–24:

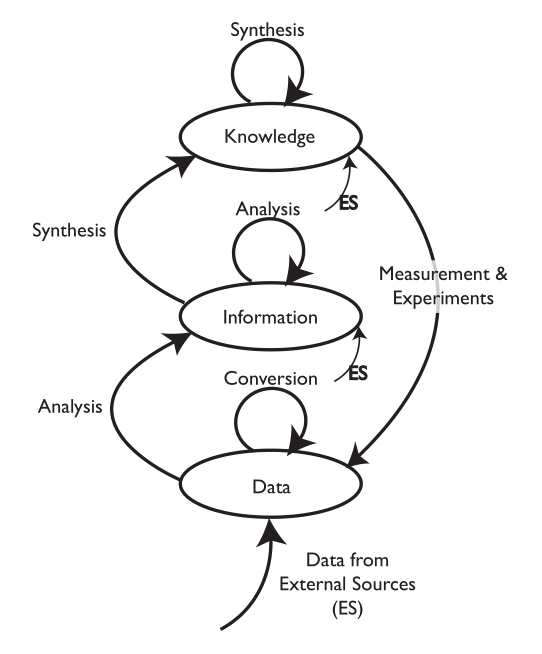

"Directions in Laboratory Systems: One Person's Perspective"

The purpose of this work is to provide one person's perspective on planning for the use of computer systems in the laboratory, and with it a means of developing a direction for the future. Rather than concentrating on “science first, support systems second,” it reverses that order, recommending the construction of a solid support structure before populating the lab with systems and processes that produce knowledge, information, and data (K/I/D). This material is intended for those working in laboratories of all types. The biggest benefit will come to those working in startup labs since they have a clean slate to work with, as well as those freshly entering into scientific work as it will help them understand the roles of various systems. Those working in existing labs will also benefit by seeing a different perspective than they may be used to, giving them an alternative path for evaluating their current structure and how they might adjust it to improve operations ... (Full article...)


Featured article of the week: July 11–17:

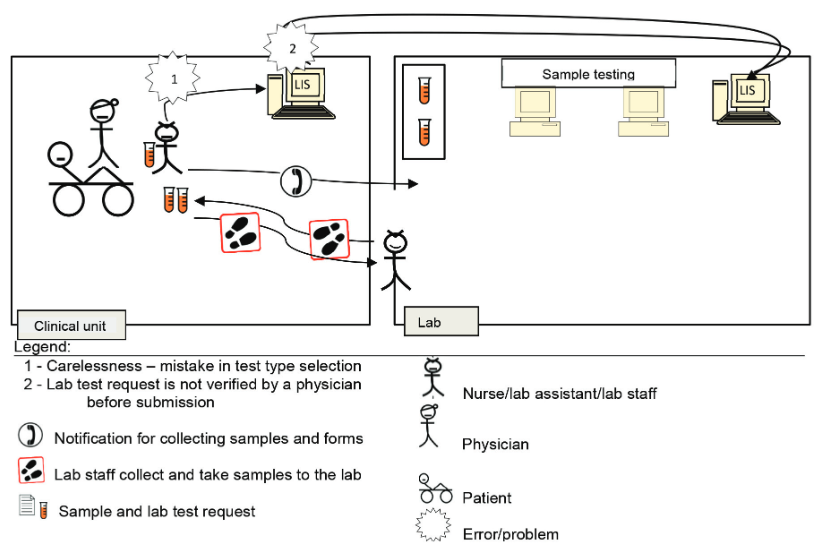

"Error evaluation in the laboratory testing process and laboratory information systems"

The laboratory testing process consists of five analysis phases, featuring the total testing process (TTP) framework. Activities in laboratory processing, including those of testing, are error-prone and affect the use of laboratory information systems (LIS). This study seeks to identify error factors related to system use, as well as the first and last phases of the laboratory testing process, using a proposed framework known as the "total testing process for laboratory information systems" (TTP-LIS). We conducted a qualitative case study evaluation in two private hospitals and a medical laboratory. We collected data using interviews, observations, and document analysis methods involving physicians, nurses, an information technology officer, and the laboratory staff ... (Full article...)


Featured article of the week: July 3–10:

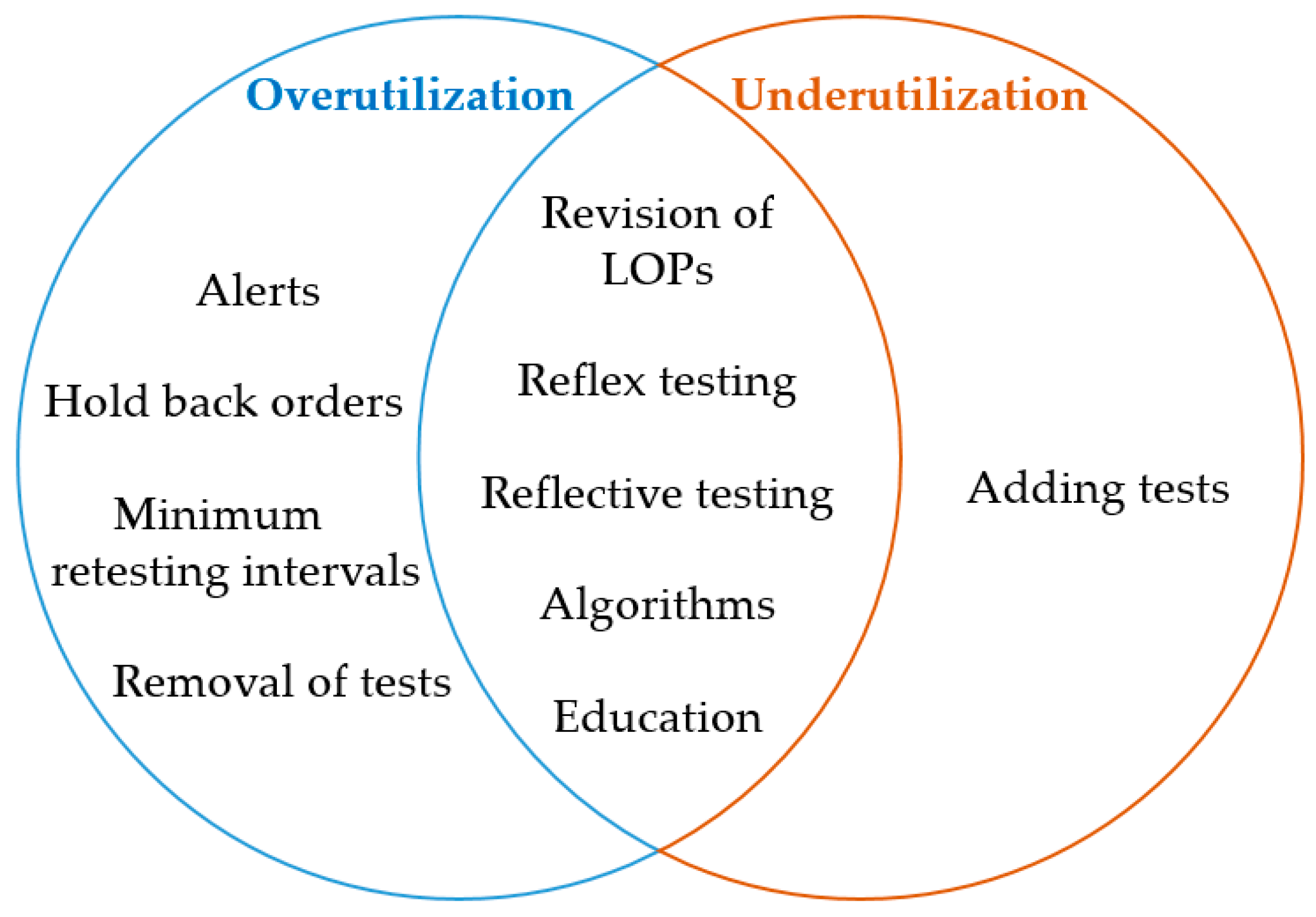

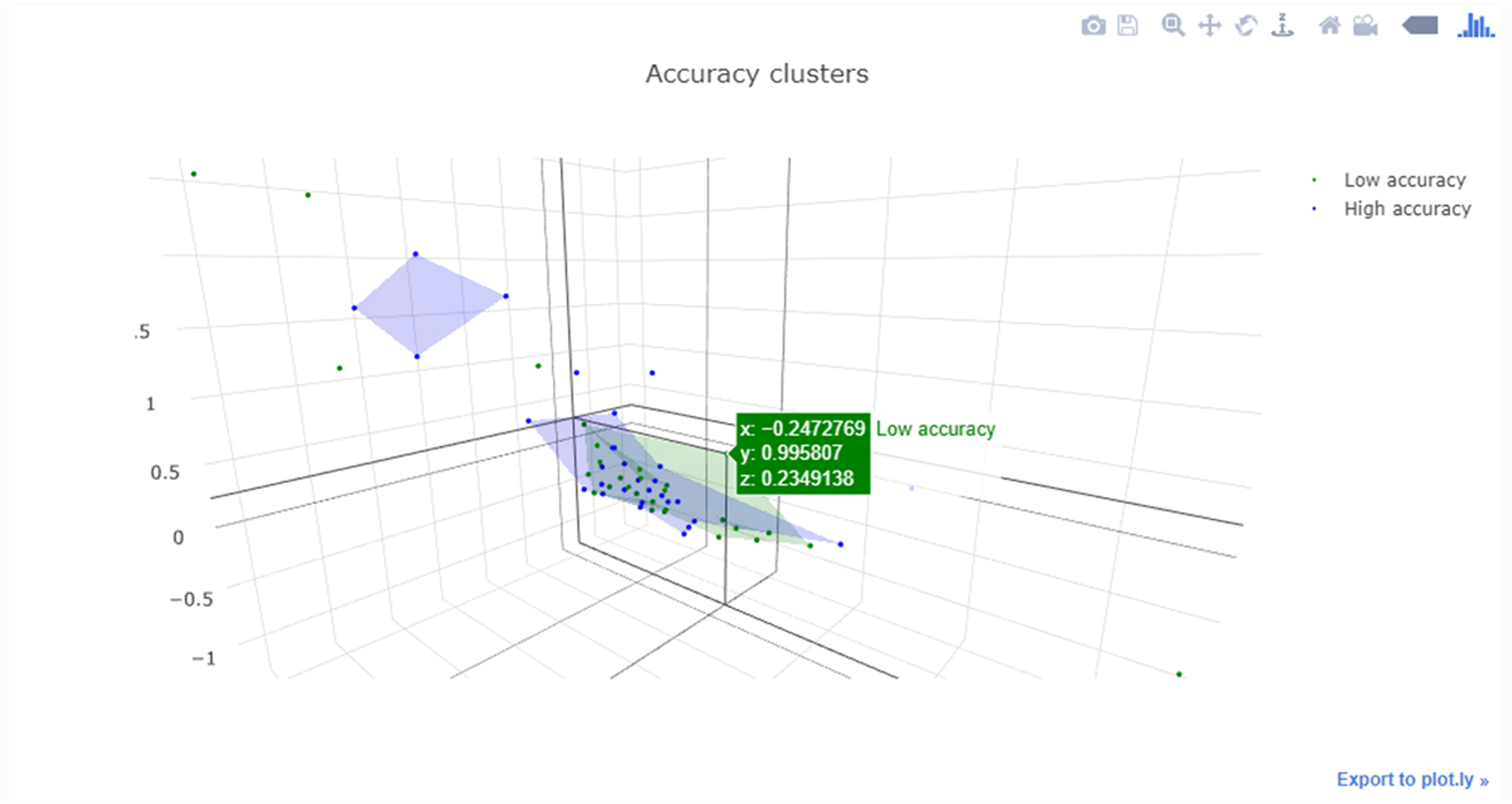

"Laboratory demand management strategies: An overview"

Inappropriate laboratory test selection, in the form of overutilization as well as underutilization, frequently occurs despite available guidelines. There is broad agreement among laboratory specialists and clinicians that demand management (DM) strategies are useful tools to avoid this issue. Most of these tools are based on automated algorithms or other types of machine learning. This review summarizes the available DM strategies that may be adapted to local settings. We believe that artificial intelligence (AI) may help to further improve these available tools. (Full article...)


Featured article of the week: June 27–July 3:

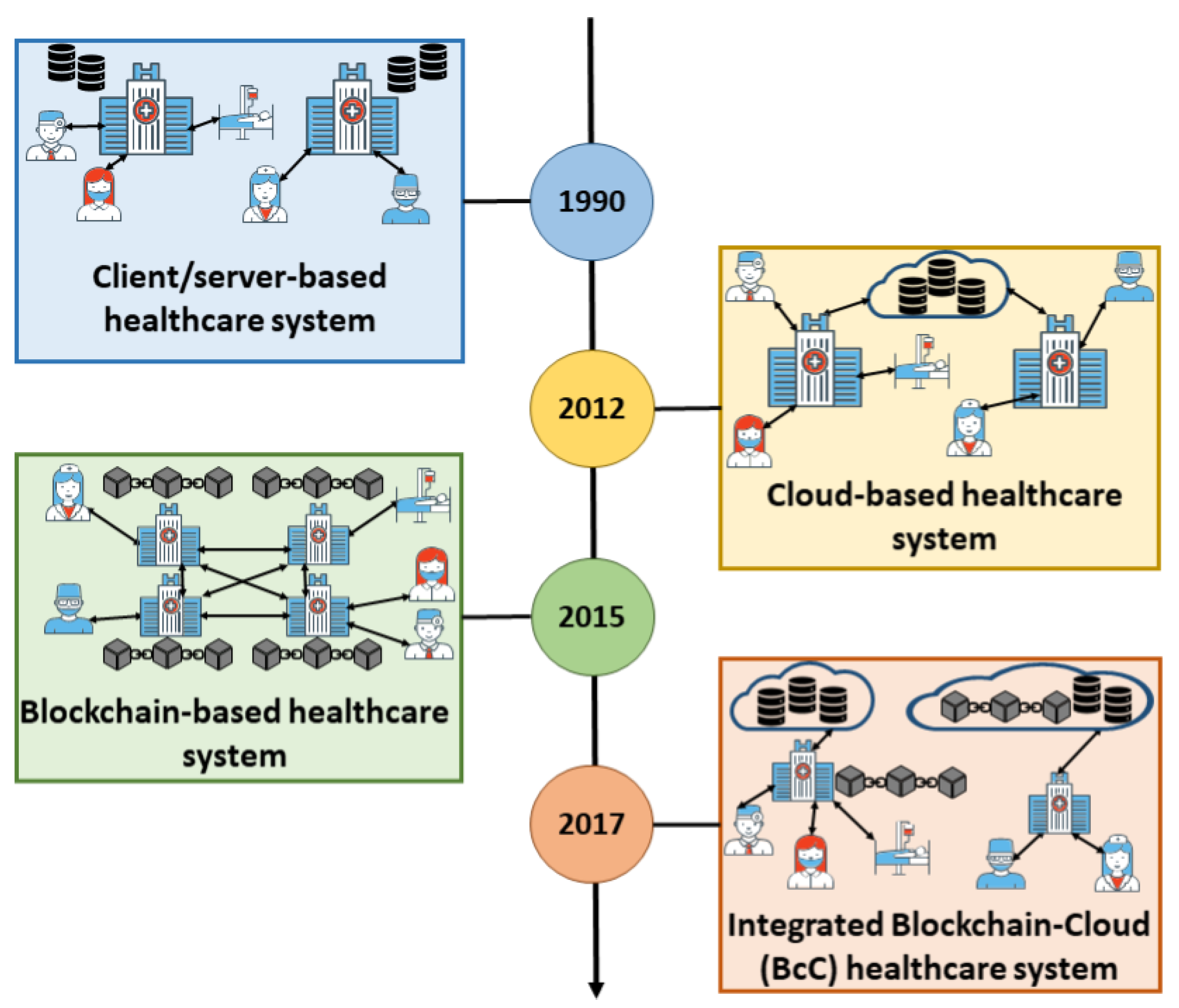

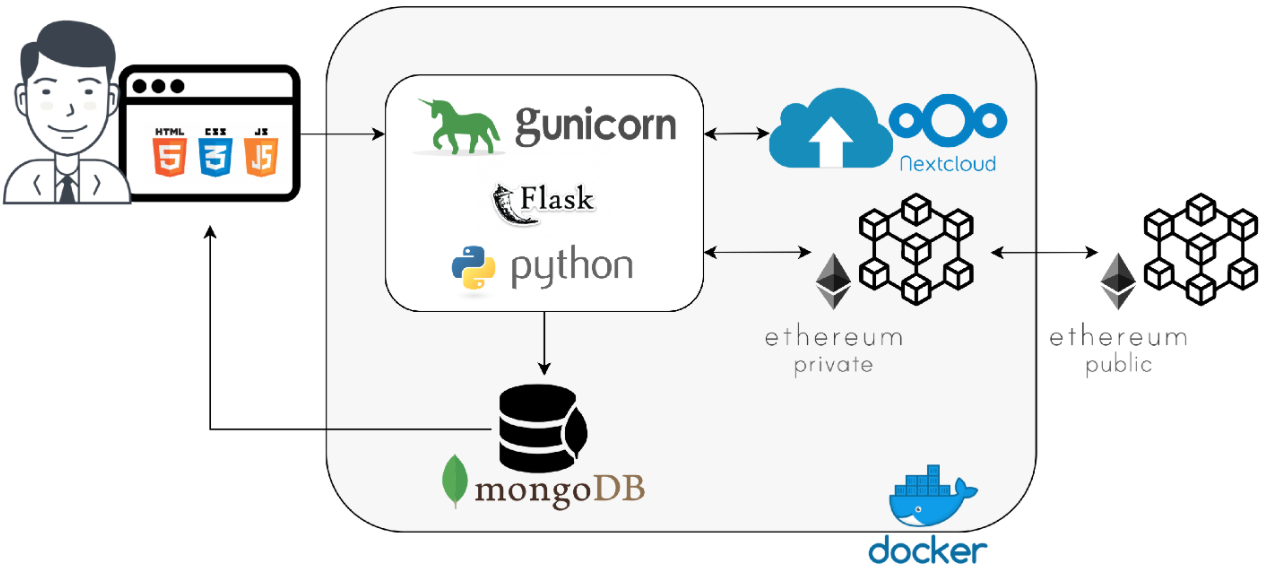

"A scoping review of integrated blockchain-cloud architecture for healthcare: Applications, challenges, and solutions"

Blockchain is a disruptive technology for shaping the next era of healthcare systems striving for efficient and effective patient care. This is thanks to its peer-to-peer, secure, and transparent characteristics. On the other hand, cloud computing made its way into the healthcare system thanks to its elasticity and cost-effective nature. However, cloud-based systems fail to provide a secure and private patient-centric cohesive view to multiple healthcare stakeholders. In this situation, blockchain provides solutions to address security and privacy concerns of the cloud because of its decentralization feature combined with data security and privacy, while cloud provides solutions to the blockchain scalability and efficiency challenges. Therefore, a novel paradigm of blockchain-cloud integration (BcC) emerges for the domain of healthcare ... (Full article...)


Featured article of the week: June 20–26:

"Laboratory information management system for the biosafety laboratory: Safety and efficiency"

The laboratory information management system (LIMS) has been widely used to facilitate laboratory activities. However, the current LIMS does not contain functions to improve the safety of laboratory work, which is a major concern of biosafety laboratories (BSLs). With significant biosafety information needing to be managed and an increasing number of biosafety-related research projects underway, it is worth expanding the current framework of LIMS and building a system that is more suitable for BSL usage. Such a system should carefully balance the safety and efficiency of regular lab activities, allowing laboratory staff to conduct their research as freely as possible while also ensuring their and the environment’s safety. In order to achieve this goal, relevant information on the type of research, laboratory personnel, experimental materials, and experimental equipment must be collected and fully utilized by a centralized system and its databases. (Full article...)


Featured article of the week: June 13–19:

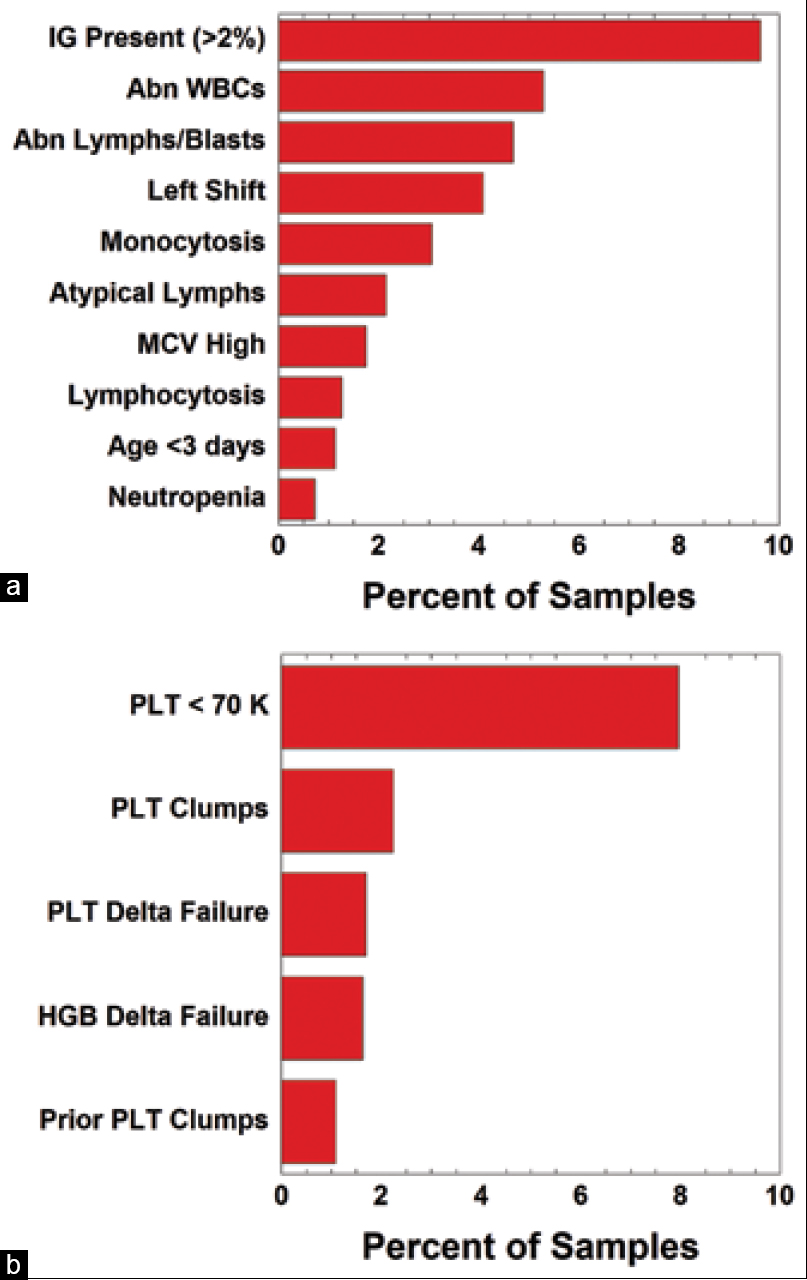

"Use of middleware data to dissect and optimize hematology autoverification"

Hematology analysis comprises some of the highest volume tests run in clinical laboratories. Autoverification of hematology results using computer-based rules reduces turnaround time for many specimens, while strategically targeting specimen review by a technologist or pathologist. Autoverification rules had been developed over a decade at an 800-bed tertiary/quaternary care academic medical central laboratory serving both adult and pediatric populations. In the process of migrating to newer hematology instruments, we analyzed the rates of the autoverification rules/flags most commonly associated with triggering manual review. We were particularly interested in rules that on their own often led to manual review in the absence of other flags. Prior to the study, autoverification rates were 87.8% (out of 16,073 orders) for complete blood count (CBC) if ordered as a panel and 85.8% (out of 1,940 orders) for CBC components ordered individually (not as the panel). (Full article...)


Featured article of the week: June 6–12:

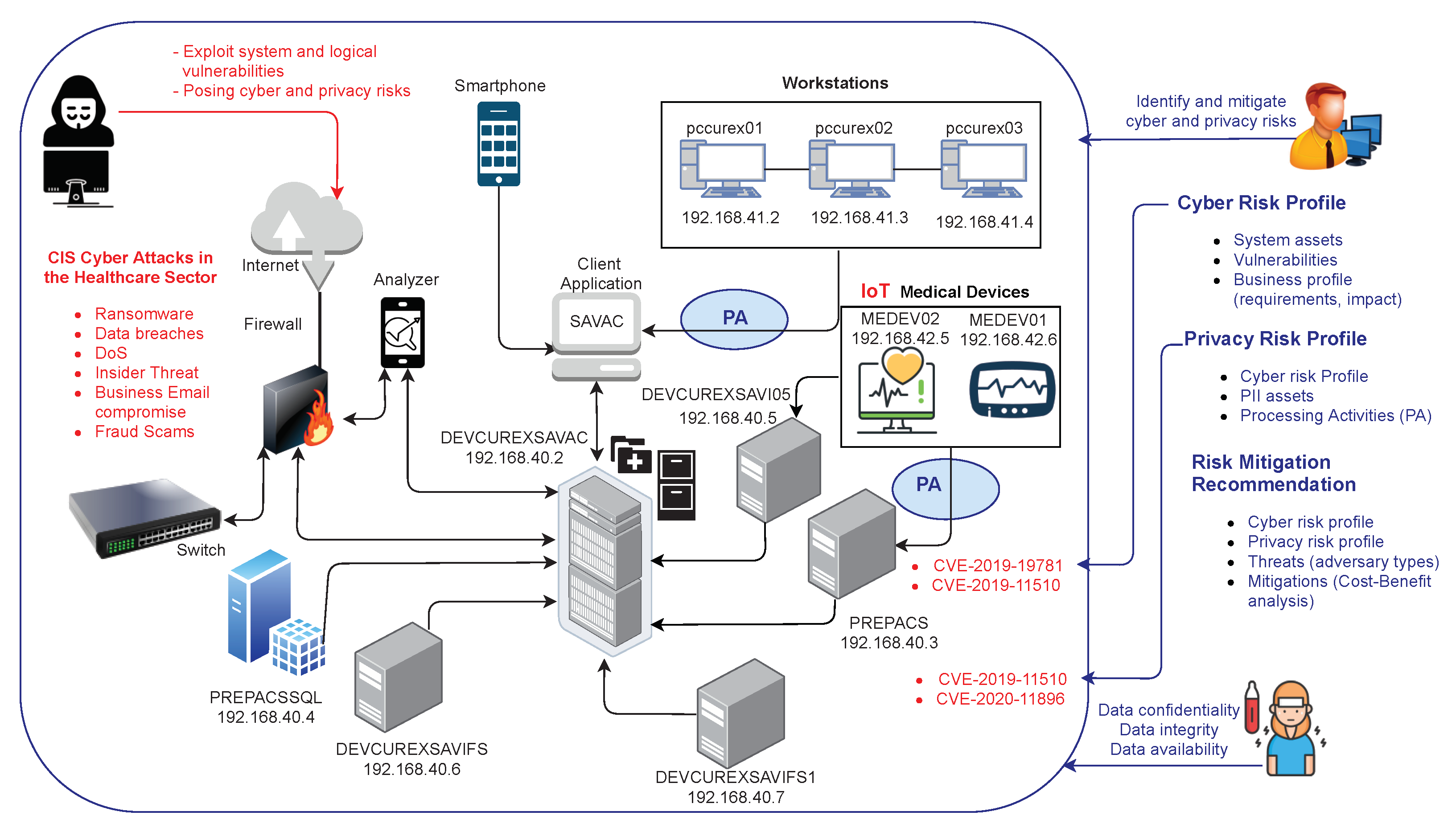

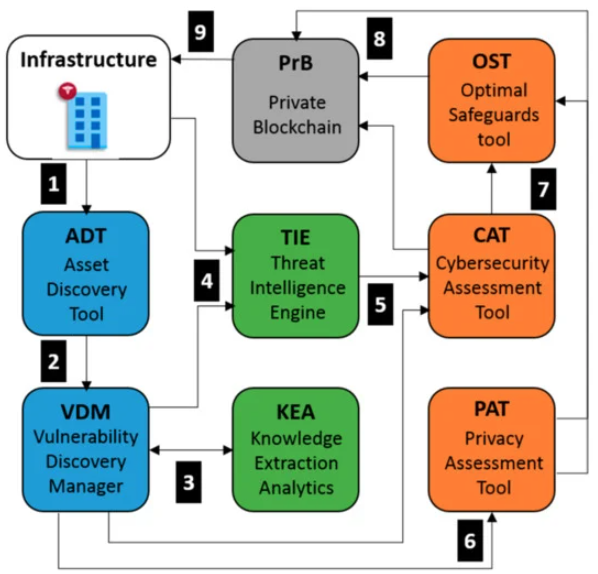

"Automated cyber and privacy risk management toolkit"

Addressing cyber and privacy risks has never been more critical for organizations. While a number of risk assessment methodologies and software tools are available, it is most often the case that one must, at least, integrate them into a holistic approach that combines several appropriate risk sources as input to risk mitigation tools. In addition, cyber risk assessment primarily investigates cyber risks as the consequence of vulnerabilities and threats that threaten assets of the investigated infrastructure. In fact, cyber risk assessment is decoupled from privacy impact assessment, which aims to detect privacy-specific threats and assess the degree of compliance with data protection legislation. Furthermore, a privacy impact assessment (PIA) is conducted in a proactive manner during the design phase of a system, combining processing activities and their inter-dependencies with assets, vulnerabilities, real-time threats and personally identifiable information (PII) that may occur during the dynamic lifecycle of systems. (Full article...)


Featured article of the week: May 30–June 5:

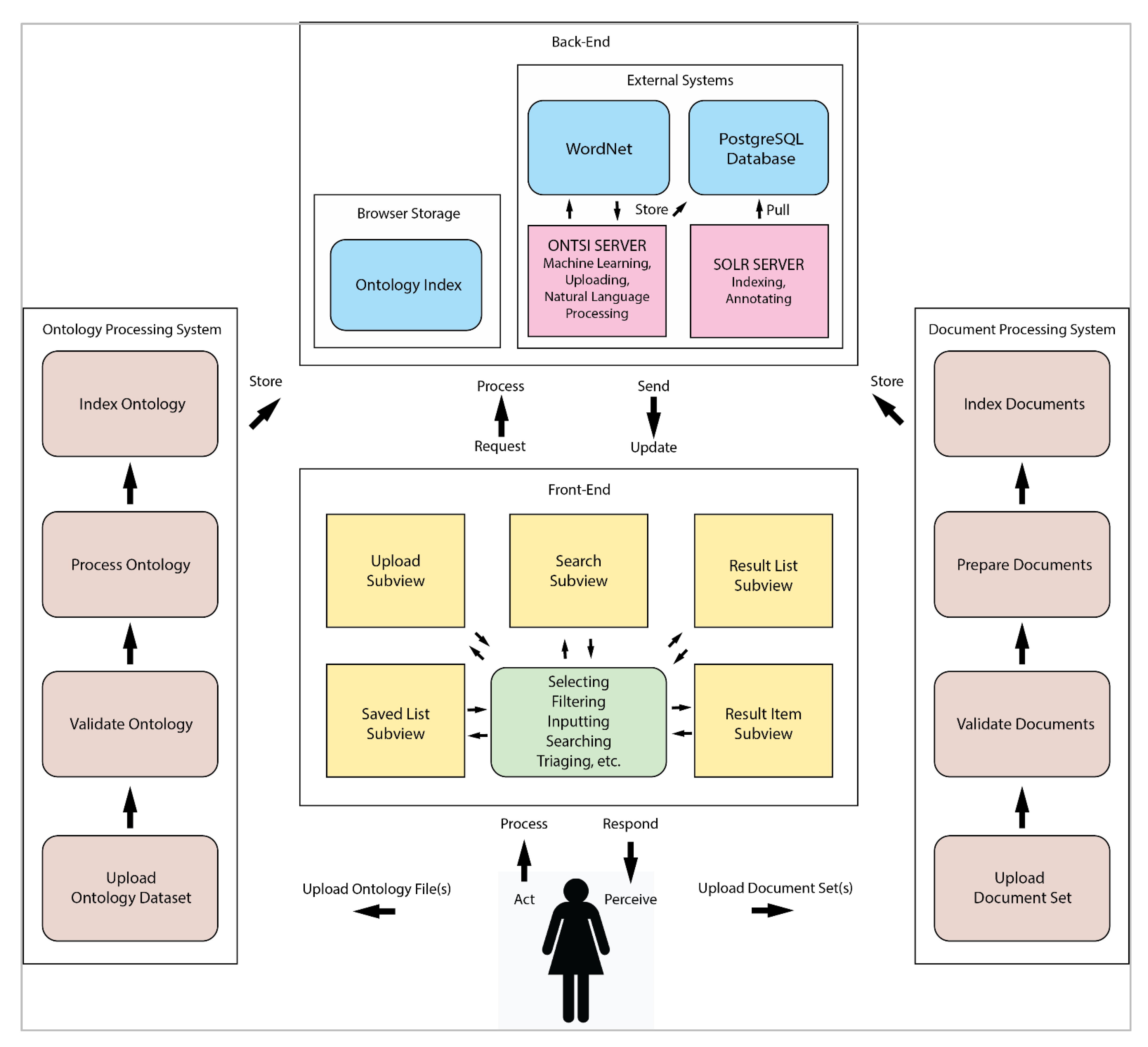

"Design of generalized search interfaces for health informatics"

In this paper, we investigate ontology-supported interfaces for health informatics search tasks involving large document sets. We begin by providing background on health informatics, machine learning, and ontologies. We review leading research on health informatics search tasks to help formulate high-level design criteria. We then use these criteria to examine traditional design strategies for search interfaces. To demonstrate the utility of the criteria, we apply them to the design of the ONTology-supported Search Interface (ONTSI), a demonstrative, prototype system. ONTSI allows users to plug-and-play document sets and expert-defined domain ontologies through a generalized search interface. ONTSI’s goal is to help align users’ common vocabulary with the domain-specific vocabulary of the plug-and-play document set. We describe the functioning and utility of ONTSI in health informatics search tasks through a workflow and a scenario. We conclude with a summary of ongoing evaluations, limitations, and future research. (Full article...)

Featured article of the week: May 23–29:

"Cybersecurity and privacy risk assessment of point-of-care systems in healthcare: A use case approach"

Point-of-care (POC) systems are generally used in healthcare to respond rapidly and prevent critical health conditions. Hence, POC systems often handle personal health information, and, consequently, their cybersecurity and privacy requirements are of crucial importance. However, assessing these requirements is a significant task. In this work, we propose a use-case approach to assess specifications of cybersecurity and privacy requirements of POC systems in a structured and self-contained form. Such an approach is appropriate since use cases are one of the most common means adopted by developers to derive requirements. As a result, we detail a use case approach in the framework of a real-based healthcare IT infrastructure that includes a health information system, integration engines, application servers, web services, medical devices, smartphone apps, and medical modalities (all data simulated) together with the interaction with participants. Since our use case also sustains the analysis of cybersecurity and privacy risks in different threat scenarios, it also supports decision making and the analysis of compliance considerations. (Full article...)

Featured article of the week: May 16–22:

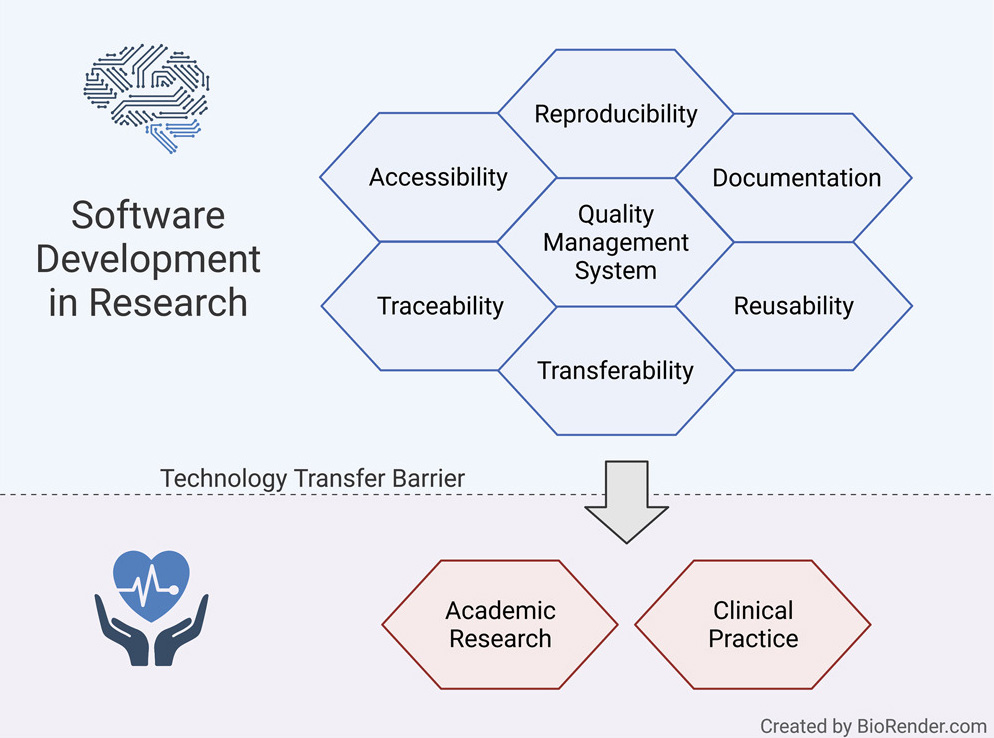

"Fostering reproducibility, reusability, and technology transfer in health informatics"

Computational methods can transform healthcare. In particular, health informatics combined with artificial intelligence (AI) has shown tremendous potential when applied in various fields of medical research and has opened a new era for precision medicine. The development of reusable biomedical software for research or clinical practice is time-consuming and requires rigorous compliance with quality requirements as defined by international standards. However, research projects rarely implement such measures, hindering smooth technology transfer to the research community or manufacturers, as well as reproducibility and reusability. Here, we present a guideline for quality management systems (QMS) for academic organizations incorporating the essential components, while confining the requirements to an easily manageable effort. It provides a starting point to effortlessly implement a QMS tailored to specific needs and greatly facilitates technology transfer in a controlled manner, thereby supporting reproducibility and reusability. (Full article...)

Featured article of the week: May 09–15:

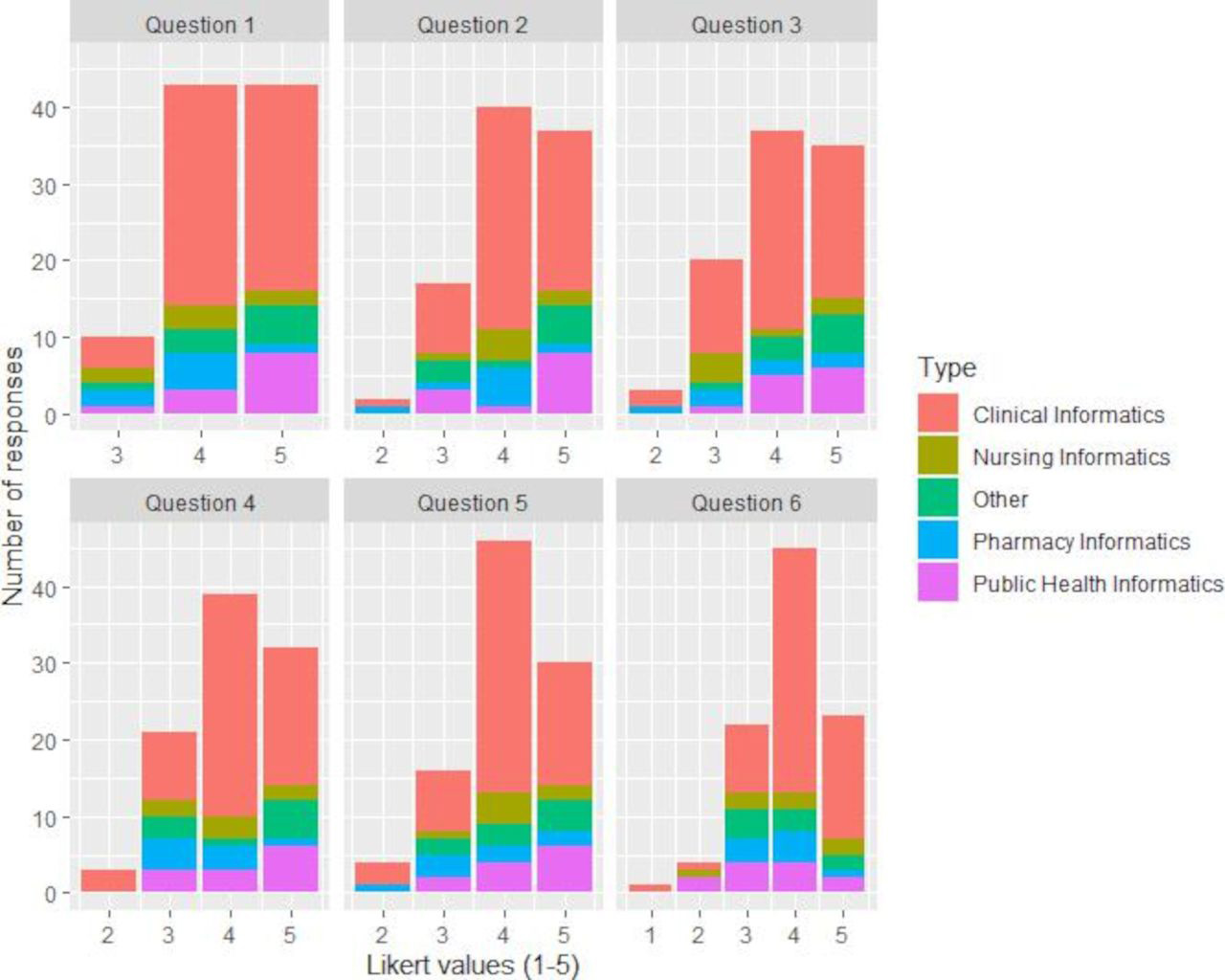

"Development of a core competency framework for clinical informatics"

Up to this point, there has not been a national core competency framework for clinical informatics in the U.K. Here we report on the final two iterations of work carried out towards the formation of a national core competency framework. This follows an initial systematic literature review of existing skills and competencies and a job listing analysis. An iterative approach was applied to framework development. Using a mixed-methods design, we carried out semi-structured interviews with participants involved in informatics (n = 15). The framework was updated based on the interview findings and was subsequently distributed as part of a bespoke online digital survey for wider participation (n = 87). The final version of the framework is based on the findings of the survey. (Full article...)

Featured article of the week: May 02–08:

"Strategies for laboratory professionals to drive laboratory stewardship"

Appropriate laboratory testing is critical in today's healthcare environment that aims to improve patient care while reducing cost. In recent years, laboratory stewardship has emerged as a strategy for assuring quality in laboratory medicine with the goal of providing the right test for the right patient at the right time. Implementing a laboratory stewardship program now presents a valuable opportunity for laboratory professionals to exercise leadership within health systems and to drive change toward realizing aims in healthcare. The proposed framework for program implementation includes five key elements: 1) a clear vision and organizational alignment; 2) appropriate skills for program execution and management; 3) resources to support the program; 4) incentives to motivate participation; and, 5) a plan of action that articulates program objectives and metrics. This framework builds upon principles of change management, with emphasis on engagement with clinical and administrative stakeholders and the use of clinical data as the basis for change. These strategies enable laboratory professionals to cultivate organizational support for improving laboratory use and take a leading role in providing high-quality patient care. (Full article...)

Featured article of the week: April 25–May 01:

"Cybersecurity impacts for artificial intelligence use within Industry 4.0"

In today’s modern digital manufacturing landscape, new and emerging technologies can shape how an organization can compete, while others will view those technologies as a necessity to survive, as manufacturing has been identified as a critical infrastructure. Universities struggle to hire university professors that are adequately trained or willing to enter academia due to competitive salary offers in the industry. Meanwhile, the demand for people knowledgeable in fields such as artificial intelligence, data science, and cybersecurity continuously rises, with no foreseeable drop in demand in the next several years. This results in organizations deploying technologies with a staff that inadequately understands what new cybersecurity risks they are introducing into the company. This work examines how organizations can potentially mitigate some of the risk associated with integrating these new technologies and developing their workforce to be better prepared for looming changes in technological skill need. (Full article...)

Featured article of the week: April 18–24:

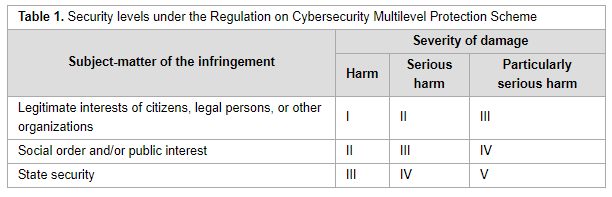

"Cross-border data transfer regulation in China"

With the growing participation of emerging countries in global data governance, the traditional legislative paradigm dominated by the European Union and the United States is constantly being analyzed and reshaped. It is of particular importance for China to establish the regulatory framework of cross-border data transfer, for not only does it involve the rights of Chinese citizens and entities, but also the concepts of cyber-sovereignty and national security, as well as the framing of global cyberspace rules. China continues to leverage data sovereignty to persuade lawmakers to support the development of critical technology in digital domains and infrastructure construction. This paper aims to systematically and chronologically describe Chinese regulations for cross-border data exchange. Enacted and draft provisions—as well as binding and non-binding regulatory rules—are studied, and various positive dynamic developments in the framing of China’s cross-border data regulation are shown ... (Full article...)

Featured article of the week: April 11–17:

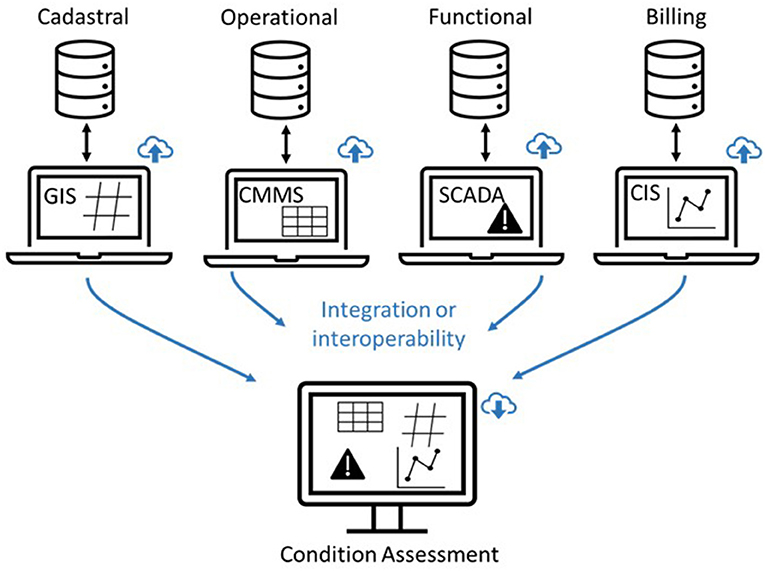

"Data and information systems management for urban water infrastructure condition assessment"

Most of the urban water infrastructure around the world was built several decades ago and has since deteriorated. As such, the assets that constitute this infrastructure need to be updated or replaced. Since most of the assets are buried, water utilities face the challenge of deciding where, when, and how to update or replace those assets. Condition assessment is a vital component of any planned update and replacement activities and is mostly based on the data collected from the managed networks. This collected data needs to be organized and managed in order to be transformed into useful information. Nonetheless, the large number of assets and volume of data involved make data and information management a challenging task for water utilities, especially those with a lower digital maturity level. This paper highlights the importance of data and information systems' management for urban water infrastructure condition assessment based on the authors' experiences. (Full article...)

Featured article of the week: April 04–10:

"Diagnostic informatics: The role of digital health in diagnostic stewardship and the achievement of excellence, safety, and value"

Diagnostic investigations (i.e., pathology laboratory analysis and medical imaging) aim to increase the certainty of the presence of or absence of disease by supporting the process of differential diagnosis, support clinical management, and monitor a patient's trajectory (e.g., disease progression or response to treatment). Digital health—defined as the collection, storage, retrieval, transmission, and utilization of data, information, and knowledge to support healthcare—has become an essential component of the diagnostic investigational process, helping to facilitate the accuracy and timeliness of information transfer and enhance the effectiveness of decision-making processes. Digital health is also important to diagnostic stewardship, which involves coordinated guidance and interventions to ensure the appropriate utilization of diagnostic tests for therapeutic decision-making. (Full article...)

Featured article of the week: March 28–April 03:

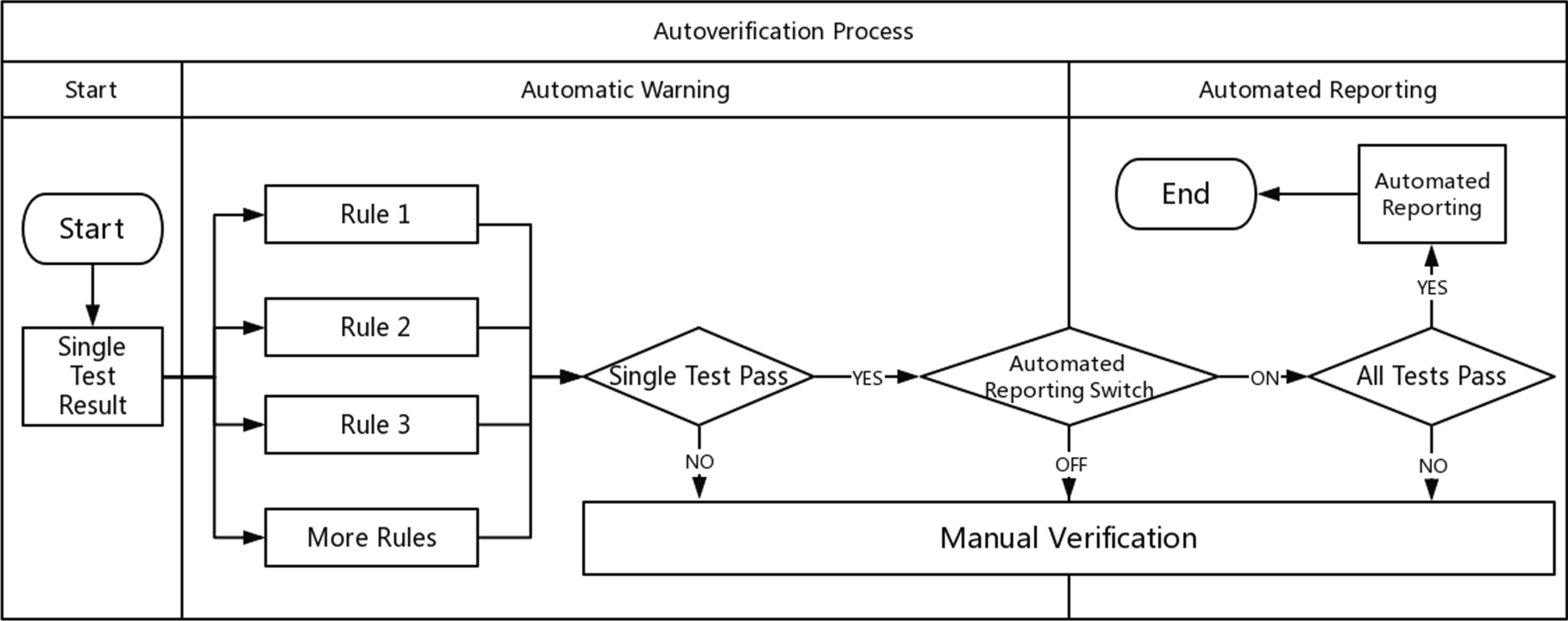

"Development and implementation of an LIS-based validation system for autoverification toward zero defects in the automated reporting of laboratory test results"

For laboratory informatics applications, validation of the autoverification function is one of the critical steps to confirm its effectiveness before use. It is crucial to verify whether the programmed algorithm follows the expected logic and produces the expected results. This process has always relied on the assessment of human–machine consistency and is mostly a manually recorded and time-consuming activity with inherent subjectivity and arbitrariness that cannot guarantee a comprehensive, timely, and continuous effectiveness evaluation of the autoverification function. To overcome these inherent limitations, we independently developed and implemented a laboratory information system (LIS)-based validation system for autoverification. We developed a correctness verification and integrity validation method (hereinafter referred to as the "new method") in the form of a human–machine dialog ... (Full article...)

Featured article of the week: March 21–27:

"Emerging and established trends to support secure health information exchange"

This work aims to provide information, guidelines, established practices and standards, and an extensive evaluation on new and promising technologies for the implementation of a secure information sharing platform for health-related data. We focus strictly on the technical aspects and specifically on the sharing of health information, studying innovative techniques for secure information sharing within the healthcare domain, and we describe our solution and evaluate the use of blockchain methodologically for integrating within our implementation. To do so, we analyze health information sharing within the concept of the PANACEA project, which facilitates the design, implementation, and deployment of a relevant platform. The research presented in this paper provides evidence and argumentation toward advanced and novel implementation strategies for a state-of-the-art information sharing environment; a description of high-level requirements for the national and cross-border transfer of data between different healthcare organizations ... (Full article...)

Featured article of the week: March 14–20:

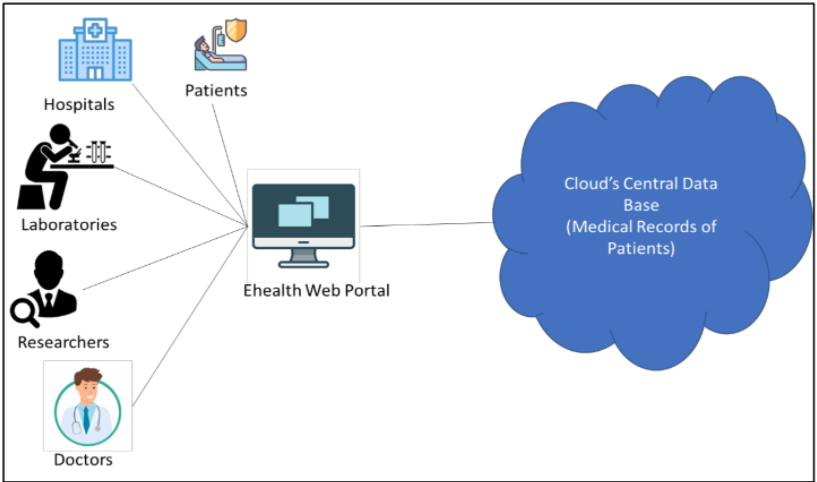

"Security and privacy in cloud-based eHealth systems"

Cloud-based healthcare computing has changed the face of healthcare in many ways. The main advantages of cloud computing in healthcare are scalability of the required service and the provision to upscale or downsize the data storage, particularly in conjunction with approaches to artificial intelligence (AI) and machine learning. This paper examines various research studies to explore the utilization of intelligent techniques in health systems and mainly focuses on the security and privacy issues in the current technologies. Despite the various benefits related to cloud computing applications for healthcare, there are different types of management, technology handling, security measures, and legal issues to be considered and addressed. The key focus of this paper is to address the increased demand for cloud computing and its definition, technologies widely used in healthcare, their problems and possibilities, and the way protection mechanisms are organized and prepared when the company chooses to implement the latest evolving service model. (Full article...)

Featured article of the week: March 7–13:

"Using interactive digital notebooks for bioscience and informatics education"

Interactive digital notebooks provide an opportunity for researchers and educators to carry out data analysis and report results in a single digital format. Further to just being digital, the format allows for rich content to be created in order to interact with the code and data contained in such a notebook to form an educational narrative. This primer introduces some of the fundamental aspects involved in using Jupyter Notebook in an educational setting for teaching in the bioinformatics and health informatics disciplines. We also provide two case studies that detail 1. how we used Jupyter Notebooks to teach non-coders programming skills on a blended master’s degree module for a health informatics program, and 2. a fully online distance learning unit on programming for a postgraduate certificate (PG Cert) in clinical bioinformatics, with a more technical audience. (Full article...)

Featured article of the week: February 28–March 6:

"The Application of Informatics to Scientific Work: Laboratory Informatics for Newbies"

The purpose of this piece is to introduce people who are not intimately familiar with laboratory work to the basics of laboratory operations and the role that informatics can play in assisting scientists, engineers, and technicians in their efforts. The concepts are important because they provide a functional foundation for understanding lab work and how that work is done in the early part of the twenty-first century (things will change, just wait for it). This material is intended for anyone who is interested in seeing how modern informatics tools can help those doing scientific work. It will provide an orientation to scientific and laboratory work, as well as the systems that have been developed to make that work more productive. (Full article...)

Featured article of the week: February 21–27:

"Blockchain-based healthcare workflow for IoT-connected laboratories in federated hospital clouds"

In a pandemic-related situation such as that caused by the SARS-CoV-2 virus, the need for telemedicine and other distanced services becomes dramatically fundamental to reducing the movement of patients, and by extension reducing the risk of infection in healthcare settings. One potential avenue for achieving this is through leveraging cloud computing and internet of things (IoT) technologies. This paper proposes an IoT-connected laboratory service where clinical exams are performed on patients directly in a hospital by technicians through the use of IoT medical diagnostic devices, with results automatically being sent via the hospital's cloud to doctors of federated hospitals for validation and/or consultation. In particular, we discuss a distributed scenario where nurses, technicians, and medical doctors belonging to different hospitals cooperate through their federated hospital clouds ... (Full article...)

Featured article of the week: February 14–20:

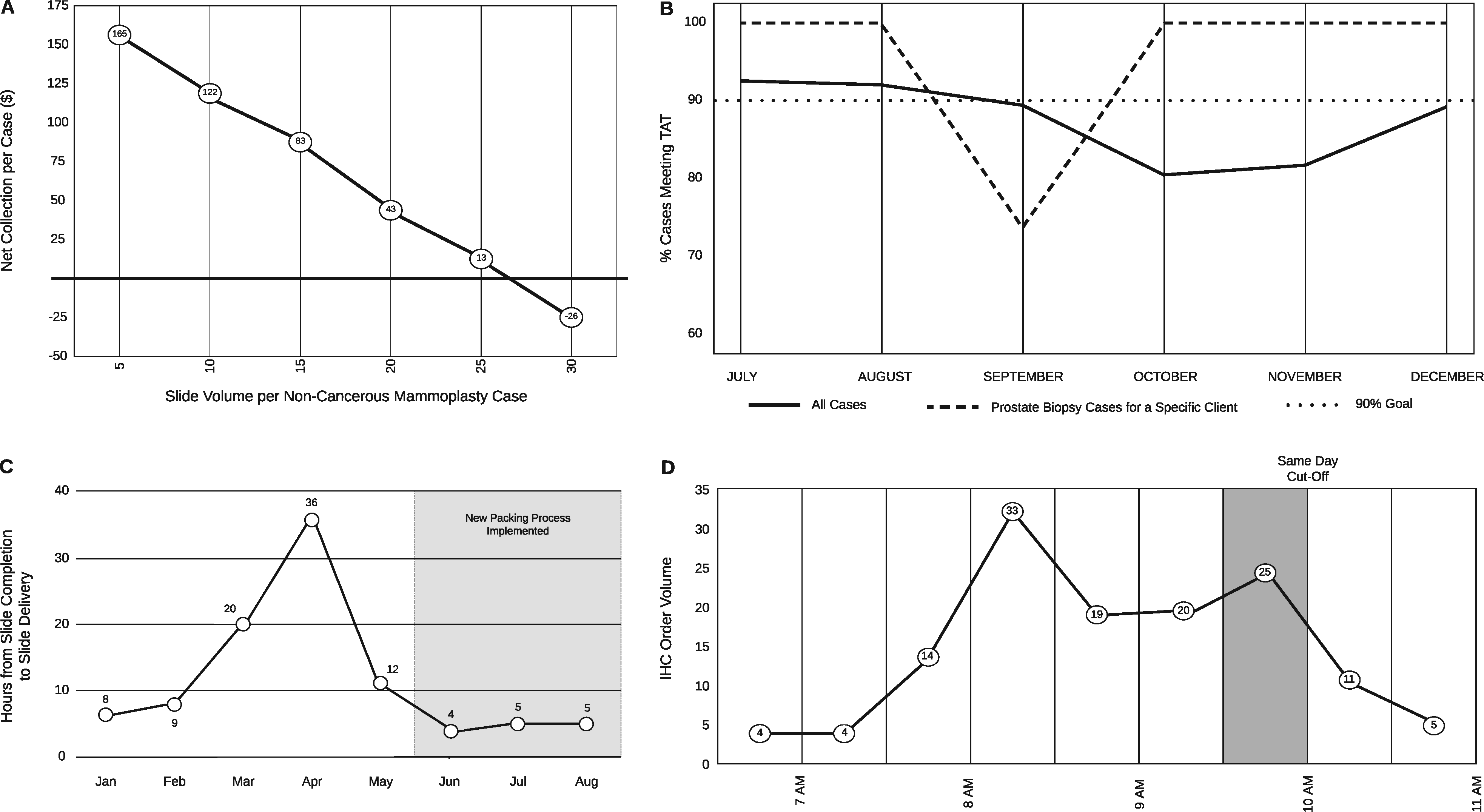

"Informatics-driven quality improvement in the modern histology lab"

Laboratory information systems (LISs) and data visualization techniques have untapped potential in anatomic pathology laboratories. The pre-built functionalities of an LIS do not address all the needs of a modern histology laboratory. For instance, “go live” is not the end of LIS customization, only the beginning. After closely evaluating various histology lab workflows, we implemented several custom data analytics dashboards and additional LIS functionalities to monitor and address weaknesses. Herein, we present our experience with LIS and data-tracking solutions that improved trainee education, slide logistics, staffing and instrumentation lobbying, and task tracking. The latter was addressed through the creation of a novel “status board” akin to those seen in inpatient wards. These use-cases can benefit other histology laboratories. (Full article...)

Featured article of the week: February 07–13:

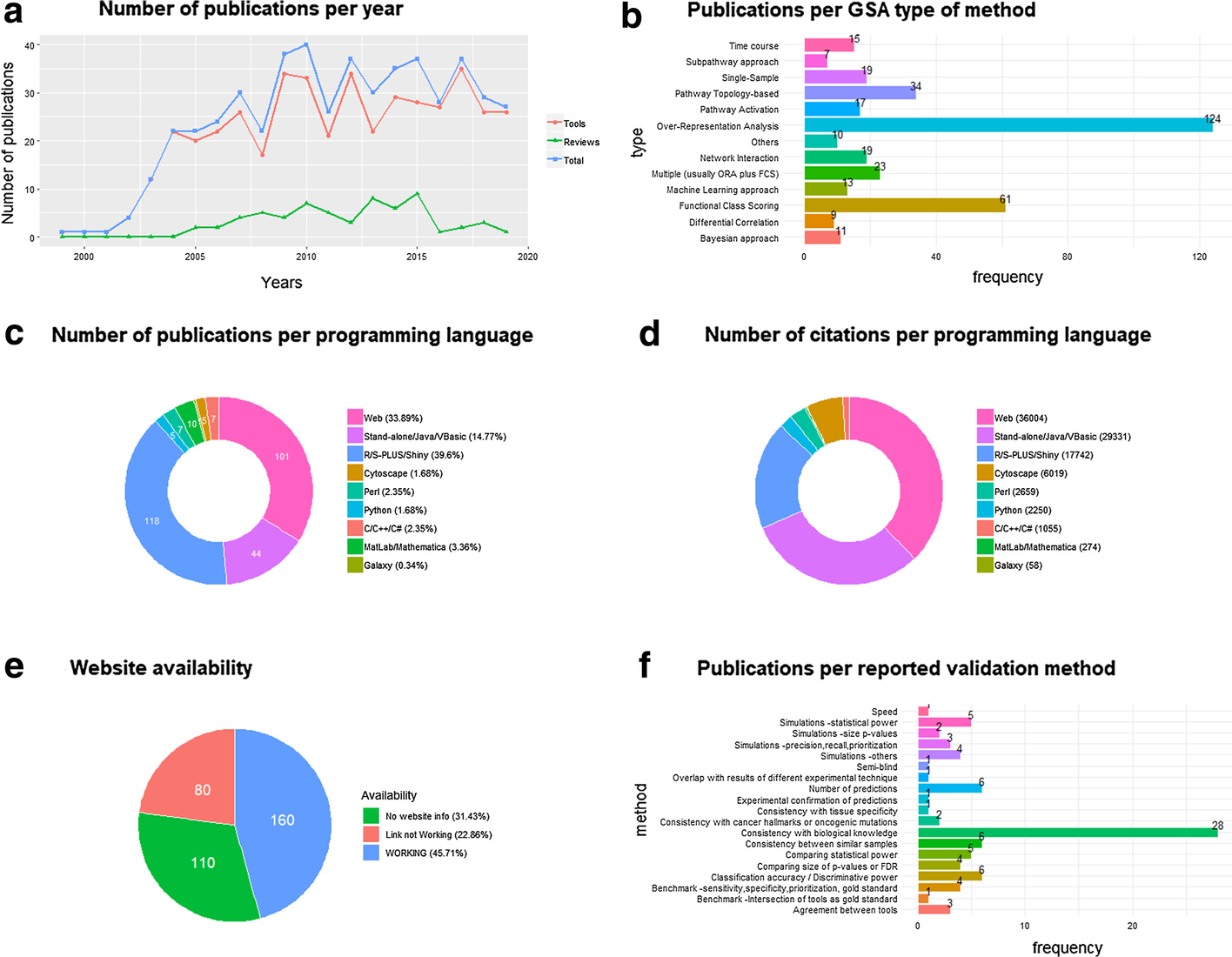

"Popularity and performance of bioinformatics software: The case of gene set analysis"

Gene set analysis (GSA) is arguably the method of choice for the functional interpretation of omics results. This work explores the popularity and the performance of all the GSA methodologies and software published during the 20 years since its inception. "Popularity" is estimated according to each paper's citation counts, while "performance" is based on a comprehensive evaluation of the validation strategies used by papers in the field, as well as the consolidated results from the existing benchmark studies. Regarding popularity, data is collected into an online open database ("GSARefDB") which allows browsing bibliographic and method-descriptive information from 503 GSA paper references; regarding performance, we introduce a repository of Jupyter Notebook workflows and Shiny apps for automated benchmarking of GSA methods (“GSA-BenchmarKING”). After comparing popularity versus performance, results show discrepancies between the most popular and the best performing GSA methods. (Full article...)

Featured article of the week: January 31–February 06:

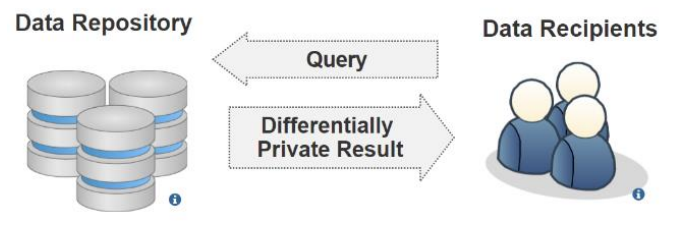

"Privacy-preserving healthcare informatics: A review"

The electronic health record (EHR) is the key to an efficient healthcare service delivery system. The publication of healthcare data is highly beneficial to healthcare industries and government institutions to support a variety of medical and census research. However, healthcare data contains sensitive information of patients, and the publication of such data could lead to unintended privacy disclosures. In this paper, we present a comprehensive survey of the state-of-the-art privacy-enhancing methods that ensure a secure healthcare data sharing environment. We focus on the recently proposed schemes based on data anonymization and differential privacy approaches in the protection of healthcare data privacy. We highlight the strengths and limitations of the two approaches and discuss some promising future research directions in this area. (Full article...)

Featured article of the week: January 24–30:

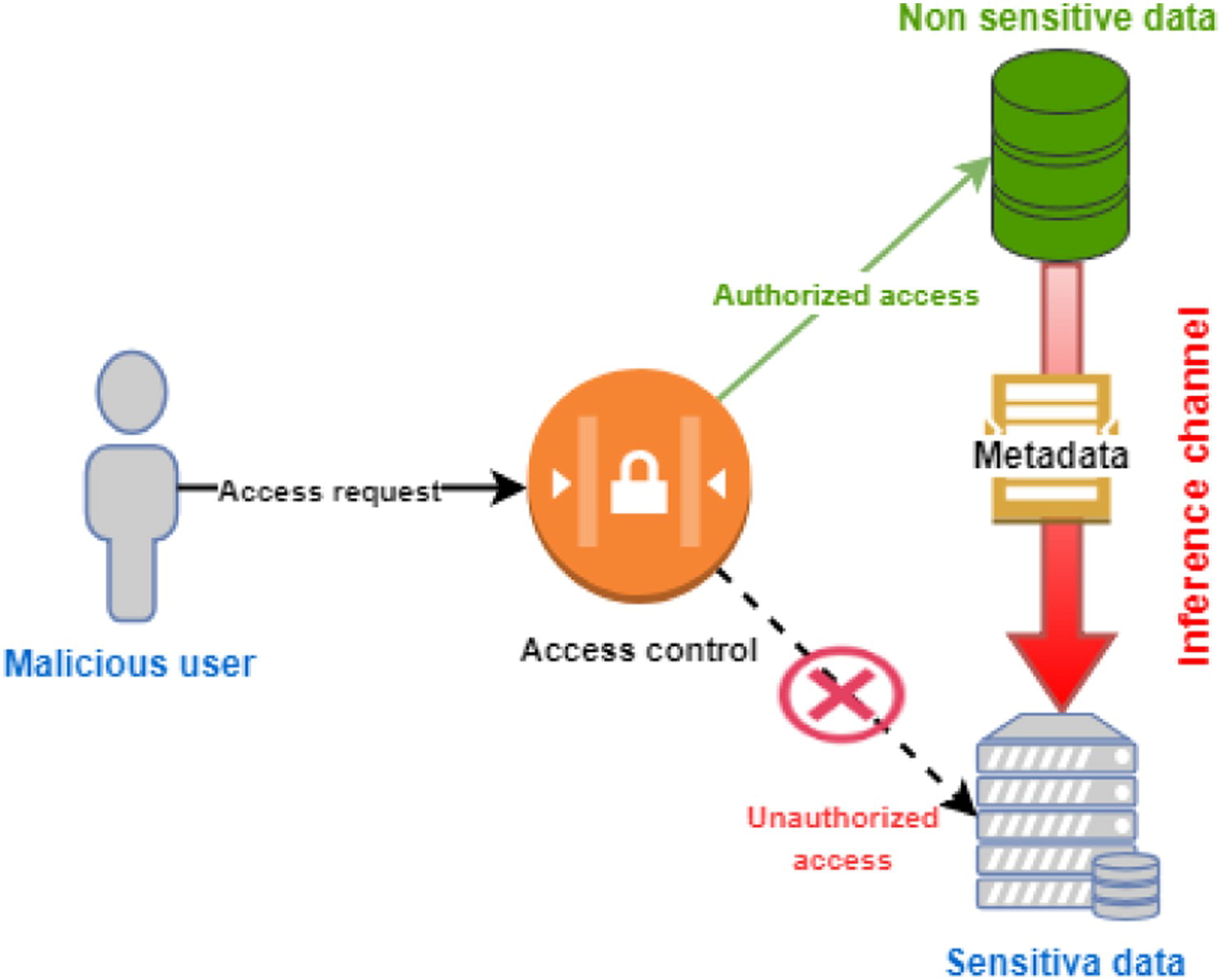

"Secure data outsourcing in presence of the inference problem: Issues and directions"

With the emergence of the cloud computing paradigms, secure data outsourcing—moving some or most data to a third-party provider of secure data management services—has become one of the crucial challenges of modern computing. Data owners place their data among cloud service providers (CSPs) in order to increase flexibility, optimize storage, enhance data manipulation, and decrease processing time. Nevertheless, from a security point of view, access control proves to be a major concern in this situation seeing that the security policy of the data owner must be preserved when data is moved to the cloud. The lack of a comprehensive and systematic review on this topic in the available literature motivated us to review this research problem. Here, we discuss current and emerging research on privacy and confidentiality concerns in cloud-based data outsourcing and pinpoint potential issues that are still unresolved. (Full article...)

Featured article of the week: January 17–23:

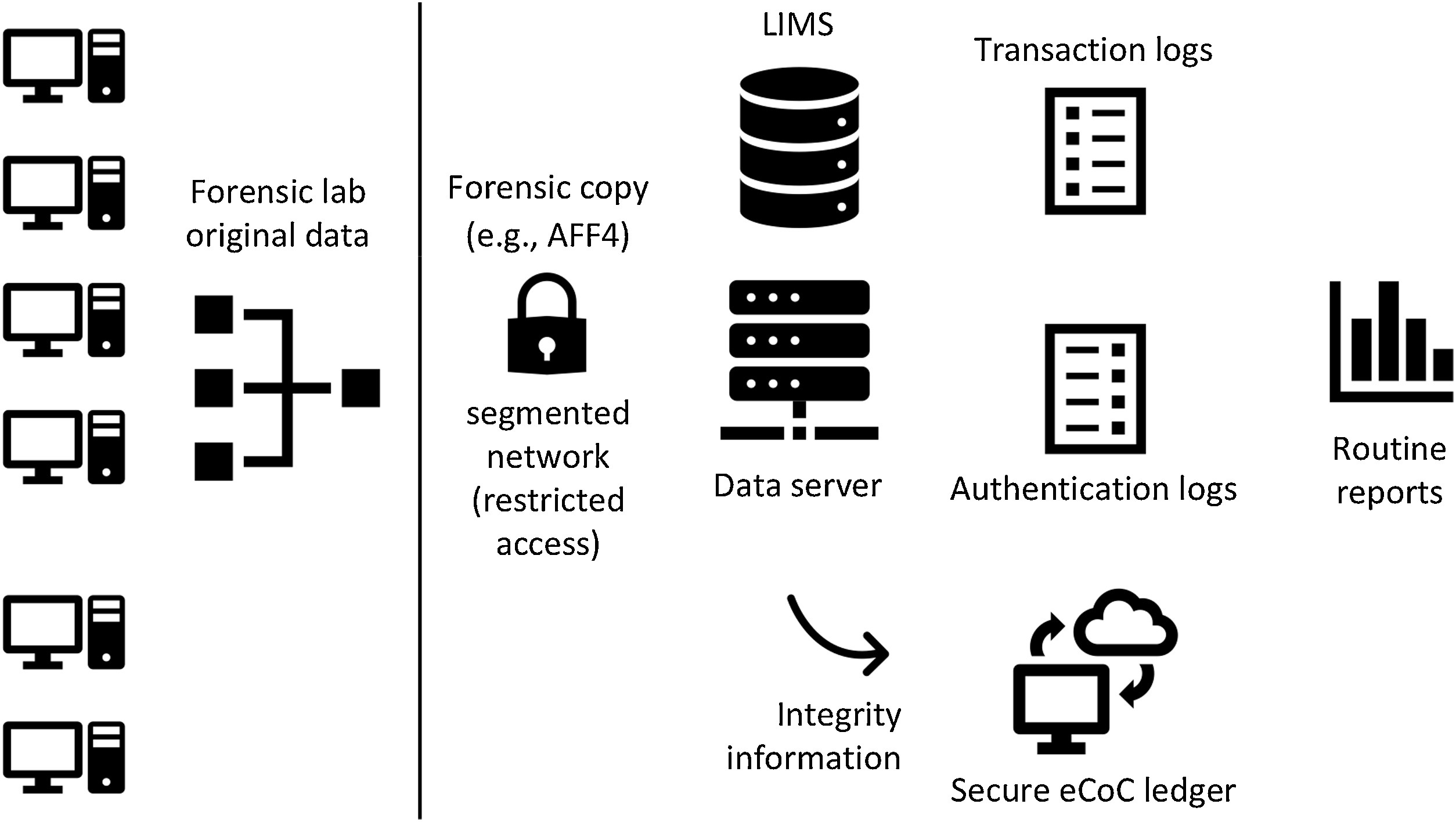

"Digital transformation risk management in forensic science laboratories"

Technological advances are changing how forensic laboratories operate in all forensic disciplines, not only digital. Computers support workflow management and enable evidence analysis (physical and digital), while new technology enables previously unavailable forensic capabilities. Used properly, the integration of digital systems supports greater efficiency and reproducibility, and drives digital transformation of forensic laboratories. However, without the necessary preparations, these digital transformations can undermine the core principles and processes of forensic laboratories. Forensic preparedness concentrating on digital data reduces the cost and operational disruption of responding to various kinds of problems, including misplaced exhibits, allegations of employee misconduct, disclosure requirements, and information security breaches ... (Full article...)

Featured article of the week: January 10–16:

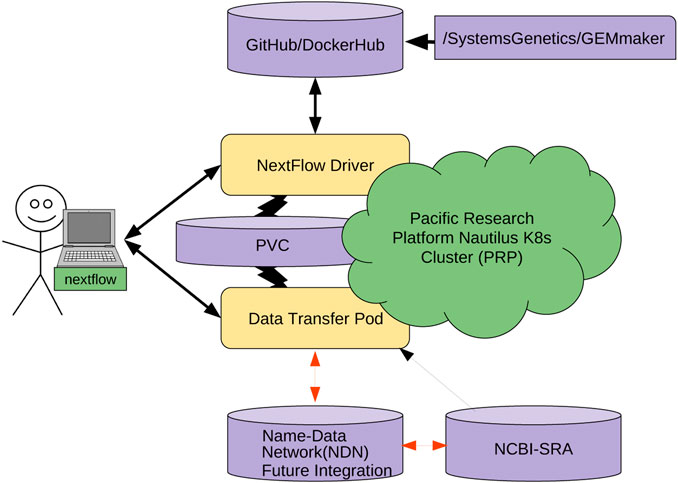

"Named data networking for genomics data management and integrated workflows"

Advanced imaging and DNA sequencing technologies now enable the diverse biology community to routinely generate and analyze terabytes of high-resolution biological data. The community is rapidly heading toward the petascale in single-investigator laboratory settings. As evidence, the National Center for Biotechnology Information (NCBI) Sequence Read Archive (SRA) central DNA sequence repository alone contains over 45 petabytes of biological data. Given the geometric growth of this and other genomics repositories, an exabyte of mineable biological data is imminent. The challenges of effectively utilizing these datasets are enormous, as they are not only large in size but also stored in various geographically distributed repositories such as those hosted by the NCBI, as well as in the DNA Data Bank of Japan (DDBJ), European Bioinformatics Institute (EBI), and NASA’s GeneLab. (Full article...)

Featured article of the week: January 3–9:

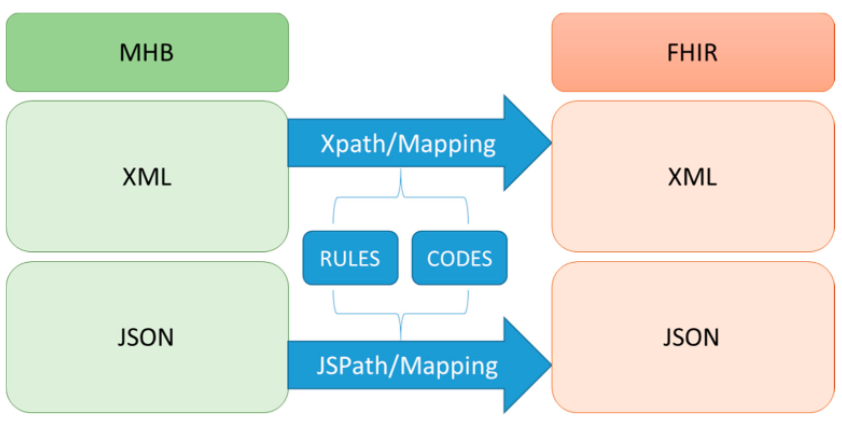

"Implement an international interoperable PHR by FHIR: A Taiwan innovative application"

Personal health records (PHRs) have many benefits for things such as health surveillance, epidemiological surveillance, self-control, links to various services, public health and health management, and international surveillance. The implementation of an international standard for interoperability is essential to accessing PHRs. In Taiwan, the nationwide exchange platform for electronic medical records (EMRs) has been in use for many years. The Health Level Seven International (HL7) Clinical Document Architecture (CDA) was used as the standard for those EMRs. However, the complication of implementing CDA became a barrier for many hospitals to realizing standard EMRs. In this study, we implemented a Fast Healthcare Interoperability Resources (FHIR)-based PHR transformation process, including a user interface module to review the contents of PHRs. (Full article...)
