Featured article of the week: June 20–26:

"Chemozart: A web-based 3D molecular structure editor and visualizer platform"

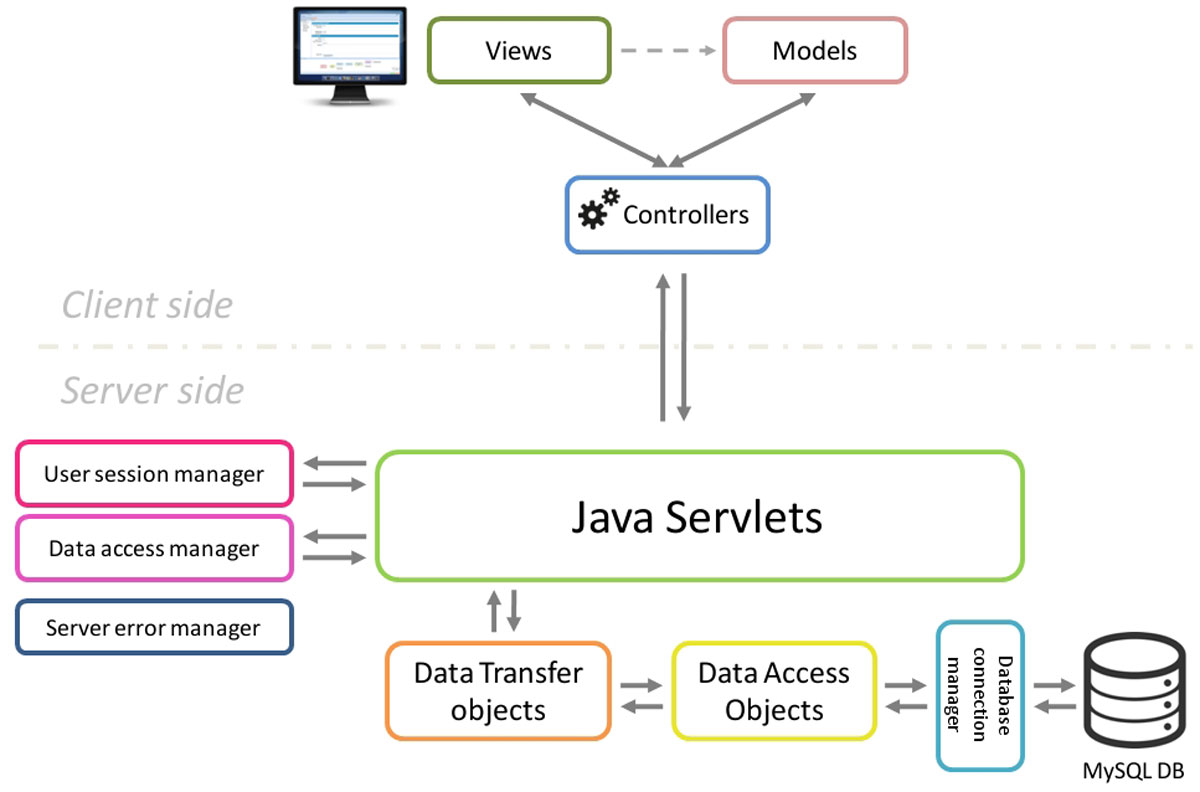

Chemozart is a 3D molecule editor and visualizer built on top of native web components. It offers an easy-to-access service, a user-friendly graphical interface, and a modular design. It is a client-centric web application that communicates with the server via a representational state transfer (REST) style web service. Both the client-side and server-side applications are written in JavaScript. A combination of JavaScript and HTML is used to draw three-dimensional structures of molecules.

With the help of WebGL, a three-dimensional visualization tool is provided. Using CSS3 and HTML5, a user-friendly interface is composed. More than 30 packages are used to compose this application, which makes it flexible enough to be extended. Molecular structures can be drawn on all types of platforms, and the application is compatible with mobile devices. No installation is required in order to use this application, and it can be accessed through the internet. This application can be extended on both the server side and the client side by implementing modules in JavaScript. Molecular compounds are drawn on the HTML5 Canvas element using a WebGL context. (Full article...)

Featured article of the week: June 13–19:

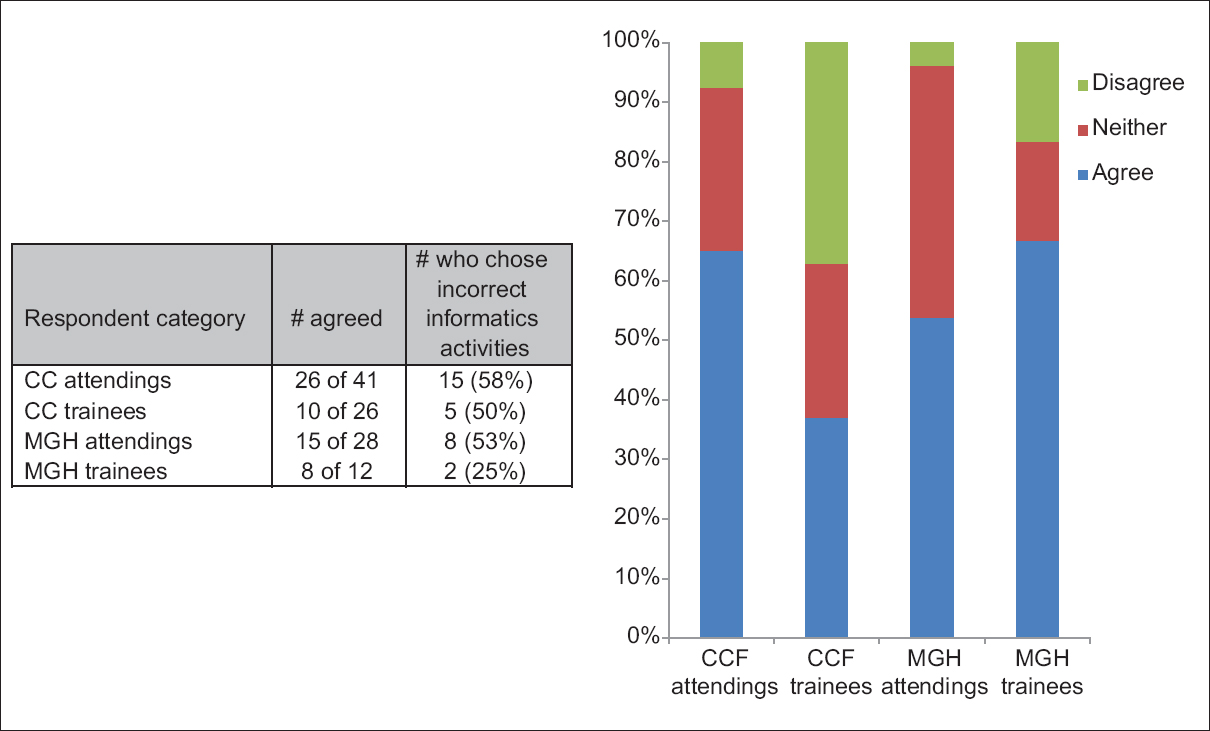

"Perceptions of pathology informatics by non-informaticist pathologists and trainees"

Although pathology informatics (PI) is essential to modern pathology practice, the field is often poorly understood. Pathologists who have received little to no exposure to informatics, either in training or in practice, may not recognize the roles that informatics serves in pathology. The purpose of this study was to characterize perceptions of PI by noninformatics-oriented pathologists and to do so at two large centers with differing informatics environments. Pathology trainees and staff at Cleveland Clinic (CC) and Massachusetts General Hospital (MGH) were surveyed. At MGH, pathology department leadership has promoted a pervasive informatics presence through practice, training, and research. At CC, PI efforts focus on production systems that serve a multi-site integrated health system and a reference laboratory, and on the development of applications oriented to department operations. The survey assessed perceived definition of PI, interest in PI, and perceived utility of PI. (Full article...)

Featured article of the week: June 06–12:

"A pocket guide to electronic laboratory notebooks in the academic life sciences"

Every professional doing active research in the life sciences is required to keep a laboratory notebook. However, while science has changed dramatically over the last centuries, laboratory notebooks have remained essentially unchanged since pre-modern science. We argue that the implementation of electronic laboratory notebooks (ELN) in academic research is overdue, and we provide researchers and their institutions with the background and practical knowledge to select and initiate the implementation of an ELN in their laboratories. In addition, we present data from surveying biomedical researchers and technicians regarding which hypothetical features and functionalities they hope to see implemented in an ELN, and which ones they regard as less important. We also present data on the acceptance and satisfaction of those who have recently switched from a paper laboratory notebook to an ELN. We thus provide answers to the following questions: What does an electronic laboratory notebook afford a biomedical researcher, what does it require, and how should one go about implementing it? (Full article...)

Featured article of the week: May 30–June 05:

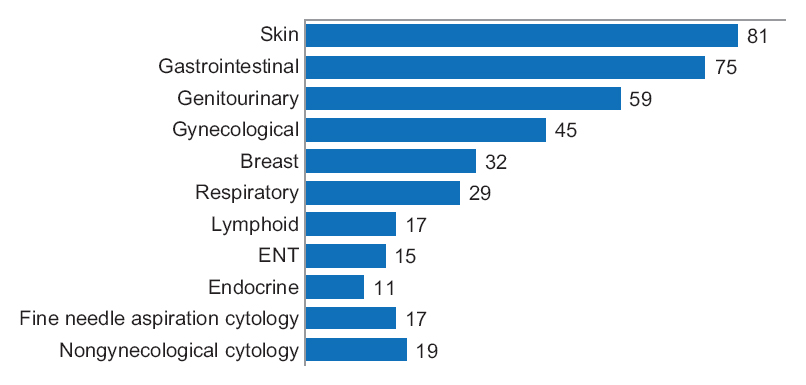

"Diagnostic time in digital pathology: A comparative study on 400 cases"

Numerous validation studies in digital pathology have confirmed its value as a diagnostic tool. However, a longer time to diagnosis than traditional microscopy has been seen as a significant barrier to the routine use of digital pathology. As part of our validation study, we compared digital and microscopic diagnostic time in the routine diagnostic setting.

One senior staff pathologist reported 400 consecutive cases in histology, nongynecological, and fine needle aspiration cytology (20 sessions, 20 cases/session), over 4 weeks. Complex, difficult, and rare cases were excluded from the study to reduce bias. The primary diagnosis was digital, followed by traditional microscopy six months later, with only request forms available for both. Microscopic slides were scanned at ×20, and digital images were accessed through the fully integrated laboratory information management system (LIMS) and viewed in the image viewer on dual 23” displays. (Full article...)

Featured article of the week: May 16–22:

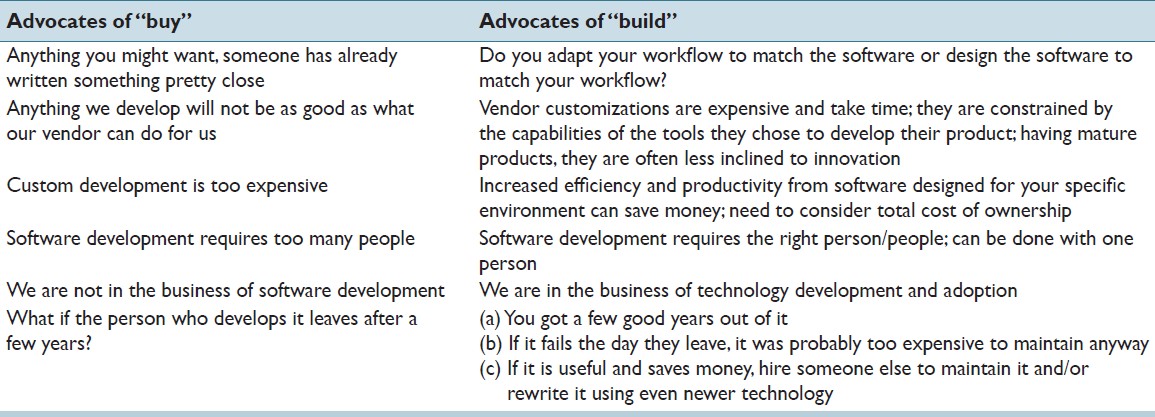

"Custom software development for use in a clinical laboratory"

In-house software development for use in a clinical laboratory is a controversial issue. Many of the objections raised are based on outdated software development practices, an exaggeration of the risks involved, and an underestimation of the benefits that can be realized. Buy versus build analyses typically do not consider total costs of ownership, and unfortunately decisions are often made by people who are not directly affected by the workflow obstacles or benefits that result from those decisions. We have been developing custom software for clinical use for over a decade, and this article presents our perspective on this practice. A complete analysis of the decision to develop or purchase must ultimately examine how the end result will mesh with the departmental workflow, and custom-developed solutions typically can have the greater positive impact on efficiency and productivity, substantially altering the decision balance sheet. (Full article...)

Featured article of the week: May 23–29:

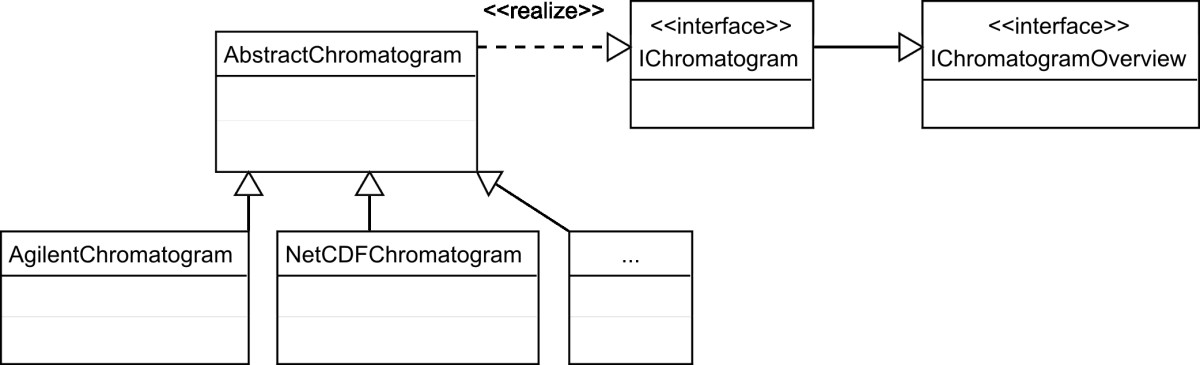

"OpenChrom: A cross-platform open source software for the mass spectrometric analysis of chromatographic data"

Today, data evaluation has become a bottleneck in chromatographic science. Analytical instruments equipped with automated samplers yield large amounts of measurement data, which needs to be verified and analyzed. Since nearly every GC/MS instrument vendor offers its own data format and software tools, the consequences are problems with data exchange and a lack of comparability between the analytical results. To challenge this situation a number of either commercial or non-profit software applications have been developed. These applications provide functionalities to import and analyze several data formats but have shortcomings in terms of the transparency of the implemented analytical algorithms and/or are restricted to a specific computer platform. (Full article...)

Featured article of the week: May 09–15:

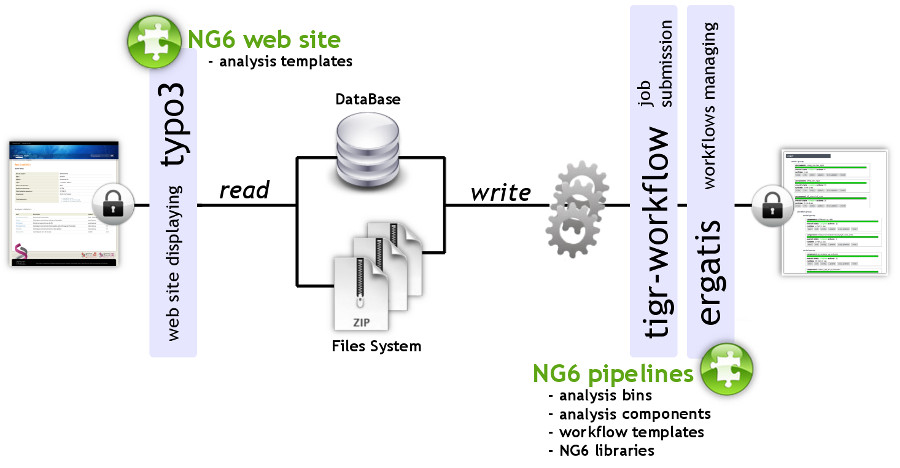

"NG6: Integrated next generation sequencing storage and processing environment"

Next generation sequencing platforms are now well established in sequencing centres and some laboratories. Upcoming smaller scale machines such as the 454 junior from Roche or the MiSeq from Illumina will increase the number of laboratories hosting a sequencer. In such a context, it is important to provide these teams with an easily manageable environment to store and process the produced reads.

We describe a user-friendly information system able to manage large sets of sequencing data. It includes, on one hand, a workflow environment already containing pipelines adapted to different input formats (sff, fasta, fastq and qseq), different sequencers (Roche 454, Illumina HiSeq) and various analyses (quality control, assembly, alignment, diversity studies,…) and, on the other hand, a secured web site giving access to the results. The connected user will be able to download raw and processed data and browse through the analysis result statistics. (Full article...)

Featured article of the week: May 02–08:

"STATegra EMS: An experiment management system for complex next-generation omics experiments"

High-throughput sequencing assays are now routinely used to study different aspects of genome organization. As decreasing costs and widespread availability of sequencing enable more laboratories to use sequencing assays in their research projects, the number of samples and replicates in these experiments can quickly grow to several dozen and thus require standardized annotation, storage and management of preprocessing steps. As a part of the STATegra project, we have developed an Experiment Management System (EMS) for high throughput omics data that supports different types of sequencing-based assays such as RNA-seq, ChIP-seq, Methyl-seq, etc., as well as proteomics and metabolomics data. The STATegra EMS provides metadata annotation of experimental design, samples and processing pipelines, as well as storage of different types of data files, from raw data to ready-to-use measurements. The system has been developed to provide research laboratories with a freely-available, integrated system that offers a simple and effective way for experiment annotation and tracking of analysis procedures. (Full article...)

Featured article of the week: April 25–May 01:

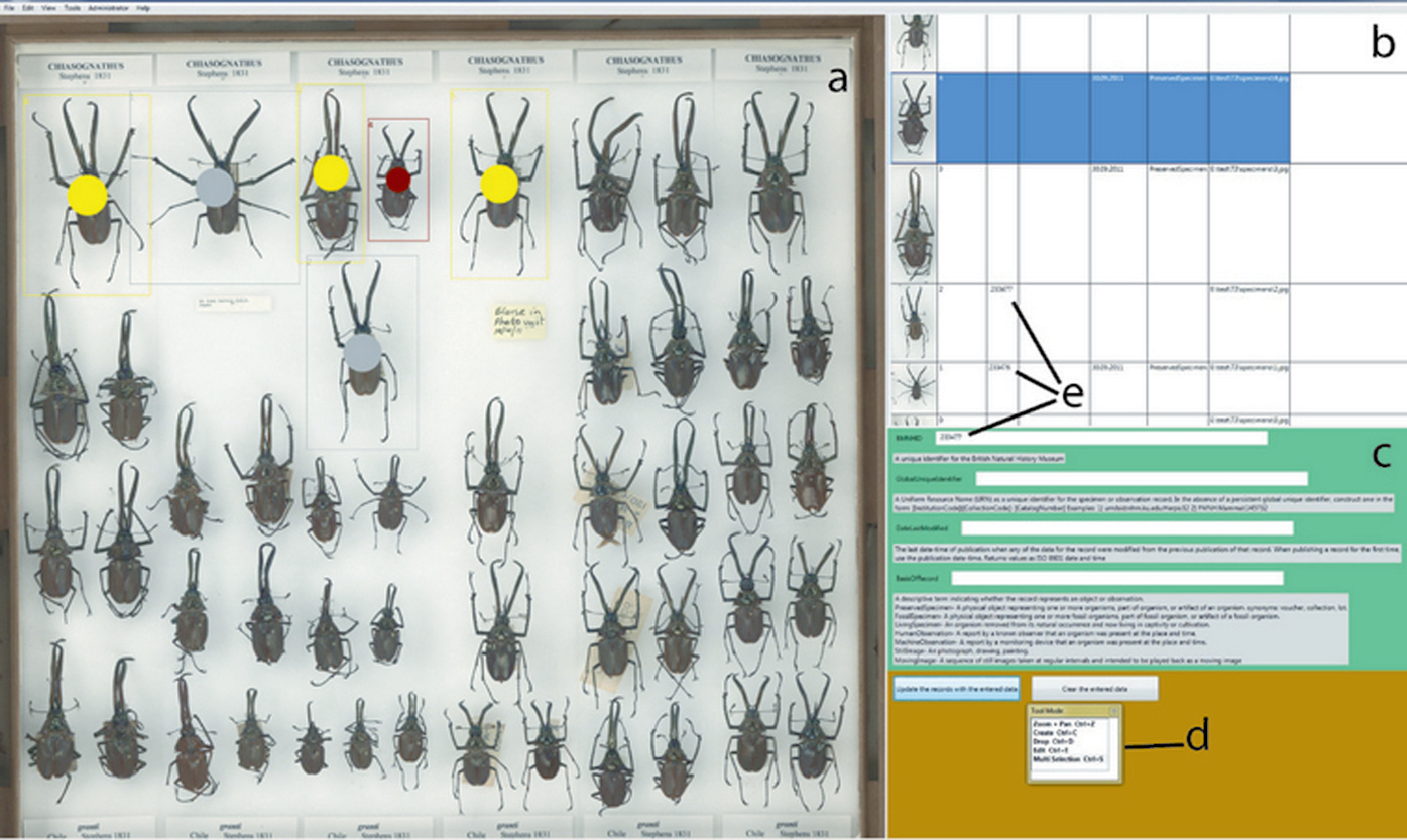

"No specimen left behind: Industrial scale digitization of natural history collections"

Traditional approaches for digitizing natural history collections, which include both imaging and metadata capture, are both labour- and time-intensive. Mass-digitization can only be completed if the resource-intensive steps, such as specimen selection and databasing of associated information, are minimized. Digitization of larger collections should employ an “industrial” approach, using the principles of automation and crowd sourcing, with minimal initial metadata collection including a mandatory persistent identifier. A new workflow for the mass-digitization of natural history museum collections, based on these principles and using the SatScan® tray scanning system, is described. (Full article...)

Featured article of the week: April 18–24:

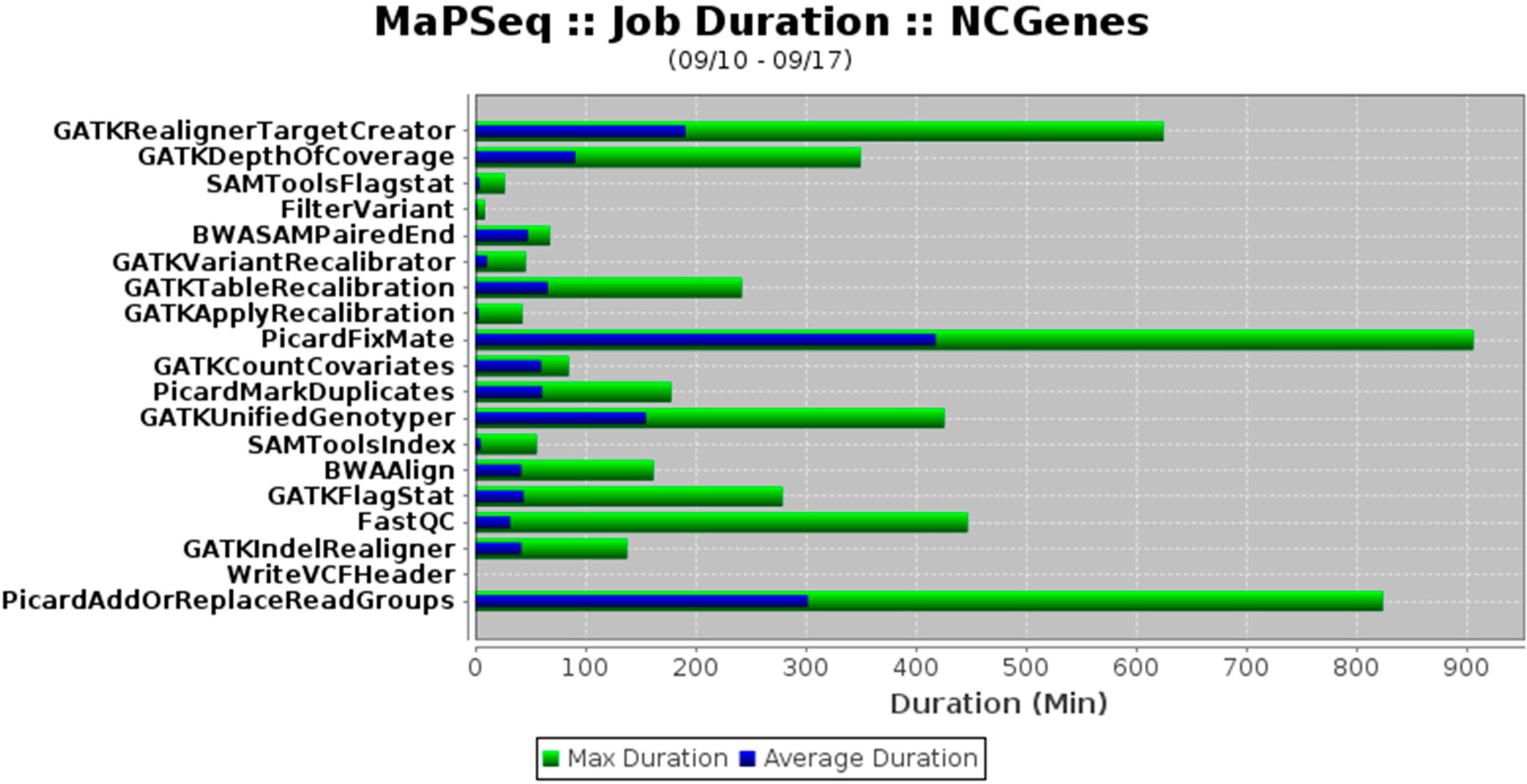

"MaPSeq, a service-oriented architecture for genomics research within an academic biomedical research institution"

Genomics research presents technical, computational, and analytical challenges that are well recognized. Less recognized are the complex sociological, psychological, cultural, and political challenges that arise when genomics research takes place within a large, decentralized academic institution. In this paper, we describe a Service-Oriented Architecture (SOA) — MaPSeq — that was conceptualized and designed to meet the diverse and evolving computational workflow needs of genomics researchers at our large, hospital-affiliated, academic research institution. We present the institutional challenges that motivated the design of MaPSeq before describing the architecture and functionality of MaPSeq. We then discuss SOA solutions and conclude that approaches such as MaPSeq enable efficient and effective computational workflow execution for genomics research and for any type of academic biomedical research that requires complex, computationally-intense workflows. (Full article...)

Featured article of the week: April 11–17:

"Grand challenges in environmental informatics"

We live in an era of environmental deterioration through depletion and degradation of resources such as air, water, and soil; the destruction of ecosystems and the extinction of wildlife. As a matter of fact, environmental degradation is one of three main threats identified in 2004 by the High Level Threat Panel of the United Nations, the other two being poverty and infectious diseases. In particular, air pollution ranked seventh on the worldwide list of risk factors, contributing to approximately three million deaths each year. (Full article...)

Featured article of the week: April 04–10:

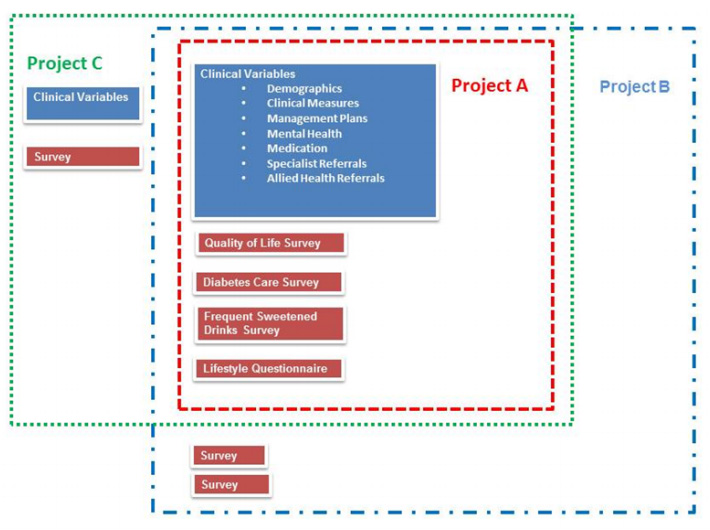

"The development of the Public Health Research Data Management System"

The design and development of the Public Health Research Data Management System highlights how it is possible to construct an information system that allows greater access to well-preserved public health research data to enable it to be reused and shared. The Public Health Research Data Management System (PHRDMS) manages clinical, health service, community and survey research data within a secure web environment. The conceptual model underpinning the PHRDMS is based on three main entities: participant, community and health service. The PHRDMS was designed to provide data management to allow for data sharing and reuse. The system has been designed to enable rigorous research and ensure that, within a structured collection, data that are unmanaged be managed, data that are disconnected be connected, data that are invisible be findable, and data that are single use be reusable. The PHRDMS is currently used by researchers to answer a broad range of policy relevant questions, including monitoring incidence of renal disease, cardiovascular disease, diabetes and mental health problems in different risk groups. (Full article...)

Featured article of the week: March 28–April 03:

"The need for informatics to support forensic pathology and death investigation"

As a result of their practice of medicine, forensic pathologists create a wealth of data regarding the causes of and reasons for sudden, unexpected or violent deaths. These data have been effectively used to protect the health and safety of the general public in a variety of ways despite current and historical limitations. These limitations include the lack of data standards between the thousands of death investigation (DI) systems in the United States, rudimentary electronic information systems for DI, and the lack of effective communications and interfaces between these systems. Collaboration between forensic pathology and clinical informatics is required to address these shortcomings, and a path forward has been proposed that will enable forensic pathology to maximize its effectiveness by providing timely and actionable information to public health and public safety agencies. (Full article...)

Featured article of the week: March 21–27:

"Efficient sample tracking with OpenLabFramework"

The advance of new technologies in biomedical research has led to a dramatic growth in experimental throughput. Projects therefore steadily grow in size and involve a larger number of researchers. Spreadsheets traditionally used are thus no longer suitable for keeping track of the vast amounts of samples created and need to be replaced with state-of-the-art laboratory information management systems. Such systems have been developed in large numbers, but they are often limited to specific research domains and types of data. One domain so far neglected is the management of libraries of vector clones and genetically engineered cell lines. OpenLabFramework is a newly developed web-application for sample tracking, particularly laid out to fill this gap, but with an open architecture allowing it to be extended for other biological materials and functional data. Its sample tracking mechanism is fully customizable and aids productivity further through support for mobile devices and barcoded labels. (Full article...)

Featured article of the week: March 14–20:

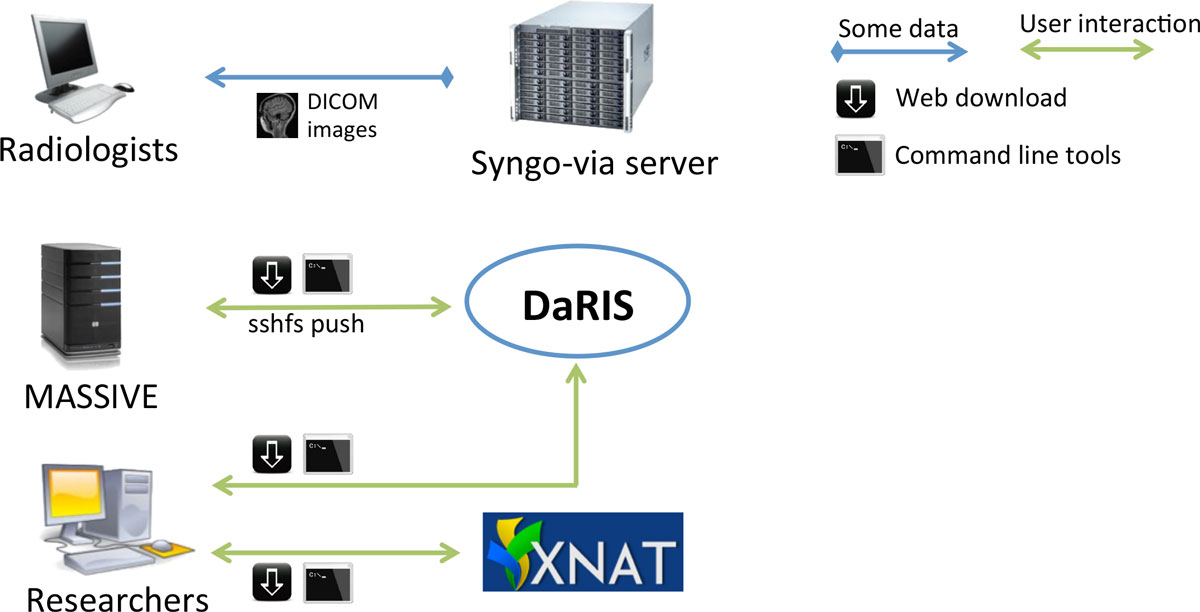

"Design, implementation and operation of a multimodality research imaging informatics repository"

Biomedical imaging research increasingly involves acquiring, managing and processing large amounts of distributed imaging data. Integrated systems that combine data, meta-data and workflows are crucial for realising the opportunities presented by advances in imaging facilities. This paper describes the design, implementation and operation of a multi-modality research imaging data management system that manages imaging data obtained from biomedical imaging scanners operated at Monash Biomedical Imaging (MBI), Monash University in Melbourne, Australia. In addition to Digital Imaging and Communications in Medicine (DICOM) images, raw data and non-DICOM biomedical data can be archived and distributed by the system. Imaging data are annotated with meta-data according to a study-centric data model and, therefore, scientific users can find, download and process data easily. The research imaging data management system ensures long-term usability, integrity, inter-operability and integration of large imaging data. Research users can securely browse and download stored images and data, and upload processed data via subject-oriented informatics frameworks including the Distributed and Reflective Informatics System (DaRIS) and the Extensible Neuroimaging Archive Toolkit (XNAT). (Full article...)

Featured article of the week: March 07–13:

Forensic science (often shortened to forensics) is the application of a broad spectrum of sciences, from anthropology to toxicology, to answer questions of interest to a legal system. During the course of an investigation, forensic scientists collect, preserve, and analyze scientific evidence using a variety of specialized laboratory equipment and techniques. In addition to their laboratory role, forensic scientists may also testify as expert witnesses in both criminal and civil cases and can work for either the prosecution or the defense.

Much of the work of forensic science is conducted in the forensic laboratory. Such a laboratory has many similarities to a traditional clinical or research lab in that it contains various lab instruments and several areas set aside for different tasks. However, it differs in other ways. Windows, for example, represent a point of entry into a forensic lab, which must be secure as it contains evidence of crimes. (Full article...)

Featured article of the week: February 29–March 06:

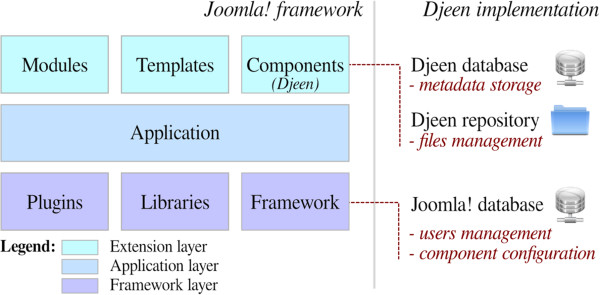

"Djeen (Database for Joomla!’s Extensible Engine): A research information management system for flexible multi-technology project administration"

With the advance of post-genomic technologies, the need for tools to manage large scale data in biology becomes more pressing. This involves annotating and storing data securely, as well as granting permissions flexibly with several technologies (all array types, flow cytometry, proteomics) for collaborative work and data sharing. This task is not easily achieved with most systems available today.

We developed Djeen (Database for Joomla!’s Extensible Engine), a new Research Information Management System (RIMS) for collaborative projects. Djeen is a user-friendly application, designed to streamline data storage and annotation collaboratively. Its database model, kept simple, is compliant with most technologies and allows storing and managing of heterogeneous data with the same system. Advanced permissions are managed through different roles. Templates allow Minimum Information (MI) compliance. (Full article...)

Featured article of the week: February 22–28:

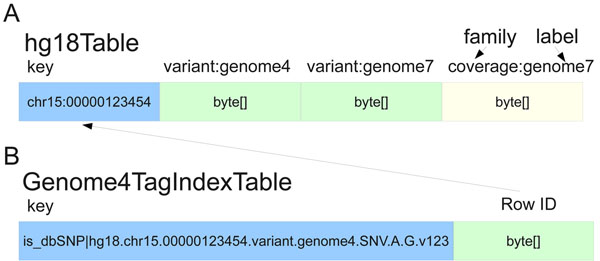

"SeqWare Query Engine: Storing and searching sequence data in the cloud"

Since the introduction of next-generation DNA sequencers, the rapid increase in sequencer throughput, and the associated drop in costs, has resulted in more than a dozen human genomes being resequenced over the last few years. These efforts are merely a prelude for a future in which genome resequencing will be commonplace for both biomedical research and clinical applications. The dramatic increase in sequencer output strains all facets of computational infrastructure, especially databases and query interfaces. The advent of cloud computing, and a variety of powerful tools designed to process petascale datasets, provide a compelling solution to these ever-increasing demands.

In this work, we present the SeqWare Query Engine which has been created using modern cloud computing technologies and designed to support databasing information from thousands of genomes. Our backend implementation was built using the highly scalable, NoSQL HBase database from the Hadoop project. We also created a web-based frontend that provides both a programmatic and interactive query interface and integrates with widely used genome browsers and tools. (Full article...)

Featured article of the week: February 15–21:

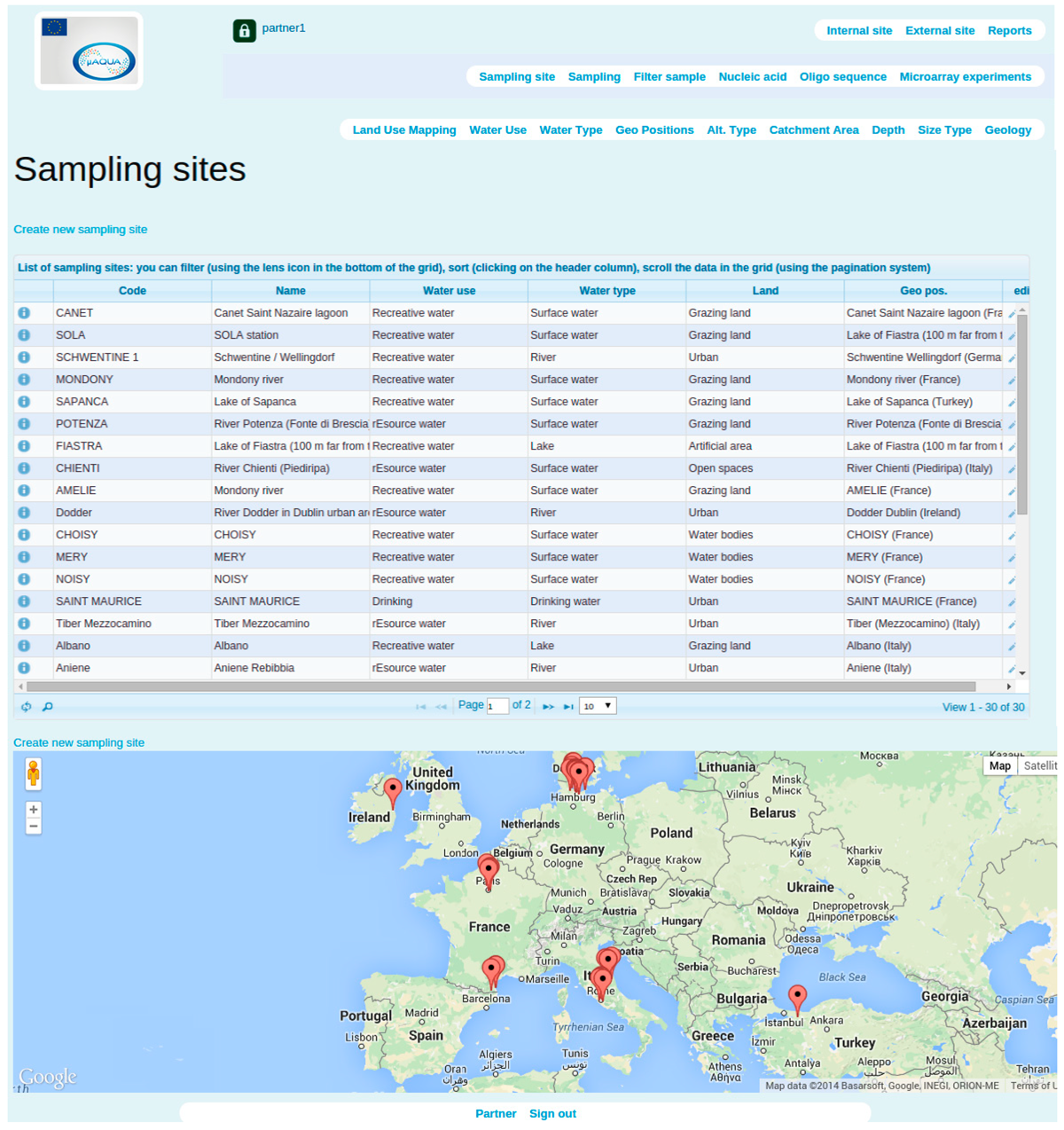

"SaDA: From sampling to data analysis—An extensible open source infrastructure for rapid, robust and automated management and analysis of modern ecological high-throughput microarray data"

One of the most crucial characteristics of day-to-day laboratory information management is the collection, storage and retrieval of information about research subjects and environmental or biomedical samples. An efficient link between sample data and experimental results is essential for the successful outcome of a collaborative project. Currently available software solutions are largely limited to large scale, expensive commercial Laboratory Information Management Systems (LIMS). Acquiring such a LIMS can indeed bring laboratory information management to a higher level, but most of the time this requires a sufficient investment of money, time and technical effort. There is a clear need for a lightweight open source system which can easily be managed on local servers and handled by individual researchers. Here we present software named SaDA for storing, retrieving and analyzing data originating from microorganism monitoring experiments. SaDA is fully integrated in the management of environmental samples, oligonucleotide sequences, microarray data and the subsequent downstream analysis procedures. It is simple and generic software, and can be extended and customized for various environmental and biomedical studies. (Full article...)

Featured article of the week: January 25–31:

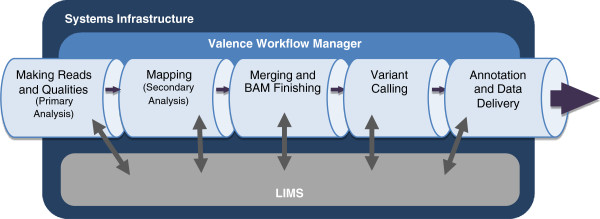

"Launching genomics into the cloud: Deployment of Mercury, a next generation sequence analysis pipeline"

Massively parallel DNA sequencing generates staggering amounts of data. Decreasing cost, increasing throughput, and improved annotation have expanded the diversity of genomics applications in research and clinical practice. This expanding scale creates analytical challenges: accommodating peak compute demand, coordinating secure access for multiple analysts, and sharing validated tools and results.

To address these challenges, we have developed the Mercury analysis pipeline and deployed it on local hardware and in the Amazon Web Services cloud via the DNAnexus platform. Mercury is an automated, flexible, and extensible analysis workflow that provides accurate and reproducible genomic results at scales ranging from individuals to large cohorts.

By taking advantage of cloud computing and with Mercury implemented on the DNAnexus platform, we have demonstrated a powerful combination of a robust and fully validated software pipeline and a scalable computational resource that, to date, we have applied to more than 10,000 whole genome and whole exome samples. (Full article...)

Featured article of the week: January 18–24:

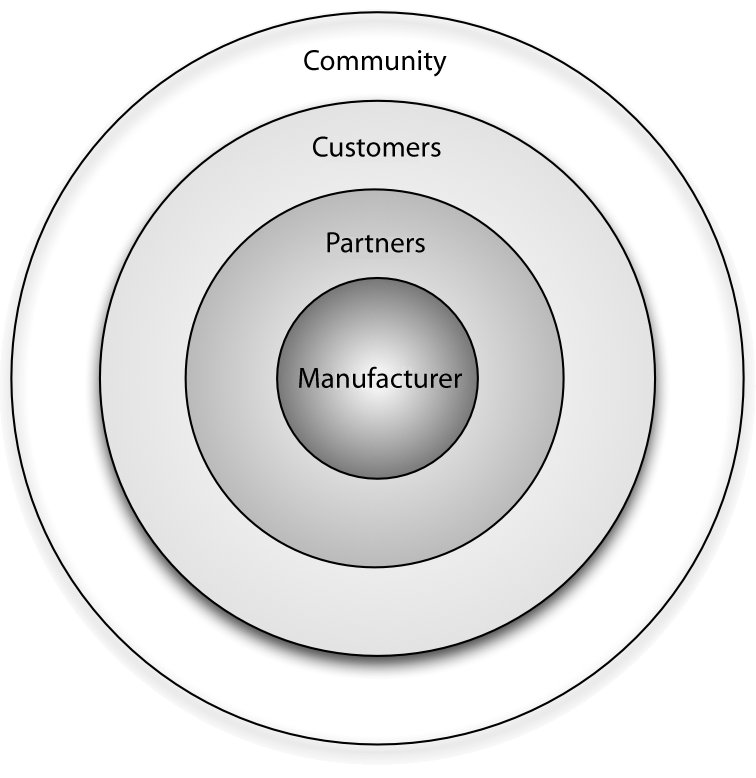

"Benefits of the community for partners of open source vendors"

Open source vendors can benefit from business ecosystems that form around their products. Partners of such vendors can utilize this ecosystem for their own business benefit by understanding the structure of the ecosystem, the key actors and their relationships, and the main levers of profitability. This article provides information on all of these aspects and identifies common business scenarios for partners of open source vendors. Armed with this information, partners can select a strategy that allows them to participate in the ecosystem while also maximizing their gains and driving adoption of their product or solution in the marketplace.

Every free/libre open source software (F/LOSS) vendor strives to create a business ecosystem around its software product. Doing this offers two primary advantages from a sales and marketing perspective: i) it increases the viability and longevity of the product in both commercial and communal spaces, and ii) it opens up new channels for communication and innovation. (Full article...)

Featured article of the week: January 11–17:

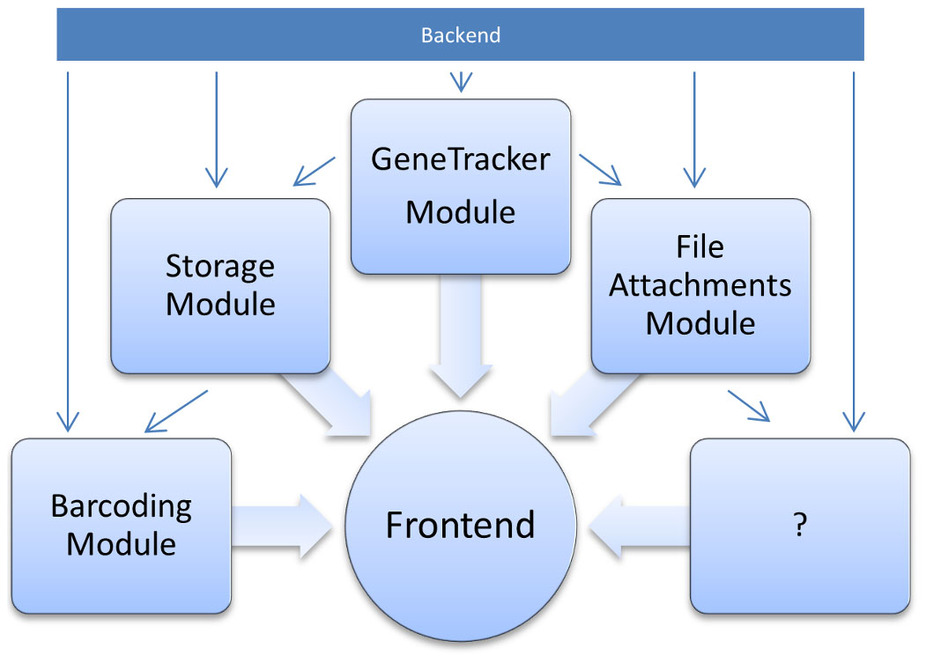

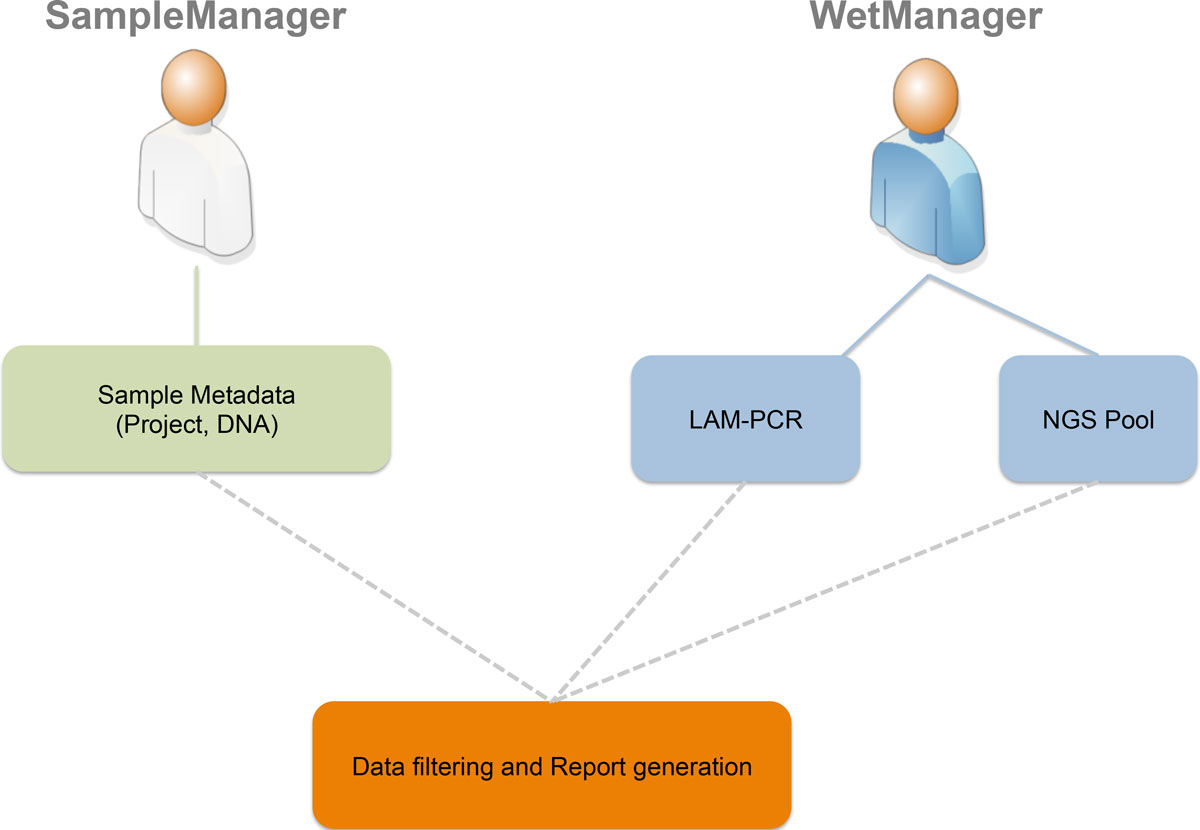

"adLIMS: A customized open source software that allows bridging clinical and basic molecular research studies"

Many biological laboratories that deal with genomic samples are facing the problem of sample tracking, both for pure laboratory management and for efficiency. Our laboratory exploits PCR techniques and Next Generation Sequencing (NGS) methods to perform high-throughput integration site monitoring in different clinical trials and scientific projects. Because of the huge number of samples that we process every year, which result in hundreds of millions of sequencing reads, we need to standardize data management and tracking systems, building up a scalable and flexible structure with web-based interfaces, which is usually called a Laboratory Information Management System (LIMS).

We extended and customized ADempiere ERP to fulfill LIMS requirements, and we developed adLIMS. It has been validated by our end-users, who verified functionalities and GUIs through test cases for PCR samples and pre-sequencing data, and it is currently in use in our laboratories. adLIMS implements authorization and authentication policies, allowing multi-user management and role definitions that enable specific permissions, operations and data views for each user. (Full article...)

Featured article of the week: January 04–10:

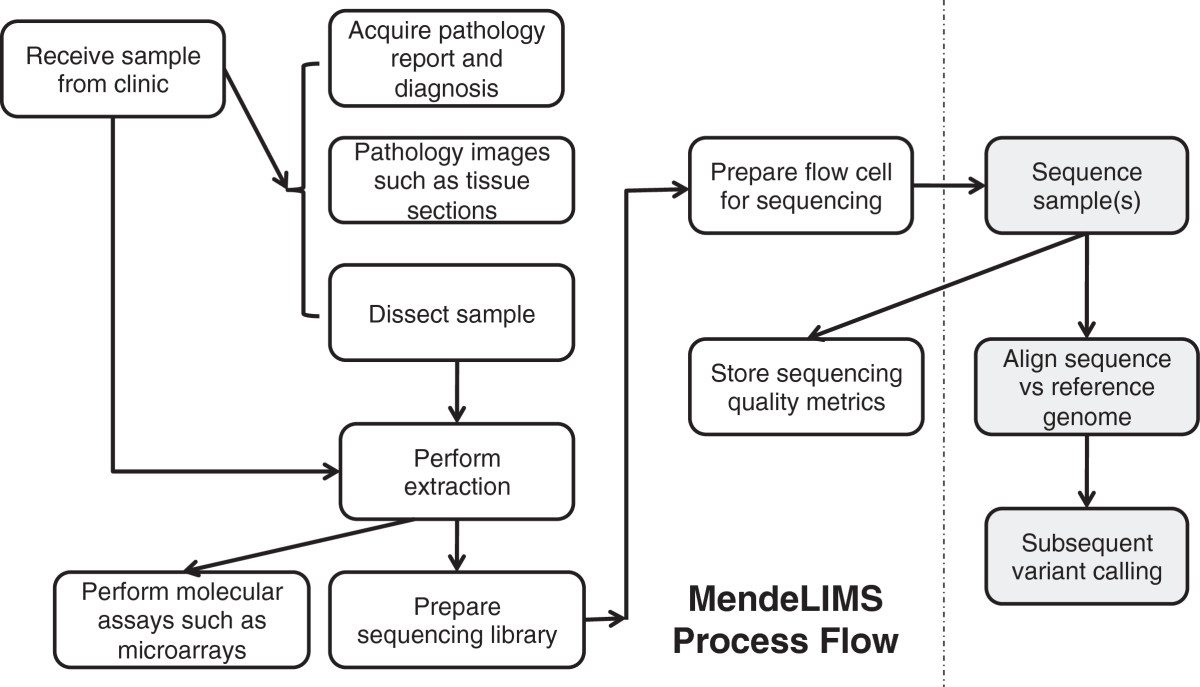

"MendeLIMS: A web-based laboratory information management system for clinical genome sequencing"

Large clinical genomics studies using next generation DNA sequencing require the ability to select and track samples from a large population of patients through many experimental steps. With the number of clinical genome sequencing studies increasing, it is critical to maintain adequate laboratory information management systems to manage the thousands of patient samples that are subject to this type of genetic analysis.

To meet the needs of clinical population studies using genome sequencing, we developed a web-based laboratory information management system (LIMS) with a flexible configuration that is adaptable to continuously evolving experimental protocols of next generation DNA sequencing technologies. Our system, referred to as MendeLIMS, is easily implemented with open source tools and is also highly configurable and extensible. MendeLIMS has been invaluable in the management of our clinical genome sequencing studies. (Full article...)
