LII:Medical Device Software Development with Continuous Integration/Continuous Integration

Should I use continuous integration builds?

Yes, a software project should have continuous integration (CI) builds. This goes for projects with a large team as well as projects with a small team. While the CI build is very useful for a large group to collaborate, there is tremendous value even for the smallest team. Even a software engineer working alone can benefit from a continuous integration build.

Why is continuous integration a necessity?

First, a reminder of the definition we're using:

In software engineering, continuous integration (CI) implements continuous processes of applying quality control — small pieces of effort, applied frequently. Continuous integration aims to improve the quality of software, and to reduce the time taken to deliver it, by replacing the traditional practice of applying quality control after completing all development.[1]

A continuous integration (CI) tool is no longer simply something that is "nice to have" during project development. As someone who has spent more time than I care to discuss wading through documents and making sure references, traceability, document versions, and design outputs are properly documented in a design history file (DHF), I hope to make clear the value of using CI to automate such tedious and error-prone manual labor. CI shouldn’t be thought of as "nice to have." On the contrary, it is an absolute necessity!

When I say that continuous integration is an absolute necessity, I mean that both the CI tool and the process are needed. A CI tool takes the attempts (sometimes feeble) of humans to make large amounts of documentation consistently traceable and lets the computer system do what it does best. Using a CI tool is not an esoteric practice reserved for enthusiasts. As you will learn in this chapter, using software tools to ease the continuous integration process is something that good development teams have always attempted but have too often failed to do. Going a step further, development teams can use CI tools to simplify steps that they may never have dreamed of before!

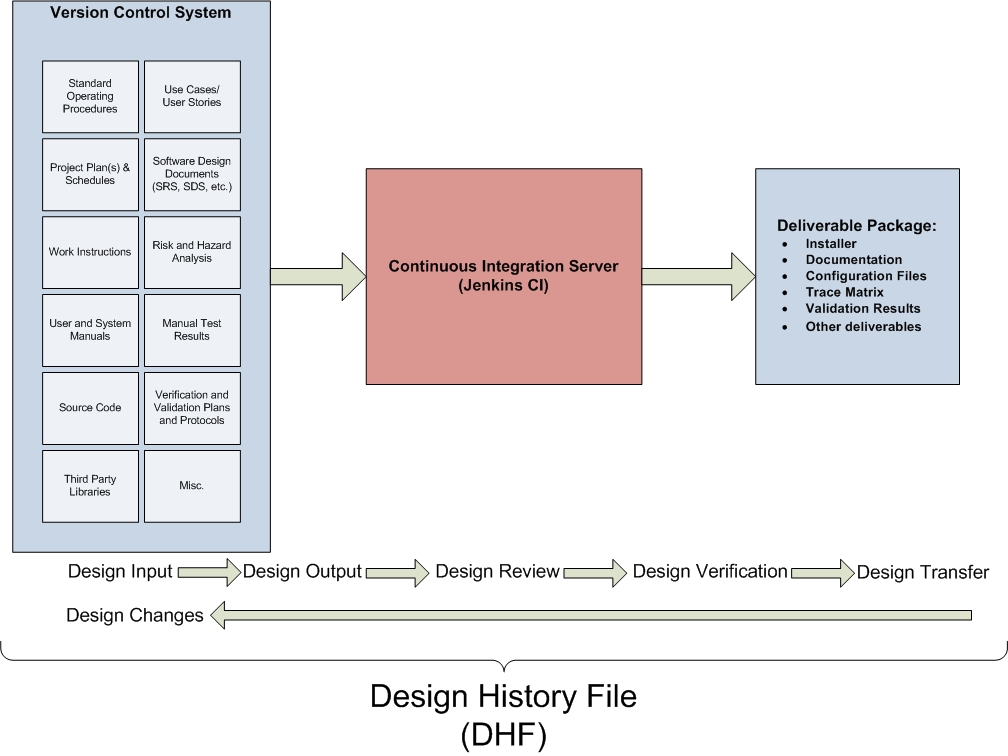

Continuous integration refers both to the continuous compiling and building of a project tree and to the continuous testing, releasing, and quality control of the project as a whole. This means that throughout the project, at every stage, the development team will have a build available with at least partial documentation and testing included. The CI build is used to perform certain tasks at certain times, and those builds should be performed in an environment that closely matches the actual production environment of the system. In addition, a CI environment should provide statistical feedback on build performance and test results, and it should be integrated with the version control system and the ticketing system. In a development environment, the team may use a version control tool (e.g., Subversion) whose changesets are linked to tickets. As such, any CI build will be linked to a specific changeset, thereby providing linkage to issues, requirements, and, ultimately, the trace matrix. To this end, the CI environment, used properly, can be the DHF.
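To make the build-to-requirement linkage concrete, here is a minimal Python sketch. It assumes (hypothetically) that the CI tool records the Subversion revision each build was made from, that commit messages have already been parsed into ticket numbers, and that each ticket optionally names the requirement it traces to. None of these record types come from Jenkins, Subversion, or a particular ticketing system.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


# Hypothetical, simplified records. Real data would come from the CI tool,
# the Subversion log, and the ticketing system.
@dataclass
class TicketRecord:
    number: int
    subject: str
    requirement: Optional[str] = None  # e.g. "SRS-12", if the ticket traces to one


@dataclass
class ChangesetRecord:
    revision: int
    message: str
    tickets: List[int] = field(default_factory=list)  # ticket numbers cited in the commit


@dataclass
class BuildRecord:
    number: int
    revision: int  # the Subversion changeset this CI build was made from


def trace_build(build: BuildRecord,
                changesets: Dict[int, ChangesetRecord],
                tickets: Dict[int, TicketRecord]) -> List[str]:
    """Follow build -> changeset -> tickets -> requirements."""
    changeset = changesets[build.revision]
    requirements = {
        tickets[n].requirement
        for n in changeset.tickets
        if tickets[n].requirement is not None
    }
    return sorted(requirements)
```

Repeating the same walk over every build in the CI history is one way the trace matrix could be regenerated rather than maintained by hand.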

A development team should run continuous integration builds frequently enough that additional commits do not pile up between a commit and the build that tests it, and that no error can arise without developers noticing and correcting it immediately. For a project that is in development, this means the CI tool should be configured so that each check-in triggers a build promptly. Likewise, it is generally good practice for the developer who commits a changeset to verify that his or her own changeset does not break the continuous integration build.

Allow me to address the word "build." Most software engineers think of a build as the output of compiling and linking. I suggest moving away from this narrow definition and expanding it. A "build" is a completion (in both the compiler sense and beyond) of all things necessary for a successful product delivery. A CI tool runs whatever scripts the development team tells it to run. As such, the team has the freedom to use the CI tool as a build manager (sorry build managers, I don’t mean to threaten your job). It can compile code, create an installer, bundle any and all documents, create release notes, run tests, and alert team members about its progress.
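If a build is everything needed for delivery, a CI job is essentially an ordered list of scripts that stops at the first failure. The Python sketch below illustrates that idea; the stage names and placeholder commands (each just runs a trivial Python snippet) are hypothetical stand-ins for real steps such as an Ant or Make target, an installer script, or a document bundler.

```python
import subprocess
import sys
from typing import List, Sequence, Tuple

# A stage is a display name plus the command line to run.
Stage = Tuple[str, List[str]]

# Hypothetical placeholder stages; replace each command with a real script.
EXAMPLE_STAGES: List[Stage] = [
    ("compile", [sys.executable, "-c", "print('compiling')"]),
    ("unit tests", [sys.executable, "-c", "print('running tests')"]),
    ("installer", [sys.executable, "-c", "print('building installer')"]),
    ("documents", [sys.executable, "-c", "print('bundling documents')"]),
    ("release notes", [sys.executable, "-c", "print('writing release notes')"]),
]


def run_pipeline(stages: Sequence[Stage]) -> Tuple[bool, List[str]]:
    """Run each stage in order and stop at the first non-zero exit code.

    Returns (succeeded, names of the stages that were started).
    """
    started: List[str] = []
    for name, command in stages:
        started.append(name)
        result = subprocess.run(command)
        if result.returncode != 0:
            return False, started
    return True, started


if __name__ == "__main__":
    ok, ran = run_pipeline(EXAMPLE_STAGES)
    print("SUCCESS" if ok else f"FAILED at stage: {ran[-1]}")
```

Alerting team members would then be one more stage (or a post-build step) keyed off the returned status.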

Jenkins CI

While there are many tools, I will focus on one of the most popular, Jenkins CI. The open-source tool Jenkins CI (the continuation of a product formerly called Hudson) allows continuous integration builds in the following ways:

- It integrates with popular build tools (ant, maven, make) so that it can run the appropriate build scripts to compile, test, and package within an environment that closely matches what will be the production environment.

- It integrates with version control tools, including Subversion, so that different projects can be set up depending on their location within the repository trunk.

- It can be configured to trigger builds automatically by time and/or changeset (i.e. if a new changeset is detected in the Subversion repository for the project, a new build is triggered).

- It reports on build status. If the build is broken, it can be configured to alert individuals by email.
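The trigger rules in the list above (build on a new changeset, or on a timer) can be sketched as a single decision function. This illustrates the logic only and is not Jenkins' implementation; in Jenkins itself you would configure the equivalent through the job's build triggers. The 24-hour default interval is an assumption.

```python
from datetime import datetime, timedelta
from typing import Optional


def should_trigger(last_built_revision: Optional[int],
                   latest_revision: int,
                   last_build_time: Optional[datetime],
                   now: datetime,
                   interval: timedelta = timedelta(hours=24)) -> bool:
    """Decide whether a poll of the repository should start a new build."""
    if last_built_revision is None or last_build_time is None:
        return True  # the job has never been built
    if latest_revision > last_built_revision:
        return True  # a new changeset has been committed since the last build
    return now - last_build_time >= interval  # a timed (e.g. nightly) build is due
```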

The above screenshot gives an example of what a main page for Jenkins CI (or any CI tool) may look like. It can be configured to allow logins at various levels and on a per-project basis. This main page lists all the projects that are currently active, along with a status (a few details about the build) and some configuration links on the side. These links may not be available to a general user.

Clicking any project ("job") leads to further details on its build history and status. The image gives an idea of what the overview screen in a CI environment might look like, but it is at the detailed project level that we see the real benefit of the packaging that a well-configured CI environment can perform.

What's so great about a CI build?

Tracing of builds to a changeset

Okay, so I’m starting to sound repetitive, but tracing is a good thing, and with this setup I can trace all over the place! With the entire process in place (from standard operating procedures to work instructions, and from use cases and requirements to tickets and changesets), how do we know that the team members working on a project are actually following procedure? The CI environment, at least with regard to the ongoing development of code, gives us a single point of view onto all other activities. From here we can see changesets, tickets, build status, and test coverage. Using the proper add-ons, we can even gain insight into the quality of the code that is being developed.

To pull this off, of course, we need to use our ticketing system wisely. With Redmine, or just about any other good ticketing system, we can capture elements of software requirements and software design as parent tickets. Each parent ticket has one or more sub-tickets, which themselves can have sub-tickets. A parent ticket may not be closed or marked complete until all of its child tickets are completed.
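The closing rule for parent tickets is simple enough to state in code. Here is a minimal Python sketch of a ticket tree in which a parent can be closed only after every child (and every child's children) is closed. The `Ticket` class is hypothetical; how a particular ticketing system enforces this rule varies.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Ticket:
    number: int
    subject: str
    closed: bool = False
    children: List["Ticket"] = field(default_factory=list)

    def can_close(self) -> bool:
        # Every child must be closed, checked recursively down the tree.
        return all(child.closed and child.can_close() for child in self.children)

    def close(self) -> None:
        if not self.can_close():
            raise ValueError(f"#{self.number} still has open child tickets")
        self.closed = True
```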

Immediate feedback

At its most basic level, Jenkins CI only does one thing: it runs whatever scripts we tell it to. The power of Jenkins CI is that we can tell it to run whatever we want, log the outcome, keep build artifacts, run third-party evaluation tools, and report on results. With Subversion integration, Jenkins CI will display the changeset relevant to a particular build. It can be configured to generate a build at whatever interval we want (nightly, hourly, every time there is a code commit, etc.).

Personally, every time I make a code commit of any significance, one of the first things I do is check the CI build for success. If I’ve broken the build, I get to work on correcting the problem (and if I cannot correct it quickly, I roll back my changeset so that the CI build keeps working until I’ve fixed the issue).
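Checking the CI build after a commit can be done from the browser, but Jenkins also exposes build information as JSON through its remote access API (appending `/api/json` to a build's URL). The Python sketch below reads the `building` and `result` fields of a job's last build. The server URL and job name are placeholders, authentication is ignored, and the job is assumed to be a top-level job rather than one inside a folder.

```python
import json
import urllib.parse
import urllib.request


def build_status(payload: dict) -> str:
    """Summarize a Jenkins build JSON payload as a single word."""
    if payload.get("building"):
        return "IN PROGRESS"  # the result is null while the build is running
    return payload.get("result") or "UNKNOWN"


def fetch_last_build(jenkins_url: str, job: str) -> dict:
    """Fetch the last build of a top-level job (needs network access)."""
    url = (f"{jenkins_url.rstrip('/')}/job/"
           f"{urllib.parse.quote(job)}/lastBuild/api/json")
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.load(response)


# Example (hypothetical server and job name):
# print(build_status(fetch_last_build("https://ci.example.com", "my-project")))
```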

Jenkins can be configured to email team members on build results, and it may be useful to set it up so that developers are emailed should a build break.
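One reasonable email policy is to write only when the build breaks, stays broken, or is fixed, and to address the people who committed since the last good build. The Python sketch below encodes that policy; the category names and the recipient rule are assumptions for illustration, not settings of Jenkins' mailer or of any plugin.

```python
from typing import Iterable, List, Optional


def notification(previous: Optional[str], current: str) -> Optional[str]:
    """Classify a change in build result; None means no email is needed."""
    if current == "SUCCESS":
        # Only announce success when it ends a broken streak.
        return "FIXED" if previous not in (None, "SUCCESS") else None
    if previous in (None, "SUCCESS"):
        return "BROKEN"
    return "STILL FAILING"


def recipients(committers_since_last_success: Iterable[str],
               always_notify: Iterable[str]) -> List[str]:
    """Committers who may have broken the build, plus anyone who always gets mail."""
    return sorted(set(committers_since_last_success) | set(always_notify))
```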

Central build location

The build artifacts of your project, in general, should not be a part of the repository (there are exceptions to this rule). Build artifacts belong in your continuous integration build tool, where they can be viewed and used by anyone on the team who needs them. These artifacts include test results, compiled libraries, and executables. Too often a released build is created locally on some developer’s machine. This is a serious problem since we have no good way of knowing what files were actually used to create that build. Was there a configuration change? A bug introduced? An incorrect file version?

While developers have good reason to generate and test builds locally, a formal build used for testing (or, more importantly, for release) must never be created locally. Never.

Build artifacts are not checked in to the source control repository for a number of reasons, but the biggest reason is that we never want to make assumptions about the environment in which those items were built. The build artifacts should instead remain in our CI environment, where we know the conditions under which the build was generated.

Also, because these builds remain easily accessible and labeled in the CI build environment, any team member can easily access any given build. In particular, it may become necessary to use a specific build to recreate an issue in a build that has been released for internal or external use. Because we know the label of the build (the version number given to it) as well as the repository changeset number of the build (because our build and install scripts include it), we know precisely which build to pull from the CI build server to recreate the necessary conditions.
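Because every archived build carries both a version label and a changeset number, retrieving "the build that was released as 1.0.3" becomes a lookup rather than an investigation. Here is a minimal Python sketch of such an index. The `ArchivedBuild` fields, the one-build-per-version rule, and the file names are assumptions; in practice the CI server's own build history and archived artifacts play this role.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class ArchivedBuild:
    build_number: int
    version: str                # release label, e.g. "1.0.3"
    revision: int               # Subversion changeset the build was made from
    artifacts: Tuple[str, ...]  # files kept by the CI server for this build


class BuildArchive:
    def __init__(self) -> None:
        self._by_version: Dict[str, ArchivedBuild] = {}

    def record(self, build: ArchivedBuild) -> None:
        # Assumed rule: a version label identifies exactly one archived build.
        if build.version in self._by_version:
            raise ValueError(f"version {build.version} is already archived")
        self._by_version[build.version] = build

    def find(self, version: str) -> ArchivedBuild:
        return self._by_version[version]

    def built_from(self, revision: int) -> List[ArchivedBuild]:
        return [b for b in self._by_version.values() if b.revision == revision]
```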

Unit tests are run over and over (and over)

Developers should do whatever they can to keep the CI build from breaking. Of course, this doesn’t always work well. I’ve broken the CI build countless times for a number of reasons:

- I forgot to add a necessary library or new file.

- I forgot to commit a configuration change.

- I accidentally included an unrelated change in my changeset.

- It worked locally but not when built on the CI build server (this is why the CI build server should, as much as possible, mimic the production environment).

- Unit tests worked locally but not on the CI build server because of some environmental difference.

If not for a CI build environment with good unit tests, such problems would only be discovered at a later time or by some other frustrated team member. In this regard, the CI build saves us from many headaches.

The most difficult software defects to fix (much less find) are the ones that do not happen consistently. Database locking issues, memory issues, and race conditions can result in such defects. These defects are serious, but if we never detect them, how can we fix them?

It’s a good idea to have tests that go several steps beyond what we traditionally think of as unit tests, such as automated functional tests. This is another one of those areas where team members often (incorrectly) feel that there is not sufficient time to do all the work.

With Jenkins running all of these tests every time a build is performed, we sometimes encounter situations where a test fails suddenly for no apparent reason. It worked before, an hour ago, and there has not been any change to the code, so what caused it to fail this time? Pay attention, because this WILL happen.
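When a test fails with no change to the code, the CI history itself is evidence. A simple heuristic, sketched below in Python, is to flag any test that has both passed and failed at the same Subversion revision; a pass followed by a failure at different revisions is left alone, because a real code change could explain it. The record format is an assumption about what you would extract from your CI test reports.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

# Each record: (test name, Subversion revision, passed?)
Result = Tuple[str, int, bool]


def flaky_tests(results: Iterable[Result]) -> List[str]:
    """Return tests that both passed and failed on the same revision."""
    outcomes: Dict[Tuple[str, int], Set[bool]] = defaultdict(set)
    for name, revision, passed in results:
        outcomes[(name, revision)].add(passed)
    return sorted({name for (name, _rev), seen in outcomes.items() if len(seen) == 2})
```

A test flagged this way may point at exactly the kind of intermittent defect described above (locking, memory, or race conditions), so it deserves investigation rather than a rerun.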

Integration with other nice-to-haves, such as Findbugs, PMD, and Cobertura

Without going into too much detail, there are great tools out there that can be used with Jenkins to evaluate the code for potential bugs, bad coding practices, and testing coverage. These tools definitely come in handy. Use them.
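As one example of putting these tools to work, a build can be failed when coverage drops below a threshold. Cobertura's XML report records overall line coverage as a `line-rate` attribute (a fraction between 0 and 1) on its root `coverage` element; the Python sketch below reads it with the standard library. The 80% threshold is an arbitrary assumption.

```python
import xml.etree.ElementTree as ET


def line_coverage(cobertura_xml: str) -> float:
    """Read overall line coverage (0.0 to 1.0) from a Cobertura XML report."""
    root = ET.fromstring(cobertura_xml)
    rate = root.get("line-rate")
    if rate is None:
        raise ValueError("no line-rate attribute on the root element")
    return float(rate)


def coverage_gate(cobertura_xml: str, minimum: float = 0.80) -> bool:
    """True if coverage meets the (assumed) minimum; a CI step could exit non-zero otherwise."""
    return line_coverage(cobertura_xml) >= minimum
```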

Releasing software

When software is released, it is typically given some kind of version number (e.g., 1.0). This is good, but it doesn’t tell us the specifics of what went into that build. It’s a good idea to include the Subversion changeset number somewhere in the release so that we always know EXACTLY what went into the build. I would include a build.xml (or build.prop) file somewhere that contains the version number of the release, the Subversion changeset number, and the date of the build. The last two values can (and should) be generated automatically by your build scripts.
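Here is a minimal Python sketch of generating that file at build time. It reads the changeset number with Subversion's `svnversion` command and refuses to continue if the working copy is modified, switched, partial, or at mixed revisions, since such a build could not be traced to a single changeset. The property names and the properties-file format are assumptions; use whatever your installer and about-box expect.

```python
import subprocess
from datetime import datetime, timezone


def parse_svnversion(output: str) -> int:
    """Return the single revision reported by `svnversion`, or raise ValueError.

    svnversion prints e.g. "4168" for a clean working copy, "4123:4168" for
    mixed revisions, and appends M, S, or P for modified, switched, or
    partial working copies.
    """
    value = output.strip()
    if ":" in value:
        raise ValueError(f"mixed-revision working copy: {value}")
    if value and value[-1] in "MSP":
        raise ValueError(f"modified, switched, or partial working copy: {value}")
    if not value.isdigit():
        raise ValueError(f"unexpected svnversion output: {value!r}")
    return int(value)


def current_revision(working_copy: str = ".") -> int:
    """Run svnversion on the CI server's checkout (requires Subversion)."""
    completed = subprocess.run(["svnversion", working_copy],
                               capture_output=True, text=True, check=True)
    return parse_svnversion(completed.stdout)


def build_properties(version: str, revision: int, built_at: datetime) -> str:
    """Render hypothetical build.* properties; built_at must be timezone-aware."""
    stamp = built_at.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    return (f"build.version={version}\n"
            f"build.revision={revision}\n"
            f"build.date={stamp}\n")


# Example use in a build script (hypothetical version and file name):
# with open("build.prop", "w") as f:
#     f.write(build_properties("1.0", current_revision(), datetime.now(timezone.utc)))
```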

As far as actually using Subversion within Linux/Unix, all commands are available from the command line. When working in Windows, I really like using TortoiseSVN. It integrates with Windows File Explorer, showing icons that indicate the status of any versioned file. It also provides a nice interface for viewing file differences (even differences of your Word documents) and repository history.

Ticketing and issue tracing

For too long we’ve thought of our ticketing systems as "bug trackers." Bugzilla, once one of the most popular open-source tools, even used the word "bug" in its name. But an issue tracker is not useful only for tracking software defects. On the contrary, it can be used for everything in software design and development, from addressing documentation needs to capturing software requirements and handling software defect reporting.

On that note, I would like to add something that may require an entire post. I think it might be best to get away from using standard documents for the capture of software use cases, requirements, hazards, and so on. By capturing everything related to a software project in our issue tracking tool, we can leverage the power of a tool like Trac or Redmine to enhance team collaboration and project tracing. But I won’t bite off more than I can chew right now.

When I first began writing all of these thoughts of mine down, I was going to use Trac as my example (http://trac.edgewall.org/). Trac is a great tool, but there’s something better now, and that something is Redmine (http://www.redmine.org/).

The principal shortcoming of Trac is the fact that it doesn’t lend itself well (at all) to handling multiple projects. One installation of Trac can be integrated with only a single Subversion repository, and the ticketing system can only handle a single project. I still like the way Trac can be used to group tickets into sprints, but by using sub-projects in Redmine, a similar grouping can be achieved. In the past, if a tool was used at all, we used Bugzilla or Clearquest to handle our issue tracking. These tools were very good at the time, but they did not integrate well with other tools, nor did they include features like wikis, calendars, or Gantt charts. (Admittedly, I have not used Clearquest in many years, so I really have no idea whether or not it has since addressed some of these needs.)

Redmine

So what’s so great about Redmine?

- The wiki can hold all of your project management details, work instructions, use cases, requirements, and so on. Again, I think this information can live in the wiki, but that may be a step that not everyone is comfortable taking.

- That said, all developer setup notes, lessons learned, and other informal notes can be placed in the wiki. One time I spent nearly four days tracking down a very strange defect. By the time I finally figured it all out, I had learned a lot about an issue that others were sure to encounter, so I created a wiki page explaining it.

- Another powerful feature of the wiki is that with Redmine (and Trac) we can link not only to other wiki pages but also to tickets (issues), projects, sub-projects, and Subversion changesets. Again, more tracing. Nice.

- With Subversion and Redmine integrated, I can link back and forth between the two. The work instructions that explain how the team applies our procedures should state that no ticket can be closed without a link to a Subversion changeset (unless, of course, the ticket is rejected). Redmine can be configured to search for keywords in Subversion commit messages. For example, if I am checking in several files that address issue #501, I might write a commit message like this: "Corrected such and such. This fixes #501." We can configure Redmine to look for the word "fixes" and treat it as a flag to close the ticket and link it to the changeset created by that commit. Likewise, when you view your Subversion history, you will see "#501" attached to the changeset as a link to the ticket. The tracing works both ways… Beautiful!

- It has multiple project handling and integration with different Subversion repositories. This is a major reason why I (and others) switched to Redmine. Trac was great, but it only handled a single project. Redmine, with its handling of multiple projects, can be used corporate-wide for all development, and each project can be tied to a different Subversion repository. Additionally, a single project can have multiple sub-projects. This gives us the flexibility to use sub-projects for sprints, specific branched versions, and so on.

- With Jenkins integrated, I don’t have to leave the wiki to see how my CI builds look. Not only that, I can link to a specific CI build from any page within the wiki or ticketing system.

- Everything can be configured in Redmine. Yes, EVERYTHING. We can even configure the flow of tickets.
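The commit-message convention described in the list above ("This fixes #501.") amounts to a small parsing rule. The Python sketch below shows one way to extract referenced and fixed ticket numbers. The keyword lists are hypothetical examples, since Redmine lets an administrator configure its own referencing and fixing keywords, and this is not Redmine's actual parser.

```python
import re
from typing import Dict, Set

# Hypothetical keyword lists; Redmine's keywords are set by an administrator.
REFERENCING = ("refs", "references")
FIXING = ("fixes", "closes")

# A keyword followed by one or more "#123" references, separated by spaces,
# commas, or "and".
_KEYWORD_REFS = re.compile(
    r"\b(" + "|".join(REFERENCING + FIXING) + r")\b((?:[\s,]*(?:and\s+)?#\d+)+)",
    re.IGNORECASE,
)
_ISSUE = re.compile(r"#(\d+)")


def parse_commit_message(message: str) -> Dict[str, Set[int]]:
    """Split ticket numbers in a commit message into 'refs' and 'fixes'."""
    refs: Set[int] = set()
    fixes: Set[int] = set()
    for match in _KEYWORD_REFS.finditer(message):
        numbers = {int(n) for n in _ISSUE.findall(match.group(2))}
        if match.group(1).lower() in FIXING:
            fixes |= numbers
        else:
            refs |= numbers
    refs -= fixes  # report a ticket once, under the stronger keyword
    return {"refs": refs, "fixes": fixes}
```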

The issue tracking system

I propose that it is not enough to simply leave functional requirements in the software requirements specification document. This does not provide sufficient tracing, nor does it provide a clear path from idea to functional code. Here are the steps that I suggest:

- All requirements and software design items are entered as tickets. For now they are simply high-level tickets with no child tickets.

- The development team, organized by a lead developer, breaks down each high-level ticket into as many child tickets as necessary. Using the ticketing system, we set up relationships so that the parent ticket (the requirement itself) cannot be closed until all child tickets are completed. (Note: It may be a good idea to require corresponding unit tests with each ticket.)

- Hazards (and I'm not going to bother explaining hazard and risk analysis in this article) are mitigated by a combination of documentation, requirements, and tests. We can leverage our ticketing system to capture our hazards and provide tracing in much the same way as with requirements. This does not remove the need for a traceability matrix, but it does enhance our ability to create and maintain it. (As a side note, I think it would be great to use the Redmine wiki for use cases, requirements, hazard analysis, software design documents, and traceability matrices, thereby allowing for linking within, but this may be a hard sell for now.)

- Not all requirements are functional code requirements. Many are documentation and/or quality requirements. These should be captured in the same ticketing system. Use the ability of the system to label the type of ticket to handle the fact that there are different categories. By doing this, even documentation requirements are traceable in our system.

- I'm not suggesting that the tickets will be locked down this early, not for a moment! Tickets are created, closed, and modified throughout project design and the development process. Our project plan (created before we started writing code) explains to us which tickets need to be done when, focusing more on the highest level tickets. That said, I find it best to use some sort of iterative approach (and allow the development team to use sub-iterations, or "sprints").
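Once requirements, hazards, and documentation items live in the ticketing system, a first draft of the traceability matrix can be generated instead of typed. The Python sketch below assumes a hypothetical export in which each top-level item lists its child tickets, and each child ticket lists its Subversion changesets and the tests that cover it. It flags gaps rather than hiding them; which kinds of items must have tests is an assumption you would set from your own procedures.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List, Sequence, Tuple


@dataclass
class TopLevelItem:
    key: str   # hypothetical IDs such as "SRS-12" or "HAZ-3"
    kind: str  # "requirement", "hazard", "documentation", ...
    children: List[int] = field(default_factory=list)  # child ticket numbers


@dataclass
class ChildTicket:
    number: int
    changesets: List[int] = field(default_factory=list)
    tests: List[str] = field(default_factory=list)


Row = Tuple[str, str, List[int], List[str], List[str]]


def trace_matrix(items: Iterable[TopLevelItem],
                 tickets: Dict[int, ChildTicket],
                 kinds_needing_tests: Sequence[str] = ("requirement",)) -> List[Row]:
    """One row per item: (key, kind, changesets, tests, gaps)."""
    rows: List[Row] = []
    for item in items:
        kids = [tickets[n] for n in item.children]
        changesets = sorted({c for t in kids for c in t.changesets})
        tests = sorted({name for t in kids for name in t.tests})
        gaps: List[str] = []
        if not kids:
            gaps.append("no child tickets")
        if not changesets:
            gaps.append("no changesets")
        if item.kind in kinds_needing_tests and not tests:
            gaps.append("no tests")
        rows.append((item.key, item.kind, changesets, tests, gaps))
    return rows
```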

References

- [1] "Continuous integration". Wikipedia. Wikimedia Foundation, Inc. 28 August 2011. https://en.wikipedia.org/w/index.php?title=Continuous_integration&oldid=447106578. Retrieved 27 April 2016.