Journal:What is this sensor and does this app need access to it?

| Full article title | What is this sensor and does this app need access to it? |
|---|---|
| Journal | Informatics |
| Author(s) | Mehrnezhad, Maryam; Toreini, Ehsan |
| Author affiliation(s) | Newcastle University |
| Primary contact | Email: maryam dot mehrnezhad at ncl dot ac dot uk |
| Year published | 2019 |
| Volume and issue | 6(1) |
| Page(s) | 7 |
| DOI | 10.3390/informatics6010007 |
| ISSN | 2227-9709 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://www.mdpi.com/2227-9709/6/1/7/htm |
| Download | https://www.mdpi.com/2227-9709/6/1/7/pdf (PDF) |

Abstract

Mobile sensors have already proven to be helpful in different aspects of people’s everyday lives such as fitness, gaming, navigation, etc. However, illegitimate access to these sensors results in a malicious program running with an exploit path. While users are benefiting from richer and more personalized apps, the growing number of sensors introduces new security and privacy risks to end-users and makes the task of sensor management more complex. In this paper, we first discuss the issues around the security and privacy of mobile sensors. We investigate the available sensors on mainstream mobile devices and study the permission policies that Android, iOS and mobile web browsers offer for them. Second, we reflect on the results of two workshops that we organized on mobile sensor security. In these workshops, the participants were introduced to mobile sensors by working with sensor-enabled apps. We evaluated the risk levels perceived by the participants for these sensors after they understood the functionalities of these sensors. The results showed that knowing sensors by working with sensor-enabled apps would not immediately improve the users’ security inference of the actual risks of these sensors. However, other factors such as the prior general knowledge about these sensors and their risks had a strong impact on the users’ perception. We also taught the participants about the ways that they could audit their apps and their permissions. Our findings showed that when mobile users were provided with reasonable choices and intuitive teaching, they could easily self-direct themselves to improve their security and privacy. Finally, we provide recommendations for educators, app developers, and mobile users to contribute toward awareness and education on this topic.

Keywords: mobile sensors, IoT sensors, sensor security, security education, app permission, mobile security awareness, user privacy, user security, sensor attacks

Introduction

According to The Economist[1], smartphones have become the fastest-selling gadgets in history, outselling personal computers (PCs) four to one. Today, about half the adult population owns a smartphone; by 2020, 80% will. Mobile and smart device vendors are increasingly augmenting their products with various types of sensors such as the Hall effect sensor, accelerometer, NFC (near-field communication) sensor, heart rate sensor, and iris scanner, which are connected to each other through the internet of things (IoT). We have observed that approximately 10 new sensors have been added to, or have become popular in, mainstream mobile devices in less than two years, bringing the number of mobile sensors to more than 30. Examples include FaceID, Active Edge, depth cameras (using infrared), thermal cameras, air sensors, laser sensors, haptic sensors, iris scanners, heart rate sensors, and body sensors.

Sensors are added to mobile and other devices to make them smart: to sense the surrounding environment and infer aspects of the context of use, and thus to facilitate more meaningful interactions with the user. Many of these sensors are used in popular mobile apps such as fitness trackers and games. Mobile sensors have also been proposed for security purposes, e.g., authentication[2][3], authorization[4], device pairing[5], and secure contactless payment.[6] However, malicious access to sensor streams results in an installed app running in the background with an exploit path. Researchers have shown that user PINs and passwords can be disclosed through sensors such as the camera and microphone[7], the ambient light sensor[8], and the gyroscope.[9] Sensors such as NFC can also be misused to attack financial payments.[10]

In our previous research[11][12][13][14], we have shown that the sensor management problem is spreading from apps to browsers. We proposed and implemented the first JavaScript-based side channel attack revealing a wide range of sensitive information about users such as phone calls’ timing, physical activities (sitting, walking, running, etc.), touch actions (click, hold, scroll, and zoom) and PINs on mobile phones. In this attack, the JavaScript code embedded in the attack web page listens to the motion and orientation sensor streams without needing any permission from the user. By analyzing these streams via machine learning algorithms, this attack infers the user’s touch actions and PINs with an accuracy of over 70% on the first try. The above research attracted considerable international media coverage, including by the Guardian[15] and the BBC[16], which underscores the importance of the topic. We disclosed the identified vulnerability described above to the industry. While working with the World Wide Web Consortium (W3C) and browser vendors (Google Chromium, Mozilla Firefox, Apple, etc.) to fix the problem, we came to appreciate the complexity of the sensor management problem in practice and the challenge of balancing security, usability, and functionality.

Through a series of user studies over the years[13][14], we concluded that mobile users are not generally familiar with most sensors. In addition, we observed that there is a significant disparity between the actual and perceived risk levels of sensors. In another work[17], Crager et al. reached the same conclusion for motion sensors. We discussed how this observation, along with other factors, renders many academic and industry solutions ineffective at managing mobile sensors.[14] Given that sensors are going beyond mobile devices, e.g., in a variety of IoT devices in smart homes and cities, the sensor security problem has already attracted more attention not only from researchers, but also from hackers. In view of all this, we believe that there is much room for more focus on people’s awareness and education about the privacy and security issues of sensor technology.

Previous research[14][17] has relied on individual user studies to examine the human aspects of sensor security. In this paper, we present the results of a more advanced teaching method, working with sensor-enabled apps, on the risk level that users associate with the PIN discovery scenario for all sensors. We reflect on the results of two interactive workshops that we organized on mobile sensor security. These workshops covered the following: an introduction of mobile sensors and their applications, working with sensor-enabled mobile apps, an introduction of the security and privacy issues of mobile sensors, and an overview of how to manage the app permissions on different mobile platforms.

In these workshops, the participants were sitting in groups and introduced to mobile sensors by working with sensor-enabled apps. Throughout the workshops, we asked the participants to fill in a few forms in order to evaluate the general knowledge they had about mobile sensors, as well as their perceived risk levels for these sensors after they understood their functionalities. After analyzing these self-declared forms, we also measured the correlation between the knowledge and perceived risk level for mobile sensors. The results showed that knowing sensors by working with sensor-enabled apps would not immediately improve the users’ security inference of the actual risks of these sensors. However, other factors such as the prior general knowledge about these sensors and their risks have a strong impact on the users’ perception. We also taught the participants about the ways that they could audit their apps and their permissions, including per app vs. per permission. Our participants found both models useful in different ways. Our findings show that when mobile users are provided with reasonable choices and intuitive teaching, they can easily self-direct themselves to improve their security and privacy.

In the next section, we list the available sensors on mobile devices and categorize them, and then we present the current permission policies for these sensors on Android, iOS, and mobile web browsers. In the subsequent section, we present the structure of these workshops in full detail. Afterwards, we include our analysis on the general knowledge and perceived risk levels that our participants had for sensors and their correlation, followed by our observations of the apps’ and permissions’ review activities in the workshops. We then go on to present a list of our recommendations to different stakeholders. Finally, in the final two sections, we include limitations, future work, and the conclusion.

Mobile sensors

As stated, there are more than 30 sensors on mobile devices. Both iOS and Android, as well as mobile web browsers, allow native apps and JavaScript code in web pages to access most of these sensors. Developers can have access to mobile sensors either by (1) writing native code using mobile OS APIs[18][19], (2) recompiling HTML5 code into a native app[20], or (3) using standard APIs provided by the W3C[21], which are accessible through JavaScript code within a mobile browser.

As shown by Taylor and Martinovic[22], the average number of permissions used by Android apps increases over time, in particular for popular apps and free apps. These permissions are requested for having access to the operating system (OS) resources such as contacts and files, as well as sensors such as the GPS and microphone. This has the potential to make apps over-privileged and unnecessarily increase the attack surface.
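As a rough illustration of what "over-privileged" means here, the following Python sketch compares the permissions an app requests with the ones its stated purpose plausibly needs. The app, the permission names, and the `needed` set are hypothetical and chosen only for illustration; real platforms use their own permission identifiers, and deciding what an app genuinely needs is a judgment call that this sketch does not automate.

```python
def extra_permissions(requested, needed):
    """Return the requested permissions that the app's purpose does not require."""
    return sorted(set(requested) - set(needed))


# Hypothetical flashlight app; the names are illustrative, not platform identifiers.
requested = ["camera", "contacts", "location", "microphone"]
needed = ["camera"]  # assumption: the camera flash is all a flashlight needs

extras = extra_permissions(requested, needed)
print(extras)
```

Every entry in the result widens the attack surface without serving the app's purpose, which is the pattern the paragraph above describes.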

Mobile sensors' categorization

We first created a list of available sensors on various mobile devices. We prepared this list by inspecting the official websites of mainstream mobile devices such as the iPhone X, Samsung Galaxy S9, Google Pixel 2, as well as the specifications that W3C[23], Android[18], and Apple[19] provide for developers. We proposed categorizing these sensors into four main groups: identity-related (biometric) sensors, communicational sensors, motion sensors, and ambient (environmental) sensors, as presented in Table 1. Note that this list can be even longer if all mobile brands are included. For example, the Cat S61 smart phone has sensors such as a thermal camera, an air sensor (measures the quality of the environmental air), and a laser sensor (to measure distance).

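[Table 1. Categorization of mobile sensors into four groups: identity-related (biometric), communicational, motion, and ambient (environmental) sensors]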

In Appendix A (see the supplemental material at the end), we present a brief description of each sensor. With the growing number of sensors on mobile devices, categorizing them into a few groups is much more difficult than before. Some of these sensors can belong to multiple groups. For example, one might argue that GPS belongs to the environmental category; however, since it is associated with people’s identities, we propose to keep it in the identity-related category. Similarly, the sensor hub monitors the device’s movements, which is associated with the user’s activities. Hence, it is difficult to decide to which category (motion or biometric) it belongs.

Sensor management challenges

In Table 2, we present how the Android, iOS, and W3C specs (followed by mobile browsers) treat different sensors in terms of access. We used the Android and Apple developer websites, the W3C specifications, and caniuse.com to build this table.[18][19][23][24] As can be seen, permission policies for having access to different sensors vary across sensors and platforms. We argue that sensing is still unmanaged on existing smartphone platforms. The in-app access to certain sensors including GPS, camera, and microphone requires user permission when installing and running the app. However, as Simon and Anderson have discussed[7], an attacker can easily trick a user into granting permission through social engineering (e.g., presenting it as a free game app). Once the app is installed and the permission approved, usage of the sensor data is not restricted. On the other hand, access to many other sensors such as accelerometers, gyroscopes, and light sensors is unrestricted; any app can have free access to the sensor data without needing any user permission, as these sensors are left unmanaged on mobile operating systems.
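The split described above can be sketched in Python. The two sets below encode only the examples named in this paragraph (GPS, camera, and microphone behind a permission; accelerometer, gyroscope, and light sensor unrestricted); they are not a reproduction of Table 2, and the app and its sensor list are hypothetical.

```python
# Policy as stated in the paragraph above; all other sensors are deliberately omitted.
PERMISSION_REQUIRED = {"gps", "camera", "microphone"}
UNRESTRICTED = {"accelerometer", "gyroscope", "light"}


def prompted_accesses(sensors_used):
    """Sensors for which the user sees a permission request."""
    return sorted(s for s in sensors_used if s in PERMISSION_REQUIRED)


def silent_accesses(sensors_used):
    """Sensors the app can read without the user ever being asked."""
    return sorted(s for s in sensors_used if s in UNRESTRICTED)


# Hypothetical game app.
game_sensors = ["camera", "accelerometer", "gyroscope"]
print("prompted:", prompted_accesses(game_sensors))
print("silent:", silent_accesses(game_sensors))
```

In this example the user is asked about one sensor and never hears about the other two, which is the gap the text refers to as unmanaged sensing.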

Although the information leakage caused by sensors has been known for years[7][8][9], the problem has remained unsolved in practice. One main reason is the complexity of the problem: keeping the balance between security and usability. Another reason, from the practical perspective, is that all the reported attacks depend on one condition: the user must initiate the downloading and installing of the app. Therefore, users are relied upon to be vigilant and not to install untrusted apps. Furthermore, it is expected that app stores such as the Apple App Store and Google Play will screen the apps and impose severe penalties if the app is found to contain malicious content. However, in the browser-based attack[11][12][13][14], we have demonstrated that these measures are ineffective. Apart from academic efforts, there are industrial solutions (e.g., Navenio) that use some of these sensors such as the accelerometer to track users precisely indoors and outdoors. These products can easily be integrated with illegitimate apps and websites and compromise users’ privacy and security. With the growing number of sensors, and more sensitive sensor hardware provisioned with new mobile devices and other IoT devices, the problem of information leakage caused by sensors is becoming more severe. Previous research[14][17] suggests that users are not aware of (i) the data generated by the sensors, (ii) how that data might be used to undermine their security and privacy, and (iii) what precautionary measures they could and should take. Given that, we believe that raising public knowledge about the sensor technology through education is a very timely matter.

Workshop

We ran two rounds of a 90-minute workshop entitled What Your Sensors Say About You, which was hosted by the Thinking Digital conference in November 2016[25] and May 2018 at Newcastle University, U.K. The attendees could find the following description of the workshop on the event page:

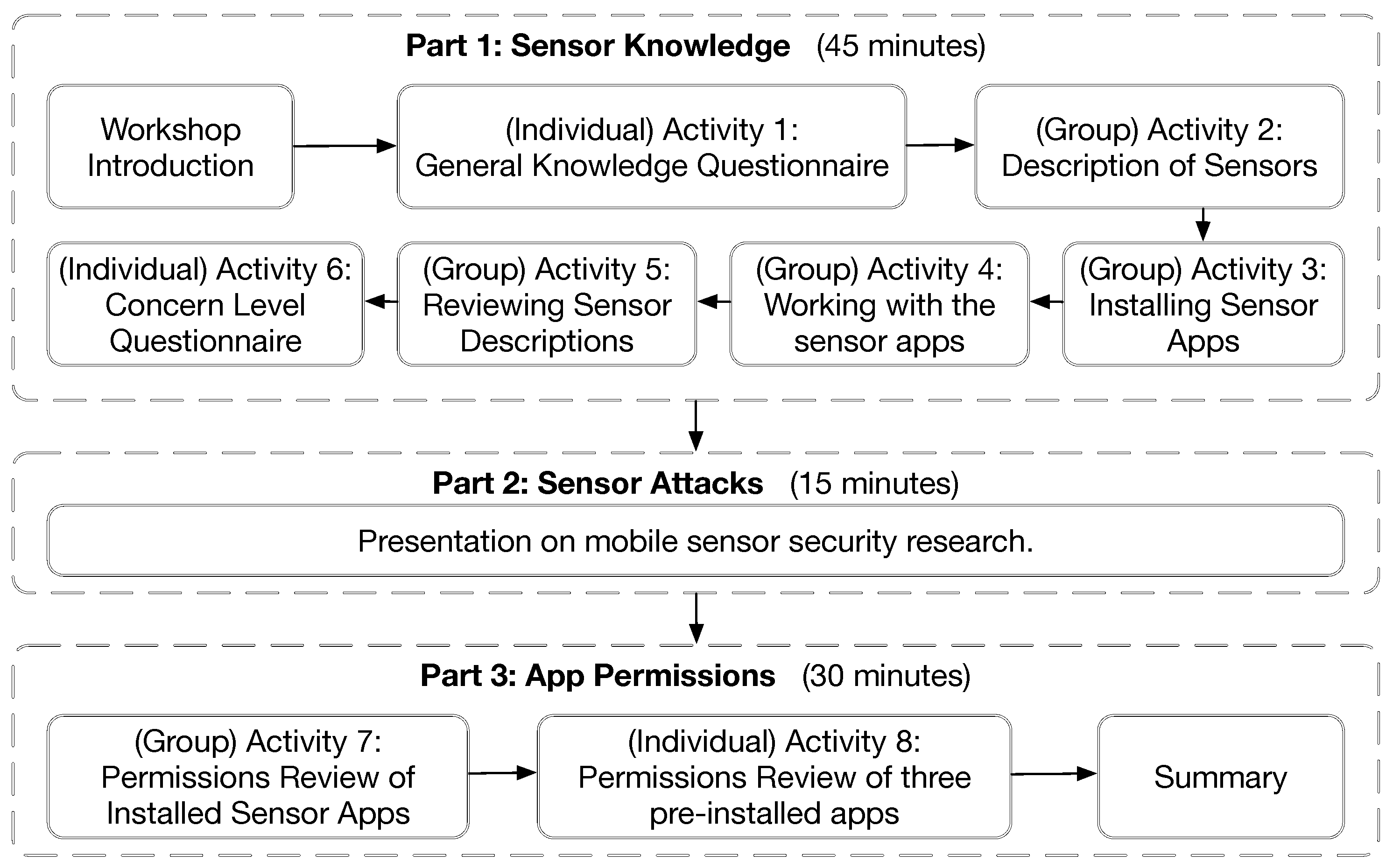

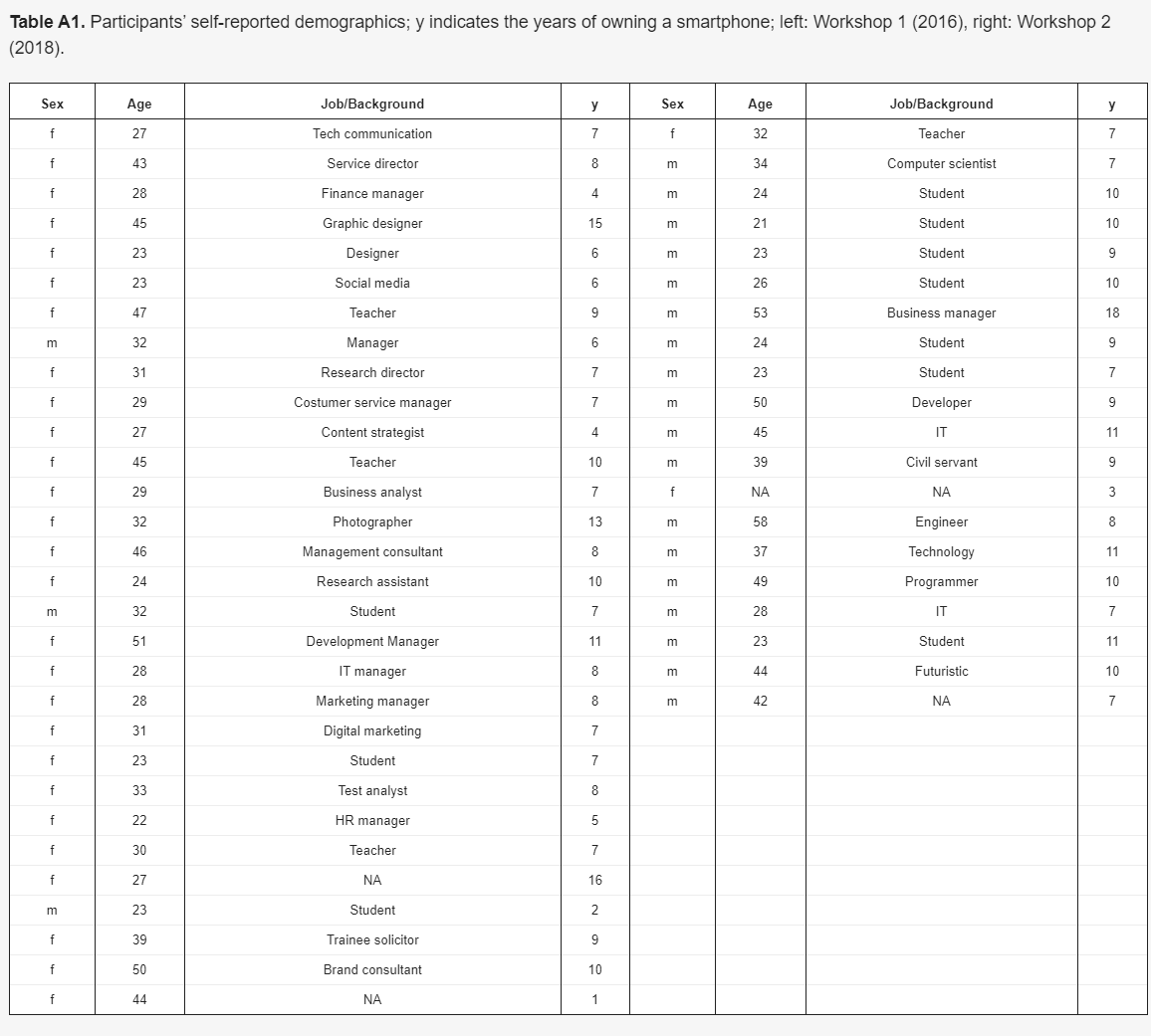

Pedagogical approach

For the purposes of those workshops, our teaching approach, incorporating taught and research dissemination activities, embodied the principles of constructive alignment and constructivist learning theory. In particular, we deliberately introduced a number of periods of reflection throughout the workshop. Attendees were supported in considering various preventative measures in relation to permission-granting in sensor-related apps and extrapolating their future impacts. A widely adopted theory in the public understanding of scientific research is that of the “deficit model.”[26] The deficit model acknowledges that a lack of available information leads to a lack of popular understanding, which in turn fosters scepticism and hostility. Through our public engagement exercise, and by making available our resources, we seek to equip the public with accessible information, which may inform reasonable precautionary behavior. We adopt a challenging role, both as researchers active in mobile sensor security and mediators seeking to popularize research findings. This leads to tension between providing layman and specialist explanations, a perennial issue in science communication.[27] As such, we acknowledge the role popularization of science plays in informing future iterations of research.[28][29] Indeed, our observations of participants’ interactions serve to inform future technological interventions to support mobile sensor security.

Participants

In both rounds of the workshop, participation was voluntary, with conference attendees selecting among multiple parallel workshops. We presented the workshop to the audience in both rounds. In the first run in 2016, 27 female and three male participants, aged between 22 and 51, attended the workshop. In the second run, two female and 18 male participants aged between 21 and 58 attended the workshop. This brought the total number of our participants to 50 (29 female). In both rounds, the workshop attendees were sitting at tables of five or six and could interact with each other and the educators during the workshop. The attendees had owned iOS and Android phones for as little as one year, all the way up to 15 years. Full details of the participants’ demographics are presented in Appendix B (see the supplementary material at the end).

Workshop content

We ran the workshops by presenting a PowerPoint file, which is publicly available via the first author’s homepage. These slides contain all the general and technical content delivered to the attendees and the individual/group exercises they were asked to complete. We explicitly explained to the participants whether they needed to complete an activity individually or in a group. We also observed them during the workshop to make sure everyone was following the instructions. We explained to the attendees that their feedback during the workshop, through completing a few forms, would be used for a research project. The attendees could leave the workshop at any stage without giving any explanation. In both rounds of the workshop, all participants completed the session to the end. These workshops were organised into three parts, as shown in Figure 1. In Part 1, we went through the current mobile sensors by (a) providing the participants with a description of sensors and (b) working with sensor-enabled apps. In Part 2, we explained the sensor-based attacks that have been performed on sensitive user information such as PINs. Finally, in Part 3, we discussed mobile app permission settings.

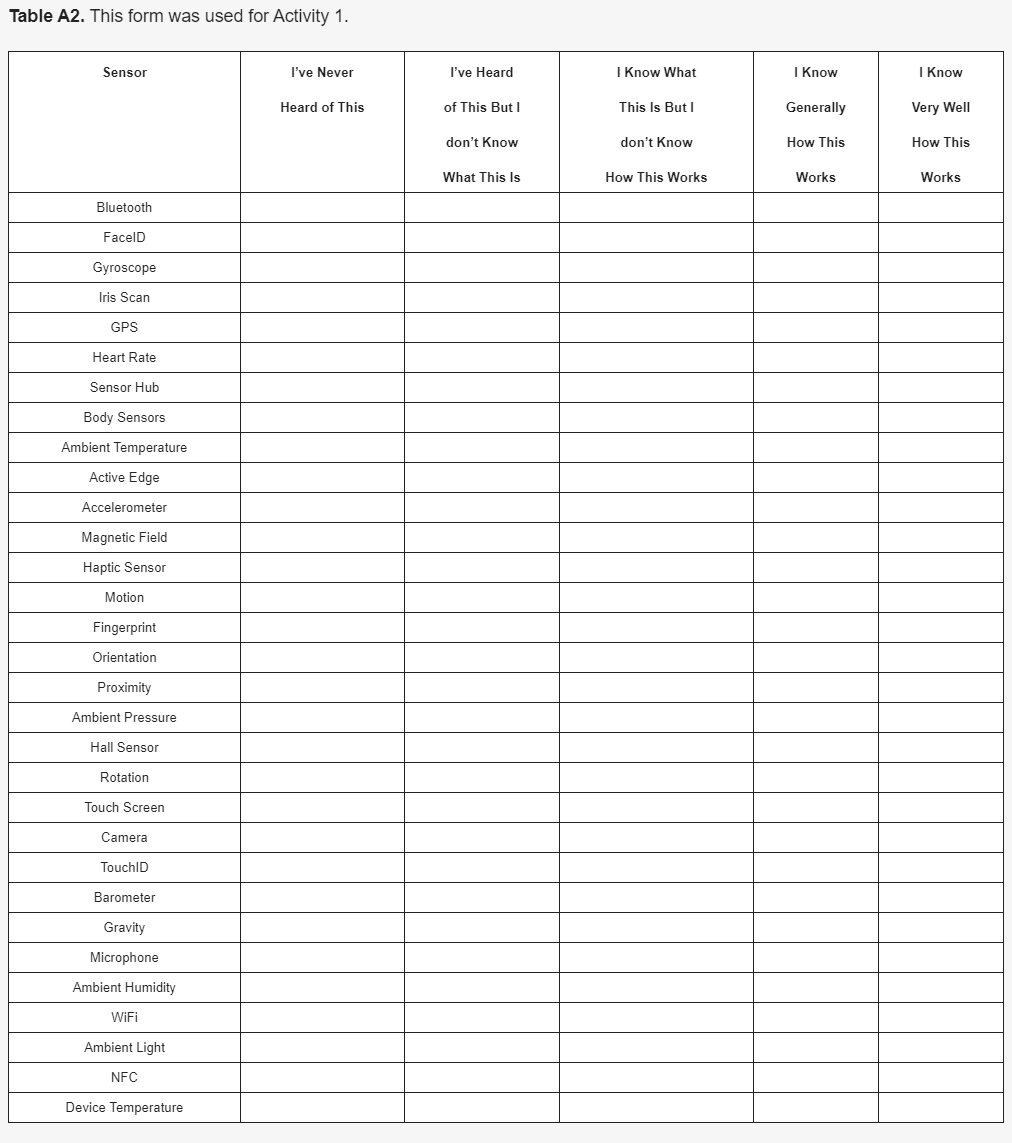

Sensor knowledge

After a brief introduction about the workshop, we first asked the participants to fill in a five-point scale self-rated familiarity questionnaire on a list of different sensors listed in Table 1 (see Appendix C, borrowed from Mehrnezhad et al.[14], in the supplementary material at the end). In the first round of the workshop in 2016, this form had 25 sensors which we had been consistently using in our previous research.[14] However, in the second round of the workshop in 2018, we added six new sensors (FaceID, iris scan, heart rate, body sensors, Active Edge and haptic sensors). This was due to the augmentation of popular mobile devices with these new sensors. In this form, we asked the users to express the level of the general knowledge they had of each sensor by choosing one of the following: “I’ve never heard of this”; “I’ve heard of this, but I don’t know what this is”; “I know what this is, but I don’t know how this works”; “I know generally how this works”; and “I know very well how this works.” This was an individual exercise, and the list of sensors was randomly ordered for each user to minimize bias. Afterwards, for the second activity, we asked the participants to go through the description of each sensor (see Appendix A, supplementary material) on a printed paper given to everyone. 
This was a group activity, and the participants could help each other for a better understanding. In case of any difficulty, the attendees were encouraged to interact with the educators. After everyone went through the description page, we gave them examples of the usage of each sensor, e.g., motion sensors for gaming, NFC for contactless payment, and haptic sensors for virtual reality applications. For the third activity, we then asked the participants to visit the app stores on their devices and download and install a particular sensor-enabled app (sensor app). Sensor apps are those that visually allow the users to choose different sensors on the screen and see their functionality. For Android users, we recommended the participants install Sensor Box for Android[30], as shown in Figure 2, left. This app detects most of the available sensors on the device and visually shows the user how they work. This app supports the accelerometer, gyroscope, orientation, gravity, light, temperature, proximity, pressure, and sound sensors. For iPhone users, we recommended the Sensor Kinetics app[31], as shown in Figure 2, right. This app mainly supports motion sensors (gyroscope, magnetometer, linear accelerometer, gravity, attitude).

Both apps were chosen based on the popularity, number of installs, rating, and the features they offered. We also had a few extra Android phones with the sensor app installed on them. These phones were offered to participants who were unable to install the app and use their own phones. Since the features offered by the Android sensor app were richer, we made sure that each table had at least one Android phone. This was a group activity, and the attendees could help each other find the app on the store and install it. We observed that all users were able to install the app, except two cases in Round 1 and one case in Round 2, who had connection and storage problems. There was another case in Round 2 where the participant did not wish to install the app on his phone due to security and privacy concerns. We lent the Android phones to these users. We then began two more activities. At this point, we invited the participants to work with the installed apps on their devices. We asked everyone to go through each sensor and find out about its functionality by using the app. Meanwhile, the participants were advised to keep the sensor description handout to refer to if necessary. This was a group activity, and the participants could exchange ideas about the app and sensors, as well as help each other to understand the sensors better. During this activity, we worked with individuals either separately or in small groups of two or three and reviewed at least two sensors in the app, including one motion sensor, using the Android app. Through this pair-working activity, we made sure all participants had the chance to observe a few different sensors on the Android device since it offered more features in comparison to the iOS app. At the end of that activity, we then asked the participants to review the sensor description page again, ensuring nobody expressed difficulties in understanding the general functionalities of mobile sensors. 
Finally, we wanted to assess the effect of teaching about sensors to mobile users—via working with mobile sensor apps—on the perceived risk level for each sensor. Similar to our previous research[14], we described a specific scenario:

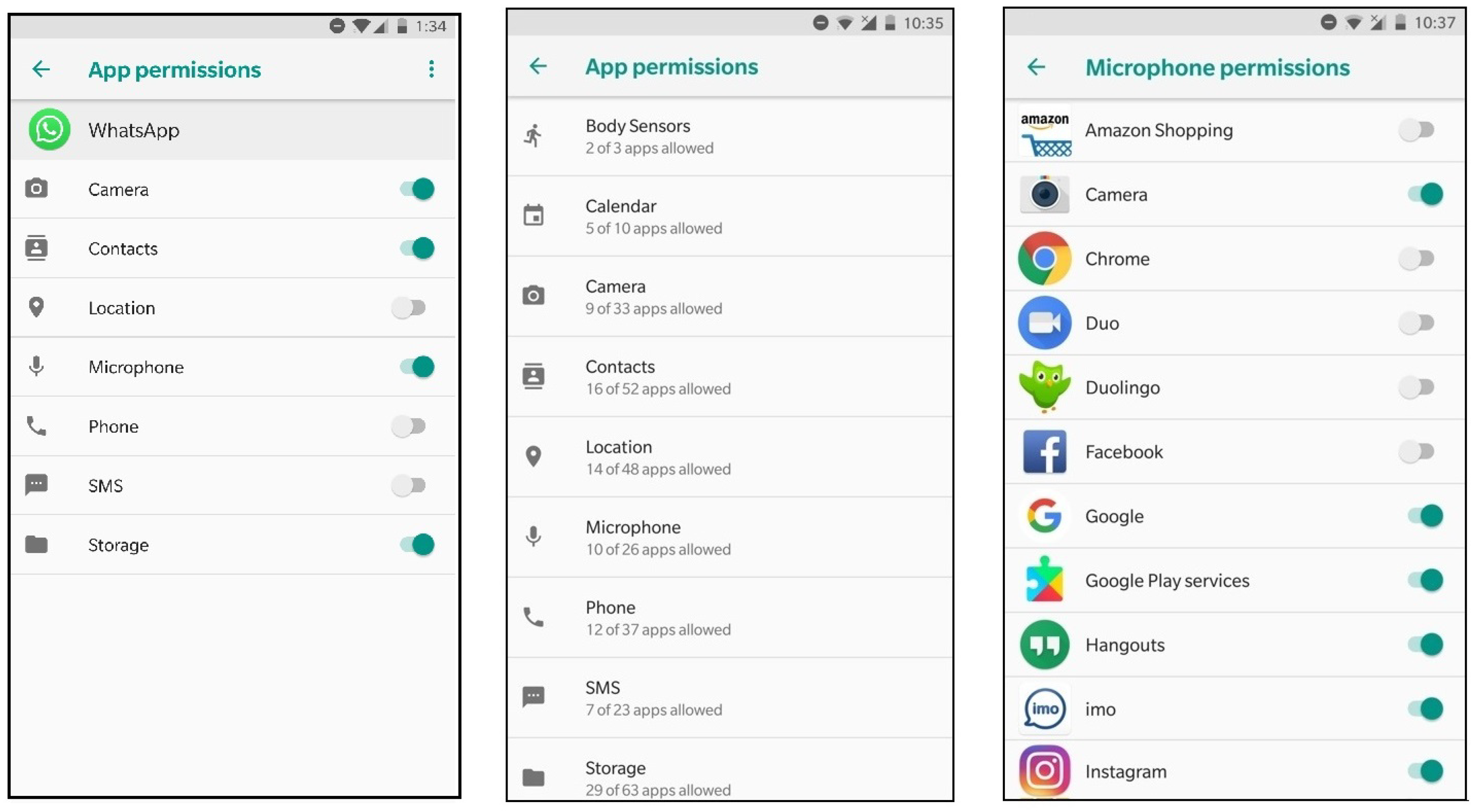

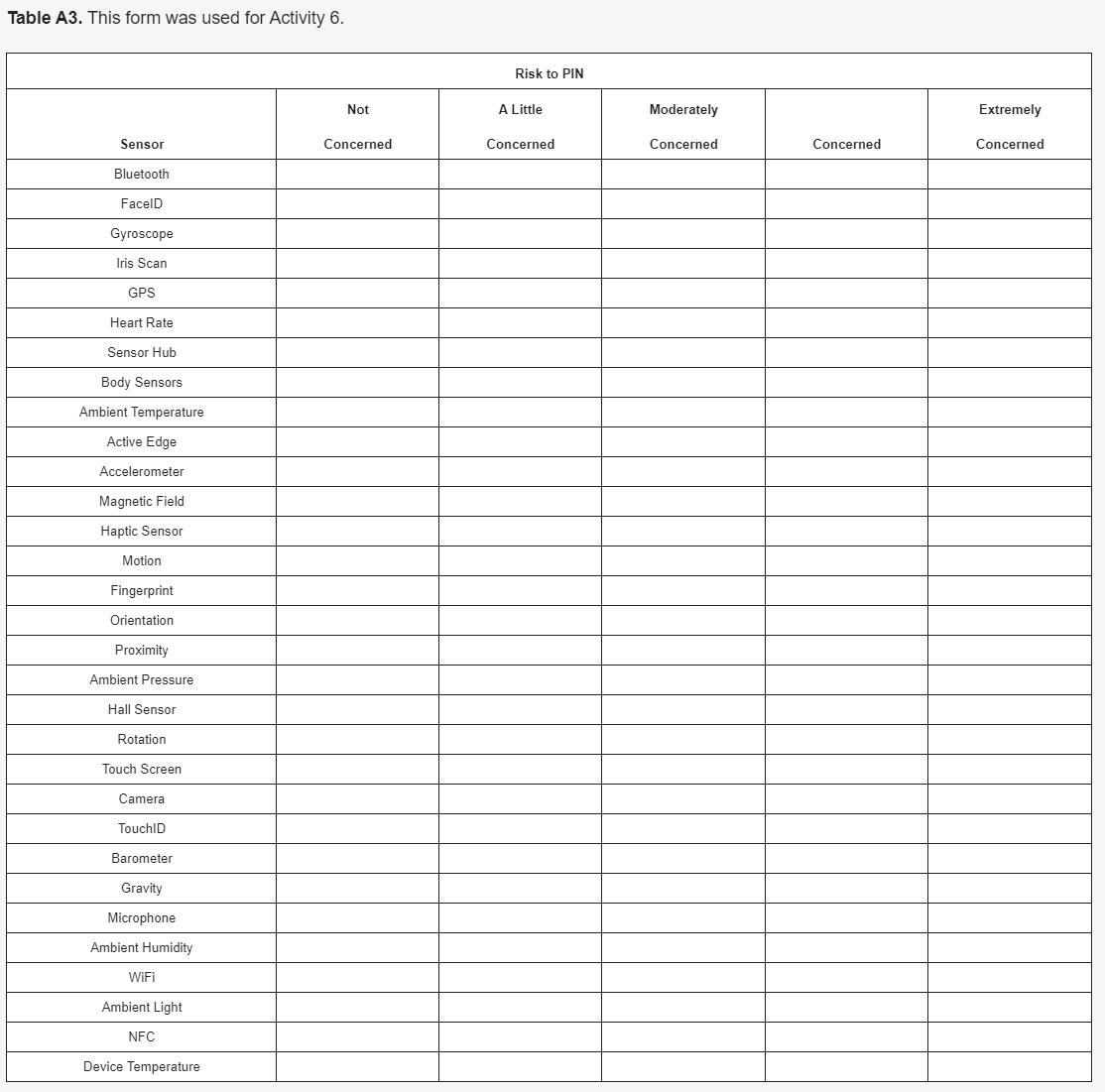

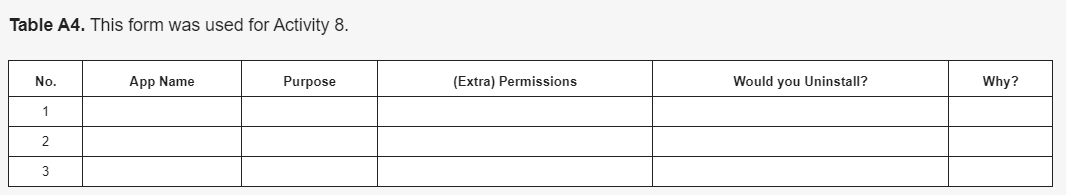

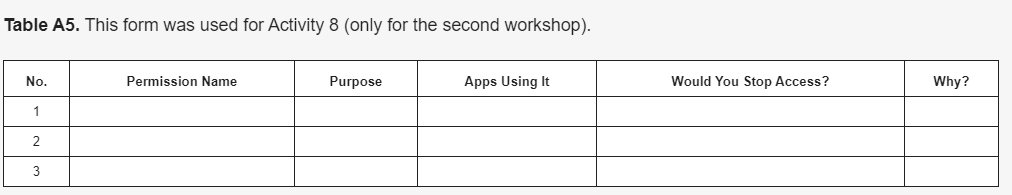

Then, we asked each participant to fill in a questionnaire (see Appendix C, supplementary material), which included five different levels of concern: “Not concerned,” “A little concerned,” “Moderately concerned,” “Concerned,” and “Extremely concerned.” At the end of this individual activity, we asked the participants to complete a demography form. This form included age, gender, profession, first language, mobile device brand, and the duration of owning a smartphone (see Appendix C, supplementary material). We explained to the participants that these forms would be used anonymously for research purposes, and they could refuse to fill them out (partially or completely).

Sensor attacks

After a short break, we presented a few sensor attacks. In particular, we explained the attacks that we have performed on user sensitive information by using motion and orientation sensors via either installed apps or JavaScript code.[11][12][13][14] These attacks could reveal phone call timing, physical activities (e.g., sitting, walking, running, etc.), touch actions (e.g., click, hold, scroll, zoom) and PINs. (For the exact content presented in this part, please see the PowerPoint file.)

App permissions

After another short break, we explained the problem of over-privileged apps to the participants. We showed examples of such apps, e.g., Calorie Counter-MyFitnessPal, Zara, and Sensor Box for Android (the one that we used in this workshop). These apps ask for extra permissions; Sensor Box, for example, does not need to have access to WiFi and phone information to function. Then we engaged in our seventh activity. This group activity invited the participants to go to the system settings of their mobile phones (or the borrowed ones) and check the permissions of the sensor app that they installed during the workshop. 
We also explained to them that on both Android and iOS devices, it is possible to disable and enable permissions via the system settings (the option of limiting access to while the app is in use was discussed with iPhone users). At this stage, we began our eighth activity, asking the participants to go through the pre-installed apps on their own devices and choose three apps to review their permissions. We asked them to individually complete a form by naming the app, explaining the purpose of the app, listing the (extra) permissions, and expressing whether they would keep the app or uninstall it and why. This form is provided in Appendix D (see supplementary information at the end). Note that when we ran the workshop in 2016, most Android users had not yet updated to Android 6 (Marshmallow) and had only one way of accessing permissions, which was through each app’s settings (Figure 3, left). From Android Marshmallow onward, another permission review model was offered; the user could go to the settings app and see which apps can access a certain permission (Figure 3, middle and right). We noticed that in our second workshop in 2018, the participants used both models (explained further in the results section).

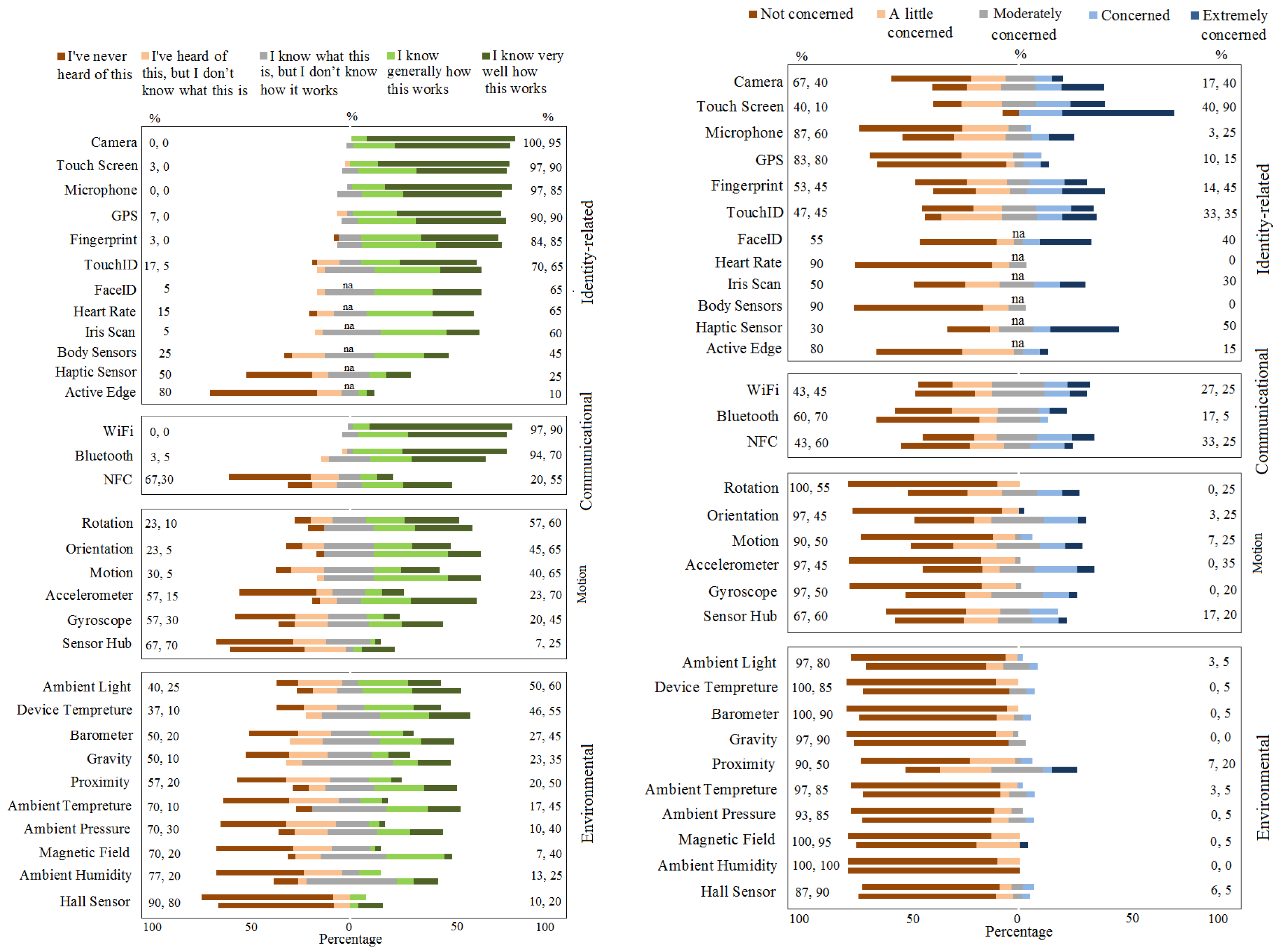
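The two review models discussed above, per app and per permission, are two views of the same data. The Python sketch below shows how one can be derived from the other; the app names and permission labels are hypothetical placeholders, and the function is an illustration rather than how either mobile platform implements its settings screens.

```python
from collections import defaultdict


def by_permission(per_app):
    """Invert {app: [permissions]} into {permission: [apps]}."""
    view = defaultdict(list)
    for app, permissions in per_app.items():
        for permission in permissions:
            view[permission].append(app)
    # Sort keys and values so the result is stable and easy to scan.
    return {perm: sorted(apps) for perm, apps in sorted(view.items())}


# Hypothetical per-app view, as a user would see it in each app's settings.
per_app = {
    "fitness_app": ["location", "body_sensors"],
    "shopping_app": ["camera", "location"],
    "sensor_app": ["microphone"],
}

per_permission = by_permission(per_app)
for permission, apps in per_permission.items():
    print(permission, "->", apps)
```

The per-app view answers "what can this app do?", while the per-permission view answers "who can use my location?", which may be why the two models can be useful in different ways.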

At the end of this workshop, we invited the attendees to discuss their opinions on mobile sensor security with their peers and the educators, giving the attendees an opportunity to gain a few tips to improve their mobile security (explained further in the discussion section).

Results

In this section, we present the results of our analysis of different stages of the two rounds of the workshop, including the general knowledge level about sensors and their perceived risk level, as well as the correlation between them.

General knowledge

Recall that our participants completed the general knowledge form at the beginning of the workshop, before being presented with any information. We present this knowledge level in a stacked bar chart on the left side of Figure 4 for the two rounds. The top bars represent the participants of the first round of the workshop in 2016, and the bottom bars are for the second round in 2018. We categorized these sensors into four groups, as seen in Table 1. In each category, sensors were ordered based on the aggregate percentage of participants in the first round of the workshop declaring they knew generally or very well how each sensor works. This aggregate percentage is shown on the right side: the first number for Round 1, the second number for Round 2. In the case of an equal percentage, the sensor with a bigger share of being known very well by the participants is shown earlier. Note that the bars for some of these sensors (FaceID, heart rate, iris scan, body sensors, haptic sensors, and Active Edge) are solo since they were studied only in our second workshop. We draw the following observations from Figure 4, left.

Ambient sensors: Our participants were generally less familiar with ambient sensors. Some of these sensors, such as ambient light and device temperature, were better known to both groups. However, the second group of our participants expressed more knowledge of ambient sensors. Generally speaking, however, the environmental sensors remained the least known among all our participants.

Communication sensors: Apart from NFC (which was extensively adopted by users after the introduction of ApplePay and Google Pay), other communication sensors (WiFi and Bluetooth) were well known to the users. When we explained the usage of NFC for contactless payment, our participants could recognize it, though its name did not contribute to the knowledge they expressed for it. Although the second group of our participants expressed more knowledge of NFC, it still remains the least-known sensor in this category.

Identity-related sensors: Our participants knew most of the identity-related sensors well, and there was not much difference between the two groups of participants. Some sensors such as the touch screen, camera, microphone, and GPS have been available on mobile devices for a long time. However, some such as FaceID, iris scan, and body sensors are relatively new. Yet, since the applications of these sensors are immediate and they are named after their functionalities, our participants felt confident about them. The only two lesser-known sensors in this group were the haptic sensor and Active Edge. We believe that since these sensors are used in a more implicit way (see their descriptions in Appendix A, supplemental material) and were introduced more recently with limited applications, they were less known to the users.

Motion sensors: The sensors of this category are generally lesser known to our participants in comparison to the communication and identity-related biometric sensors. However, there was a significant increase in the general knowledge about these sensors in the second group of our participants. On the other hand, in the first group of participants, low-level hardware sensors such as the accelerometer and gyroscope seemed to be lesser known in comparison with high-level software ones such as motion, orientation, and rotation, which were named after their functionalities. This was true only about the gyroscope in the second group of participants. Both groups expressed little familiarity with the sensor hub.

When reading the handout on the sensors and later their descriptions, our participants were generally surprised to hear about some sensors and impressed by the variety. An overall look at Figure 4, left, shows that identity-related and communication sensors were better known to the users in comparison to the other two categories. We suspect that this is due to the fact that these sensors have explicit use cases (such as taking a picture, unlocking the phone, exchanging files), which users can easily relate to. These explicit use cases contributed to the better knowledge people expressed for the first two categories. In contrast, the usage of ambient and motion sensors was not immediately clear to the users, and they felt less confident about them. These results are consistent with the results of our previous research.[14] Through multiple rounds of user studies over the years, we have witnessed that the level of the knowledge that mobile users have of most sensors is increasing gradually.

Perceived risks of sensors

Similar to the above, we also examined the concern level that our participants expressed for each sensor. Following our previous work[14], we also limited our study to the level of perceived risks users associate with their PINs being discovered by each sensor since finding one’s PIN is a clear and intuitive security risk. 
(The actual risks of mobile sensors to people’s PINs and passwords were briefly discussed in the introduction.) Note that when our participants completed the concern form, they had not been given any security knowledge about sensors. This activity was done after they had the description about the sensors and worked with the sensor apps. As can be seen in Figure 4, right, we made the following observations. Ambient sensors: Almost all of our participants felt "Not" or only "A little concerned" about ambient sensors in relation to their risk to PINs. The only exception was the proximity sensor, of which the participants of our second workshop showed a little more concern. Communication sensors: The participants of the two groups showed consistent levels of concern for WiFi, Bluetooth, and NFC. Most of the participants were either "Not," "A little," or "Moderately concerned" about these sensors. Identity-related sensors: Both groups of our participants generally expressed more concern for biometric sensors. Yet, apart from the touch screen in the second workshop, none of these sensors received an aggregated percentage declaration of "Concerned" and "Extremely concerned" of more than 50%. Among these sensors, the touch screen, TouchID/fingerprint, and camera were on top of the list for both groups. FaceID, the haptic sensor, and the iris scanner had higher concern levels as well. Our participants did not think that the heart rate sensor, body sensors, and Active Edge can contribute to the PIN discovery attack scenario much. Motion sensors: The first group of our participants expressed "No" or "A little concern" about these sensors. However, similar to the knowledge level, the second group showed higher concern levels about motion sensors, and the gap was even more noticeable. This correlation between the knowledge and concern levels is interesting, as we discuss later. 
Note that despite the actual risks of these sensors and with this increase in the concern level of the participants of the second group, most of them were still "Not," "A little," or "Moderately concerned" about motion sensors being able to reveal their PINs.

In our previous study[14], we concluded that providing only the description of mobile sensors would not affect the concern level considerably. In some cases, participants expressed less concern after knowing the sensor description since they felt more confident about the functionality of the sensor. However, in some other cases, participants became more concerned after they knew about the sensor description. The same conclusion was stated in[17], where the participants were generally unaware of the keystroke monitoring risks associated with motion sensors. In this study, however, the concern level varied across the sensor categories. While the percentages have not changed much for ambient sensors, the perceived risk level for the PIN discovery scenario was slightly lower for most biometric and communication sensors in comparison to our previous research.[14] However, the concern level for motion sensors fluctuated for the participants of our first and second workshops. We observed a reduction in the concern level of the participants of the first group and a noticeable rise in the second group. This increase in the perceived risk levels for motion sensors in the second group was not expected. When we discussed it with our participants, we concluded that this could be due to various reasons, including having more knowledge about the actual risks of these sensors via different ways. As a matter of fact, a few of our participants pointed out that they had previously seen articles and news on the risks of motion sensors to sensitive information such as PINs. We believe that this could have contributed to this finding.

General knowledge vs. risk perception

Figure 4 (right and left) suggests that there may be a correlation between the relative level of knowledge users have about sensors and the relative level of risk they express for them. We confirmed our observation of this correlation by using Spearman’s rank-order correlation measure.[32] We ranked the sensors based on the level of user familiarity, using the same method applied in each category of sensors in Figure 4. Separately, the levels of concern were ranked as well. After applying Spearman’s equation, the correlation between the comparative knowledge and the comparative concern was r = 0.48 and r = 0.52 (p < 0.05) for the first group and the second group of our participants, respectively. This, together with our previous results[14], suggests that there was a moderate/strong correlation between the general knowledge and perceived risk. These results support the view that the more the users know about these sensors (before being presented with any information), the more concern they express about the risk of the sensors revealing PINs in general.

Apps and permissions review

In the final part of the workshop, we asked our participants to review the permissions of some of the pre-installed apps on their devices through the settings. In this section, the participants had the opportunity to go beyond sensor security and investigate access to all sorts of mobile OS resources by apps (Figure 3, left). For the second workshop, this activity was done in two forms: per app vs. per permission, as we explain later.

Reviewing permissions per app

The participants in both workshops picked a wide variety of apps to investigate the permissions, including system, social networking, gaming, banking, shopping, and discount apps. In most cases, they could successfully identify the functionality of the app and whether it had reasonable permissions or not. However, in some cases, the participants felt unsure about the permissions. 
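
As a side note on the Spearman rank-order correlation used in the "General knowledge vs. risk perception" analysis above, the following is a minimal sketch of how such a coefficient can be computed. It is not the authors' analysis code: the function names `average_ranks` and `spearman_rho` and the per-sensor scores are hypothetical, chosen only for illustration, and the sketch assumes tied scores receive the mean of their rank positions.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1..n; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    sd_x = sqrt(sum((a - mean_x) ** 2 for a in rx))
    sd_y = sqrt(sum((b - mean_y) ** 2 for b in ry))
    if sd_x == 0 or sd_y == 0:
        raise ValueError("rho is undefined when one variable is constant")
    return cov / (sd_x * sd_y)


# Hypothetical per-sensor scores (NOT data from the workshops):
# a familiarity score and a concern score for five illustrative sensors.
familiarity = [1, 2, 3, 4, 5]
concern = [5, 6, 7, 8, 7]
rho = spearman_rho(familiarity, concern)
```

A coefficient near 1 would indicate that sensors ranked as better known also tend to be ranked as more concerning; judging significance (such as the p < 0.05 reported above) requires a separate test that this sketch does not include.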
The decision made by the users to uninstall the app, limit its access, or leave it as-is varied across users and apps for various reasons.

Uninstalling: Some of our participants expressed their willingness to uninstall certain apps since they were over-privileged. In the comment section, the participants provided various reasons for this action: they don’t really need the app, they can replace it by using a web browser, they don’t understand the necessity of the permission, and/or they are concerned about their security and privacy. For example, after one of our participants discovered the permissions already given to a shopping app (camera, contacts, location, storage, and telephone), she expressed: “It does not need those things- uninstalled!” Similarly, another participant could easily infer that a discount app should not be able to modify/delete the SD card and decided to remove it. In some cases, the extra permissions without explanation made our participants upset, leading them to remove the app. For example, a participant stated that he did not know that some of his apps, such as a university app, had too many permissions, and he would uninstall them since he was “not happy with the fact that this app uses contacts.” Another participant stated that: “I don’t see why the BBC needs access to my location,” and he decided to remove it.

Disabling/limiting access: There were cases where participants could identify the risk of extra permissions granted to apps, but instead of uninstalling, they chose to disable certain accesses or limit them to while using the app. For instance, one participant observed that if she disabled the access to contacts, storage, and telephone, Spotify would still work. The same approach was taken by another participant, who limited FM Radio’s access to microphone and storage and LinkedIn’s access to camera, microphone, storage, and location, and continued using them. 
Another participant said that she would occasionally turn off location on Twitter, e.g., if she is on holiday. In another example, one of the participants commented: “[I] would remove photo and camera permissions but still use [Uber] app”. Some participants commented that they limited access to location to only while using apps such as Google Maps and Trainline. Leaving as-is: In some cases, our participants reviewed the app permissions and found them reasonable and not risky. For example, when one of our participants found out that a parking payment app has access to the camera, she commented: “Camera [is] used to take pictures of payment cards.” Another comment was made about a messaging app that had a variety of permissions; the user said: “[this app] needs those permissions to fully work.” Another participant said his taxi booking app uses location while the app is in-use, and he thought it was “secure and functional.” In some other cases, our participants could identify over-privileged apps but decided to leave the apps and their permissions as-is. They expressed various reasons for this decision. For example, one participant chose to continue using a discount app saying that “[I’m] not that concerned that it has access to photos.” Another participant said she would not uninstall a sleep monitoring app since “I find it useful for self-tracking. I don’t worry about people having access to that particular information [microphone, motion and fitness, mobile data] about me.” In another case, while our participant could list the extra permissions of a fitness app, she said she would not uninstall it since “I am addicted to it”. 
Another participant refused to uninstall a pedometer app expressing: “[I] don’t see the need for [access to] contacts and storage, but [I would] still use [it] as other apps ask for the same [permissions].” Another attendee listed camera, contacts, and location as Groupon’s (extra) permissions and commented: “[The app’s] benefits outweigh threats.” Another example is when one of our participants spotted that a university app uses location and stated: “I trust it and I frequently need it.”

Overall, we observed that this activity (app permission review) helped our participants to successfully identify over-privileged apps. However, different users chose to react differently on the matter. It seems that this decision-making process was affected by some general mental models such as the ubiquity of the app, the functionality of the app, its advantages vs. the disadvantages, (not) being worried about sharing data, (not) being aware of any real exploitation of these permissions, and trusting the app. In our discussions, the participants stated that they liked this permission review model since they could get an overall picture of each app and its permissions. They also argued that it helped them to keep using certain apps that they enjoy while limiting particular permissions on them.

Reviewing apps' accesses per permission

As mentioned before, in the second round of the workshop in 2018, we asked our participants to also review all the apps that have access to certain permissions, e.g., microphone, location, body sensors, etc. Recent versions of both Android and iOS provide the users with this review option (Figure 3, middle and right). Some of our participants were on older versions of Android, which did not support this activity. These participants were able to use our extra phones to complete this part. All of our participants found some apps with certain permissions that they did not approve of and decided to stop access. 
For example, when one of our participants realized that more than 35 of his pre-installed apps had access to location, he stated: “some of these [apps’] accesses do not seem necessary” and decided to disable them. Another participant observed that some of the pre-approved accesses, such as Messages’ access to heart rate, were not reasonable and should be stopped. A few of our participants stated that, via this way of reviewing, they felt that giving permission to too many apps without being aware of it was intrusive and upsetting. For example, a participant decided that he would stop access to the camera on some apps commenting: “e.g., Amazon [uses camera] and I don’t like it”. Another user said that the fact that too many apps had access to location and the camera was “quite intrusive when not known,” and they decided to deny some of those permissions. Some of our participants could not find a good explanation of why they needed to allow certain apps to have certain permissions. For example, one of our participants decided to stop access to body sensors, location, and the microphone on some of his apps stating: “some of these apps obviously need [these accesses], but others seem odd [that] they would need these.” We observed that when our participants did not understand the reason behind some of the permission requests and were doubtful, most of them chose to deny access. For example, a participant commented: “Unless I am sure of why [any app] needs it (SMS permission), I delete it (disable the access).” Another participant stated: “maybe [it is] risky to give access to camera to so many apps without knowing why?” and decided to disable some of these accesses.

In general, our participants found this way of permission review intuitive. In our discussions, they said that in this way, they could save time by reviewing the permissions that they were most worried about. 
They also discussed that they could reason better and make a more informed decision since they understood which permissions put them at risk.

Recommendations to different stakeholders

After we presented the sensor attacks to our participants in the workshops, we observed that they were shocked by the power of motion sensors. However, when completing the app permission review activity, they could not see whether certain apps had access to these sensors or not. For example, when reviewing the permissions, one of our participants commented: “why aren’t all of the sensors on this list to review?”. Hence, even if mobile users were very well aware of the risk of these sensors to their security and privacy, since mobile apps and websites do not ask for permission for many sensors (see Table 2), users would not have the option to disable the access. One way to fix this problem, which is commonly suggested by research papers, is to simply ask for permission for all sensors or sensor groups. However, this approach will introduce many usability problems. People already ignore the permission notifications required for sensitive resources such as the camera and microphone. Other solutions, such as using artificial intelligence (AI) for sensor management, have not been effectively implemented yet. We believe that more research (on both technical and human dimensions) in the field of sensor security should be carried out to contribute to this complex usable security problem. This research should be conducted in collaboration with the industry to achieve impactful results. Based on our research, we make the following recommendations:

1. Researchers and educators: Although the amount of technical research conducted on sensor security is considerable, human dimensions of the technology, especially education aspects, have not been addressed very well. 
When we asked for more comments on improving sensor security at the end of the workshop, one of the participants commented: “better education/information for smartphone users [is needed, e.g.,] on what app permissions really mean, and how [permission setting] can compromise privacy.” We understand that the focus of technical research might not be education, hence organizing similar workshops might not be the priority. However, apart from raising public awareness, holding such workshops for a non-technical audience is a strong medium to disseminate technical research. Part 2 of our workshop was a presentation about our research in sensor security. This part can be replaced with any other research in the field of sensor security, without diminishing the workshop’s goal. The feedback from non-technical audiences will lead technical research in an impactful direction. We have published our workshop slides for other educators and the general public. Other ways of raising public awareness include providing related articles on massive open online courses (MOOCs) and publishing user-friendly videos on YouTube. For example, we have provided two articles entitled Is your mobile phone spying on you? and Auditing your mobile app permissions in the online course Cyber Security: Safety at Home, Online, in Life, part of Newcastle University’s series of MOOCs. Through our second workshop, we also witnessed that publishing research findings via public media has an impact on the general knowledge of the users. We strongly encourage researchers to produce educational materials and report their experiences and findings on other aspects of sensor security.

2. App and web developers: Throughout our studies over the years, we have concluded that the factors that contribute to the user's risk inference about technology in general, and mobile sensors in particular, are complicated. It is well known that security and privacy are weak motivators in the adoption of apps. 
Therefore, app and web developers have a fundamental role in addressing this problem and delivering more secure apps to the users. As discussed by Abu-Salma et al.[33], developers are advised to secure tools with proven utility. Many mobile apps in app stores are “permission hungry.”[22] These extra permission requests are likely not understood by the majority of developers, who copy and paste code containing them into their applications.[34] This is how app developers end up inserting extra permission requests into their code. We advise developers not to copy code from unreliable sources into their apps. Instead, they should search for stable libraries and APIs to be used in their apps. Accordingly, including minimal permission requests in the app would lead to fewer security decisions to be made by the users when installing and using the app. Moreover, explaining the reason why the app is asking for certain permissions would improve the user experience. As an example, when one of our participants found out that a discount app has access to location, the participant commented: “Location allows me to find nearby offers- app gives explanation”. When we asked for more comments on improving sensor security at the end of the workshop, one of our participants wrote: “let the user know why permission is needed for the app to work and choose which features/permissions are reasonable.” Educating app developers about more secure products seems to be vital and is another topic of research on its own. Android Developer has recently published best practices for app permissions to be followed by programmers.[35] These best practices include: only use the permissions necessary for your app to work, pay attention to permissions required by libraries, be transparent, and make system accesses explicit. These are all consistent with the expectations that our participants expressed during the two workshops.

3. End users: As we observed in our studies, mobile users do not know that many apps have access to their mobile OS resources, either without asking for permission or via the permissions that they ignore. In order to keep their devices safer, we advise users to follow these general security practices:

Each of the above items can be developed by educators as educational material to be taught to mobile users. We believe that the problem of sensor security is already beyond mobile phones. The challenges are more serious when smart kitchens, smart homes, smart buildings, and smart cities are equipped with multiple sensor-enabled devices sensing people and their environment and broadcasting this information via IoT platforms. As a matter of fact, some of our participants listed a few dedicated IoT apps when they were auditing the app permissions. One example is Hive, which is described in its app description as: “a British Gas innovation that creates connected products designed to give people the control they want for their homes anytime, anywhere.” This app offers a wide range of features enabling the users to control their heating and hot water, home electrical appliances, and doors and windows, as well as report on whether movement is spotted inside the user's home via “sophisticated sensors.” One of our participants using this app commented: “It allows me to control my heating/hot water to make it more efficient. I have turned off analytics and location for security. A bit concerned as if someone hacked, they could analyse when I am at home.” We know that the risks of hacking into IoT platforms go beyond knowing whether or not someone is at home. It could be harmful to people’s lives as described by Ronen et al.[36] Hence, we encourage researchers to conduct more studies on human dimensions of sensors in IoT.

Limitations and future work

This research is limited in a few ways, which we plan to address in the future. We acknowledge that our participant set was less diverse compared to previous studies[14][17] since the recruitment process was through attending a technical conference, which attracts more tech-friendly people. 
Note that the first round of the workshop had more female participants due to the title and remit of the host conference in 2016: Thinking Digital Women. More of the second workshop’s participants were male, which is normally the case with Thinking Digital conferences. However, we believe the bias in our participants would not invalidate our results since they are compatible with the results of previous papers. Despite our attempt to choose the most functional sensor apps, the ones that we used in our workshops did not offer the whole range of sensors to the users to experiment with. This might not enable the users to understand fully the functionalities of all available sensors on their smart devices. In the future, we plan to develop our own fully functional sensor app and conduct studies by offering that to our participants.

In the second part of the workshop, our focus was on side channel attacks on users’ sensitive information such as touch actions and PINs via motion sensors. This part was presented before the app permission review activity. There are other types of attacks using motion sensors and/or other sensors, which we have not studied in this paper. Furthermore, we did not observe and measure the behavior of our participants on disabling permissions and removing apps before and after being presented with these security attacks. This is the case with the concern level before and after knowing the description and working with the sensor apps as well. These choices were made deliberately to keep the workshop length reasonable. Each of these can be researched on its own, which we leave as future work.

Apart from the above, we would like to study all the sensors available on smart devices in IoT platforms, especially in smart homes, e.g., smart kitchen items, smart toys, etc. We would like to learn more about the new sensors on other smart devices and what data they are broadcasting about users and their environments. 
In particular, we are interested in the actual risks of these sensors vs. the perceived risks that the users express for them. This is particularly interesting if studied from a legislation angle, e.g., with regard to the General Data Protection Regulation (GDPR). By conducting more research in this area, the academic community and the sensor industry will have a better vision of the human factors of this fast-growing technology, with more robust results.

Conclusions

In this paper, we reflected on the results of two workshops where we mainly explained the following three items to the mobile users: (i) the data generated by mobile sensors, (ii) how that data might be used to undermine their security and privacy, and (iii) what precautionary measures they could and should take. We studied the impact of teaching mobile users about sensors on their perceived risk levels for each sensor. The results showed that teaching about general aspects of sensors might not immediately improve people’s ability to perceive the risks, and other factors such as their prior general knowledge had a stronger impact. On the other hand, when we taught the permission reviewing technique as a precautionary measure, our participants could successfully identify over-privileged apps. Users’ decisions on modifying the app permissions, uninstalling the app, or keeping it as-is varied for various reasons. We believe this suggests that there is much room for more focus on education about mobile and sensor technology.

Supplemental material

Appendix A. Mobile Sensors’ Description

In the following, we present a brief description of each sensor:

Acknowledgements

We would like to thank Thinking Digital for hosting this workshop for two years and the participants who attended the workshop. We thank Anssi Kostiainen, from Intel and the chair of the W3C Device and Sensors Working Group, for his feedback on the second section of this article. We would like to thank Matthew Forshaw from Newcastle University for his feedback on the third section. All of our user studies were approved by Newcastle University’s Ethics Committee, and all the data were processed and saved according to the GDPR. This paper is based on Making Sense of Sensors: Mobile sensor security awareness and education, by Maryam Mehrnezhad, Ehsan Toreini, and Sami Alajrami, which appeared in the Proceedings of the International Workshop on Socio-Technical Aspects in Security and Trust (STAST), 2017.

Author contributions

Both authors have contributed during all stages of this paper, including conceptualization, methodology, running the workshop, and writing the paper. M.M. has analysed the self-declared forms completed by the participants in the workshop and presented the results in the paper.

Funding

This research received no external funding.

Conflicts of interest

The authors declare no conflicts of interest.

References

Notes

This presentation is faithful to the original, with only a few minor changes to presentation. Grammar was cleaned up for smoother reading. In some cases important information was missing from the references, and that information was added. A few of the inline URLs were turned into citations for this version. The inline URL to Altmetric and the May 2018 workshop from the original article were removed for this version because they were dead, unarchived URLs. The W3C Device and Sensors Working Group URL also changed; an archived version of the site was used for the citation in this version. Through various parts of this article, the four categories of sensors have been reorganized to be alphabetized, with the associated language tweaked to emphasize this. |
