Featured article of the week: March 20–26:

"SCIFIO: An extensible framework to support scientific image formats"

No gold standard exists in the world of scientific image acquisition; a proliferation of instruments, each with its own proprietary data format, has made out-of-the-box sharing of that data nearly impossible. In the field of light microscopy, the Bio-Formats library was designed to translate such proprietary data formats to a common, open-source schema, enabling sharing and reproduction of scientific results. While Bio-Formats has proved successful for microscopy images, the greater scientific community lacked a domain-independent framework for format translation.

SCIFIO (SCientific Image Format Input and Output) is presented as a freely available, open-source library unifying the mechanisms of reading and writing image data. The core of SCIFIO is its modular definition of formats, the design of which clearly outlines the components of image I/O to encourage extensibility, facilitated by the dynamic discovery of the SciJava plugin framework. SCIFIO is structured to support coexistence of multiple domain-specific open exchange formats, such as Bio-Formats’ OME-TIFF, within a unified environment. (Full article...)

Featured article of the week: March 13–19:

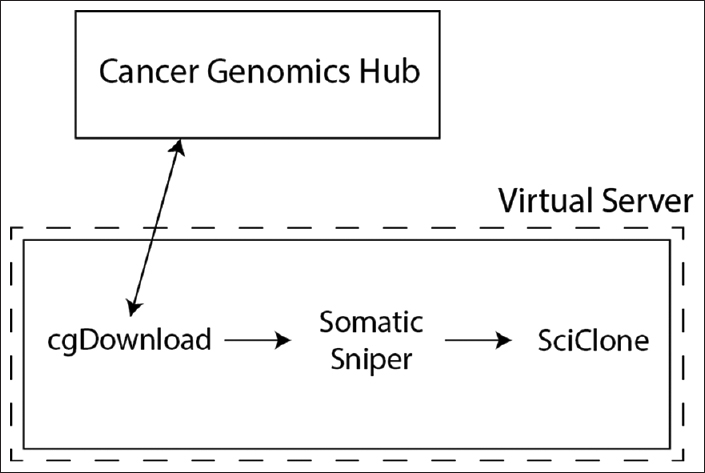

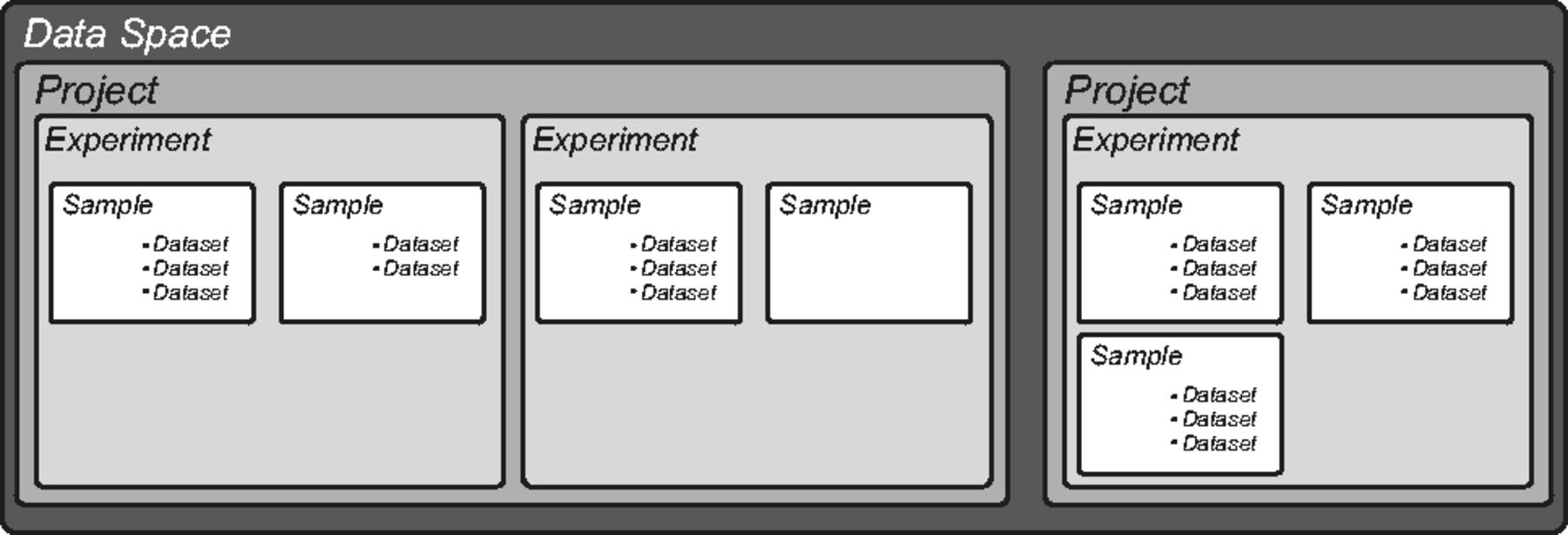

"Use of application containers and workflows for genomic data analysis"

The rapid acquisition of biological data and development of computationally intensive analyses have led to a need for novel approaches to software deployment. In particular, the complexity of common analytic tools for genomics makes them difficult to deploy and decreases the reproducibility of computational experiments. Recent application virtualization technologies, such as Docker, allow developers and bioinformaticians to isolate these applications and deploy secure, scalable platforms that have the potential to dramatically increase the efficiency of big data processing. While limitations exist, this study demonstrates a successful implementation of a pipeline with several discrete software applications for the analysis of next-generation sequencing (NGS) data. (Full article...)

Featured article of the week: March 6–12:

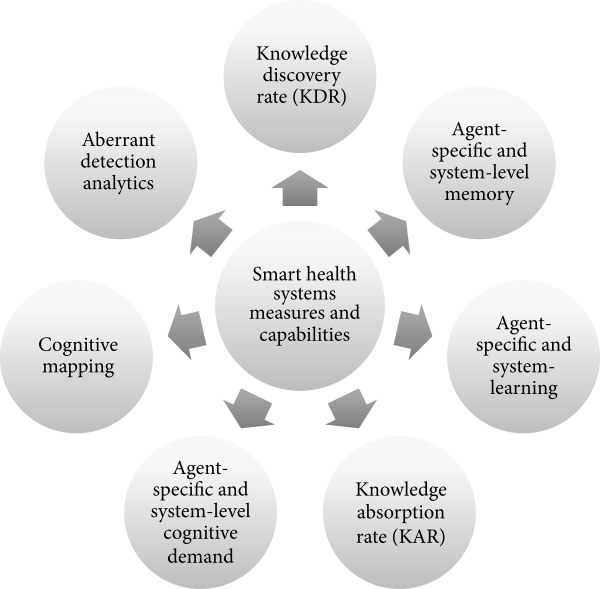

"Informatics metrics and measures for a smart public health systems approach: Information science perspective"

Public health informatics is an evolving domain in which practices constantly change to meet the demands of a highly complex public health and healthcare delivery system. Given the emergence of various concepts, such as learning health systems, smart health systems, and adaptive complex health systems, health informatics professionals would benefit from a common set of measures and capabilities to inform our modeling, measuring, and managing of health system “smartness.” Here, we introduce the concepts of organizational complexity, problem/issue complexity, and situational awareness as three codependent drivers of smart public health systems characteristics. We also propose seven smart public health systems measures and capabilities that are important in a public health informatics professional’s toolkit. (Full article...)

Featured article of the week: February 27–March 5:

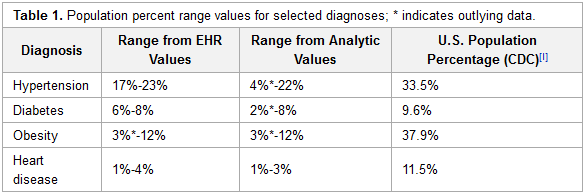

"Deployment of analytics into the healthcare safety net: Lessons learned"

As payment reforms shift healthcare reimbursement toward value-based payment programs, providers need the capability to work with data of greater complexity, scope and scale. This will in many instances necessitate a change in understanding of the value of data and the types of data needed for analysis to support operations and clinical practice. It will also require the deployment of different infrastructure and analytic tools. Community health centers (CHCs), which serve more than 25 million people and together form the nation’s largest single source of primary care for medically underserved communities and populations, are expanding and will need to optimize their capacity to leverage data as new payer and organizational models emerge.

To better understand existing capacity and help organizations plan for the strategic and expanded uses of data, a project was initiated that deployed contemporary, Hadoop-based, analytic technology into several multi-site CHCs and a primary care association (PCA) with an affiliated data warehouse supporting health centers across the state. (Full article...)

Featured article of the week: February 20–26:

"The growing need for microservices in bioinformatics"

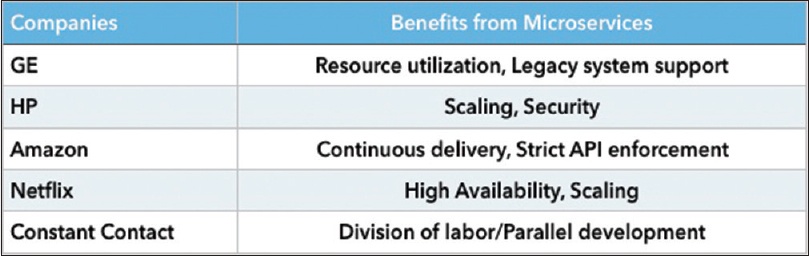

Within the information technology (IT) industry, best practices and standards are constantly evolving and being refined. In contrast, computer technology utilized within the healthcare industry often evolves at a glacial pace, with reduced opportunities for justified innovation. Although timely technology refreshes within an enterprise's overall technology stack can be costly, thoughtful adoption of select technologies with a demonstrated return on investment can be very effective in increasing productivity and, at the same time, reducing the burden of maintenance often associated with older and legacy systems.

In this brief technical communication, we introduce the concept of microservices as applied to the ecosystem of data analysis pipelines. Microservice architecture is a framework for dividing complex systems into easily managed parts. Each individual service is limited in functional scope, thereby conferring a higher measure of functional isolation and reliability to the collective solution. Moreover, maintenance challenges are greatly simplified by virtue of the reduced architectural complexity of each constitutive module. This fact notwithstanding, overall solutions built with a microservices-based approach provide equal or greater levels of functionality compared to conventional programming approaches. Bioinformatics, with its ever-increasing demand for performance and new testing algorithms, is the perfect use case for such a solution. (Full article...)

Featured article of the week: February 13–19:

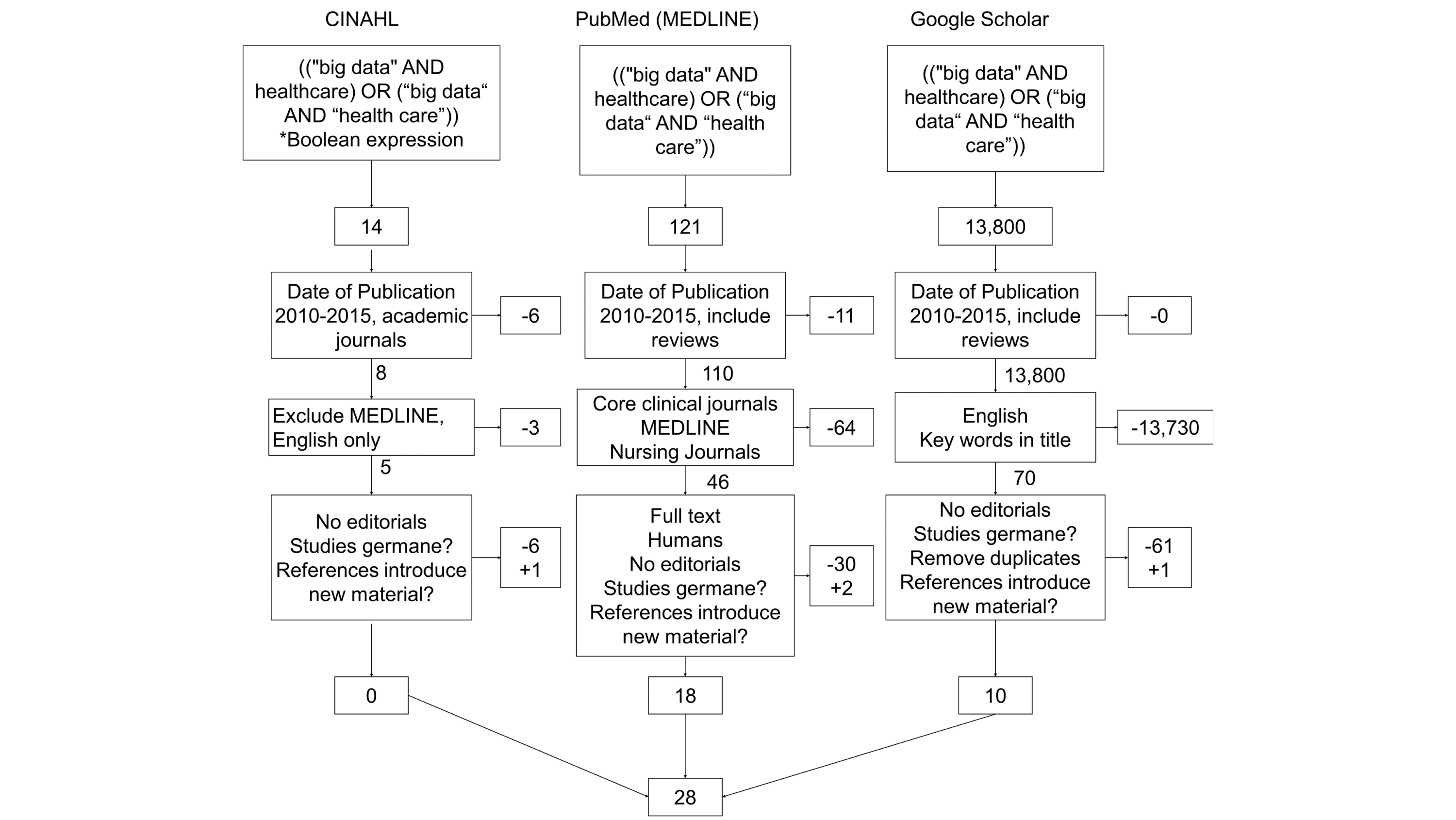

"Challenges and opportunities of big data in health care: A systematic review"

Big data analytics offers promise in many business sectors, and health care is looking at big data to provide answers to many age-related issues, particularly dementia and chronic disease management. The purpose of this review was to summarize the challenges faced by big data analytics and the opportunities that big data opens in health care. A total of three searches were performed for publications between January 1, 2010 and January 1, 2016 (PubMed/MEDLINE, CINAHL, and Google Scholar), and an assessment was made on content germane to big data in health care. From the results of the searches in research databases and Google Scholar (N=28), the authors summarized content and identified nine and 14 themes under the categories "Challenges" and "Opportunities," respectively. We rank-ordered and analyzed the themes based on the frequency of occurrence. (Full article...)

Featured article of the week: February 6–12:

"The impact of electronic health record (EHR) interoperability on immunization information system (IIS) data quality"

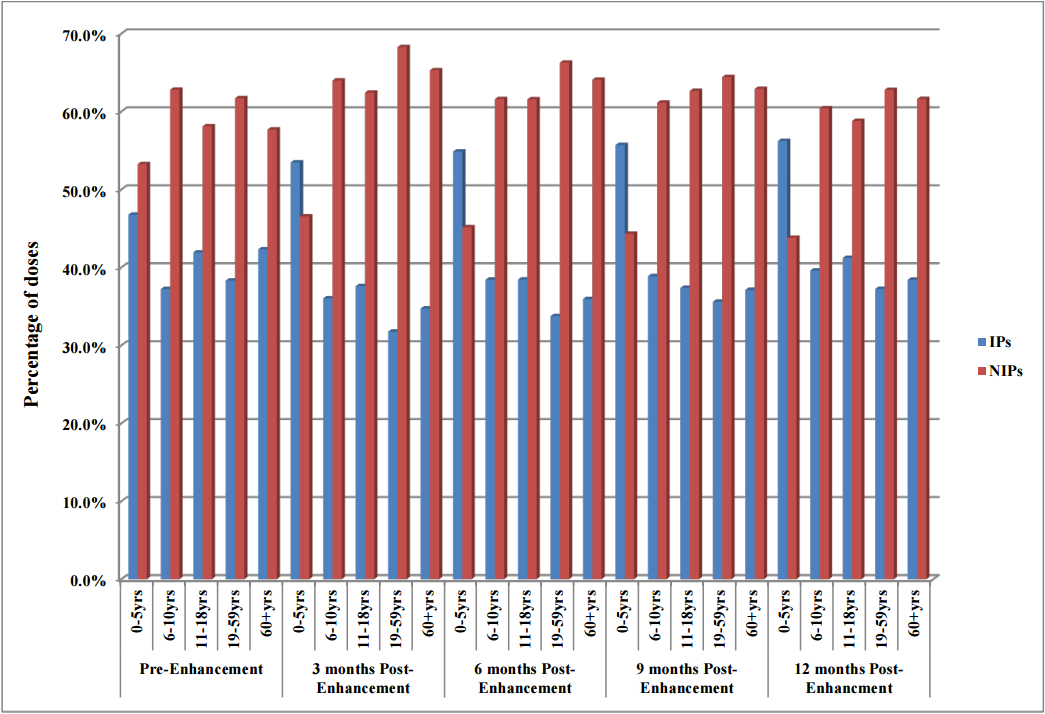

Objectives: To evaluate the impact of electronic health record (EHR) interoperability on the quality of immunization data in the North Dakota Immunization Information System (NDIIS).

NDIIS "doses administered" data was evaluated for completeness of the patient and dose-level core data elements for records that belong to interoperable and non-interoperable providers. Data from three months prior to the EHR interoperability enhancement was compared to data at three, six, nine and 12 months post-enhancement, following the interoperability go-live date. Doses administered per month and by age group, timeliness of vaccine entry and the number of duplicate clients added to the NDIIS were also compared, in addition to immunization rates for children 19–35 months of age and adolescents 11–18 years of age. (Full article...)

Featured article of the week: January 30–February 5:

"Bioinformatics workflow for clinical whole genome sequencing at Partners HealthCare Personalized Medicine"

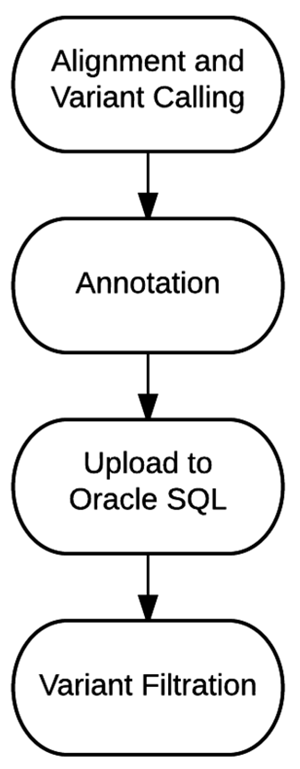

Effective implementation of precision medicine will be enhanced by a thorough understanding of each patient’s genetic composition to better treat his or her presenting symptoms or mitigate the onset of disease. This ideally includes the sequence information of a complete genome for each individual. At Partners HealthCare Personalized Medicine, we have developed a clinical process for whole genome sequencing (WGS) with application in both healthy individuals and those with disease. In this manuscript, we will describe our bioinformatics strategy to efficiently process and deliver genomic data to geneticists for clinical interpretation. We describe the handling of data from FASTQ to the final variant list for clinical review for the final report. We will also discuss our methodology for validating this workflow and the cost implications of running WGS. (Full article...)

Featured article of the week: January 23–29:

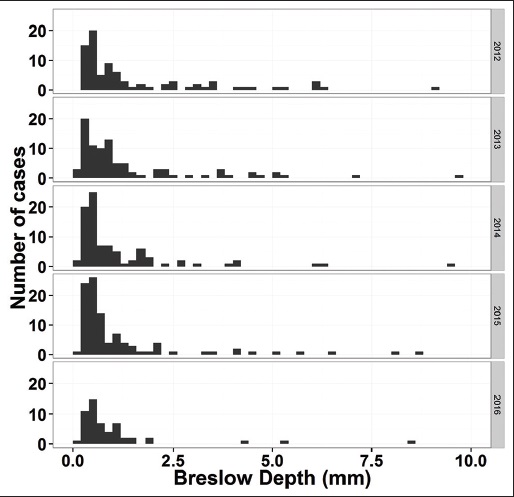

"Pathology report data extraction from relational database using R, with extraction from reports on melanoma of skin as an example"

(Full article...)

Featured article of the week: January 16–22:

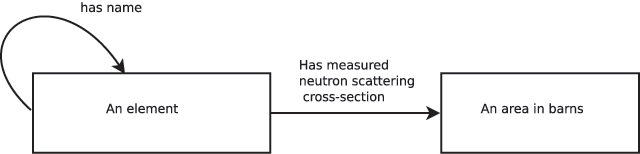

"A robust, format-agnostic scientific data transfer framework"

The olog approach of Spivak and Kent is applied to the practical development of data transfer frameworks, yielding simple rules for construction and assessment of data transfer standards. The simplicity, extensibility and modularity of such descriptions allow discipline experts unfamiliar with complex ontological constructs or toolsets to synthesize multiple pre-existing standards, potentially including a variety of file formats, into a single overarching ontology. These ontologies nevertheless capture all scientifically relevant prior knowledge, and when expressed in machine-readable form are sufficiently expressive to mediate translation between legacy and modern data formats. A format-independent programming interface informed by this ontology consists of six functions, of which only two handle data. Demonstration software implementing this interface is used to translate between two common diffraction image formats using such an ontology in place of an intermediate format. (Full article...)

Featured article of the week: January 9–15:

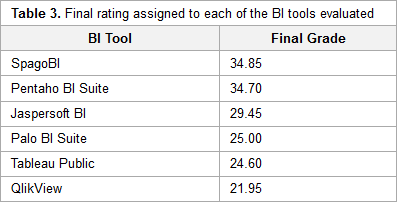

"A benchmarking analysis of open-source business intelligence tools in healthcare environments"

In recent years, a wide range of business intelligence (BI) technologies have been applied to different areas in order to support the decision-making process. BI enables the extraction of knowledge from the data stored. The healthcare industry is no exception, and so BI applications have been under investigation across multiple units of different institutions. Thus, in this article, we intend to analyze some open-source/free BI tools on the market and their applicability in the clinical sphere, taking into consideration the general characteristics of the clinical environment. For this purpose, six BI tools were selected, analyzed, and tested in a practical environment. Then, a comparison metric and a ranking were defined for the tested applications in order to choose the one that best applies to the extraction of useful knowledge and clinical data in a healthcare environment. Finally, a pervasive BI platform was developed using a real case in order to prove the tool's viability. (Full article...)

Featured article of the week: January 2–8:
