Journal:Secure data outsourcing in presence of the inference problem: Issues and directions

| Full article title | Secure data outsourcing in presence of the inference problem: Issues and directions |
|---|---|
| Journal | Journal of Information and Telecommunication |
| Author(s) | Jebali, Adel; Sassi, Salma; Jemai, Akderrazak |
| Author affiliation(s) | Tunis El Manar University, Jendouba University, Carthage University |
| Primary contact | Email: adel dot jbali at fst dot utm dot tn |
| Year published | 2020 |
| Volume and issue | 5(1) |
| Article # | 16–34 |
| DOI | 10.1080/24751839.2020.1819633 |
| ISSN | 2475-1847 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://www.tandfonline.com/doi/full/10.1080/24751839.2020.1819633 |
| Download | https://www.tandfonline.com/doi/pdf/10.1080/24751839.2020.1819633 (PDF) |

Abstract

With the emergence of the cloud computing paradigms, secure data outsourcing—moving some or most data to a third-party provider of secure data management services—has become one of the crucial challenges of modern computing. Data owners place their data among cloud service providers (CSPs) in order to increase flexibility, optimize storage, enhance data manipulation, and decrease processing time. Nevertheless, from a security point of view, access control proves to be a major concern in this situation seeing that the security policy of the data owner must be preserved when data is moved to the cloud. The lack of a comprehensive and systematic review on this topic in the available literature motivated us to review this research problem. Here, we discuss current and emerging research on privacy and confidentiality concerns in cloud-based data outsourcing and pinpoint potential issues that are still unresolved.

Keywords: cloud computing, data outsourcing, access control, inference leakage, secrecy and privacy

Introduction

In light of the increasing volume and variety of data from diverse sources (e.g., health systems, social insurance systems, scientific and academic data systems, smart cities, and social networks), in-house storage and processing of large collections of data has become very costly. Hence, modern database systems have evolved from a centralized storage architecture to a distributed one, and with it the database-as-a-service paradigm has emerged. Data owners are increasingly moving their data to cloud service providers (CSPs) in order to increase flexibility, optimize storage, enhance data manipulation, and decrease processing times. Nonetheless, security concerns are widely recognized as a major barrier to cloud computing and other data outsourcing or database-as-a-service arrangements. Users remain reluctant to place their sensitive data in the cloud due to concerns about data disclosure to potentially untrusted external parties and other malicious parties.[1] Because their data is processed and stored externally, data owners feel they have little control over it, which puts data privacy at risk. From this perspective, access control is a major challenge, since the security policy of a data owner must be preserved when data is moved to the cloud. CSPs enforce access control policies by keeping some sensitive data separated from each other.[2] Techniques like encryption also help to better guarantee the confidentiality of data.[3][4][5] The intent of encryption is to break sensitive associations among outsourced data by encrypting some attributes of that data. However, other data security concerns exist as well. Security breaches in distributed cloud databases could be exacerbated by inference leakage, which occurs when a malicious actor uses information from a legitimate public response to discover more sensitive information, often from metadata.
During the last two decades, researchers have devoted significant effort to enforcing access control policies and privacy protection requirements externally while maintaining a balance with data utility.[6][7][8][9][10][11][12]

In this paper, we review the current and emerging research on privacy and confidentiality concerns in data outsourcing and highlight research directions in this field. In summary, our systematic review addresses security concerns in cloud database systems for both communicating and non-communicating servers. We also survey this research field in relation to the inference problem and the unresolved problems that are introduced. Recognizing these challenges, this paper provides an overview of our proposed solution, which is still ongoing work. The crux of that solution is to first optimize data distribution without the need to query the workload, then partition the database in the cloud by taking into consideration access control policies and data utility, before finally running a query evaluation model on a big data framework to securely process distributed queries while retaining access control.

The remainder of this paper is organized as follows. The next section describes the literature review methodology adopted in this paper. After that, we review emerging research on data outsourcing in the context of privacy concerns and data utility. Then we discuss data outsourcing in relation to the inference problem. Afterwards, we introduce our proposed solution to implement a secure distributed cloud database on a big data framework (Apache Spark). We close with future research directions and challenges, as well as our final conclusions.

Literature review methodology

The methodology for literature review adopted in this paper follows the checklist proposed by the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement.[13] It includes, as shown in Figure 1, three steps: input literature, processing steps, and review output.

Input literature

In this section we describe the selected literature and its selection process. First, an advanced keyword search was conducted on the Google Scholar search engine, with a time filter from January 1, 1990 to December 31, 2019. Table 1 lists keywords used in different Google Scholar queries.

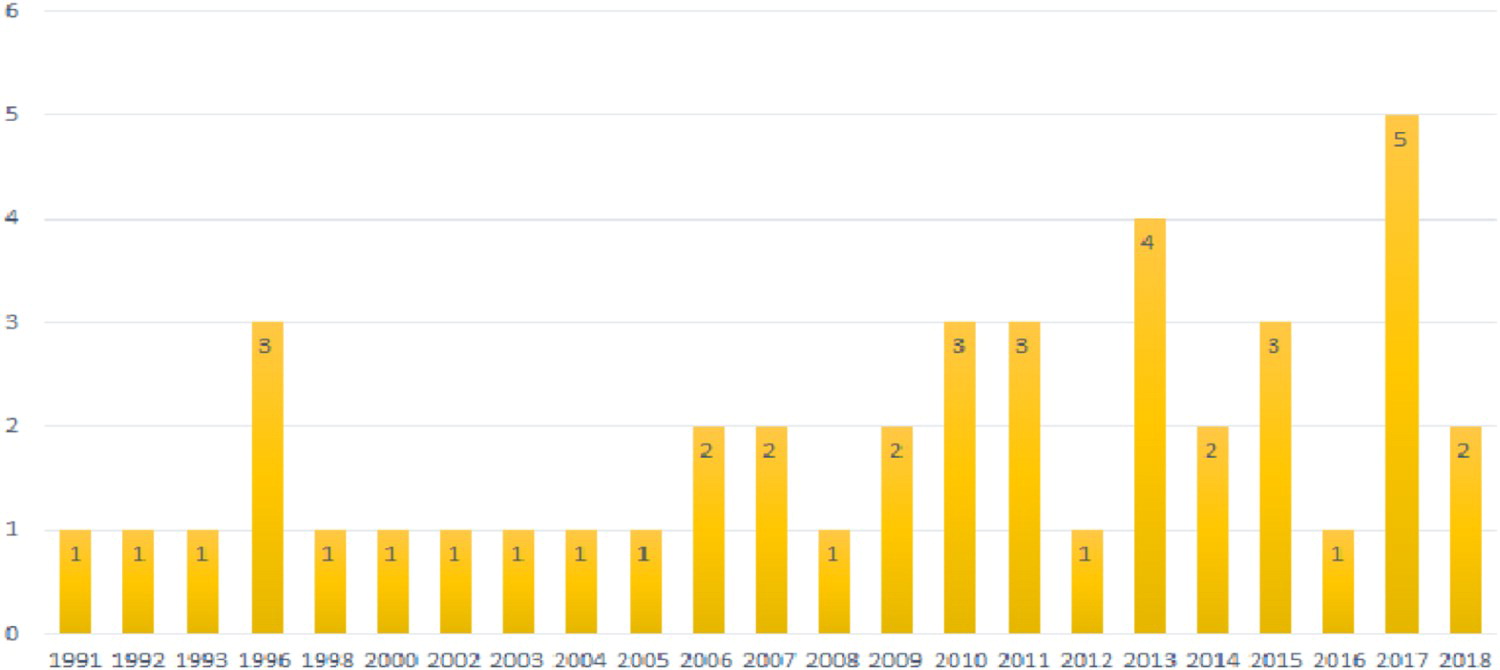

The logical operator used between keywords during search was the "And" operator. Finally, from the 269 viewed papers, 43 articles were retained for review. Figure 2 shows their distribution by publication year.

Processing steps

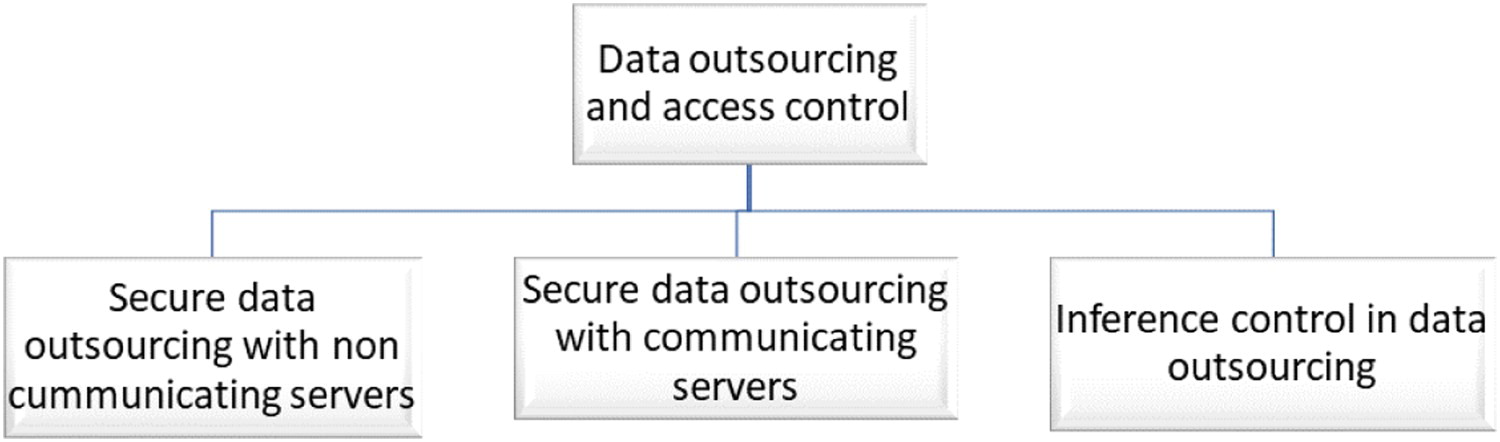

During the review, papers were processed by identifying the problem, understanding the proposed solution process, and listing the important findings. We summarized each paper and compared it with papers addressing a similar problem. Then, for each processed paper, three or four critical sentences were added to highlight its limits and specify potential directions that may be followed to enhance the proposed approach. Based on our literature review, we classified the data outsourcing and access control papers into three categories, as shown in Figure 3. The first category of papers addresses the problem of secure data outsourcing when the servers in the cloud are unaware of each other. The second category addresses secure data outsourcing where interaction between servers exists and how this interaction can aggravate the situation. In the last category, we address data outsourcing in relation to the inference problem, as inference can exploit semantic constraints to bypass authorization policies at the cloud level.

Review output

The outcome of the methodological review process is presented later in this paper as our proposed solution. In the "Proposed solution" section, we present an incremental approach composed of three steps, each step treating one of the three problems mentioned in the previous subsection. We believe that our proposed solution is capable of providing good results compared to other reviewed approaches. Afterwards, we report other potential future research areas and challenges.

Preserving confidentiality in data outsourcing scenarios

There is a consensus in the security research community about the efficiency of data outsourcing for solving data management problems.[2] This consists of moving data from in-house storage to cloud databases, while also maintaining a balance between data confidentiality and utility (Figure 4).

CSPs are considered honest-but-curious: the database servers answer user queries correctly and do not manage stored data, but they attempt to intelligently analyze data and queries in order to learn as much information as possible from them.

Two powerful techniques have been proposed to enforce access control in cloud databases. The first technique exploits vertical database fragmentation to keep some sensitive data separated from each other. The second technique resorts to encryption to make a single attribute invisible to unauthorized users. These two techniques can be implemented using the following approaches:

- Full outsourcing: The entire in-house database is moved to the cloud. It considers vertical database fragmentation to enforce confidentiality constraints with more than two attributes by keeping them separated from each other among distributed servers. Moreover, it resorts to encryption in order to hide confidentiality constraints with a single attribute.[6]

- "Keep a few": This approach departs from encryption by involving the owner side. The attributes that would otherwise be encrypted are stored in plain text on the owner side, since the owner is considered a trusted party. The rest of the database is distributed among servers while maintaining data confidentiality through vertical fragmentation.[11]

Aside from the fact that encrypting data for storing them externally carries a considerable cost[2], previous studies have primarily concentrated on non-communicating cloud servers.[6][10][11][12][14] In this situation, servers are unaware of each other and do not exchange any information. When a master node receives a query, it decomposes it and processes it locally without the need to perform a join query. In recent years, researchers have studied the effect of communication between servers on query execution, and secure query evaluation strategies have been elaborated.[4][15][16][17]

In the rest of this section, we discuss current and emerging research efforts in the first two of the three mentioned architectures. The third, inference control in data outsourcing, will be discussed in the following section.

Secure data outsourcing with non-communicating servers

In 2005, Aggarwal et al. presented some of the earliest research attempting to enforce access control in database outsourcing using vertical fragmentation.[6] Under the assumption that servers do not communicate, the work aimed to split the database across two untrusted servers while preserving data privacy, with some of the attributes possibly encrypted. They defined a secure fragmentation of a relation R as a triple (F1, F2, E), where F1 and F2 contain attributes in plain text stored on different servers and E is the set of encrypted attributes. The tuple identifier and the encrypted attributes were replicated with each fragment. The protection measures were also augmented by a query evaluation technique defining how queries on the original table can be transformed into queries on the fragmented table.
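As a minimal sketch of the definition above, the following Python snippet checks whether a triple (F1, F2, E) is a secure fragmentation. The attribute names, the example constraints, and the `is_secure_fragmentation` helper are hypothetical illustrations rather than the algorithm of Aggarwal et al.; the check assumes that each confidentiality constraint is a set of attributes that must never appear together in plain text on a single server.

```python
from typing import FrozenSet, Iterable, Set


def is_secure_fragmentation(
    attributes: Set[str],
    f1: Set[str],
    f2: Set[str],
    encrypted: Set[str],
    constraints: Iterable[FrozenSet[str]],
) -> bool:
    """Return True if (f1, f2, encrypted) covers the relation and no
    confidentiality constraint is fully visible in plain text on one server."""
    # Every attribute of the relation must be stored somewhere.
    if f1 | f2 | encrypted != attributes:
        return False
    # Encrypted attributes must not also be stored in plain text.
    if encrypted & (f1 | f2):
        return False
    # A constraint is violated when all of its attributes sit in one fragment.
    return not any(c <= f1 or c <= f2 for c in constraints)


# Hypothetical patient relation and constraints (illustrative only).
relation = {"ssn", "name", "dob", "illness", "doctor"}
constraints = [
    frozenset({"ssn"}),              # singleton: sensitive on its own
    frozenset({"name", "illness"}),  # association that must stay unlinkable
    frozenset({"dob", "illness"}),
]

safe = is_secure_fragmentation(
    relation, {"name", "dob"}, {"illness", "doctor"}, {"ssn"}, constraints
)
unsafe = is_secure_fragmentation(
    relation, {"name", "illness"}, {"dob", "doctor"}, {"ssn"}, constraints
)
print(safe, unsafe)
```

In this toy setting the first triple is accepted, while the second is rejected because `name` and `illness` end up in plain text on the same server. Singleton constraints can only be satisfied by placing the attribute in the encrypted set.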

The work of Hudic et al.[18] introduces an approach to enforce confidentiality and privacy while outsourcing data to a CSP. The proposed technique relies on vertical fragmentation and applies only minimal encryption to prevent data exposure to malicious parties. However, the fragmentation algorithm requires the logical database schema to be in third normal form to produce a good fragmentation design, and the query execution cost was not proven to be minimal.

In 2007, Ciriani et al.[5] addressed the problem of privacy preserving data outsourcing by resorting to the combination of fragmentation and encryption. The former is exploited to break sensitive associations between attributes, while the latter enforces the privacy of singleton confidentiality constraints. The authors go on to define a formal model of minimal fragmentation and propose a heuristic minimal fragmentation algorithm to efficiently execute queries over fragments while preserving security properties. However, when a query executed over a fragment involves an attribute that is encrypted, an additional query is executed to evaluate the conditions of the attribute, leading to performance degradation by slowing down query processing.

In 2011, Ciriani et al.[19] addressed the concept of secure data publishing in the presence of confidentiality and visibility constraints. By modeling these two constraints as Boolean formulas and fragments as complete truth assignments, the authors rely on the Ordered Binary Decision Diagrams (OBDD) technique to check whether a fragmentation satisfies confidentiality and visibility constraints. The proposed algorithm runs using OBDD and returns a fragmentation that guarantees correctness and minimality. However, query execution cost was not investigated in this paper, and the algorithm runs only on a database schema with a single relation.

Xu et al.[1] studied the problem of finding secure fragmentation with minimum cost for query support in 2015. First, they define the cost of a fragmentation F as the sum of the cost of each query Qi executed on F multiplied by the execution frequency of Qi. Second, they resort to a heuristic local search graph-based approach to obtain a near-optimal fragmentation. The search space was modeled as a fragmentation graph, with transformations between fragmentations as a set of edges E. Then, two search strategies were proposed: a static search strategy, which is invariant with the number of steps in a solution path, as well as a dynamic search strategy based on guided local search, which guarantees the safeness of the final solution while avoiding a dead end. However, this paper does not investigate visibility constraints, which are an important concept for data utility. Moreover, other heuristic search techniques could have been addressed (e.g., Tabu search or simulated annealing).
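The frequency-weighted cost described above can be sketched in a few lines of Python. The per-query cost model used here (the number of fragments a query has to touch) is only an assumption made for illustration, as are the function names and the toy workload; Xu et al. may use a different per-query cost.

```python
from typing import Dict, FrozenSet, List


def query_cost(query_attrs: FrozenSet[str], fragments: List[FrozenSet[str]]) -> int:
    """Hypothetical per-query cost: the number of fragments the query touches."""
    return sum(1 for fragment in fragments if fragment & query_attrs)


def fragmentation_cost(
    fragments: List[FrozenSet[str]], workload: Dict[FrozenSet[str], float]
) -> float:
    """Sum over all queries of (cost of the query on the fragmentation)
    multiplied by the execution frequency of that query."""
    return sum(
        query_cost(attrs, fragments) * freq for attrs, freq in workload.items()
    )


# Toy workload: attribute set of each query mapped to its frequency.
workload = {
    frozenset({"name", "dob"}): 10,
    frozenset({"name", "illness"}): 2,
}
frag_a = [frozenset({"name", "dob"}), frozenset({"illness", "doctor"})]
frag_b = [frozenset({"name"}), frozenset({"dob"}), frozenset({"illness", "doctor"})]

cost_a = fragmentation_cost(frag_a, workload)
cost_b = fragmentation_cost(frag_b, workload)
print(cost_a, cost_b)
```

Under this assumed cost model, `frag_a` is cheaper than `frag_b` because the most frequent query is answered from a single fragment. A local search of the kind described above would compare neighboring fragmentations using such a score.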

The 2009 work of Ciriani et al.[11] puts forward a new paradigm for securely publishing data in the cloud while completely departing from encryption, since encryption is sometimes considered a very rigid tool that is delicate in its configuration, while potentially slowing down query processing. The idea behind this work is to engage the owner side (assumed to be a trusted party) to store a limited portion of data (that would otherwise be encrypted) in the clear and use vertical fragmentation to break sensitive associations among data to be stored in the cloud. Their proposed algorithm computes a fragmentation solution that minimizes the load for the data owner while satisfying privacy requirements. Moreover, the authors highlight other metrics that can be used to characterize the quality of a fragmentation and decide which attribute is assigned to the client side and which attribute is externalized. However, engaging the client to enforce access control requires mediating every query in the system, which could lead to bottlenecks and negatively impact performance.

In 2017, Bollwein and Wiese[8] proposed a separation of duties technique based on vertical fragmentation to address the problem of preserving confidentiality when outsourcing data to a CSP. To ensure privacy requirements were met, confidentiality constraints and data dependencies were introduced. The separation of duties problem was treated as an optimization problem to maximize the utility of the fragmented database and to enhance query execution over the distributed servers. However, the optimization problem was addressed only from the point of view of minimizing the number of distributed servers. Additionally, when collaboration between servers is established, the separation of duties approach is no longer sufficient to preserve confidentiality constraints. The NP-hardness of the separation of duties problem discussed in Bollwein and Wiese[8] was proven by the authors the following year.[9] The separation of duties problem was addressed as an optimization problem combining two well-known NP-hard problems: bin packing and vertex coloring. The bin packing problem was introduced to take into consideration the capacity constraints of the servers, with the view that fragments should be placed in a minimum number of servers without exceeding the maximum capacity. Meanwhile, vertex coloring was introduced to enforce confidentiality constraints, seeing that the association of certain attributes in the same server violates the confidentiality property. We should note, however, that this paper studies the separation of duties problem for single-relation databases, and to make the theory applicable in practical scenarios, a many-relations database should be used.
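To illustrate how the bin packing and vertex coloring views combine, here is a small first-fit heuristic in Python. It is only a sketch under simplifying assumptions: confidentiality constraints are reduced to pairs of attributes that may not share a server, every attribute has a storage size, and all servers have the same capacity. The function name, sizes, and conflicts are hypothetical, and this greedy heuristic is not the optimization procedure of Bollwein and Wiese; it does not guarantee a minimum number of servers.

```python
from typing import Dict, List, Set, Tuple


def assign_attributes(
    sizes: Dict[str, int],
    conflicts: Set[Tuple[str, str]],
    capacity: int,
) -> List[Set[str]]:
    """First-fit heuristic: place each attribute on the first server that has
    room for it (bin packing) and holds no conflicting attribute (coloring)."""
    conflict_map: Dict[str, Set[str]] = {a: set() for a in sizes}
    for a, b in conflicts:
        conflict_map[a].add(b)
        conflict_map[b].add(a)

    servers: List[Set[str]] = []
    loads: List[int] = []
    # Larger attributes first, a common first-fit-decreasing ordering.
    for attr in sorted(sizes, key=lambda a: (-sizes[a], a)):
        if sizes[attr] > capacity:
            raise ValueError(f"{attr} does not fit on any server")
        for i, server in enumerate(servers):
            fits = loads[i] + sizes[attr] <= capacity
            if fits and not (server & conflict_map[attr]):
                server.add(attr)
                loads[i] += sizes[attr]
                break
        else:
            # No existing server is suitable, so open a new one.
            servers.append({attr})
            loads.append(sizes[attr])
    return servers


# Hypothetical attribute sizes and pairwise confidentiality conflicts.
sizes = {"name": 3, "dob": 2, "illness": 4, "doctor": 3}
conflicts = {("name", "illness"), ("dob", "illness")}
servers = assign_attributes(sizes, conflicts, capacity=6)
print(servers)
```

The capacity test plays the role of the bin packing constraint and the conflict test plays the role of the coloring constraint, with each server acting as one color.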

Keeping in mind the fact that communication between distributed servers in data outsourcing scenarios exacerbates privacy concerns, secure query evaluation strategies should be adopted. In the next subsection we investigate prior research on secure data outsourcing with communicating servers.

Secure data outsourcing: The case of communicating servers

Over the past few years, some researchers have also investigated the problem of data outsourcing with communicating servers.[4][15][16][17] Aside from attempting to guarantee confidentiality and privacy preservation when moving databases to the cloud, these works also implemented secure query evaluation strategies to retain the overall access control policy when servers communicate with each other. It is clear that when servers (containing sensitive attributes whose association is forbidden) interact through join queries, a user’s privacy will be at risk. As such, secure query evaluation strategies aim to prevent the linking of sensitive attributes attempted by malicious actors.

Building on previous work[4], Bkakria et al.[15] propose an approach that securely outsources data based on fragmentation and encryption. It also enforces access control when querying data by resorting to the query privacy technique. The approach examined the case of a many-relations database with new inter-table confidentiality constraints. The approach assumed that distributed servers could collude to break data confidentiality, and as such the connection between servers was intended to be based on a primary key or foreign key. Additionally, the query evaluation model, which is based on private information retrieval, ensures sensitive attributes remain unlinkable by malicious actors using a semi-join query. However, their proposed technique requires the database schema to be normalized, and it generates a huge number of confidentiality constraints due to the transformation of inter-table constraints into singleton and association constraints, which could affect the quality of the fragmentation algorithm. More generic queries should also be considered.

A join query integrity check was tackled in the 2016 work of di Vimercati et al.[16] Inspired by prior work[17], the authors proposed a new technique for verifying the integrity of join queries computed by potentially untrusted cloud providers. The authors also aimed to prevent servers from learning from answered queries, which could lead to a breach of users' privacy. To do so, they first showed how markers, twins, salts, and buckets can be adapted to preserve integrity when a join query is executed as a semi-join. They then introduced two strategies to minimize the size of the verification: limit the adoption of buckets and salts to twins and markers only, and represent twins and markers through slim tuples. Additionally, the authors demonstrated through their experiments how the computational and communication overhead of an integrity check can be limited.

Discussion

To summarize, we can classify the previously discussed approaches according to the following criteria: confidentiality constraints support, optimal distribution support, and secure query evaluation strategy support. We would like to mention that optimal distribution is treated through secure distributions that guarantee minimum query execution costs over fragments. From this point, it is clear that all mentioned approaches support access control verification through confidentiality constraints. However, query evaluations have not been tackled in all works.[8][5][11][14] These approaches differ in that some of them ensure minimum query execution costs and data utility for the database application, while others address the problem of data outsourcing with confidentiality constraints only. Among the secure database distribution approaches with query evaluation strategies, we find that the work of Bkakria et al.[4] provides an integral framework ensuring secure database fragmentation and communication between distributed servers. It also shows a reasonable query execution cost.

Nevertheless, Bkakria et al. assume that the threat comes from the cloud service providers that try to collude to break sensitive association between attributes. It does not address the case of an internal threat, where a malicious user aims to bypass access control with an inference channel. As such, we present an insightful discussion about data outsourcing in the presence of the inference problem in the following section.

Data outsourcing and the inference problem

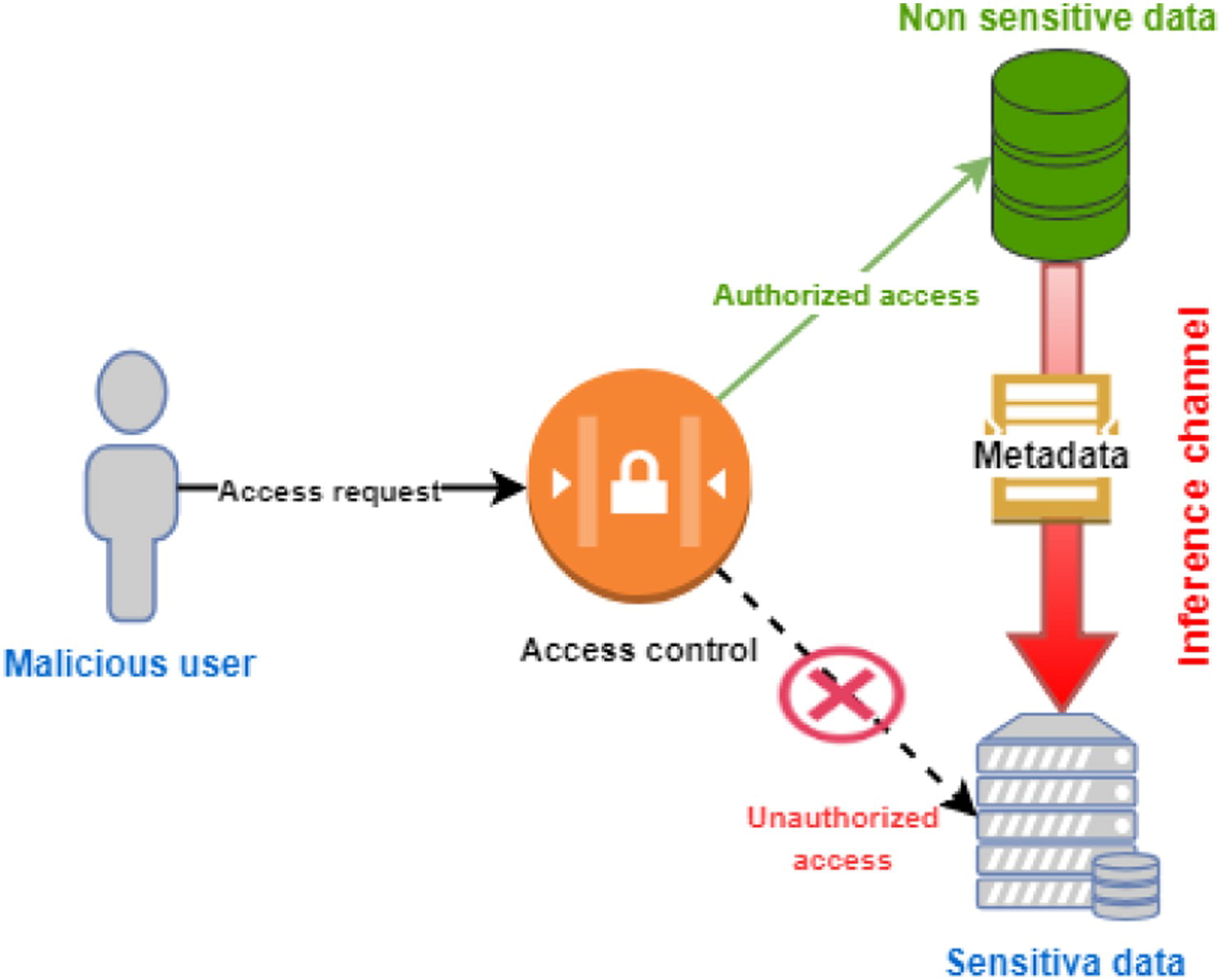

Access control models protect sensitive data from direct disclosure via direct accesses; however, they fail to prevent indirect accesses.[20] Indirect accesses via inference channels occur when a malicious user combines the legitimate response that they received from the system with metadata (Figure 5). According to Guarnieri et al.[21], types of external information that can be combined with legitimate data in order to produce an inference channel include database schema, system semantics, statistical information, exceptions, error messages, user-defined functions, and data dependencies.

Although access control and inference control share the same goal of preventing unauthorized data disclosure, they differ in several fundamental aspects.[22]

Table 2 highlights the major differences between them. According to our comparison in Table 2, we find that access control is preferable to inference control from a complexity perspective. Consequently, several researchers have attempted to replace inference control engines with access control mechanisms. We refer the interested reader to Biskup et al. (2008)[23], Biskup et al. (2010)[24], and Katos et al.[22], as the discussion of these approaches is beyond the scope of this paper.

Inference attacks and prevention methods

According to Farkas and Jajodia[20], there are three types of inference attacks: statistical attacks, semantic attacks, and inference attacks due to data mining. For each of these attack types, researchers have devoted significant effort to dealing with the inference problem. For statistical attacks, techniques like anonymization and data perturbation have been developed to protect data from indirect access. For security threats based on data mining, techniques like privacy-preserving data mining and privacy-preserving data publishing have been developed. Furthermore, additional research has also examined semantic attacks.[25][26][27] The literature offers more than one criterion for classifying approaches that deal with inference. One proposed criterion is to classify these approaches according to data and schema level.[28] In such a classification, inference constraints are classified into a schema constraints level or a data constraints level. Another criterion classifies approaches according to the time when the inference control techniques are performed. According to this criterion, the proposed approaches are classified into two categories: design time[29][30][31][32] and query run time.[25][26][33][34]

The purpose of inference control at "design time" is to detect inference channels early on and eliminate them. This approach provides better performance for the system since no monitoring module is needed when the users query the database, which improves query execution time. Nevertheless, design time approaches are too restrictive and may lead to over-classification of the data. Additionally, they require that the designer have a firm understanding of how the system will be utilized. On the other hand, "run time" approaches provide data availability since they monitor the suspicious queries at run time. However, run time approaches lead to performance degradation of the database server since every query needs to be checked by the inference engine. Furthermore, the inference engine needs to manage a huge number of log files and users. As a result, this could slow down query processing. In addition, run time approaches could induce a non-deterministic access control behavior (e.g., users with the same privileges may not get the same response).

From this perspective, we can conclude that the main evaluation criterion of these techniques is a trade-off between availability and system performance. Some works have been elaborated to overcome these problems, especially for run time approaches. For example, Yang et al.[35] developed a new paradigm of inference control with trusted computing to push the inference control from server side to client side in order to mitigate the bottleneck on the database server. Furthermore, Staddon[36] developed a run time inference control technique that retains fast query processing. The idea behind this work was to make query processing time depend on the length of the inference channel instead of user query history.

Inference control in cloud data integration systems

Data outsourcing and the inference problem is an area of research that has been investigated for many years.[12][37][38][39][40][41][42][43] Inference leakage is recognized as a major barrier to cloud computing and other data outsourcing or database-as-a-service arrangements. The problem is that the designer of the system cannot anticipate the inference channels that arise on the cloud level and could lead to security breaches.

Researchers like da Silva et al.[38] have been able to pinpoint the inference that occurs in a homogeneous peer agent through distributed data mining, calling this process a "peer-to-peer agent-based data mining system." They assert that performing distributed data mining (DDM) in such extremely open distributed systems exacerbates data privacy and security issues. As a matter of fact, inference occurs in DDM when one or more peer sites learn any confidential information (e.g., a model, patterns, or data themselves) about the dataset owned by other peers during a data mining session. The authors classified inference attacks in DDM in two categories:

- Inside attack scenario: This scenario occurs when a peer tries to infer sensitive information from other peers in the same mining group. Depending on the number of attackers, the authors make a distinction between a single attack (when one peer behaves maliciously) and a coalition attack (when many sites collude to attack one site). Moreover, a probe attack was introduced by the authors, which is independent of the number of peers participating in the attack.

- Outside attack scenario: This scenario takes place when a set of malicious peers try to infer useful information from other peers in a different mining group. In this case, an eavesdropping channel attack is performed by malicious peers to steal information from other peers.

After identifying DDM inference attacks, the authors propose an algorithm known as KDEC to control potential inside and outside attacks on a particular scheme for homogeneous distributed clustering. The main idea behind KDEC is to reconstruct the data from the kernel density estimates, given that a malicious peer can use the reconstruction algorithm to infer non-local data. However, the algorithm proposed by the authors needs to be improved in terms of accuracy to expose further possible weaknesses of the KDEC scheme.

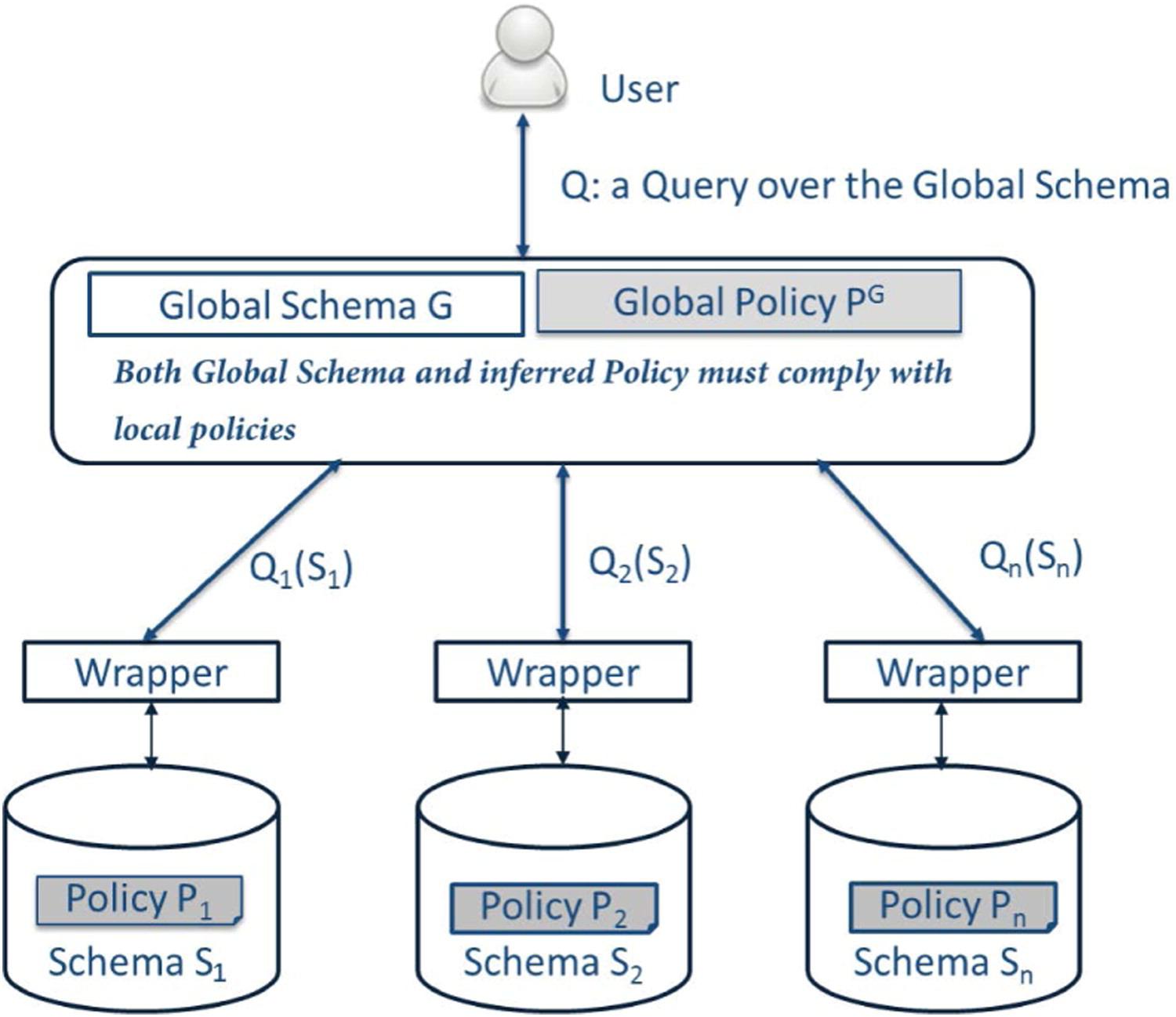

Inference control in cloud integration systems has also been investigated in the last decade through the work of Haddad et al.[39], Sayah et al.[40], and Sellami et al.[41] In such systems, a mediator is defined as a unique entry point to the distributed data sources. It provides the user a unique view of the distributed data. From a security point of view, access control is a major challenge in this situation since the global policy of the mediator in the cloud level must comply with the back-end data source policies, in addition to possibly enforcing additional security properties (Figure 6). The problem is that the system (or the designer of the system) cannot anticipate the inference channels that arise due to the dependencies that appear at the mediator level.

The first known work attempting to control inference in data integration systems is by Haddad et al.[39] The authors propose an incremental approach to prevent inference with functional dependencies. The proposed methodology includes three steps:

- Synthesize global policies: Derive the authorization rule of each virtual relation individually, in a way that preserves the local authorizations of the local relations involved in the virtual relation.

- Detect violations: By resorting to a graph-based approach, this step aims at identifying all the violations that could occur using functional dependencies. Such violations are called "transaction violations," consisting of a series of innocuous queries that lead to violation of authorization rules.

- Reconfigure authorization policies: The authors propose two methods to forbid the completion of each transaction violation. The first one uses a historical access control by keeping track of previous queries to evaluate the current query (this method is considered to be a run-time approach). The second one proposes to reconfigure the global authorization policies at the mediator level in a way that no authorization violation will occur (this method is considered to be at design-time of the global security policy).
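The run-time, history-based method in the last step can be sketched as follows. This is a simplified illustration, not the graph-based algorithm of Haddad et al.: it assumes each query is summarized by the set of attributes it returns, and that a user can combine two answers whenever their shared attributes functionally determine one of them (the usual lossless-join condition). The class name, helper names, and example dependency are hypothetical.

```python
from typing import Dict, FrozenSet, List, Set, Tuple

FD = Tuple[FrozenSet[str], FrozenSet[str]]  # (left-hand side, right-hand side)


def closure(attrs: FrozenSet[str], fds: List[FD]) -> Set[str]:
    """Attributes functionally determined by attrs under the given FDs."""
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return result


def derivable_views(views: List[FrozenSet[str]], fds: List[FD]) -> Set[FrozenSet[str]]:
    """All attribute sets the user can assemble by joining earlier answers."""
    known = set(views)
    changed = True
    while changed:
        changed = False
        for x in list(known):
            for y in list(known):
                shared = closure(x & y, fds)
                # Joinable without ambiguity if the overlap determines x or y.
                if (x <= shared or y <= shared) and (x | y) not in known:
                    known.add(x | y)
                    changed = True
    return known


class HistoryMonitor:
    """Denies a query that would complete a forbidden association."""

    def __init__(self, fds: List[FD], forbidden: List[FrozenSet[str]]) -> None:
        self.fds = fds
        self.forbidden = forbidden
        self.history: Dict[str, List[FrozenSet[str]]] = {}

    def allow(self, user: str, query_attrs: Set[str]) -> bool:
        views = self.history.get(user, []) + [frozenset(query_attrs)]
        known = derivable_views(views, self.fds)
        if any(assoc <= view for view in known for assoc in self.forbidden):
            return False  # the query is rejected and not recorded
        self.history[user] = views
        return True


# Hypothetical policy: nobody may link name and salary; rank determines salary.
monitor = HistoryMonitor(
    fds=[(frozenset({"rank"}), frozenset({"salary"}))],
    forbidden=[frozenset({"name", "salary"})],
)
first = monitor.allow("alice", {"name", "rank"})
second = monitor.allow("alice", {"rank", "salary"})
other = monitor.allow("bob", {"rank", "salary"})
print(first, second, other)
```

In this example each of the two queries is innocuous on its own, but the second one is refused for the same user because joining both answers on `rank` would reveal the forbidden pair. A different user with no such history is still served, which also illustrates the non-deterministic behavior that run-time approaches can exhibit.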

It should be noted that Haddad et al. have discussed only semantic constraints due to functional dependencies. Neither inclusion nor multivalued dependencies were investigated. Additionally, other mapping approaches need to be discussed, such as the local-as-view (LAV) and global-and-local-as-view (GLAV) approaches.

Inspired by Haddad et al.[39], Sellami et al.[41] propose an approach aiming to control inference in cloud integration systems. The proposed methodology resorts to formal concept analysis as a formal framework to reason about authorization rules, and functional dependencies as a source of inference. The authors adopt an access control model with authorization views and propose an incremental approach with three steps:

- Generate the global policy, global schema, and global functional dependency (FD): This step takes as input a set of source schemas together with their access control policies and starts by translating the schemas and policies to formal contexts. Then, the global policy is generated in a way that the source rules are preserved at the global level. Next, the schema of the mediator (virtual relations) is generated from the global policy to avoid useless attribute combinations (every attribute in the mediator schema is controlled by the global policy). Finally, a global FD is considered from the source FD as a formal context.

- Identify disclosure transactions: By resorting to formal concept analysis (FCA) as a framework to reason about the global policy, the authors identify the profiles to be denied from accessing sensitive attributes at the mediator level. Then, they extract the transaction violations by reasoning about the global FD.

- Reconfigure: This step is achieved by two methods. At design time, policy healing is used to complete the global policy with additional rules, ensuring that no transaction violation can be achieved. At query run time, a monitoring engine is used to prohibit suspicious queries.

Sayah et al.[40] have examined inference that arises in the web through a Resource Description Framework (RDF) store. They propose a fine-grained framework for RDF data, and then they exploit a closed graph to verify the consistency property of an access control policy when inference rules and authorization rules interact. Without accessing the data (at policy design-time), the authors propose an algorithm to verify if an information leakage will arise given a policy P and a set of inference rules R. Furthermore, the authors demonstrate the applicability of the access control model using a conflict resolution strategy (most specific takes precedence).
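The interaction between inference rules and authorization rules can be illustrated with a deliberately simplified sketch. Unlike the algorithm of Sayah et al., which reasons at policy design time without accessing the data, this example works on concrete triples. It closes the permitted triples under one standard RDFS entailment rule (rdfs9, class membership inheritance) and reports denied triples that become derivable. The function names and the `ex:` identifiers are hypothetical.

```python
from typing import Callable, Iterable, Set, Tuple

Triple = Tuple[str, str, str]
Rule = Callable[[Set[Triple]], Set[Triple]]


def rdfs_type_inheritance(triples: Set[Triple]) -> Set[Triple]:
    """RDFS rule rdfs9: (x type C) and (C subClassOf D) entail (x type D)."""
    supers = {(s, o) for s, p, o in triples if p == "rdfs:subClassOf"}
    return {
        (x, "rdf:type", d)
        for x, p, c in triples if p == "rdf:type"
        for c2, d in supers if c2 == c
    }


def closure(triples: Iterable[Triple], rules: Iterable[Rule]) -> Set[Triple]:
    """Apply the rules repeatedly until no new triple is derived."""
    rules = list(rules)
    closed = set(triples)
    while True:
        derived = set().union(*(rule(closed) for rule in rules)) - closed
        if not derived:
            return closed
        closed |= derived


def leaked_triples(graph, denied, rules) -> Set[Triple]:
    """Denied triples that are derivable from the permitted ones."""
    permitted = set(graph) - set(denied)
    return closure(permitted, rules) & set(denied)


graph = {
    ("ex:alice", "rdf:type", "ex:Manager"),
    ("ex:Manager", "rdfs:subClassOf", "ex:Employee"),
    ("ex:alice", "rdf:type", "ex:Employee"),
}
denied = {("ex:alice", "rdf:type", "ex:Employee")}
leaks = leaked_triples(graph, denied, [rdfs_type_inheritance])
```

Here the denied triple is leaked because it follows from two permitted ones, which is the kind of inconsistency a policy-level check is meant to rule out before any data is queried.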

Inference control in distributed cloud database systems

Biskup et al.[37] resort to a Controlled Query Evaluation (CQE) strategy to detect inference based on the knowledge of non-confidential information contained in the outsourced fragments and the prior knowledge that a malicious user might have. As CQE relies on a logic-oriented view of database systems, the main idea of this approach is to model fragmentation in a logic-oriented fashion so that inference-proofness can be proved formally despite the semantic database constraints that an attacker may know. This type of vertical database fragmentation technique was also considered by di Vimercati et al.[12] to ensure data confidentiality in the presence of data dependencies among attributes. Those dependencies allow unauthorized users to deduce information about sensitive attributes. In this work, the authors define three types of confidentiality violation that can be caused by data dependencies. First, a violation can happen when a sensitive attribute or association is exposed by the attributes in a fragment. Second, it can occur if an attribute appearing in a fragment is also derivable from some attributes in another fragment, thus enabling linkability among such fragments. Finally, it can occur when an attribute is derivable (independently) from attributes appearing in different fragments, thus enabling linkability among these fragments. To tackle these issues, the authors reformulate the problem graphically through a hypergraph representation and then compute the closure of a fragmentation by deducing all information derivable from its fragments via dependencies to identify indirect access. Nevertheless, the major limitation of this approach is that it explores the problem only in a single-relation database.
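The idea of closing a fragmentation under dependencies can be sketched as follows. This is a minimal illustration, not the hypergraph algorithm of di Vimercati et al. It assumes each dependency is a pair (premise attribute set, derived attribute) and each confidentiality constraint is a set of attributes that must not be jointly visible. All names and the sample data are hypothetical.

```python
from typing import FrozenSet, Iterable, List, Set, Tuple

Dependency = Tuple[FrozenSet[str], str]  # (premise attributes, derived attribute)


def closure(attrs: Iterable[str], deps: List[Dependency]) -> Set[str]:
    """All attributes derivable from `attrs` through the dependencies."""
    closed = set(attrs)
    changed = True
    while changed:
        changed = False
        for premise, derived in deps:
            if premise <= closed and derived not in closed:
                closed.add(derived)
                changed = True
    return closed


def check_fragmentation(fragments, deps, constraints):
    """Return (exposed constraints, linkable fragment pairs) after closure."""
    closed = [closure(f, deps) for f in fragments]
    # A constraint is exposed if one closed fragment contains all of it.
    exposed = [(i, c) for i, cf in enumerate(closed)
               for c in constraints if c <= cf]
    # Two fragments are linkable if their closures share an attribute.
    linkable = [(i, j, closed[i] & closed[j])
                for i in range(len(closed))
                for j in range(i + 1, len(closed))
                if closed[i] & closed[j]]
    return exposed, linkable


fragments = [{"name", "job"}, {"treatment", "zip"}]
deps = [(frozenset({"treatment"}), "disease"), (frozenset({"job"}), "disease")]
constraints = [frozenset({"name", "disease"})]
exposed, linkable = check_fragmentation(fragments, deps, constraints)
```

In this sample, the two fragments look safe as stored, but after closure the first one exposes the sensitive association between name and disease, and both fragments become linkable through the derived disease attribute.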

Although data outsourcing was not explicitly mentioned by Turan et al.[42][43], their two works from 2017 and 2018 aim to proactively control the inference problem caused by functional dependencies and meaningful joins by decomposing the relational logical schema into a set of views (to be queried by the user) in which inference channels cannot appear. In 2017, the authors proposed[43] a proactive, decomposition-based inference control strategy for relational databases to prevent direct or indirect access to a forbidden attribute set. The proposed decomposition algorithm controls both functional and probabilistic dependencies by breaking down those leading to the inference of a forbidden attribute set. However, this approach was considered too rigid: when the attributes of a forbidden set are associated through a chain of functional dependencies, the algorithm breaks the chain from both sides, for both attributes. Starting from this limitation, the same researchers proposed a graph-based approach[42] consisting of proactive decomposition of the external schema in order to satisfy both the forbidden and required associations of attributes. In this work, functional dependencies are represented as a graph in which vertices are attributes and edges are functional dependencies. Detecting an inference channel is then defined as searching for a sub-tree in the graph containing the attributes that need to be related. Compared to their prior approach[43], this method relaxes the cut of the inference channel by cutting each chain at only a single point, consequently minimizing dependency loss. Nevertheless, like the previous technique, it leads to semantic loss and requires query rewriting techniques to query the decomposed views.
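The single-point cut can be sketched on a small functional dependency graph. This is an illustration under simplifying assumptions, not the algorithm of Turan et al. Each dependency has a single attribute on each side and is treated as a directed edge, and the edge to cut is chosen with a hypothetical caller-supplied cost table. All names and the sample data are invented for the example.

```python
from collections import deque
from typing import Dict, Iterable, List, Optional, Set, Tuple

Edge = Tuple[str, str]  # (lhs attribute, rhs attribute) of one dependency


def find_chain(edges: Iterable[Edge], src: str, dst: str) -> Optional[List[Edge]]:
    """Breadth-first search for a chain of dependencies from src to dst."""
    edges = list(edges)
    parent: Dict[str, Optional[str]] = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            chain = []
            while parent[node] is not None:
                chain.append((parent[node], node))
                node = parent[node]
            return chain[::-1]
        for lhs, rhs in edges:
            if lhs == node and rhs not in parent:
                parent[rhs] = node
                queue.append(rhs)
    return None


def cut_chains(edges, forbidden_pairs, cost):
    """Break every chain between forbidden attributes, one edge per chain."""
    remaining: Set[Edge] = set(edges)
    removed: List[Edge] = []
    for a, b in forbidden_pairs:
        for src, dst in ((a, b), (b, a)):
            while (chain := find_chain(remaining, src, dst)):
                # Assumption: cut the edge whose loss is cheapest.
                edge = min(chain, key=lambda e: cost.get(e, 1))
                remaining.discard(edge)
                removed.append(edge)
    return remaining, removed


edges = {("emp_id", "dept"), ("dept", "manager"), ("manager", "salary_band")}
cost = {("emp_id", "dept"): 3, ("dept", "manager"): 1,
        ("manager", "salary_band"): 2}
remaining, removed = cut_chains(edges, [("emp_id", "salary_band")], cost)
```

Only one dependency is lost per chain, which is the relaxation the graph-based approach aims for. Breaking the chain from both ends, as in the earlier approach, would remove two dependencies in this sample.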

Proposed solution

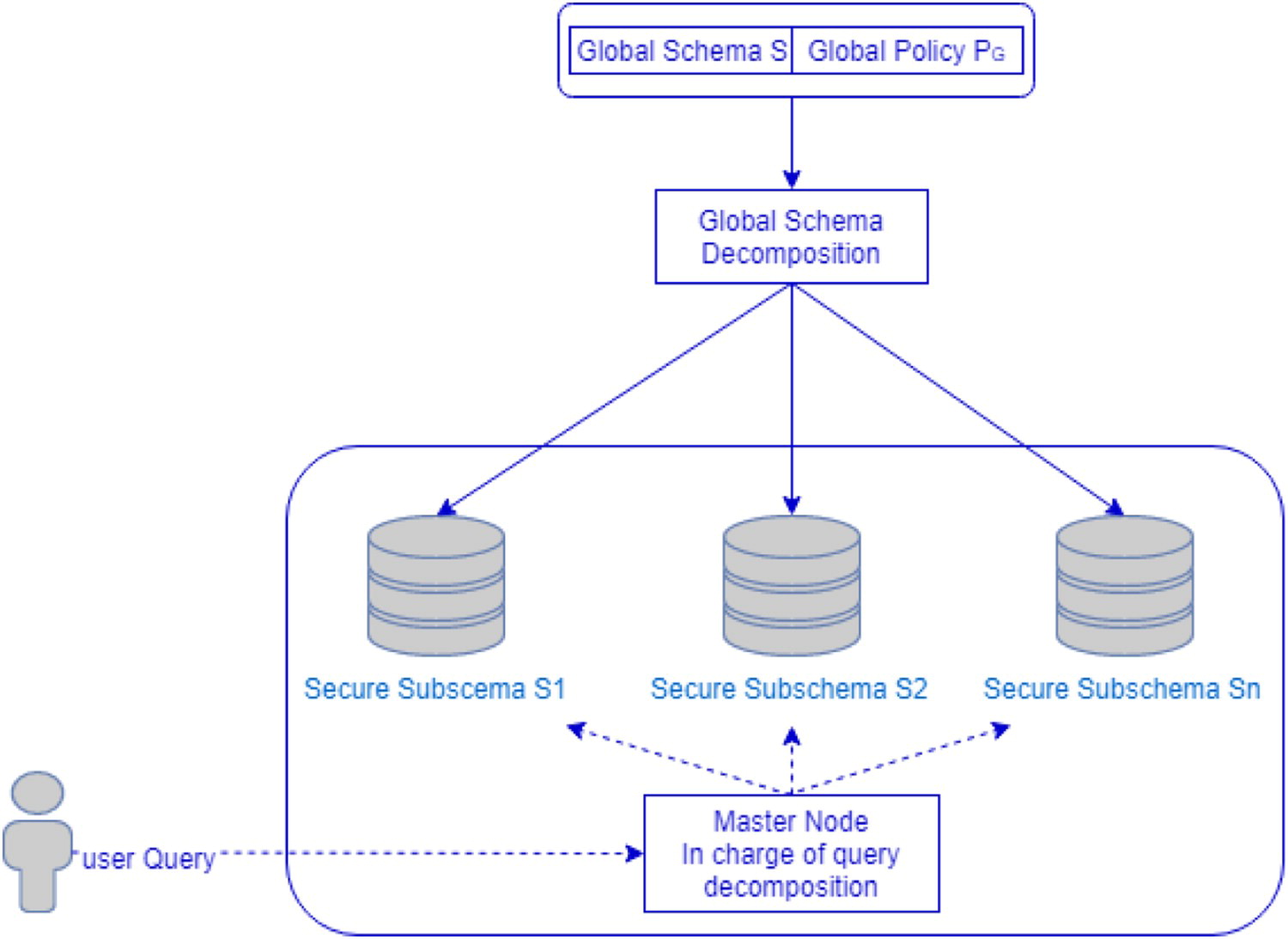

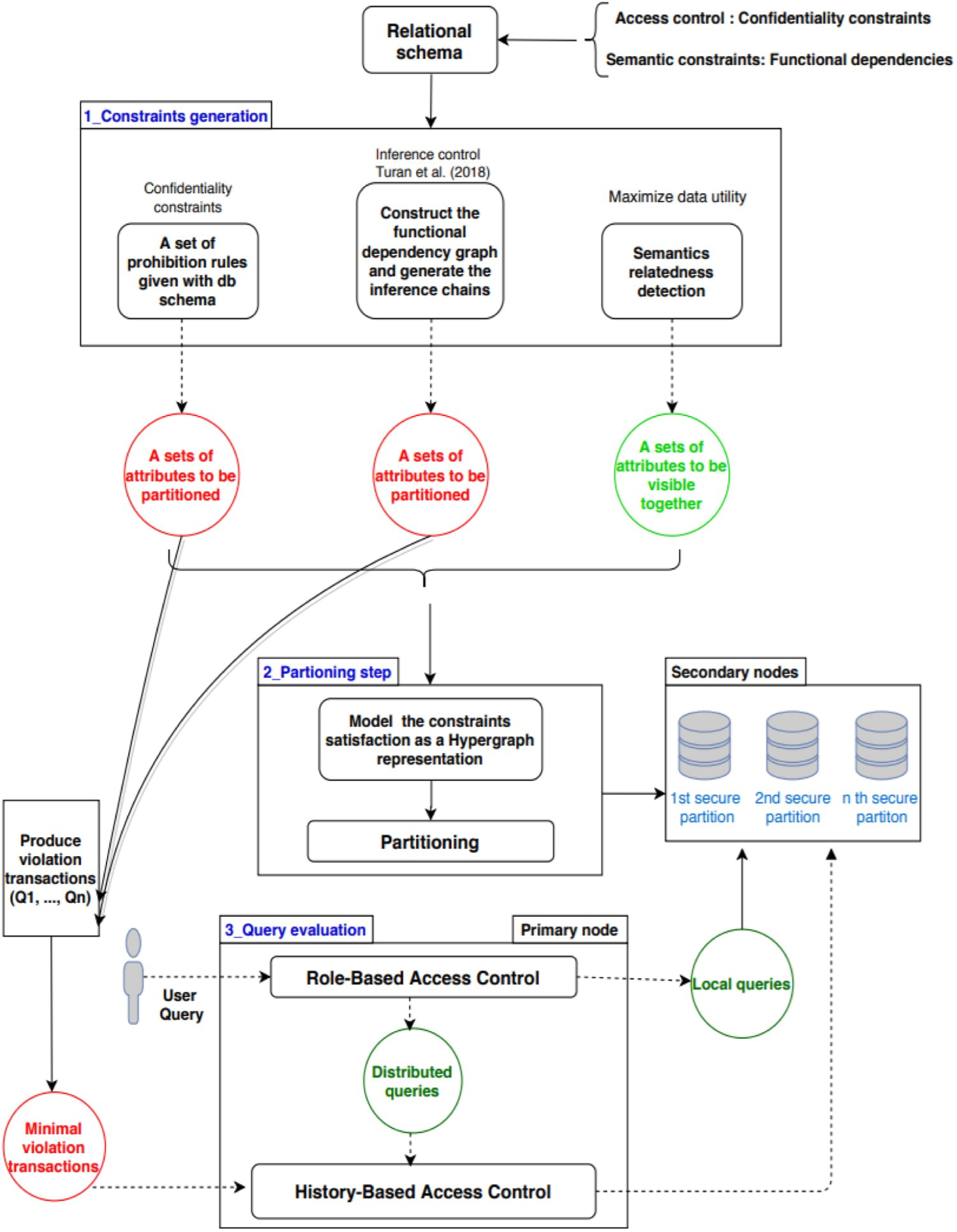

In this section, we present an approach that relies on the relational model and aims to produce a set of secure sub-schemas, each represented by a partition Pi stored on exactly one server by the CSP (Figure 7). This approach also introduces a secure distributed query evaluation strategy to efficiently request data from distributed partitions while retaining access control policies. To do that, our proposed methodology takes as input a set of functional dependencies (FD), as well as a relational schema R, and applies the following phases:

- Constraints generation: This phase aims at generating two types of constraints that, in addition to the confidentiality constraints, will guide the process of vertical schema partitioning. This is done through two steps: visibility constraints generation and constraint-based inference control generation. Visibility constraints are enforced as soft constraints in the partitioning phase, given that they are less severe than confidentiality constraints. To generate them, we perform a semantic analysis of the relational schema in order to detect semantic relatedness between attributes and user roles. These constraints should be preserved (stay visible) when the relational schema is fragmented (i.e., we aim to maximize intra-dependency between attributes that seem to be frequently accessed by the same role while minimizing the inter-dependency between attributes in separate parts). Then, during constraint-based inference control generation, the constraints are enforced (like confidentiality constraints) as hard constraints. In this step, we resort to the method proposed by Turan et al.[42] to build a functional dependencies graph and generate join chains. Then, we use a relaxed technique to cut the join chains only at a single point in order to minimize dependency loss. Cutting a join chain at a single point lets us treat the attributes on the left-hand side (LHS) and right-hand side (RHS) of the functional dependency at the cut point as a confidentiality constraint. As a consequence, we guarantee that the join chain is broken.

- Schema partitioning: In this phase, we resort to hypergraph theory to represent the partitioning problem as a hypergraph constraint satisfaction problem. Then, we reformulate the problem as a multi-objective function F to be optimized. Finally, we propose a greedy algorithm to partition the constrained hypergraph into k parts while minimizing the multi-objective function F.

- Query evaluation model: In this step, we propose a monitor module to mediate every query issued by users against data stored in distributed partitions. The monitor module is built on top of an Apache Spark system and contains two mechanisms: a role-based access control mechanism and a history-based access control mechanism. The first mechanism checks the role of the user who issued the query and, if that role is not granted the right to execute distributed queries, the query is forwarded to the CSP. Otherwise, the query is forwarded to the history-based access control mechanism, which takes as input a set of transaction violations to be prohibited and checks whether the user's past queries combined with the current query could complete a transaction violation. If that proves to be the case, the query is revoked.
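The partitioning phase can be illustrated with a minimal greedy sketch. The multi-objective function F is not specified here, so the score below is a hypothetical stand-in: an attribute is placed in the partition where it keeps the most visibility (soft) constraint partners together, and any placement that would put a whole confidentiality (hard) constraint into one partition is rejected. The function name and sample schema are assumptions made for illustration.

```python
from typing import FrozenSet, List, Sequence, Set


def greedy_partition(attributes: Sequence[str], k: int,
                     hard: List[FrozenSet[str]],
                     soft: List[FrozenSet[str]]) -> List[Set[str]]:
    """Assign each attribute to one of k parts, one attribute at a time."""
    parts: List[Set[str]] = [set() for _ in range(k)]
    for attr in attributes:
        best, best_score = None, -1
        for idx, part in enumerate(parts):
            candidate = part | {attr}
            # Hard constraint: no confidentiality set may sit in one part.
            if any(h <= candidate for h in hard):
                continue
            # Soft score: partners of `attr` already present in this part.
            score = sum(len(s & part) for s in soft if attr in s)
            if score > best_score:
                best, best_score = idx, score
        if best is None:
            raise ValueError(f"no admissible partition for {attr!r}")
        parts[best].add(attr)
    return parts


attributes = ["name", "address", "diagnosis", "treatment"]
hard = [frozenset({"name", "diagnosis"})]
soft = [frozenset({"name", "address"}), frozenset({"diagnosis", "treatment"})]
parts = greedy_partition(attributes, 2, hard, soft)
```

A greedy pass like this depends on the order in which attributes are processed and does not backtrack, so it can fail or return a poor partitioning where a search over the constrained hypergraph would succeed. A singleton confidentiality constraint cannot be satisfied by fragmentation alone in this sketch and raises an error.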


Future research directions and challenges

Since the discussed works are recent, there are a number of concepts associated with access control, privacy, data outsourcing, and database semantics that could be considered to ensure better data security and utility in the cloud. As such, there are several research directions to pursue going forward.

Functional dependencies should be considered a threat source in data outsourcing scenarios. Unlike the approaches by Turan et al.[42][43], we aim in our future work to prevent inference from occurring in a distributed cloud database. Our graph-based approach detects inference channels caused by functional dependencies and breaks those channels by exploiting vertical database fragmentation while minimizing dependency loss.

Prior researchers have only dealt with semantic constraints represented by functional and probabilistic dependencies as a source of inference. However, other semantic constraints—e.g., inclusion dependencies, join dependencies, and multivalued dependencies—should be considered as sources of inference.

Another future direction to examine is the case where the workload becomes available only after the database is set up in the cloud. The challenge here is how to dynamically reallocate the database fragments among distributed servers while retaining the access control policy.

Conclusion

In this work, we conducted a literature review of current and emerging research on privacy and confidentiality concerns in data outsourcing. We have introduced different research efforts to ensure user privacy in the database-as-a-service paradigm with both communicating and non-communicating servers. Additionally, an insightful discussion about inference control was introduced, as well as a proposed method of tackling the issue. We also pinpointed potential issues that are still unresolved. These issues are expected to be addressed in future work.

Acknowledgements

Conflict of interest

No potential conflict of interest was reported by the author(s).

References

- ↑ 1.0 1.1 Xu, X.; Xiong, L.; Liu, J. (2015). "Database Fragmentation with Confidentiality Constraints: A Graph Search Approach". Proceedings of the 5th ACM Conference on Data and Application Security and Privacy: 263–70. doi:10.1145/2699026.2699121.

- ↑ 2.0 2.1 2.2 Samarati, P.; di Vimercati, S.D.C. (2010). "Data protection in outsourcing scenarios: Issues and directions". Proceedings of the 5th ACM Symposium on Information, Computer and Communications Security: 1–14. doi:10.1145/1755688.1755690.

- ↑ Biskup, J.; Preuß, M. (2013). "Database Fragmentation with Encryption: Under Which Semantic Constraints and A Priori Knowledge Can Two Keep a Secret?". Data and Applications Security and Privacy XXVII: 17–32. doi:10.1007/978-3-642-39256-6_2.

- ↑ 4.0 4.1 4.2 4.3 4.4 Bkakria, A.; Cuppens, F.; Cuppens-Boulahia, N. et al. (2013). "Preserving Multi-relational Outsourced Databases Confidentiality using Fragmentation and Encryption". JoWUA 4 (2): 39–62. doi:10.22667/JOWUA.2013.06.31.039.

- ↑ 5.0 5.1 5.2 Ciriani, V.; di Vimercati, S.D.C.; Foresti, S. et al. (2007). "Fragmentation and Encryption to Enforce Privacy in Data Storage". Computer Security - ESORICS 2007: 171–86. doi:10.1007/978-3-540-74835-9_12.

- ↑ 6.0 6.1 6.2 6.3 Aggarwal, G.; Bawa, M.; Ganesan, P. et al. (2005). "Two Can Keep a Secret: A Distributed Architecture for Secure Database Services". Second Biennial Conference on Innovative Data Systems Research: 1–14. http://ilpubs.stanford.edu:8090/659/.

- ↑ Alsirhani, A.; Bodorik, P.; Sampalli, S. (2017). "Improving Database Security in Cloud Computing by Fragmentation of Data". Proceedings of the 2017 International Conference on Computer and Applications: 43–49. doi:10.1109/COMAPP.2017.8079737.

- ↑ 8.0 8.1 8.2 8.3 Bollwein, F.; Wiese, L. (2017). "Separation of Duties for Multiple Relations in Cloud Databases as an Optimization Problem". Proceedings of the 21st International Database Engineering & Applications Symposium: 98–107. doi:10.1145/3105831.3105873.

- ↑ 9.0 9.1 Bollwein, F.; Wiese, L. (2018). "On the Hardness of Separation of Duties Problems for Cloud Databases". Proceedings of TrustBus 2018: Trust, Privacy and Security in Digital Business: 23–38. doi:10.1007/978-3-319-98385-1_3.

- ↑ 10.0 10.1 Ciriani, V.; di Vimercati, S.D.C.; Foresti, S. et al. (2009). "Fragmentation Design for Efficient Query Execution over Sensitive Distributed Databases". Proceedings of the 29th IEEE International Conference on Distributed Computing Systems: 32–39. doi:10.1109/ICDCS.2009.52.

- ↑ 11.0 11.1 11.2 11.3 11.4 Ciriani, V.; di Vimercati, S.D.C.; Foresti, S. et al. (2009). "Keep a Few: Outsourcing Data While Maintaining Confidentiality". Computer Security - ESORICS 2009: 440–55. doi:10.1007/978-3-642-04444-1_27.

- ↑ 12.0 12.1 12.2 12.3 di Vimercati, S.D.C.; Foresti, S.; Jajodia, S. et al. (2014). "Fragmentation in Presence of Data Dependencies". IEEE Transactions on Dependable and Secure Computing 11 (6): 510–23. doi:10.1109/TDSC.2013.2295798.

- ↑ Moher, D.; Liberati, A.; Tetzlaff, J. et al. (2009). "Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement". PLoS Medicine 6 (7): e1000097. doi:10.1371/journal.pmed.1000097. PMC PMC2707599. PMID 19621072. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2707599.

- ↑ 14.0 14.1 di Vimercati, S.D.C.; Foresti, S.; Jajodia, S. et al. (2010). "Fragments and loose associations: respecting privacy in data publishing". Proceedings of the VLDB Endowment 3 (1–2). doi:10.14778/1920841.1921009.

- ↑ 15.0 15.1 15.2 Bkakria, A.; Cuppens, F.; Cuppens-Boulahia, N. et al. (2013). "Confidentiality-Preserving Query Execution of Fragmented Outsourced Data". Proceeding of ICT-EurAsia 2013: Information and Communication Technology 4 (2): 426-440. doi:10.1007/978-3-642-36818-9_47.

- ↑ 16.0 16.1 16.2 di Vimercati, S.D.C.; Foresti, S.; Jajodia, S. et al. (2016). "Efficient integrity checks for join queries in the cloud". Journal of Computer Security 24 (3): 347–78. doi:10.3233/JCS-160545.

- ↑ 17.0 17.1 17.2 di Vimercati, S.D.C.; Foresti, S.; Jajodia, S. et al. (2013). "Integrity for join queries in the cloud". IEEE Transactions on Cloud Computing 1 (2): 187–200. doi:10.1109/TCC.2013.18.

- ↑ Hudic, A.; Islam, S.; Kieseberg, P. et al. (2013). "Data confidentiality using fragmentation in cloud computing". International Journal of Pervasive Computing and Communications 9 (1): 37–51. doi:10.1108/17427371311315743.

- ↑ Ciriani, V.; di Vimercati, S.D.C.; Foresti, S. et al. (2011). "Enforcing Confidentiality and Data Visibility Constraints: An OBDD Approach". Proceeding of DBSec 2011: Data and Applications Security and Privacy XXV: 44–59. doi:10.1007/978-3-642-22348-8_6.

- ↑ 20.0 20.1 Farkas, C.; Jajodia, S. (2002). "The inference problem: A survey". ACM SIGKDD Explorations Newsletter 4 (2): 6–11. doi:10.1145/772862.772864.

- ↑ Guarnieri, M.; Marinovic, S.; Basin, D. (2017). "Securing Databases from Probabilistic Inference". Proceedings of the 2017 IEEE 30th Computer Security Foundations Symposium: 343–359. doi:10.1109/CSF.2017.30.

- ↑ 22.0 22.1 Katos, V.; Vrakas, D.; Katsaros, P. (2011). "A framework for access control with inference constraints". Proceedings of the 2011 IEEE 35th Annual Computer Software and Applications Conference: 289–297. doi:10.1109/COMPSAC.2011.45.

- ↑ Biskup, J.; Embley, D.W.; Lochner, J.-H. (2008). "Reducing inference control to access control for normalized database schemas". Information Processing Letters 106 (1): 8-12. doi:10.1016/j.ipl.2007.09.007.

- ↑ Biskup, J.; Hartmann, S.; Link, S. (2010). "Efficient Inference Control for Open Relational Queries". Proceedings of DBSec 2010: Data and Applications Security and Privacy XXIV: 162–76. doi:10.1007/978-3-642-13739-6_11.

- ↑ 25.0 25.1 Brodsky, A. (2000). "Secure databases: Constraints, inference channels, and monitoring disclosures". IEEE Transactions on Knowledge and Data Engineering 12 (6): 900–19. doi:10.1109/69.895801.

- ↑ 26.0 26.1 Chen, Y.; Chu, W.W. (2006). "Database Security Protection Via Inference Detection". Proceedings of ISI 2006: Intelligence and Security Informatics: 452–58. doi:10.1007/11760146_40.

- ↑ Su, T.-A.; Ozsoyoglu, G. (1991). "Controlling FD and MVD inferences in multilevel relational database systems". IEEE Transactions on Knowledge and Data Engineering 3 (4): 474-485. doi:10.1109/69.109108.

- ↑ Yip, R.W.; Levitt, K.N. (1998). "Data level inference detection in database systems". Proceedings of the 11th IEEE Computer Security Foundations Workshop: 179–89. doi:10.1109/CSFW.1998.683168.

- ↑ Delugach, H.S.; Hinke, T.H. (1996). "Wizard: A database inference analysis and detection system". IEEE Transactions on Knowledge and Data Engineering 8 (1): 56–66. doi:10.1109/69.485629.

- ↑ Hinke, T.H.; Delugach, H.S. (1992). "AERIE: An inference modeling and detection approach for databases" (PDF). Proceedings of IFIP WG 11.3 Sixth Working Conference on Database Security: 187–201. https://apps.dtic.mil/sti/pdfs/ADA298828.pdf.

- ↑ Rath, S.; Jones, D.; Hale, J. et al. (1996). "A Tool for Inference Detection and Knowledge Discovery in Databases". Proceedings from Database Security IX: 317–332. doi:10.1007/978-0-387-34932-9_20.

- ↑ Wang, J.; Yang, J.; Guo, F. et al. (2017). "Resist the Database Intrusion Caused by Functional Dependency". Proceedings of the 2017 International Conference on Cyber-Enabled Distributed Computing and Knowledge Discovery: 54–57. doi:10.1109/CyberC.2017.11.

- ↑ An, X.; Jutla, D.; Cercone, N. (2006). "Dynamic inference control in privacy preference enforcement". Proceedings of the 2006 International Conference on Privacy, Security and Trust: 1–10. doi:10.1145/1501434.1501464.

- ↑ Thuraisingham, B.; Ford, W.; Collins, M. et al. (1993). "Design and implementation of a database inference controller". Data & Knowledge Engineering 11 (3): 271-297. doi:10.1016/0169-023X(93)90025-K.

- ↑ Yang, Y.; Li, Y.; Deng, R.H. (2007). "New Paradigm of Inference Control with Trusted Computing". Proceedings of DBSec 2007: Data and Applications Security XXI: 243–58. doi:10.1007/978-3-540-73538-0_18.

- ↑ Staddon, J. (2003). "Dynamic inference control". Proceedings of the 8th ACM SIGMOD workshop on Research issues in data mining and knowledge discovery: 94–100. doi:10.1145/882082.882103.

- ↑ 37.0 37.1 Biskup, J.; Preuß, M.; Wiese, L. (2011). "On the Inference-Proofness of Database Fragmentation Satisfying Confidentiality Constraints". Proceedings of ISC 2011: Information Security: 246–61. doi:10.1007/978-3-642-24861-0_17.

- ↑ 38.0 38.1 da Silva, J.C.; Klusch, M.; Lodi, S. et al. (2004). "Inference attacks in peer-to-peer homogeneous distributed data mining". Proceedings of the 16th European Conference on Artificial Intelligence: 450-54. doi:10.5555/3000001.3000096.

- ↑ 39.0 39.1 39.2 39.3 Haddad, M.; Stevovic, J.; Chiasera, A. et al. (2014). "Access Control for Data Integration in Presence of Data Dependencies". Proceedings of DASFAA 2014: Database Systems for Advanced Applications: 203–17. doi:10.1007/978-3-319-05813-9_14.

- ↑ 40.0 40.1 40.2 Sayah, T.; Coquery, E.; Thion, R. et al. (2015). "Inference Leakage Detection for Authorization Policies over RDF Data". Proceedings of DBSec 2015: Data and Applications Security and Privacy XXIX: 346–61. doi:10.1007/978-3-319-20810-7_24.

- ↑ 41.0 41.1 41.2 Sellami, M.; Hacid, M.-S.; Gammoudi, M.M. (2015). "Inference Control in Data Integration Systems". Proceedings of OTM 2015: On the Move to Meaningful Internet System: 285–302. doi:10.1007/978-3-319-26148-5_17.

- ↑ 42.0 42.1 42.2 42.3 42.4 Turan, U.; Toroslu, I.H.; Kantarcioglu, M. (2018). "Graph Based Proactive Secure Decomposition Algorithm for Context Dependent Attribute Based Inference Control Problem". arXiv: 1–11. https://arxiv.org/abs/1803.00497.

- ↑ 43.0 43.1 43.2 43.3 43.4 Turan, U.; Toroslu, I.H.; Kantarcıoğlu, M. (2017). "Secure logical schema and decomposition algorithm for proactive context dependent attribute based inference control". Data & Knowledge Engineering 111: 1–21. doi:10.1016/j.datak.2017.02.002.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation, though grammar and word usage were substantially updated for improved readability. In some cases important information was missing from the references, and that information was added. The original paper listed references alphabetically; this wiki lists them by order of appearance, by design.