Difference between revisions of "Journal:Expert search strategies: The information retrieval practices of healthcare information professionals"


|download = [http://medinform.jmir.org/2017/4/e33/pdf http://medinform.jmir.org/2017/4/e33/pdf] (PDF)
}}

==Abstract==

'''Background''': Healthcare [[information]] professionals play a key role in closing the knowledge gap between medical research and clinical practice. Their work involves meticulous searching of literature databases using complex search strategies that can consist of hundreds of keywords, operators, and ontology terms. This process is prone to error and can lead to inefficiency and bias if performed incorrectly.

'''Objective''': The aim of this study was to investigate the search behavior of healthcare information professionals, uncovering their needs, goals, and requirements for information retrieval systems.

'''Methods''': A survey was distributed to healthcare information professionals via professional association email discussion lists. It investigated the search tasks they undertake, their techniques for search strategy formulation, their approaches to evaluating search results, and their preferred functionality for searching library-style databases. The popular literature search system PubMed was then evaluated to determine the extent to which their needs were met.

'''Results''': The 107 respondents indicated that their information retrieval process relied on the use of complex, repeatable, and transparent search strategies. On average it took 60 minutes to formulate a search strategy, with a search task taking four hours and consisting of 15 strategy lines. Respondents reviewed a median of 175 results per search task, far more than they would ideally like (100). The most desired features of a search system were merging search queries and combining search results.

'''Conclusions''': Healthcare information professionals routinely address some of the most challenging information retrieval problems of any profession. However, their needs are not fully supported by current literature search systems, and there is demand for improved functionality, in particular regarding the development and management of search strategies.

'''Keywords''': review, surveys and questionnaires, search engine, information management, information systems

==Introduction==

===Background===

Medical knowledge is growing so rapidly that it is difficult for healthcare professionals to keep up. As the volume of published studies increases each year<ref name="LuPubMed11">{{cite journal |title=PubMed and beyond: A survey of web tools for searching biomedical literature |journal=Database |author=Lu, Z. |volume=2011 |pages=baq036 |year=2011 |doi=10.1093/database/baq036 |pmid=21245076 |pmc=PMC3025693}}</ref>, the gap between research knowledge and professional practice grows.<ref name="BastianSeventy10">{{cite journal |title=Seventy-five trials and eleven systematic reviews a day: How will we ever keep up? |journal=PLoS Medicine |author=Bastian, H.; Glasziou, P.; Chalmers, I. |volume=7 |issue=9 |pages=e1000326 |year=2010 |doi=10.1371/journal.pmed.1000326 |pmid=20877712 |pmc=PMC2943439}}</ref> Frontline healthcare providers (such as general practitioners [GPs]) responding to the immediate needs of patients may employ a web-style search for diagnostic purposes, with Google being reported to be a useful diagnostic tool<ref name="TangGoogling06">{{cite journal |title=Googling for a diagnosis--Use of Google as a diagnostic aid: Internet based study |journal=BMJ |author=Tang, H.; Ng, J.H. |volume=333 |issue=7579 |pages=1143-5 |year=2006 |doi=10.1136/bmj.39003.640567.AE |pmid=17098763 |pmc=PMC1676146}}</ref>; however, the credibility of results depends on the domain.<ref name="KitchensQuality14">{{cite journal |title=Quality of health-related online search results |journal=Decision Support Systems |author=Kitchens, B.; Harle, C.A.; Li, S. |volume=57 |pages=454-462 |year=2014 |doi=10.1016/j.dss.2012.10.050}}</ref> Medical staff may also perform more in-depth searches, such as rapid evidence reviews, where a concise summary of what is known about a topic or intervention is required.<ref name="HemingwayWhatIs09">{{cite web |url=http://www.bandolier.org.uk/painres/download/whatis/Syst-review.pdf |format=PDF |title=What Is A Systematic Review? |author=Hemingway, P.; Brereton, N. |publisher=Heyward Medical Communications |date=April 2009 |accessdate=05 March 2017}}</ref>

Healthcare information professionals play the primary role in closing the gap between published research and medical practice, by synthesizing the complex, incomplete, and at times conflicting findings of biomedical research into a form that can readily inform healthcare decision making.<ref name="ElliottLiving14">{{cite journal |title=Living systematic reviews: An emerging opportunity to narrow the evidence-practice gap |journal=PLoS Medicine |author=Elliott, J.H.; Turner, T. et al. |volume=11 |issue=2 |pages=e1001603 |year=2014 |doi=10.1371/journal.pmed.1001603}}</ref> The systematic literature review process relies on the painstaking and meticulous searching of multiple databases using complex Boolean search strategies that often consist of hundreds of keywords, operators, and ontology terms<ref name="KarimiBoolean10">{{cite journal |title=Boolean versus ranked querying for biomedical systematic reviews |journal=BMC Medical Informatics and Decision Making |author=Karimi, S.; Pohl, S.; Scholer, F. et al. |volume=10 |pages=58 |year=2010 |doi=10.1186/1472-6947-10-58 |pmid=20937152 |pmc=PMC2966450}}</ref> (Textbox 1).

{|
| STYLE="vertical-align:top;"|
{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="80%"
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" colspan="6"|'''Textbox 1.''' An example of a multi-line search strategy
|-
| style="background-color:white; padding-left:10px; padding-right:10px;"|1. Attention Deficit Disorder with Hyperactivity/<br />2. adhd<br />3. addh<br />4. adhs<br />5. hyperactiv$<br />6. hyperkin$<br />7. attention deficit$<br />8. brain dysfunction<br />9. or/1-8<br />10. Child/<br />11. Adolescent/<br />12. child$ or boy$ or girl$ or schoolchild$ or adolescen$ or teen$ or “young person$” or “young people$” or youth$<br />13. or/10-12<br />14. acupuncture therapy/or acupuncture, ear/or electroacupuncture/<br />15. accupunct$<br />16. or/14-15<br />17. 9 and 13 and 16
|}
|}
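
How the numbered lines of a multi-line strategy such as Textbox 1 build on one another can be illustrated with a short sketch. It assumes, based only on the textbox itself, that a line of the form <code>or/1-8</code> combines lines 1 through 8 with OR, and that a line such as <code>9 and 13 and 16</code> combines the results of those earlier lines. The function name <code>expand</code> and the five-line sample strategy are hypothetical and are not taken from any search system's documentation; truncation symbols (<code>$</code>) and subject-heading markers (<code>/</code>) are passed through unchanged.

```python
import re

# Matches set-combination lines such as "or/1-8" (assumed meaning: OR of lines 1 to 8).
RANGE_LINE = re.compile(r"(or|and)/(\d+)-(\d+)", re.IGNORECASE)
# Matches lines that only combine earlier line numbers, e.g. "9 and 13 and 16".
COMBINE_LINE = re.compile(r"\d+(\s+(and|or|not)\s+\d+)+", re.IGNORECASE)


def expand(lines, n):
    """Return line n (1-based) with every reference to earlier lines written out."""
    text = lines[n - 1].strip()

    range_match = RANGE_LINE.fullmatch(text)
    if range_match:
        operator = range_match.group(1).upper()
        first, last = int(range_match.group(2)), int(range_match.group(3))
        parts = [expand(lines, i) for i in range(first, last + 1)]
        return "(" + f" {operator} ".join(parts) + ")"

    if COMBINE_LINE.fullmatch(text):
        tokens = text.split()
        resolved = [expand(lines, int(t)) if t.isdigit() else t.upper() for t in tokens]
        return "(" + " ".join(resolved) + ")"

    # Anything else is treated as an ordinary search term and left untouched.
    return text


# Hypothetical five-line strategy in the same style as Textbox 1.
strategy = ["adhd", "hyperactiv$", "or/1-2", "Child/", "3 and 4"]
print(expand(strategy, 5))  # prints ((adhd OR hyperactiv$) AND Child/)
```

Written out this way, even a short strategy becomes a deeply nested Boolean expression, which suggests why the line-by-line form is used for building and reviewing strategies.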

Performing a systematic review is a resource-intensive and time-consuming undertaking, sometimes taking years to complete.<ref name="HigginsCochrane11">{{cite web |url=http://training.cochrane.org/handbook |title=Cochrane Handbook for Systematic Reviews of Interventions, Version 5.1.0 |editor=Higgins, J.P.T.; Green, S. |publisher=The Cochrane Collaboration |date=2011 |accessdate=05 March 2017}}</ref> It involves a lengthy content production process whose output relies heavily on the quality of the initial search strategy, particularly in ensuring that the scope is sufficiently exhaustive and that the review is not biased by easily accessible studies.<ref name="TsafnatSystematic14">{{cite journal |title=Systematic review automation technologies |journal=Systematic Reviews |author=Tsafnat, G.; Glasziou, P.; Choong, M.K. et al. |volume=3 |pages=74 |year=2014 |doi=10.1186/2046-4053-3-74 |pmid=25005128 |pmc=PMC4100748}}</ref>

Numerous studies have been performed to investigate the healthcare information retrieval process and to better understand the challenges involved in strategy development, as it has been noted that online health resources are not created by healthcare professionals.<ref name="PottsIsEHealth06">{{cite journal |title=Is e-health progressing faster than e-health researchers? |journal=Journal of Medical Internet Research |author=Potts, H.W. |volume=8 |issue=3 |pages=e24 |year=2006 |doi=10.2196/jmir.8.3.e24 |pmid=17032640 |pmc=PMC2018835}}</ref> For example, Grant<ref name="GrantHowDoes04">{{cite journal |title=How does your searching grow? A survey of search preferences and the use of optimal search strategies in the identification of qualitative research |journal=Health Information and Libraries Journal |author=Grant, M.J. |volume=21 |issue=1 |pages=21–32 |year=2004 |doi=10.1111/j.1471-1842.2004.00483.x |pmid=15023206}}</ref> used a combination of a semi-structured questionnaire and interviews to study researchers’ experiences of searching the literature, with particular reference to the use of optimal search strategies. McGowan ''et al.''<ref name="McGowanPRESS16">{{cite journal |title=PRESS Peer Review of Electronic Search Strategies: 2015 Guideline Statement |journal=Journal of Clinical Epidemiology |author=McGowan, J.; Sampson, M.; Salzwedel, D.M. et al. |volume=75 |pages=40–6 |year=2016 |doi=10.1016/j.jclinepi.2016.01.021 |pmid=27005575}}</ref> used a combination of a web-based survey and peer review forums to investigate what elements of the search process have the most impact on the overall quality of the resulting evidence base. Similarly, Gillies ''et al.''<ref name="GilliesACollab08">{{cite journal |title=A collaboration-wide survey of Cochrane authors |journal=Evidence in the Era of Globalisation: Abstracts of the 16th Cochrane Colloquium |author=Gillies, D.; Maxwell, H.; New, K. et al. |volume=2008 |pages=04–33 |year=2008}}</ref> used an online survey to investigate the review process, with a view to identifying problems and barriers for authors of Cochrane reviews. Ciapponi and Glujovsky<ref name="CiapponiSurvey12">{{cite journal |title=Survey among Cochrane authors about early stages of systematic reviews |journal=20th Cochrane Colloquium |author=Ciapponi, A.; Glujovsky, D. |volume=2012 |year=2012 |url=http://2012.colloquium.cochrane.org/abstracts/survey-among-cochrane-authors-about-early-stages-systematic-reviews.html}}</ref> also used an online survey to study the early stages of systematic review.

No single database can cover all the medical literature required for a systematic review, although some are considered to be a core element of any healthcare search strategy, such as MEDLINE<ref name="MEDLINE">{{cite web |url=https://www.nlm.nih.gov/bsd/pmresources.html |title=MEDLINE/PubMed Resources Guide |publisher=U.S. National Library of Medicine |accessdate=05 March 2017}}</ref>, Embase<ref name="Embase">{{cite web |url=https://www.elsevier.com/solutions/embase-biomedical-research |title=Embase |publisher=Elsevier |accessdate=31 August 2017}}</ref>, and the Cochrane Library.<ref name="CochLib">{{cite web |url=http://www.cochranelibrary.com/ |title=Cochrane Library |publisher=John Wiley & Sons, Inc |accessdate=05 March 2017}}</ref> Consequently, healthcare information professionals may consult these sources along with a number of other, more specialized databases to fit the precise scope area.<ref name="ClarkePragmatic00">{{cite journal |title=Pragmatic approach is effective in evidence based health care |journal=BMJ |author=Clarke, J; Wentz, R. |volume=321 |issue=7260 |pages=566–7 |year=2000 |pmid=10968827 |pmc=PMC1118450}}</ref>

A survey<ref name="LuPubMed11" /> of online tools for searching literature databases using PubMed<ref name="PubMed">{{cite web |url=https://www.ncbi.nlm.nih.gov/pubmed |title=PubMed |publisher=U.S. National Library of Medicine |accessdate=05 March 2017}}</ref>, the online literature search service primarily for MEDLINE, showed that most tools were developed for managing search results (such as ranking, clustering into topics and enriching with semantics). Very few tools improved on the standard PubMed search interface or offered advanced Boolean string editing methods in order to support complex literature searching.

===Objective===

To improve the accuracy and efficiency of the literature search process, it is essential that information retrieval applications (in this case, databases of medical literature and the interfaces through which they are accessed) are designed to support the tasks, needs, and expectations of their users. To do so they should consider the layers of context that influence the search task<ref name="JärvelinInformation14">{{cite journal |title=Information seeking research needs extension towards tasks and technology |journal=Information Research |author=Järvelin, K.; Ingwersen, P. |volume=10 |issue=1 |year=2004 |url=http://files.eric.ed.gov/fulltext/EJ1082037.pdf |format=PDF}}</ref> and how this affects the various phases in the search process.<ref name="KuhlthauSeeking03">{{cite book |title=Seeking Meaning: A Process Approach to Library and Information Services |author=Kuhlthau, C.C. |publisher=Libraries Unlimited |pages=264 |isbn=9781591580942}}</ref> This study was designed to fill gaps in this knowledge by investigating the information retrieval practices of healthcare information professionals and contrasting their requirements to the level of support offered by a widely used literature search tool (PubMed).

The specific research questions addressed by this study were (1) How long do search tasks take when performed by healthcare information professionals? (2) How do they formulate search strategies and what kind of search functionality do they use? (3) How are search results evaluated? (4) What functionality do they value in a literature search system? (5) To what extent are their requirements and aspirations met by the PubMed literature search system?

In answering these research questions we hope to provide direct comparisons with other professions (e.g., in terms of the structure, complexity, and duration of their search tasks).

==Methods==

===Online survey===

The survey instrument consisted of an online questionnaire of 58 questions divided into five sections. It was designed to align with the structure and content of Joho ''et al.''’s<ref name="JohoASurvey10">{{cite journal |title=A survey of patent users: An analysis of tasks, behavior, search functionality and system requirements |journal=Proceedings of the Third Symposium on Information Interaction in Context |author=Joho, H.; Azzopardi, L.A.; Vanderbauwhede, W. |volume=2010 |pages=13–24 |year=2010 |doi=10.1145/1840784.1840789}}</ref> survey of patent searchers and wherever possible also with Gschwandtner ''et al.''’s<ref name="GschwandtnerD8_11">{{cite web |url=http://www.khresmoi.eu/assets/Deliverables/WP8/KhresmoiD812.pdf |format=PDF |title=D8.1.2: Requirements of the health professional search |author=Gschwandtner, M.; Kritz, M.; Boyer, C. |publisher=KHRESMOI |date=29 August 2011 |accessdate=05 March 2017}}</ref> survey of medical professionals to facilitate comparisons with other professions. The following were the five sections: (1) Demographics, the background and professional experience of the respondents; (2) Search tasks, the tasks that respondents perform when searching literature databases; (3) Query formulation, the techniques respondents used to formulate search strategies; (4) Evaluating search results, how respondents evaluate the results of their search tasks; and (5) Ideal functionality for searching databases, any other features that respondents value when searching literature databases.

The survey was designed to be completed in approximately 15 minutes and was pre-tested for face validity by two health sciences librarians.

Survey respondents were recruited by sending an email invitation with a link to the survey to five healthcare professional association mailing lists that deal with systematic reviews and medical librarianship: LIS-MEDICAL<ref name="LIS-MEDICAL">{{cite web |url=https://www.jiscmail.ac.uk/cgi-bin/webadmin?A0=lis-medical |title=LIS-MEDICAL Home Page |publisher=JISC |accessdate=05 March 2017}}</ref>, CLIN-LIB<ref name="CLIN-LIB">{{cite web |url=https://www.jiscmail.ac.uk/cgi-bin/webadmin?A0=CLIN-LIB |title=CLIN-LIB Home Page |publisher=JISC |accessdate=05 March 2017}}</ref>, EVIDENCE-BASED-HEALTH<ref name="EVID-BASED-HEALTH">{{cite web |url=https://www.jiscmail.ac.uk/cgi-bin/webadmin?A0=EVIDENCE-BASED-HEALTH |title=EVIDENCE-BASED-HEALTH Home Page |publisher=JISC |accessdate=05 March 2017}}</ref>, expertsearching<ref name="expertsearching">{{cite web |url=http://pss.mlanet.org/mailman/listinfo/expertsearching_pss.mlanet.org |title=expertsearching |publisher=Medical Library Association |accessdate=05 March 2017}}</ref>, and the Cochrane Information Retrieval Methods Group (IRMG).<ref name="CochIRMG">{{cite web |url=http://methods.cochrane.org/irmg/welcome |title=Information Retrieval Methods |publisher=The Cochrane Collaboration |accessdate=05 March 2017}}</ref> It was also sent directly to the members of the Chartered Institute of Library and Information Professionals (CILIP) Healthcare Libraries special interest group.<ref name="CILIP">{{cite web |url=https://www.cilip.org.uk/about/special-interest-groups/health-libraries-group |title=Health Libraries Group |publisher=CILIP |accessdate=05 March 2017}}</ref> The recruitment message and start page of the survey described the eligibility criteria for survey participants, expected time to complete the survey, its purpose, and funding source.

The survey (Multimedia Appendix 1) was conducted using SurveyMonkey, a web-based software application.<ref name="SurveyMonk">{{cite web |url=https://www.surveymonkey.co.uk/ |title=SurveyMonkey |publisher=SurveyMonkey Inc |accessdate=05 March 2017}}</ref> Data were collected from July to September 2015. A total of 218 responses were received, of which 107 (49.1%, 107/218) were complete (meaning all pages of the survey had been viewed and all compulsory questions responded to). Only complete surveys were examined. Since the number of unique individuals reached by the mailing list announcements is unknown, the participation rate cannot be determined.

Responses to numeric questions were not constrained to integers, as a pilot survey had shown that respondents preferred to put in approximate and/or expressive values. Text responses corresponding to numerical questions (questions 14 to 22 and 32 to 38; 16 in total) were normalized as follows: (1) when the respondent specified a range (e.g., 10 to 20 hours), the midpoint was entered (e.g., 15 hours); (2) when the respondent indicated a minimum (e.g., 10 years and greater), the minimum was entered (e.g., 10 years); and (3) when the respondent entered an approximate number (e.g., about 20), that number was entered (e.g., 20).

After normalizing, 8.29% (142/1712) of responses contained no numerical data and 21.61% (370/1712) of responses were normalized.
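
The three normalization rules can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the study does not say whether normalization was done by hand or by script, the function name <code>normalize_response</code> is hypothetical, and the pattern used to recognize a range (two numbers separated by a hyphen or the word "to") is a guess at how such answers were written.

```python
import re

# Assumed pattern for a range: two numbers separated by "-" or "to", e.g. "10 to 20 hours".
RANGE = re.compile(r"(\d+(?:\.\d+)?)\s*(?:-|to)\s*(\d+(?:\.\d+)?)", re.IGNORECASE)
# Any single number, e.g. the 10 in "10 years and greater" or the 20 in "about 20".
NUMBER = re.compile(r"\d+(?:\.\d+)?")


def normalize_response(text):
    """Map a free-text numeric answer to one number, or None if it has no numerical data."""
    range_match = RANGE.search(text)
    if range_match:
        low, high = float(range_match.group(1)), float(range_match.group(2))
        return (low + high) / 2          # rule 1: a range becomes its midpoint
    number_match = NUMBER.search(text)
    if number_match:
        return float(number_match.group(0))  # rules 2 and 3: keep the stated number
    return None                          # no numerical data in the answer


for answer in ["10 to 20 hours", "10 years and greater", "about 20", "it depends"]:
    print(answer, "->", normalize_response(answer))
```

Rules 2 and 3 collapse into the same branch here, because in both cases the single number the respondent gave is the value that is kept.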

===Evaluation of PubMed===

An evaluation of the PubMed search system was performed using online documentation<ref name="PMTutorial">{{cite web |url=https://www.nlm.nih.gov/bsd/disted/pubmedtutorial/cover.html |title=PubMed Tutorial |publisher=U.S. National Library of Medicine |accessdate=05 March 2017}}</ref>, best practice advice<ref name="ChapmanAdvanced09">{{cite journal |title=Advanced search features of PubMed |journal=Journal of the Canadian Academy of Child and Adolescent Psychiatry |author=Chapman, D. |volume=18 |issue=1 |pages=58–9 |year=2009 |pmid=19270851 |pmc=PMC2651214}}</ref>, and direct testing of the interface using Boolean commands. In addition to the search portal, users can register to My NCBI, which provides additional functionality for saving search queries, managing results sets, and customizing filters, so this was included in the comparison. The mobile version of PubMed, PubMed Mobile<ref name="PMMobile">{{cite web |url=https://www.ncbi.nlm.nih.gov/m/pubmed/ |title=Welcome to PubMed Mobile |publisher=U.S. National Library of Medicine |accessdate=05 March 2017}}</ref>, does not offer extended functionality, so it was not considered in the evaluation. Although beyond the scope of this study, information seeking by healthcare practitioners on hand-held devices has been shown to save time and improve the early learning of new developments.<ref name="MickanEvidence13">{{cite journal |title=Evidence of effectiveness of health care professionals using handheld computers: A scoping review of systematic reviews |journal=Journal of Medical Internet Research |author=Mickan, S.; Tilson, J.K.; Atherton, H. et al. |volume=15 |issue=10 |pages=e212 |year=2013 |doi=10.2196/jmir.2530 |pmid=24165786 |pmc=PMC3841346}}</ref>

==Results== | |||

===Demographics=== | |||

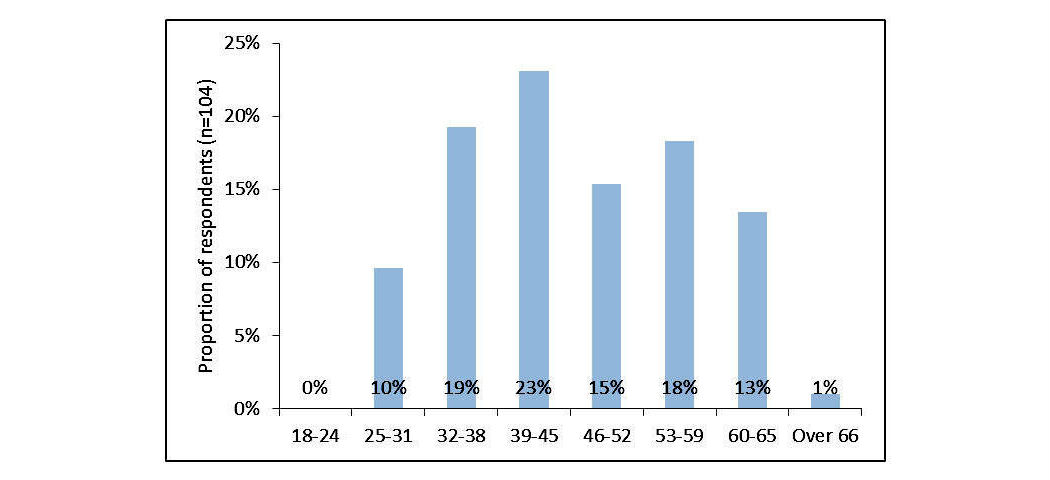

Of the respondents, 89.3% (92/103) were female. Their ages were distributed bi-modally, with peaks at 39 to 45 and 53 to 59, with a conflated average age of 46.0 (SD 10.9, N=104) (Figure 1). | |||

[[File:Fig1 Russell-Rose JMIRMedInfo2017 5-4.jpg|800px]] | |||

{{clear}} | |||

{| | |||

| STYLE="vertical-align:top;"| | |||

{| border="0" cellpadding="5" cellspacing="0" width="800px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Fig. 1''' Age of respondents</blockquote> | |||

|- | |||

|} | |||

|} | |||

The mean time for respondents' experience in their profession was 16.6 years (SD 10.0), greater than their 12.0 (SD 9.0) years of experience in the review of scientific literature (N=107, ''P''<.01, paired ''t'' test). Most respondents worked full time (78.5%, 84/107), and the commissioning agents for their searches were predominantly internal (i.e., within the same organization [72.9%, 78/107]).

The majority of respondents were either based in the U.K. (51.4%, 55/107), the U.S. (27.1%, 29/107), or Canada (7.5%, 8/107). The remaining respondents were from Australia (2.8%, 3/107), Netherlands, Norway, and Germany (1.9% each, 2/107), as well as Denmark, Singapore, Uruguay, South Africa, Belgium, and Ireland (0.9% each, 1/107). All (100.0%, 107/107) respondents stated that the language they used most frequently for searching was English; however, 6.5% (7/107) stated that they did not use English most frequently for communication in their workplace.

The majority of respondents (81.3%, 87/107) worked in organizations that provide systematic reviews. These organizations also provided other services including reference management (72.0%, 77/107), rapid evidence reviews (63.6%, 68/107), background reviews (60.7%, 65/107), and critical appraisals (52.3%, 56/107).

===Search tasks===

We considered a search task in this context to be the creation of one or more strategy lines to search a specific collection of documents or databases, with task completion resulting in a set of search results that will be subject to further analysis. The output of this process is the search strategy, which is often published as part of the search documentation. This rationalization is in line with healthcare information professionals’ understanding, but the complexity of search tasks in this domain is discussed in more detail later.

The time respondents spent formulating search strategies, the time spent completing search tasks, and the number of strategy lines they used are shown in Table 1. Respondents were asked to estimate a minimum, average, and maximum for each of these measures, and the values reported here are the medians of each, with the interquartile range (IQR) shown in brackets (in the form Q1 to Q3). The final row shows the minimum, average, and maximum answers to the question: “What would you consider to be the ideal number of results returned for a typical search task?” On average, it takes 60 minutes to formulate a search strategy for a document collection, with the search task taking four hours to complete, and the final strategy consisting of 15 lines.

{|
| STYLE="vertical-align:top;"|
{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="80%"
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" colspan="4"|'''Table 1.''' Effort to complete search tasks and evaluate results<br /><sup>a</sup>IQR: interquartile range
|-
! style="padding-left:10px; padding-right:10px;"|Task
! style="padding-left:10px; padding-right:10px;"|Minimum (IQR<sup>a</sup>)
! style="padding-left:10px; padding-right:10px;"|Average (IQR)
! style="padding-left:10px; padding-right:10px;"|Maximum (IQR)
|-
| style="background-color:white; padding-left:10px; padding-right:10px;"|Search time per document collection/database, minutes
| style="background-color:white; padding-left:10px; padding-right:10px;"|20 (10-30)
| style="background-color:white; padding-left:10px; padding-right:10px;"|60 (27.5-150)
| style="background-color:white; padding-left:10px; padding-right:10px;"|228 (86-480)
|-
| style="background-color:white; padding-left:10px; padding-right:10px;"|Search task completion time, hours
| style="background-color:white; padding-left:10px; padding-right:10px;"|1 (0.5-2)
| style="background-color:white; padding-left:10px; padding-right:10px;"|4 (2-6.5)
| style="background-color:white; padding-left:10px; padding-right:10px;"|14 (7-30)
|-
| style="background-color:white; padding-left:10px; padding-right:10px;"|Strategy lines per search task, n
| style="background-color:white; padding-left:10px; padding-right:10px;"|5 (2.8-10)
| style="background-color:white; padding-left:10px; padding-right:10px;"|15 (9.1-30)
| style="background-color:white; padding-left:10px; padding-right:10px;"|59 (30-105)
|-
| style="background-color:white; padding-left:10px; padding-right:10px;"|Results examined from a search task, n
| style="background-color:white; padding-left:10px; padding-right:10px;"|10 (5-32)
| style="background-color:white; padding-left:10px; padding-right:10px;"|175 (75-500)
| style="background-color:white; padding-left:10px; padding-right:10px;"|850 (400-5250)
|-
| style="background-color:white; padding-left:10px; padding-right:10px;"|Time to assess relevance of a single result/document, minutes
| style="background-color:white; padding-left:10px; padding-right:10px;"|1 (0.5-2)
| style="background-color:white; padding-left:10px; padding-right:10px;"|3 (1-5)
| style="background-color:white; padding-left:10px; padding-right:10px;"|10 (5-25)
|-
| style="background-color:white; padding-left:10px; padding-right:10px;"|Ideal number of search results per search task, n
| style="background-color:white; padding-left:10px; padding-right:10px;"|0
| style="background-color:white; padding-left:10px; padding-right:10px;"|100
| style="background-color:white; padding-left:10px; padding-right:10px;"|10,000
|-
|}
|}
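
Each cell in Table 1 is a median with its interquartile range. A minimal sketch of that summary, using Python's standard <code>statistics</code> module, is given below. The helper name <code>median_iqr</code> and the sample estimates are hypothetical, and the choice of the "inclusive" quartile method is an assumption, since the study does not state which quartile definition was used; other methods can give slightly different Q1 and Q3 values.

```python
from statistics import median, quantiles


def median_iqr(values):
    """Return (median, (Q1, Q3)) for a list of numeric estimates."""
    # quantiles(..., n=4) returns the three cut points Q1, Q2, Q3.
    # method="inclusive" is an assumed choice, not one stated in the study.
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return median(values), (q1, q3)


# Hypothetical per-respondent estimates of average search time, in minutes.
estimates = [20, 30, 45, 60, 60, 90, 120, 150, 240]
mid, (q1, q3) = median_iqr(estimates)
print(f"{mid} ({q1}-{q3})")
```

The median and IQR are less affected by a few very large estimates than the mean and standard deviation would be, which matters for answers such as the maximum number of results examined.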

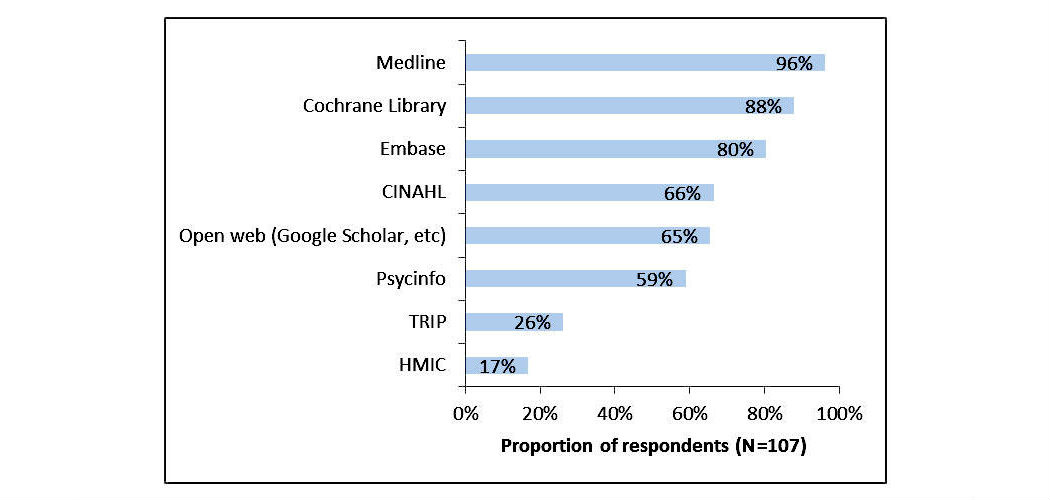

The data sources most frequently searched were MEDLINE (96.3%, 103/107), the Cochrane Library (87.9%, 94/107), and Embase (80.4%, 86/107) (Figure 2). | |||

[[File:Fig2 Russell-Rose JMIRMedInfo2017 5-4.jpg|800px]] | |||

{{clear}} | |||

{| | |||

| STYLE="vertical-align:top;"| | |||

{| border="0" cellpadding="5" cellspacing="0" width="800px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Fig. 2''' Data sources most frequently searched</blockquote> | |||

|- | |||

|} | |||

|} | |||

The majority of respondents (86.9%, 93/107) used previous search strategies or templates at least sometimes, suggesting that the value embodied in them is recognized and should be re-used wherever possible. In addition, most respondents (89.7%, 96/107) routinely share their search strategies in some form, either with colleagues in their workgroup, more broadly within their organization, or in some other capacity (e.g., with clients or as part of a published review). | |||

===Query formulation=== | |||

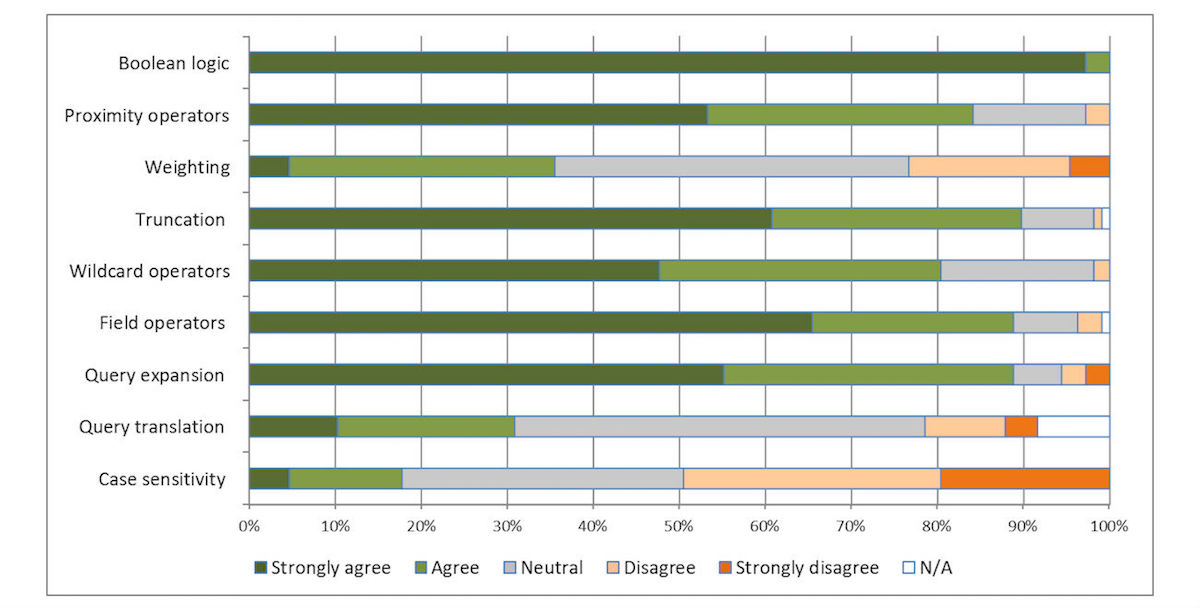

We examined the mechanics of the query formulation process by asking respondents to indicate a level of agreement to statements using a five-point Likert scale ranging from 1 (strong disagreement) to 5 (strong agreement). The results are shown in Figure 3. | |||

[[File:Fig3 Russell-Rose JMIRMedInfo2017 5-4.jpg|800px]] | |||

{{clear}} | |||

{| | |||

| STYLE="vertical-align:top;"| | |||

{| border="0" cellpadding="5" cellspacing="0" width="800px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Fig. 3''' Importance of query formulation functionality</blockquote> | |||

|- | |||

|} | |||

|} | |||

When asked which taxonomies are regularly used, 74.8% (80/107) of respondents indicated they used MeSH, 45.8% (49/107) Emtree, and 18.7% (20/107) CINAHL headings.

When asked which combination of techniques they used to create their search strategies, 44.9% (48/107) stated they used a form-based query builder, 41.1% (44/107) did so manually on paper, and 40.2% (43/107) used a text editor. Only 9.3% (10/107) used some form of visual query builder. | |||

===Evaluating search results=== | |||

Respondents indicated that the ideal number of results returned for a search task would be 100 documents, yet in practice they evaluate more than this (a median of 175 documents; Table 1). The ideal number of results and the actual number of results evaluated are strongly correlated (N=66, ρ=.661 [Spearman rank correlation]). The average time to assess relevance of a single document was three minutes. | |||

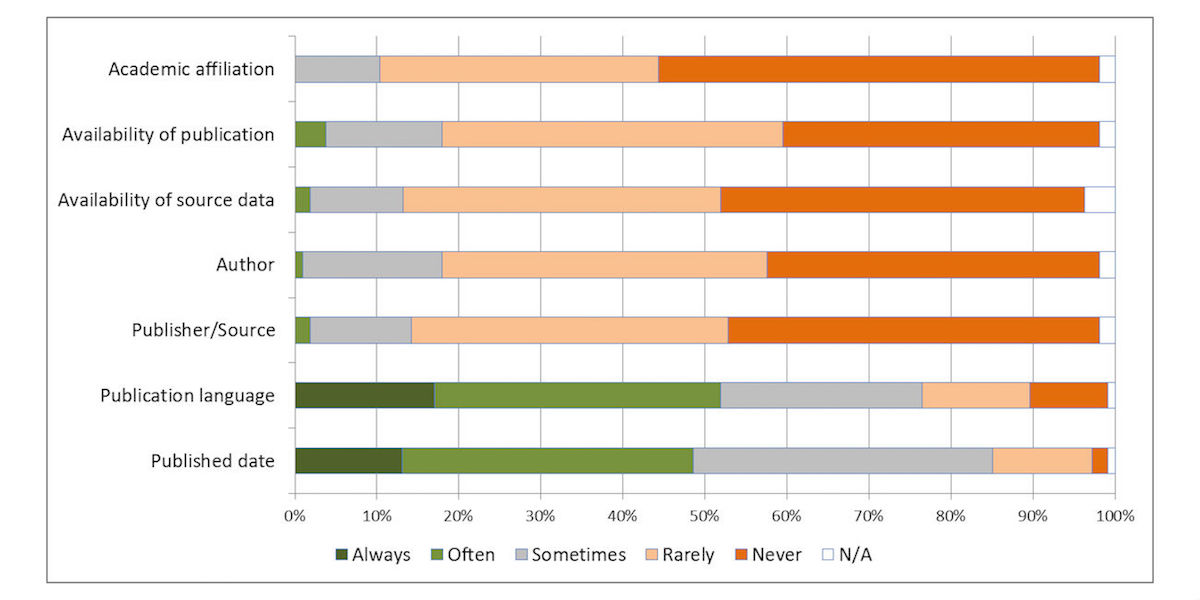

Respondents were asked to indicate on a five-point Likert scale how frequently they use search limits and restriction criteria to narrow down results. The results are shown in Figure 4. | |||

[[File:Fig4 Russell-Rose JMIRMedInfo2017 5-4.jpg|800px]]
{{clear}}
{|
| STYLE="vertical-align:top;"|
{| border="0" cellpadding="5" cellspacing="0" width="800px"
|-
| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Fig. 4''' Usage of restriction criteria</blockquote>
|-
|}
|}

We also examined respondents’ strategies for examining the search results. The most popular approaches were to “start with the result that looked most relevant” (54.2%, 58/107) or simply “select the first result” (23.4%, 25/107). No respondent suggested selecting the “most trustworthy source.”

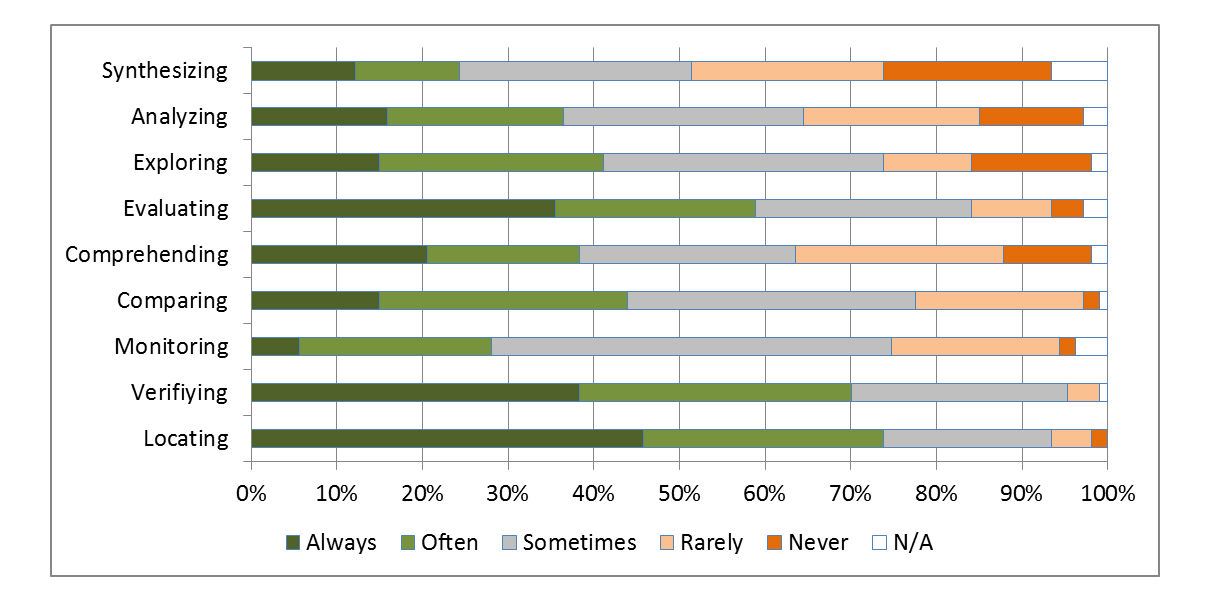

Respondents were asked what types of activities<ref name="Russell-RoseATax11">{{cite journal |title=A taxonomy of enterprise search discovery |journal=Proceedings of HCIR 2011 |author=Russell-Rose, T.; Lamantia, J.; Burrell, M. |volume=2011 |year=2011 |url=https://isquared.wordpress.com/2011/11/02/a-taxonomy-of-enterprise-search-and-discovery/}}</ref> they typically engaged in whilst completing their search task (Figure 5). “Locating, verifying, and evaluating results” were the most common activities (see Multimedia Appendix 1 for the full description of each activity, as provided to the respondents).

[[File:Fig5 Russell-Rose JMIRMedInfo2017 5-4.jpg|800px]]
{{clear}}
{|
| STYLE="vertical-align:top;"|
{| border="0" cellpadding="5" cellspacing="0" width="800px"
|-
| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Fig. 5''' Activities that respondents engage in when completing a search task</blockquote>
|-
|}
|}

===Ideal functionality for searching databases===

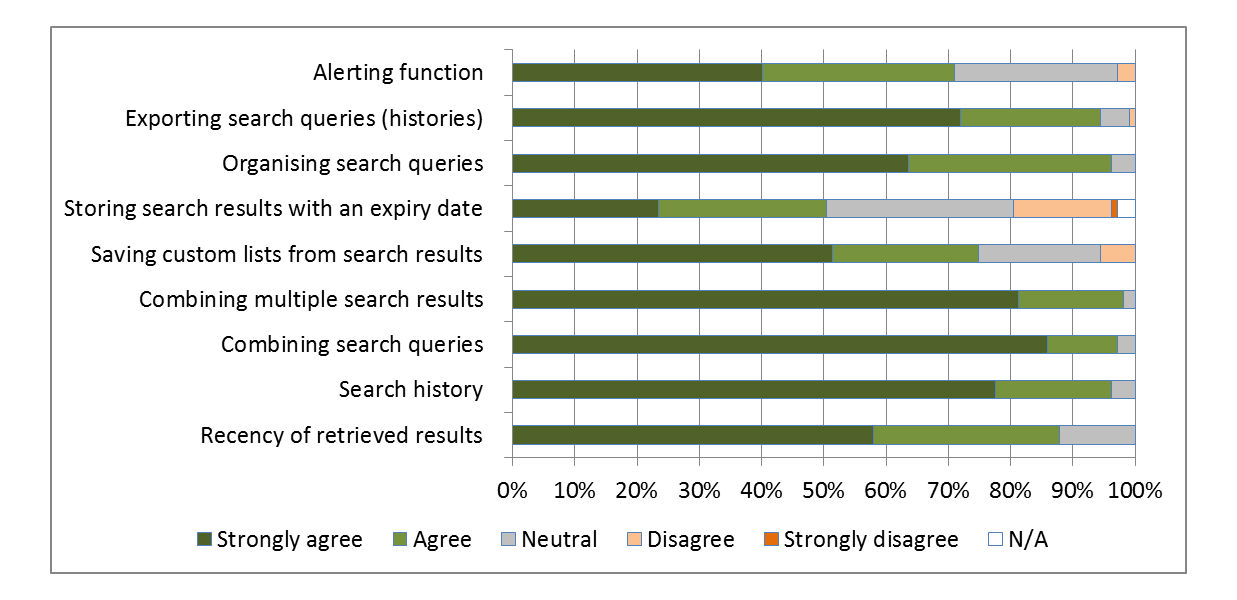

We also examined other features related to search management, organization, and history that respondents value when performing search tasks. Respondents were asked to indicate their level of agreement with each statement using a five-point Likert scale ranging from 1 (strong disagreement) to 5 (strong agreement). The results are shown in Figure 6.

[[File:Fig6 Russell-Rose JMIRMedInfo2017 5-4.jpg|800px]]
{{clear}}
{|
| STYLE="vertical-align:top;"|
{| border="0" cellpadding="5" cellspacing="0" width="800px"
|-
| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Fig. 6''' Ideal features of a literature search system</blockquote>
|-
|}
|}

==Discussion==

Here, the implications of the results with verbatim responses to the question “How could the process of creating and managing search strategies be improved for you?” are discussed, and the findings are contextualized in relation to the PubMed literature search system.

===Search tasks===

The respondents showed they invest considerable amounts of time performing search tasks and writing search strategies. The time to search a document collection (60 minutes) indicated that their search strategies were more complex to create than most literature search queries, given that 90% of individual queries on PubMed take less than five minutes.<ref name="IslamajDoganUnderst09">{{cite journal |title=Understanding PubMed user search behavior through log analysis |journal=Database |author=Islamaj Dogan, R.; Murray, G.C.; Névéol, A.; Lu, Z. |volume=2009 |pages=bap018 |year=2009 |doi=10.1093/database/bap018 |pmid=20157491 |pmc=PMC2797455}}</ref> It is also longer than the diagnostic web searches typical of front-line healthcare professionals (only 14% of medical practitioners reported spending more than 40 minutes on this search task).<ref name="GschwandtnerD8_11" />

This search effort is often recycled and routinely shared, indicating a need for facilities to manage and share strategies, such as: “...being able to download, share, remix, transfer and translate search strategies.” PubMed does not offer the ability to share search queries, only the results, in the form of citation Collections.

===Query formulation===

The results in Figure 3 suggest two observations regarding how healthcare information professionals formulate queries. Firstly, the scores suggest a willingness to adopt a wide range of search functionality to complete search tasks. This represents a marked contrast to the behavior of typical web searchers, who rarely, if ever, use any advanced search functionality.<ref name="SpinkSearching01">{{cite journal |title=Searching the web: The public and their queries |journal=Journal of the Association for Information Science and Technology |author=Spink, A.; Wolfram, D.; Jansen, M.B.J.; Saracevic, T. |volume=52 |issue=3 |pages=226–234 |year=2001 |doi=10.1002/1097-4571(2000)9999:9999<::AID-ASI1591>3.0.CO;2-R}}</ref> Secondly, the use of Boolean logic was shown to be the most important feature, closely followed by the use of synonyms and related terms. A number of other syntactic features (notably proximity operators, truncation, and wildcarding) all scored highly, reflecting the need for fine control over search strategies. Field operators were also judged to be important, reflecting the structured nature of the document collections that are searched. Query expansion (i.e., terms are expanded to include synonyms) scored highly, underlining the key role that controlled vocabularies such as MeSH play in forming effective search strategies (75% of respondents were familiar with using MeSH headings) and a requirement for, ideally, “one universal thesaurus of medical terminology for all databases.”

PubMed offers most of the query formulation functionality described in Figure 3, either through explicit Boolean queries or through related functionality. Simple keyword queries are converted into Boolean queries by using the AND operator, attempting to automatically align the keywords with MeSH terms (called Automatic Term Mapping) and expanding the query to match all search phrases. Boolean operators OR and NOT are also accepted. Users can search specific fields by using square brackets after the search term (such as for searching within abstract, author, title, etc.). Spelling correction and phrase completion are offered as the user types into the textbox. Wildcards and truncation are partially supported, in that only right-truncation is allowed (e.g., child* would return results for "children" and "childhood"). Proximity operators are not supported; however, PubMed offers a list of related articles derived from a word-weighted algorithm.<ref name="PubMedRelated">{{cite web |url=https://ii.nlm.nih.gov/MTI/Details/related.shtml |title=PubMed Related Citations Algorithm |publisher=U.S. National Library of Medicine |date=21 March 2016 |accessdate=05 March 2017}}</ref> Search queries can also be made in multiple languages (although the only non-English data in PubMed is currently limited to the “transliterated title” field). The only functions PubMed does not appear to offer are search term weighting and case sensitivity, both of which respondents rated as the least important. This highlights the difference between comprehensive searches for literature as required for a review, compared to more general web searches where relevance ranking with semi-automatic methods would be considered more important.
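As an illustration of the syntax described above, the following minimal sketch assembles a Boolean query string with field tags in square brackets and right-truncation. The helper names (<code>tagged</code>, <code>any_of</code>, <code>all_of</code>) and the example topic are hypothetical; the sketch only builds a string and does not contact PubMed. The tags <code>[tiab]</code> (title/abstract) and <code>[mh]</code> (MeSH terms) are standard PubMed field tags.

```python
from typing import Iterable


def tagged(term: str, field: str = "") -> str:
    """Quote multi-word phrases and append a field tag such as [tiab]."""
    text = f'"{term}"' if " " in term else term
    return f"{text}[{field}]" if field else text


def any_of(terms: Iterable[str]) -> str:
    """Combine synonyms or related terms with OR inside parentheses."""
    return "(" + " OR ".join(terms) + ")"


def all_of(groups: Iterable[str]) -> str:
    """Require every concept group by joining the groups with AND."""
    return " AND ".join(groups)


# Hypothetical two-concept strategy: a free-text term plus a MeSH heading each.
population = any_of([tagged("child*", "tiab"), tagged("Child", "mh")])
condition = any_of([tagged("asthma", "tiab"), tagged("Asthma", "mh")])
query = all_of([population, condition])
print(query)
# (child*[tiab] OR Child[mh]) AND (asthma[tiab] OR Asthma[mh])
```

Pairing each free-text term with its controlled-vocabulary heading in one OR group mirrors the importance respondents gave to synonyms and related terms.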

A previous study has shown that as many as 90% of published strategies contained an error<ref name="SampsonErrors06">{{cite journal |title=Errors in search strategies were identified by type and frequency |journal=Journal of Clinical Epidemiology |author=Sampson, M.; McGowan, J. |volume=59 |issue=10 |pages=1057–63 |year=2006 |doi=10.1016/j.jclinepi.2006.01.007 |pmid=16980145}}</ref> and that reporting of strategies is commonly not in line with best practice.<ref name="YoshiiAnalysis09">{{cite journal |title=Analysis of the reporting of search strategies in Cochrane systematic reviews |journal=Journal of the Medical Library Association |author=Yoshii, A.; Plaut, D.A.; McGraw, K.A. et al. |volume=97 |issue=1 |pages=21–9 |year=2009 |doi=10.3163/1536-5050.97.1.004 |pmid=19158999 |pmc=PMC2605027}}</ref> A number of respondents suggested that healthcare information professionals need advanced query formulation support to help them with search tasks (Textbox 2).

{|
| STYLE="vertical-align:top;"|
{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="80%"
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" colspan="6"|'''Textbox 2.''' Examples of search functionality that require advanced query formulation support
|-
| style="background-color:white; padding-left:10px; padding-right:10px;"|Search functionality:<br /> <br />* Syntax checking: “…automate checking of parentheses, operators and field codes…”<br />* Truncation: “Wildcards at beginning of words; wildcard within a word (to replace a single or multiple letters eg, $sthetic or wom$n”<br />* Misspellings: “…account for misspellings…” and “UK/American spelling…”<br />* Proximity: “…interpreting proximity within sentence rather than crossing punctuation limits.”<br />* Term frequency and location: “…terms in the first and/or last sentence of the abstract only”<br />* Negation: “…a negation that doesn't exclude articles where the negated concept is preceded by a negation. ex: NOT “palliative care” will exclude abstracts with sentences like this 'in this study we didn't take in account palliative care'”
|}
|}
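The syntax checking requested in Textbox 2 can be approximated with a few simple rules. The sketch below is a hypothetical checker, not a feature of any existing search system: it reports unbalanced parentheses, a line that starts or ends with a Boolean operator, and two operators in a row (AND NOT is deliberately allowed). Checking field codes would need a per-database list of valid tags and is left out.

```python
import re
from typing import List

OPERATORS = {"AND", "OR", "NOT"}


def check_strategy_line(line: str) -> List[str]:
    """Return human-readable problems found in one search strategy line."""
    problems: List[str] = []

    # Rule 1: parentheses must balance and never close before they open.
    depth = 0
    for pos, ch in enumerate(line):
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:
                problems.append(f"unmatched ')' at position {pos}")
                depth = 0
    if depth > 0:
        problems.append(f"{depth} unclosed '('")

    # Rough tokenization: quoted phrases stay whole, parentheses are dropped.
    tokens = re.findall(r'"[^"]*"|[()]|[^\s()]+', line)
    words = [t for t in tokens if t not in ("(", ")")]

    # Rule 2: a line should not begin with AND/OR or end with any operator.
    if words and words[0] in ("AND", "OR"):
        problems.append(f"line starts with operator {words[0]}")
    if words and words[-1] in OPERATORS:
        problems.append(f"line ends with operator {words[-1]}")

    # Rule 3: an operator followed directly by AND/OR has no operand between.
    for prev, cur in zip(words, words[1:]):
        if prev in OPERATORS and cur in ("AND", "OR"):
            problems.append(f"adjacent operators: {prev} {cur}")
    return problems


print(check_strategy_line("(asthma[tiab] OR wheez*[tiab] AND child*[tiab]"))
```

A production checker would more likely parse the full grammar of the target database; the rule-based form is shown only because it is short enough to read at a glance.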

PubMed allows users to build queries in stages, using an HTML form to capture each query and listing previous queries below it so that the user can assemble composite strategies of increasing complexity. Given that the average number of strategy lines required for a search task here was 15, this method of query construction can become increasingly complex and difficult for the user to understand and manipulate. Only 9.3% (10/107) of survey respondents reported using a visual query builder, an indication that there is very little support for healthcare information professionals in the intuitive construction of complex search queries. They also indicated a desire for advanced editing functionality, in particular:

* moving search lines up and down the history
* adding tags or descriptions to search strategies, allowing for the ability to sort by name, topic, or date
* taking notes about why they added terms, syntax, etc.
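The line-by-line approach and the requested note-taking can be pictured with a small data structure. The following is a minimal sketch under stated assumptions: <code>StrategyLine</code> and <code>expand</code> are hypothetical names, and line references use the <code>#n</code> form common to search histories. It resolves references into one composite Boolean string and keeps a free-text note beside each line.

```python
import re
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class StrategyLine:
    query: str      # search text; may reference earlier lines as #1, #2, ...
    note: str = ""  # free-text rationale for the line


def expand(line_no: int, lines: Dict[int, StrategyLine],
           _path: Tuple[int, ...] = ()) -> str:
    """Replace every #n reference with the parenthesized text of line n."""
    if line_no in _path:
        raise ValueError(f"circular reference involving line {line_no}")
    if line_no not in lines:
        raise KeyError(f"line {line_no} is not defined")

    def substitute(match):
        inner = expand(int(match.group(1)), lines, _path + (line_no,))
        return f"({inner})"

    return re.sub(r"#(\d+)", substitute, lines[line_no].query)


# Hypothetical four-line strategy; [pt] is the PubMed publication type tag.
strategy = {
    1: StrategyLine("asthma[tiab] OR Asthma[mh]", note="condition concept"),
    2: StrategyLine("child*[tiab] OR Child[mh]", note="population concept"),
    3: StrategyLine("#1 AND #2", note="combine both concepts"),
    4: StrategyLine("#3 NOT letter[pt]", note="exclude letters"),
}
print(expand(4, strategy))
# ((asthma[tiab] OR Asthma[mh]) AND (child*[tiab] OR Child[mh])) NOT letter[pt]
```

Keying lines by number rather than by list position means a line could be moved or re-sorted without silently changing what <code>#3</code> refers to.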

It is clear that respondents commonly work across multiple platforms (in particular MEDLINE, Cochrane, and Embase), and this is in line with findings from previous research.<ref name="BorahAnalysis17">{{cite journal |title=Analysis of the time and workers needed to conduct systematic reviews of medical interventions using data from the PROSPERO registry |journal=BMJ Open |author=Borah, R.; Brown, A.W.; Capers, P.L. et al. |volume=7 |issue=2 |pages=e012545 |year=2017 |doi=10.1136/bmjopen-2016-012545 |pmid=28242767 |pmc=PMC5337708}}</ref> There is therefore a need for standardization and consistency between suppliers: “A service that could map search strategy between databases would save a lot of time.”

===Evaluating search results===

The figure of 100 average (median) ideal search results masks the non-parametric nature of the data; the number of search results obtained may vary considerably depending on the topic and body of literature available in that domain.<ref name="BorahAnalysis17" /> Healthcare information professionals may adjust their expectations of sensitivity (or recall) in relation to their searches, depending on the need for coverage and inclusiveness. Clearly the ideal response from a search is more nuanced than a single figure can convey; however, respondents indicated that they find more results from their searches than they would ideally like to evaluate. This may be the result of an abundance of published research or that the search parameters are not restrictive enough to return an appropriate number of results.

The time to assess each result (three minutes) seems short when considering the length of some of the documents that will be analyzed. However, the search task is the first stage of a much longer process in which the retrieved documents are exposed to further phases of evaluation (Figure 5). In this context, the time to assess relevance may reflect the dynamics of the initial sift, which is a much smaller fraction of the overall attention given to a document.

Publication date was considered to be the most important results filtering criterion, followed by publication language (Figure 4); however, other criteria were not considered important in the restriction of results. Certain respondents mentioned other criteria they use, including publication type, study scope (e.g., human only), study design, age range, and gender. All filtering and restriction criteria mentioned here can be used to narrow down results in PubMed.

The fact that no respondent valued sorting by most trustworthy source contrasts sharply with the strategies used in another study of the healthcare profession.<ref name="GschwandtnerD8_11" /> This most likely reflects the difference between the largely curated (and to some extent implicitly trusted) databases referred to in our study (MEDLINE, Embase, and the Cochrane Library) and the relatively uncontrolled web resources used in Gschwandtner’s study.

===Ideal functionality===

Respondents scored all options of ideal functionality highly, indicating a general desire for advanced search functionality. Combining search queries and combining search results were rated as the most important, reflecting the current paradigm for building search queries (i.e., the line-by-line strategy building approach offered by most databases, including PubMed). The participants rated the ability to export search queries (histories) highly, reflecting their need to publish completed search strategies as part of their professional practice.

All of the functionality described in Figure 6 as being desired by healthcare information professionals is available through PubMed, either directly or by registering as a free user of My NCBI. It is therefore surprising that the verbatim responses from the respondents indicated that typical systems fall short in terms of their needs.

One reason may be that PubMed attempts to cater to a wide range of user knowledge (approximately one third of PubMed users are not domain experts<ref name="LacroixTheUS02">{{cite journal |title=The US National Library of Medicine in the 21st century: Expanding collections, nontraditional formats, new audiences |journal=Health Information and Libraries Journal |author=Lacroix, E.M.; Mehnert, R. |volume=19 |issue=3 |pages=126–32 |year=2002 |pmid=12390234}}</ref>) and search expertise, from simple keyword queries to complex search strategies. Query log analysis has shown a difference between how users of different skills perform on PubMed<ref name="YooAnalysis15">{{cite journal |title=Analysis of PubMed User Sessions Using a Full-Day PubMed Query Log: A Comparison of Experienced and Nonexperienced PubMed Users |journal=JMIR Medical Informatics |author=Yoo, I.; Mosa, A.S. |volume=3 |issue=3 |pages=e25 |year=2015 |doi=10.2196/medinform.3740 |pmid=26139516 |pmc=PMC4526974}}</ref>, and PubMed attempts to accommodate all their needs in one interface. One example of this compromise is the lack of full truncation support and of proximity operators, which may be exactly what is required by a healthcare information professional performing a systematic review for a topic with few articles.

===Limitations===

A limitation of this survey is the sample size, compared to some surveys of healthcare information professionals<ref name="GilliesACollab08" /><ref name="CiapponiSurvey12" />; however, engagement with professionals in this sector has been shown to be challenging, with lower participation rates reported elsewhere.<ref name="GrantHowDoes04" /><ref name="McGowanPRESS16" /> We believe the completion rate of the survey (49.1%, 107/218) is high for a survey of this length (approximately 15 minutes); however, greater participation might have been achieved with a shorter, more targeted survey. We acknowledge that the lack of control over distribution, and the fact that the survey was administered only in English, may introduce selection bias. The demographics of this survey have a similar distribution to a larger survey of healthcare information professionals<ref name="CrookHealth14">{{cite web |url=https://www.echima.ca/uploaded/pdf/reports/HI-HIM-HR-Outlook-Report-Final-w-design.pdf |format=PDF |title=Health Informatics and Health Information Management: Human Resources Outlook 2014–2019 |author=Crook, G.; Newsham, D.; Anani, N. et al. |publisher=Prism Economics and Analysis |date=June 2014 |accessdate=05 March 2017}}</ref> (95% females compared to 89% reported here, with an average age of 47.2 compared to 46.0 here), an indication that the sampled population may be representative of the profession.

A further limitation of this study is uncertainty over whether respondents fully understood our distinction between search tasks and search strategies (which follows the precedent of previous survey designs and hence facilitates direct comparison with their results). An additional evaluation of other literature search tools (such as Ovid) would have provided a more extensive survey of functionality available to healthcare information professionals; however, as PubMed was the most frequently used by the respondents, it is more representative of the tools they have at their disposal. A full survey of free and subscription search tools available in healthcare would be useful future work. Despite these limitations, we believe the research provides valuable insight into the requirements of healthcare information professionals.

==Conclusions==

This paper summarizes the results of a survey of the information retrieval practices of healthcare information professionals, focusing in particular on the process of search strategy development. Our findings suggest that they routinely address some of the most challenging information retrieval problems of any profession, but current literature search systems offer only limited support for their requirements. The functionality offered by PubMed goes some way toward meeting those needs, but is compromised by the need to serve all types of users who may not require the same degree of fine control over their search strategies. In particular, there is a need for improved functionality regarding the management of search strategies and the ability to search across multiple databases.

The results of this study will be used to inform the development of future retrieval systems for healthcare information professionals and for others performing healthcare-related search tasks.

==Acknowledgements==

The authors would like to thank the participants for completing the survey, the professional associations that distributed the survey to their members, and the healthcare information professionals who helped shape the survey instrument and provide context for the results.

==Conflict of interest==

None declared.

==Additional files==

[http://medinform.jmir.org/article/downloadSuppFile/7680/50095 Multimedia Appendix 1: Survey Instrument] (PDF)

==References==

Latest revision as of 19:45, 19 September 2021

| Full article title | Expert search strategies: The information retrieval practices of healthcare information professionals |
|---|---|
| Journal | JMIR Medical Informatics |
| Author(s) | Russell-Rose, Tony; Chamberlain, Jon |
| Author affiliation(s) | UXLabs Ltd., University of Essex |
| Primary contact | Email: tgr at uxlabs dot co dot uk |
| Editors | Eysenbach, G. |
| Year published | 2017 |
| Volume and issue | 5 (4) |
| Page(s) | e33 |
| DOI | 10.2196/medinform.7680 |
| ISSN | 2291-9694 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | http://medinform.jmir.org/2017/4/e33/ |
| Download | http://medinform.jmir.org/2017/4/e33/pdf (PDF) |

Abstract

Background: Healthcare information professionals play a key role in closing the knowledge gap between medical research and clinical practice. Their work involves meticulous searching of literature databases using complex search strategies that can consist of hundreds of keywords, operators, and ontology terms. This process is prone to error and can lead to inefficiency and bias if performed incorrectly.

Objective: The aim of this study was to investigate the search behavior of healthcare information professionals, uncovering their needs, goals, and requirements for information retrieval systems.

Methods: A survey was distributed to healthcare information professionals via professional association email discussion lists. It investigated the search tasks they undertake, their techniques for search strategy formulation, their approaches to evaluating search results, and their preferred functionality for searching library-style databases. The popular literature search system PubMed was then evaluated to determine the extent to which their needs were met.

Results: The 107 respondents indicated that their information retrieval process relied on the use of complex, repeatable, and transparent search strategies. On average it took 60 minutes to formulate a search strategy, with a search task taking four hours and consisting of 15 strategy lines. Respondents reviewed a median of 175 results per search task, far more than they would ideally like (100). The most desired features of a search system were merging search queries and combining search results.

Conclusions: Healthcare information professionals routinely address some of the most challenging information retrieval problems of any profession. However, their needs are not fully supported by current literature search systems, and there is demand for improved functionality, in particular regarding the development and management of search strategies.

Keywords: review, surveys and questionnaires, search engine, information management, information systems

Introduction

Background

Medical knowledge is growing so rapidly that it is difficult for healthcare professionals to keep up. As the volume of published studies increases each year[1], the gap between research knowledge and professional practice grows.[2] Frontline healthcare providers (such as general practitioners [GPs]) responding to the immediate needs of patients may employ a web-style search for diagnostic purposes, with Google being reported to be a useful diagnostic tool[3]; however, the credibility of results depends on the domain.[4] Medical staff may also perform more in-depth searches, such as rapid evidence reviews, where a concise summary of what is known about a topic or intervention is required.[5]

Healthcare information professionals play the primary role in closing the gap between published research and medical practice, by synthesizing the complex, incomplete, and at times conflicting findings of biomedical research into a form that can readily inform healthcare decision making.[6] The systematic literature review process relies on the painstaking and meticulous searching of multiple databases using complex Boolean search strategies that often consist of hundreds of keywords, operators, and ontology terms[7] (Textbox 1).


Performing a systematic review is a resource-intensive and time consuming undertaking, sometimes taking years to complete.[8] It involves a lengthy content production process whose output relies heavily on the quality of the initial search strategy, particularly in ensuring that the scope is sufficiently exhaustive and that the review is not biased by easily accessible studies.[9]

Numerous studies have been performed to investigate the healthcare information retrieval process and to better understand the challenges involved in strategy development, as it has been noted that online health resources are not created by healthcare professionals.[10] For example, Grant[11] used a combination of a semi-structured questionnaire and interviews to study researchers’ experiences of searching the literature, with particular reference to the use of optimal search strategies. McGowan et al.[12] used a combination of a web-based survey and peer review forums to investigate what elements of the search process have the most impact on the overall quality of the resulting evidence base. Similarly, Gillies et al.[13] used an online survey to investigate the review, with a view to identifying problems and barriers for authors of Cochrane reviews. Ciapponi and Glujovsky[14] also used an online survey to study the early stages of systematic review.

No single database can cover all the medical literature required for a systematic review, although some are considered to be a core element of any healthcare search strategy, such as MEDLINE[15], Embase[16], and the Cochrane Library.[17] Consequently, healthcare information professionals may consult these sources along with a number of other, more specialized databases to fit the precise scope area.[18]

A survey[1] of online tools for searching literature databases using PubMed[19], the online literature search service primarily for MEDLINE, showed that most tools were developed for managing search results (such as ranking, clustering into topics and enriching with semantics). Very few tools improved on the standard PubMed search interface or offered advanced Boolean string editing methods in order to support complex literature searching.

Objective

To improve the accuracy and efficiency of the literature search process, it is essential that information retrieval applications (in this case, databases of medical literature and the interfaces through which they are accessed) are designed to support the tasks, needs, and expectations of their users. To do so they should consider the layers of context that influence the search task[20] and how this affects the various phases in the search process.[21] This study was designed to fill gaps in this knowledge by investigating the information retrieval practices of healthcare information professionals and contrasting their requirements to the level of support offered by a widely used literature search tool (PubMed).

The specific research questions addressed by this study were (1) How long do search tasks take when performed by healthcare information professionals? (2) How do they formulate search strategies and what kind of search functionality do they use? (3) How are search results evaluated? (4) What functionality do they value in a literature search system? (5) To what extent are their requirements and aspirations met by the PubMed literature search system?

In answering these research questions we hope to provide direct comparisons with other professions (e.g., in terms of the structure, complexity, and duration of their search tasks).

Methods

Online survey

The survey instrument consisted of an online questionnaire of 58 questions divided into five sections. It was designed to align with the structure and content of Joho et al.’s[22] survey of patent searchers and wherever possible also with Gschwandtner et al.’s[23] survey of medical professionals to facilitate comparisons with other professions. The following were the five sections: (1) Demographics, the background and professional experience of the respondents; (2) Search tasks, the tasks that respondents perform when searching literature databases; (3) Query formulation, the techniques respondents used to formulate search strategies; (4) Evaluating search results, how respondents evaluate the results of their search tasks; and (5) Ideal functionality for searching databases, any other features that respondents value when searching literature databases.

The survey was designed to be completed in approximately 15 minutes and was pre-tested for face validity by two health sciences librarians.

Survey respondents were recruited by sending an email invitation with a link to the survey to five healthcare professional association mailing lists that deal with systematic reviews and medical librarianship: LIS-MEDICAL[24], CLIN-LIB[25], EVIDENCE-BASED-HEALTH[26], expertsearching[27], and the Cochrane Information Retrieval Methods Group (IRMG).[28] It was also sent directly to the members of the Chartered Institute of Library and Information Professionals (CILIP) Healthcare Libraries special interest group.[29] The recruitment message and start page of the survey described the eligibility criteria for survey participants, expected time to complete the survey, its purpose, and funding source.

The survey (Multimedia Appendix 1) was conducted using SurveyMonkey, a web-based software application.[30] Data were collected from July to September 2015. A total of 218 responses were received, of which 107 (49.1%, 107/218) were complete (meaning all pages of the survey had been viewed and all compulsory questions responded to). Only complete surveys were examined. Since the number of unique individuals reached by the mailing list announcements is unknown, the participation rate cannot be determined.

Responses to numeric questions were not constrained to integers, as a pilot survey had shown that respondents preferred to put in approximate and/or expressive values. Text responses corresponding to numerical questions (questions 14 to 22 and 32 to 38; 16 in total) were normalized as follows: (1) when the respondent specified a range (e.g., 10 to 20 hours), the midpoint was entered (e.g., 15 hours); (2) when the respondent indicated a minimum (e.g., 10 years and greater), the minimum was entered (e.g., 10 years); and (3) when the respondent entered an approximate number (e.g., about 20), that number was entered (e.g., 20).
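The three normalization rules above can be expressed compactly in code. The following is a minimal sketch, assuming free-text answers in English; the function name and the regular expressions are illustrative choices, and the article does not state whether the authors normalized responses by hand or programmatically.

```python
import re
from typing import Optional

_NUMBER = r"\d+(?:\.\d+)?"


def normalize_response(text: str) -> Optional[float]:
    """Apply the three normalization rules; return None if no number is found."""
    cleaned = text.strip().lower()

    # Rule 1: a range such as "10 to 20 hours" or "10-20" becomes its midpoint.
    match = re.search(rf"({_NUMBER})\s*(?:to|-|–)\s*({_NUMBER})", cleaned)
    if match:
        low, high = float(match.group(1)), float(match.group(2))
        return (low + high) / 2

    # Rules 2 and 3: a stated minimum ("10 years and greater") or an
    # approximation ("about 20") both reduce to the single number given.
    match = re.search(_NUMBER, cleaned)
    return float(match.group(0)) if match else None


for answer in ["10 to 20 hours", "10 years and greater", "about 20", "it varies"]:
    print(repr(answer), "->", normalize_response(answer))
```

Answers with no digits return <code>None</code>, which corresponds to the responses counted as containing no numerical data.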

After normalizing, 8.29% (142/1712) of responses contained no numerical data and 21.61% (370/1712) of responses had been normalized.

Evaluation of PubMed

An evaluation of the PubMed search system was performed using online documentation[31], best practice advice[32], and direct testing of the interface using Boolean commands. In addition to the search portal, users can register with My NCBI, which provides additional functionality for saving search queries, managing results sets, and customizing filters, so this was included in the comparison. The mobile version of PubMed, PubMed Mobile[33], does not offer extended functionality, so it was not considered in the evaluation. Although beyond the scope of this study, information seeking by healthcare practitioners on hand-held devices has been shown to save time and improve the early learning of new developments.[34]

Results

Demographics

Of the respondents, 89.3% (92/103) were female. Their ages were distributed bi-modally, with peaks at 39 to 45 and 53 to 59, with a conflated average age of 46.0 (SD 10.9, N=104) (Figure 1).


The mean time for respondents' experience in their profession was 16.6 years (SD 10.0), greater than their 12.0 (SD 9.0) years of experience in the review of scientific literature (N=107, P<.01, paired t test). Most respondents worked full time (78.5%, 84/107), and the commissioning agents for their searches were predominantly internal (i.e., within the same organization [72.9%, 78/107]).

The majority of respondents were either based in the U.K. (51.4%, 55/107), the U.S. (27.1%, 29/107), or Canada (7.5%, 8/107). The remaining respondents were from Australia (2.8%, 3/107), Netherlands, Norway, and Germany (1.9% each, 2/107), as well as Denmark, Singapore, Uruguay, South Africa, Belgium, and Ireland (0.9% each, 1/107). All (100.0%, 107/107) respondents stated that the language they used most frequently for searching was English; however, 6.5% (7/107) stated that they did not use English most frequently for communication in their workplace.

The majority of respondents (81.3%, 87/107) worked in organizations that provide systematic reviews. These organizations also provided other services including reference management (72.0%, 77/107), rapid evidence reviews (63.6%, 68/107), background reviews (60.7%, 65/107), and critical appraisals (52.3%, 56/107).

Search tasks

We considered a search task in this context to be the creation of one or more strategy lines to search a specific collection of documents or databases, with task completion resulting in a set of search results that will be subject to further analysis. The output of this process is the search strategy, which is often published as part of the search documentation. This rationalization is in line with healthcare information professionals’ understanding, but the complexity of search tasks in this domain is discussed in more detail later.

The time respondents spent formulating search strategies, the time spent completing search tasks, and the number of strategy lines they used are shown in Table 1. Respondents were asked to estimate a minimum, average, and maximum for each of these measures, and the values reported here are the medians of each, with the interquartile range (IQR) shown in brackets (in the form Q1 to Q3). The final row shows the minimum, average, and maximum answers to the question: “What would you consider to be the ideal number of results returned for a typical search task?” On average, it takes 60 minutes to formulate a search strategy for a document collection, with the search task taking four hours to complete, and the final strategy consisting of 15 lines.
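As a rough illustration of how a median and an IQR of the form "Q1 to Q3" can be derived from a set of estimates, the following sketch uses Python's standard library. The input values are hypothetical, and the "inclusive" quantile convention is an assumption; the quantile method used in the study is not stated, and other conventions give slightly different quartiles.

```python
import statistics


def median_and_iqr(values):
    """Return (median, (q1, q3)) for a list of numeric estimates."""
    # quantiles(..., n=4) returns the three cut points Q1, Q2 (median), Q3.
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return q2, (q1, q3)


# Hypothetical "average minutes to formulate a strategy" answers.
minutes = [30, 45, 60, 60, 90, 120, 240]
median, (q1, q3) = median_and_iqr(minutes)
print(f"median {median} (IQR {q1} to {q3})")
```

Reporting the median with the IQR, rather than the mean with the SD, keeps a few very large estimates from dominating the summary.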

The data sources most frequently searched were MEDLINE (96.3%, 103/107), the Cochrane Library (87.9%, 94/107), and Embase (80.4%, 86/107) (Figure 2).

The majority of respondents (86.9%, 93/107) used previous search strategies or templates at least sometimes, suggesting that the value embodied in them is recognized and should be re-used wherever possible. In addition, most respondents (89.7%, 96/107) routinely share their search strategies in some form, either with colleagues in their workgroup, more broadly within their organization, or in some other capacity (e.g., with clients or as part of a published review).

Query formulation

We examined the mechanics of the query formulation process by asking respondents to indicate a level of agreement to statements using a five-point Likert scale ranging from 1 (strong disagreement) to 5 (strong agreement). The results are shown in Figure 3.

When asked which taxonomies are regularly used, 74.8% (80/107) of respondents indicated they used MeSH, 45.8% (49/107) Emtree, and 18.9% (20/107) CINAHL headings.

When asked which combination of techniques they used to create their search strategies, 44.9% (48/107) stated they used a form-based query builder, 41.1% (44/107) did so manually on paper, and 40.2% (43/107) used a text editor. Only 9.3% (10/107) used some form of visual query builder.

Evaluating search results

Respondents indicated that the ideal number of results returned for a search task would be 100 documents, yet in practice they evaluate more than this (a median of 175 documents; Table 1). The ideal number of results and the actual number of results evaluated are strongly correlated (N=66, ρ=.661 [Spearman rank correlation]). The average time to assess relevance of a single document was three minutes.
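The Spearman rank correlation reported above is the Pearson correlation computed on the ranks of the two variables, with tied values sharing their average rank. A minimal standard-library sketch follows; the data are hypothetical rather than the survey responses, and the function names are illustrative.

```python
import math


def average_ranks(values):
    """Return 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def spearman_rho(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical ideal vs. actually evaluated result counts (not survey data).
ideal = [50, 100, 100, 200, 500]
evaluated = [80, 150, 175, 300, 1000]
print(round(spearman_rho(ideal, evaluated), 3))
```

Because it depends only on ranks, the measure is not distorted by the heavily skewed counts that questions of this kind tend to produce.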

Respondents were asked to indicate on a five-point Likert scale how frequently they use search limits and restriction criteria to narrow down results. The results are shown in Figure 4.

We also examined respondents’ strategies for examining the search results. The most popular approaches were to “start with the result that looked most relevant” (54.2%, 58/107) or simply “select the first result” (23.4%, 25/107). No respondent suggested selecting the “most trustworthy source.”

Respondents were asked what types of activities[35] they typically engaged in whilst completing their search task (Figure 5). “Locating, verifying, and evaluating results” were the most common activities (see Multimedia Appendix 1 for the full description of each activity, as provided to the respondents).

Ideal functionality for searching databases

We also examined other features related to search management, organization, and history that respondents value when performing search tasks. Respondents were asked to indicate a level of agreement to a statement using a five-point Likert scale ranging from 1 (strong disagreement) to 5 (strong agreement). The results are shown in Figure 6.

Discussion

Here, we discuss the implications of the results, together with verbatim responses to the question “How could the process of creating and managing search strategies be improved for you?”, and contextualize the findings in relation to the PubMed literature search system.

Search tasks

The respondents showed they invest considerable amounts of time performing search tasks and writing search strategies. The time to search a document collection (60 minutes) indicated that their search strategies were more complex to create than most literature search queries, given that 90% of individual queries on PubMed take less than five minutes.[36] It is also longer than diagnostic web searches typical of front-line healthcare professionals (only 14% of medical practitioners reported spending more than 40 minutes on this search task).[23]

This search effort is often recycled and routinely shared, indicating a need for facilities to manage and share strategies, such as “...being able to download, share, remix, transfer and translate search strategies.” PubMed does not offer the ability to share search queries, only the results, in the form of citation Collections.

Query formulation

The results in Figure 3 suggest two observations regarding how healthcare information professionals formulate queries. Firstly, the scores suggest a willingness to adopt a wide range of search functionality to complete search tasks. This represents a marked contrast to the behavior of typical web searchers, who rarely, if ever, use any advanced search functionality.[37] Secondly, the use of Boolean logic was shown to be the most important feature, closely followed by the use of synonyms and related terms. A number of other syntactic features (notably proximity operators, truncation, and wildcarding) all scored highly, reflecting the need for fine control over search strategies. Field operators were also judged to be important, reflecting the structured nature of the document collections that are searched. Query expansion (i.e., terms are expanded to include synonyms) scored highly, underlining the key role that controlled vocabularies such as MeSH play in forming effective search strategies (75% of respondents were familiar with using MeSH headings) and pointing to a requirement for, ideally, “one universal thesaurus of medical terminology for all databases.”

PubMed offers most of the query formulation functionality described in Figure 3, either through explicit Boolean queries or through related functionality. Simple keyword queries are converted into Boolean queries by combining the keywords with the AND operator, attempting to automatically align the keywords with MeSH terms (called Automatic Term Mapping), and expanding the query to match all search phrases. Boolean operators OR and NOT are also accepted. Users can search specific fields by using square brackets after the search term (such as for searching within abstract, author, title, etc.). Spelling correction and phrase completion are offered as the user types into the textbox. Wildcards and truncation are partially supported, in that only right-truncation is allowed (e.g., child* would return results for "children" and "childhood"). Proximity operators are not supported; however, PubMed offers a list of related articles derived from a word-weighted algorithm.[38] Search queries can also be made in multiple languages (although non-English data in PubMed is currently limited to the “transliterated title” field). The only features PubMed does not appear to offer are the weighting of search terms and case sensitivity, both of which were rated as the least important functionality by survey respondents. This highlights the difference between comprehensive searches for literature, as required for a review, and more general web searches, where relevance ranking with semi-automatic methods would be considered more important.
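To make the syntax described above concrete, the sketch below assembles a Boolean query string with bracketed field tags and a right-truncated term, and emulates how right-truncation matches words. The helper names and the example topic are hypothetical, and the tags `mh` (MeSH terms) and `tiab` (title/abstract) are used on the assumption that they follow PubMed's documented field-tag conventions; the code only builds and inspects strings and does not contact PubMed.

```python
def field_term(term, field=None):
    """Quote multi-word phrases and append an optional [field] tag."""
    text = f'"{term}"' if " " in term else term
    return f"{text}[{field}]" if field else text


def any_of(*terms):
    """Combine terms with OR inside parentheses."""
    return "(" + " OR ".join(terms) + ")"


def all_of(*terms):
    """Combine terms with AND inside parentheses."""
    return "(" + " AND ".join(terms) + ")"


def right_truncation_matches(pattern, word):
    """Emulate right-truncation: 'child*' matches words starting with 'child'."""
    if pattern.endswith("*"):
        return word.lower().startswith(pattern[:-1].lower())
    return word.lower() == pattern.lower()


# Illustrative topic only: a controlled-vocabulary term OR a free-text term
# for each concept, with the two concepts combined using AND.
query = all_of(
    any_of(field_term("asthma", "mh"), field_term("asthma", "tiab")),
    any_of(field_term("child*", "tiab"), field_term("adolescent", "mh")),
)
print(query)
print(right_truncation_matches("child*", "childhood"))
```

Pairing a controlled-vocabulary term with free-text synonyms for each concept reflects the emphasis respondents placed on Boolean logic, synonyms, and field operators.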

A previous study has shown that as many as 90% of published strategies contained an error[39] and that reporting of strategies is commonly not in line with best practice.[40] A number of respondents suggested that healthcare information professionals need advanced query formulation support to help them with search tasks (Textbox 2).

PubMed allows users to build queries in stages using an HTML form to capture the query, then listing previous queries below in order for the user to make composite strategies of increasing complexity. Given that the average number of strategy lines required for a search task here was 15, this method of query construction can get increasingly complex and difficult for the user to understand and manipulate. Only 5.7% (10/176) of survey respondents reported using a visual query builder, an indication that there is very little support for healthcare information professionals in the intuitive construction of complex search queries. They also indicated a desire for advanced editing functionality, in particular:

- moving search lines up and down the history

- adding tags or descriptions to search strategies, allowing for the ability to sort by name, topic, or date

- taking notes about why they added terms, syntax, etc.
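The staged, line-by-line construction discussed above, in which later lines combine earlier ones by number, can be sketched as a small expansion routine. The `#n` reference notation and the function name are assumptions made for illustration, and the strategy shown is hypothetical; this is not how any particular database implements its search history.

```python
import re


def expand_strategy(lines):
    """Expand '#n' references so every line becomes a standalone query.

    Line numbers are 1-based, and a line may only reference earlier lines.
    """
    expanded = []
    for number, line in enumerate(lines, start=1):

        def substitute(match):
            ref = int(match.group(1))
            if not 1 <= ref < number:
                raise ValueError(f"line {number} references invalid line #{ref}")
            # Parenthesize the referenced line to preserve operator grouping.
            return "(" + expanded[ref - 1] + ")"

        expanded.append(re.sub(r"#(\d+)", substitute, line))
    return expanded


# Hypothetical five-line strategy.
strategy = [
    "asthma[mh]",
    "asthma[tiab]",
    "#1 OR #2",
    "child*[tiab]",
    "#3 AND #4",
]
for number, query in enumerate(expand_strategy(strategy), start=1):
    print(number, query)
```

Even with five lines the fully expanded final query is noticeably harder to read than the numbered form, which suggests why a 15-line strategy becomes difficult to manipulate and why moving or annotating individual lines was requested.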

It is clear that respondents commonly work across multiple platforms — in particular MEDLINE, Cochrane, and Embase — and this is in line with findings from previous research.[41] There is therefore a need for standardization and consistency between suppliers: “A service that could map search strategy between databases would save a lot of time.”

Evaluating search results

The median figure of 100 ideal search results masks the non-parametric nature of the data; the number of search results obtained may vary considerably depending on the topic and body of literature available in that domain.[41] Healthcare information professionals may adjust their expectations of sensitivity (or recall) in relation to their searches, depending on the need for coverage and inclusiveness. Clearly the ideal response from a search is more nuanced than a single figure can convey; however, respondents indicated that they find more results from their searches than they would ideally like to evaluate. This may be the result of an abundance of published research or of search parameters that are not restrictive enough to return an appropriate number of results.

The time to assess each result (three minutes) seems short when considering the length of some of the documents that will be analyzed. However, the search task is the first stage of a much longer process in which the retrieved documents are exposed to further phases of evaluation (Figure 5). In this context, the time to assess relevance may reflect the dynamics of the initial sift, which is a much smaller fraction of the overall attention given to a document.

Publication date was considered to be the most important results filtering criterion, followed by publication language (Figure 4); however, other criteria were not considered important in the restriction of results. Certain respondents mentioned other criteria they use, including publication type, study scope (e.g., human only), study design, age range, and gender. All filtering and restriction criteria mentioned here can be used to narrow down results in PubMed.

The fact that no respondent valued sorting by most trustworthy source contrasts sharply with the strategies used in another study of the healthcare profession.[23] This most likely reflects the difference between the largely curated (and to some extent implicitly trusted) databases referred to in our study (MEDLINE, Embase, and the Cochrane Library) and the relatively uncontrolled web resources used in Geschwandtner’s study.

Ideal functionality

Respondents scored all options of ideal functionality highly, indicating a general desire for advanced search functionality. Combining search queries and combining search results were rated as the most important, reflecting the current paradigm for building search queries (i.e., the line-by-line strategy building approach offered by most databases, including PubMed). The participants rated the ability to export search queries (histories) highly, reflecting their need to publish completed search strategies as part of their professional practice.

All of the functionality described in Figure 6 as being desired by healthcare information professionals is available through PubMed, either directly or by registering as a free user of My NCBI. It is therefore surprising that the verbatim responses from the respondents indicated that typical systems fall short in terms of their needs.

One reason may be that PubMed attempts to cater to a wide range of user knowledge (approximately one third of PubMed users are not domain experts[42]) and search expertise, from simple keyword queries to complex search strategies. Query log analysis has shown a difference between how users of different skills perform on PubMed[43], and PubMed attempts to accommodate all their needs in one interface. One example of this compromise is the limited support for truncation and the absence of proximity operators, which may be exactly what is required by a healthcare information professional performing a systematic review for a topic with few articles.

Limitations