Journal:Popularity and performance of bioinformatics software: The case of gene set analysis

| Full article title | Popularity and performance of bioinformatics software: The case of gene set analysis |
|---|---|
| Journal | BMC Bioinformatics |
| Author(s) | Xie, Chengshu; Jauhari, Shaurya; Mora, Antonio |
| Author affiliation(s) | Guangzhou Medical University, Guangzhou Institutes of Biomedicine and Health |
| Primary contact | Online form |
| Year published | 2021 |
| Volume and issue | 22 |
| Article # | 191 |
| DOI | 10.1186/s12859-021-04124-5 |
| ISSN | 1471-2105 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://bmcbioinformatics.biomedcentral.com/articles/10.1186/s12859-021-04124-5 |
| Download | https://bmcbioinformatics.biomedcentral.com/track/pdf/10.1186/s12859-021-04124-5.pdf (PDF) |

Abstract

Background: Gene set analysis (GSA) is arguably the method of choice for the functional interpretation of omics results. This work explores the popularity and the performance of all the GSA methodologies and software published during the 20 years since its inception. "Popularity" is estimated according to each paper's citation counts, while "performance" is based on a comprehensive evaluation of the validation strategies used by papers in the field, as well as the consolidated results from the existing benchmark studies.

Results: Regarding popularity, data is collected into an online open database ("GSARefDB") which allows browsing bibliographic and method-descriptive information from 503 GSA paper references; regarding performance, we introduce a repository of Jupyter Notebook workflows and Shiny apps for automated benchmarking of GSA methods (“GSA-BenchmarKING”). After comparing popularity versus performance, results show discrepancies between the most popular and the best performing GSA methods.

Conclusions: The above-mentioned results call our attention towards the nature of the tool selection procedures followed by researchers and raise doubts regarding the quality of the functional interpretation of biological datasets in current biomedical studies. Suggestions for the future of the functional interpretation field are made, including strategies for education and discussion of GSA tools, better validation and benchmarking practices, reproducibility, and functional re-analysis of previously reported data.

Keywords: bioinformatics, pathway analysis, gene set analysis, benchmark, GSEA

Background

Bioinformatics method and software selection is an important problem in biomedical research, due to the possible consequences of choosing the wrong methods from the myriad of computational methods and software available. Errors in software selection may include the use of outdated or suboptimal methods (or reference databases) or misunderstanding the parameters and assumptions behind the chosen methods. Such errors may affect the conclusions of the entire research project and nullify the efforts made on the rest of the experimental and computational pipeline.[1]

This paper discusses two main factors that motivate researchers to make method or software choices, that is, the popularity (defined as the perceived frequency of use of a tool among members of the community) and the performance (defined as a quantitative quality indicator measured and compared to alternative tools). This study is focused on the field of “gene set analysis” (GSA), where the popularity and performance of bioinformatics software show discrepancies, and therefore the question arises whether the biomedical sciences have been using the best available GSA methods.

GSA is arguably the most common procedure for functional interpretation of omics data, and, for the purposes of this paper, we define it as the comparison of a query gene set (a list or a rank of differentially expressed genes, for example) to a reference database, using a particular statistical method, in order to interpret it as a rank of significant pathways, functionally related gene sets, or ontology terms. Such definition includes the categories that have been traditionally called "gene set analysis," "pathway analysis," "ontology analysis," and "enrichment analysis." All GSA methods have a common goal, which is the interpretation of biomolecular data in terms of annotated gene sets, while they differ depending on characteristics of the computational approach (for more details, see the "Methods" section, as well as Fig. 1 of Mora[2]).

GSA has reached 20 years of existence since the original paper of Tavazoie et al.[3], and many statistical methods and software tools have been developed during this time. A popular review paper listed 68 GSA tools[4], while a second review reported an additional 33 tools[5], and a third paper listed 22 tools.[6] We have built the most comprehensive list of references to date (503 papers), and we have quantified each paper’s influence according to its current number of citations (see Additional file 1 and Mora's GSARefDB[7]). The most common GSA methods include Over-Representation Analysis (ORA), such as that found with DAVID[8]; Functional Class Scoring (FCS), such as that found with GSEA[9]; and Pathway-Topology-based (PT) methods, such as that found with SPIA.[10] All have been extensively reviewed. To learn more about them, the reader may consult any of the 62 published reviews documented in Additional file 1. We have also recently reviewed other types of GSA methods.[2]

The first part of the analysis is a study on GSA method and software popularity based on a comprehensive database of 503 papers for all methods, tools, platforms, reviews, and benchmarks of the GSA field, collectively cited 134,222 times between 1999 and 2019, including their popularity indicators and other relevant characteristics. The second part is a study on performance based on the validation procedures reported in the papers, introducing new methods together with all the existing independent benchmark studies in the above-mentioned database. Instead of recommending one single GSA method, we focus on discussing better benchmarking practices and generating benchmarking tools that follow such practices. Together, both parts of the study allow us to compare the popularity and performance of GSA tools but also to explore possible explanations for the popularity phenomenon and the problems that limit the execution and adoption of independent performance studies. In the end, some practices are suggested to guarantee that bioinformatics software selection is guided by the most appropriate metrics.

Results

Popularity

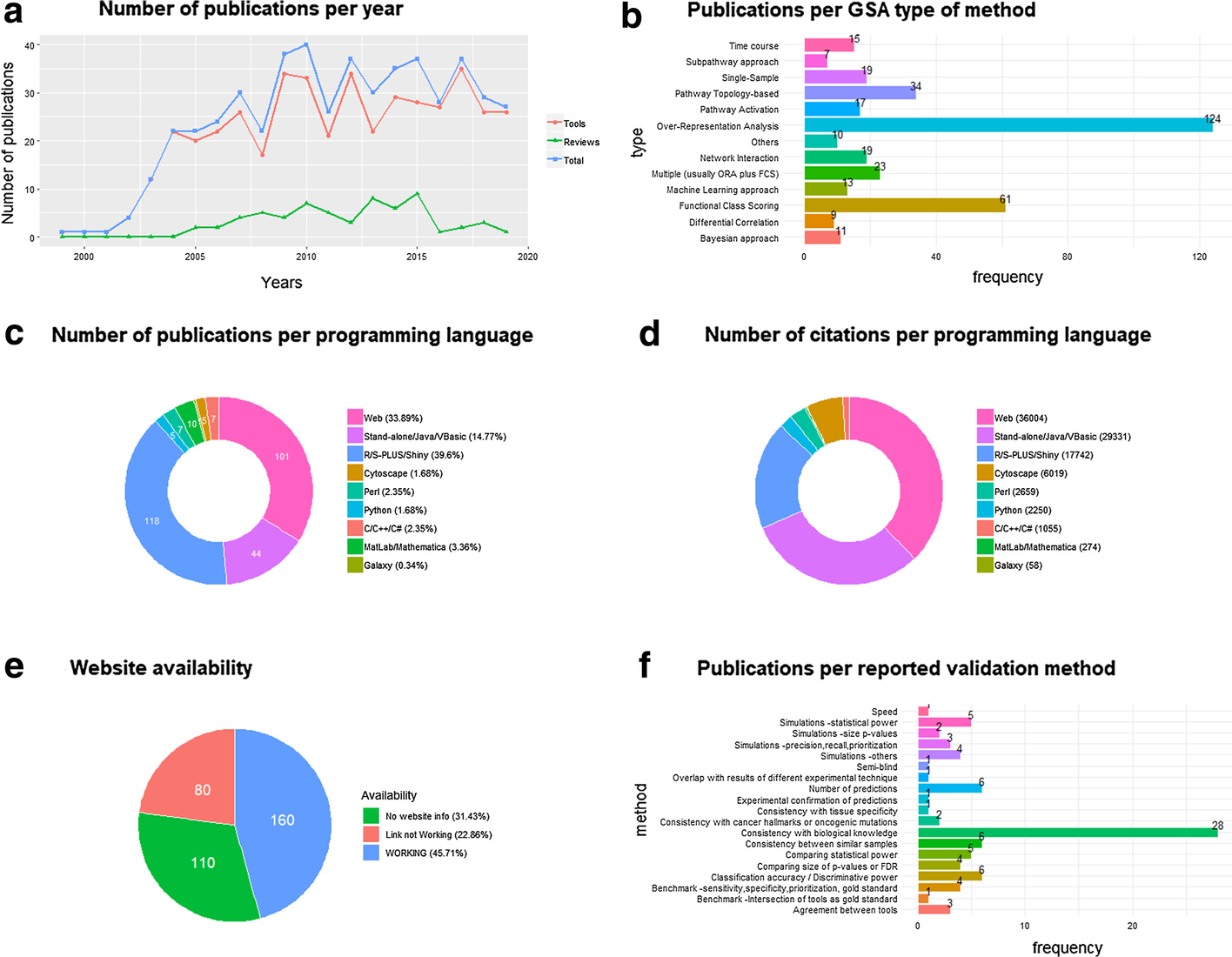

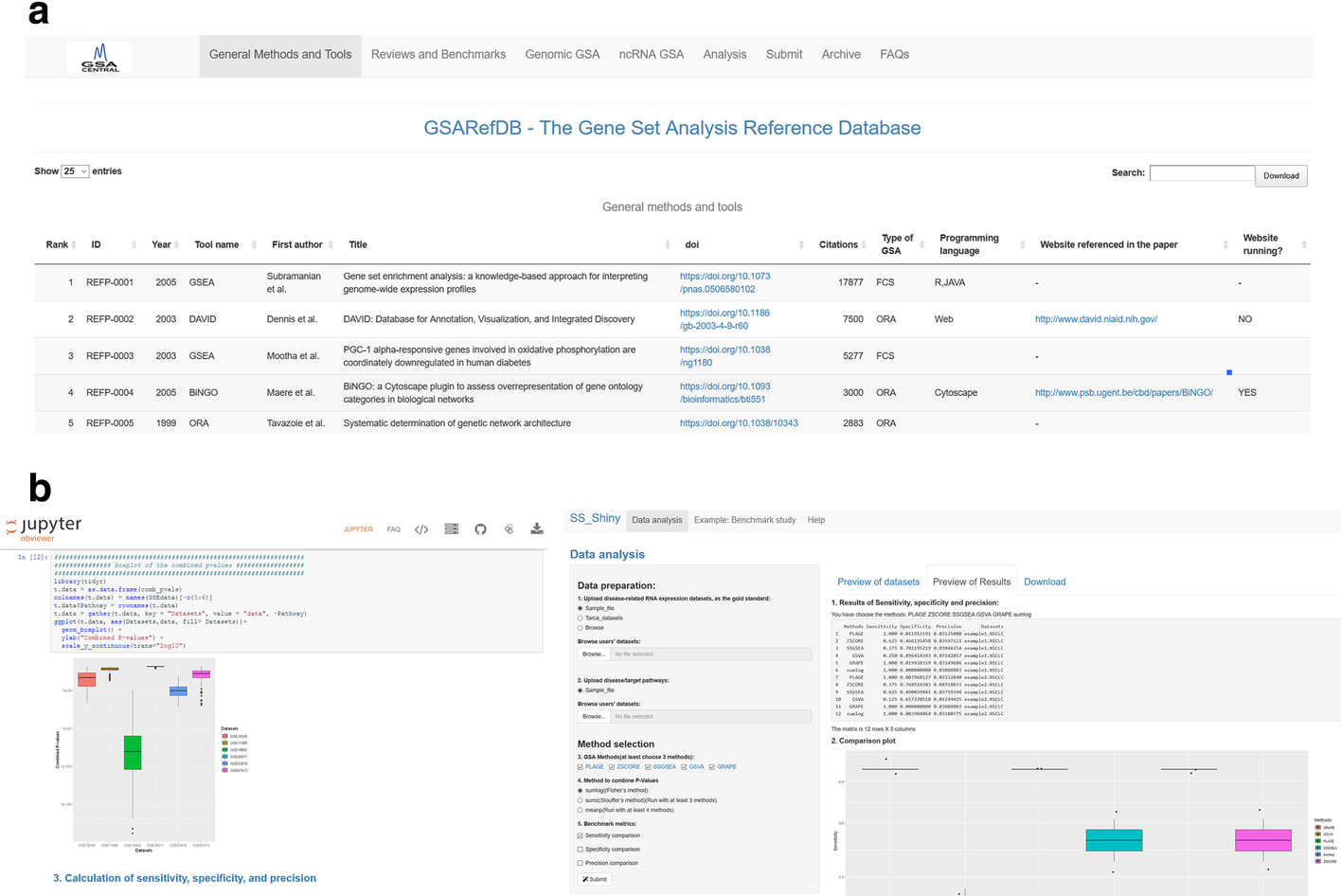

The number of paper citations has been used as a simple (yet imperfect) measure of a GSA method’s popularity. We collected 350 references to GSA papers of methods, software or platforms (Additional file 1: Tab 1), as well as 91 references to GSA papers for non-mRNA omics tools (Additional file 1: Tabs 3, 4), and 62 GSA reviews or benchmark studies (Additional file 1: Tabs 2, 3, 4), which have been organized into a manually curated open database (GSARefDB). GSARefDB can be either downloaded or accessed through a Shiny interface. Figure 1 summarizes some relevant information from the database (GSARefDB v.1.0).


The citation count shows that the most influential GSA method in history is Gene Set Enrichment Analysis (GSEA), published in 2003, with more than twice as many citations as its follower, the ORA platform called DAVID (17,877 versus 7,500 citations). In general, the database shows that the field contains a few extremely popular papers and many papers with low popularity. Figure 1b shows that the list of tools is mainly composed of ORA and FCS methods, while the newer and lesser known PT and Network Interaction (NI) methods are less common (and generally found at the bottom of the popularity rank).

It could be hypothesized that the popularity of a GSA tool does not always depend on being the best for that particular project, and it could be related to variables such as its user-friendliness instead. The database allows us to compute citations-per-programming-language, which we use as an approximation to friendliness. Figure 1c shows that the majority of the GSA papers correspond to R tools, but, in spite of that, Fig. 1d shows that most of the citations correspond to web platforms followed by stand-alone applications, which are friendlier to users. It is also worth mentioning that the last column of the database shows a large number of tools that are no longer maintained, with broken web links to tools or databases, which makes their evaluation impossible. This phenomenon is a common bioinformatics problem that has also been reported elsewhere.[11] Figure 1e shows that one-third of the reported links in GSA papers are now broken.

Besides ranking papers according to their all-time popularity, a current-popularity rank was also built (Additional file 1: Tab 5). To accomplish that, a version of the database generated in May 2018 was compared to a version of the database generated in April 2019. The "current popularity" rank revealed the same trends as the "overall" rank. GSEA is still, by far, the most popular method. The other tools that are being currently cited are clusterProfiler[12], Enrichr[13], GOseq[14], DAVID[8], and ClueGO[15], followed by GOrilla, KOBAS, BiNGO, ToppGene, GSVA, WEGO, agriGO, and WebGestalt. ORA and FCS methods are still the most popular ones, with 3534 combined citations for all ORA methods and 2185 citations for all FCS methods, while PT and NI methods have 111 and 50 combined citations respectively. In contrast, single-sample methods have 278 combined citations, while time-course methods have 67. Regarding reviews, an extremely popular paper from 2009[4] is still currently the most popular, even though it doesn’t take into account the achievements of the last 10 years.
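The snapshot comparison described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `current_popularity`, the tool names, and all citation counts below are hypothetical and are not taken from GSARefDB.

```python
def current_popularity(old_counts, new_counts):
    """Rank tools by citations gained between two database snapshots."""
    gains = {}
    for tool, new in new_counts.items():
        # Tools absent from the older snapshot are assumed to start at 0
        old = old_counts.get(tool, 0)
        gains[tool] = new - old
    # Sort by gain (descending); ties are broken alphabetically
    return sorted(gains.items(), key=lambda kv: (-kv[1], kv[0]))


# Made-up citation counts, for illustration only
snapshot_2018 = {"ToolA": 1000, "ToolB": 400, "ToolC": 50}
snapshot_2019 = {"ToolA": 1150, "ToolB": 700, "ToolC": 60, "ToolD": 20}

ranking = current_popularity(snapshot_2018, snapshot_2019)
for tool, gained in ranking:
    print(tool, gained)
```

In this toy case the tool with the most all-time citations (ToolA) is not the one gaining citations fastest (ToolB), which is the kind of difference a "current popularity" rank is meant to expose.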

Performance

The subject of validation of bioinformatics software deserves more attention.[16] A review of the scientific validation approaches followed by the top 153 GSA tool papers in the database (Additional file 1: Tab 6) found multiple validation strategies that were classified into 19 categories. 61 out of the 153 papers include a validation procedure, and the most commonly found validation strategy is “Consistency with biological knowledge,” defined as the fact that our method’s results explain the knowledge in the field better than the rival methods (which is commonly accomplished through a literature search). Other common strategies (though less common) are the comparisons of the number of hits, classification accuracy, and consistency of results between similar samples. Important strategies, such as comparing statistical power, benchmark studies, and simulations, are less used. The least used strategies include experimental confirmation of predictions and semi-blind procedures where a person collects samples and another person applies the tool to guess tissue or condition. Our results have been summarized in Fig. 1f (above) and Additional file 1: Tab 6. We can see that the frequency of use of the above-mentioned validation strategies is inversely proportional to their reliability. For example, commonly used strategies such as “consistency with biological knowledge” can be subjective, and comparing the number of hits of our method with other methods on a Venn diagram[17] is a measure of agreement, not truth. On the other hand, the least used strategies, such as experimental confirmation or benchmark and simulation studies, are the better alternatives.

The next step of our performance study was a review of all the independent benchmark and simulation studies existing in the GSA field, whose references are collected in Additional file 1: Tab 2. Table 1 summarizes 10 benchmark studies of GSA methods, with different sizes, scopes, and method recommendations. A detailed description and discussion of each of the benchmarking studies can be found in Additional file 2. The sizes of all benchmarking studies are small when compared to the number of existing methods that we have mentioned before, while their lists of best performing methods show little overlap. Only a few methods are mentioned as best performers in more than one study, including ORA methods (such as hypergeometric)[3], FCS methods (such as PADOG)[18], SS methods (such as PLAGE)[19], and PT methods (such as SPIA/ROntoTools).[20]


It is crucial to notice that the previous studies covered just a small fraction of the entire universe of GSA methods. There is also little overlap between the sets of methods included in different studies; nevertheless, we can still find inconsistencies between results, such as the GSVA, Pathifier, and hypergeometric methods, which are each reported as a best performer in some benchmarks and as a poor performer in others. From all the above-mentioned high-performance tools, only “ORA” appears in the top 20 currently most popular tools (Additional file 1: Tab 5), suggesting that there is a divorce between popularity and performance (see also Additional file 2: Table 2).

Popularity versus performance

In general terms, it is evident that performance studies are still few, small, inconsistent, and dependent on the quality of the benchmarks; however, they tend to recommend tools different to the popular and friendly ones.

GSEA is one of the most important landmarks in GSA history and the most striking example of the contradictions between popularity and performance metrics of GSA tools. With one exception, none of the recent benchmarks under analysis has reported GSEA to be the best performing method; however, GSEA is still both the all-time and the currently most used tool. Besides that, numerous methods (most of them not included in the previous benchmarks) report that they specifically outperform GSEA in at least one of many possible ways; such methods are highlighted in Additional file 1: Tab 7 with the tag "COMPARED". In addition, there have been many developments of the GSEA method itself, for example, alternative functions to score the gene set, alternatives to the permutation step for finding p-values, or the use of GSEA as part of an extended method (see methods in Additional file 1: Tab 7, with the tag "PART OF METHOD"), which reportedly outperform the original GSEA. We have identified a total of 79 papers in those categories (Additional file 1: Tab 7). However, in spite of that, GSEA's overwhelming popularity compared to any other method would suggest otherwise. We can also verify that, with the exception of ORA, most of the above-mentioned high-performing methods tend to occupy lower places in the current popularity ranking (Additional file 2: Table 2).

It is hard to estimate how many conclusions from how many articles should be rectified if we rigorously apply our current knowledge on GSA and use the best performing methods in every paper that ever used GSA. However, taking into account that our database (Additional file 1) registers 503 GSA software and tool papers that have been collectively cited 134,222 times, there is a valid concern regarding the need to pay more attention to both the method performance studies and the performance-based tool selection in GSA. Otherwise, the last step of the omics data analysis process may undermine the efforts on the rest of the pipeline.

Performance tools

The previous observation regarding the difference between the most popular and the best performing GSA methods could be incorrect, given that existing benchmarks have followed different methodologies and the proper way to benchmark GSA methods is a discussion in itself. Therefore, we have also reviewed and discussed the existing benchmarking and simulation strategies, in order to extract a few ideas regarding good benchmark practices.

Rigorous benchmark studies are not a straightforward task, given that there is no accepted gold standard to be used in a comparison of GSA tools.[25] Reviewed here are three strategies to build such a gold standard. The first strategy is to apply several different enrichment tools to the same gene sets and use the intersection of the results as a gold standard. One example of this is EnrichNet[31], which obtains a “set of high confidence benchmark pathways” as the intersection between the top 100 ranks generated by the SAM-GS and GAGE methods. Such a procedure is questionable, as the chosen methods are far from being considered the best (as seen before), and the procedure is more related to agreement than truth. The second strategy is the use of disease datasets clearly associated with one pathway as a gold standard. For example, Tarca et al.[22] compiled 42 microarray datasets with both healthy and disease-associated samples, where the disease was associated with a KEGG pathway (and, therefore, such “target pathway” should be found significant).[22] The third strategy is to leverage knowledge on gene regulation effects. Some authors have used gene sets built from the known targets of specific transcription factors and miRNAs, and then tried to predict the changes after over-expression or deletion of such regulators[21], while some others have used datasets with mouse knockouts (KOs): pathways containing the KO gene were considered as target pathways, while the others were considered as negatives.[27] Geistlinger et al.[30] have recently introduced a modification of this approach in which they do not look only at “target pathways” matching the original diseases but instead create a “gene set relevance ranking” for each disease. To build such rankings, the authors used MalaCards gene scores for disease relevance, which are based on both experimental and bibliometric evidence; then, they used the gene scores to build combined gene set relevance scores for all GO and KEGG terms (using the GeneAnalytics tool). As a result, instead of benchmarking against some “target pathways”, the benchmark is done against a “pathway relevance ranking” per disease. Table 2 assesses different performance measurement approaches according to their objectivity, reproducibility, and scalability.
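Two of the gold-standard constructions discussed above are simple set operations, and a minimal sketch may make them concrete. The function names (`consensus_gold_standard`, `knockout_targets`), the pathway labels, and the gene names are hypothetical; this is not the code used by EnrichNet or by any of the cited benchmarks.

```python
def consensus_gold_standard(rankings, top_n=100):
    """First strategy: pathways found in the top_n of every method's ranking."""
    top_sets = [set(ranking[:top_n]) for ranking in rankings]
    return set.intersection(*top_sets)


def knockout_targets(gene_sets, ko_gene):
    """Third strategy (KO variant): pathways containing the KO gene are positives."""
    positives = {name for name, genes in gene_sets.items() if ko_gene in genes}
    negatives = set(gene_sets) - positives
    return positives, negatives


# Toy rankings from two hypothetical methods (best pathway first)
method_a = ["P1", "P2", "P3", "P4", "P5"]
method_b = ["P3", "P1", "P6", "P2", "P7"]
benchmark = consensus_gold_standard([method_a, method_b], top_n=3)

# Toy gene sets and a hypothetical knocked-out gene
gene_sets = {"P1": {"g1", "g2"}, "P2": {"g2", "g3"}, "P3": {"g4"}}
positives, negatives = knockout_targets(gene_sets, "g2")
print(sorted(benchmark), sorted(positives), sorted(negatives))
```

Note that the consensus set only records where the two methods agree; if both methods share the same bias, the resulting "gold standard" inherits it.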


A second issue is the selection of the benchmark metrics that quantitatively determine who is the best performer. Well-known metrics such as sensitivity, specificity, precision, or the area under the ROC curve, have been traditionally used. Tarca et al.[22] introduced the use of sensitivity, specificity, and “prioritization,” together with False Positive Rate (FPR), where prioritization is a concept related to the rank of the target pathways for a given method. Zyla et al.[29] have recently extended Tarca's work and recommended using a set of five different metrics: Sensitivity, False positive rate (FPR), Prioritization, Computational time, and Reproducibility. In their approach, reproducibility assigned a high score to methods that showed similar results for different datasets coming from the same condition/disease and the same technical platform, but different authors/labs.[29] The chosen metrics are fundamental because no method is the best under all metrics, and every user should start by selecting a method according to the goals of their study, which may need a very sensitive method, a very specific method, or any other of the above-mentioned properties. As a consequence, benchmark studies must be clear regarding the metrics under which they are ranking the method performance[22], and their set of metrics should at least include the previously mentioned ones. Also, it makes no sense to declare success because a new method has better sensitivity than other methods that are known for a poor sensitivity; therefore, it is a logical consequence that any new methods must compare their sensitivity to sensitive methods, their specificity to specific methods, and so on.
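As a rough illustration of how three of these metrics can be computed against a set of target pathways, consider the sketch below. It makes several assumptions that are not taken from the cited papers: the function name `benchmark_metrics` is hypothetical, significance is decided with a fixed p-value threshold `alpha`, and prioritization is taken to be the mean relative rank of the target pathways (lower is better). The cited benchmarks may define these quantities differently.

```python
def benchmark_metrics(p_values, targets, alpha=0.05):
    """Sensitivity, FPR, and a prioritization score for one method on one dataset."""
    all_pathways = set(p_values)
    targets = set(targets) & all_pathways
    non_targets = all_pathways - targets
    if not targets or not non_targets:
        raise ValueError("need at least one target and one non-target pathway")

    significant = {p for p, value in p_values.items() if value < alpha}
    sensitivity = len(significant & targets) / len(targets)
    fpr = len(significant & non_targets) / len(non_targets)

    # Rank pathways by p-value (most significant first)
    ranked = sorted(p_values, key=p_values.get)
    relative_ranks = [(ranked.index(t) + 1) / len(ranked) for t in targets]
    prioritization = sum(relative_ranks) / len(relative_ranks)

    return {"sensitivity": sensitivity, "fpr": fpr, "prioritization": prioritization}


# Toy p-values for five pathways; T1 and T2 are the assumed target pathways
p_values = {"T1": 0.001, "N1": 0.01, "T2": 0.2, "N2": 0.5, "N3": 0.8}
metrics = benchmark_metrics(p_values, targets={"T1", "T2"})
print(metrics)
```

Reporting the three numbers side by side, rather than a single score, reflects the point made above that no method is the best under all metrics.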

A third issue is that validation procedures should not allow the authors to subjectively choose the methods that the authors want to compare to their new method, as it would be possible for the authors to choose those methods that they can outperform. One alternative to that is using well-established, independent, and comprehensive benchmark studies as a reference; then, when a new method appears, validation should be done by comparison to the top methods from such independent benchmarks. This practice is not common; as an exception, the authors of LEGO[32] explicitly use the top five methods in Tarca et al.'s benchmark.[22]

As a final thought, ensembles of methods have been suggested to give better results than any of the single methods.[33] That implies that benchmark studies should not be limited to single tools but include comparisons to ensembles of tools as well. This approach has been followed by at least one benchmark study.[21]

The previous analysis makes us think that future GSA benchmarks should include the most recent developments on benchmark theory, as well as be performed on more GSA methods, in order to extract more meaningful conclusions. In order to do this, we have also created a benchmarking platform, “GSA-BenchmarKING,” which is a repository of apps/workflows/pipelines that follow the above-mentioned good benchmarking practices and allow benchmarking of GSA software in an easy and automated way. Currently, GSA-BenchmarKING contains Jupyter Notebooks with full workflows for benchmarking GSA methods, and Shiny apps that allow benchmarking with the click of a few buttons. The initial benchmarking tools added to the repository were created by our students as an example, following the previous guidelines, but they are open software and can be continuously improved. The initial tools are focused on two types of methods: single-sample GSA (such as GSVA, Pathifier, SSGSEA, PLAGE, ZSCORE) and genomic-region GSA (such as GREAT, ChIP-Enrich, BroadEnrich, Enrichr, Seq2Pathways). All apps allow the user to define different gold standard datasets (or use ours), select the GSA methods to compare, and select the comparison metrics to plot. In addition, it is possible to keep adding more GSA methods to the app with the help of the community, as each app includes instructions for programmers interested in adding new methods. Given the large number of GSA methods, more benchmarking tools are needed and welcomed.

Discussion

The GSARefDB has been used to make some initial data exploration on the relationship between popularity and performance of GSA tools. Besides the observations highlighted in this article, such a database can also be the source for more in-depth research. For example, some limitations of our approach include (i) that some papers describe more than one method or platform, and (ii) that some methods are only cited for comparison purposes. We observe that such problems are the exception and not the rule, but future studies might want to take them into account and GSARefDB can still be used as a data source for it.

In general terms, it is evident that performance studies are still few, small, inconsistent, and dependent on the quality of the benchmarks; however, they tend to recommend tools different to the popular and friendly ones. Among the possible reasons for the discrepancies between popularity and performance, it has been suggested that software selection may not be entirely related to performance but to factors such as a preference for user-friendly platforms or user-friendly concepts or plots (for example, GSEA’s “enrichment plot”). Other possible reasons are that the objective evaluation of the performance of the different GSA methods is complicated and time-consuming, and that new methods need more time to be accepted. In network analysis, popularity can be explained by the “rich-gets-richer” (popular-gets-more-popular) effect. Using concepts from the “consumer behavior” field, software selection can be studied as the choice of a popular brand, driven by variables such as confidence in experience (the respect for the researcher/institution associated with the software), social acceptance and personal image (following the software that everyone else is using), or consumer loyalty (after some time, we are attached to our software and not interested in changing).[34] In a recent book, Barabasi[35] suggested that popularity and quality usually go together in situations where performance is clearly measurable. Otherwise, popularity can't be equated to quality. Our study agrees with such an idea. We have found that the most popular GSA software is different from the best performing GSA software, and that thorough performance evaluation is still a pending assignment for bioinformaticians.

However, in the specific case of GSA, there is a complementary explanatory hypothesis: Besides all the progress in GSA theory leading to better-performing methods, at the end of the pipeline, the users usually extract the evidence that they consider relevant from the resulting rank of gene sets, choosing the pathways or GO terms of their interest and ignoring the rest of the ranking, and, therefore, any differences between methods regarding lower p-values, prioritization order, and so on, become less important. It is said that in the step of gene set selection from the final gene set ranking, researchers bring "context" to the results but, this way, their subjectivity may be projected to the study. One way out of this is to stimulate the research on “context-based GSA.” For example, a recent effort called contextTRAP[36] combines an impact score (from pathway analysis) with a context score (from text mining information that supports that the pathway is relevant to the context of the experiment). Bayesian approximations to GSA using text mining data as context need to be more developed, as any other method that studies the final gene set ranking as a whole.

Conclusions

Given that popular methods are not necessarily the best, bioinformatics software users should not only be guided by popularity but mainly by performance studies. However, performance studies, in turn, must be guided by the general guidelines that we have discussed, that is, researchers should only follow the most convincing benchmark procedures. Such strict recommendations are problematic because performance studies are few, low-coverage, and of variable quality; therefore, we need more open tools to dynamically review popularity and performance of bioinformatics software, such as those introduced here.

Based on the previous results and discussion, we argue that the functional interpretation field would benefit from:

1. having more information and discussion regarding the nature and scope of the existing functional interpretation methods of omics data, as well as more teaching of new and sophisticated methods in bioinformatics courses, more guidelines for selection of a tool, and more popularization of the functional interpretation shortcomings. Also, deeper discussions of the selection of GSA methods for all biomedical papers should be requested.

2. the permanent evaluation of GSA methods, including better gold standards, an increasing number of comprehensive comparison studies, and better benchmarking practices. The recent creation of a special edition and a collection specialized on benchmarks by two prestigious computational biology journals are welcome steps in that direction.[37][38]

3. paying attention to reproducibility and offering open code in code-sharing platforms (such as GitHub), containers with the specific software and library versions used in their work (such as Docker), notebooks (such as RStudio and Jupyter) including scripts with a detailed explanation of their methods, and other strategies to allow reproducibility.

4. the creation of a culture of functional re-analysis of existing data using new GSA methods, as well as the computational tools to functionally re-analyze existing omics datasets in a streamlined manner.

5. more rigorous validation procedures for GSA tools. Also, bioinformaticians should get as much training on scientific validation methods and tools as they get on using and building bioinformatics tools.

Proper tool selection is fundamental for generating high-quality results in all scientific fields. This paper suggests that tool performance and tool selection studies, via the popularity-performance evaluation based on an exhaustive reference database, is a methodology that should be followed up, to keep track of the evolution of the tool selection issues in a scientific field. We have also introduced examples of popularity- and performance-measuring software that could help make such studies easier. The reader is here invited to keep following our work for the GSA field, including GSARefDB and GSA-BenchmarKING.

Methods

Definitions

The concepts involved in this study have been defined in several different ways in the literature. For example, the field under study has been called "pathway analysis,"[5] "enrichment analysis,"[23] "gene set analysis,"[2] "functional enrichment analysis,"[12] “gene-annotation enrichment analysis,”[4] and other terms, by different authors. At the same time, the term "gene set analysis" has been used to describe the entire field[2], or just the group of ORA and FCS methods (in opposition to methods including pathway or network topology)[23], or even one specific tool.[39] Finally, the term “pathway analysis” has also been used to both describe the entire field[5], or just the group of methods that include pathway topology.[23] For this reason, we have added the following summary of the definitions used in this study for the above-mentioned and other relevant terms.

benchmark study: A systematic comparison between computational methods, in which all of them are applied to a gold standard dataset and the success of their gene set predictions is summarized in terms of quantitative metrics (such as sensitivity, specificity, and others).

functional class scoring (FCS): A subset of GSA methods in which the values of a gene-level statistic for all genes in the experiment are aggregated into a gene-set-level statistic[5], and gene set enrichment is computed in terms of the significance of such gene-set-level statistic. FCS methods start from a quantitative ranking of the gene-level statistic for all genes under analysis (in contrast to ORA, which only uses a list of differentially expressed genes). Some popular FCS methods find if the relative position of a gene set in the ranking of all genes is shifted to the top or the bottom of the ranking. For example, the Wilcoxon rank-sum (WRS) test compares the distribution of ranks of the genes in a gene set to the distribution of ranks of the genes in the complement to the gene set, while the Kolmogorov-Smirnov (KS) test compares the ranks of genes in a gene set to a uniform distribution.[23]
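To make the rank-based idea concrete, the following is a minimal sketch of a Wilcoxon rank-sum (WRS) style gene set test written with the standard library only. The function names and the toy data are hypothetical. The p-value uses a normal approximation without tie or continuity correction, which is an assumption made for brevity; a real analysis would normally rely on an established statistics library.

```python
import math


def average_ranks(values):
    """Rank values from 1 to n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def wrs_gene_set_test(gene_stats, gene_set):
    """Compare ranks of genes inside the set with ranks of genes outside it."""
    genes = list(gene_stats)
    ranks = average_ranks([gene_stats[g] for g in genes])
    in_set = [r for g, r in zip(genes, ranks) if g in gene_set]
    n1, n2 = len(in_set), len(genes) - len(in_set)
    if n1 == 0 or n2 == 0:
        raise ValueError("gene set and its complement must both be non-empty")
    u = sum(in_set) - n1 * (n1 + 1) / 2            # Mann-Whitney U statistic
    mean_u = n1 * n2 / 2
    sd_u = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mean_u) / sd_u
    p = math.erfc(abs(z) / math.sqrt(2))           # two-sided, normal approximation
    return u, z, p


# Toy gene-level statistics: g1..g10 with increasing scores
gene_stats = {f"g{i}": float(i) for i in range(1, 11)}
u, z, p = wrs_gene_set_test(gene_stats, gene_set={"g8", "g9", "g10"})
print(u, round(z, 3), round(p, 4))
```

Here the three genes in the set hold the three highest ranks, so the statistic is shifted toward the top of the ranking and the z-score is positive.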

gene set analysis (GSA): GSA methods have been defined as a group of "methods that aim to identify the pathways that are significantly impacted in a condition under study"[6] or as "tests which aim to detect pathways significantly enriched between two experimental conditions."[23] More specifically, GSA is an annotation-based approach that statistically compares experimental results to an annotated database in order to transform gene-level results into gene-set-level results. For example, a query gene set (a list of differentially expressed genes, or a rank of all genes' fold changes) is mapped to a gene set reference database, using a particular statistical method, in order to explain the experimental results as a rank of significantly impacted pathways, functionally related gene sets, or ontology terms.

gold standard: A "perfect gold standard" would be an error-free dataset that can be used as a synonym for truth (in our case, an omics dataset associated to a true ranking of pathways); however, in practice, we are limited to use "imperfect" or "alloyed gold standards," which are datasets confidently linked to the truth, but not necessarily datasets lacking error.[40]

network interaction (NI): A subset of GSA methods that not only includes the given gene sets but also the gene products that interact with the members of such gene sets when located on top of an interaction or functional annotation network.

over-representation analysis (ORA): A subset of GSA methods based on comparing a list of query genes (for example, up- or down-regulated genes) to a list of genes in a class or gene set, using a statistical test that detects over-representation. ORA "statistically evaluates the fraction of genes in a particular pathway found among the set of genes showing changes in expression."[5]
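One common choice of over-representation test is the upper tail of the hypergeometric distribution (equivalent to a one-sided Fisher's exact test). The following Python sketch illustrates that calculation under stated assumptions: the function name `ora_p_value`, the choice of background, and the toy gene lists are hypothetical, and individual ORA tools may use other tests or corrections.

```python
from math import comb


def ora_p_value(query_genes, gene_set, background):
    """Upper-tail hypergeometric p-value: P(overlap >= observed overlap).

    All genes are restricted to the background (the assumed gene universe).
    Returns (observed overlap, p-value).
    """
    background = set(background)
    query = set(query_genes) & background
    members = set(gene_set) & background
    n_bg, n_set, n_query = len(background), len(members), len(query)
    k = len(query & members)
    total = comb(n_bg, n_query)
    upper = min(n_set, n_query)
    p = sum(
        comb(n_set, i) * comb(n_bg - n_set, n_query - i)
        for i in range(k, upper + 1)
    ) / total
    return k, p


# Toy data (hypothetical): 20 background genes, a 5-gene set, a 4-gene query.
background = [f"g{i}" for i in range(20)]
gene_set = ["g0", "g1", "g2", "g3", "g4"]
query = ["g0", "g1", "g2", "g10"]          # three of four query genes are in the set

k, p = ora_p_value(query, gene_set, background)
k_none, p_none = ora_p_value(["g10", "g11"], gene_set, background)
```

Here the query shares three genes with the set, giving a p-value of 155/4845 (about 0.032), while a query with no overlap gives a p-value of 1.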

pathway-topology-based (PT): A subset of GSA methods that weighs enrichment scores according to the position of a gene in a pathway. Only applies to pathway data and not to other types of gene sets.

performance: The value of a quantitative property (when compared to alternative methods or tools) that measures the agreement between the method's output and either empirical data, simulated data, or the output of another method.

popularity: The frequency of use of a method or tool among members of a community.

simulation study: A systematic comparison of computational methods based on building artificial datasets that possess the properties that we specify for them.

Construction of the database

The Gene Set Analysis Reference Database (GSARefDB) was built from the following sources:

1. Google and PubMed searches of keywords such as: "pathway analysis," "gene set analysis," and "functional enrichment" (approx. 10% of the records)

2. Cross-references from all the collected papers and reviews (approx. 50% of the records)

3. Email alerts received from NCBI and selected journals (approx. 40% of the records)

The information was classified into: (i) generic methods/software/platforms, usually dealing with mRNA datasets; (ii) reviews/benchmark studies; (iii) genomic GSA, which includes GSA applied to enrichment of genomic regions (such as those coming from ChIP-seq, SNP, and methylation experiments); and (iv) ncRNA GSA, which includes methods dealing with miRNA and lncRNA datasets. All information in the database was manually extracted from the papers. Numbers of citations were extracted from Google Scholar. Only methods that associate omics data with annotated gene sets were included (see all types of included methods in the prior Fig. 1b). Bioinformatics methods that associate omics data with newly generated modules on a biological network were not included. GSARefDB was built both as an Excel sheet and as a Shiny application (Figure 2a).

Descriptive statistics

Plots of summary statistics were generated using the “ggplot2” R package. The R code is open and can be found here.

Popularity rankings

Popularity rankings in GSARefDB are constructed on a per-paper basis, not per method or per tool. In order to build popularity rankings of methods or tools that are presented in multiple papers, we use the citation count of the most cited paper for that tool.
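The per-tool aggregation rule described above can be sketched in a few lines of Python. This is a minimal illustration only: the function name `rank_tools_by_popularity`, the record layout, and the tool names and citation counts are invented for the example and do not come from GSARefDB.

```python
def rank_tools_by_popularity(papers):
    """Rank tools by the citation count of their most cited paper.

    `papers` is an iterable of (tool, paper_title, citations) tuples.
    """
    best = {}
    for tool, _title, citations in papers:
        best[tool] = max(citations, best.get(tool, 0))
    return sorted(best.items(), key=lambda item: item[1], reverse=True)


# Hypothetical records; the counts are invented for illustration only.
papers = [
    ("ToolA", "ToolA: original method", 1200),
    ("ToolA", "ToolA: web server update", 300),
    ("ToolB", "ToolB: first release", 450),
    ("ToolB", "ToolB: version 2", 900),
    ("ToolC", "ToolC", 50),
]
ranking = rank_tools_by_popularity(papers)
```

Taking the maximum rather than the sum keeps a tool with many lightly cited update papers from outranking a tool with a single highly cited paper.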

Performance study

The scientific validation approaches followed by the top 153 GSA tool papers in our database were manually reviewed (see Additional file 1: Tab 6). Validation, like performance, was defined as the success of a method in obtaining better scores than rival methods for a specific quantitative property that measures the agreement between the method's output and either empirical data, simulated data, or the output of another method. Our definition of validation does not include:

(i) examples of application of the method followed by highlighting the reasonableness of the results (without comparing to other methods);

(ii) arguments (usually statistical) stating that new assumptions are better than old ones, without any comparison to empirical or properly simulated data; or

(iii) comparisons of capabilities between old and new software (such as implementing other algorithms or databases).

Detailed procedures followed during the benchmark and simulation studies are explained in Additional file 2.

Construction of performance-measuring software

The “GSA-BenchmarKING” repository (see Figure 2b and the GSA BenchmarKING page) was created to store and share different tools to measure GSA method performance. For a benchmarking tool to be accepted into the repository, it should: (i) be open software (ideally, a Jupyter Notebook, RStudio R Notebook, or Shiny app); (ii) have a clear reason for selecting the GSA methods included (for example, because all of them belong to the same type of methods); (iii) include both a gold standard dataset and options to upload user-selected gold standard datasets; (iv) include either a list of target pathways linked to the gold standard dataset or disease relevance scores per pathway for the diseases related to the gold standard; (v) give the user the option of selecting different benchmarking metrics (as a minimum, precision/sensitivity, prioritization, and specificity/FPR); (vi) give the possibility of selecting ensemble results; and (vii) give the possibility of adding new GSA methods to the code in the future.
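To make the metrics named in criterion (v) concrete, the following Python sketch scores one GSA result against a list of target pathways. The definitions used here are simplifying assumptions made for illustration, not necessarily those implemented in the repository's tools: sensitivity is the fraction of target pathways reported as significant, the false positive rate (FPR) is the fraction of non-target pathways reported as significant, and prioritization is the median rank of the target pathways expressed as a percentage of all pathways tested. The function name `benchmark_metrics` and the toy values are hypothetical.

```python
from statistics import median


def benchmark_metrics(p_values, targets, alpha=0.05):
    """Score one GSA result against a list of target pathways.

    `p_values` maps pathway name -> p-value reported by the method.
    `targets` is the set of pathways linked to the gold standard dataset.
    """
    ordered = sorted(p_values, key=p_values.get)
    rank = {name: i + 1 for i, name in enumerate(ordered)}
    significant = {name for name, p in p_values.items() if p < alpha}
    positives = [n for n in p_values if n in targets]
    negatives = [n for n in p_values if n not in targets]

    tp = sum(n in significant for n in positives)
    fp = sum(n in significant for n in negatives)
    return {
        "sensitivity": tp / len(positives),
        "fpr": fp / len(negatives),
        "specificity": 1 - fp / len(negatives),
        # Lower is better: targets ranked near the top of the list.
        "prioritization": median(100 * rank[n] / len(ordered) for n in positives),
    }


# Toy result (hypothetical): five pathways, two of which are targets.
p_values = {"P1": 0.001, "P2": 0.01, "P3": 0.04, "P4": 0.2, "P5": 0.6}
targets = {"P1", "P4"}
metrics = benchmark_metrics(p_values, targets)
```

In this toy case one of the two targets is detected (sensitivity 0.5), two of the three non-target pathways are called significant (FPR 2/3), and the targets sit at ranks 1 and 4 of 5 (median prioritization 50%).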

The “GSARefDB,” “ss-shiny,” and “gr-shiny” apps were built using the “Shiny” package. “GSARefDB” and “ss-shiny” were built using R 3.6.2, while “gr-shiny” was built using R 4.0.0. Open code can be accessed at GitHub here, here, and here.

Supplementary information

- Additional file 1: GSA Reference DB

- Additional file 2: Detailed Performance Study

Acknowledgements

The authors are grateful for the support of both colleagues and students at Guangzhou Medical University and Guangzhou Institutes of Biomedicine and Health (Chinese Academy of Sciences). We also thank our two anonymous reviewers for their contributions.

Contributions

CSX wrote the Shiny apps for GSARefDB and ss-shiny, and generated the figures. SJ programmed the Shiny app for gr-shiny. AM designed the project, built the reference database, and wrote the paper. All authors read and approved the final version of the paper.

Availability of data and materials

All datasets generated for this study are included in the supplementary material. Updated versions of the datasets will be available at the GSARefDB and GSA BenchmarKING pages.

Funding

This work was funded by the Joint School of Life Sciences, Guangzhou Medical University and Guangzhou Institutes of Biomedicine and Health (Chinese Academy of Sciences). SJ is also funded by the China Postdoctoral Science Foundation Grant No. 2019M652847. The funders had no role in the design of the study, data collection, data analysis, interpretation of results, or writing of the paper.

Competing interests

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- ↑ Dixson, L.; Walter, H.; Schneider, M. et al. (2014). "Retraction for Dixson et al., Identification of gene ontologies linked to prefrontal-hippocampal functional coupling in the human brain". Proceedings of the National Academy of Sciences of the United States of America 111 (37): 13582. doi:10.1073/pnas.1414905111. PMC PMC4169929. PMID 25197092. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4169929.

- ↑ 2.0 2.1 2.2 2.3 Mora, A. (2020). "Gene set analysis methods for the functional interpretation of non-mRNA data-Genomic range and ncRNA data". Briefings in Bioinformatics 21 (5): 1495-1508. doi:10.1093/bib/bbz090. PMID 31612220.

- ↑ 3.0 3.1 Tavazoie, S.; Hughes, J.D.; Campbell, M.J. et al. (1999). "Systematic determination of genetic network architecture". Nature Genetics 22 (3): 281–5. doi:10.1038/10343. PMID 10391217.

- ↑ 4.0 4.1 4.2 Huang, D.W.; Sherman, B.T.; Lempicki, R.A. (2009). "Bioinformatics enrichment tools: Paths toward the comprehensive functional analysis of large gene lists". Nucleic Acids Research 37 (1): 1-13. doi:10.1093/nar/gkn923. PMC PMC2615629. PMID 19033363. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2615629.

- ↑ 5.0 5.1 5.2 5.3 5.4 Khatri, P.; Sirota, M.; Butte, A.J. (2012). "Ten years of pathway analysis: Current approaches and outstanding challenges". PLoS Computational Biology 8 (2): e1002375. doi:10.1371/journal.pcbi.1002375. PMC PMC3285573. PMID 22383865. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3285573.

- ↑ 6.0 6.1 Mitrea, C.; Taghavi, Z.; Bokanizad, B. et al. (2013). "Methods and approaches in the topology-based analysis of biological pathways". Frontiers in Physiology 4: 278. doi:10.3389/fphys.2013.00278. PMC PMC3794382. PMID 24133454. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3794382.

- ↑ Mora, A. (26 March 2021). "GSARefDB". GSA Central. https://gsa-central.github.io/gsarefdb.html.

- ↑ 8.0 8.1 Huang, D.W.; Sherman, B.T.; Tan, Q. et al. (2007). "DAVID Bioinformatics Resources: Expanded annotation database and novel algorithms to better extract biology from large gene lists". Nucleic Acids Research 35 (Web Server Issue): W169-75. doi:10.1093/nar/gkm415. PMC PMC1933169. PMID 17576678. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1933169.

- ↑ Subramanian, A.; Tamayo, P.; Mootha, V.K. et al. (2005). "Gene set enrichment analysis: a knowledge-based approach for interpreting genome-wide expression profiles". Proceedings of the National Academy of Sciences of the United States of America 102 (43): 15545-50. doi:10.1073/pnas.0506580102. PMC PMC1239896. PMID 16199517. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1239896.

- ↑ Draghici, S.; Khatri, P.; Tarca, A.L. et al. (2007). "A systems biology approach for pathway level analysis". Genome Research 17 (10): 1537–45. doi:10.1101/gr.6202607. PMC PMC1987343. PMID 17785539. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1987343.

- ↑ Ősz, Á.; Pongor, L.S.; Szirmai, D. et al. (2019). "A snapshot of 3649 Web-based services published between 1994 and 2017 shows a decrease in availability after two years". Briefings in Bioinformatics 20 (3): 1004-1010. doi:10.1093/bib/bbx159. PMC PMC6585384. PMID 29228189. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6585384.

- ↑ 12.0 12.1 Yu, G.; Wang, L.-G.; Han, Y. et al. (2012). "clusterProfiler: an R package for comparing biological themes among gene clusters". OMICS 16 (5): 284–7. doi:10.1089/omi.2011.0118. PMC PMC3339379. PMID 22455463. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3339379.

- ↑ Kuleshov, M.V.; Jones, M.R.; Rouillard, A.D. et al. (2016). "Enrichr: A comprehensive gene set enrichment analysis web server 2016 update". Nucleic Acids Research 44 (W1): W90–7. doi:10.1093/nar/gkw377. PMC PMC4987924. PMID 27141961. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4987924.

- ↑ Young, M.D.; Wakefield, M.J.; Smyth, G.K. et al. (2010). "Gene ontology analysis for RNA-seq: accounting for selection bias". Genome Biology 11 (2): R14. doi:10.1186/gb-2010-11-2-r14. PMC PMC2872874. PMID 20132535. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2872874.

- ↑ Bindea, G.; Mlecnik, B.; Hackl, H. et al. (2009). "ClueGO: A Cytoscape plug-in to decipher functionally grouped gene ontology and pathway annotation networks". Bioinformatics 25 (8): 1091–3. doi:10.1093/bioinformatics/btp101. PMC PMC2666812. PMID 19237447. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2666812.

- ↑ Giannoulatou, E.; Park, S.-H.; Humphreys, D.T. et al. (2014). "Verification and validation of bioinformatics software without a gold standard: A case study of BWA and Bowtie". BMC Bioinformatics 15 (Suppl. 16): S15. doi:10.1186/1471-2105-15-S16-S15. PMC PMC4290646. PMID 25521810. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4290646.

- ↑ Curtis, R.K.; Oresic, M.; Vidal-Puig, A. (2005). "Pathways to the analysis of microarray data". Trends in Biotechnology 23 (8): 429-35. doi:10.1016/j.tibtech.2005.05.011. PMID 15950303.

- ↑ Tarca, A.L.; Draghici, S.; Bhatti, G. et al. (2012). "Down-weighting overlapping genes improves gene set analysis". BMC Bioinformatics 13: 136. doi:10.1186/1471-2105-13-136. PMC PMC3443069. PMID 22713124. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3443069.

- ↑ Tomfohr, J.; Lu, J.; Kepler, T.B. (2005). "Pathway level analysis of gene expression using singular value decomposition". BMC Bioinformatics 6: 225. doi:10.1186/1471-2105-6-225. PMC PMC1261155. PMID 16156896. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1261155.

- ↑ Tarca, A.L.; Draghici, S.; Khatri, P. et al. (2009). "A novel signaling pathway impact analysis". Bioinformatics 25 (1): 75–82. doi:10.1093/bioinformatics/btn577. PMC PMC2732297. PMID 18990722. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2732297.

- ↑ 21.0 21.1 21.2 Naeem, H.; Zimmer, R.; Tavakkolkhah, P. et al. (2012). "Rigorous assessment of gene set enrichment tests". Bioinformatics 28 (11): 1480-6. doi:10.1093/bioinformatics/bts164. PMID 22492315.

- ↑ 22.0 22.1 22.2 22.3 22.4 22.5 Tarca, A.L.; Bhatti, G.; Romero, R. (2013). "A comparison of gene set analysis methods in terms of sensitivity, prioritization and specificity". PLoS One 8 (11): e79217. doi:10.1371/journal.pone.0079217. PMC PMC3829842. PMID 24260172. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3829842.

- ↑ 23.0 23.1 23.2 23.3 23.4 23.5 Bayerlová, M.; Jung, K.; Kramer, F. et al. (2015). "Comparative study on gene set and pathway topology-based enrichment methods". BMC Bioinformatics 16: 334. doi:10.1186/s12859-015-0751-5. PMC PMC4618947. PMID 26489510. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4618947.

- ↑ Jaakkola, M.K.; Elo, L.L. (2016). "Empirical comparison of structure-based pathway methods". Briefings in Bioinformatics 17 (2): 336–45. doi:10.1093/bib/bbv049. PMC PMC4793894. PMID 26197809. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4793894.

- ↑ 25.0 25.1 De Meyer, S. (2016). "Assessing the performance of network crosstalk analysis combined with clustering" (PDF). Universiteit Gent. https://libstore.ugent.be/fulltxt/RUG01/002/272/484/RUG01-002272484_2016_0001_AC.pdf.

- ↑ Lim, S.; Lee, S.; Jung, I. et al. (2020). "Comprehensive and critical evaluation of individualized pathway activity measurement tools on pan-cancer data". Briefings in Bioinformatics 21 (1): 36–46. doi:10.1093/bib/bby125. PMID 30462155.

- ↑ 27.0 27.1 Nguyen, T.-M.; Shafi, A.; Nguyen, T. et al. (2019). "Identifying significantly impacted pathways: A comprehensive review and assessment". Genome Biology 20 (1): 203. doi:10.1186/s13059-019-1790-4. PMC PMC6784345. PMID 31597578. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6784345.

- ↑ Ma, J.; Shojaie, A.; Michailidis, G. (2019). "A comparative study of topology-based pathway enrichment analysis methods". BMC Bioinformatics 20 (1): 546. doi:10.1186/s12859-019-3146-1. PMC PMC6829999. PMID 31684881. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6829999.

- ↑ 29.0 29.1 29.2 Zyla, J.; Marczyk, M.; Domaszewska, T. et al. (2019). "Gene set enrichment for reproducible science: Comparison of CERNO and eight other algorithms". Bioinformatics 35 (24): 5146–54. doi:10.1093/bioinformatics/btz447. PMC PMC6954644. PMID 31165139. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6954644.

- ↑ 30.0 30.1 Geistlinger, L.; Csaba, G.; Santarelli, M. et al. (2021). "Toward a gold standard for benchmarking gene set enrichment analysis". Briefings in Bioinformatics 22 (1): 545–56. doi:10.1093/bib/bbz158. PMC PMC7820859. PMID 32026945. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7820859.

- ↑ Glaab, E.; Baudot, A.; Krasnogor, N. et al. (2012). "EnrichNet: Network-based gene set enrichment analysis". Bioinformatics 28 (18): i451-i457. doi:10.1093/bioinformatics/bts389. PMC PMC3436816. PMID 22962466. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3436816.

- ↑ Dong, X.; Hao, Y.; Wang, X. et al. (2016). "LEGO: A novel method for gene set over-representation analysis by incorporating network-based gene weights". Scientific Reports 6: 18871. doi:10.1038/srep18871. PMC PMC4707541. PMID 26750448. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4707541.

- ↑ Alhamdoosh, M.; Law, C.W.; Tian, L. et al. (2017). "Easy and efficient ensemble gene set testing with EGSEA". F1000Research 6: 2010. doi:10.12688/f1000research.12544.1. PMC PMC5747338. PMID 29333246. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5747338.

- ↑ Kokemuller, N. (28 January 2019). "Why Do People Buy Brand Names?". Chron. https://smallbusiness.chron.com/people-buy-brand-names-69654.html.

- ↑ Barabási, A.-L. (2018). The Formula: The Universal Laws of Success. Little, Brown and Company. ISBN 9780316505499.

- ↑ Lee, J.; Jo, K.; Lee, S. et al. (2016). "Prioritizing biological pathways by recognizing context in time-series gene expression data". BMC Bioinformatics 17 (Suppl 17): 477. doi:10.1186/s12859-016-1335-8. PMC PMC5259824. PMID 28155707. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5259824.

- ↑ Vitek, O.; Robinson, M.D., ed. (2020). "Benchmarking Studies". Genome Biology. https://www.biomedcentral.com/collections/benchmarkingstudies.

- ↑ "PLOS Computational Biology Benchmarking Collection". PLoS. 29 November 2018. https://collections.plos.org/collection/benchmarking/.

- ↑ Efroni, S.; Schaefer, C.F.; Buetow, K.H. (2007). "Identification of key processes underlying cancer phenotypes using biologic pathway analysis". PLoS One 2 (5): e425. doi:10.1371/journal.pone.0000425. PMC PMC1855990. PMID 17487280. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1855990.

- ↑ Wacholder, S.; Armstrong, B.; Hartge, P. (1993). "Validation studies using an alloyed gold standard". American Journal of Epidemiology 137 (11): 1251–8. doi:10.1093/oxfordjournals.aje.a116627. PMID 8322765.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation, spelling, and grammar. In some cases important information was missing from the references, and that information was added.