Journal:Digitalization concepts in academic bioprocess development

| Full article title | Digitalization concepts in academic bioprocess development |
|---|---|
| Journal | Engineering in Life Sciences |
| Author(s) | Habich, Tessa; Beutel, Sascha |
| Author affiliation(s) | Leibniz University Hannover |
| Primary contact | Email: beutel at iftc dot uni dash hannover dot de |
| Year published | 2024 |
| Volume and issue | 24(4) |
| Article # | 2300238 |
| DOI | 10.1002/elsc.202300238 |
| ISSN | 1618-2863 |
| Distribution license | Creative Commons Attribution-NonCommercial 4.0 International |
| Website | https://analyticalsciencejournals.onlinelibrary.wiley.com/doi/10.1002/elsc.202300238 |
| Download | https://analyticalsciencejournals.onlinelibrary.wiley.com/doi/pdfdirect/10.1002/elsc.202300238 (PDF) |


Abstract

Digitalization with integrated devices, digital and physical assistants, automation, and simulation is setting a new direction for laboratory work. Even with complex research workflows, high staff turnover, and a limited budget, some laboratories have already shown that digitalization is indeed possible. However, academic bioprocess laboratories often struggle to follow the trend of digitalization. Because their research circumstances, team composition, goals, and limitations vary widely, their digitalization concepts differ substantially. Here, we provide an overview of different aspects of digitalization and describe how academic research laboratories have successfully digitalized their working environment. The key aspect is collaboration and communication between IT experts and scientific staff. The developed digital infrastructure is only useful if it supports laboratory workers and does not complicate their work. Therefore, laboratory researchers have to collaborate closely with IT experts so that a well-developed and maintainable digitalization concept emerges that fits their individual needs and level of complexity. This review may serve as a starting point or a collection of ideas for the transformation toward a digitalized bioprocess laboratory.

Keywords: academic laboratories, automation, bioprocess, digitalization, FAIR data, Laboratory and Analytical Device Standard (LADS), Standardization in Lab Automation 2 (SiLA2)

Introduction

In contrast to industry, academic research laboratories require more flexibility than production lines. Besides the need for flexibility, loss of knowledge due to high staff turnover in universities is another challenge that makes full laboratory digitalization hard to achieve. [1-4] "Digitalization" (what we'll be addressing) refers to an entire process, workflow, or laboratory infrastructure, whereas "digitization" refers only to the procedure of converting something analog to a digital format (e.g., digitizing a standard operating procedure [SOP] from a piece of paper to a digital file). [5, 6] Working in digital laboratories has the potential for error reduction, prevention of data loss, improved data integrity, faster workflow development times, possible reduction of chemical and material use, and higher sample throughput, leading to modern, transparent, and reproducible research and biomanufacturing. [7-14]

Developing a digitalization strategy for an academic bioprocess laboratory is an interdisciplinary task. Laboratory workers have to work closely with IT experts in order to achieve a digital infrastructure that can be maintained and that supports their laboratory work rather than hindering it. [5, 7, 9, 15, 16] This kind of collaboration is rarely found in academic bioprocess laboratories, so even the smallest digitalization task can be a major challenge. Hardware vendors that sell their devices with proprietary software and restrict access to, and information about, their interfaces make digitalization even more difficult. [1, 7, 17] Therefore, academic researchers working in the field of digitalization develop concepts that fit their individual needs at the time. This is why digitalization concepts vary so widely across academic laboratories.

This review will present different digitalization concepts throughout academic bioprocess development laboratories. Before looking at different individual concepts in more detail, general aspects like FAIR data (data that is readily findable, accessible, interoperable, and reusable), standard communication protocols, digital twins, and interaction with laboratory devices will be addressed.

Basic concepts of digitalization

FAIR data

With the progressing digitalization of laboratories, the generated amount of data is steadily increasing. Therefore, good data management systems will become inevitable in bioprocess development laboratories. The first step towards good data management is storing the data and metadata according to the FAIR data principles. [18] Both humans and machines should be able to find the data with metadata and a clear unique identifier. The data should be digitally accessible for the user with the appropriate tool. It should be noted that accessible in this context does not mean that the data are "open" or "free" but that transparency around the used data concerning its availability is given. [19, 20] Interoperable data means data are presented using vocabulary and data encoding that follows the FAIR data principles and can be read by machines. In order for data to be reusable they need to be rich in metadata and descriptive documentation on the circumstances in which the data were generated. Rich metadata should ideally describe the data in a meaningful way, including the setup and context of the experiment, technical setting, and information about the provenance. [8, 18, 21–23]
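As a rough illustration of the metadata described above, the following minimal Python sketch bundles a measurement with a unique identifier, experimental context, and provenance, and serializes it to JSON so that both humans and machines can read it. All field names (`identifier`, `setup`, `provenance`, `access`, and so on) and the example values are assumptions chosen for illustration; they are not taken from a specific metadata standard.

```python
import json
import uuid
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone


@dataclass
class FairRecord:
    """Hypothetical container for one measurement series plus its metadata."""
    title: str
    values: list
    unit: str
    setup: dict          # experimental context, e.g. device and settings
    provenance: dict     # who or what generated the data
    access: str = "restricted"  # accessible does not have to mean open
    identifier: str = field(default_factory=lambda: str(uuid.uuid4()))
    created: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        """Serialize to a machine-readable, vendor-neutral text format."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


record = FairRecord(
    title="Optical density during batch cultivation",
    values=[0.12, 0.35, 0.81],
    unit="OD600",
    setup={"device": "photometer (hypothetical)", "interval_min": 30},
    provenance={"operator": "jdoe", "workflow": "batch-01"},
)

# Round trip: a machine can parse the record without the original program.
restored = json.loads(record.to_json())
print(restored["identifier"], restored["unit"])
```

Keeping the identifier and the context in the same record as the values is one simple way to make sure the metadata cannot be separated from the data during transport or storage.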

Most research data are currently stored locally and not organized in a standardized way. Storing data according to FAIR data principles allows them to be read by machines and not just by the human laboratory worker who generated them. Another advantage of following the FAIR data principles, as well as publishing research data, is that the data stay available over time. Available or even openly shared online data, including metadata, can help to determine whether a publication is of high quality. The data can be reproduced, reanalyzed, used for new analyses, or even compared to or combined with other data, promoting a deeper understanding of the topic or perhaps generating new knowledge. [12, 21, 24]

Before computers were used on a large scale in laboratories, results of experiments were handwritten into paper notebooks. This is still happening in many cases in today's bioprocess development laboratories, although the use of electronic laboratory notebooks (ELNs) is emerging quickly. [25] Most devices are equipped with a USB, RS232, or RS485 port and thus offer a data connection. However, where no physical network is available, data have to be transported using, for example, flash drives. This increases the danger of manipulation or loss of data. [9] Writing down data manually and then digitizing them later on a computer used for data storage and analysis is also not a good solution. Analog handwritten data are prone to error, hard to search, only accessible from the analog laboratory notebook, and of limited reusability, which runs counter to the FAIR data principles. [9, 26] Only after the data have been digitized without human error do they have all the advantages of digital data. In order to make data gathering faster, simpler, and more accurate, a complete transition to automated data capture has to be made. [26] This includes data flow from a whole bioprocess, including sampling, sample preparation, measurement, and data analysis. [8] When transitioning from analog to digital data, it is important to take the laboratory users' needs into consideration. Even when data are stored automatically following FAIR data principles, the end user who analyzes them still needs to be able to access and work with them.

Standard communication protocols

While many laboratory device vendors still offer their own proprietary software for device control [17, 27], researchers agree that the future of digitalization in the laboratory lies in the common use of standardized device communication protocols. [7, 9, 17, 26, 28–34] To connect all laboratory devices to one digital infrastructure, all devices would ideally use the same standard communication protocol, creating a plug-and-play environment in bioprocess laboratories. [26] Device integration becomes faster, easier, and more maintainable as vendor-independent communication protocols become more common. [35]

While offering device control with standard communication protocols might be costly for vendors in the beginning due to initial software development, it offers advantages as well. Using a communication standard reduces the complexity of an instrument vendor's software documentation since the basics have already been well documented. Thus, detailed documentation of manufacturer-specific proprietary device drivers becomes redundant. This simplification of software development can then reduce costs for device vendors. By using standard protocols for device communication, instrument vendors contribute to the management of data according to the FAIR data principles and are free to focus on new innovations and features in their devices. Customers can then choose devices based upon those innovations instead of technical practicalities. Additionally, more companies and laboratories are able to integrate devices with standard communication protocols into their digital infrastructures because they are not limited by interface or platform incompatibilities. This will then lead to an increased purchase of consumables for those devices. [36-40] Agreeing on physical standards like the microtiter plate was an advantage for everyone in the end. [30] Using standardized tools and parts in the production process decreases costs and complexity because larger quantities of the same part are purchased rather than multiple specific parts. The earlier standards are adopted and implemented in the working process, the more cost-efficient it will be. Manufacturers are also challenged to differentiate their products from those of other manufacturers in areas other than compatibility. This leads to better competition and an overall increase in the development of new technologies rather than adherence to proprietary solutions. [30, 41] For consumers, on the other hand, standardization will help to maintain devices easily even if the manufacturer no longer exists. Standards need to be flexible enough to go beyond their intended purpose; otherwise, their use will be limited.

Before implementing a communication standard, a holistic plan needs to be made by scientists in cooperation with IT experts. Laboratory work needs to be simplified, and the scientist needs to be supported through standard device connectivity. Users need to have a clear benefit from using a standard; otherwise, digitalization efforts will not be rewarded by regular use. As long as the use of proprietary device control software is more convenient, the use of standard communication protocols is ineffective. These benefits can, for example, be achieved by automating parts of the workflow that would otherwise result in tedious manual work, like data acquisition and central data storage following FAIR data principles. Other means to support the user in the laboratory are semi-automated SOPs, where device parameters are already preset after digitally selecting the required workflow. Using a standard protocol for device communication can realize these aspects when all devices are connected by a central device control server, as demonstrated by Porr et al. [35] and Rachuri et al. [42] After successfully choosing and implementing standard communication, it is important to keep the infrastructure open to new device additions and upgrades without burdening laboratory workers. Two standard communication protocols currently coexist in bioprocess development laboratories: Standardization in Lab Automation 2 (SiLA2) and the Laboratory and Analytical Device Standard (LADS), based on Open Platform Communications Unified Architecture (OPC UA). [17, 36, 38, 40, 43, 44]

SiLA2

“SiLA's mission is to establish international standards which create open connectivity in lab automation, to enable lab digitalization.” [38] This open-source communication standard is based on a client-server infrastructure where every laboratory device is a SiLA2 server that offers a set of features. SiLA2 servers advertise themselves on the network and are discovered by SiLA2 clients like a laboratory information management system (LIMS) or the SiLA2 Manager developed by Bromig et al. [36, 40, 42] The clients recognize the features offered by the servers and can use or call them. Real-time observation of parameters is possible by subscribing to observable properties. For integration of legacy devices, Porr et al. developed a gateway module to enable SiLA communication. [45] On the software side, the gateway module has the advantage of clearly separating the tasks while still using a standard protocol. This plays a major role in scalability and maintainability. SiLA2 has been implemented in different frameworks using Java, C#, Python, or C++ for fast and easy integration of laboratory devices. [42, 45, 46]
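The client-server pattern described above can be sketched in plain Python. This is a conceptual toy model only: it does not use the SiLA2 libraries or the SiLA2 wire protocol, and the names `ToyServer`, `ToyRegistry`, `offer`, `advertise`, `discover`, and `subscribe` are hypothetical. It shows a server that offers a feature, a registry standing in for network discovery, and a client subscribing to an observable property.

```python
from typing import Callable, Dict, List


class ToyServer:
    """Stand-in for a device server that offers named features."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.features: Dict[str, Callable[..., object]] = {}
        self._subscribers: List[Callable[[float], None]] = []
        self._temperature = 20.0

    def offer(self, feature: str, handler: Callable[..., object]) -> None:
        """Expose a callable under a feature name that clients can look up."""
        self.features[feature] = handler

    def subscribe(self, callback: Callable[[float], None]) -> None:
        """Register a callback for the observable temperature property."""
        self._subscribers.append(callback)
        callback(self._temperature)  # send the current value immediately

    def set_temperature(self, value: float) -> str:
        """Change the property and notify every subscriber."""
        self._temperature = value
        for callback in self._subscribers:
            callback(value)
        return f"{self.name}: temperature set to {value}"


class ToyRegistry:
    """Stand-in for network discovery of advertised servers."""

    def __init__(self) -> None:
        self._servers: Dict[str, ToyServer] = {}

    def advertise(self, server: ToyServer) -> None:
        self._servers[server.name] = server

    def discover(self) -> List[ToyServer]:
        return list(self._servers.values())


registry = ToyRegistry()
stirrer = ToyServer("stirrer-1")
stirrer.offer("SetTemperature", stirrer.set_temperature)
registry.advertise(stirrer)

# Client side: discover servers, inspect features, subscribe, then call.
observed: List[float] = []
server = registry.discover()[0]
server.subscribe(observed.append)
reply = server.features["SetTemperature"](37.0)
print(reply, observed)
```

In a real deployment, discovery, feature description, and subscriptions are handled by the standard itself; the sketch only mirrors the division of roles between server and client.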

LADS based on OPC UA

OPC UA is a standard in multiple industries for data exchange between devices, independent of the device vendor. [44] Just like SiLA2, OPC UA has a client-server infrastructure. OPC clients can connect to any OPC server and collect and further process the data it provides. The previous version, OPC Classic, was limited to the operating system Microsoft Windows; however, OPC UA is platform-independent. OPC was used as an established standard in industry even before the need for a standard communication protocol for laboratory equipment manifested itself. [44] The problem with transferring this digital communication standard from industry to bioprocess development research laboratories is that the greater flexibility and heterogeneity of devices make those laboratories a lot more complex to digitalize than industrial production processes. [43] This is why a working group from the industrial association Spectaris developed the communication protocol LADS based on OPC UA. [17, 47] “The goal of LADS is to create a manufacturer-independent, open standard for analytical and laboratory equipment.” [48] OPC UA provides a way to integrate legacy devices into the digital infrastructure by providing wrappers for existing software. [49] Implementing OPC UA is possible in different programming languages such as Java, Python, C++, or C#. [49] OPC UA not only enables digital connectivity inside the laboratory but also connects research laboratories to industrial infrastructures, since OPC UA is already established in industrial environments. [17]

Digital twins

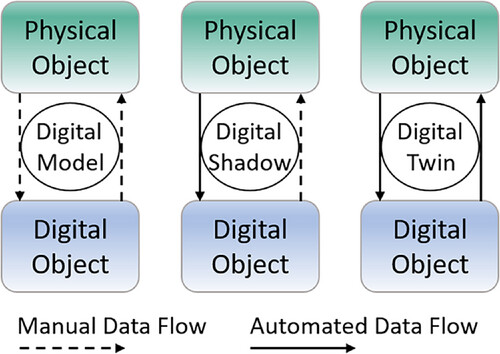

Through the development of Industry 4.0 and progressing internet of things (IoT) connectivity, the number of digital twins is also increasing in bioprocess engineering laboratories. IoT in this context describes the idea of connecting physical objects like laboratory devices to a network or the internet to enable status and information processing, as well as the ability to communicate and to send and receive data. [28, 50–53] The omnipresent keyword "digital twin" is described by Fuller et al. as the “effortless integration of data between a physical and virtual machine in either direction.” [54] This concept is an invaluable advancement in bioprocess development, but it is important to differentiate between "digital models," "digital shadows," and "digital twins." The difference between these, as depicted in Figure 1, lies in the manual and automated data flows between the physical and digital object. All three concepts have a physical and a digital object that are connected by data flow. Within the digital model, the data are transported manually between the physical and digital object. The digital shadow has automated data flow from the physical to the digital object, but manual data flow from the digital to the physical object. A digital twin, however, has automated data flow in both directions. Thus, only with a digital twin do changes in the physical object result in changes in the digital object and vice versa. [7, 54, 55]
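The distinction between the three concepts reduces to which of the two data flows is automated. A minimal Python sketch of that rule follows; the names `DigitalConcept` and `classify` are hypothetical and only encode the definitions given in the text.

```python
from enum import Enum


class DigitalConcept(Enum):
    MODEL = "digital model"
    SHADOW = "digital shadow"
    TWIN = "digital twin"


def classify(physical_to_digital_automated: bool,
             digital_to_physical_automated: bool) -> DigitalConcept:
    """Classify a setup by which data flows are automated."""
    if physical_to_digital_automated and digital_to_physical_automated:
        return DigitalConcept.TWIN      # automated in both directions
    if physical_to_digital_automated:
        return DigitalConcept.SHADOW    # automated only towards the digital object
    if not digital_to_physical_automated:
        return DigitalConcept.MODEL     # manual in both directions
    # Automated only from digital to physical: not covered by the three
    # definitions in the text, so refuse to guess.
    raise ValueError("combination not covered by the three concepts")


for flows in [(False, False), (True, False), (True, True)]:
    print(flows, "->", classify(*flows).value)
```

The fourth combination (automated only from the digital to the physical object) is deliberately rejected because the text does not assign it to any of the three concepts.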


Major challenges in implementing digital twins in the bioprocess laboratory are data security and privacy concerns, device connectivity, trust issues regarding the use of technology, and the development of a laboratory-wide digital infrastructure, including data storage space. Complex and difficult-to-predict processes, especially in the biological laboratory, complicate the development of digital twins as well. The predominant benefits, on the other hand, include automated generation, processing, and analysis of data (and metadata), as well as data traceability. All of this contributes to the aforementioned FAIR data principles. Laboratories that use digital tracking of consumables in their digital twins are able to automate their ordering system, making manual inventory obsolete. Therefore, digital twins are the key technology for a new and transformed way of laboratory work, with the potential for automated knowledge generation. [7, 55]

Interaction with laboratory devices

Even with standardized device communication protocols, full digitalization cannot be achieved without considering the end user. Digital solutions that assist the laboratory worker and also interact with devices should therefore be straightforward and convenient to use. Interaction with laboratory equipment in bioprocess development laboratories has changed over recent years, becoming more efficient and intuitive with every additional solution and offering more interaction possibilities. [53]

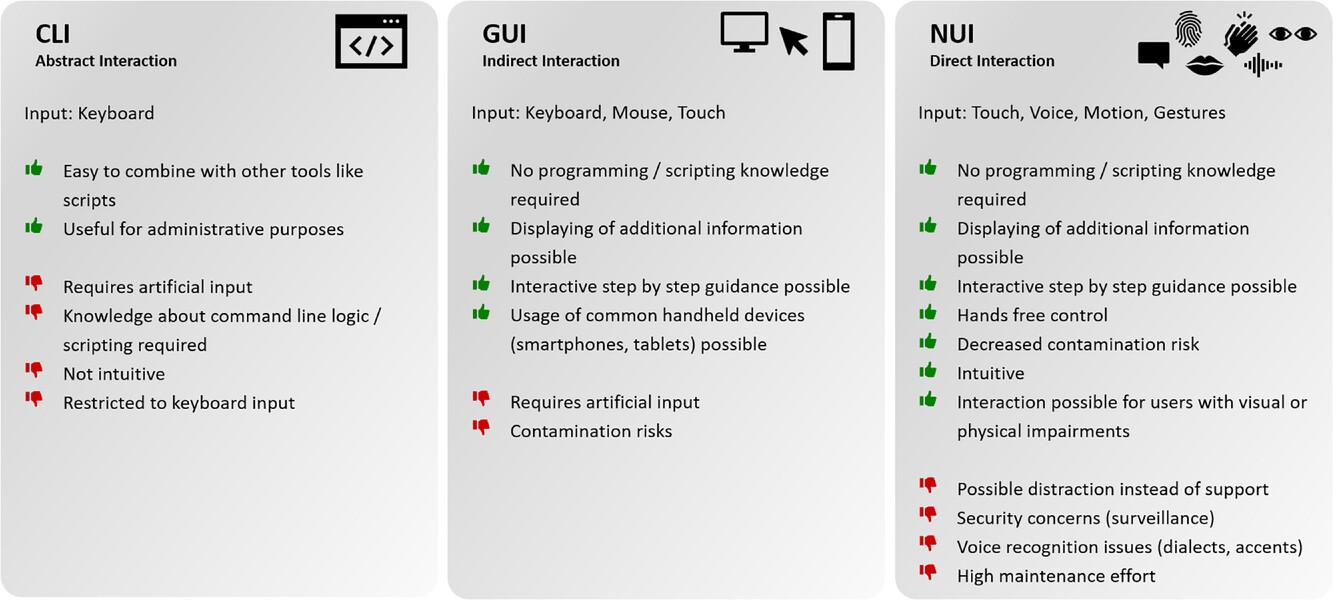

The use of laboratory devices should not interfere with the experiment, slow it down, or complicate it. Consequently, device operation should be as intuitive as possible. There are three different interaction levels for device control. The lowest level is abstract interaction using command line interfaces (CLI), followed by indirect interaction using graphical user interfaces (GUI). The last and most intuitive level of device interaction is direct interaction using natural user interfaces (NUI).

CLIs have the advantage of keyboard input from the user and are easy to integrate or combine with other tools like scripts. However, using CLIs as the interaction medium within the laboratory environment requires basic knowledge of command line logic and scripting. This skill cannot be expected from everyone working in the laboratory due to their different educational backgrounds. One approach to accelerate the transformation towards digitalized laboratories is the addition of basic IT classes (e.g., for basic scripting knowledge) to biotechnological education. However, CLIs as the only device interface are not a suitable solution for general and intuitive device interaction. Yet additional CLI device interaction may prove useful for administrative purposes or for integrating supplementary software. [56]

The next level of interaction with laboratory devices is indirect interaction using GUIs. This has the advantage that no programming or scripting knowledge is needed for device interaction. Thus, everyone in the laboratory is able to operate devices equipped with a GUI. Additionally, more information can be displayed for the user on the screen while mouse and keyboard input is enabled. Many devices have vendor-specific and often proprietary control software. This often runs on a desktop PC positioned next to the device inside the laboratory. Modern devices, however, offer not only local interaction but also remote interaction via a network connection. Those GUIs are then deployed as touch-based web interfaces accessible over the network, also allowing handheld devices to be used as the end device. Touch-operated devices like smartphones, tablets, or touch projectors have the advantage that people are used to operating touch displays in their personal lives, making device interaction even more intuitive. Additionally, the use of mobile applications on smartphones and tablets is enabled. [57-59]

The use of touch-based interaction media introduces the next level of device interaction: NUI. Besides touch-based interaction media, NUIs also include voice user interfaces (VUI) and interaction via gestures and motions. Artificial input like keyboard and mouse input becomes obsolete. This direct interaction with laboratory devices leads to a simplified and even more intuitive way of working in the digitalized laboratory environment. Even though NUIs have many advantages (Figure 2), they are not undisputed due to security concerns and voice recognition issues. [60, 61] Austerjost et al. showed in their work that VUIs can enable hands-free device control, which can be particularly advantageous in microbiology laboratories regarding contamination risks. [34] The topic of human-device interaction is described in further detail in the work of Söldner et al. [53]


Digitalization strategies

Digitalization can look very different from one laboratory to the next. Bioprocess development still relies on experienced workers doing experiments in the laboratory and analyzing data afterwards. Digitalization with integrated devices, digital and physical assistants, automation, and simulation is setting a new direction for laboratory work. [2, 62] One digitalization strategy alone will not be able to meet all the needs of the various laboratories that exist in the academic bioprocess development field. [30] In the following subsections, different approaches to digitalization with their respective applications are presented and discussed.

Digital integration of laboratory devices

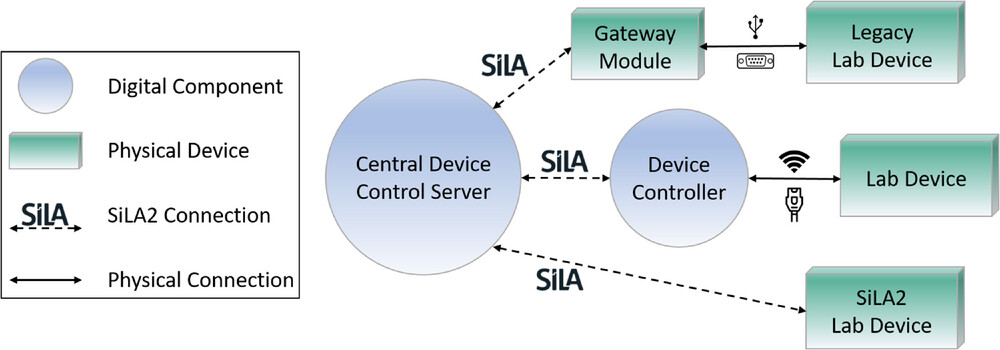

The first step to digitalize any laboratory is to connect devices to a digital infrastructure. This connection can look different for each laboratory, depending on its individual needs. Due to the high variance of topics in some academic research laboratories, the devices usually wait to be used manually by a researcher as required. [62] Devices in these laboratories are described by Porr et al. as a “craftsman's toolbox.” [45] Integrating them into a highly automated workflow might hinder research and flexibility, which is why there are different approaches to hardware integration in academic research laboratories. Laboratory devices can be divided into three categories with different levels of technical prerequisites. In order to connect or control any device with a digital infrastructure, a central device control server needs to be developed first. The SiLA2 Manager developed by Bromig et al. [42] or the DeviceLayer from Porr et al. [35] are examples of this. How a laboratory device is then connected depends on its category. Legacy laboratory devices have no network connection points but only USB or other serial ports (like RS232), so they first need to be connected to a physical device that bridges the connection to the network as well as to the central control server and enables standard communication. This can be achieved using a gateway module. Laboratory devices with network access (WLAN or Ethernet) do not need such a physical device in order to be connected using a standard device communication protocol. A simple digital component like a device controller is sufficient to integrate that device into the digital infrastructure. Laboratory devices that come with a standard driver like SiLA2 or OPC UA can be controlled directly from the central control server. [35] Figure 3 shows this concept with SiLA2 as the example for the standard device communication protocol.
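The three device categories translate into a simple decision rule for choosing an integration path. The following Python sketch is only an illustration of that rule; the `Device` class, its attribute names, and the example devices are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Device:
    """Hypothetical description of a laboratory device's prerequisites."""
    name: str
    has_standard_driver: bool = False  # e.g. ships with SiLA2 or OPC UA
    has_network_access: bool = False   # WLAN or Ethernet


def integration_path(device: Device) -> str:
    """Pick the component needed to reach the central control server."""
    if device.has_standard_driver:
        return "direct"             # controlled directly by the server
    if device.has_network_access:
        return "device controller"  # a software component is sufficient
    return "gateway module"         # physical bridge for USB/serial devices


devices = [
    Device("legacy magnetic stirrer"),
    Device("networked balance", has_network_access=True),
    Device("bioreactor with standard driver",
           has_standard_driver=True, has_network_access=True),
]
for device in devices:
    print(f"{device.name}: {integration_path(device)}")
```

Checking for a standard driver first reflects the text: a device that already speaks a standard protocol needs no additional component, regardless of its other interfaces.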


For the integration of legacy laboratory devices, Porr et al. developed a gateway module to enable SiLA-based communication. This gateway module is based on an embedded computer that runs a Linux image. The legacy device is connected to this module via USB or RS232; on the other side, the gateway module hosts a SiLA2 server that can control the device. Thus, communication using the SiLA communication protocol is enabled. In their work, they showed how to use this connection device to integrate a magnetic stirrer into the digital infrastructure. [45] Bromig et al. also connected their laboratory devices using the SiLA2 communication protocol. They developed a SiLA2 Manager that is able to discover, connect to, and control SiLA2 drivers generated with SiLA2 Python. Using this software framework, they connected the various laboratory devices needed for their use case, a cultivation workflow with intermittent glucose feeding. [42]

Schmid et al. used the iLAB software framework (now known as zenLAB from infoteam [63]) as a basis to develop their own software framework called iLAB-Bio. The iLAB software framework is able to communicate via the SiLA and OPC interface protocols, generating its own generic driver for each device. Supporting both emerging standard protocols on the market (SiLA and OPC) makes it a good middleware framework for a digitalized laboratory. Using this framework, they were able to integrate a liquid handling platform using SiLA as the device communication protocol. On top of that, they used SiLA-based communication to integrate a bioreactor system with forty-eight 10 mL reaction vessels for parallelization and miniaturization. They also integrated the design of experiment (DoE) software MODDE into their digital infrastructure. For this, they used a combination of SiLA-based communication and manual matching of process data to MODDE responses. While this method includes proprietary data formats, it still improves the data exchange with the software, decreasing the possibility of data loss or damage. [9]

Seidel et al. and Poongothai et al. used various communication protocols (like SiLA2, OPC UA, gRPC, MQTT, or REST) to integrate laboratory devices into their comprehensive IT platform in an IoT fashion. Integrating devices using different interface options increases the maintenance cost and time, as well as the complexity, but this also allows the infrastructure to stay as flexible as possible. Automatically storing all available data in one place saves time, ensures data transparency, and reduces human error. [28]

Austerjost et al. also used the concept of IoT while using proprietary commands for laboratory device control. [34] They generated a digital device shadow for each device, which was synchronized with the real laboratory device using the cloud-based IoT broker AWS IoT. The modular device integration was realized using the programming tool Node-RED [64], deployed on a Windows PC with both devices serially connected to the PC. The serial communication was possible with proprietary port commands that were provided by the vendor. The modular setup allows more devices to be integrated in the future, but using proprietary elements in the digitalization process will not lead to a universal solution for digitalized laboratories. [26, 34, 43]
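The idea of keeping a device shadow synchronized with the real device can be illustrated by comparing a desired state with the state the device last reported. The sketch below is a simplified, local illustration and does not call AWS IoT or Node-RED; the function name `shadow_delta` and the example state keys are assumptions, not part of the cited work.

```python
from typing import Any, Dict

_MISSING = object()  # sentinel so that a desired value of None is handled


def shadow_delta(desired: Dict[str, Any],
                 reported: Dict[str, Any]) -> Dict[str, Any]:
    """Return the desired settings that the device has not yet reported."""
    return {
        key: value
        for key, value in desired.items()
        if reported.get(key, _MISSING) != value
    }


desired = {"stirrer_rpm": 300, "heater_on": True}
reported = {"stirrer_rpm": 250, "heater_on": True, "firmware": "1.2"}

# Settings that still have to be sent to the physical device.
delta = shadow_delta(desired, reported)
print(delta)

# The device applies the change and reports back; nothing remains to sync.
reported.update(delta)
remaining = shadow_delta(desired, reported)
print(remaining)
```

Only the difference is transmitted, so the physical device and its digital counterpart converge without resending the full state on every update.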

In 2021, Porr et al. developed a digital infrastructure where device integration was achieved using SiLA-based communication. [35] The underlying concept of this solution was already published by Porr et al. in 2019. [33] Ethernet, USB, and RS232 are used to connect the (legacy) devices [45] by wire, making digital communication more stable than wireless communication because there are fewer opportunities for signal interference. The infrastructure is based on client-server logic. It includes a process control system as its central process intelligence. Here, workflows (the sequences of steps required to complete the bioprocess) are planned and controlled. The process control system is connected to the aforementioned DeviceLayer, which communicates with the laboratory devices. After creating a workflow with the process control system using an intuitive GUI, the user can be guided through the laboratory work with the help of smartglasses as a NUI. The smartglasses show, for example, the next step of a complex assay that was incorporated into the workflow. Here it is important that this additional information helps rather than distracts the user. [33] The use of smartglasses is just one example of using assistive devices in the laboratory, which will be further examined in the next subsection.

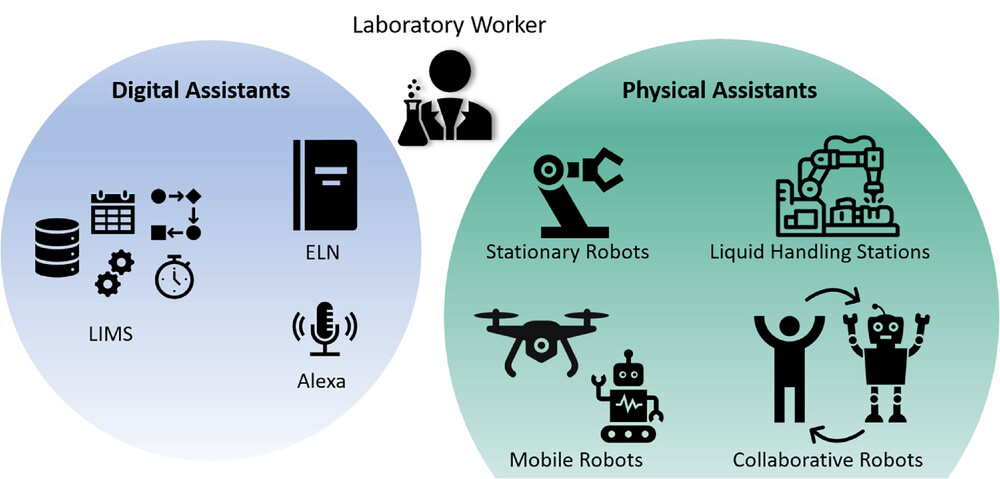

Laboratory assistants

Besides digitalizing laboratory devices and thus enabling digital communication, there are also other means of digital assistance in the laboratory. They can be divided into digital and physical assistants (Figure 4), but the main goal of both groups is to help and support the person working in the laboratory. When working with laboratory assistants, issues like privacy and confidentiality must be addressed beforehand. Encrypted connections are an important factor for secure file transport. Other security methods like authentication, usage of (voice-)fingerprints, logging of system activities, message signing, or version control are becoming equally relevant when working with digital and physical assistants in digitalized laboratories. [28, 34, 36, 43, 50, 52, 57, 65]


Digital assistants

Digital assistants include everything that might help the person working in the laboratory in their digital work, such as workflow scheduling, data gathering, or documenting experiments. The first digital assistant gaining importance in academic laboratories is the ELN. Requirements for the ELN are becoming more and more sophisticated and complex. It is not just about digital documentation of experiments but also about automated data gathering, connectivity to laboratory devices, sharing progress in team projects, as well as improving user-friendliness, data security, and research quality. [25, 66] With the ongoing digitalization of academic laboratories, most data are already digitally available and can be linked, copied, or integrated into the ELN. With the increasing availability of ELNs of different sizes and scopes, and with the need for ELNs becoming increasingly evident, it is only a matter of time until all laboratories (including academia) use an ELN instead of an analog laboratory notebook. [67, 68] When switching an entire laboratory or institute to an ELN, IT support needs to be considered: data might be stored on servers that need to be maintained; mobile devices, computers, and Wi-Fi need to be available; and upgrade and backup support might be needed as well. After switching to an ELN, most users prefer it over the paper-based version. [67] During the transformation towards a digitalized laboratory, it is important to take concerns raised by future users seriously in order to increase acceptance of new tools and of this new way of working in the laboratory.

The next level of digital assistance in bioprocess development laboratories is using a LIMS. The LIMS is where all data are collected, processed, managed, represented, and stored. The ELN is, in most cases, integrated into this management system. Using a LIMS allows the laboratory user to digitally combine various parts of the laboratory such as data gathering, inventory management, workflow scheduling, sample tracking, data mining, device control, report creation, information overview, and metadata analysis. [28, 53, 67] As with ELNs, there are various LIMS that differ in their scope and capabilities. [65] A LIMS is also the basis for enabling model-based control of bioprocesses and automating workflows. [7] Another means of digital assistance in the lab is the use of smart assistants like Amazon's Alexa. Laboratory workers can ask simple questions about melting points, molecular weight, and isoelectric points, or get answers to simple calculations during a workflow without having to physically interrupt their experiment. [69, 70]

Physical assistants

Liquid handling stations, autosamplers, and real-time imaging devices are physically helping researchers with laboratory work. In recent years, however, stationary and mobile robots, as well as cobots, have started to appear sporadically in academic laboratories, supporting workers with various physical tasks. [71] Stationary robots are usually fixed to a place in the laboratory and unable to change their location by themselves, and are thus mainly used to execute clear and repetitive workflows. Mounting them on rails gives them added spatial flexibility, increasing their range of potential applications. [8] Mobile robots take on the task of transporting objects or samples inside the laboratory, using their sensors, actuators, and information processing for navigation. [8, 62] In the future, these mobile robots might even be drones that use the air space in laboratories as transportation routes. [8] They are independent of human cooperation, mostly needing input or commands to start but then working on their own. The development of sensors and the setup of the whole laboratory or building need to be considered when using mobile robots. When they transport an object to a different floor, there needs to be some kind of elevator, automatic doors, and no unplanned obstacles in the way. [31]

Cobots, on the other hand, are collaborative robots that can be used in cooperation with humans and, due to their lightweight design and improved safety features, can also be used close to humans. [8, 72] Robots in general are not common in academic laboratories yet, but researchers are starting to see the advantages that robots (stationary and mobile) and cobots offer. They rule out human volatility errors, do not mislabel samples, always transfer the right liquid or the right sample to the intended place, and will always log the right parameters. [12] Long development times, high maintenance needs, high monetary investments, and even reconstruction of whole laboratory areas are hindering progress in increasing the amount of physical help in the form of robots in academic laboratories. Additionally, in order for robots to be implemented in the academic laboratory, a digital infrastructure with a suitable LIMS needs to be implemented first. [62] However, using robotics in the laboratory helps to automate workflows or even whole processes, which will be further discussed in the following subsection.

Automated laboratories

A high level of automation is possible for a few laboratories in the field of academic bioprocess development. However, automation and high throughput are not possible for most experimental settings in research laboratories due to the need for high flexibility and the high variety of process workflows. [8] The complexity of academic laboratories is also rooted in the high diversity of laboratory staff composition. It is not rare for personnel from more than one research project to share laboratories and/or devices, resulting in different uses of those. Further challenges on the way to automating workflows are organizing the setup and ordering system [73], integrating standalone devices that are already automated by themselves [9], and matching software requirements like legacy technology, proprietary interfaces, and new standard communication protocols (SiLA2, OPC UA). [9]

To collect as much data as possible for optimization of a bioprocess, Schmid et al. automated a setup for a DoE for cultivation experiments. [9] Using iLAB as the software framework, they integrated a liquid handling station and the DoE software MODDE into their digital infrastructure. The process parameters of cultivation experiments performed by two different bioreactor systems (96-well plate and 10 mL scale) were gathered and analyzed automatically in order to optimize the bioprocess. The integration of the liquid handling station and the standalone software MODDE, which was used to plan the experiments, was realized using SiLA2 and an iLAB plugin. This highly specific automated workflow made it possible to compare data and results from variously scaled experiments due to the focus on data integrity throughout the entire automated process. [9]
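As an illustration of what planning a DoE means in practice, the following is a minimal sketch of a full-factorial design, in which every combination of factor levels becomes one planned run. It is not the workflow of Schmid et al. and does not use MODDE, iLAB, or SiLA2; the factor names and levels are hypothetical, and dedicated DoE software typically offers more economical designs than the full factorial shown here.

```python
from itertools import product


def full_factorial(factors):
    """Return every combination of factor levels as a list of run dictionaries."""
    names = list(factors)
    level_lists = [factors[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*level_lists)]


# Hypothetical factors and levels, chosen only for illustration.
factors = {
    "temperature_C": [30, 37],
    "pH": [6.5, 7.0, 7.5],
    "glucose_g_per_L": [10, 20],
}

design = full_factorial(factors)
for run_id, run in enumerate(design, start=1):
    print(run_id, run)
```

The number of runs grows as the product of the level counts (here 2 x 3 x 2), which is one reason automated execution and data gathering become attractive as more factors are added.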

In the laboratory group of Buzz Baum at University College London, Jennifer Rohn set up automated microscopy for high-throughput screening of genes. Using an automated liquid handling system, short interfering RNA (siRNA), and automated microscopy for imaging, they are now able to screen genome-wide for genes that play a role in different morphological properties of human cells. After they overcame initial struggles in setting up the workflow, which involved basic needs like freezer space and inventory control, they also found it challenging to find a good algorithm for analyzing the cell morphology. Using the automated setup saved their group a lot of time and made it possible to exploit a lot more of the available technology. [73]

Seidel et al. developed a comprehensive IT infrastructure for data handling and device integration. [28] Thus, they were able to automate a bioprocess workflow from online selection of enzymes through ordering gene sequences, cloning, transformation, cultivation, and preproduction to the final scale-up to production scale. They focused on using software that is comprehensive and easy to understand. Human error and the workload of laboratory personnel, as well as bioprocess development times, are reduced. Even though data storage and sharing are improved using their IT infrastructure, maintenance costs for databases, devices, and interfaces have increased. The whole digital infrastructure is only user-friendly as long as it helps the laboratory personnel in their tasks rather than making them more complicated. [28]

The potential of fully automated workflows or even fully automated laboratories in academic bioprocess development is yet to be discovered, and full automation will not be possible everywhere due to the need for high flexibility and the complexity involved. Dividing whole processes into sub-processes, developing a digital twin, using a modular setup, and staying flexible throughout the planning will ultimately help to automate an entire workflow. [7, 8, 28, 74, 75]

Artificial intelligence and machine learning

Artificial intelligence (AI) is a general term for the idea that code or algorithms can understand data in the way a human would, or carry out complex tasks based on data. Using AI tools like machine learning (ML) to analyze collected data can help to generate predictions or knowledge from big datasets efficiently. Besides analyzing simulated experiment data, AI and ML can also be used to solve challenges in everyday laboratory work. Especially when it comes to classification of data sets, protein structure predictions, or image recognition, these increasingly common techniques can prove useful. [76, 77]

In 2021, Jumper et al. published their AI-based program AlphaFold, which uses ML to predict the three-dimensional structure of proteins, based on their amino acid sequence, with high accuracy. [78] AI-ML applications can help to make process control decisions that will impact the quality of the target product, as Nikita et al. showed in their work on continuous manufacturing of monoclonal antibodies. [77] While ML methods helped Volpato et al. to predict the enzymatic class of a protein based solely on the full amino acid sequence, other works showed the power of ML for classification problems as well. Austerjost et al. developed a mobile application for an image-based counting algorithm for Escherichia coli colonies on agar plates. Their idea was to use ML in order to achieve a more efficient sample throughput with high accuracy. [57] Biermann et al. used algorithm-based image processing for the at-line determination of endospores from microscopic pictures. [79] Here, too, the aim was to achieve a faster result using ML. Thus, the presented applications reduce the time needed to determine a certain number (E. coli colonies and endospores, respectively), making everyday laboratory work faster and more efficient.

More detailed information on ML in the field of bioprocess engineering can be found in the work of Duong-Trung et al. [80] As shown in these examples, AI or ML can make laboratory work faster and more efficient. To achieve these results, large amounts of data need to be generated or harvested from online databases first. These data then need to be explored, preprocessed, and/or annotated manually. Here, education on how to handle big sets of data is beneficial for scientific staff. After data preparation, models, neural networks, or algorithms need to be developed, trained, and optimized before they can provide the support the laboratory worker is anticipating. [76] Therefore, to unlock the whole potential of AI or ML in the laboratory, digitalization for automated, secure data capture is needed as the first step.
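The sequence of steps described here (prepare annotated data, train a model, then check it on data it has not seen) can be sketched with a deliberately simple classifier. The example below is a minimal sketch under stated assumptions: the two-feature dataset is synthetic, the class labels "A" and "B" are hypothetical, and a nearest-centroid classifier stands in for the far more capable models used in the cited works.

```python
import random


def make_dataset(n_per_class, seed=0):
    """Generate a synthetic, already annotated dataset of ((x, y), label) pairs."""
    rng = random.Random(seed)
    centers = {"A": (1.0, 1.0), "B": (4.0, 4.0)}  # hypothetical class centers
    data = []
    for label, (cx, cy) in centers.items():
        for _ in range(n_per_class):
            data.append(((rng.gauss(cx, 0.5), rng.gauss(cy, 0.5)), label))
    rng.shuffle(data)
    return data


def train_centroids(samples):
    """Training step: compute the mean feature vector of each class."""
    centroids = {}
    for label in {lab for _, lab in samples}:
        points = [x for x, lab in samples if lab == label]
        centroids[label] = tuple(sum(col) / len(col) for col in zip(*points))
    return centroids


def predict(centroids, x):
    """Assign x to the class whose centroid is closest (squared Euclidean distance)."""
    return min(
        centroids,
        key=lambda lab: sum((a - b) ** 2 for a, b in zip(x, centroids[lab])),
    )


data = make_dataset(n_per_class=50)
split = int(0.8 * len(data))            # 80% for training, 20% held out
train, test = data[:split], data[split:]

centroids = train_centroids(train)
correct = sum(predict(centroids, x) == lab for x, lab in test)
accuracy = correct / len(test)
print(f"held-out accuracy: {accuracy:.2f}")
```

Evaluating on held-out samples rather than on the training data is what gives a usable estimate of how the model will behave on new measurements from the laboratory.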

Simulation of experiments using process models

Digital twins, as previously discussed, have, among other things, the potential to gather great amounts of data due to their real-time data monitoring. With advancing digitalization in bioprocess laboratories, the amount of data from experiments is therefore also increasing. Based on these data, process models can be designed that represent the biotechnological process as a whole in order to run simulations, with the aim of extracting new valuable data. [11, 81, 82] Modeling experiments and simulating their respective outcomes using parameter adjustment is unlocking new potential to get information without having to do all the actual work inside the laboratory. [76] After a process model has been developed, it is important to verify its accuracy either through experimental validation or through expert knowledge. [7]
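To make the idea of simulating outcomes by parameter adjustment concrete, the following is a minimal sketch of a batch cultivation described by Monod kinetics, a common textbook growth model, integrated with explicit Euler steps. It is not taken from any of the cited works; all parameter values are hypothetical, and a real process model would need the experimental validation described above.

```python
def simulate_batch(mu_max, k_s, yield_xs, x0, s0, t_end, dt=0.01):
    """Integrate a Monod batch model with explicit Euler steps.

    Returns a list of (time_h, biomass_g_per_L, substrate_g_per_L) tuples.
    """
    x, s = x0, s0
    trajectory = [(0.0, x, s)]
    steps = int(round(t_end / dt))
    for i in range(1, steps + 1):
        mu = mu_max * s / (k_s + s)          # Monod specific growth rate
        dx = min(mu * x * dt, yield_xs * s)  # never consume more substrate than is left
        x += dx
        s = max(s - dx / yield_xs, 0.0)      # substrate consumed per biomass formed
        trajectory.append((i * dt, x, s))
    return trajectory


# Hypothetical parameters: only mu_max is varied between the two simulated runs.
base = dict(k_s=0.1, yield_xs=0.5, x0=0.1, s0=10.0, t_end=6.0)
for mu_max in (0.3, 0.5):
    t, x, s = simulate_batch(mu_max=mu_max, **base)[-1]
    print(f"mu_max={mu_max}: t={t:.1f} h, biomass={x:.3f} g/L, substrate={s:.3f} g/L")
```

Changing a single parameter and rerunning takes a fraction of a second, whereas the corresponding cultivation would take hours, which is the practical appeal of simulation once a model is trusted.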

Zobel-Roos et al. used the data of only a small number of experiments for a process model to evaluate the outcome of different continuous chromatography setups. These small experiments were done to estimate the parameters for the process model. The results determined by the model were then experimentally verified in the laboratory. Simulation and tracer experiments showed a deviation as small as 0.8%. [83] Ladd Effio et al. showed successful process modeling approaches for the downstream part of a bioprocess, optimizing anion-exchange chromatography. After experimentally determining adsorption isotherms and mass transfer parameters, they were able to use their simulation-based model to identify optimal elution conditions. [84] Boi et al. were able to develop a model of affinity membrane chromatography to help with the prediction of membrane performance in bioprocess design. [85]
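The general pattern in these studies (estimate model parameters from a few experiments, then compare model predictions against a verification measurement) can be sketched as follows. The Langmuir isotherm, q = q_max * K * c / (1 + K * c), is used here only as a common textbook example and is not necessarily the isotherm used in the cited works. It is fitted through its linearized form c/q = c/q_max + 1/(K * q_max) by ordinary least squares. All data points are synthetic and noise-free, generated from hypothetical parameters.

```python
def langmuir(c, q_max, k):
    """Langmuir isotherm: bound amount q at liquid concentration c."""
    return q_max * k * c / (1.0 + k * c)


def fit_langmuir(concentrations, loadings):
    """Estimate (q_max, k) by least squares on the linearized form c/q vs. c."""
    xs = list(concentrations)
    ys = [c / q for c, q in zip(concentrations, loadings)]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    q_max = 1.0 / slope       # slope = 1 / q_max
    k = slope / intercept     # intercept = 1 / (k * q_max)
    return q_max, k


def relative_deviation(predicted, measured):
    """Relative deviation between a model prediction and a measurement."""
    return abs(predicted - measured) / measured


# Synthetic "experiments" from hypothetical true parameters (no noise added).
true_q_max, true_k = 80.0, 2.5
c_data = [0.1, 0.5, 1.0, 2.0, 5.0]
q_data = [langmuir(c, true_q_max, true_k) for c in c_data]

q_max_fit, k_fit = fit_langmuir(c_data, q_data)

# Verification at a concentration that was not used for fitting.
c_check = 3.0
q_pred = langmuir(c_check, q_max_fit, k_fit)
q_meas = langmuir(c_check, true_q_max, true_k)
print(f"q_max={q_max_fit:.3f}, k={k_fit:.3f}, "
      f"deviation={100 * relative_deviation(q_pred, q_meas):.4f}%")
```

With real, noisy measurements the deviation would not vanish, and a nonlinear fit is usually preferred because the linearization distorts the error structure of the data.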

Cell type, physiology, metabolism, and more factors are substantially increasing the complexity of bioprocesses when whole cells are involved. While representing a reliable mathematical model of a whole cell is still seemingly impossible, model-based methods are able to make predictions. These predictions cannot be fully trusted and have to be verified with experiments in the laboratory. However, designing bioprocesses by analyzing data with ML methods and simulations can help to optimize experimental setups that can subsequently be transferred to the laboratory. [2, 7]

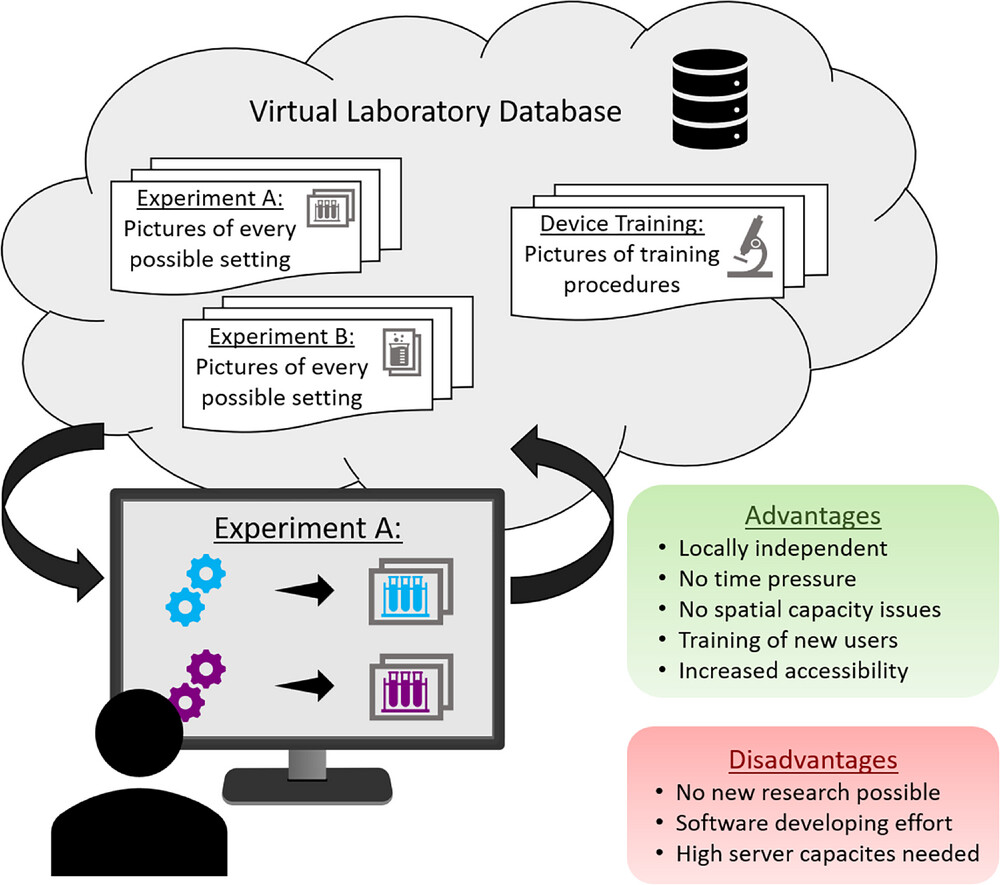

Virtual laboratories

Besides digitalizing the physical laboratory, there is another approach that is particularly practical for educational purposes: virtual laboratories. The concept of these laboratories is that results of experiments are photographed or otherwise documented and stored with their respective settings. These pictures and information are then uploaded to the virtual laboratory (online database). As depicted in Figure 5, users can then access this virtual laboratory through, for example, a graphical web service, altering the settings of the virtual experimental setup and receiving the associated picture or outcome. [86] This tool proves to be useful for a deeper understanding of complex device equipment. A study found that students who completed an online device training in a virtual laboratory before conducting the experiment in the physical lab needed less guidance and were able to spend more time on the actual experiment rather than on getting familiar with the complex equipment. Having learned the basic equipment knowledge online, these students were better prepared and more confident than those who did not use the virtual laboratory beforehand. [87, 88] When students use virtual instead of traditional laboratories for educational purposes, there is no difference in effectiveness with regard to content knowledge. [89] The easy and automated generation of virtual laboratories is well described in the work of De La Torre et al. [86] Even though this digitalization strategy does not help academic laboratories generate new research data, it can be used well for training or educational purposes. [90]
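The core mechanism described here, in which documented outcomes are stored with their settings and later retrieved when a user selects those settings, can be sketched as a simple lookup. This is a minimal sketch and not the system of De La Torre et al.; the class names, setting names, and file names are hypothetical, and a real virtual laboratory would sit behind a web service and a database.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """A documented result of one real experiment."""
    image_file: str
    note: str


class VirtualLab:
    """Stores documented outcomes keyed by the settings that produced them."""

    def __init__(self):
        self._records = {}

    @staticmethod
    def _key(settings):
        # Sort the items so the lookup does not depend on the order of settings.
        return tuple(sorted(settings.items()))

    def record(self, settings, outcome):
        """Upload one documented experiment to the virtual laboratory."""
        self._records[self._key(settings)] = outcome

    def run(self, settings):
        """Return the stored outcome for these settings, or None if never recorded."""
        return self._records.get(self._key(settings))


lab = VirtualLab()
# Hypothetical settings and file names, for illustration only.
lab.record({"stirrer_rpm": 200, "aeration_vvm": 0.5},
           Outcome("run_200_05.png", "few, large bubbles"))
lab.record({"stirrer_rpm": 600, "aeration_vvm": 0.5},
           Outcome("run_600_05.png", "fine bubble dispersion"))

print(lab.run({"aeration_vvm": 0.5, "stirrer_rpm": 600}))
print(lab.run({"stirrer_rpm": 900, "aeration_vvm": 0.5}))
```

The second lookup returns nothing because that combination was never performed, which reflects the limitation noted in the text: a virtual laboratory of this kind replays documented experiments and does not generate new research data.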


The use of virtual reality (VR) is another way to interpret the term virtual laboratories. For better understanding, the terms "VR" and "augmented reality" (AR) are briefly explained by Nakhle et al. [91]:

- Virtual reality: “Immerses users into interactive and explorative virtual environments based on 3D representation and simulation of the real world and objects within it.” Requirements: VR headset, PC. [91]

- Augmented reality: “Superimposes valuable visual content and information over a live view of the real world to provide users an enriched version of reality, allowing them to interact with digital objects in real-time.” Requirements: Smartphone & tablet, AR glasses. [91]

Harfouche et al. showed that when studying biotechnology, bioethics can be taught via VR, making a virtual classroom experience possible for students. [92] Another example of education via VR is Arizona State University, which is offering a biology degree solely based on VR laboratory work. [88, 93] Advantages of these VR classrooms or laboratories include the students' independence of location, no exposure to toxic chemicals, no spatial limitations, the possibility to slow down very fast reactions for visualization, and no safety concerns when learning about, for example, explosives or toxins. Thus, the learning experience using VR will be enhanced in a cost- and time-effective manner. Additionally, students will be taught interactively with reduced human bias regarding gender, age, ethnicity, and race. [88, 91, 92]

VR can be a helpful tool for scientists to visualize 3D images in order to grasp a cell's morphological features with confocal microscopy. ConfocalVR is a VR application that can be installed on consumer-grade VR systems to turn microscopic image stacks into 3D images in the virtual world. The user can then interact with the digital object and examine its properties in a way that would not be possible with 2D imaging on a computer screen. [94]

Device training and/or maintenance can be improved by using AR. Device experts do not have to travel to laboratories but can guide the scientists through maintenance procedures with instructions while being able to see what the scientist who wears AR glasses sees. Instructions and information can be displayed in the user's view for guidance. This way, requesting assistance from experts, teachers, or colleagues, regardless of their location, is no more than a call away, linking more knowledge together. [95-97]

Conclusions

Digitalizing academic bioprocess development laboratories can lead to a new, transformed era of research. When supported by digital assistants, connected devices, more intuitive user interfaces, and data gathering according to FAIR data principles, academic research will become less time-consuming and more effective and accurate, as well as less prone to (human) error. This will also lead to better reproducibility of experiments due to improved data integrity and availability. Integrating devices into digital infrastructures is becoming easier because of the increased use of standard communication protocols like SiLA2 and LADS. New students can be prepared for these new kinds of digital laboratories with proper education, including learning basic IT skills, like scripting knowledge or the ability to handle big data sets.

Digital or physical assistants can be a good way to help personnel working in the laboratory, but their implementation and maintenance are complex. Collaborating with IT experts will help to overcome this issue, as well as the task of seamless integration and communication of laboratory devices. When working in automated laboratories, IT experts working closely with laboratory workers are crucial for creating and executing automated research workflows. Communication between scientific researchers and IT experts will become essential because even the most sophisticated (from the IT perspective) digitalization concept will be useless when it complicates the laboratory work. This communication is only possible if both sides have a basic vocabulary and understanding of the other's field. Simulating experiments using process models has the potential to decrease laboratory work while shifting the work to IT experts, who also have to understand the basic science behind the experiment. Besides IT experts with experimental knowledge, laboratory staff with IT knowledge will advance the highly interdisciplinary process of digitalization as well.

Virtual laboratories are good for training or educational purposes but will not produce any new scientifically relevant data. Integrating VR or AR into laboratory work or laboratory education programs is a cost-effective way of protecting scientific staff and/or students. In addition, the possibility of new points of view on experiments, cells, or other small particles or fast processes can add new value to research.

Finally, there is not a single digitalization strategy that fits all. The needs and requirements, as well as the levels of flexibility and complexity, vary widely in the field of academic bioprocess development. Thus, quality collaboration and communication between IT experts and laboratory staff, consideration of standard device communication protocols, following FAIR data principles, and making sure that the solution supports the laboratory worker rather than disturbing them will lead to a maintainable digital infrastructure fitting researchers' individual needs. Additions to the education of new scientists and interdisciplinary networking are also key aspects in driving this transformation towards digitalized laboratories in the academic field of bioprocess development.

Abbreviations, acronyms, and initialisms

- AI: artificial intelligence

- AR: augmented reality

- CLI: command line interface

- DoE: design of experiments

- ELN: electronic laboratory notebook

- FAIR: findable, accessible, interoperable, reusable

- GUI: graphical user interface

- IoT: internet of things

- LADS: Laboratory and Analytical Device Standard

- LIMS: laboratory information management system

- ML: machine learning

- NUI: natural user interface

- OPC UA: open platform communications unified architecture

- SiLA2: Standardization in Lab Automation 2

- VR: virtual reality

- VUI: voice user interface

Acknowledgements

The authors thank the German Ministry of Education and Research (BMBF) for funding within the National Joint Research Project Digitalization in Industrial Biotechnology (DigInBio). Grant number: FKZ 031B0463B. The authors would like to thank the Open Access fund of Leibniz University Hannover for the funding of the publication of this article. T.H. would like to thank Ferdinand Lange for his help with technical questions.

Open access funding enabled and organized by Projekt DEAL.

Data availability

Data sharing is not applicable to this article as no new data were created or analyzed in this study.

Conflict of interest

The authors declare no conflicts of interest.

References

Notes

This presentation is faithful to the original, with only a few minor changes to presentation. A fair amount of grammar and punctuation was updated from the original. In some cases important information was missing from the references, and that information was added.