Journal:Health data privacy through homomorphic encryption and distributed ledger computing: An ethical-legal qualitative expert assessment study

| Full article title | Health data privacy through homomorphic encryption and distributed ledger computing: An ethical-legal qualitative expert assessment study |
|---|---|
| Journal | BMC Medical Ethics |
| Author(s) | Scheibner, James; Ienca, Marcello; Vayena, Effy |
| Author affiliation(s) | ETH Zürich, Flinders University, Swiss Federal Institute of Technology Lausanne |
| Primary contact | Email: effy dot vayena at hest dot ethz dot ch |
| Year published | 2022 |
| Volume and issue | 23 |
| Article # | 121 |
| DOI | 10.1186/s12910-022-00852-2 |
| ISSN | 1472-6939 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://bmcmedethics.biomedcentral.com/articles/10.1186/s12910-022-00852-2 |
| Download | https://bmcmedethics.biomedcentral.com/counter/pdf/10.1186/s12910-022-00852-2.pdf (PDF) |

This article should be considered a work in progress and incomplete until this notice is removed.

Abstract

Background: Increasingly, hospitals and research institutes are developing technical solutions for sharing patient data in a privacy preserving manner. Two of these technical solutions are homomorphic encryption and distributed ledger technology. Homomorphic encryption allows computations to be performed on data without this data ever being decrypted. Therefore, homomorphic encryption represents a potential solution for conducting feasibility studies on cohorts of sensitive patient data stored in distributed locations. Distributed ledger technology provides a permanent record of all transfers and processing of patient data, allowing data custodians to audit access. A significant portion of the current literature has examined how these technologies might comply with data protection and research ethics frameworks. In the Swiss context, these instruments include the Federal Act on Data Protection and the Human Research Act. There are also institutional frameworks that govern the processing of health-related and genetic data at different universities and hospitals. Given Switzerland’s geographical proximity to European Union (EU) member states, the General Data Protection Regulation (GDPR) may impose additional obligations.

Methods: To conduct this assessment, we carried out a series of qualitative interviews with key stakeholders at Swiss hospitals and research institutions. These included legal and clinical data management staff, as well as clinical and research ethics experts. These interviews were structured around two series of vignettes that focused on data discovery using homomorphic encryption and data erasure from a distributed ledger platform.

Results: For our first set of vignettes, interviewees were prepared to allow data discovery requests if patients had provided general consent or if ethics committee approval had been obtained, depending on the types of data made available. Our interviewees highlighted the importance of protecting against the risk of reidentification for different types of data. For our second set, there was disagreement amongst interviewees on whether they would delete patient data locally, or delete data linked to a ledger with cryptographic hashes. Our interviewees were also willing to delete data locally or on the ledger, subject to local legislation.

Conclusion: Our findings can help guide the deployment of these technologies, as well as determine ethics and legal requirements for such technologies.

Keywords: data protection, privacy preserving technologies, qualitative research, vignettes, interviews, distributed ledger technology, homomorphic encryption

Introduction

Advanced technological solutions are increasingly used to resolve privacy and security challenges with clinical and research data sharing. [1, 2] The legal assessment of these technologies so far has focused on compliance. Significant attention has been paid to the modernized General Data Protection Regulation (GDPR) of the European Union (EU) and its impacts. In particular, the GDPR restricts the use and transfer of sensitive personal data, including genetic-, medical-, and health-related data. Further, under the GDPR, data custodians and controllers must take steps to ensure the auditability of personal data, including patient data. Specifically, the GDPR’s provisions guarantee the right to access information about processing (particularly automated processing) and allow data subjects to monitor the use of their data. [3] These rights exist alongside requirements for data custodians and controllers to keep records of how they have processed personal data. [4] The GDPR also introduces a right of erasure, which allows an individual to request that a particular data controller or data custodian delete their data.

At the same time, the increased use of big data techniques in health research and personalized medicine has led to a surge in hospitals and healthcare institutions collecting data. [1] Therefore, guaranteeing patient privacy—particularly for data shared between hospitals and healthcare institutions—represents a significant technical and organizational challenge. The relative approach to determining anonymization under the GDPR means that whether data is anonymized depends on both the data and the environment in which it is shared. Accordingly, at present it is unclear whether the GDPR permits general or “broad” consent for a research project. [5] A further issue concerns how patient data might be used for research. On the one hand, patients are broadly supportive of their data being used for research purposes or for improving the quality of healthcare. On the other hand, patients have concerns about data privacy, as well as having their data be misused or handled incorrectly. [6]

In response to these challenges, several technological solutions have emerged to aid compliance with data protection legislation. [7] Two examples of these with differing objectives are homomorphic encryption (HE) and distributed ledger technology (DLT). HE can allow individual data custodians to share aggregated results without the need to share the data used to answer a query. [8, 9] For example, HE can be particularly useful for performing queries on data which must remain confidential, such as trade secrets. [10] In addition, as we discuss in this paper, HE can be useful for researchers who wish to conduct data discovery or feasibility studies on patient records. [11] A feasibility study is a piece of research conducted before a main research project. Its purpose is to determine whether it is possible to conduct a larger structured research project. Feasibility studies can be used to assess the number of patients required for a main study, as well as response rates and strategies to improve participation. [12] A challenge with conducting feasibility studies is that datasets may be held by separate data custodians at multiple locations (such as multiple hospitals). To conduct a feasibility study, the data custodian at each location would need to guarantee data security before transferring the data. Although necessary, this process can be time-consuming, particularly due to the need for bespoke governance arrangements. [13] However, HE can allow data discovery queries to be performed on patient records without the need for that data to be transferred. [11] Because the underlying data is not transferred, the use of HE with adequate organizational controls could satisfy the GDPR’s definition of anonymized data and its standard for state-of-the-art encryption measures. [2, 7]
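To illustrate how HE can return an aggregated result without exposing the data behind it, the following Python sketch uses the Paillier cryptosystem, a well-known additively homomorphic scheme. It is a toy with several simplifications. The primes are small and fixed, and a single party holds the private key. We do not claim that this is the scheme used by MedCo or any other platform discussed here. In the sketch, each hospital encrypts its local count for a hypothetical feasibility query. An aggregator then multiplies the ciphertexts, which adds the underlying counts, and only the key holder decrypts the total. The function names are our own, and the code requires Python 3.9+ for `math.lcm`.

```python
import math
import secrets

def keygen(p=104729, q=1299709):
    # Toy parameters: two small, fixed primes. Real deployments use far larger primes.
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    g = n + 1
    mu = pow(lam, -1, n)  # modular inverse; valid because g = n + 1
    return (n, g), (lam, mu)

def encrypt(pub, m):
    n, g = pub
    n2 = n * n
    # Fresh randomness r (coprime to n) makes equal plaintexts encrypt differently.
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n2) * pow(r, n, n2)) % n2

def decrypt(pub, priv, c):
    n, _ = pub
    lam, mu = priv
    x = pow(c, lam, n * n)
    return ((x - 1) // n) * mu % n

def add_encrypted(pub, c1, c2):
    # Multiplying ciphertexts adds the plaintexts (modulo n).
    n, _ = pub
    return (c1 * c2) % (n * n)

pub, priv = keygen()
local_counts = [12, 7, 30]  # hypothetical per-hospital cohort counts
ciphertexts = [encrypt(pub, count) for count in local_counts]

total = ciphertexts[0]
for c in ciphertexts[1:]:
    total = add_encrypted(pub, total, c)  # the aggregator sees only ciphertexts

print(decrypt(pub, priv, total))  # 49
```

In a deployment, the private key could be split among several parties so that no single party could decrypt an individual site's count. This refinement is omitted here.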

By contrast, DLT is not designed to guarantee privacy, but rather to increase transparency and trust. DLT attempts to achieve this objective by offering each agent in a processing network a copy of a chain of content. This chain of content, known as the ledger, is read-only, and all access to the content on it is time-stamped. Accordingly, this ledger provides all agents with a record of when access to data occurs. [14] To prevent tampering, the ledger requires a cryptographic “proof of work” algorithm to be completed before new records can be added. [15]
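The tamper resistance described above can be sketched as a hash-chained, time-stamped log in Python. Existing entries in the sketch can be read but not changed without detection. Each entry stores the hash of the entry before it and must satisfy a toy proof of work before it is appended. The difficulty value, the field names, and the use of the local clock as the timestamp are our assumptions, and the source does not describe how any particular ledger implements them. If an earlier access record is edited, the tampering shows up because that record's hash no longer matches its content. An attacker would have to redo the proof of work for that entry and for every entry after it.

```python
import hashlib
import json
import time

DIFFICULTY = 3  # hypothetical: leading hex zeros required by the toy proof of work
GENESIS_PREV = "0" * 64

def block_hash(block):
    # SHA-256 over a canonical JSON encoding of every field except the hash itself.
    fields = {k: block[k] for k in ("index", "timestamp", "event", "prev_hash", "nonce")}
    return hashlib.sha256(json.dumps(fields, sort_keys=True).encode()).hexdigest()

def mine_block(index, event, prev_hash, difficulty=DIFFICULTY):
    block = {"index": index, "timestamp": time.time(), "event": event,
             "prev_hash": prev_hash, "nonce": 0}
    # Toy proof of work: increment the nonce until the hash meets the difficulty target.
    while not block_hash(block).startswith("0" * difficulty):
        block["nonce"] += 1
    block["hash"] = block_hash(block)
    return block

def append(ledger, event, difficulty=DIFFICULTY):
    prev_hash = ledger[-1]["hash"] if ledger else GENESIS_PREV
    ledger.append(mine_block(len(ledger), event, prev_hash, difficulty))

def verify(ledger, difficulty=DIFFICULTY):
    # An entry fails if its content no longer matches its hash, its hash misses the
    # target, or it does not point at the hash of the entry before it.
    for i, block in enumerate(ledger):
        expected_prev = ledger[i - 1]["hash"] if i else GENESIS_PREV
        if (block_hash(block) != block["hash"]
                or not block["hash"].startswith("0" * difficulty)
                or block["prev_hash"] != expected_prev):
            return False
    return True

ledger = []
append(ledger, {"actor": "researcher_1", "action": "feasibility_query"})
append(ledger, {"actor": "researcher_2", "action": "feasibility_query"})
print(verify(ledger))  # True

ledger[0]["event"]["actor"] = "someone_else"  # tamper with an earlier access record
print(verify(ledger))  # False
```

This sketch uses proof of work only because the paragraph above names it. The hash chaining is what makes the record auditable.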

Perhaps the most famous use case for DLT is blockchain, which is designed to record agents transacting with digital assets. [16] However, proof of work algorithms were initially employed to certify the authenticity of emails and block spam messages. [17] Further, an algorithm similar to that employed in many blockchain implementations was initially used to record the order in which digital documents were created. [14, 18] These examples demonstrate that DLT may have uses beyond its current implementations. [18]
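Proof of work was attractive against spam because a stamp is costly to produce but cheap to check. The following Python sketch shows this asymmetry with a simplified Hashcash-style stamp. It is not the actual Hashcash format, which uses SHA-1 and additional fields such as a date and a random string. The `resource:counter` layout and the function names are our own assumptions.

```python
import hashlib
import itertools

def leading_zero_bits(digest: bytes) -> int:
    # Count zero bits from the most significant end of the digest.
    bits = 0
    for byte in digest:
        if byte:
            return bits + (8 - byte.bit_length())
        bits += 8
    return bits

def mint(resource: str, bits: int) -> str:
    # Sender's cost: on average about 2**bits hash attempts.
    for counter in itertools.count():
        stamp = f"{resource}:{counter}"
        if leading_zero_bits(hashlib.sha256(stamp.encode()).digest()) >= bits:
            return stamp

def check(stamp: str, resource: str, bits: int) -> bool:
    # Receiver's cost: a single hash, plus a check that the stamp names this resource.
    stamped_resource, _, _ = stamp.rpartition(":")
    digest = hashlib.sha256(stamp.encode()).digest()
    return stamped_resource == resource and leading_zero_bits(digest) >= bits

stamp = mint("alice@example.org", 16)
print(check(stamp, "alice@example.org", 16))  # True
print(check(stamp, "bob@example.org", 16))    # False
```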

Accordingly, some scholars have proposed using DLT-style implementations to create an auditable record of access to patient data. [19, 20] Proponents of this approach argue that access to an auditable record could help increase patient trust in the security of their data. [21, 22] Further, DLT could be coupled with advanced privacy enhancing technologies to enable auditing for feasibility studies and ensure that only authorized entities can access patient records. [20]

Nevertheless, there are ongoing questions as to the degree to which HE and DLT can be used to store and process personal data whilst remaining GDPR-compliant. [7, 23] In particular, the read-only nature of the ledger underpinning DLT might conflict with the right of erasure contained in the GDPR. The relationship between data protection law and these novel technologies is further complicated when considering data transfer outside the EU. [24, 25] Beyond these legal considerations, there are deeper normative issues regarding the relationship between individual privacy and the benefits flowing from information exchange. These issues are particularly pronounced when dealing with healthcare, medical, or biometric data, where patients are forced into an increasingly active role in how their data is used. [26]

Accordingly, the purpose of this paper is to assess the degree to which novel privacy enhancing technologies can assist key data custodian stakeholders in complying with regulations. For this paper, we focus on Switzerland as a case study. Switzerland is not an EU member state and is therefore not required to implement the GDPR into its national data protection law (the Datenschutzgesetz, or Federal Act on Data Protection [FADP]). However, because of Switzerland’s geographical proximity to EU member states, there is significant data transfer between Swiss and EU data custodians. [27] This ongoing transfer requires the FADP to offer adequate protection, as assessed under Article 45 of the GDPR. [28, 29] Because the FADP dates from 1992, the Swiss Federal Parliament passed an updated version of the FADP in September 2020. Expected to come into effect in 2022, the revised FADP is designed to achieve congruence with the GDPR. [30]

Switzerland also has separate legislation concerning the processing of health-related and human data for medical research: the Human Research Act (HRA) and the Human Research Ordinance (HRO). These instruments impose additional obligations beyond the FADP for the processing of health-related data for scientific research purposes. [29, 31] Crucially, the HRA permits the reuse of health-related data for future secondary research subject to consent and ethics approval. The HRA also reflects genetic exceptionalism by establishing separate regimes for genetic and non-genetic data. [32] Specifically, anonymized genetic data requires that the patient has not objected to secondary use, whilst anonymized non-genetic data can be transferred without consent. Under the HRA, research with coded health-related data can be conducted with general consent, whilst coded genetic data requires consent for a specific research project. [1] Recent studies indicate that most health research projects in Switzerland use coded data. [33] Coded data is considered analogous to pseudonymized data under the GDPR. [1, 34]

Finally, Switzerland is a federal country, with different cantonal legislation for data protection and health-related data. This federal system has the potential to undermine nationwide strategies for interoperable data sharing. Therefore, the Swiss Personalised Health Network (SPHN) was established to encourage interoperable patient data sharing. In addition to technical infrastructure, the SPHN has provided a governance framework to standardize the ethical processing of health-related data by different hospitals. [31]

In this paper we describe a series of five vignettes designed to test how key stakeholders perceive the use of HE and DLT for healthcare data management. The first three vignettes focus on governing data transfer involving several different types of datasets using HE. These include both genetic and non-genetic data, to capture the genetic exceptionalism in the Swiss regulatory framework. The latter two vignettes focus on erasure requests for records of data stored using DLT. We presented these vignettes to key stakeholders in Swiss hospitals, healthcare institutions, and research institutions, and then transcribed and coded their answers. We offer recommendations in this paper regarding the use of these technologies for healthcare management.

Our paper is the product of an ethico-legal assessment of HE and DLT, conducted as part of the Data Protection and Personalised Health (DPPH) project. The DPPH project is an SPHN-Personalized Health and Related Technologies (PHRT) driver project designed to assess the use of these technologies for multisite data sharing. Specifically, the platform MedCo, which is the main technical output from the DPPH project, uses HE for data feasibility requests on datasets stored locally at Swiss hospitals. [11] Access to this data will be monitored using distributed ledger technology that will guarantee auditability. Therefore, the purpose of this study is to empirically assess the degree to which these technologies can help bridge the gap between these multiple layers of regulation.

Methods

To explore the impact of these technologies on the regulation governing health data sharing, we conducted an interview study with expert participants in the Swiss health data sharing landscape. Our interviewees were drawn from legal practitioners, ethics practitioners, and clinical data managers working at Swiss hospitals, research institutes, and governance centers. We developed an interview study protocol built around two sets of vignettes, one for each of the two technologies described above. Vignettes are a useful tool for both qualitative and quantitative research, and for research with both small and large samples. In qualitative research, they allow for both structured data collection and comparison of responses. [35, 36] Further, vignettes can prompt interviewees to explain the reasons for their responses more comprehensively than open-ended questions. [37] Accordingly, we chose vignettes for this study because we wanted to assess not only perspectives on specific technologies but also how various stakeholders would apply these principles in practice. [38] Our findings can help assess the degree to which these technologies can bridge the gap between the different layers of the regulatory framework described above.

Part A of our vignettes contained three scenarios concerning a data discovery or feasibility request on patient data. These scenarios were designed using example data discovery protocols from the DPPH project. [11] The first scenario pertained to a data discovery request on non-genetic patient data to build a cohort of patients who had received an anti-diabetic drug. The second scenario pertained to a data discovery request on genetic patient data to build a cohort of patients with biomarkers responding to skin cutaneous melanomas. The third scenario pertained to a data discovery request performed on a larger set of melanoma-related biomarkers. However, unlike the other two scenarios, this request came from a non-SPHN-affiliated commercial research institute seeking to conduct drug discovery for an anti-melanoma drug. For each scenario, participants were asked whether they would accept the request on the basis of general consent from patients, request specific informed consent, or refer the matter to an ethics committee. Alternatively, participants were given the option to indicate another method of handling the request. For each scenario, participants were also asked whether they would permit the feasibility request if general consent had not been sought from patients. The Swiss Academy of Medical Sciences general consent form was used as the template for all the vignettes in our interview guide.

Part B of our vignettes contained two additional scenarios. The fourth overall scenario concerned a request to erase patient data that had been included in a cohort built under the second scenario of Part A. In this scenario, the patient was concerned about an adverse finding regarding their visa status. [39] The fifth scenario was identical to the fourth, except that the patient’s data had already been included in a cohort that was sufficiently large for research and sent for publication. Both the background and the scenario stated that personally identifying data was not stored on the ledger. Instead, the locally stored data was linked to the corresponding record in the local copy of the ledger by a cryptographic hash. This hash could be deleted, breaking the link between the data and the ledger and making the patient’s data unavailable for further research.
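The hash-link design described in these scenarios can be sketched in Python. The sketch rests on assumptions that the vignettes do not specify. A plain list stands in for the ledger. The hash is computed over a random per-record salt and a local record identifier, both hypothetical choices, so that the link cannot be recomputed from the ledger alone. `erase_link` corresponds to deleting the hash, and `erase_record` corresponds to deleting the data locally. In both cases, the ledger entry itself is left untouched.

```python
import hashlib
import secrets

class LocalStore:
    """Hypothetical hospital-side store; patient data never leaves it."""

    def __init__(self):
        self.records = {}  # record_id -> patient data (kept locally only)
        self.links = {}    # record_id -> (salt, link_hash)

    def add(self, record_id, data, ledger):
        # Assumption: the link hash covers a random salt plus a local identifier, so
        # the ledger entry cannot be traced to the patient without the local salt.
        salt = secrets.token_bytes(16)
        link_hash = hashlib.sha256(salt + record_id.encode()).hexdigest()
        self.records[record_id] = data
        self.links[record_id] = (salt, link_hash)
        ledger.append({"link_hash": link_hash, "event": "included_in_cohort"})

    def ledger_entries_for(self, record_id, ledger):
        # Only a holder of the local link can match a record to ledger entries.
        if record_id not in self.links:
            return []
        _, link_hash = self.links[record_id]
        return [entry for entry in ledger if entry["link_hash"] == link_hash]

    def erase_link(self, record_id):
        # Delete the salt and hash; the ledger entry remains but is now orphaned.
        self.links.pop(record_id, None)

    def erase_record(self, record_id):
        # Delete the local data as well as the link.
        self.erase_link(record_id)
        self.records.pop(record_id, None)

    def available_for_research(self):
        # Records without a ledger link are treated as unavailable for further research.
        return [rid for rid in self.records if rid in self.links]


ledger = []  # stands in for the shared, append-only ledger
store = LocalStore()
store.add("patient-001", {"marker": "hypothetical_a"}, ledger)
store.add("patient-002", {"marker": "hypothetical_b"}, ledger)
store.erase_link("patient-001")
print(len(ledger))                                      # 2
print(store.ledger_entries_for("patient-001", ledger))  # []
print(store.available_for_research())                   # ['patient-002']
```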

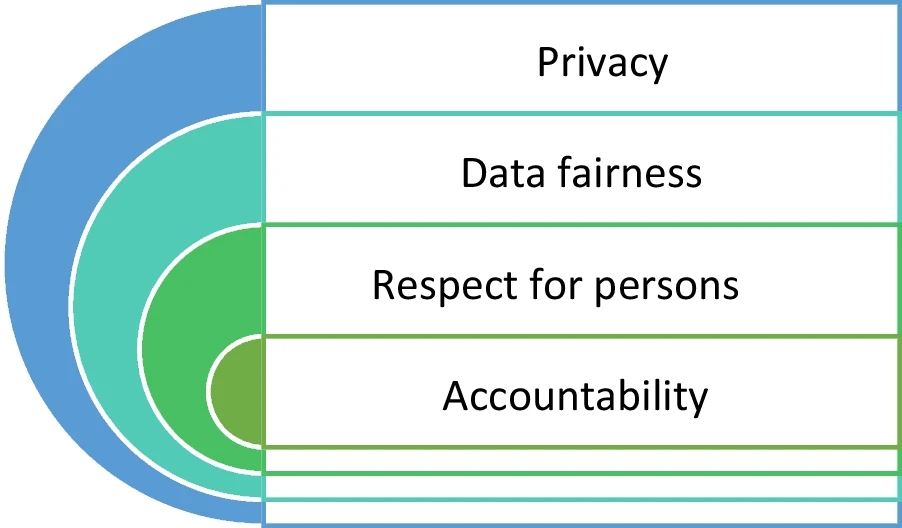

In addition, for each of our scenarios, we coupled the qualitative answers with a set of Likert-like scale questions for the four ethical processing factors from the SPHN Ethical Framework for Responsible Data Processing in Personalized Health. [31] These four ethical factors were privacy, data fairness, respect for persons, and accountability (see Fig. 1). The purpose of this was to assess the degree to which interviewees rated the importance of competing ethical factors in research. (The vignettes and questionnaires used in this project are contained in Additional File 1.)


An initial list of interviewees was compiled by examining the web pages of relevant institutions in Switzerland to identify appropriate subject matter experts. We targeted our recruitment at clinical data management experts, data protection experts, clinical ethics advisors, in-house legal counsel, external legal advisors affiliated with institutions, and health policy experts. These interviewees work at institutions across Switzerland’s different linguistic and geographic regions. Specifically, these included the five major university hospitals in Switzerland, namely Geneva University Hospitals (Hôpitaux universitaires de Genève, or HUG), the University Hospital Basel (Universitätsspital Basel), the University Hospital of Bern (Universitätsspital Bern, or Inselspital), the Lausanne University Hospital (Centre Hospitalier Universitaire Vaudois), and the University Hospital of Zürich (Universitätsspital Zürich, or USZ), as well as various universities and research institutions. We also drew interviewees from institutions involved in ethics or legal governance for healthcare. Interview invitations were sent to potential interviewees who had a publicly available email address. After two weeks, reminders were sent out. If an invitee thought that another person was a more appropriate fit for the research project, we asked them to nominate other potential interviewees.

Ethics approval for this project was obtained from the ETH Zürich ethics review committee (2019-N-69). Interviewees who agreed to participate in our research project were first asked to sign a consent form and were then asked again to provide verbal consent at the start of the interview. We also sought approval to perform chain sampling for this project, such that at the end of each interview we asked our interviewees for recommendations on other potential interviewees. At the interviewees’ request, some interviews were conducted with more than one interviewee as part of a group. We obliged these requests on the grounds that results would be more consistent if multiple experts from the same institution agreed on answers. Five potential interviewees opted out of this study because they were concerned that they did not have the expertise to participate. All interviews were recorded with two devices: one provided by the ETH Zürich audio visual department and one owned by the first author. The interviews were conducted either in person or via voice-over-IP (VoIP) software such as Skype or Zoom. On average, the interviews took approximately 42 minutes each to complete.

The interviews were transcribed and then coded for themes by the first author using an inductive-deductive approach. This inductive coding process involved the first author reading through each transcript, becoming familiar with the interviewee’s answers, and identifying the interviewee’s justifications. For example, if the interviewee wanted an ethics committee to review a data discovery request due to existing policy, this answer was labeled "policy consistency." The first author then generated initial codes around the interview questions and answers. These included whether the interviewee would release the data without further consent, seek informed consent from patients, or refer the matter to an ethics committee. These initial codes were then used to generate further codes, such as the reasons for an interviewee’s decision or the interviewee’s understanding of technical terms. For example, these could include why the interviewee would refer the matter to an ethics committee or what the interviewee understood anonymized data to mean. [40] Once these codes were applied, the first author grouped them into themes for each vignette. This grouping aided the analysis of the results by scenario and circumstance. [41] This qualitative data analysis was complemented with numerical data analysis to measure how many interviewees were willing to accept data transfer requests under the scenarios depicted in our vignettes.

Results

References

Notes

This presentation is faithful to the original, with only a few minor changes to presentation. In some cases important information was missing from the references, and that information was added.