Journal:Development and governance of FAIR thresholds for a data federation

| Full article title | Development and governance of FAIR thresholds for a data federation |
|---|---|
| Journal | Data Science Journal |
| Author(s) | Wong, Megan; Levett, Kerry; Lee, Ashlin; Box, Paul; Simons, Bruce; David, Rakesh; MacLeod, Andrew; Taylor, Nicolas; Schneider, Derek; Thompson, Helen |
| Author affiliation(s) | Federation University, Australian Research Data Commons, Commonwealth Scientific and Industrial Research Organisation, University of Adelaide, The University of Western Australia, University of New England |
| Primary contact | Email: mr dot wong at federation dot edu dot au |
| Year published | 2022 |
| Volume and issue | 21(1) |
| Article # | 13 |
| DOI | 10.5334/dsj-2022-013 |
| ISSN | 1683-1470 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://datascience.codata.org/articles/10.5334/dsj-2022-013/ |
| Download | https://datascience.codata.org/articles/10.5334/dsj-2022-013/galley/1138/download/ (PDF) |

This article should be considered a work in progress and incomplete. Consider this article incomplete until this notice is removed.

Abstract

The FAIR (findable, accessible, interoperable, and re-usable) principles and practice recommendations provide high-level guidance and recommendations that are not research-domain specific in nature. There remains a gap in practice at the data provider and domain scientist level, demonstrating how the FAIR principles can be applied beyond a set of generalist guidelines to meet the needs of a specific domain community.

We present our insights developing FAIR thresholds in a domain-specific context for self-governance by a community (in this case, agricultural research). "Minimum thresholds" for FAIR data are required to align expectations for data delivered from providers’ distributed data stores through a community-governed federation (the Agricultural Research Federation, AgReFed).

Data providers were supported to make data holdings more FAIR. There was a range of different FAIR starting points, organizational goals, and end user needs, solutions, and capabilities. This informed the distilling of a set of FAIR criteria ranging from "Minimum thresholds" to "Stretch targets." These were operationalized through consensus into a framework for governance and implementation by the agricultural research domain community.

Improving the FAIR maturity of data took resourcing and incentive to do so, highlighting the challenge for data federations to generate value whilst reducing costs of participation. Our experience showed a role for supporting collective advocacy, relationship brokering, tailored support, and low-bar tooling access, particularly across the areas of data structure, access, and semantics that were challenging to domain researchers. Active democratic participation supported by a governance framework like AgReFed’s will ensure participants have a say in how federations can deliver individual and collective benefits for members.

Keywords: agriculture, AgReFed, FAIR data, community, governance, RM-ODP, data federation

Context and contribution

The agriculture data landscape is complex, comprising a range of data types, standards, repositories, stakeholder needs, and commercial interests, creating data silos and potential "lock-ins" for consumers. (Kenney, Serhan & Trystram 2020; Ingram et al. 2022) There is an urgent need to work toward clear, ethical, efficient agricultural data sharing practices (Jakku et al. 2019; Wiseman & Sanderson 2018) that incorporate improvements to discoverability, accessibility, interoperability, and quality of data across the value chain. (Barry et al. 2017; Perrett et al. 2017; Sanderson, Reeson & Box 2017) A priority stakeholder question across the agri-tech sector is "how do we create systems whereby people feel confident in entering and sharing data, and in turn how do we create systems to govern data for the benefit of all?" (Ingram et al. 2022: 6)

Agricultural data stakeholders span the public and private sector, including farmers, traders, researchers, universities, consultants, and consumers. Their varied needs around data type, trustworthiness, timeliness, availability, and accuracy shape the many data capture, storage, delivery, and value-add products emerging across the public and private sector. (Allemang & Bobbin 2016; Kenney, Serhan & Trystram 2020) Data providers require confidence in data infrastructure governance before they share their data, in turn requiring ethics of ownership, access, and control. Strong value propositions are also key. This helps grow participants via a "network effect," increasing infrastructure value further. (Chiles et al. 2021; Ingram et al. 2022; Sanderson, Reeson & Box 2017)

Offerings of the many data infrastructures vary and may include a means for:

- depositing data for persistence, citation, publisher, and funding requirements (Datacite, 2022);

- increasing collaborative opportunities;

- enhancing regulatory compliance;

- improving on-farm operations;

- leveraging standardization, quality assurance, and quality control pipelines and specialist analysis capacity (Harper et al. 2018; Wicquart et al. 2022);

- running simulations through virtual research environments (VREs) (Knapen et al. 2020);

- performing cross-domain data integration (Kruseman et al. 2020); and

- linking data and models to knowledge products and decision support tooling (Antle et al. 2017).

If the goal is to make data trusted, discoverable, and re-usable across the sector (Pearson et al. 2021; Ernst & Young 2019), then a single platform is unlikely to meet all (public, private, commercial) needs. (Pearson et al. 2021; Ingram et al. 2022) Sector concerns include, among others, vendor lock-ins and a tendency towards stifling innovation. (Ingram et al. 2022) As such, a grand challenge is how data can be discovered and made interoperable among so many different databases and infrastructures. One solution is a decentralized federated approach where there is no single master data repository or registry (Harper et al. 2018) but rather a network of independent databases and infrastructures that can deliver data through a shared platform using standard transfer protocols via application programming interfaces (APIs). The data remains with providers, as can access controls.

Preferring a single front-end source of data, as found in data federation, is not novel, and many of the FAIR (findable, accessible, interoperable, and re-usable) principles (Wilkinson et al. 2016) underpin data federations’ functions. Some examples include the Earth System Grid Federation (Petrie et al. 2021), materials science data discovery (Plante et al. 2021), and OneGeology (One Geology 2020). In the case of agriculture, there is the AgDataCommons (USDA 2021), the proposed U.K. Food Data Trust (Pearson et al. 2021), AgINFRA (Drakos et al. 2015), and CGIAR Platform for Big Data In Agriculture. (CGIAR 2021) Many of these data federation initiatives specify standards for the description and exchange of data, focusing on a particular data type or provider and/or providing a central intermediate space to standardize data. However, we believe agriculture requires a different approach given the diversity of data stores, one that addresses the ways data is structured, described, and delivered; differences in organizational and research requirements and norms; and economic, trust, and intellectual property concerns connected to agricultural data in general.

Since 2018, we have piloted a community-governed federation approach via the Agricultural Research Federation (AgReFed). (Box et al. 2019a) Participants provisioned their data holdings from their own choice of data repository aligned to their organization's capabilities and requirements of their research field. Concurrently, they aligned with collective expectations for FAIR data. This required developing acceptable levels of FAIR data to be implemented and governed by AgReFed participants. Current practices adopt FAIR as a high-level set of guiding principles (Wilkinson et al. 2016) or a set of generalist practice recommendations. (Bahim et al. 2020) This case study addressed this gap in an agricultural-specific implementation of FAIR in practice. As part of this study, we co-developed FAIR threshold criteria for participants to deliver data through a federation, and, through a consensus process, we integrated these FAIR thresholds into a framework for ongoing governance by a research domain community, for generating individual and collective benefit and growth of a data federation.

Use cases

The datasets of the pilot included point observations, as well as spatial, temporal, on-ground, sensor, and remote sensed data. The data described plants (yield, crop rotation, metabolomic, proteomic, hyperspectral), soil, and climatic variables from across Australia (Table 1).


The data providers defined a set of research use cases for the data in Table 1 (MacLeod et al. 2020: 29–31), identifying the current and anticipated data users and their ideal user experience. We then identified the requirements of the AgReFed platform, the data and metadata needed to deliver the use cases, and the FAIR principles that supported these requirements. These requirements are:

- Allow the datasets and the services delivering the data to be discovered through metadata. Ideally the ability to discover should be persistent and through multiple avenues (Findable Q1, Q2, and Q3, and Accessible Q4 and Q7; Table 2).

- Support appropriate data reuse and access controlled from the providers’ infrastructure through licensing, data access controls, and attribution (Accessible Q5 and Q6, and Reusable Q12 and Q14; Table 2).

- Allow the data to be queried on user-defined parameters, including temporal and spatial properties, what is being measured (e.g., "wheat," "water"), the observed property being measured, the result, the procedure used to obtain the result, and the units of measurement (Interoperable Q9 and Q10, and Accessible Q6; Table 2).

- Allow a subset of the data to be visualized through the platform and downloaded in a useable format (e.g., .csv). This requires a web service interface (Accessible Q6 and Interoperable Q8 and Q9; Table 2).

- Allow the combining of data from different datasets (Interoperable Q8 and Q9; Table 2). This requires the ability to map terms in the data to external vocabularies and semantics (e.g., replacing local descriptive terms with published controlled vocabulary concepts, such as "m" or "meter" with "http://qudt.org/vocab/unit/M") (Interoperable Q10; Table 2).

- Allow locality to be interoperable between datasets (e.g., latitude and longitude with coordinate reference system) (Interoperable Q9 and Q10; Table 2).
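The vocabulary-mapping requirement above can be illustrated with a minimal Python sketch. It assumes a hypothetical lookup table and record layout; only the QUDT metre URI is taken from the text, and the sample records are invented for illustration rather than drawn from the pilot datasets.

```python
# Hypothetical lookup from local unit labels to published vocabulary URIs.
# Only the QUDT metre URI comes from the text; the layout is an assumption.
UNIT_URIS = {
    "m": "http://qudt.org/vocab/unit/M",
    "meter": "http://qudt.org/vocab/unit/M",
    "metre": "http://qudt.org/vocab/unit/M",
}


def harmonize_unit(record):
    """Return a copy of the record with its local unit label replaced by a URI.

    Unknown labels are left unchanged and flagged so a person can map them.
    """
    label = record["unit"].strip().lower()
    uri = UNIT_URIS.get(label)
    out = dict(record)
    out["unit"] = uri if uri else record["unit"]
    out["unit_mapped"] = uri is not None
    return out


def combine(*datasets):
    """Merge records from several datasets after harmonizing their units."""
    return [harmonize_unit(r) for ds in datasets for r in ds]


# Invented sample records from three imaginary providers.
dataset_a = [{"observed_property": "soil depth", "result": 0.3, "unit": "m"}]
dataset_b = [{"observed_property": "soil depth", "result": 0.45, "unit": "Meter"}]
dataset_c = [{"observed_property": "soil depth", "result": 12, "unit": "inches"}]

combined = combine(dataset_a, dataset_b, dataset_c)
```

In this sketch, records whose units resolve to the same URI can be compared directly, while the unmapped record is kept and flagged rather than silently dropped.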


Data collection and service records need to be discoverable through the federation’s platform. (AgReFed, 2021) AgReFed currently harvests from Research Data Australia. (MacLeod et al. 2020) Therefore, it is an additional requirement that minimum metadata is entered into or harvestable by Research Data Australia. (Box et al. 2019a: 36–37)

Developing and testing the FAIR thresholds

The FAIR thresholds for AgReFed participation were co-developed by the participating research data experts and data providers. Baseline assessments of the "FAIRness" of providers’ data ("Start-status" in Table 2) were made using the Australian Research Data Commons (ARDC) FAIR data self-assessment tool. (Schweitzer et al. 2021) The manual ARDC self-assessment tool articulates various levels of FAIR maturity (or "FAIRness") of data and metadata from "not at all discoverable" or "machine understandable," through to "fully understandable by both humans and machines." It also serves as an education resource for providers working to improve the FAIR maturity of their holdings.

Following this baseline assessment, providers determined where improvements could be made to move their data products along the FAIR continuum. Solutions were identified that met their own organization's goals and capabilities, as well as their end users’ needs. These were combined with requirements in the use cases to identify "Minimum thresholds" of data maturity required to support key platform functionality for data and metadata discovery, access and reuse through AgReFed. "Stretch targets" were also defined to communicate to the agricultural research community the level of data maturity that enables maximal data integration and (re)use (see the shading in Table 2).
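As a rough illustration of how a "Minimum threshold" and a "Stretch target" could be applied to a provider's self-assessment, the following Python sketch classifies a maturity level per question. The integer levels, the threshold values, and the choice of questions are all hypothetical; they are not the values in Table 2.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    question: str  # question label, e.g. "Q1"
    minimum: int   # hypothetical lowest maturity level accepted
    stretch: int   # hypothetical level enabling maximal integration and reuse


def classify(criterion, level):
    """Place one self-assessed maturity level on the threshold scale."""
    if level >= criterion.stretch:
        return "stretch target met"
    if level >= criterion.minimum:
        return "minimum threshold met"
    return "below minimum threshold"


# Illustrative settings only; real settings would come from community policy.
CRITERIA = [Criterion("Q1", 2, 3), Criterion("Q6", 1, 3), Criterion("Q10", 1, 4)]


def assess(levels):
    """Return a per-question report and whether every minimum is met."""
    report = {c.question: classify(c, levels.get(c.question, 0)) for c in CRITERIA}
    eligible = all(s != "below minimum threshold" for s in report.values())
    return report, eligible


report, eligible = assess({"Q1": 3, "Q6": 1, "Q10": 0})
```

Keeping the threshold settings as data, separate from the classification logic, reflects the idea that the settings themselves are governed and can be revised by the community.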

As well as the addition of "Minimum thresholds" and "Stretch Targets," the content of the ARDC FAIR tool was modified somewhat to assist with ease of interpretation (Table 2). Changes made in response to user feedback included:

- Examples of some possible information and technology solutions were worked into the questions and answers.

- The concept of "comprehensive" metadata was clearly specified for both data collection and service records (Box et al. 2019a: 36–37).

- "Preferred citation" in the metadata was added as an AgReFed requirement (Q14).

- The openness of the file format was separated from the machine readability of the data (Q8). The term "Machine-readable" was defined in terms of both syntax and structure, that is, as the representation of data products in a standard computer language structured in a way that is interpretable by machines (Q9).

- A challenge for data providers was that their data and metadata were not only individual datasets contained in a single file but multiple collections, derivations (e.g., maps), and data service endpoints. Hence the ARDC FAIR assessment was refocused from the word "data" to "data product," being the data collection or product that is provided to users, along with any associated metadata or services required for its delivery. For simplicity of manual assessment, our Q1–4, 7, 10, 12, and 13 focus on assessment of the metadata, and Q5, 6, 8, 9, and 10 on the data. It is acknowledged that data and metadata can be assessed for FAIRness independently (Bahim et al. 2020), and the feasibility of assessing this way for AgReFed’s purposes should be evaluated in the future.

The ARDC and the Centre for eResearch and Digital Innovation (CeRDI) supported the data providers to improve the level of FAIR maturity of their data across a twelve-month period (2018–2019). The baseline assessments, progress to the final states, and the information and technology solutions used at those states are available as supplementary data. (Levett et al. 2022) Some notable experiences informed the AgReFed FAIR "Minimum thresholds" to "Stretch targets" (Table 2). These included:

- Providers’ exemplar data products each had different FAIR starting points (see "Start-status," Table 2).

- Improvements to metadata records to meet AgReFed "Minimum threshold" requirements were possible with organizational library and ARDC support (Findability Q1 to Q4), so "Minimum thresholds" were set high for Q1 to Q3.

- Access requirements and licensing varied. These were accommodated across the thresholds of Q5 and Q12.

- Data format and structure (Interoperability Q8 and Q9) and data access method (Accessibility Q6) varied between providers, as did FAIR solutions. The solutions varied depending on the data types and the organizational/research group aspirations, skills, and IT support available. Examples of acceptable solutions were therefore given for Interoperability Q8 and Q9, and "Minimum thresholds" to "Stretch targets" were highlighted for Accessibility Q6. Provider examples included a data service provider converting sensor data from web-viewable-only HTML to O&M structured data in machine-readable format (JSON), delivered by SensorThings API via FROST-Server. Agronomic researchers converted data in Microsoft Excel tables to PostgreSQL and MySQL databases with partial O&M design patterns. These delivered JSON and CSV by Swagger PostgREST API and OpenAPI.

- The semantic interoperability (Q10) of the data products was initially highly variable. However, no data providers utilized vocabularies that were FAIR (Cox et al. 2021) or near to FAIR. This was a "Stretch target" for providers, reflected in the sliding scale from the "Minimum threshold" (Q10). Providers described data with the URIs of external machine-readable vocabularies from within their database headers or lookup tables. These were expressed through the API endpoints. Challenges included finding and selecting vocabularies (including evaluating authority and persistence) and the need to create vocabularies (e.g., Cox & Gregory, 2020) and therefore upskill.

- Provenance was recorded in different formats, reflected in "Minimum threshold" to "Stretch target" (Reusable Q13). Improvements were inconsistent and further work is needed defining "comprehensive" content.
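The move from spreadsheet-style tables to structured, machine-readable observations described above can be sketched in Python. The field names loosely follow Observations and Measurements (O&M) concepts (feature of interest, observed property, procedure, result), but this is an assumed, simplified layout and not a conforming O&M or SensorThings encoding. The column names and the sample row are invented for illustration.

```python
import csv
import io
import json

# Invented sample row standing in for a provider's spreadsheet export.
CSV_TEXT = """site,date,property,value,unit,method
Paddock 7,2018-11-02,grain yield,3.1,t/ha,plot harvester
"""


def row_to_observation(row):
    """Restructure one flat row into an O&M-style observation dictionary."""
    return {
        "featureOfInterest": row["site"],
        "phenomenonTime": row["date"],
        "observedProperty": row["property"],
        "procedure": row["method"],
        "result": {"value": float(row["value"]), "unit": row["unit"]},
    }


# Parse the CSV text and convert each row.
observations = [
    row_to_observation(r) for r in csv.DictReader(io.StringIO(CSV_TEXT))
]

# Serialize to JSON, the machine-readable format mentioned in the text.
payload = json.dumps(observations, indent=2)
```

A fuller version of this sketch would replace the free-text property and unit labels with vocabulary URIs, which is the semantic step discussed under Q10.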

FAIR threshold governance and implementation

The FAIR thresholds were presented to the AgReFed Council and approved through consensus (see AgReFed Council Terms of Reference) (Wong et al. 2021) for integration into AgReFed’s Membership and Technical Policy. (Wong et al. 2021; MacLeod et al. 2020) An in-depth discussion of AgReFed’s architecture is not the focus of this practice paper and is reported elsewhere. (Box et al. 2019a) However, we provide an overview in the context of how founding members implemented the governance around the FAIR thresholds.

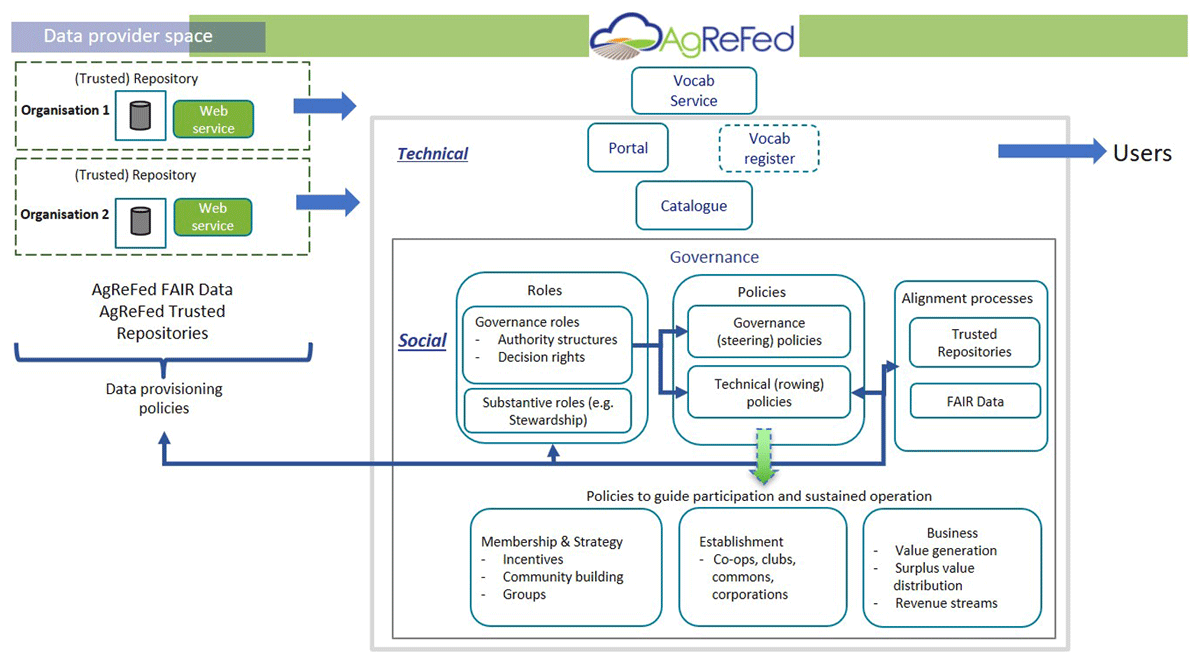

AgReFed’s operation and design is a federated architecture (Figure 1). (Box et al. 2019a) It draws on a Service-Orientated Architecture Reference Model design for Open Distributed Processing (RM-ODP) (ISO/IEC 1996), with the addition of a unique "Social Architecture" viewpoint to structure social aspects of the system, such as governance. AgReFed’s Social Architecture adopts a democratic cooperative governance approach. It is led by its members to meet shared goals of self-governance, trust through active participation, and self-determination. (Buchanan 1965; Pentland & Hardjono 2020) Governance, roles, and responsibilities are defined in the social (i.e., membership, financial, and strategic) and technical policies (Wong et al. 2021, MacLeod et al. 2020) that determine operation of AgReFed, including the implementation and governance of FAIR thresholds.


The recommended process is that applications for membership to AgReFed are assessed by a Federation Data Steward. (Box et al. 2019a: 11, Wong et al. 2021: 22) They assess if the provider/provider community meets the membership policy, including whether the thresholds are met. The Technical Committee works with the Federation Data Steward, as well as potentially the Data Standards and Vocabularies Steward or delegated expert advisors/groups, to ensure the partners’ solutions align with and are integrated into the technical policy.

The provider has now demonstrated alignment with collective expectations for FAIR data. (Box et al. 2019a) They nominate a Data Provider Collection Custodian and member to Council and (optionally) Technical Committee. Their data and metadata are made discoverable/harvestable to AgReFed, and they officially become AgReFed members. All members have equal participation and decision rights. In this way, the community participates in the governance of the FAIR threshold settings, including how they are maintained and implemented.

Reflections and next steps

The manual AgReFed FAIR thresholds assessment, with practical examples and definitions, was useful for helping data providers conduct meaningful assessments of their data across the full continuum of data maturity. It was also useful for developing and implementing work plans. However, to enable transparency, repeatability, and scalability of assessments across the agricultural domain, some improvements could be made. Where data, metadata, and services are machine-actionable, automated assessment could be used to support scalability and repeatability. (Devaraju et al. 2021) In contrast, manual assessment is still required for less mature data and metadata, or where a more nuanced interpretation is required, e.g., content to enhance re-usability. In the current platform phase (ARDC, 2020) we plan to improve repeatability of the FAIR threshold assessment by integrating a hybrid (semi-automated) approach. (Peters-von Gehlen et al. 2022) To improve transparency and repeatability, the evidence required for both manual and machine assessment will need to be specified and may include, as examples, screenshots and automated assessment outputs.
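One way to picture a hybrid (semi-automated) assessment is the Python sketch below, in which machine-checkable items are tested automatically and the remainder are queued for a human reviewer, with evidence recorded in both cases. The check names, metadata field names, and the simplified DOI pattern are assumptions made for illustration; they do not describe AgReFed's planned implementation or any existing assessment tool.

```python
import re

# Simplified, assumed pattern for a DOI-like identifier.
DOI_PATTERN = re.compile(r"^10\.\d{4,9}/\S+$")


def check_identifier(meta):
    """Pass if the (hypothetical) 'identifier' field looks like a DOI."""
    return bool(DOI_PATTERN.match(meta.get("identifier", "")))


def check_licence(meta):
    """Pass if the (hypothetical) 'licence_url' field holds a web address."""
    return meta.get("licence_url", "").startswith("http")


# Hypothetical split between automated and manual items.
AUTOMATED = {
    "persistent identifier": ("identifier", check_identifier),
    "machine-readable licence": ("licence_url", check_licence),
}
MANUAL = ["provenance content", "preferred citation"]


def hybrid_assess(meta):
    """Run automated checks and list items that still need manual review."""
    results = {}
    for name, (field, check) in AUTOMATED.items():
        results[name] = {
            "mode": "automated",
            "passed": check(meta),
            "evidence": meta.get(field, ""),  # value the check inspected
        }
    for name in MANUAL:
        results[name] = {
            "mode": "manual",
            "passed": None,  # undecided until a reviewer records an outcome
            "evidence": "to be attached by reviewer (e.g., a screenshot)",
        }
    return results


results = hybrid_assess({
    "identifier": "10.5281/zenodo.6541413",
    "licence_url": "https://creativecommons.org/licenses/by/4.0/",
})
```

Recording the inspected value alongside each outcome is one simple way to make an automated result repeatable and auditable.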

Our experience highlighted that the expertise of a Federation Data Steward will be essential for assisting partners with FAIR threshold assessments. As the federation grows, the assessments will encompass more standards and technology solutions used by different communities (e.g., FAIRsharing) (Sansone et al. 2019). Whether various solutions align with or should be integrated into AgReFed technical policy will need to be evaluated by the steward in consultation with the Technical Committee. Keeping up-to-date with current developments such as the FAIR Data Maturity Model (Bahim et al. 2020) will ensure the relevance and currency of the policy and thresholds. A dedicated Federation Standards and Vocabulary Steward (Box et al. 2019a: 18) would be valuable here for brokering conversations with expert domain communities and working groups that can advise or make delegated decisions.

Here, we focused on defining FAIR data thresholds. However, we recognize that repositories that the data is served from should be "FAIR data-enabling" as a critical component of the broader "FAIR data ecosystem." (Collins et al. 2018; Devaraju et al. 2021) There are various ways of assessing or accrediting repositories relating to areas of security risk management, organizational and physical infrastructure, and digital object management. (Lin et al. 2020) As a preliminary trial, we included a "pass" or "fail" assessment of several CoreTrustSeal requirements deemed necessary for persistent delivery of trusted agricultural data whilst not being onerous and disincentivizing participation. (Box et al. 2019a: 23) Our early experience showed that research scientists and even data managers had difficulty knowing if their repositories complied. Furthermore, there were challenges knowing what to assess if the data products were served from multiple repositories. AgReFed could play a role helping providers assess and choose repositories that meet community expectations. We look forward to learning about the solutions of other domains here.

Our experience was that the starting point for FAIRness of pilot participants’ data varied, as did their priorities, capacities, and solutions for improving. To ensure these viewpoints were encapsulated, setting the FAIR thresholds and their governance and implementation was done through consensus with providers. It is envisaged that this active participation will help ensure the settings are realistic and promote trust and self-determination, giving providers incentive to participate. The thresholds aimed to strike a balance between the realities and priorities of providers so as not to disincentivize participation whilst also aiming to inspire, support, and educate for fully FAIR data while meeting end-user needs.

Improving the FAIRness of data took resourcing, and as such, we recognize value propositions are required for providers to have confidence in participation. Benefits to founding partners included being an exemplar of FAIR best practice at the institutional level, making access and re-use easier for end-users, and being able to combine data types for research insights (see AgReFed's use case stories) (AgReFed 2021). Providers benefited from metadata guidance through education resources, library, and licensing support. Expert assistance, including from providers’ organizational IT, was required for data structuring, access through APIs, and finding, selecting, creating, and applying vocabularies. In one case (SMN-2), institutional IT resourcing for data service work was a challenge but raised the prioritization of upgrades now being worked on. Data federations can support collective advocacy, relationship brokering, and tailored support across these areas.

The provision, assembling, and demonstration of tooling resources for data providers’ various needs, priorities and capabilities would also lower the cost of delivering FAIR data, thereby incentivizing federation participation. Examples across the data management cycle include data management plans, data collection tools (Devare et al. 2021), data deposition tools (Shaw et al. 2020), and example protocol/reference implementations. (FAIR Data Points 2022) This is a focus of AgReFed’s next phase. Virtual research environments with example workflows are also being integrated. Furthermore, the federation can continue to align/encourage membership with intermediates or broker platforms that offer value in specific fields of research, including in data standardization.

The current phase has focused on research institutes. Expanding participation to cooperatives, research development corporations, industry, and farmers—as envisaged by members (Box et al. 2019b)—will require incentivization. The governance structure of AgReFed enables the community to make policy adjustments to support this. For example, alternative funding models may be leveraged, such as user-pays for certain services and data in the competitive space. Stakeholders can bring assets aside from data to the table to help meet the varied needs of participants. Recognizing this, membership was recently expanded to providers of tooling, infrastructure, and other resources. Active participation through the federation will help ensure individual and collective benefits are delivered across the agricultural research sector, including through FAIR and trusted data.

Acknowledgements

We gratefully acknowledge the assistance from Catherine Brady and Melanie Barlow (ARDC) for metadata and services support; the data contributions of Dr. Ben Biddulph (Department of Primary Industries and Regional Development, WA), Southern Farming Systems, and Corangamite Catchment Management Authority; technical development by Andrew MacLeod, Scott Limmer, and Heath Gillett (Federation University); Linda Gregory (CSIRO, National Soil Data and Information); Daniel Watkins (University of New England); vocabulary support by Simon Cox (CSIRO, Environmental Informatics); and policy work and manuscript feedback from Dr. Joel Epstein. Thank you to all those providing review of AgReFed documents cited herein, including Dr. Andrew Treloar (ARDC), Prof. Harvey Millar (The University of Western Australia), Peter Wilson (CSIRO, National Soil Data and Information), Assoc. Prof. Peter Dahlhaus and Jude Channon (Federation University), Prof. Matthew Gilliham (University of Adelaide), Dr. Bettina Berger (University of Adelaide), Dr. Kay Steel (Federation University), and Dr. Rachelle Hergenhan (University of New England).

Author contributions

Methodology and framework were developed by P. Box, derived from previous work and experiences, with contributions to development and implementation of the framework by B. Simons, K. Levett, A. MacLeod, and M. Wong. Testing and feedback were provided by R. David, D. Schneider, and N. Taylor. Contributions to writing social and/or technical policies were made by A. Lee, P. Box, H. Thompson, B. Simons, A. MacLeod, and M. Wong. M. Wong led article writing, with significant writing contributions from K. Levett and A. Lee. All authors contributed to writing, including revising critically for intellectual input. P. Box refined methodological scope and significance in early drafts.

Funding

This research was supported by the Australian Research Data Commons (ARDC) Agriculture Research Data Cloud project (DC063) and ARDC Discovery Activities (TD018). The ARDC is supported by the Australian Government through the National Collaborative Research Infrastructure Strategy (NCRIS).

Data accessibility

Datasets informing this research are available by open licence permitting unrestricted access (https://doi.org/10.25955/5c1c6b8f4d8d2, https://doi.org/10.4225/08/55E5165EC0D29, https://doi.org/10.25919/5c36d77a6299c, https://doi.org/10.4225/08/546ED604ADD8A, and https://doi.org/10.26182/5cedf001186f3) unless otherwise stated (https://doi.org/10.4226/95/5b10d5ca18aef and https://doi.org/10.25955/5cdcff6168a76). The FAIR assessments are available at https://doi.org/10.5281/zenodo.6541413.

Competing interests

The authors have no competing interests to declare.

References

Notes

This presentation is faithful to the original, with only a few minor changes to presentation, grammar, and punctuation. In some cases important information was missing from the references, and that information was added.