Journal:Shared metadata for data-centric materials science

| Full article title | Shared metadata for data-centric materials science |
|---|---|
| Journal | Scientific Data |
| Author(s) | Ghiringhelli, Luca M.; Baldauf, Carsten; Bereau, Tristan; Brockhauser, Sandor; Carbogno, Christian; Chamanara, Javad; Cozzini, Stefano; Curtarolo, Stefano; Draxl, Claudia; Dwaraknath, Shyam; Fekete, Ádám; Kermode, James; Koch, Christoph T.; Kühbach, Markus; Ladines, Alvin Noe; Lambrix, Patrick; Himmer, Maja-Olivia; Levchenko, Sergey V.; Oliveira, Micael; Michalchuk, Adam; Miller, Ronald E.; Onat, Berk; Pavone, Pasquale; Pizzi, Giovanni; Regler, Benjamin; Rignanese, Gian-Marco; Schaarschmidt, Jörg; Scheidgen, Markus; Schneidewind, Astrid; Sheveleva, Tatyana; Su, Chuanxun; Usvyat, Denis; Valsson, Omar; Wöll, Christof; Scheffler, Matthias |
| Author affiliation(s) | Friedrich-Alexander Universität, Humboldt-Universität zu Berlin, Fritz-Haber-Institut of the Max-Planck-Gesellschaft, University of Amsterdam, TIB – Leibniz Information Centre for Science and Technology and University Library, AREA Science Park, Duke University, Lawrence Berkeley National Laboratory, University of Warwick, Linköping University, Skolkovo Institute of Science and Technology, Max Planck Institute for the Structure and Dynamics of Matter, Federal Institute for Materials Research and Testing, University of Birmingham, Carleton University, École Polytechnique Fédérale de Lausanne, Paul Scherrer Institut, Chemin des Étoiles, Karlsruhe Institute of Technology, Forschungszentrum Jülich GmbH, University of Science and Technology of China, University of North Texas |
| Primary contact | Email: luca dot ghiringhelli at physik dot hu dash berlin dot de |
| Year published | 2023 |
| Volume and issue | 10 |
| Article # | 626 |
| DOI | 10.1038/s41597-023-02501-8 |
| ISSN | 2052-4463 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://www.nature.com/articles/s41597-023-02501-8 |
| Download | https://www.nature.com/articles/s41597-023-02501-8.pdf (PDF) |


Abstract

The expansive production of data in materials science, as well as their widespread sharing and repurposing, requires educated support and stewardship. In order to ensure that this need helps rather than hinders scientific work, the implementation of the FAIR data principles (that ask for data and information to be findable, accessible, interoperable, and reusable) must not be too narrow. At the same time, the wider materials science community ought to agree on the strategies to tackle the challenges that are specific to its data, both from computations and experiments. In this paper, we present the result of the discussions held at the workshop on “Shared Metadata and Data Formats for Big-Data Driven Materials Science.” We start from an operative definition of metadata and the features that a FAIR-compliant metadata schema should have. We focus mainly on computational materials science data and propose a constructive approach for the "FAIR-ification" of the (meta)data related to ground-state and excited-state calculations, potential-energy sampling, and generalized workflows. Finally, challenges with the FAIR-ification of experimental (meta)data and materials science ontologies are presented, together with an outlook on how to meet them.

Keywords: materials science, data sharing, FAIR data principles, file formats, metadata, ontologies, workflows

Introduction: Metadata and FAIR data principles

The amount of data produced in materials science to date, and its day-by-day increase, are massive. [1] The dawn of the data-centric era [2] requires that such data not only be stored but also be carefully annotated, so that they can be found, accessed, and possibly reused. Guidelines of good practice for the scientific community's management and stewardship of its data, the so-called FAIR data principles, have been compiled by the FORCE11 group. [3] Here, the acronym "FAIR" stands for "findable, accessible, interoperable, and reusable," and it applies not only to data but also to metadata. Alternative readings of the “R” in FAIR are “repurposable” and “recyclable.” The former indicates that data may be used for a purpose different from the one for which they were originally created. The latter hints at the fact that data in materials science are often exploited only once, to support the thesis of a single publication, and are then stored and forgotten. In this sense, they constitute a “waste” that can be recycled, provided that they can be found and are properly annotated.

Before examining the meaning and importance of the four terms of the FAIR acronym, it is worth defining what metadata are with respect to data. To that purpose, we start by introducing the concept of a data object, which represents the collective storage of information related to an elementary entry in a database. One can consider it as a row in a table, where the columns can be occupied by simple scalars, higher-order mathematical objects, strings of characters, or even full documents (or other media objects). In the materials science context, a data object is the collection of attributes (the columns in the above-mentioned table) that represent a material or, even more fundamentally, a snapshot of the material captured by a single configuration of atoms. Alternatively, it may be a set of measurements from well-defined equivalent samples (see below for a discussion of this concept). For instance, in computational materials science, the attributes of a data object could be both the inputs of a calculation (e.g., the coordinates and chemical species of the atoms constituting the material, and the description of the physical model used for calculating its properties) and its outputs (e.g., total energy, forces, electronic density of states). Logically and physically, inputs and outputs are at different levels, in the sense that the former determine the latter. Hence, one can consider the inputs as metadata describing the data, i.e., the outputs. In turn, a set of coordinates A that are metadata to some observed quantities may be considered as data that depend on another set of coordinates B and on the forces acting on the atoms in configuration B (as, e.g., in a step of a geometry optimization). So, the set of coordinates B and the acting forces are metadata to the set A, now regarded as data. Metadata can always be considered to be data, as they could be the objects of analyses that are different from, and independent of, those performed on the calculated properties. In this respect, whether an attribute of a data object is data or metadata depends on the context. This simple example also depicts a provenance relationship between the data and their metadata.
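The A/B example can be made concrete with a short sketch. It assumes a steepest-descent step as the operation that turns coordinates B into coordinates A. The `DataObject` class, the `relaxation_step` helper, the step size, and the toy coordinates and forces (arbitrary units) are all hypothetical illustrations, not part of any schema described here. The point is only that the attributes of one object act as metadata for the next, and that a provenance link records this.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DataObject:
    """A database entry: a dictionary of attributes plus a provenance link."""
    entry_id: str
    attributes: dict
    # entry_id of the object whose attributes acted as metadata for this one
    derived_from: Optional[str] = None


def relaxation_step(parent: DataObject, new_id: str, step: float = 0.1) -> DataObject:
    """Hypothetical steepest-descent step: displace each atom along its force.

    The coordinates B and forces of `parent` are the metadata that determine
    the new coordinates A, which are the data of the returned object.
    """
    coords_b: List[List[float]] = parent.attributes["coordinates"]
    forces_b: List[List[float]] = parent.attributes["forces"]
    coords_a = [
        [x + step * f for x, f in zip(xyz, force)]
        for xyz, force in zip(coords_b, forces_b)
    ]
    return DataObject(
        entry_id=new_id,
        attributes={"coordinates": coords_a, "step_size": step},
        derived_from=parent.entry_id,
    )


config_b = DataObject(
    "B",
    {"coordinates": [[0.0, 0.0, 0.0], [0.0, 0.0, 1.0]],
     "forces": [[0.0, 0.0, -1.0], [0.0, 0.0, 1.0]]},
)
config_a = relaxation_step(config_b, "A")
print(config_a.derived_from)
print(config_a.attributes["coordinates"])
```

Following `derived_from` links backwards reconstructs the chain of operations that determined a given data object.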

The above discussion can be summarized in a more general definition of the term metadata:

Metadata are attributes that are necessary to locate, fully characterize, and ultimately reproduce other attributes that are identified as data.

The metadata include a clear and unambiguous description of the data as well as their full provenance. This definition is reminiscent of the definition given by the National Institute of Standards and Technology (NIST) [4]: “Structured information that describes, explains, locates, or otherwise makes it easier to retrieve, use, or manage an information resource. Metadata is often called data about information or information about information.” With our definition, we highlight the role of data “reproducibility,” which is crucial in science.

Within the “full characterization” requirement, we highlight the interpretation of the data as a crucial aspect. In other words, the metadata must provide enough information on a stored value (including, e.g., dimensionless constants) to make it unambiguous whether or not two data objects can be compared with respect to the value of a given attribute.
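As a minimal sketch of this requirement, consider two stored energies. They may be compared only if their units can be converted and the physical model that produced them is the same. The `StoredValue` class, the `model` label, and the two-entry unit table are hypothetical simplifications. In practice, comparability of, e.g., total energies also depends on the basis set, pseudopotentials, and numerical settings, which the single `model` string stands in for here.

```python
from dataclasses import dataclass

# Conversion factors to eV; 1 hartree = 27.211386245988 eV (CODATA 2018).
TO_EV = {"eV": 1.0, "hartree": 27.211386245988}


@dataclass(frozen=True)
class StoredValue:
    magnitude: float
    unit: str     # must be a key of TO_EV to be convertible
    model: str    # hypothetical label for the physical model, e.g. "PBE"


def comparable(a: StoredValue, b: StoredValue) -> bool:
    """True only if the metadata make a comparison of the two values meaningful."""
    return a.model == b.model and a.unit in TO_EV and b.unit in TO_EV


def difference_in_ev(a: StoredValue, b: StoredValue) -> float:
    """Difference a - b in eV; refuses to compare values whose metadata disagree."""
    if not comparable(a, b):
        raise ValueError("metadata do not allow these values to be compared")
    return a.magnitude * TO_EV[a.unit] - b.magnitude * TO_EV[b.unit]


e1 = StoredValue(-1.0, "hartree", "PBE")
e2 = StoredValue(-27.0, "eV", "PBE")
e3 = StoredValue(-27.0, "eV", "LDA")
print(comparable(e1, e2), comparable(e2, e3))
print(difference_in_ev(e1, e2))
```

Raising an error, rather than silently converting, reflects the idea that missing or mismatched metadata should make a comparison impossible instead of merely inaccurate.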

Next, we note that, whereas in computational materials science the concept of a data object identified by a single atomic configuration is well defined, in experimental materials science the concept of a class of equivalent samples is very hard to implement operationally. For instance, a single specimen can be altered by a measurement and thus cannot, strictly speaking, be measured twice. At the same time, two specimens prepared with the same synthesis recipe may differ in substantial aspects due to the presence of different impurities or even crystal phases, thus yielding different values of a measured quantity. In this respect, we use the term "equivalent sample" here in its abstract, ideal meaning. We also note, however, that one of the main purposes of introducing well-defined metadata in materials science is to characterize experimental samples sufficiently to put the concept of equivalent samples into practice.

The need for storing and characterizing data by means of metadata is determined by two main aspects related to data usage. The first aspect is as old as science: reproducibility. In an experiment or computation, all the information needed to reproduce the measured/calculated data (i.e., the metadata) should be recorded, stored, and retrievable. The second aspect becomes prominent with the demand for reusability: data can and should also be usable for purposes that were not anticipated at the time they were recorded. A useful way of looking at metadata is that they are attributes of data objects answering the questions who, what, when, where, why, and how. For example, “Who has produced the data?”, “What are the data expected to represent (in physical terms)?”, “When were they produced?”, “Where are they stored?”, “For what purpose were they produced?”, and “By means of which methods were the data obtained?”. The latter two questions also refer to the concept of provenance, i.e., the logical sequence of operations that determine, ideally univocally, the data. Keeping track of the provenance requires the ability to record the whole workflow that has led to some calculated or measured properties (for more details, see the later section “Metadata for computational workflows”).

From a practical point of view, the metadata are organized in a schema. We summarize what the FAIR principles imply in terms of a metadata schema as follows:

- Findability is achieved by assigning unique and persistent identifiers (PIDs) to data and metadata, describing data with rich metadata, and registering (see below) the (meta)data in searchable resources. Widely known examples of PIDs are digital object identifiers (DOIs) and (permanent) Uniform Resource Identifiers (URIs). According to ISO/IEC 11179, a metadata registry (MDR) is a database of metadata that supports the functionality of registration. Registration accomplishes three main goals: identification, provenance, and monitoring quality. Furthermore, an MDR manages the semantics of the metadata, i.e., the relationships (connections) among them.

- Accessibility is enabled by application programming interfaces (APIs), which allow one to query and retrieve single entries as well as entire archives.

- Interoperability implies the use of formal, accessible, shared, and broadly applicable languages for knowledge representation (these are known as formal ontologies and will be discussed in the later section “Outlook on ontologies in materials science”), use of vocabularies to annotate data and metadata, and inclusion of references.

- Reusability hints at the fact that data in materials science are often exploited only once for a focus-oriented research project, and many data are not even properly stored as they turned out to be irrelevant for the focus. In this sense, many data constitute a “waste” that can be recycled, provided that the data can be found and they are properly annotated.

Establishing one or more metadata schemas that are FAIR-compliant, and that therefore enable the materials science community to efficiently share its heterogeneously and decentrally produced data, needs to be a community effort. The workshop “Shared Metadata and Data Formats for Big-Data Driven Materials Science: A NOMAD–FAIR-DI Workshop” was organized and held in Berlin in July 2019 to ignite this effort. In the following sections, we describe the identified challenges and first-stage plans, divided into the aspects that must be addressed in computational materials science.

In the next section, we describe the identified challenges and first plans for FAIR metadata schemas for computational materials science. As an example, we also summarize the main ideas behind the metadata schema implemented in the Novel-Materials Discovery (NOMAD) Laboratory for storing and managing millions of data objects produced by atomistic calculations (both ab initio and molecular mechanics). These calculations employ dozens of different codes, which together cover the overwhelming majority of the community's data production by volume. The subsequent sections discuss in detail the specific challenges related to interoperability and reusability for ground-state calculations (Section “Metadata for ground-state electronic-structure calculations”), perturbative and excited-state calculations (Section “Metadata for external-perturbation and excited-state electronic-structure calculations”), potential-energy sampling (molecular dynamics and more; Section “Metadata for potential-energy sampling”), and generalized workflows (Section “Metadata for computational workflows”). Challenges related to the choice of file formats are discussed in Section “File Formats.” Finally, Sections “Metadata schemas for experimental materials science” and “Outlook on ontologies in materials science” give an outlook on metadata schema(s) for experimental materials science and on the introduction of formal ontologies for materials science databases, respectively.

Towards FAIR metadata schemas for computational materials science

The materials science community realized long ago that it is necessary to structure data by means of metadata schemas. In this section, we describe pioneering and recent examples of such schemas, and how a metadata schema becomes FAIR-compliant.

To our knowledge, the first systematic effort to build a metadata schema for exchanging data in chemistry and materials science is CIF, an acronym that originally stood for "Crystallographic Information File," the data-exchange standard file format introduced in 1991 by Hall, Allen, and Brown. [5,6] Later, the CIF acronym was extended to also mean "Crystallographic Information Framework" [7], a broader system of exchange protocols based on data dictionaries and relational rules expressible in different machine-readable manifestations. These include the Crystallographic Information File itself, but also, for instance, XML (Extensible Markup Language), a general framework for encoding text documents in a format that is meant to be both human- and machine-readable. CIF was developed by the International Union of Crystallography (IUCr) working party on Crystallographic Information and was adopted in 1990 as a standard file structure for the archiving and distribution of crystallographic information. It is now well established and is in regular use for reporting crystal structure determinations to Acta Crystallographica and other journals. More recently, CIF has been adapted to other areas of science, such as structural biology (mmCIF, the macromolecular CIF [8]) and spectroscopy. [9] The CIF framework includes a strict, machine-readable syntax definition and dictionaries defining (meta)data items. It has been noted that the adoption of the CIF framework in IUCr publications has significantly reduced the number of errors in published crystal structures. [10,11]

An early example of an exhaustive metadata schema for chemistry and materials science is the Chemical Markup Language (CML) [12,13,14], whose first public version was released in 1995. CML is a dictionary for chemical metadata, encoded in XML. CML is accessible (for reading, writing, and validation) via the Java library JUMBO (Java Universal Molecular/Markup Browser for Objects). [14] The general idea of CML is to represent all kinds of documents that contain chemical data with a common language. Currently, however, the language (as of its latest update in 2012 [15]) mainly covers the description of molecules (e.g., IUPAC name, atomic coordinates, bond distances) and of inputs/outputs of computational chemistry codes such as Gaussian03 [16] and NWChem. [17] Specifically, in the CML representation of computational chemistry calculations [18], (ideally) all the information on a simulation that is contained in the input and output files is mapped onto a format that is in principle independent of the code itself. Such information is:

- Administrative data like the code version, libraries for the compilation, hardware, user submitting the job;

- Materials-specific (or materials-snapshot-specific) data like computed structure (e.g., atomic species, coordinates), the physical method (e.g., electronic exchange-correlation treatment, relativistic treatment), numerical settings (basis set, integration grids, etc.);

- Computed quantities (energies, forces, sequence of atomic positions in case a structure relaxation or some dynamical propagation of the system is performed, etc.).

The different types of information are hierarchically organized in modules, e.g., environment (for the code version, hardware, run date, etc.), initialization (for the exchange correlation treatment, spin, charge), molgeom (for the atomic coordinates and the localized basis set specification), and finalization (for the energies, forces, etc.). The most recent release of the CML schema contains more than 500 metadata-schema items, i.e., unique entries in the metadata schema. It is worth noticing that CIF is the dictionary of choice for the crystallography domain within CML.
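As an illustration of how code-independent CML content can be consumed, the sketch below reads atoms from a small hand-written CML molecule using only Python's standard-library XML parser instead of JUMBO. It assumes the CML namespace `http://www.xml-cml.org/schema` and the `atomArray`/`atom` elements with `elementType`, `x3`, `y3`, `z3` attributes. The water geometry is illustrative, and the computational modules listed above (environment, initialization, etc.) are not shown.

```python
import xml.etree.ElementTree as ET

# Hand-written CML fragment (illustrative water geometry in angstrom).
CML_TEXT = """\
<molecule xmlns="http://www.xml-cml.org/schema" id="water">
  <atomArray>
    <atom id="a1" elementType="O" x3="0.0000" y3="0.0000" z3="0.1173"/>
    <atom id="a2" elementType="H" x3="0.0000" y3="0.7572" z3="-0.4692"/>
    <atom id="a3" elementType="H" x3="0.0000" y3="-0.7572" z3="-0.4692"/>
  </atomArray>
</molecule>
"""

NS = {"cml": "http://www.xml-cml.org/schema"}


def read_atoms(cml_text: str):
    """Return (element, (x, y, z)) tuples for every <atom> element."""
    root = ET.fromstring(cml_text)
    atoms = []
    for atom in root.iterfind(".//cml:atom", NS):
        xyz = tuple(float(atom.get(axis)) for axis in ("x3", "y3", "z3"))
        atoms.append((atom.get("elementType"), xyz))
    return atoms


for element, xyz in read_atoms(CML_TEXT):
    print(element, xyz)
```

Because the element and attribute names are fixed by the dictionary rather than by the code that wrote the file, the same reader works regardless of which program produced the CML.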

Another long-standing activity is JCAMP-DX (Joint Committee on Atomic and Molecular Physical Data - Data Exchange) [19], a standard file format for the exchange of infrared spectra and related chemical and physical information. It was established in 1988 and then updated with IUPAC recommendations until 2004. It contains standard dictionaries for infrared spectroscopy, chemical structure, nuclear magnetic resonance (NMR) spectroscopy [20], mass spectrometry [21], and ion-mobility spectrometry. [22] The European Theoretical Spectroscopy Facility (ETSF) File Format Specifications were proposed in 2007 [23,24,25], in the context of the European Network of Excellence NANOQUANTA, in order to overcome widely known portability issues of input/output file formats across platforms. The Electronic Structure Common Data Format (ESCDF) Specifications [26] are the ongoing continuation of the ETSF project and are part of the CECAM Electronic Structure Library, a community-maintained collection of software libraries and data standards for electronic-structure calculations. [27]
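JCAMP-DX files are plain text built from labeled data records of the form `##LABEL=value`. The sketch below parses a tiny hand-written infrared spectrum. It assumes the simple uncompressed `(XY..XY)` point table, treats lines starting with `$$` as comments, and normalizes labels by upper-casing them and dropping spaces, dashes, slashes, and underscores. This follows our reading of the specification's label-matching rule and should be checked against it. Real files often use compressed data tables that this sketch does not handle, and the spectrum values are illustrative only.

```python
# Minimal hand-written JCAMP-DX example (values are illustrative only).
SPECTRUM = """\
##TITLE=Example infrared spectrum
##JCAMP-DX=4.24
##DATA TYPE=INFRARED SPECTRUM
##XUNITS=1/CM
##YUNITS=TRANSMITTANCE
##NPOINTS=3
##XYPOINTS=(XY..XY)
4000.0, 0.95
3000.0, 0.40
2000.0, 0.90
##END=
"""


def normalize_label(label: str) -> str:
    """Upper-case the label and drop spaces, dashes, slashes, and underscores."""
    return "".join(c for c in label.upper() if c not in " -/_")


def parse_jcamp(text: str):
    """Return (header dict, list of (x, y) points) for a simple XY..XY file."""
    header, points, current = {}, [], None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("$$"):   # blank line or comment line
            continue
        if line.startswith("##"):
            label, _, value = line[2:].partition("=")
            current = normalize_label(label)
            header[current] = value.strip()
        elif current == "XYPOINTS":
            x, y = (float(v) for v in line.split(","))
            points.append((x, y))
    return header, points


header, points = parse_jcamp(SPECTRUM)
print(header["JCAMPDX"], header["XUNITS"], len(points))
```

The fixed label dictionary is what makes the format exchangeable: any reader can locate, e.g., the x-axis units under the same normalized label.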

The largest databases of computational materials science data, AFLOW [28], Materials Cloud [29], Materials Project [30], the NOMAD Repository and Archive [31,32,33], OQMD [34], and TCOD [35] offer APIs that rely on dedicated metadata schemas. Similarly, AiiDA [36,37,38] and ASE [39], which are schedulers and workflow managers for computational materials science calculations, adopt their own metadata schema. OpenKIM [40] is a library of interatomic models (force fields) and simulation codes that test the predictions of these models, complemented with the necessary first-principles and experimental reference data. Within OpenKIM, a metadata schema is defined for the annotation of the models and reference data. Some of the metadata in all these schemas are straightforward to map onto each other (e.g., those related to the structure of the studied system, i.e., atomic coordinates and species, and simulation-cell specification), others can be mapped with some care. The OPTIMADE (Open Databases Integration for Materials Design [41]) consortium has recognized this potential and has recently released the first version of an API that allows users to access a common subset of metadata-schema items, independent of the schema adopted for any specific database/repository that is part of the consortium.
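To show what a common subset of metadata-schema items looks like from the user side, here is a minimal sketch that builds an OPTIMADE query URL. It uses the `/v1/structures` endpoint with the standard `filter` and `page_limit` query parameters and the `elements`/`nelements` properties. The provider base URL is hypothetical, and real providers may list their versioned base URLs through an index meta-database rather than at a fixed address.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def optimade_structures_url(base_url: str, filter_expr: str, page_limit: int = 10) -> str:
    """Build a GET URL for the /v1/structures endpoint of an OPTIMADE provider."""
    query = urlencode({"filter": filter_expr, "page_limit": page_limit})
    return f"{base_url.rstrip('/')}/v1/structures?{query}"


# Binary compounds containing both silicon and oxygen.
FILTER = 'elements HAS ALL "Si","O" AND nelements=2'
url = optimade_structures_url("https://optimade.example.org/", FILTER)  # hypothetical base URL
print(url)
```

The same filter string can be sent unchanged to any provider in the consortium, which is exactly the interoperability gain of agreeing on a shared subset of metadata.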

In order to clarify how a metadata schema can explicitly be FAIR-compliant, we describe as an example the main features of the NOMAD Metainfo, onto which the information contained in the input and output files of atomistic codes, both ab initio and force-field based, is mapped. The first released version of the NOMAD Metainfo is described by Ghiringhelli et al. [26] and it has powered the NOMAD Archive since the latter went online in 2014, thus predating the formal introduction of the FAIR data principles. [3]

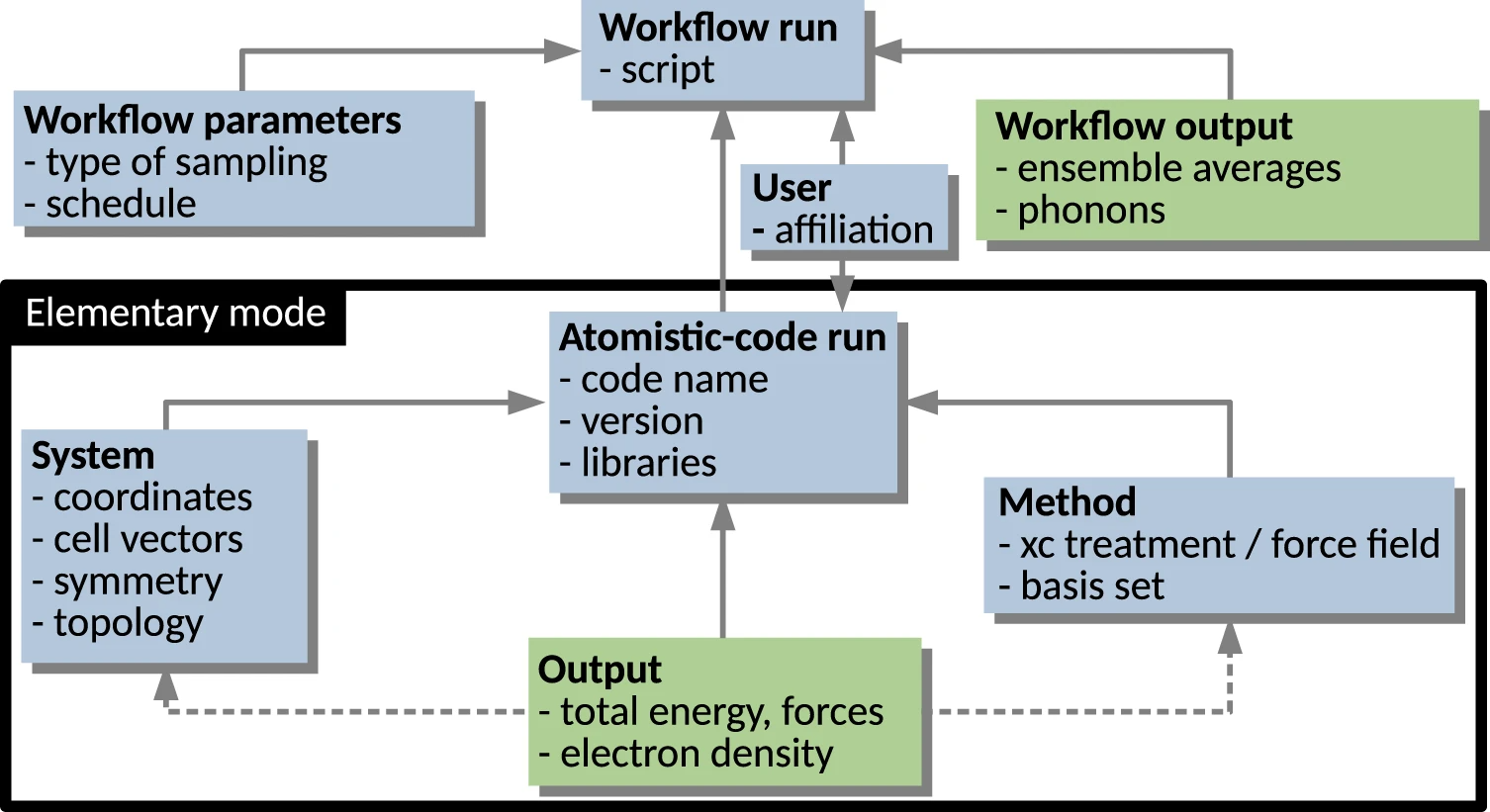

Here, we give a simplified description, graphically aided by Fig. 1, which highlights the hierarchical/modular architecture of the metadata schema. The elementary mode in which an atomistic materials science code is run (encompassed by the black rectangle) yields the computation of some observables (Output) for a given System, specified in terms of atomic species arranged by their coordinates in a box, and for a given physical model (Method), including specification of its numerical implementation. Sequences or collections of such runs are often defined via a Workflow. Examples of workflows are:

- Perturbative physical models (e.g., second-order Møller–Plesset, MP2, Green’s function based methods such as G0W0, random-phase approximation, RPA) evaluated using self-consistent solutions provided by other models (e.g., density-functional theory, DFT, Hartree-Fock method, HF) applied on the same System;

- Sampling of some desired thermodynamic ensemble by means of, e.g., molecular dynamics;

- Global- and local-minima structure searches;

- Numerical evaluations of equations of state, phonons, or elastic constants by evaluating energies, forces, and possibly other observables; and

- Scans over the compositional space for a given class of materials (high-throughput screening).


The workflows can also be nested, e.g., a scan over materials (different compositions and/or crystal structures) contains a local optimization for each material and extra calculations based on each local optimum structure such as evaluation of phonons, bulk modulus, or elastic constants, etc.
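Nesting of this kind is naturally a tree whose leaves are elementary code runs. The sketch below models a screening over two materials, each with a relaxation and a phonon sub-workflow, and collects all elementary runs by depth-first traversal. The `Workflow` and `CodeRun` classes, the labels, and the number of runs per step are hypothetical and are not the NOMAD Metainfo workflow definitions.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Union


@dataclass
class CodeRun:
    """Elementary atomistic-code run (the black rectangle in Fig. 1)."""
    label: str


@dataclass
class Workflow:
    """A named sequence of steps; each step is a code run or a sub-workflow."""
    label: str
    steps: List[Union["Workflow", CodeRun]] = field(default_factory=list)


def code_runs(node: Union[Workflow, CodeRun]) -> Iterator[CodeRun]:
    """Depth-first traversal yielding every elementary run in a (nested) workflow."""
    if isinstance(node, CodeRun):
        yield node
        return
    for step in node.steps:
        yield from code_runs(step)


def material_pipeline(material: str) -> Workflow:
    # Hypothetical sub-workflow: local optimization followed by a phonon calculation.
    return Workflow(material, [
        Workflow("relaxation", [CodeRun(f"{material}: relax 1"), CodeRun(f"{material}: relax 2")]),
        Workflow("phonons", [CodeRun(f"{material}: displaced cell 1")]),
    ])


screening = Workflow("screening", [material_pipeline(m) for m in ("Si", "Ge")])
print([run.label for run in code_runs(screening)])
```

Since sub-workflows and runs share one recursive type, a workflow of arbitrary depth can be stored and traversed with the same code.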

The NOMAD Metainfo organizes metadata into sections, which are represented in Fig. 1 by the labeled boxes. Sections are a type of metadata that group other metadata, e.g., other sections or quantity-type metadata. The latter are metadata related to scalars, tensors, and strings, which represent the physical quantities resulting from calculations or measurements. In a relational database model, the sections would correspond to tables, where the data objects would be the rows and the quantity-type metadata the columns. In its simplest realization, a metadata schema is a key-value dictionary, where the key is a name identifying a given metadata item. In NOMAD Metainfo, similarly to CML, the key is a complex entity grouping several attributes. Each item in NOMAD Metainfo has attributes, starting with its name, a string that must be globally unique, well defined, intuitive, and as short as possible. Other attributes are the human-understandable description, which clarifies the meaning of the item; the parent section, i.e., the section the item belongs to; and the type, i.e., whether the item is, e.g., a section or a quantity. Another possible type, the category type, will be discussed below. For quantity-type metadata, other important attributes are the physical units; the shape, i.e., the dimensions (scalar, vector of a certain length, matrix with a certain number of rows and columns, etc.); and the allowed values, for metadata that admit only a discrete and finite set of values.

All definitions in the NOMAD Metainfo have the following attributes:

- A globally unique qualified name;

- Human-readable/interpretable description and expected format (e.g., scalar, string of a given length, array of given size);

- Allowed values;

- Provenance, which is realized in terms of a hierarchical and modular schema, where each data object is linked to all the metadata that concur to its definition. Related to provenance, an important aspect of NOMAD Metainfo is its extensibility. It stems from the recognition that reproducibility is an empirical concept, thus at any time, new, previously unknown or disregarded metadata may be recognized as necessary. The metadata schema must be ready to accommodate such extensions seamlessly.
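The attributes listed above can be sketched as a small definition type together with a validator that checks a value against the declared shape and allowed values. `QuantityDef`, `is_valid`, and the two example definitions (`lattice_vectors`, `spin_treatment`, with their units and allowed values) are hypothetical illustrations, not the actual NOMAD Metainfo classes or item names.

```python
from dataclasses import dataclass
from typing import Any, Optional, Tuple


@dataclass(frozen=True)
class QuantityDef:
    """Hypothetical quantity-type definition carrying the attributes listed above."""
    name: str                       # globally unique
    description: str                # human-readable meaning
    parent_section: str             # section the quantity belongs to
    unit: Optional[str] = None
    shape: Tuple[int, ...] = ()     # () scalar, (3,) vector, (3, 3) matrix
    allowed_values: Optional[Tuple[str, ...]] = None


def shape_of(value: Any) -> Tuple[int, ...]:
    """Shape of a (rectangular) nested list; strings and numbers are scalars."""
    if isinstance(value, (list, tuple)):
        return (len(value),) + (shape_of(value[0]) if value else ())
    return ()


def is_valid(defn: QuantityDef, value: Any) -> bool:
    """Check a value against the allowed values (if any) and the declared shape."""
    if defn.allowed_values is not None and value not in defn.allowed_values:
        return False
    return shape_of(value) == defn.shape


lattice_vectors = QuantityDef(
    "lattice_vectors", "Vectors spanning the simulation cell.", "System",
    unit="angstrom", shape=(3, 3))
spin_treatment = QuantityDef(
    "spin_treatment", "How electron spin is handled.", "Method",
    allowed_values=("restricted", "unrestricted"))

print(is_valid(lattice_vectors, [[5.43, 0, 0], [0, 5.43, 0], [0, 0, 5.43]]))
print(is_valid(spin_treatment, "collinear"))
```

Because the definition is data rather than code, adding a new quantity (the extensibility point above) means adding a new `QuantityDef`, with no change to the validator.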

The representation in Fig. 1 is very simplified for tutorial purposes. For instance, a workflow can be arbitrarily complex; in particular, it may contain a hierarchy of sub-workflows. In the currently released version of the NOMAD Metainfo, the elementary-code-run modality is fully supported, i.e., ideally all the information contained in a code run is mapped onto the metadata schema. However, the workflow modality is still under development. An important implication of the hierarchical schema is that any (complex) workflow can be mapped onto it, so that all the information obtained in its steps is stored. This mapping is performed by parsers, which the NOMAD team has written for each supported simulation code. One of the outcomes of the parsing is the assignment of a PID to each parsed data object, which allows it to be located, e.g., via a URI.

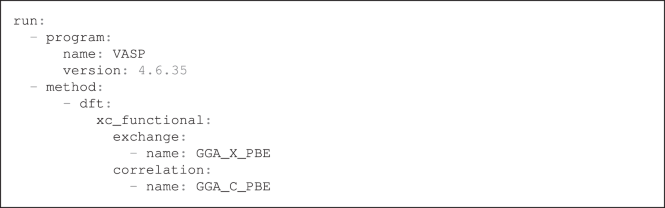

The NOMAD Metainfo is inspired by the CML, in particular in being hierarchical/modular. Each instance of a metadata-schema is uniquely identified, so that it can be associated with a URI for its convenient accessibility. An instance of a metadata schema can be generated by using a dedicated parser by pairing each parsed value with its corresponding metadata label. As an example, in Listing 1, we show a portion of the YAML file (see section “File Formats”) instantiating Metainfo for a specific entry of the NOMAD Archive. This entry can be searched by typing “entry_id = zvUhEDeW43JQjEHOdvmy8pRu-GEq” in the search bar at https://nomad-lab.eu/prod/v1/gui/search/entries. In Listing 1, key-value pairs are visible, as well as the nested-section structuring.


The modularity and uniqueness together allow for a straightforward extensibility, including customization, i.e., introduction of metadata-schema items that do not need to be shared among all users, but may be used by a smaller subset of users, without conflicts.

In Fig. 1, the solid arrows stand for the relationship “is contained in” between section-type metadata. A few examples of quantity-type metadata are listed in each box/section; such metadata are also in an “is contained in” relationship with the section they are listed in. The dashed arrows symbolize the relationship “has reference in.” For example, the quantity-type metadata contained in an Output section are evaluated for a given system, described in a System section, and for a given physical model, described in a Method section. So, the Output section contains a reference to the specific System and Method sections holding the necessary input information. At the same time, the Output section is contained in a given Atomistic-code run section. These relationships among metadata already build a basic ontology, induced by the way computational data are produced in practice, by means of workflows and code runs. This aspect will be reexamined in the later Section “Outlook on ontologies in materials science.”
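The two relationships can be sketched with plain containment (lists owned by the run) and references (object links to sections that already exist in the run). The class and field names below only loosely follow Fig. 1 and are hypothetical. The zero energies are placeholders, not computed results.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class System:
    species: List[str]
    positions: List[List[float]]


@dataclass
class Method:
    xc_functional: str


@dataclass
class Output:
    total_energy: float
    system: System    # "has reference in": points to a System already in the run
    method: Method    # "has reference in": points to a Method already in the run


@dataclass
class Run:
    program_name: str
    # Sections that are "contained in" the run:
    systems: List[System] = field(default_factory=list)
    methods: List[Method] = field(default_factory=list)
    outputs: List[Output] = field(default_factory=list)


run = Run("hypothetical-code")
h2 = System(["H", "H"], [[0.0, 0.0, 0.0], [0.0, 0.0, 0.74]])
run.systems.append(h2)
run.methods += [Method("LDA"), Method("PBE")]
# One geometry, two physical models: both outputs reference the same System.
# The energies are placeholders, not computed results.
for method in run.methods:
    run.outputs.append(Output(total_energy=0.0, system=h2, method=method))
```

Storing references instead of copies means that an input shared by several outputs is recorded once, and every output still points unambiguously to the inputs that determined it.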

We now come to the category-type metadata, which allow complementary, arbitrarily complex ontologies to be built starting from the same metadata. They define a concept, such as “energy” or “energy component,” in order to specify that a given quantity-type metadata item has a certain meaning, be it physical (such as “energy”), computer-hardware related, or administrative. To this end, each section and quantity-type metadata item is related to a category-type metadata item by means of an is-a relationship. Each category-type metadata item can in turn be related to another category-type item by means of the same is-a relationship, thus building another ontology on the metadata. This ontology can be connected with top-down ontologies such as EMMO [42] (see Section “Outlook on ontologies in materials science” for a short description of EMMO).
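A minimal sketch of how is-a links support queries: following the links transitively answers questions such as "is this quantity an energy?" even when the link is indirect. The item and category names in `IS_A` are hypothetical. Parents are stored as sets so that an item can belong to several categories, which makes the structure a directed acyclic graph rather than a strict tree.

```python
from typing import Dict, Set

# Hypothetical "is-a" links from a metadata item (or category) to its parent categories.
IS_A: Dict[str, Set[str]] = {
    "energy_total": {"energy_value"},
    "energy_xc": {"energy_component"},
    "energy_component": {"energy_value"},
    "energy_value": {"physical_quantity"},
    "time_run_wall": {"computer_resource"},
}


def ancestors(term: str, graph: Dict[str, Set[str]] = IS_A) -> Set[str]:
    """All categories reachable from `term` by following is-a links."""
    seen: Set[str] = set()
    stack = [term]
    while stack:
        for parent in graph.get(stack.pop(), set()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


def is_a(term: str, category: str) -> bool:
    return category in ancestors(term)


print(sorted(ancestors("energy_xc")))
```

Connecting to an external ontology would then amount to adding links from these categories to the external concepts.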

The current version of NOMAD Metainfo includes more than 400 metadata-schema items. More specifically, these are the common metadata, i.e., those that are code-independent. Hundreds more metadata are code-specific, i.e., mapping pieces of information in the codes’ input/output that are specific to a given code and not transferable to other codes. The NOMAD Metainfo can be browsed at https://nomad-lab.eu/prod/v1/gui/analyze/metainfo.

To summarize, the NOMAD Metainfo addresses the FAIR data principles in the following sense:

- Findability is enabled by unique names and a human-understandable description;

- Accessibility is enabled by the PID assigned to each metadata-schema item, which can be accessed via a RESTful [43] API (i.e., an API supporting access via web services through common protocols, such as HTTP) developed specifically for the NOMAD Metainfo. Essentially all NOMAD data are open access, and users who wish to search and download data do not need to identify themselves; they only need to accept the CC BY license. Uploaders can opt for an embargo, during which the data are shared only with a selected group of colleagues.

- Interoperability is enabled by the extensibility of the schema and the category-type metadata, which can be linked to existing and future ontologies (see Section “Outlook on ontologies in materials science”).

- Reusability/Repurposability/Recyclability is enabled by the modular/hierarchical structure that allows for accessing calculations at different abstraction scales, from the single observables in a code run to a whole complex workflow (see Section “Metadata for Computational Workflows”).

The usefulness and versatility of a metadata schema are demonstrated by the multiple access modalities it allows for. The NOMAD Metainfo schema is the basis of the whole NOMAD Laboratory infrastructure, which supports access to all the data in the NOMAD Archive via the NOMAD API (an implementation of the OPTIMADE API [41] is also supported). This API powers three different access modes of the Archive: the Browser [44], which allows searches for single calculations or groups of calculations; the Encyclopedia [45], which displays the content of the Archive organized by materials; and the Artificial-Intelligence (AI) Toolkit [46,47,48], which combines, in Jupyter notebooks, script-based queries with AI (machine learning [ML], data mining) analyses of the filtered data. All three services are accessible via a web browser running the dedicated GUI offered by NOMAD.

Metadata for ground-state electronic-structure calculations

By ground-state calculations, we mean calculations of the electronic structure—e.g., eigenvalues and eigenfunctions of the single-particle Kohn-Sham equations, the electron density, the total energy and possibly its derivatives (forces, force constants)—for a fixed configuration of nuclei. This refers to a point located on the Born-Oppenheimer potential-energy surface, and is a necessary step in geometry optimization, molecular dynamics, the computation of vibrational (phonon) spectra or elastic constants, and more. Thus, ground-state calculations represent the most common task in computational materials science, and the involved approximations are relatively well established. For this reason, they are already extensively covered by the NOMAD Metainfo. Nevertheless, some challenges in defining metadata for such calculations still remain, as discussed below. In particular, density-functional theory (DFT) is the workhorse approach for the great majority of ground-state calculations in materials science. Highly accurate quantum-chemistry models are more computationally expensive than DFT and their use in applications is less widespread. However, they can provide accurate benchmark references for DFT, making high-quality quantum-chemical data essential also for DFT-based studies. Below we analyze the ground-state electronic structure calculations mainly in reference to DFT, but most of the stated principles are also valid for quantum-chemical calculations. A detailed discussion of the latter is deferred to Section “Quantum-chemistry methods.”

Approximations to the DFT exchange-correlation functional

Approximations to the DFT exchange-correlation (xc) functional are identified by a name or acronym (e.g., “PBE”), although this identification is sometimes neither unique nor complete. As metadata, we suggest using the identifiers of the Libxc library [49,50], which contains the largest collection of xc functionals. To be both human- and computer-friendly, each Libxc identifier consists of a human-readable string with a unique integer associated with it. Often, this identification needs some refinement, because xc functionals typically depend on a set of parameters that may be modified for a given calculation. There is therefore a need to standardize the way in which such parameters are referenced. Just as the Libxc identifiers can be used for the functionals themselves, the Libxc naming scheme can be used for their internal parameters. Code developers have to ensure that this information is contained in the respective input and/or output files. As Libxc provides version numbers of the xc functionals, it is important that this information is also available.
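A minimal sketch of how such an xc record could be represented, assuming a Python representation of the metadata. The three name/number pairs are Libxc identifiers (Slater exchange, PBE exchange, PBE correlation); the class and function names, the record layout, and the version string are hypothetical and serve only to show the idea of pairing names, numbers, modified parameters, and the Libxc version.

```python
from dataclasses import dataclass, field

# Small excerpt of Libxc name/number pairs, for illustration only;
# the authoritative table is distributed with Libxc itself.
LIBXC_IDS = {"LDA_X": 1, "GGA_X_PBE": 101, "GGA_C_PBE": 130}

@dataclass
class XCComponent:
    name: str    # human-readable Libxc identifier
    number: int  # integer that Libxc associates with the name
    # Non-default internal parameters, keyed by their Libxc names.
    parameters: dict = field(default_factory=dict)

    def __post_init__(self):
        # Reject records whose name and number disagree (when the name is known).
        known = LIBXC_IDS.get(self.name)
        if known is not None and known != self.number:
            raise ValueError(f"{self.name} has Libxc number {known}, not {self.number}")

def xc_metadata(components, libxc_version):
    """Metadata record for an xc approximation assembled from Libxc components."""
    return {
        "libxc_version": libxc_version,
        "components": [
            {"name": c.name, "number": c.number, "parameters": dict(c.parameters)}
            for c in components
        ],
    }

pbe = xc_metadata([XCComponent("GGA_X_PBE", 101), XCComponent("GGA_C_PBE", 130)],
                  libxc_version="6.2.2")  # illustrative version string
```

A calculation with a modified functional would carry the changed values in `parameters`, e.g. `XCComponent("GGA_X_PBE", 101, {"_kappa": 1.245})`; the parameter name `_kappa` is an assumption here and should be taken from Libxc's own naming.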

Basis sets

Complete and unambiguous specification of the basis set is crucial for judging the precision of a calculation. Ground-state calculations should include full information about the basis sets used, including a DOI by which the basis can be referenced. The use of basis-set repositories, such as the Basis Set Exchange [51], is therefore strongly recommended.

Basis sets can be coarsely divided into two classes, namely atom-position-dependent (atom-centered, bond-centered) and cell-dependent (such as plane waves) ones. A combination of both is also possible, as realized, e.g., in augmented-plane-wave or projector-augmented-wave methods. For an atom-centered basis, the list of centers needs to be provided; these may even include positions where no atomic nucleus is located. The NOMAD Metainfo contains a rather complete set of metadata to describe atom-centered basis sets. A more complete description of cell-dependent basis sets can be found in the ESCDF, which is planned to be merged with the NOMAD Metainfo.
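The difference between the two classes shows up directly in what determines the basis size. In the sketch below (hypothetical helper names), an atom-centered basis grows with the list of centers, ghost centers included, while the number of plane waves depends only on the cell volume and the cut-off, via the standard estimate N ≈ V·G_max³/(6π²) in Hartree atomic units. The per-element counts assume cc-pVDZ with spherical d functions and are used only for illustration.

```python
import math

# Assumed number of contracted functions per element (cc-pVDZ, spherical d).
CC_PVDZ_FUNCTIONS = {"H": 5, "O": 14}

def atom_centered_basis_size(centers, functions_per_element):
    """Count the functions of an atom-centered basis. Each center is a tuple
    (element, position, is_ghost); ghost centers carry basis functions but no nucleus."""
    return sum(functions_per_element[element] for element, _pos, _ghost in centers)

def plane_wave_basis_size(cell_volume, ecut):
    """Approximate number of plane waves with |G|^2 / 2 <= ecut (Hartree atomic units:
    volume in bohr^3, ecut in hartree). Depends on the cell, not on atomic positions."""
    g_max = math.sqrt(2.0 * ecut)
    return cell_volume * g_max ** 3 / (6.0 * math.pi ** 2)

water = [("O", (0.0, 0.0, 0.0), False),
         ("H", (0.0, 0.76, 0.59), False),
         ("H", (0.0, -0.76, 0.59), False)]
print(atom_centered_basis_size(water, CC_PVDZ_FUNCTIONS))                  # 24
print(atom_centered_basis_size(water + [("H", (0.0, 0.0, 2.0), True)],
                               CC_PVDZ_FUNCTIONS))                         # 29, with a ghost center
```

This is why the metadata for the two classes differ: the list of centers is essential for the former, and the cell and cut-off for the latter.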

Energy reference

To enable interoperability and reusability of energies computed with different electronic-structure methods, it is necessary to define a “general energy zero.” An analysis of this problem and some ideas on how to tackle it were already discussed by some of us in previous work [26]. The following is a further attempt to advance and systematize ideas and solutions.

The problem of comparing energies is not restricted to computational materials science and chemistry. It also arises in experimental chemistry, where, for instance, only enthalpy differences can be measured, not absolute values. To solve this, chemists have defined a reference state for each element, called the “standard state,” which is the element in its most stable form under standard conditions, while the enthalpy of formation measures the change from the elements to the compound. In computational materials science and chemistry, we can adopt a similar approach: for each element, we define a reference system as the zero of the energy scale. To do so, we introduce the following definitions:

- A system is a defined set of one or more atoms with a given geometry and, if periodic, a given unit cell. It can be an atom, a molecule, a periodic crystal, etc. If relevant, the charge, the spin state, or the magnetic ordering must also be specified.

- A reference system is a well-defined system to which other systems are compared.

- A calculated energy is the energy obtained by a numerical simulation of a system with given input data and parameters, which define the Hamiltonian (e.g., the DFT xc-functional approximation) or the many-electron model (e.g., Hartree-Fock, MP2, or coupled-cluster singles, doubles, and perturbative triples, CCSD(T)), the basis set, and the numerical parameters.

Whether the reference system is an atom, an element in its natural form, a molecule, or some other system does not matter, as long as it is well defined. Defining the reference by atoms requires specifying how the orbitals are occupied, whether the atom is spherical, spin-polarized, etc. For each computational method and set of numerical settings, the energy per atom of the reference system must be calculated. The standard energy is then obtained by subtracting these per-atom values, multiplied by the number of atoms of each element, from the calculated total energy. For example, to determine the energy of formation of a molecule like H2O or a crystal like SiC, we calculate the total-energy differences $E_{\mathrm{H_2O}} - E_{\mathrm{H_2}} - \tfrac{1}{2}E_{\mathrm{O_2}}$ or $E_{\mathrm{SiC}} - E_{\mathrm{Si}} - E_{\mathrm{C}}$, respectively. Here, H2 and O2 are isolated, neutral molecules, while Si and C are free, neutral atoms. However, using the energy per atom of Si and C in their crystalline ground-state structures would be an option as well. We propose to tabulate the reference energies for the most common computational methods, so that they can be applied without further computations, preferably automatically by the codes themselves.
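The bookkeeping behind tabulated reference energies can be sketched in a few lines, assuming a table keyed by a method identifier. The method name "method-A" and all energies below are placeholders, not results of real calculations; the point is that a standard energy may only combine a total energy with reference energies obtained with the same method.

```python
def tabulate(reference_systems):
    """Per-atom reference energies: element -> E(reference system) / number of atoms."""
    return {element: e_total / n_atoms
            for element, (e_total, n_atoms) in reference_systems.items()}

# Placeholder values (eV) for a hypothetical method "method-A":
# H from an isolated H2 molecule, O from an isolated O2 molecule.
REFERENCE_ENERGIES = {"method-A": tabulate({"H": (-6.8, 2), "O": (-9.8, 2)})}

def standard_energy(e_total, composition, method, table=REFERENCE_ENERGIES):
    """Subtract the reference energies of the *same* method, each multiplied by the
    number of atoms of that element, from the calculated total energy."""
    refs = table[method]  # KeyError if nothing is tabulated for this method
    return e_total - sum(n * refs[element] for element, n in composition.items())

# For H2O this reproduces E(H2O) - E(H2) - E(O2)/2 with the placeholder numbers:
print(standard_energy(-14.2, {"H": 2, "O": 1}, "method-A"))  # approximately -2.5
```

Raising an error for an untabulated method, instead of silently falling back to another table, reflects the requirement that reference and target energies come from identical computational settings.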

Finally, we need to define what is meant by a computational method. The Hamiltonian and the DFT functional are clearly part of the definition, as are the basis set and the potential shape (including pseudopotentials (PPs) and effective core potentials). The specific implementation may also be relevant: different Gaussian-based molecular-orbital codes may give the same energy for an identical setup (see Section “Quantum-chemistry methods”), while plane-wave DFT codes may not.

One factor here is the choice of the PP. Irrespective of the method used, the computational settings determine the quality of a calculation. The most decisive setting is the basis-set cut-off; for a plane-wave basis, convergence with respect to this parameter can be checked straightforwardly. In any case, depending on the code, the method, and the details of the calculation, care must be taken to specify all adjustable parameters that significantly affect the energy when defining computational methods.
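A cut-off convergence check can be sketched as a loop that raises the cut-off until successive total energies agree within a tolerance; the converged cut-off is then one of the parameters to record. In the sketch below, `model_energy` is a hypothetical stand-in for a plane-wave calculation (an energy that decreases toward its limit as the cut-off grows), and the grid and tolerance are illustrative.

```python
import math

def converge_cutoff(energy_at, cutoffs, tol):
    """Return (cutoff, energy) for the first cutoff whose energy differs from the
    previous one by less than tol; raise if no cutoff in the list converges."""
    previous = None
    for cutoff in cutoffs:
        energy = energy_at(cutoff)
        if previous is not None and abs(energy - previous) < tol:
            return cutoff, energy
        previous = energy
    raise RuntimeError("energy not converged over the given cut-offs")

def model_energy(cutoff):
    # Hypothetical stand-in: the total energy approaches -100 eV from above
    # as the plane-wave cut-off (in eV) increases.
    return -100.0 + 5.0 * math.exp(-cutoff / 100.0)

cutoff, energy = converge_cutoff(model_energy, range(200, 1001, 50), tol=0.01)
print(cutoff, energy)  # 600 for this model
```

Note that a small difference between successive steps does not guarantee that the absolute energy is converged to the same tolerance; which criterion was used is itself worth recording.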

To tabulate standard energies, as suggested above, every computational method needs to be applied to all reference systems. This requires care in choosing the reference systems, so that the widest possible range of codes and methods is actually suited for these calculations. Some codes may not be able to constrain the occupancies of atoms or keep them spherical, which would be a problem if spherical atoms were chosen as the reference. Clearly, periodic crystals such as silicon are not suitable for molecular codes. It is possible, however, that other codes could help bridge this gap. For example, FHI-aims [52] can simulate not only crystalline systems but also atoms and molecules, and it can employ Gaussian-type-orbital (GTO) basis sets. Thus, FHI-aims can compute energy differences between atoms/molecules and crystals, and in this way it can support codes such as Gaussian16 or GAMESS [53].

Metadata for external-perturbation and excited-state electronic-structure calculations

A direct link from the DFT ground state (GS) to excitations is provided by time-dependent DFT (TDDFT). Alternatively, charged and neutral electronic excitations are described by means of Green-function approaches from many-body perturbation theory (MBPT). This route is predominantly (but not exclusively) used for the solid state, while TDDFT and quantum-chemistry approaches are typically preferred for finite systems. For strongly correlated materials, in turn, dynamical mean-field theory (DMFT) is often the methodology of choice, potentially combined with DFT and Green-function methods. Lattice excitations, if not directly treated by DFT molecular dynamics, are often handled by density-functional perturbation theory (DFPT); Green-function techniques are also used to describe their interaction with light. DFPT allows not only for the description of vibrational properties but also for treating macroscopic electric fields, applied macroscopic strains, or combinations of these. The type of perturbation is intimately related to the physical properties of interest, e.g., harmonic and anharmonic phonons, effective charges, Raman tensors, dielectric constants, hyperpolarizabilities, and many others.

Characterizing the corresponding research data is a complex task for several reasons. First, such calculations rely on an underlying ground-state calculation and thus carry along all its uncertainties. Second, the methodology for excited states is scientifically and technically more involved, as it includes the many-body effects that govern diverse interactions. The methods thus rely on various, often not fully characterized, approximations.

Diagrammatic techniques and TDDFT

The most common application of the GW approximation (G being the one-body Green's function and W the dynamically screened Coulomb interaction [1]) is the computation of quasi-particle energies, i.e., energies that describe the removal or addition of a single electron. For this, the many-body electron-electron interaction is described by a non-local, energy-dependent operator called the electronic self-energy. On the technical side, computing this object may require an additional (auxiliary) basis set, different from the one used in the ground-state calculation and coming with additional parameters. Likewise, there are various ways to perform the analytic continuation of the Green's function, as there are various ways to carry out the required frequency integration, possibly employing a plasmon-pole model as an approximation. There are also different ways to evaluate the screened Coulomb potential W. Most important is the flavor of GW, i.e., whether it is done in a single-shot manner, called G0W0, or in a self-consistent way. In the latter case, the kind of self-consistency (scf) must be specified: some type of partial scf, quasi-particle scf, or any other type meant to remedy the starting-point dependence, i.e., the dependence of the results on the xc functional of the initial DFT (or Hartree-Fock or similar) calculation used for the GS.
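One way to make these choices explicit is to encode them as controlled vocabularies rather than free text. The sketch below assumes a Python representation; the enumeration members cover the flavors named above plus the common labels evGW and scGW, and the consistency rule (numerical frequency treatments must state their grid size) is an illustrative assumption, not an established standard.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

class SelfConsistency(Enum):
    G0W0 = "G0W0"   # single shot
    EV_GW = "evGW"  # partial (eigenvalue-only) self-consistency
    QS_GW = "QSGW"  # quasi-particle self-consistency
    SC_GW = "scGW"  # full self-consistency

class FrequencyTreatment(Enum):
    PLASMON_POLE = "plasmon-pole model"
    ANALYTIC_CONTINUATION = "analytic continuation"
    CONTOUR_DEFORMATION = "contour deformation"

@dataclass
class GWMetadata:
    flavor: SelfConsistency
    frequency_treatment: FrequencyTreatment
    starting_point: str                      # xc functional (or "HF") of the GS run
    auxiliary_basis: Optional[str] = None    # if different from the GS basis
    n_frequency_points: Optional[int] = None

    def __post_init__(self):
        # Hypothetical rule: numerical frequency treatments must state their grid.
        if (self.frequency_treatment is not FrequencyTreatment.PLASMON_POLE
                and self.n_frequency_points is None):
            raise ValueError("n_frequency_points is required for "
                             f"{self.frequency_treatment.value}")

run = GWMetadata(SelfConsistency.G0W0, FrequencyTreatment.PLASMON_POLE, starting_point="PBE")
```

Recording `starting_point` explicitly, even for self-consistent flavors, keeps the starting-point dependence discussed above traceable.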

While the GW approximation is the method of choice for quasi-particle energies (and potentially also lifetimes) within the realm of MBPT, the Bethe-Salpeter equation (BSE) needs to be solved to tackle electron-hole interactions. This approach should typically be applied on top of a GW calculation, but the quasi-particle energies are often approximated by DFT results adjusted by a scissors operator, which widens the band gap to mimic the GW correction. In all cases, BSE carries along all subtleties of the underlying steps. In addition, it comes with its own issues, such as the way of screening the Coulomb interaction (this time between electron and hole), the representation of non-local operators, and the like.
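The scissors operator mentioned above amounts to a rigid upward shift of the unoccupied levels, so the shift value (and how it was obtained) is part of the metadata of any BSE calculation that uses it. A minimal sketch, assuming a spin-unpolarized set of eigenvalues at a single k-point; the function names and numbers are hypothetical.

```python
def apply_scissors(eigenvalues, n_occupied, shift):
    """Rigidly shift the unoccupied (conduction) levels up by `shift`,
    leaving the occupied levels unchanged."""
    levels = sorted(eigenvalues)
    return levels[:n_occupied] + [e + shift for e in levels[n_occupied:]]

def band_gap(levels, n_occupied):
    """Gap between the lowest unoccupied and the highest occupied level."""
    return levels[n_occupied] - levels[n_occupied - 1]

dft_levels = [0.5, -5.0, 2.0, -1.0]      # illustrative eigenvalues (eV)
shifted = apply_scissors(dft_levels, n_occupied=2, shift=0.7)
print(band_gap(sorted(dft_levels), 2), band_gap(shifted, 2))  # gap widened by the shift
```

The wave functions are left untouched by construction, which is exactly the approximation that distinguishes this shortcut from a GW-based BSE calculation.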

DMFT, a relatively young and rapidly developing field, naturally comes with a plethora of “experimental” implementations that differ in many aspects; one of the major obstacles is the vast number of possible software combinations. Some of the approaches are computationally light, allowing for the construction of model Hamiltonians based on DFT calculations; others are computationally too demanding and can be applied only to simple systems with a few orbitals; most of the methods rely on Green's functions and self-energies. Diagrammatic extensions beyond standard DMFT employ various kinds of vertex functions. Other issues concern the treatment of the Coulomb interaction, whose parameters can either be chosen empirically or be calculated from first principles.

Specific issues of TDDFT concern, first of all, the distinction between the linear-response regime and the time propagation of the electronic states in the presence of a time-dependent potential. In the former, the xc kernel plays the same role as the xc functional in the GS, raising (besides numerical precision) questions related to accuracy. For the latter, there are various ways and flavors of implementing the time-evolution operator. One can write this operator as a simple exponential or use more elaborate expressions, like the Magnus expansion or the enforced time-reversal symmetry (ETRS) scheme. To evaluate the exponential, one can employ the Crank-Nicolson scheme, a Taylor-series expansion, or Houston states. Each of these choices comes with its own approximations and, additionally, numerical issues.
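To illustrate why the choice of propagator belongs in the metadata, the sketch below applies Crank-Nicolson steps, (1 + iΔtH/2)ψ_{k+1} = (1 − iΔtH/2)ψ_k, and compares the result with the exact exponential. For simplicity it assumes a small, time-independent Hermitian matrix in place of a Kohn-Sham Hamiltonian (atomic units, ħ = 1); the step size is a numerical parameter that controls the deviation.

```python
import numpy as np

def crank_nicolson(H, psi, dt, n_steps):
    """Propagate psi with Crank-Nicolson steps under a time-independent Hermitian H."""
    identity = np.eye(H.shape[0])
    lhs = identity + 0.5j * dt * H
    rhs = identity - 0.5j * dt * H
    for _ in range(n_steps):
        psi = np.linalg.solve(lhs, rhs @ psi)
    return psi

def exact_propagation(H, psi, t):
    """Reference result exp(-i H t) psi from the eigendecomposition of H."""
    w, V = np.linalg.eigh(H)
    return V @ (np.exp(-1j * w * t) * (V.conj().T @ psi))

H = np.array([[0.0, 1.0], [1.0, 0.5]])
psi0 = np.array([1.0, 0.0], dtype=complex)
psi_cn = crank_nicolson(H, psi0, dt=0.01, n_steps=100)
print(np.linalg.norm(psi_cn), np.max(np.abs(psi_cn - exact_propagation(H, psi0, 1.0))))
```

The Crank-Nicolson step is unitary, so the norm is conserved to rounding, while the phase error grows with the time step; a different propagator would trade these properties differently.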

In summary, the variety of methods, together with the associated multitude of computational parameters, needs to be carefully reflected in the metadata schema. This is imperative not only for ensuring reproducible results but also for evaluating the accuracy of methods and commonly used approximations. Moreover, further subtleties related to the algorithms in the actual implementations in different codes require the code developers to take up this challenge.

Density-functional perturbation theory

Density-functional perturbation theory is used to obtain physical properties related to the (density) response of the system to external perturbations, such as the perturbing potential caused by atomic displacements in lattice vibrations. Also in this case, the calculation relies on a preliminary GS run, inheriting all its issues. After choosing the type of perturbation, which requires method-dependent definitions and inputs, one needs to choose the order of perturbation. The linear-response approach, which is implemented in many codes (e.g., VASP [54], octopus [55], CASTEP [56], FHI-aims [57], Quantum Espresso [58], ABINIT [59]), allows for the determination of second-order derivatives of the total energy. Some of these codes also allow for the calculation of third-order derivatives, e.g., for anharmonic vibrational effects. The variation of the Kohn-Sham orbitals can be obtained from the Sternheimer equation, for which different solution methods are used (iterative methods, direct linearization, integral formulation).
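The structure of the Sternheimer approach can be sketched on a toy, non-self-consistent model: for H(λ) = H₀ + λV, the orbital response is obtained from the projected equation (H₀ − ε_n + αP)Δψ_n = −(1 − P)Vψ_n, where P projects onto the occupied subspace and the shift α only keeps the occupied block non-singular. The second derivative of the sum of occupied eigenvalues then follows as 2 Σ_n Re⟨ψ_n|V|Δψ_n⟩. The matrices are hypothetical, and a direct linear solve stands in for the iterative solvers used in real DFPT codes.

```python
import numpy as np

def sternheimer_response(H0, V, n_occ):
    """First-order change of the n_occ lowest eigenvectors of H0 + lam*V at lam = 0,
    from the projected Sternheimer equation (toy model, no self-consistency)."""
    eps, C = np.linalg.eigh(H0)
    occ = C[:, :n_occ]
    identity = np.eye(H0.shape[0])
    P = occ @ occ.conj().T                    # projector onto occupied states
    shift = eps[n_occ - 1] - eps[0] + 1.0     # keeps the occupied block non-singular
    dpsi = np.empty_like(occ)
    for n in range(n_occ):
        lhs = H0 - eps[n] * identity + shift * P
        dpsi[:, n] = np.linalg.solve(lhs, -(identity - P) @ V @ occ[:, n])
    return occ, dpsi

def second_derivative(V, occ, dpsi):
    """d^2/dlam^2 of the sum of occupied eigenvalues: 2 * sum_n Re <psi_n|V|dpsi_n>."""
    return 2.0 * np.real(np.sum(occ.conj() * (V @ dpsi)))

def band_energy(H, n_occ):
    """Sum of the n_occ lowest eigenvalues, used for a finite-difference check."""
    return np.sum(np.linalg.eigvalsh(H)[:n_occ])
```

A finite-difference check of `band_energy` along λ reproduces `second_derivative` in this model; in a real calculation, the chosen solver and its convergence threshold are among the parameters worth recording.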

Quantum-chemistry methods

Quantum chemistry offers several methodological hierarchies for calculating quantities related to excited states, such as excitation energies, transition moments, ionization potentials, etc. Because high-quality methods are computationally intensive, without additional approximations they can be applied only to relatively small molecular systems.

Among the standard quantum-chemical approaches that can be routinely applied to study excited states of small to medium-sized molecules, one can distinguish two large groups: single-reference and multi-reference methods. The single-reference coupled-cluster (CC) hierarchy for excited states can be formulated in terms of the so-called equation-of-motion approach or time-dependent linear response.

Generally, for well-behaved closed-shell molecules, single-reference quantum-chemical methods can be used as a black box. The formalisms of the MPn and CC models are uniquely defined and well documented. The GTO basis sets from the standard families (Pople, Dunning, etc.) are also uniquely defined by their acronyms. In practical implementations of these methods, various thresholds are of course introduced for prescreening, convergence, etc., but their default values are routinely set very conservatively to guarantee sub-microhartree precision of the final total energies. Problems might, however, arise from the iterative character of most of these techniques, as convergence to a certain state (in the ground-state and/or excited-state parts of the calculation) depends on the starting guess, the preconditioner, possible level shifts, the type of convergence accelerator, etc. Unfortunately, the parameters that control convergence are often not sufficiently well documented and might not be found in the output. Such problems mainly occur in open-shell cases (note that in Δ methods, where excitation or ionization energies are obtained as total-energy differences, at least one of the calculations has to involve an open-shell system). Sometimes a cross-check between several codes becomes essential to detect convergence failures.

For larger systems, where approximate CC models are used, the importance of the involved tolerances and underlying protocols increases substantially. The approximations can include, for example, density fitting, local approximations, the Laplace transform, and others. Important parameters here are the auxiliary basis set, the fitting metric, the type of fitting (local or non-local) and, if local, how the fit domains are determined, etc. The results of calculations that use local correlation techniques are influenced by the choice of the virtual space and the corresponding truncation protocols and tolerances, by the pair hierarchies and the corresponding approximations for the CC terms, etc. For Laplace-transform-based methods, the details of the numerical quadrature matter. Unfortunately, these subtleties are very specific and technical and, even if given in the output, can hardly be properly understood and analyzed by non-specialists who are not involved in the development of the related methods. Therefore, the protocols behind the approximations are usually automated, and the defaults are chosen such that, for certain (benchmark) sets of systems, the deviations in the energy are substantially smaller than the expected error of the method itself (e.g., 0.01 eV for the excitation energy). However, for these methods, additional benchmarks and cross-checks between different programs and approaches would be very important.
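Why the quadrature details matter can be seen from the identity behind Laplace-transform methods, 1/x = ∫₀^∞ e^(−xt) dt, which is replaced by a finite sum Σ_k w_k e^(−x t_k) over energy denominators x. The sketch below builds nodes and weights with a simple trapezoidal rule after substituting t = e^u; this is an assumption chosen for transparency, not the optimized (e.g., minimax) quadratures used in production codes. The number of points and the integration range are exactly the kind of parameters that should be recorded.

```python
import numpy as np

def laplace_quadrature(n_points, u_min=-12.0, u_max=3.5):
    """Nodes t_k and weights w_k with 1/x ≈ sum_k w_k exp(-x t_k) for x > 0,
    from the trapezoidal rule applied after the substitution t = exp(u)."""
    u, h = np.linspace(u_min, u_max, n_points, retstep=True)
    t = np.exp(u)
    w = h * t          # dt = exp(u) du
    w[0] *= 0.5        # trapezoidal end-point weights
    w[-1] *= 0.5
    return t, w

def max_relative_error(t, w, denominators):
    """Largest relative error of the quadrature over a set of energy denominators."""
    x = np.asarray(denominators, dtype=float)
    approx = np.exp(-np.outer(x, t)) @ w
    return np.max(np.abs(approx * x - 1.0))

x = np.linspace(1.0, 10.0, 50)   # illustrative range of denominators (hartree)
for n in (8, 16, 40):
    print(n, max_relative_error(*laplace_quadrature(n), x))
```

With few points the error is large, and it drops rapidly as points are added until it is limited by the truncated integration range.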

Multi-reference methods come in quite a number of flavors, the most widely used being complete active-space self-consistent field (CASSCF), complete active-space second-order perturbation theory (CASPT2), and multi-reference configuration interaction (MRCI). For difficult cases (e.g., strongly correlated systems), these methods might remain the only option to obtain qualitatively and quantitatively correct results. Unfortunately, compared to single-reference methods, they are computationally expensive and much less of a black box. First of all, for each calculation one has to specify the active space or active spaces, and the results may depend dramatically on this choice. Furthermore, the underlying theory is not always uniquely defined by the acronym used. For example, different formulations of CASPT2, MRCI, or other theories are not mutually equivalent, depending on whether and how much internal contraction is used, and additional approximations that neglect certain terms (e.g., many-electron density matrices) can be invoked implicitly. Besides, certain deficiencies of these methods, such as the lack of size consistency in MRCI or intruder states in CASPT2, are often corrected by additional (sometimes empirical) schemes, which again are not always fully specified. All this makes the interpretation of deviations in results and cross-checks of these methods less conclusive.

To summarize, quantum-chemical methods offer an excellent toolbox for accurate ab initio calculations for molecules (especially small and medium-sized ones). However, severe issues concerning reproducibility and replicability remain, in particular for extended and/or open-shell systems. This calls for a more detailed specification of the implemented techniques by the developers (for example, through a better design of the outputs) and a thorough analysis and documentation of the employed methods and parameters by the users. A possible strategy for addressing these issues would be two-fold:

- Promoting the compliance of the developed software with the FAIR principles for software [60,61], which include the recommendations to publish the software in a repository with version control, to provide a well-defined license, to register the code in a community registry, to assign a PID to each version, and to enable its proper citation [62,63]. Reproducibility can be enhanced by publishing software code under a Free/Libre Open-Source Software (FLOSS) [64,65] license and by documenting the computational environment (hardware, operating-system version, and the computational framework and libraries used, if any); and

- Creating well-defined benchmark datasets.

Interoperability among different implementations of (nominally) the same theoretical model can be assessed by quantitatively comparing, across different codes (including different versions thereof), a set of properties computed for an agreed-upon set of materials. Such datasets would obviously need to be stored in a FAIR-compliant fashion. A large community-based effort in this direction is being carried out in the DFT community [66], while in the many-body-theory community, implementation of this idea is only just beginning [67].
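Part of the documentation of the computational environment recommended above can be collected automatically. The sketch below uses only the Python standard library (`platform`, `sys`, `importlib.metadata`); the function name and the record layout are hypothetical, and a real record would add, e.g., compiler, MPI, and numerical-library versions of the simulation code itself.

```python
import platform
import sys
from importlib import metadata

def computation_environment(packages=()):
    """Collect basic facts about the environment in which a calculation ran."""
    env = {
        "os": platform.system(),
        "os_release": platform.release(),
        "machine": platform.machine(),
        "processor": platform.processor(),
        "python": sys.version.split()[0],
        "packages": {},
    }
    for name in packages:
        try:
            env["packages"][name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            env["packages"][name] = None  # not installed in this environment
    return env

print(computation_environment(["numpy"]))
```

Storing such a record next to each result costs little and makes later discrepancies between code versions or platforms easier to trace.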

Metadata for potential-energy sampling

Molecular dynamics (MD) simulations model the time evolution of a system. They employ either ab initio calculated forces and energies (aiMD) or molecular mechanics (MM), i.e., forces and energies defined through empirical atomistic or coarse-grained potentials. FAIR storage and sharing of their inputs and outputs come with a number of specific challenges compared to single-point electronic-structure calculations.

Conceptually, aiMD and MM are similar, as a sequence of system configurations is evolved at discrete time steps. Positions, velocities, and forces at a given time step are used to evaluate the positions and velocities, and hence the forces, in the new configuration, and so on. In practice, MM simulations are orders of magnitude faster than aiMD, enabling much longer time scales and/or much larger system sizes. Even though the trend towards massive parallelization will soon enable aiMD to handle system sizes comparable to today's standards for MM simulations, the latter will probably always allow for larger systems. However, with machine-learned potentials and active-learning techniques for their training, aiMD and MM may grow together in the future.
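The common structure of aiMD and MM can be illustrated with the velocity-Verlet integrator, one widely used scheme (not the only one). The force is passed in as a function, so the same loop works whether forces come from an electronic-structure code or from a force field. The harmonic force in the usage example is a hypothetical stand-in.

```python
import numpy as np

def velocity_verlet(x, v, force, mass, dt, n_steps):
    """Propagate positions x and velocities v with the velocity-Verlet scheme.
    `force(x)` may come from an ab initio code (aiMD) or a force field (MM)."""
    f = force(x)
    trajectory = [x.copy()]
    for _ in range(n_steps):
        v = v + 0.5 * dt * f / mass   # half-step velocity update
        x = x + dt * v                # full-step position update
        f = force(x)                  # forces in the new configuration
        v = v + 0.5 * dt * f / mass   # second half-step velocity update
        trajectory.append(x.copy())
    return x, v, trajectory

# Hypothetical test system: one particle in a harmonic well (k = m = 1).
x, v, traj = velocity_verlet(np.array([1.0]), np.array([0.0]),
                             force=lambda q: -q, mass=1.0, dt=0.01, n_steps=1000)
print(0.5 * v[0] ** 2 + 0.5 * x[0] ** 2)  # total energy stays close to 0.5
```

The time step, the integrator, and the source of the forces are all inputs that the metadata of an MD run needs to capture, and the stored `trajectory` is what makes the data volume grow with system size and simulation length.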

In this Section, we focus on challenges more specific to MM simulations, having in mind large length scales, long time scales, and complex phase-space-exploration algorithms and workflows. They can be summarized as follows:

- (i) In many cases, the investigated systems feature thousands of atoms with complex short- and long-range order and disorder, e.g., when describing microstructural evolution such as crack propagation. This requires large, complex simulation cells with a range of chemical species, which need to be correctly described and categorized.

- (ii) Force fields exist in a wide variety of flavors that require proper classification. On top of that, they allow for fine-grained tuning of the interactions, even for individual atoms. Faithfully representing complex force fields thus also requires capturing the chemical-bonding topology, which is often needed to define the actual interactions.

- (iii) The large length and long time scales currently come with a multitude of simulation protocols, which use specific boundary conditions, thermostats, constraints, integrators, etc. The various approaches allow additional observables to be computed as statistical averages or correlations. Representing these properties implies the need to efficiently store and access large volumes of data, e.g., trajectories, including positions, and possibly also velocities and forces, for each atom at each time step.

For the purpose of illustration, we start by identifying some typical use cases, then describe what is currently implemented in the NOMAD infrastructure and what is missing. The examples we adopt fall into two classes: (i) high-throughput simulations of individually simple systems (1,000–10,000 particles), where the value of sharing comes from the ability to run analyses across many variants of, e.g., chemical composition or force field; and (ii) sporadic simulations of very large systems or very long time scales, which cannot readily be repeated by other researchers and are thus individually valuable to share. Examples of the first class could be MD simulations in the NVT ensemble of liquid butane or bulk silicon, using well-defined standard force fields (e.g., CHARMM or Stillinger-Weber). Quantities of interest are typically computed during MD simulations (e.g., liquid densities). For flexibility, full trajectory files should also be stored, but some important observables might be worth precomputing (e.g., radial distribution functions). The second class could include multi-billion-atom MD simulations of dislocation formation [68] or solidification [69,70], or very long time-scale simulations of protein folding [71]. For more complex use cases, the current infrastructure, as discussed in Section “Towards FAIR metadata schemas for computational materials science,” is not yet sufficient. The challenges to be addressed are the need for support of (i) complex, heterogeneous, possibly multi-resolution systems; (ii) custom force fields; (iii) advanced sampling; (iv) classes of sampling besides MD (e.g., Monte Carlo, global structure prediction/search); and (v) larger simulations (i.e., the need for sparsification of the stored data with minimal loss of information).
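As an example of an observable worth precomputing and storing alongside a trajectory, the sketch below computes the radial distribution function g(r) for identical particles in a cubic periodic box, using the minimum-image convention. It assumes NumPy arrays of shape (N, 3) per frame and `r_max` at most half the box length; the function name is hypothetical.

```python
import numpy as np

def radial_distribution(frames, box_length, r_max, n_bins):
    """g(r) averaged over frames for N identical particles in a cubic periodic box."""
    edges = np.linspace(0.0, r_max, n_bins + 1)
    counts = np.zeros(n_bins)
    n_atoms = frames[0].shape[0]
    for pos in frames:
        d = pos[:, None, :] - pos[None, :, :]
        d -= box_length * np.round(d / box_length)          # minimum-image convention
        r = np.sqrt((d ** 2).sum(axis=-1))[np.triu_indices(n_atoms, k=1)]
        counts += np.histogram(r, bins=edges)[0]           # each pair counted once
    density = n_atoms / box_length ** 3
    shell_volumes = 4.0 / 3.0 * np.pi * (edges[1:] ** 3 - edges[:-1] ** 3)
    g = 2.0 * counts / (len(frames) * n_atoms * density * shell_volumes)
    return 0.5 * (edges[1:] + edges[:-1]), g

# Uncorrelated random positions (an ideal gas) give g(r) close to 1.
rng = np.random.default_rng(0)
frames = [rng.uniform(0.0, 10.0, size=(200, 3)) for _ in range(20)]
r, g = radial_distribution(frames, box_length=10.0, r_max=5.0, n_bins=10)
```

A precomputed g(r) is a few numbers per bin, whereas the trajectory it is computed from scales with the number of atoms and frames; the binning and frame selection used should be stored with it.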

Complex systems include heterogeneous systems (e.g., adsorbates on surfaces, interfaces, solute (macro)molecules in solvent fluids) and multi-resolution systems, i.e., systems that are described at different levels of granularity. The representation of complex systems requires a hierarchy of structural components, from atoms through moieties and molecules to larger (super)structures. Annotating such complexity will require human intervention as well as algorithms for automatically recognizing the structural elements (see, e.g., Leitherer et al. [72]).

Annotation of force fields in publicly accessible databases has been pioneered by OpenKIM [40] in materials science and by MoSDeF [73] for soft matter. However, many simulations are performed with customized force fields. The field is already being augmented, and will likely be further supported, by ML force fields. So far, the great majority of ML force fields are used only in the publication where they are defined. Reusability-oriented annotation of force fields, including ML ones, also requires establishing a criterion for comparing them. Comparisons can be carried out by means of standardized benchmark datasets with a well-defined set of properties. Differences among the predicted properties can then establish a metric for the similarity of force fields.
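One possible form of such a metric, offered here only as an assumption: the root mean square of property differences over a shared benchmark set, each difference divided by a property-specific scale (e.g., an agreed tolerance), so that properties with different units can be combined. The property names, scales, and values below are hypothetical.

```python
import math

def force_field_distance(predictions_a, predictions_b, scales):
    """Root mean square of scale-normalized differences over the benchmark
    properties predicted by both force fields (0 = indistinguishable on the set)."""
    common = sorted(set(predictions_a) & set(predictions_b) & set(scales))
    if not common:
        raise ValueError("no common benchmark properties")
    squared = [((predictions_a[k] - predictions_b[k]) / scales[k]) ** 2 for k in common]
    return math.sqrt(sum(squared) / len(squared))

ff_a = {"density": 1.0, "bulk_modulus": 100.0}   # hypothetical predictions
ff_b = {"density": 1.1, "bulk_modulus": 90.0}
scales = {"density": 0.1, "bulk_modulus": 10.0}  # hypothetical tolerances
print(force_field_distance(ff_a, ff_b, scales))  # about 1.0
```

The choice of benchmark set and scales determines what "similar" means, so both would have to be part of the agreed-upon standard.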

Advanced sampling techniques (e.g., metadynamics [74], umbrella sampling [75], replica exchange [76], transition-path sampling [77], and forward-flux sampling [78]) are typically supported by libraries such as PLUMED [79] and OpenPathSampling [80]. These libraries are used as plugins to codes that perform classical-force-field-based MD (e.g., GROMACS [81], DL_POLY [82], LAMMPS [83]), ab initio MD (e.g., CP2K [84] and Quantum Espresso [58]), or both (e.g., i-PI [85]). The input and output of these plugins will serve as the basis for the metadata related to these sampling techniques. In this regard, it would also be interesting to connect materials-science databases, such as the NOMAD Repository and Archive [31] or the Materials Cloud Archive [29], to PLUMED-NEST [86], the public repository of the PLUMED consortium [87], for example by automatically uploading PLUMED input files to PLUMED-NEST when uploading to the data repositories.
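To illustrate how plugin inputs could be turned into metadata, the sketch below parses PLUMED-style input lines of the form `label: ACTION KEY=VALUE ...` into records. It is a deliberately simplified, hypothetical parser: it strips `#` comments but ignores continuation lines, flags without `=`, and the many other features of the real PLUMED input syntax.

```python
def parse_plumed_input(text):
    """Turn simplified PLUMED-style input lines into metadata records."""
    records = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()   # drop comments and blank lines
        if not line:
            continue
        tokens = line.split()
        label = None
        if tokens[0].endswith(":"):           # optional "label:" prefix
            label, tokens = tokens[0][:-1], tokens[1:]
        action, *args = tokens
        params = dict(arg.split("=", 1) for arg in args if "=" in arg)
        records.append({"label": label, "action": action, "params": params})
    return records

example = """d: DISTANCE ATOMS=1,2
METAD ARG=d SIGMA=0.1 HEIGHT=1.2 PACE=500 FILE=HILLS  # bias on the distance
PRINT ARG=d STRIDE=100 FILE=COLVAR
"""
for record in parse_plumed_input(example):
    print(record["action"], record["params"])
```

Records of this kind (collective variables, bias parameters, output strides) could then be indexed and searched like any other metadata of an MD run.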

For long time-scale and large length-scale simulations, several questions arise. How should we deal with simulations for which the amount of data produced becomes too large to store and share systematically? Can we afford to store and share all of it? If storage is limited or data retrieval is impractically slow, how can we identify the significant and crucial parts of a simulation to store them in a reduced form? Keeping the whole dataset locally and sharing the metadata together with only the important parts of the simulations would be a viable alternative, provided that the different servers have enough redundancy. Standard analysis techniques, such as similarity analysis and monitoring of the dynamics, can also be used to identify changes in structure and dynamics, so that only the significant frames or specific regions of MD simulations are stored (e.g., some QM/MM models use large MM buffer-atom regions that may not be stored in their entirety). Furthermore, on the one hand, the cost/benefit of storing versus running a new simulation must be weighed. On the other hand, researchers may soon face increased requirements from funding agencies to store their data for a number of years, in which case the present endeavor offers a convenient implementation. We note ongoing developments of compression algorithms for trajectories; see, for example, the work of Brehm and Thomas [88].
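A very simple form of the frame selection described above is sketched below: a frame is kept only if its root-mean-square displacement (RMSD) from the last kept frame exceeds a threshold. This assumes NumPy position arrays of identical shape and applies no structural alignment or periodic unwrapping; the threshold is a hypothetical parameter that, like the selection criterion itself, would have to be stored as metadata.

```python
import numpy as np

def select_significant_frames(frames, threshold):
    """Indices of frames to keep: the first frame, then every frame whose RMSD
    from the last kept frame exceeds `threshold` (no alignment applied)."""
    kept = [0]
    for i in range(1, len(frames)):
        diff = frames[i] - frames[kept[-1]]
        rmsd = np.sqrt(np.mean(np.sum(diff ** 2, axis=1)))
        if rmsd > threshold:
            kept.append(i)
    return kept

# Hypothetical trajectory: three atoms drifting rigidly by 0.1 per frame along x.
frames = [np.zeros((3, 3)) + np.array([0.1 * i, 0.0, 0.0]) for i in range(10)]
print(select_significant_frames(frames, threshold=0.25))  # [0, 3, 6, 9]
```

Real applications would use physically motivated similarity measures, but the trade-off is the same: fewer stored frames at the price of a documented, lossy reduction.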

Metadata for computational workflows

A computational workflow represents the coordinated execution of repeatable (computational) steps, accounting for dependencies and concurrency of tasks. In other words, a workflow can be thought of as a script, i.e., a wrapper code that manages the scheduling of other codes by controlling what should run in parallel, sequentially, and/or iteratively. This definition can be extended to workflows in experimental materials science or to hybrid computational-experimental investigations but, consistent with the previous sections, we limit the discussion to computational aspects.
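The dependency and concurrency aspects can be sketched with a level-by-level topological sort (a variant of Kahn's algorithm): steps whose dependencies are all satisfied form a stage and could run in parallel, while stages run sequentially. The step names below are hypothetical, and iterative loops, which real workflow engines also support, are not covered.

```python
def execution_stages(dependencies):
    """Group workflow steps into stages of mutually independent steps.
    `dependencies` maps every step to the set of steps it needs."""
    remaining = {step: set(deps) for step, deps in dependencies.items()}
    done, stages = set(), []
    while remaining:
        ready = sorted(s for s, deps in remaining.items() if deps <= done)
        if not ready:
            raise ValueError("cyclic or missing dependency among: " + ", ".join(sorted(remaining)))
        stages.append(ready)
        done.update(ready)
        for s in ready:
            del remaining[s]
    return stages

# Hypothetical equation-of-state workflow: one relaxation, three independent
# single-point runs at different volumes, and a final fit.
eos = {"relax": set(),
       "scf_v1": {"relax"}, "scf_v2": {"relax"}, "scf_v3": {"relax"},
       "fit_eos": {"scf_v1", "scf_v2", "scf_v3"}}
print(execution_stages(eos))  # [['relax'], ['scf_v1', 'scf_v2', 'scf_v3'], ['fit_eos']]
```

The dependency map itself is a compact description of the workflow and a natural candidate for the first type of workflow metadata discussed below.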

Once shared, workflows become useful building blocks that can be combined or modified to develop new ones. Furthermore, FAIR data can be reused as part of workflows completely unrelated to those with which they were generated. An obvious example is AI-based data analytics, which can entail complex workflows involving data originally created for different purposes. During the last decade, the interest in workflow development has grown considerably in the scientific community [89], and various multi-purpose engines for managing calculation workflows have been developed, including AFLOW [28,90,91], AiiDA [36,92], ASE [39], and FireWorks [93]. Using these infrastructures, a number of workflows have been used for scientific purposes, such as convergence studies [94], equations of state (e.g., AFLOW Automatic Gibbs Library [95] and the AiiDA common workflows, ACWF [96]), phonons [97,98,99,100,101], elastic properties (e.g., the elastic-properties library for inorganic crystalline compounds of the Materials Project [102], AFLOW Automatic Elasticity Library, AEL [103], ElaStic [104]), anharmonic properties (e.g., the Anharmonic Phonon Library, APL [105], AFLOW Automatic Anharmonic Phonon Library, AAPL [106]), high-throughput screening in compositional space (e.g., AFLOW Partial Occupation, POCC [107]), charge transport (e.g., in organic semiconductors [108,109]), covalent organic frameworks (COFs) for gas-storage applications [110], spin-dynamics simulations [111], high-throughput automated extraction of tight-binding Hamiltonians via Wannier functions [112], and high-throughput on-surface chemistry [113].

There are two types of metadata associated with workflows. Thinking of a workflow as a code to be run, the first type of metadata characterizes the code itself. The second type annotates a run of a workflow, i.e., its inputs and outputs. This type of metadata has already been described in Section “Towards FAIR metadata schemas for computational materials science,” together with a schematic list of possible workflow classes. It is important to realize that the inputs and outputs of the elementary runs of the atomistic codes invoked in a workflow run are complemented by the inputs and outputs of the overarching workflow. A simple example: in an equation-of-state workflow, the energy and volume per unit cell of each single configuration are outputs of the elementary runs of the code, while the energy-versus-volume equation of state, e.g., fitted to the Birch-Murnaghan model, is an output of the workflow.
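The split between elementary and workflow-level outputs can be made concrete with the equation-of-state example. The sketch below fits the third-order Birch-Murnaghan form to energy-volume points, using the fact that this form is a cubic polynomial in x = V^(−2/3); the fitted E₀, V₀, and B₀ are the workflow outputs, while each (V, E) pair comes from an elementary run. The "data" are generated from the model itself with hypothetical parameters (eV and Å³), purely to check the fit.

```python
import numpy as np

def birch_murnaghan(V, E0, V0, B0, B0p):
    """Third-order Birch-Murnaghan energy-volume relation."""
    eta = (V0 / V) ** (2.0 / 3.0)
    return E0 + 9.0 * V0 * B0 / 16.0 * ((eta - 1.0) ** 3 * B0p
                                        + (eta - 1.0) ** 2 * (6.0 - 4.0 * eta))

def fit_birch_murnaghan(volumes, energies):
    """Fit E(V) as a cubic polynomial p(x) in x = V**(-2/3); return E0, V0, B0."""
    V = np.asarray(volumes, dtype=float)
    x = V ** (-2.0 / 3.0)
    p = np.polyfit(x, energies, 3)
    dp, d2p = np.polyder(p, 1), np.polyder(p, 2)
    # Stationary points of p(x); keep the real minimum closest to the data.
    minima = [r.real for r in np.roots(dp)
              if abs(r.imag) < 1e-12 and np.polyval(d2p, r.real) > 0]
    x0 = min(minima, key=lambda r: abs(r - x.mean()))
    V0 = x0 ** -1.5
    # B0 = V0 * d2E/dV2 at V0 = (4/9) * p''(x0) * V0**(-7/3)
    B0 = 4.0 / 9.0 * np.polyval(d2p, x0) * V0 ** (-7.0 / 3.0)
    return {"E0": float(np.polyval(p, x0)), "V0": float(V0), "B0": float(B0)}

volumes = np.linspace(18.0, 22.0, 9)                      # elementary-run inputs
energies = birch_murnaghan(volumes, -10.0, 20.0, 0.5, 4.5)  # stand-in for run outputs
print(fit_birch_murnaghan(volumes, energies))              # recovers E0, V0, B0
```

Both the chosen model (here third-order Birch-Murnaghan) and the fitting procedure are workflow-level metadata, in addition to the settings of each elementary run.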

File formats

On an abstract level, a metadata schema is independent of its representation in computer memory, on a hard drive, or on a piece of paper. On a practical level, however, all data and metadata need to be managed, i.e., stored, indexed, accessed, shared, deleted, archived, etc. The file formats used in the community address different requirements and intended use cases. Some file formats favor human readability (e.g., XML, JSON, YAML) but are not very storage-efficient, while others are binary and optimized for efficient storage and access, but require interpreters to be understood by a person (e.g., HDF5 [114]). A few use cases and data properties in the domain of computational materials science are worth mentioning. First, such data are very heterogeneous and contain many simple properties (e.g., the name of the code used, or a list of considered atoms) mixed with properties in the form of large vectors, matrices, or tensors (e.g., the density of states or wave functions). The large number of different properties requires hierarchical organization (e.g., with XML, JSON, YAML, or HDF5). It is desirable that many properties be easily human-readable (e.g., to quickly verify the sanity of a piece of data), whereas large matrices should be stored as efficiently as possible for archiving, retrieval, and search. Second, there are use cases where random (non-sequential) access to individual properties is desirable (e.g., returning all band structures from a set of DFT calculations). Third, computational materials science (meta)data need to be archived (efficient storage, prevention of corruption, backups, etc.) on the one hand, but they also need to be shared via APIs, e.g., for search queries, on the other. This requires transforming (meta)data from one representation in one format (e.g., BagIt or HDF5) into another representation in a different format (e.g., JSON or XML).
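One way to reconcile human readability with storage efficiency is to keep the hierarchical, mostly scalar part of a record in a text format and to move large arrays into binary files that the text document references. The sketch below assumes NumPy and uses JSON plus NumPy's .npy files purely for illustration (HDF5 would be a common alternative); the size threshold, the "$ref" key, and the function names are hypothetical choices, not part of any established convention.

```python
import json
import tempfile
from pathlib import Path

import numpy as np

# Hypothetical threshold: arrays with more elements than this are written
# to binary .npy files instead of being inlined in the JSON document.
MAX_INLINE_ELEMENTS = 16


def _externalize(value, directory, key_path):
    """Recursively replace large arrays by references to .npy files."""
    if isinstance(value, dict):
        return {k: _externalize(v, directory, key_path + (k,)) for k, v in value.items()}
    if isinstance(value, np.ndarray):
        if value.size > MAX_INLINE_ELEMENTS:
            filename = ".".join(key_path) + ".npy"
            np.save(directory / filename, value)
            return {"$ref": filename, "shape": list(value.shape), "dtype": str(value.dtype)}
        return value.tolist()  # small arrays stay human-readable
    return value


def _internalize(value, directory):
    """Recursively load referenced .npy files back into arrays."""
    if isinstance(value, dict):
        if "$ref" in value:
            return np.load(directory / value["$ref"])
        return {k: _internalize(v, directory) for k, v in value.items()}
    return value


def save_hybrid(record, directory):
    """Write the human-readable part to metadata.json, large arrays to .npy files."""
    directory = Path(directory)
    directory.mkdir(parents=True, exist_ok=True)
    path = directory / "metadata.json"
    path.write_text(json.dumps(_externalize(record, directory, ()), indent=2))
    return path


def load_hybrid(path):
    """Read metadata.json and resolve its file references."""
    path = Path(path)
    return _internalize(json.loads(path.read_text()), path.parent)


if __name__ == "__main__":
    record = {
        "program": {"name": "some-code", "version": "1.0"},  # illustrative values
        "atoms": ["Si", "Si"],
        "results": {"energy_total": -7.5, "dos": np.linspace(0.0, 1.0, 1000)},
    }
    with tempfile.TemporaryDirectory() as tmp:
        path = save_hybrid(record, tmp)
        print(path.read_text())  # "dos" appears only as a reference to results.dos.npy
        restored = load_hybrid(path)
        print(np.array_equal(restored["results"]["dos"], record["results"]["dos"]))
```

Small arrays are inlined and come back as plain lists, whereas large arrays are restored as NumPy arrays; a more complete implementation might also record checksums and format versions alongside each reference.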

These use cases and data properties lead to several conclusions. Even on a technical level, (meta)data need to be handled independently of the file format. Pieces of information have to be managed in different formats, and it must be possible to transform one representation into another. If many different resources (files, databases, etc.) are used to store the (meta)data of a logically connected dataset, references to these resources themselves become an important piece of metadata. We propose to use an abstract interface (e.g., implemented as a Python library) based on an abstract schema. Such an interface allows (meta)data to be managed independently of the representation used underneath. Various implementations of the abstract interface can then realize storage in different file formats and access to databases.
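A minimal sketch of such an abstract interface in Python, under the assumption that (meta)data are addressed by slash-separated schema paths such as "run/program/name". The class names, the path convention, and the two backends are hypothetical; the point is that copy_all converts between representations using only the abstract methods, so adding, e.g., an HDF5 or database backend would not change client code.

```python
import json
from abc import ABC, abstractmethod
from typing import Any


class MetadataStore(ABC):
    """Hypothetical abstract interface: (meta)data are addressed by schema
    paths such as "run/program/name", independent of the storage backend."""

    @abstractmethod
    def get(self, path: str) -> Any: ...

    @abstractmethod
    def set(self, path: str, value: Any) -> None: ...

    @abstractmethod
    def paths(self) -> list[str]: ...


class InMemoryStore(MetadataStore):
    """Backend that keeps the hierarchy as nested dicts in memory."""

    def __init__(self) -> None:
        self._root: dict = {}

    def get(self, path: str) -> Any:
        node = self._root
        for key in path.split("/"):
            node = node[key]
        return node

    def set(self, path: str, value: Any) -> None:
        *parents, leaf = path.split("/")
        node = self._root
        for key in parents:
            node = node.setdefault(key, {})
        node[leaf] = value

    def paths(self) -> list[str]:
        def walk(node: dict, prefix: str):
            for key, value in node.items():
                full = f"{prefix}/{key}" if prefix else key
                if isinstance(value, dict):
                    yield from walk(value, full)
                else:
                    yield full
        return list(walk(self._root, ""))


class JsonStore(InMemoryStore):
    """Backend that serializes the same hierarchy as a JSON document."""

    def to_json(self) -> str:
        return json.dumps(self._root, sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "JsonStore":
        store = cls()
        store._root = json.loads(text)
        return store


def copy_all(source: MetadataStore, target: MetadataStore) -> None:
    """Transfer every entry between backends via the abstract interface only."""
    for path in source.paths():
        target.set(path, source.get(path))
```

For instance, after mem = InMemoryStore() and mem.set("run/program/name", "some-code"), calling copy_all(mem, js) with js = JsonStore() yields a store whose to_json() output could be shared via an API, while the client code never depends on the backend in use.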

Metadata schemas for experimental materials science

In contrast to computational materials science, in experimental materials science the atomic structure and composition of a sample are only approximately known. Several techniques are used to collect data that can be interpreted more or less directly in terms of the atomic and/or electronic structure of the material. Where the structure of the material is already known, careful characterization of its properties helps to establish valuable structure-property relationships, which in turn may help to refine theoretical models of these links. Because every measurement process carries inherent uncertainty, experimental data can in most cases be reproduced with lower precision than data in theoretical/computational materials science. These uncertainties are present even in a well-characterized experimental setup, i.e., when a comprehensive set of metadata is recorded. In many cases, the goal of an experiment is not to produce the most thoroughly characterized data, but to invest just enough effort to answer the specific question that drives it.

The information available about the material whose properties are to be measured is also much less complete than in the computational world, where the position of every atom is often known. However, while physical measurements may be limited in precision, the accuracy with which a physical observable is obtained is, by the very definition of an observable, much higher than in computational materials science, where the accuracy of a computed physical quantity may depend strongly on the validity of the approximations applied.

The uncertainty in retrieving structure-property relationships in computational materials science, which depends on the suitability of the applied theoretical model and its computational implementation, translates, in the realm of experiments, into an uncertainty in the atomic structure of the object being characterized, and generally also into some uncertainty in the measurement process itself. The metadata necessary to reproduce a given experimental dataset must therefore include detailed information about the material and its history, together with all parameters required to describe the state of the instrument used for the characterization. In most cases, both classes of metadata, i.e., those describing the material and those describing the instrument, will be incomplete. For example, the full history of temperature, air pressure, humidity, and other relevant environmental parameters is not commonly tracked over the complete lifetime of a material (counter-examples exist, e.g., in pharmaceutical research). Likewise, information about the state of the instrument is generally not as comprehensive as it ideally should be (e.g., some parameters are not recorded or not properly controlled, as with hysteresis effects in devices involving magnetic fields, or in many mechanical setups).

To overcome part of the uncertainty in the data, one needs to collect as much metadata about the material and its history as possible, including metadata for which there is no immediate use but which might be needed in the future. Since most of the research equipment used for characterization is commercial instrumentation, collecting these metadata in an (ideally) fully automated fashion requires the manufacturer’s support. In many cases, the formats in which these instruments provide scientific data are proprietary. Even if all the data describing the instrument’s operating conditions are stored, large parts of them may be lost when the vendor’s software is used to export the data to other formats, mostly because the “standard format” makes no provision for vendor- and instrument-specific metadata. It is worth mentioning, however, that the CIF dictionaries (see the Section “Towards FAIR metadata schemas for computational materials science”) already contain (meta)data names to describe instrumentation, sample history, and standard uncertainties in both measured and computed values. As a useful addition, the CIF framework provides tools for implementing quality criteria, which can be used to evaluate the trustworthiness of data objects. In this respect, the community has developed in CIF a powerful tool on which a FAIR representation of at least structural data can be built.
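The CIF convention for standard uncertainties (su) is compact: the su is appended in parentheses, in units of the last quoted digit, so 5.4307(2) denotes 5.4307 with an su of 0.0002. The hypothetical helper below (not part of any CIF library) sketches how such values can be turned into machine-usable numbers; it handles only plain decimal numbers and deliberately rejects exponent notation and values without an su.

```python
import re
from decimal import Decimal

# A plain decimal number followed by its su in parentheses, e.g. "5.4307(2)".
_SU_PATTERN = re.compile(r"^([+-]?(?:\d+\.?\d*|\.\d+))\((\d+)\)$")


def parse_value_with_su(text: str) -> tuple[float, float]:
    """Return (value, standard uncertainty) for a CIF-style numeric string.

    The digits in parentheses give the su in units of the last quoted
    decimal place: "5.4307(2)" means 5.4307 with su 0.0002.
    """
    match = _SU_PATTERN.match(text.strip())
    if match is None:
        raise ValueError(f"not a number with a standard uncertainty: {text!r}")
    number, su_digits = match.groups()
    value = Decimal(number)
    # The Decimal exponent is minus the number of decimal places of the value.
    su = Decimal(su_digits).scaleb(value.as_tuple().exponent)
    return float(value), float(su)


print(parse_value_with_su("5.4307(2)"))  # (5.4307, 0.0002)
print(parse_value_with_su("123(4)"))     # (123.0, 4.0)
```

Using Decimal rather than float for the intermediate step keeps the number of quoted decimal places, which is exactly the information needed to scale the su.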

At large research infrastructures such as synchrotrons and neutron-scattering facilities, where a significant fraction of the instruments is custom-built and data are often shared with external partners, standards for file formats and metadata structures are being agreed upon, a prominent one being the NeXus standard. NeXus [115] defines hierarchies and rules for how metadata should be described and allows compliant storage using HDF5. Experimental research communities can profit from these activities and provide NeXus application definitions, which describe the metadata that must be stored in a dataset, along with definitions of some optional metadata. This common file format for scientific data is slowly beginning to spread to other communities. A standard file format for different types of scientific data appears to be an important step towards FAIR data management, since it significantly lowers the barrier to sharing data across communities. Note that NeXus provides a glossary and a connected ontology, which aid machine interpretability and hence reusability.
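The idea of an application definition, i.e., a list of entries that a dataset of a given kind must contain plus entries it may contain, can be illustrated with a short Python sketch. The definition and all entry paths below are hypothetical and much simpler than real NeXus application definitions, which are written in the NeXus Definition Language (NXDL) and organize groups by base classes such as NXentry and NXsample. The check reports missing required entries as well as entries the definition does not cover, such as vendor-specific metadata.

```python
# Hypothetical, simplified application definition (not an actual NXDL file).
APP_DEFINITION = {
    "required": {"entry/title", "entry/start_time", "entry/sample/name",
                 "entry/instrument/source/probe"},
    "optional": {"entry/sample/temperature", "entry/instrument/source/energy"},
}


def leaf_paths(tree, prefix=""):
    """Yield slash-separated paths of all leaf entries in a nested dict."""
    for key, value in tree.items():
        path = f"{prefix}/{key}" if prefix else key
        if isinstance(value, dict):
            yield from leaf_paths(value, path)
        else:
            yield path


def check_dataset(dataset, definition):
    """Return (missing required paths, paths not covered by the definition)."""
    present = set(leaf_paths(dataset))
    missing = sorted(definition["required"] - present)
    known = definition["required"] | definition["optional"]
    unknown = sorted(present - known)
    return missing, unknown


dataset = {  # illustrative dataset with one missing and one extra entry
    "entry": {
        "title": "test scan",
        "sample": {"name": "Si wafer", "temperature": 295.0},
        "instrument": {"source": {"probe": "x-ray"}},
        "vendor_extra": {"lens_mode": "A"},
    }
}
print(check_dataset(dataset, APP_DEFINITION))
# (['entry/start_time'], ['entry/vendor_extra/lens_mode'])
```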

Standard file formats are highly valuable for making data findable and accessible, because a given parameter is described with common keywords; they also make data more interoperable, since the barrier to reading the data is lowered. However, making experimental data truly reproducible in many cases requires more metadata to be collected. Only if the uncertainty with which data can be reproduced is well understood can the data also be fully reusable. As discussed in the previous paragraph, part of these metadata must be provided by manufacturers of commercially available components of the experimental setup. Often, this just requires more exhaustive data-export functions and/or proper (i.e., versioned) descriptions of all the instrument-state metadata collected during the experiment. Additionally, it may be necessary to equip home-built laboratory equipment with additional sensors and functionality for logging their signals.

Even with added sensors and automated logging of all accessible metadata, it is in many cases also necessary to compile and complete the record of metadata describing the current and past states of the sample being characterized, by adding information manually and/or combining data from different sources. Tools for doing this in a machine-readable fashion are electronic laboratory notebooks (ELNs) and/or laboratory information management systems (LIMS). Many such systems are already available [116,117,118,119,120,121,122], including open-source solutions that combine features of both ELN and LIMS in a single piece of software. Server-client solutions that do not require a specific client, but can be accessed through any web browser, have the advantage that information can be accessed and edited from any electronic device capable of interacting with the server. Such ease of access, combined with established rules and practices for holistic recording of metadata about sample conditions and experimental workflows, will also help to increase the reproducibility, and thus the reusability, of experimental data. The easier such a system is to use, and the more apparent it makes the benefits of FAIR experimental data, the faster it will be adopted by the scientific community.
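Combining metadata from different sources into one sample history can be sketched as merging time-stamped records. In the hypothetical Python example below, automatically logged sensor events and manually entered ELN events (each list already sorted by time) are merged into a single timeline, and intervals longer than a chosen threshold without any record are flagged as gaps in the sample history. The event fields and source labels are illustrative assumptions, not the data model of any particular ELN or LIMS.

```python
import heapq
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class HistoryEvent:
    timestamp: datetime
    source: str        # illustrative labels, e.g. "sensor-log" or "eln"
    description: str


def merge_histories(*sources):
    """Merge per-source event lists, each already sorted by time, into one timeline."""
    return list(heapq.merge(*sources, key=lambda event: event.timestamp))


def find_gaps(timeline, max_gap: timedelta):
    """Return (start, end) pairs of intervals longer than max_gap with no record."""
    return [
        (a.timestamp, b.timestamp)
        for a, b in zip(timeline, timeline[1:])
        if b.timestamp - a.timestamp > max_gap
    ]
```

With a sensor log at 08:00 on two consecutive-but-one days and a manual annealing entry in between, the merged timeline interleaves the two sources, and find_gaps(timeline, timedelta(hours=24)) points to the periods for which the sample history would still need to be completed.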

Outlook on ontologies in materials science

In data science, an ontology is a formal representation of the knowledge of a community about a domain of interest, for a purpose. As ontologies are currently less common in basic materials science than in other fields of science, let us explain these terms:

- Formal representation means that: (1) the ontology is a representation, hence it is a simplification, or a model, of the target domain, and (2) the attribute formal communicates that the ontological terms and relationships between them must have a deterministic and unambiguous meaning. Furthermore, formal representation implies that the mechanism to specify the ontology must have a degree of logical processing capability, e.g., inference and reasoning should be possible. Crucially, the attribute formal refers to the fact that an ontology should be machine-readable.

- Knowledge is the accumulated set of facts, pieces of information, and skills of the experts of the domain of interest that are represented in the ontology.

- The community influences the ontology in two ways: (1) it implies an overall agreement among a group of experts/users of the knowledge represented in the ontology, and (2) it indicates that the ontology is neither meant to convince a whole population nor intended to be universal. However, if it fulfills the requirements of larger communities, the ontology will be adopted by broader audiences and will find its way towards standardization.

- The domain of interest is the common ground for the community, e.g., a scientific discipline, a subdiscipline, or a market sector. It is often used as a boundary to limit the scope of the ontology. It is also a useful tool for detecting overlapping concepts, modularizing ontologies, and identifying extension and integration points.

- The purpose conveys the goals of the ontology designers so that the ontology is applicable to a set of situations. In many ontology design efforts, the purpose is formulated by a collection of so-called competency questions. These questions and the answers provided to them identify the intent and viewpoint of the designers and set the potential applications of the ontology.

In practice, ontologies are often mapped onto, and visualized by means of, directed acyclic graphs, where each edge is one of a well-defined set of relationships (e.g., is a, has property) and each node is a class, i.e., a concept specific to the domain of interest. Each node-edge-node triple is interpreted as a subject-predicate-object expression. For instance, in an ontology for catalysis, one could find the triples “catalytic material–has property–selectivity” and “selectivity–refers to–reaction product.” Ontologies address the interoperability requirement of FAIR data. By means of a machine-readable formal structure, which can be connected to an existing or newly derived metadata schema of a database, ontologies allow queries across various databases, even from different fields.
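The triple structure and the kind of inference mentioned above can be illustrated with a toy Python sketch. It stores the two catalysis triples from the text plus one illustrative is-a triple (an assumption added here) and answers a competency-style question by following is-a edges transitively. Real ontologies are typically expressed in standards such as RDF and OWL and queried with dedicated tools and reasoners; this sketch only mimics the basic idea.

```python
from typing import Iterable

# Toy ontology: the first two triples are the examples from the text; the
# "is a" triple is an illustrative addition.
ONTOLOGY = [
    ("catalytic material", "has property", "selectivity"),
    ("selectivity", "refers to", "reaction product"),
    ("zeolite catalyst", "is a", "catalytic material"),
]


class TripleStore:
    """Minimal subject-predicate-object store with 'is a' inheritance."""

    def __init__(self, triples: Iterable[tuple[str, str, str]]):
        self.triples = set(triples)

    def objects(self, subject: str, predicate: str) -> set[str]:
        return {o for s, p, o in self.triples if s == subject and p == predicate}

    def superclasses(self, cls: str) -> set[str]:
        """All classes reachable from cls through 'is a' edges (transitive closure)."""
        seen, stack = set(), [cls]
        while stack:
            for parent in self.objects(stack.pop(), "is a"):
                if parent not in seen:
                    seen.add(parent)
                    stack.append(parent)
        return seen

    def properties(self, cls: str) -> set[str]:
        """'has property' objects of cls, including those inherited via 'is a'."""
        props = self.objects(cls, "has property")
        for parent in self.superclasses(cls):
            props |= self.objects(parent, "has property")
        return props


store = TripleStore(ONTOLOGY)
# Competency-style question: which properties does a zeolite catalyst have?
print(store.properties("zeolite catalyst"))  # {'selectivity'}, inferred rather than stated
```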