Difference between revisions of "LII:Planning for Disruptions in Laboratory Operations"


Revision as of 17:58, 21 March 2022

Title: Planning for Disruptions in Laboratory Operations

Author for citation: Joe Liscouski, with editorial modifications by Shawn Douglas

License for content: Creative Commons Attribution-ShareAlike 4.0 International

Publication date: March 2022

Introduction

A high level of productivity is something laboratory management wants and those working for them strive to achieve. However, what happens when reality trips us up? We found out when COVID-19 appeared.

This work examines how laboratory operations can be organized to meet that disruption, as well as other disruptions we may have to face. Many of these changes, including the introduction of new technologies and changing attitudes about work, were in the making already but at a much slower pace.

A brief look at "working"

Over the years, productivity has had many measures, from 40- to 60-hour work weeks and piece-work to pounds of material processed to samples run, all of which come from a manufacturing mindset. People went to work in an office, lab, or production site, did their work, put in their time, and went home. That was in the timeframe leading up to the 1950s and '60s. Today, in 2022, things have changed.

People went to a work site because that’s where the work and the tools they needed to do it were located, along with the people they needed to interact with. Secure electronic communications changed all that. As long as carrying out your work depended on specialized, fixed-in-place equipment, you went to the work site. Once the work became portable, doing it depended on where you were and on your ability to connect with those you worked with.

The activity of working was a normal, routine thing. Changes in the way operations were carried out were a function of the adoption of new technologies and practices, i.e., normal organizational evolution. However, just as in the development of living systems, the organizational evolutionary process will eventually face a new challenge that throws work operations into disorder. It's a given that disruptions in lab operations are going to occur, and you need to be prepared to meet them.

To that point, the emergence of COVID-19 in our society has accelerated shifts in organizational behavior that might otherwise have taken a decade to develop. That order-of-magnitude increase in the rate of change exposed both opportunities that people were able to take advantage of and understandable gaps that a slower pace might have allowed us to plan for. We need to look at what we have learned in responding to the constraints imposed by the pandemic, how we can prepare for the continuation of its impact, and how we can take advantage of technologies to adapt to new ways of conducting scientific work.

This work is not just a historical curiosity but an examination of what we will have to do to meet the challenges of emerging transmissible diseases and geographical population fragmentation caused by, for example, climate change (e.g., disruptions due to storms, power outages, difficulties traveling, etc.) and people’s mobility. All of that doesn’t begin to take into account difficulties in retaining personnel and hiring new people.

Much of the “mobility” issue (i.e., “we can work from anywhere”) comes from the idea of “knowledge workers” in office environments and doesn’t apply to manufacturing and lab bench work. Yes, lab work is also knowledge-based, but its execution may not be portable, depending on the equipment used and regulatory restrictions (corporate or otherwise) that might be in place.

Why does this matter?

A number of articles have detailed how the COVID-driven shift to remote and hybrid working environments has changed people's attitudes about work, particularly in regard to office activities. Employees more critically examined what work they were doing and how they were doing it. This resulted, in part, in a need for a change in balance between work and personal lives, as well as recognition that “the way things have always been done” isn’t the way things have to be. As it turns out, where possible, some people would like choices in working conditions. That isn’t universal, however.

Writing for Fortune, journalist Geoff Colvin notes that people who are driven to be fast-track successful prefer to return to a traditional office work environment where closer contact between management and employees can occur; their efforts are more visible.[1] Granted, those surveyed for Colvin's article are in financial companies and are perhaps more driven by shorter-term advancement. In addition, a second article written by Erica Pandey for Axios shows that the laboratory real estate market is “hot” and growing. More importantly, it is growing where lab-wise intelligence is concentrating, places where similar working environments exist, as well as educational opportunities.[2] That means a growing job market with opportunities for growth and change, but potentially in a confined set of geographies that are vulnerable to the problems noted earlier. How do we relieve that potential stress? What happens when severe weather, power disruptions, and contagious disease outbreaks[3] push against concentrations of people working in tight quarters? Is the lab environment flexible enough to adapt to changes in how work gets done?

We need to look at what “lab work” is and where it differs from other forms of work, and where it is similar.

The nature of laboratory work

There are two aspects to working in a laboratory: meeting goals that are important to your organization, and meeting goals that are important to you, both professionally and personally. After all, productivity isn’t the only measure of satisfaction with lab work; people’s satisfaction with their work is also very important.

When you think of lab operations, what functions come to mind? It's relatively easy to think of experiments, analysis, reporting, and planning the next set of experiments. Some may even consider the supply chain and waste disposal as part of laboratory operations. But what of data and information management practices in the lab?

In today's lab, everything revolves around data and information, including its:

- production (including method development and experimental planning),

- management,

- utilization,

- analysis,

- application (to answering questions),

- reporting, and

- distribution.

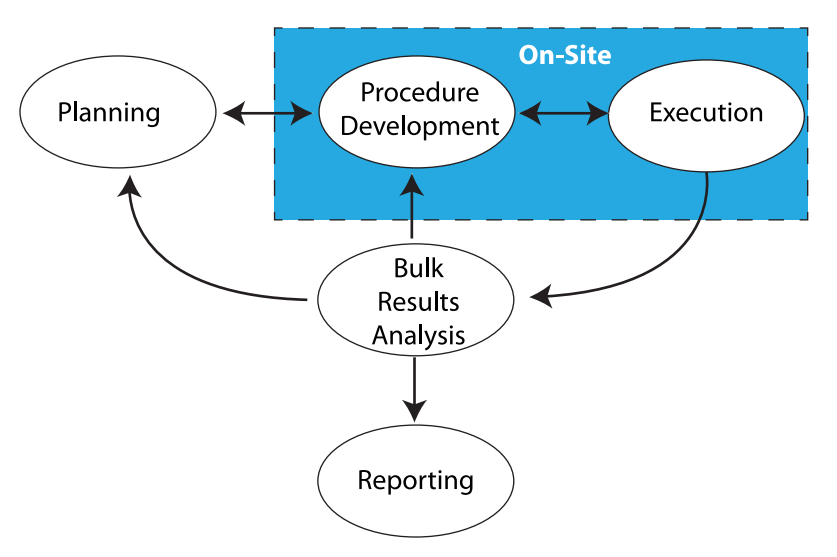

Everything we do in laboratory work addresses those points. Sometimes it is done with our hands and manual skills, while other times it takes place in the three pounds of grey matter in our heads. Stated another way, there's the work that needs to be done on-site, and the work that can be done remotely (Figure 1).


The work that needs to be done on-site is found in the blue box in Figure 1, consisting of benchtop work, instrumentation, materials preparation, support equipment, etc. This is the stuff people usually think about when “lab work” is mentioned. Aside from equipment that is designed to be portable and made for field use, most laboratory equipment isn’t able to be relocated without a lot of time-consuming tear-down and set-up work, as well as proving that the equipment/instrumentation is operating properly according to specifications for use afterwards. Additionally, there are concerns with materials (e.g., solvents, etc.) that need to be used, as well as their proper handling, storage, and disposal. That isn’t to say that transportable instrumentation isn’t possible; mobile labs exist and work well, but only because they are designed to be mobile. Those are special cases and not representative of the routine lab setting.

The space outside the blue box in Figure 1 consists of planning, academic research, meetings, and working with documents, models, analytics, etc. That work should be portable and may only be restricted by policies concerning external access to laboratory/corporate systems, as well as those governing the removal of corporate property (e.g., intellectual property and computers) from the normal work site. Permitting/restricting external access isn’t a trivial point; if you can get access to information from outside the lab, so can someone else unless security measures are carefully planned.

The next section will focus on addressing laboratory work in the age of COVID-19 and other transmissible diseases. To be sure, the SARS-CoV-2 virus and its variants are just the immediate concern; other infectious elements may manifest themselves as a normal part of people interacting with the world, as well as by releases of disease resulting from climate change. There are other disruptive events that need to be addressed, and they will be covered later.

On-site work and automation: A preview

In a situation where on-site work activities are limited due to a contagion, getting work done while minimizing people’s involvement is critical, and that means employing automation, which could take several forms. There are a few reasons for employing automation and limiting human activity in this scenario:

- Automated systems reduce error and variability in results.

- Minimizing human activity reduces the potential for disease transmission and would help maintain people’s health.

- Automated systems have a higher level of productivity and free people from mind-numbing, repetitive tasks; if there are problems finding enough people to carry out the work, we should strive to use the people we have to do things people are better suited for rather than repetitive actions.

Despite the advantages of automation in this scenario, just saying “automation can be a solution” doesn’t say much until you consider all of the ramifications of what those words mean, and what it will take to make them a reality. That’s where we’re going with this.

Before we get too deep into this, I'd like to point out that a lot of what we’ll be discussing is based on Considerations in the Automation of Laboratory Procedures, published in January 2021. That work notes that in order for someone to pursue the automation of a procedure or method, there are a few requirements to consider[4]:

- The method under consideration must be proven, validated, and stable (i.e., not changing, with no moving targets).

- The method's use in automation is predicated on there being sufficient sample throughput to make it worth doing.

- The automated procedure should be in use over a long enough period of time to justify the work and make the project financially justifiable.
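The second and third requirements amount to a break-even question: is there enough throughput, over a long enough period, to recover the cost of the project? A minimal sketch of that arithmetic follows. The function name, the cost model (a single up-front project cost and a fixed per-sample saving), and all of the figures are assumptions made for illustration; they are not taken from the cited work.

```python
def payback_months(project_cost, samples_per_month,
                   manual_cost_per_sample, automated_cost_per_sample):
    """Months of operation needed to recover the automation project cost.

    Hypothetical model: one up-front cost, constant monthly throughput,
    and a constant per-sample saving. Returns None if there is no saving.
    """
    saving_per_sample = manual_cost_per_sample - automated_cost_per_sample
    if saving_per_sample <= 0 or samples_per_month <= 0:
        return None  # automation never pays for itself under this model
    return project_cost / (samples_per_month * saving_per_sample)


# Illustrative numbers only: a 120,000 project, 500 samples per month,
# 30 per sample manually versus 10 per sample automated.
print(payback_months(120_000, 500, 30.0, 10.0))  # 12.0
```

If the method is expected to change or be retired before the payback period has passed, the third requirement is not met under this simple model.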

It's important to note that the concept of "scientific development" ends with the first consideration. In other words, method development, modification, adjustment, and add-ons end once a documented, proven process for accomplishing a lab task is fully developed. The other two considerations involve work post-scientific-development, specifically process engineering, as a sub-heading under the concept of "laboratory systems engineering" (as discussed in Laboratory Technology Planning and Management: The Practice of Laboratory Systems Engineering). That's not to say that over time changes won't be needed. There may be improvements required (i.e., "evolutionary operations," as described in Directions in Laboratory Systems: One Person's Perspective), but at least get the initial system working well before you tweak it.

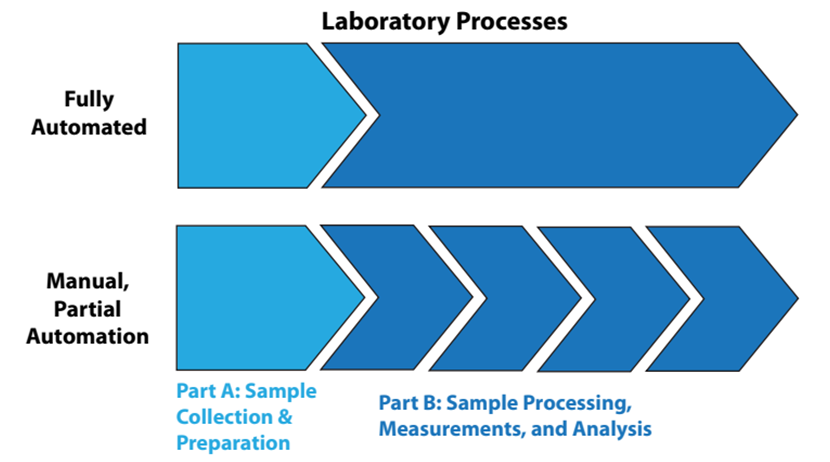

That said, let's look at the automation of lab processes from two viewpoints: “fully” automated laboratory processes and manually or partially automated lab processes, as shown in Figure 2.


In fully automated processes, the items in Part B of Figure 2 are a continuous sequence of events, without human intervention. In the lower portion of the illustration, Part B is a sequence of disconnected steps, usually connected by manual effort. Figure 2 might seem simplistic and obvious, but it is there to emphasize a point: sample collection and preparation are often separated from automated processing. Unless the samples have characteristics that make them easy to handle (e.g., fluids, free-flowing powders), preparation of the samples for analysis is usually a time-consuming manual effort that can frustrate the benefits of automation. The same goes for sample storage and retrieval. The solution to these issues will vary from industry to industry, but they are worth addressing since good solutions would considerably improve the operational efficiency of labs and reduce manual labor requirements.

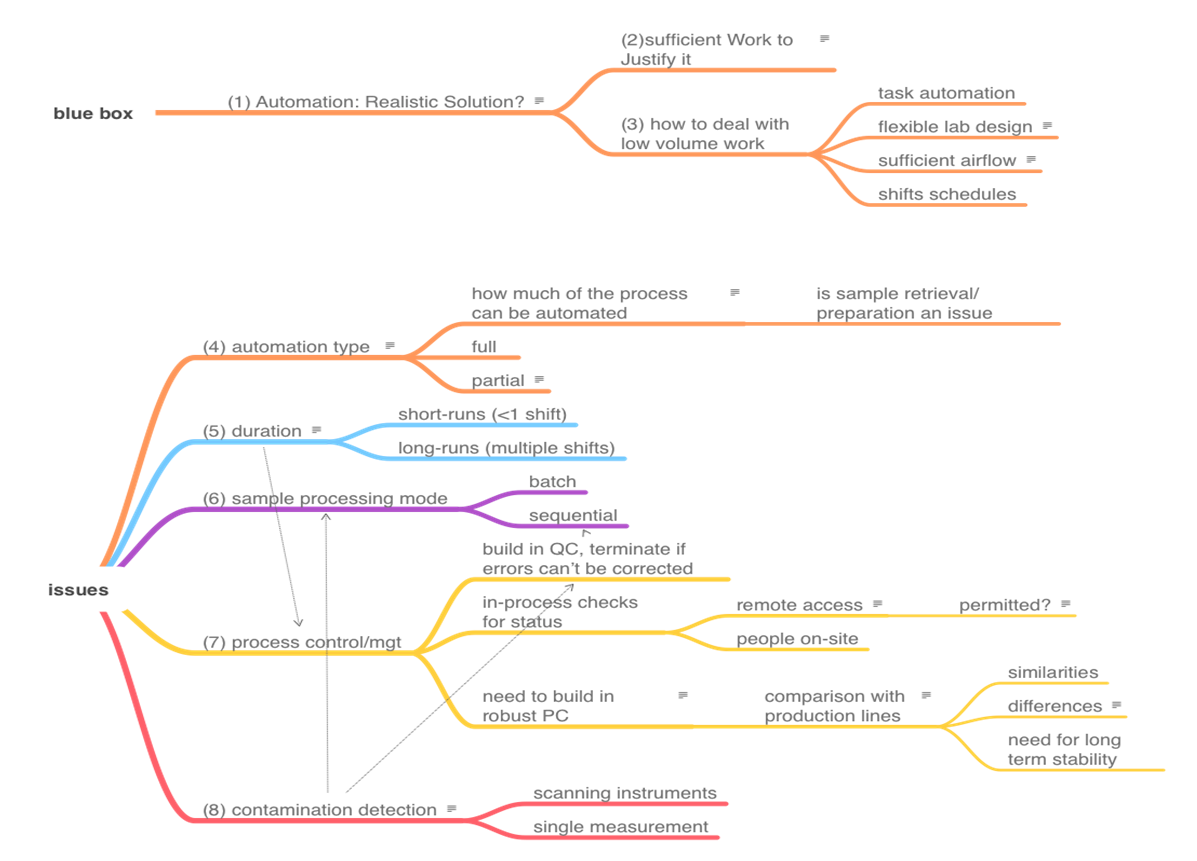

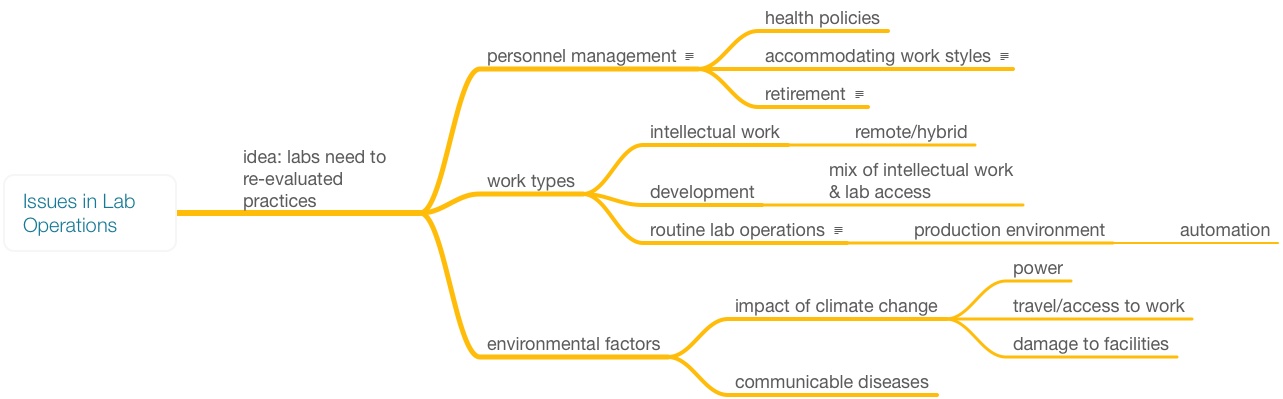

Preventing disruptions through the automation of on-site laboratory work

The topics that we need to cover in order to address the automation of on-site work are shown in the mindmap in Figure 3. There is no reasonable way to present the topics as a smooth narrative since they are heavily interrelated. View this mindmap as the organizing feature or "table of contents" of this section, and then we'll cover each item. The numbers in parentheses in Figure 3 represent subsection numbers, intended to make finding things easier.


1. Is automation a realistic solution to lab productivity and reducing personnel involvement?

Automation in lab work can be an effective method of addressing productivity and reducing the amount of human interaction with a process. That reduction in personnel can be advantageous in dealing with disease transmission and working with hazardous materials. But how much automation is appropriate? That question is going to be looked at throughout this discussion, but before we get into it, there are a couple of points that need to be made.

Rather than discussing procedures in the abstract, we’re going to use two methods as a basis for highlighting key points. Those methods are the analysis of BHT in polyolefins, and the ELISA assay, which is widely used in the life sciences.

Method 1: Analysis of antioxidants in polyolefins

Antioxidants are used to prevent oxidative breakdown of polyolefins. Butylated hydroxytoluene (BHT) is used at concentrations of ~1000 ppm in polyolefins (e.g., polypropylene, polyethylene). Samples may arrive as fabricated parts or as raw materials, usually in pellets ranging in size from 1/8 to 1/4". The outline below is an abbreviated summary to highlight key points. However, the overall method is a common one for additive analysis in plastics, packaging, etc.[5][6] This was a common procedure in the first lab I worked in:

- Standards are prepared from high-quality BHT material in a solvent (methylene chloride, aka dichloromethane). Note that these standards are used to calibrate the chromatograph's detector response to different concentrations of BHT; they are different from the standard/reference samples used to monitor the process.

- Samples are ground in a Wiley mill (with a 20-mesh screen) to make the material uniform in size and increase the surface area for solvent extraction (Figure 4). A Wiley mill has a hopper feed, rotating blades, and a mesh screen at the bottom of the blade chamber that controls particle size. The ground sample exits through the bottom into the container. As to its use, imagine making 20 cups of coffee; the beans for each cup have to be ground individually, and the grinder partially disassembled and cleaned to prevent cross-contamination. Cleaning involves brushes, air hoses, and vacuum cleaners. It makes a lot of noise. This is an example of equipment designed for human use that is difficult or impossible to include in a fully automated processing stream, and an example of why the sample preparation discussion above is important.

- Five grams of sample are weighed out and put into a flask, then 50 ml of solvent is added, and the container is sealed.

- The flasks are put on a shaker for a period of time, after which they are ready for chromatographic analysis.
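As a quick check on the quantities in the outline above, the sketch below estimates the BHT concentration the chromatograph should see in the extract. It assumes complete extraction of the additive into the solvent and treats ppm as micrograms of additive per gram of polymer; the function name is hypothetical.

```python
def extract_concentration_ug_per_ml(sample_mass_g, additive_ppm, solvent_volume_ml):
    """Expected additive concentration in the extract, in micrograms per mL.

    Assumes complete extraction (an idealization) and that ppm is a mass
    ratio, i.e., micrograms of additive per gram of polymer.
    """
    additive_ug = sample_mass_g * additive_ppm  # total additive in the sample
    return additive_ug / solvent_volume_ml


# 5 g of polymer at ~1000 ppm BHT, extracted into 50 mL of solvent
print(extract_concentration_ug_per_ml(5.0, 1000.0, 50.0))  # 100.0
```

Under those assumptions the extract holds about 5 mg of BHT at roughly 100 µg/mL, which suggests the range the calibration standards would need to bracket.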

Method 2: ELISA, the enzyme-linked immunosorbent assay

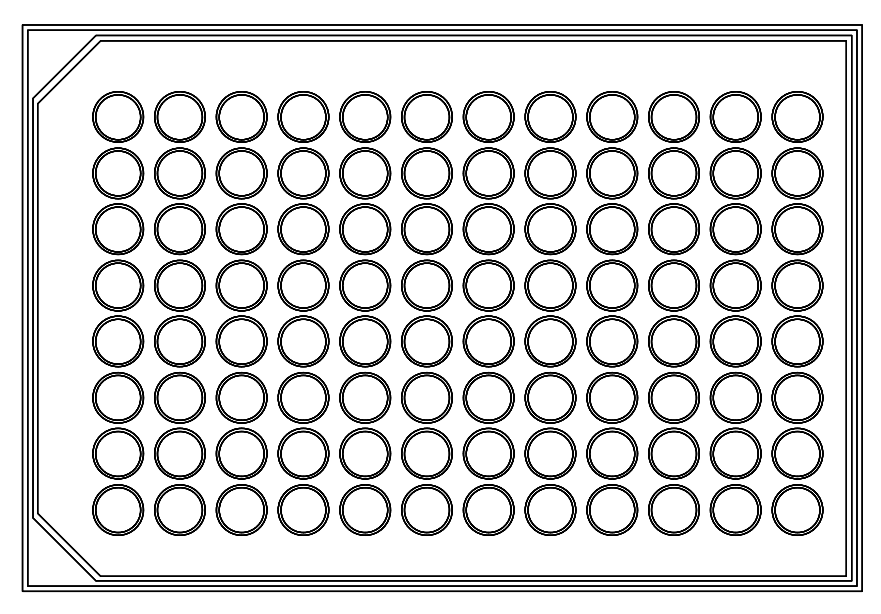

ELISA assays are microplate-based (ranging from 96 to 384 wells per plate; see Figure 5) procedures that use antibodies to detect and measure an antigen using highly specific antibody-antigen reactions. The basic outline of the assay is as follows[7][8]:

- Directly or indirectly immobilize antigens to the surface of the microplate wells.

- Add an irrelevant protein or some other molecule to cover the unsaturated surface-binding portions of the microplate wells.

- Incubate the microplate wells with antigen-specific antibodies that have a common affinity of binding to the antigens.

- Detect the signal generated by any primary or secondary tag found on the specific antibody.

Basically, a series of material additions (liquids) and washings (removal of excess material) is made in preparation for measurement. This is a procedure I’ve observed in several labs, though not personally executed.

2. Is there sufficient work to justify automation? and 3. How do you tackle automation in low-volume work environments?

The automation of lab processes is very much like automation in any industrial process. There are two big differences, however. First, in an industrial process, management will go to considerable lengths to maximize automation efforts. Higher throughput and reduced error and variability in the results mean more profits, and that, along with other points such as employee safety and reduced labor costs, can justify the cost of engineering automation. There is also the point that considerable effort will be made to custom design automation stations so that they will work; this is a lot different than lab situations where doing the best you can with what is available is the more common practice. Second, industrial processes tend to remain stable, varying only as technological improvements translate to higher throughput and higher profitability.

The impact of automation may not be as dramatic in lab systems since labs are usually classified as cost centers rather than profit centers. (Clinical labs are one exception, where total lab automation has made a considerable impact.) Another issue is that the technologies used in lab instruments and systems are developed as independent entities, designed to do a particular task, with little concern as to how they fit into a lab's specific procedures. It’s up to the lab to make things work by putting components together in sequence, at least for one generation. However, engineering systems need to operate over the long term, preferably with built-in upgrade facilities, something that usually hasn't been considered in lab automation work. One notable departure from this is the automation system built upon microplate technologies (e.g., ELISA). Components from varied vendors have to meet the requirements of microplate geometries and thus work together via robotics automation or manual effort (i.e., people acting as the robots).

As an example, industrial production lines rarely if ever come into being without precursors. Manufacturing something starts with people carrying out tasks that may be assisted by machinery, and gradually a production line develops, with multiple people doing specialized work that we would recognize as a production line. As demand for products increases, higher productivity is needed. Automation is added until we have a fully automated production facility. The development of this automation is based on justified need; there is sufficient work to warrant its implementation.

Laboratories are no different in this regard. Procedures are executed manually until the process is worked out and proven, and eventually the lab can look to gradually improving throughput and efficiency as the volume of work begins to increase. This leads to several approaches to dealing with increasing volume.

Looking at Method 1, the following automation additions may take place over time:

- Manual injection of samples/standards/references into the chromatograph gives way to an autosampler.

- Manual analysis of chromatograms gives way to a chromatography data system (CDS). (More on this in part 6.)

- The extraction and mixing stages could be automated using a device similar to the Agilent 7693A automatic liquid sampler.[9] This would use a much smaller amount of sample and solvent, though sensitivity could become an issue, as could small particles of ground sample blocking or entering the syringe.

Looking at Method 2, the following automation additions may eventually find their way into the lab:

- Depending on financial resources, using increasingly capable manual pipettes, followed by automated units, would limit the amount of manual work involved. Eventually, as the need scales up, robotics subsystems for reagent handling would take over.

- Because of the nature of microplates, an array of automated washers, readers, etc. would process the plates, with people transferring them from one station to another. When justified, the entire process could be handled by robotics.

Lab design considerations for implementing automation to limit human contact and pathogen transmission

Up to this point we've addressed the justification of automation and given a few examples of how it can gradually be added to laboratory workflow. But the primary issue we hope to address is, in addition to improving overall efficiency in the lab, how can automation be implemented to minimize human contact and avoid disease transmission? Task automation along the lines of that noted above is one way of addressing it. Other ways of addressing it revolve around limiting the likelihood of contact, as well as spreading infectious material.

Thoughtful physical lab design is one option. Writing for Lab Manager, architect Douglas White offers several methods to go about such design.[10] In new or renovated facilities, installing lab benches with modular designs would permit reconfiguration of the lab to suit the immediate workflow. It would also help as labs introduce automation, which normally requires a change in the configuration of counter space, power, and other utilities.

Another option is to improve airflow through the lab, bringing in air from outside the lab and exhausting lab air directly outdoors. In some lab situations, the lab air pressure should be lower than that of the hallways to keep particles from spreading. The CDC’s Guidelines for Environmental Infection Control in Health-Care Facilities (Appendix B. Air) recommends that labs have a minimum of 4 to 12 total air changes per hour, depending on the type of area designation.[11] While these guidelines were developed for healthcare facilities, they should be applicable to most labs. Other references on the subject include:

- Yale University's Guidelines for Safe Laboratory Design[12]

- ASHRAE's ASHRAE Laboratory Design Guide: Planning and Operation of Laboratory HVAC Systems[13]

- TSI Incorporated's Laboratory Design Handbook[14]
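To relate the air-change range cited above to a specific room, air changes per hour can be computed as the volumetric airflow through the room divided by the room's volume. The sketch below uses that standard relationship. The room dimensions, airflow, and target value are made-up illustration values, and the function names are hypothetical.

```python
def air_changes_per_hour(airflow_m3_per_h, room_volume_m3):
    """Air changes per hour: hourly airflow divided by room volume."""
    if room_volume_m3 <= 0:
        raise ValueError("room volume must be positive")
    return airflow_m3_per_h / room_volume_m3


def meets_minimum(airflow_m3_per_h, room_volume_m3, required_ach):
    """True if the room's air change rate is at or above the required rate."""
    return air_changes_per_hour(airflow_m3_per_h, room_volume_m3) >= required_ach


# Hypothetical lab: 10 m x 8 m x 3 m, with 1440 cubic meters of air per hour
volume = 10 * 8 * 3
print(air_changes_per_hour(1440, volume))             # 6.0
print(meets_minimum(1440, volume, required_ach=12))   # False
```

The required rate for a given space should come from the applicable guideline for that area designation, not from this example.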

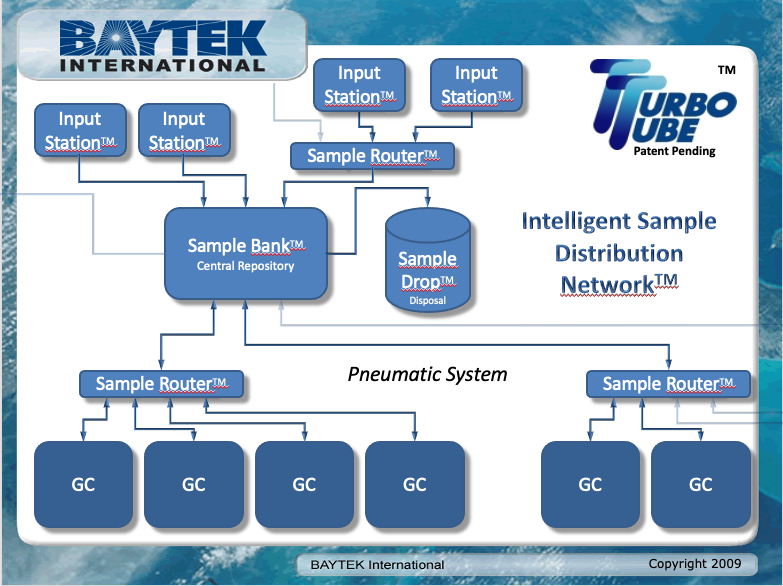

Another option is to separate functions such as sample preparation, holding, and distribution. Baytek International markets a product called the TurboTube, which is a distribution system for sample vials primarily used in gas chromatography (the system isn’t limited to gas chromatography; it’s a matter of vial sizing). The system provides an intelligent routing and holding system, with an option for disposal. If a prepared sample needs multiple tests, the sample can be passed from one system to another, returned to a holding area, and retested if needed.[15] A diagram from a 2009 presentation is shown in Figure 6.


Similarly, research has shown pneumatic tube transportation has beneficial effects in improving sample turnaround time (TAT), without damage to samples, in a hospital setting.[16]

All said, robotics should be expected to play a useful role in this aspect of the work. Sample trays could be moved from one place to another using independent robots such as those described in a recent article by journalist Daniella Genovese[17]:

Room service robots are being deployed at hotels around the nation, aiding staff at a time when the industry is facing a labor crunch driven by the coronavirus pandemic. Savioke Relay Plus robots take on simple tasks such as providing room service, freeing up hotel staff to focus on more important tasks especially during a labor crunch, CEO Steve Cousins told FOX Business.

Granted, your labs aren't hotels, but in terms of moving material from lab station to lab station, this is pretty similar. Such automation should be adaptable to lab facilities. They’d need the ability to be sent from one place to another. One key feature would be the ability of a robot to use an elevator; a navigational strategy to do just that was presented at the 2007 International Conference on Control, Automation and Systems.[18]

Beyond that, there is the potential for change from running one laboratory shift per day to multiple shifts. That reduces the number of interpersonal interactions, but it does increase the need for clear communications, preferably written, so that expectations, schedule issues, and points about processing lab work are clearly understood.

To summarize, the options for reducing personnel contact and the potential for disease transmission, prior to embracing a full or partial automation process, are:

- Task-level automation: Use existing commercial products to carry out repetitive tasks (with little customization), which allows people to remove themselves from the lab and its processes for a time (i.e., people need to set up equipment, monitor the equipment, and move material, but once it is running, time in the lab is minimized).

- Flexible lab design: Permit people to isolate themselves, with the benefit of being able to reorganize space should automation demand it.

- Air circulation: Provide sufficient airflow through the lab in order to sweep fumes and particulates (including airborne pathogens) from the working environment.

- Shift management: Schedule multiple shifts to reduce the number of people on-site.

The situation noted above is typical of labs outside of the biotechnology and clinical chemistry industries. Biotech tends to base its processes on microplate technologies (as seen in the prior-mentioned Method 2) and has wide vendor support for compatible equipment. Clinical chemistry, by virtue of its total laboratory automation program, also enjoys wide vendor support. Though the instrumentation in clinical chemistry is varied, the development of industry-standard communication protocols and validated, standardized industry-wide methods gives vendors a basis for developing equipment that easily integrates into lab processes. This is a model that would benefit most industries.

The remainder of the automation discussion will focus on labs that have to develop their own approaches to lab process automation. If we were in the industrial production world, this is where we would begin developing specialized workstations, and then we'd integrate those workstations into a continuous production line.

4. Automation type and 5. Duration

Now our discussion of full and partial automation will differ from the previous few paragraphs in the nature of the commitment made to the activities of automation. We’re not just purchasing existing equipment, which is able to be used in multiple processes, to make life easier; we’re in the process of building a dedicated production system. Doing this means that the laboratory's need, as well as an associated business and financial justification, for automation is there to provide support for the project. It also means that implementation of that automation—particularly when partially automating a process—should be cognizant of the lab's workflow. For example, implementation ideally would begin at the end point of the workflow and move toward the start to avoid creating bottlenecks.

The differences between full and partial automation are essentially twofold. First, the difference can be gauged by determining how much of the procedure is automated, potentially excluding sample preparation. Second, automation type differs based upon the duration of the production run. Full automation may cover multiple laboratory shifts, whereas partial automation may have the non-automated work be done within one shift. A laboratory could realistically assign someone to doing the manual setup work at the start of a given day and then permit the automated work to run overnight.

Table 1 examines duration of automation, comparing short runs of approximately one shift with long runs of multiple shifts.


6. Sample processing modes

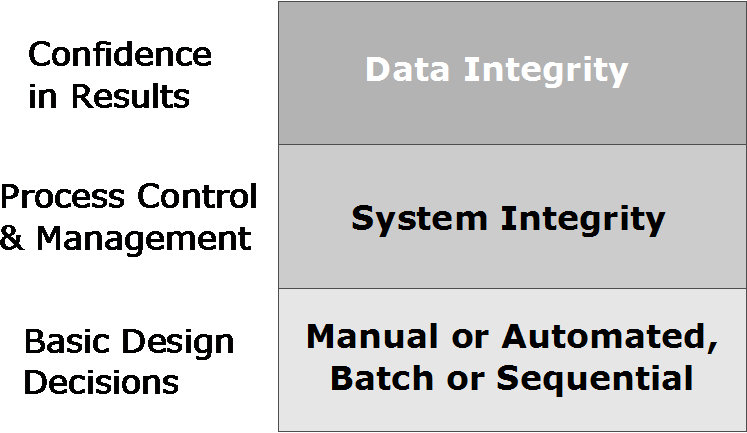

Some of the differences between full and partial automation and the issues around duration don’t fully come to light until we consider operating modes, and later, process control and management. The goal isn’t just to process samples and experiments; it’s to produce high-quality results, which depends on the data integrity stack (Figure 7). In short, data integrity depends upon system integrity, which in turn is a function of design decisions for automating the process.


What we’ve introduced is two modes of operation: sequential processing of samples (i.e., Method 1) and batch processing of samples (i.e., Method 2; each microplate is a batch). On the surface, the differences between the two are straightforward. In Method 1, samples are prepared and processed by the instrument one at a time. In Method 2, plates are treated as a unit (batch), but the individual sample cells may be processed in different steps: one cell at a time, a row or column at a time, or a plate at a time, depending on the implementation (full or partial automation, manual or equipment-assisted).

There are two consequences to this. First, in either batch or sequential methods, the more steps that are done manually, the more opportunity there is for error and variability. Manual execution has a higher variability than automated systems, and if you are pipetting material into a 96-well plate, that’s 96 operations instead of one for an automated plate-at-a-time reagent addition. Depending on the type of pipette used, as many as six wells can be accessed at a time. (Note that typical microplates have as few as six wells, or as many as 384 or 1536; higher densities are available in tape-based formats. Microplates with more than 96 wells are normally used in robotic equipment.)
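The operation counts in this comparison are simple to work out for any plate format and dispensing tool. The sketch below is an illustration only; the function name is hypothetical, and the wells-per-operation values are examples covering a single-channel pipette, the six-well case mentioned above, and a plate-at-a-time dispenser.

```python
import math


def dispensing_operations(wells_per_plate, wells_per_operation):
    """Number of dispensing operations needed to fill every well once."""
    if wells_per_plate <= 0 or wells_per_operation <= 0:
        raise ValueError("both arguments must be positive")
    # Round up: a partially used pass still counts as one operation
    return math.ceil(wells_per_plate / wells_per_operation)


# 96-well plate: one well at a time, six at a time, whole plate at once
for wells_per_operation in (1, 6, 96):
    print(wells_per_operation, dispensing_operations(96, wells_per_operation))
# 1 96
# 6 16
# 96 1
```

Each manual operation is a separate opportunity for error, so the count is a rough proxy for how much variability a given level of automation removes.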

The second is a matter of when results become available. In Method 1, each result is available as soon as the computer processes the chromatographic output. Each result can be examined for anomalies and checked against expected norms (deviations can cause the system to halt) to determine whether everything is operating properly before the run continues. This reduces waste of materials, samples, and processing time. Method 2’s batch mode doesn’t give us that luxury; the results for a full plate become available at the same time, and if there are problems, the entire batch may be wasted.
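The advantage of sequential processing described here can be sketched as a loop that checks each result as it arrives and stops at the first deviation. This is only an illustration of the idea; the function name, the acceptance rule (a fixed tolerance around an expected value), and the numbers are assumptions, not a description of any particular chromatography data system.

```python
def run_sequential(results, expected, tolerance):
    """Accept results one at a time; halt at the first out-of-tolerance value.

    Returns the accepted results and the index where the run halted
    (None if every result passed the check).
    """
    accepted = []
    for index, value in enumerate(results):
        if abs(value - expected) > tolerance:
            return accepted, index  # stop before consuming more samples
        accepted.append(value)
    return accepted, None


# Hypothetical check values (ppm); the fourth is well outside tolerance
accepted, halted_at = run_sequential([1002, 998, 1005, 1150, 1001],
                                     expected=1000, tolerance=50)
print(accepted, halted_at)  # [1002, 998, 1005] 3
```

In a batch process, the equivalent check can only run after the whole plate has been read, by which point every sample on it has already been consumed.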

There are a couple of other issues with batch systems. One is flexibility: what happens when a series of batches is set up and programmed into the system, and then a rush sample comes in? Can the system accommodate that? Another issue relates to the minimum number of samples that have to be run to make the system cost-effective to operate.

Finally, sequential systems have their own issues. They are slower at processing material, and they are more expensive to replicate if the sample volume increases significantly, due to the cost of the measuring and support equipment.

7. Process control and management

One characteristic of production lines is that they can run for extended periods of time and provide quality products. The quality of a product can be tested by comparing the item or material produced against its specifications. Size, shape, and composition are just a few characteristics that can be measured against the ideal standard. What if your “products” are measurements, numbers, and descriptors? How do you know if the expected level of quality is there, and what does that really mean? What does that mean for automated laboratory processes?

What we have to be able to demonstrate is that we have confidence in the results that laboratory procedures yield. Whether it is a research or testing lab, a QC or a contract lab, the bottom line is the same: how do I know that the process as executed is giving me results that I can trust? We can only do that by inference, an inference that is built on a foundation of facts and history:

- The process is a fully validated implementation of a proven validated method. This is not a good laboratory practice/good manufacturing practice (GLP/GMP) or similar issue, just a matter of proper engineering practices.

- Those working with the system are fully qualified.

- We have a history of running reference samples that shows that the procedure's execution is under control.

- In-process check points provide an early warning if the process is changing in an uncontrolled manner.

This is another area where the duration of run is a factor. If it is all within a shift, people can periodically check the execution and see if it is working properly, taking corrective action when needed. Longer runs, particularly if unattended for periods of time, would require more robust process control and management.

Wherever possible, checkpoints should be built into the process. For example, if liquids are being dispensed, are they being used at a rate consistent with the number of samples processed? Too much liquid used could mean a leak, and too little used could be a plugged dispenser. The nature of the checkpoints will depend on the characteristics of the process. The appearance of a solution can be checked to see if it is the right color, turbidity, clarity, etc. Bubble traps can be used and monitored to make sure air isn’t being drawn into the system and interfering with flows and measurements.
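The liquid-usage checkpoint described above amounts to comparing the volume consumed against the volume the processed samples should have required. The following is a minimal sketch; the parameter names and the 10% tolerance are assumptions chosen for illustration, and a real system would set them from the method's validation data.

```python
def check_reagent_usage(volume_used_ml, samples_processed, ml_per_sample,
                        tolerance=0.10):
    """Flag reagent consumption that is inconsistent with the sample count.

    All names and the default tolerance are hypothetical.
    """
    expected_ml = samples_processed * ml_per_sample
    if expected_ml <= 0:
        raise ValueError("expected usage must be positive")
    # Fractional deviation from the expected consumption.
    deviation = (volume_used_ml - expected_ml) / expected_ml
    if deviation > tolerance:
        return "possible leak"
    if deviation < -tolerance:
        return "possible plugged dispenser"
    return "ok"


status = check_reagent_usage(volume_used_ml=60.0, samples_processed=100,
                             ml_per_sample=0.5)
```

A check like this could run after every sample or every plate, with any status other than "ok" pausing the run or notifying the person responsible.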

Another opportunity can be found with remote access to the process monitors. This can be a database of control measurements, or in the case of instrument systems, logging into the system and looking at the results of completed analyses, including digitized analog data. Some organizations prohibit remote logins to data systems with good reason; if you can get in, so can someone else. It should be possible to balance security with access so you can have confidence things are working well. If that isn’t possible, then checks will be left to people on-site.

The extent to which you build in process checks, run reference samples, implement statistical process control, and so on should be subjected to a risk-based cost-benefit analysis. The goals are simple: process lab procedures economically and efficiently, manage costs, and develop a system with results you can have confidence in.
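One common form of statistical process control for reference samples is a Shewhart-style control chart, where a new result is compared against limits set three standard deviations from the historical mean. The text does not prescribe a specific technique, so treat the following as one possible sketch; the function names and the history values are hypothetical.

```python
from statistics import mean, stdev


def control_limits(history):
    """Return (lower, center, upper) limits at three standard deviations."""
    center = mean(history)
    sigma = stdev(history)  # sample standard deviation of past results
    return center - 3 * sigma, center, center + 3 * sigma


def in_control(value, history):
    """True if a new reference-sample result falls within the limits."""
    lower, _, upper = control_limits(history)
    return lower <= value <= upper


# Hypothetical history of reference-sample results from earlier runs.
history = [10.0, 10.2, 9.8, 10.1, 9.9]
latest_ok = in_control(10.3, history)
```

A longer history gives more trustworthy limits, and the limits should be recalculated only from runs known to have been under control.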

As noted earlier, this is analogous to production lines in general. They face the same issues of producing quality products efficiently with minimum waste. People with process engineering backgrounds can be invaluable to making things function. The major difference between a production line making products and laboratory workflows is that those manufactured products can be tested and examined to separate those products that meet desired characteristics from those that don’t. For the most part, the results of laboratory work can’t directly be "tested and examined"; laboratory quality has to be inferred from external factors (e.g., using reference samples). Re-running a sample and getting the same result can just mean the same error was repeated. (Note, however, that running a reference sample is different from running an instrument calibration standard. The latter only evaluates an instrument's detector response, whereas the former is subjected to the entire process.)

8. Contamination detection

Let’s assume you have an automated laboratory process that ends with an instrument taking measurements, a computer processing the results, and those results being entered in a laboratory information management system (LIMS) or electronic laboratory notebook (ELN) (both Method 1 and 2 are good examples). Do your systems check for contamination that may compromise those results? As we move toward increasing levels of automation, particularly with instrumentation, this is a significant problem.

Let's take Method 1 for example. The end process is a chromatogram processed by a CDS. (The same issue exists with scanning spectrometers, etc.) The problem comes with your CDS or other instrument system: is it programmed to look for things that shouldn’t be there, like extra peaks, distorted peaks, etc.? You want to avoid processing further samples if contamination is detected, and you have to rule out the problem coming from reagents and solvents. You also need to determine if it is just one sample or a more systematic problem. Then there is the issue of identifying the contamination. There are different approaches to handling this. One is to create a reference catalog of possible contaminants along with their relative retention times (relative to one or more of the analyzed components) and use that as a means of differentiating problem peaks from those that can be ignored. If a peak is found that isn’t in the catalog, you have an issue to work through.
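The catalog approach can be sketched as a lookup on relative retention time (RRT), where each peak's retention time is divided by that of a chosen reference component. The names, RRT values, and matching tolerance below are all hypothetical; this is not the interface of any actual CDS.

```python
def match_rrt(rrt, table, tolerance):
    """Return the first name in `table` whose RRT is within `tolerance`."""
    for name, known_rrt in table.items():
        if abs(rrt - known_rrt) <= tolerance:
            return name
    return None


def classify_peaks(peak_times, reference_time, expected, contaminants,
                   tolerance=0.02):
    """Label each peak as expected, a known contaminant, or unknown."""
    classified = []
    for time_min in peak_times:
        rrt = time_min / reference_time
        name = match_rrt(rrt, expected, tolerance)
        if name is not None:
            status = "expected"
        else:
            name = match_rrt(rrt, contaminants, tolerance)
            status = "known contaminant" if name is not None else "unknown"
        classified.append((time_min, status, name))
    return classified


# Hypothetical method: reference component elutes at 5.0 minutes.
peaks = classify_peaks(
    peak_times=[5.0, 7.5, 3.0, 9.1],
    reference_time=5.0,
    expected={"reference": 1.0, "analyte_a": 1.5},
    contaminants={"contaminant_x": 0.6},
)
```

Peaks labeled "unknown" are the ones that are not in the catalog and need to be worked through by a person.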

When chromatograms, spectra, etc., were processed by hand, they were automatically inspected for issues such as these; the complete data set was in front of you. With current equipment, is the digitized analog signal available? It should be for every sample as a first step in tracking down problems. One of the things we’ve lost in moving away from analog measurements toward digital systems is the intimacy with the data. Samples go in, numbers come out. What is going on in between?

The same issue exists with single-point measurements like the single beam/single wavelength detectors that would be used in Method 2. You have a single measurement, no data on either side of that detection point, and no way of knowing if the measured signal is solely the component you are looking for or if something else is present. One way of handling this is to repeat the measurements at two additional wavelengths on either side of the primary measurement (the choice would depend on the shape of the spectral curve) and see if the analytical result is consistent. Without those assurances, you are just automating the process of generating questionable results.
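The multi-wavelength check can be illustrated as follows, assuming the detector response is linear in concentration at each wavelength and that a response factor for the pure component is known at each one from calibration. Both of those are assumptions of this sketch, as are the function name, the 5% tolerance, and the readings.

```python
from statistics import median


def wavelength_consistency(absorbances, response_factors, rel_tolerance=0.05):
    """Estimate concentration at each wavelength and test for agreement.

    Each estimate is absorbance / response factor (hypothetical calibration
    values). If something else absorbs at one wavelength, the estimates
    will disagree.
    """
    if len(absorbances) != len(response_factors):
        raise ValueError("need one response factor per absorbance reading")
    estimates = [a / k for a, k in zip(absorbances, response_factors)]
    center = median(estimates)
    if center <= 0:
        return False, estimates
    spread = max(estimates) - min(estimates)
    return spread <= rel_tolerance * center, estimates


# Readings below primary, at primary, and above primary wavelength.
consistent, estimates = wavelength_consistency(
    absorbances=[0.40, 0.50, 0.42],
    response_factors=[0.08, 0.10, 0.06],
)
```

Here the third wavelength gives a noticeably higher estimate than the other two, so the check fails and the result would be flagged for review rather than reported.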

Detecting contamination is one matter, but taking appropriate corrective action is another matter entirely, one that should be built into the automation process. At a minimum, the automated system should notify you that there is an issue. Ideally it would automatically take corrective action, which could be as simple as stopping the process and avoiding wasting material and samples. This is easier with sequential sample processing than it is with batch processing. When you are dealing with processing runs that take place within one shift, the person responsible for the run should be immediately available; that may not be the case if extended runs are being used. Careful communication between shifts is essential.

What of the work that can be done remotely?

Looking back at Figure 1, most everything we've discussed up to this point has been in the blue box, signifying the on-site work. But what of the laboratory work outside the blue box? While different from other offices in an organization, the work in this area—planning, bulk results handling, and reporting—is essentially office work and open to the same concerns as found in other similar environments. The basic issue with making this type of work of a more remote nature is restructuring how the work is done. Figure 8 shows the key issues in lab operations; some have been addressed in the above material, while others—particularly environmental—will be covered shortly.


One work culture change that has emerged from the complexities of working in a pandemic environment is that the old way of working, the manufacturing model of an 8 a.m. to 5 p.m. work day, isn’t the only model that works. We have come out of a one-size-fits-all work culture into one beginning to recognize people may have their own methods and styles of working. The results should matter, not the operational approaches to achieving them. Some people are comfortable with the “I’m going to sit at my desk and be creative” approach, others aren’t. What models work for different individuals? Should they have the flexibility to choose, and is it fair to those who are tied to equipment on the bench? What are the requirements for allowing flexibility: is it “I’d just rather work from home,” or is there a real need? How many days per week should someone be in the office?

One clear measure is productivity. Are they achieving their goals, and is the quality of their work product up to expectations? If the answer is “yes,” then does it really matter where they work? That said, there are some requirements that you still might want to put in place:

- Coworkers will inevitably need to contact each other. That contact may be routine (e.g., email), urgent (e.g., cell phone, text message), or mandatory (e.g., group meeting, video conferencing). However, one of the reasons for working off-site may be to escape the background office noise and interruptions typical to the office, such that you can get in a few hours of uninterrupted productivity. Keep communication policies realistic to encourage productivity.

- Laboratory personnel will still require secure access to laboratory and other appropriate corporate database systems. This may be to access information, check on the progress of experiments, or perform routine administrative functions. For those working remotely, the security of this access is critical. Two-factor authentication and other methods should be used to prevent unauthorized access (though in some working environments this may not be feasible).

- The lab will still require reliable storage and back-up services. Cloud storage is useful in this regard, allowing remote workers to securely commit their valuable work to company data storage. It may be a corporate data structure or an encrypted cloud-based system. In the latter case, you want to be careful of legal issues that govern rights of access. For example, if you are using a cloud storage service and you are sharing a hard drive with another company's data (separate partitions with protection), and their data is seized by law enforcement, yours will be too. Private storage is more expensive but preferred.

Flexible work environments and hours may be needed to accommodate individual needs. The recent shift in the economy and loss of access to childcare services, for example, may force people to work off-site to take care of children or other family members. Sudden school closures due to fears or the reality of potential infections (i.e., unplanned events) may require schedule changes. Instead of adding stress to the system, relieve it. A day off is one thing, but shifting work hours and working from home while still achieving goals is another. As this is being written, I’m reminded of one manager who had a unique approach to personnel management: her job, as she saw it, was to give us whatever resources we needed to get our work done. In the 1980s this was unusual. If you needed to work from home, take a day off, leave early, borrow equipment, or something else, she trusted you to get your work done, and her employees delivered. The group worked well together and met its goals routinely.

Offices, including lab offices, are going to change. According to Eric Mosley, the CEO and co-founder of Workhuman, a "new normal" for the office can be distilled down to several factors[19]:

- The advent of the "Great Resignation" will act as a true wake-up call for society.

- Going forward, work will need to be hybrid, agile, and interdependent.

- When recruiting and retaining personnel, employers will have to be more inclusive and diverse.

- Getting women back to the workforce has its own set of challenges.

The last item is important. Caregiving largely falls on women's shoulders. Some may elect to fill those caregiver roles while also still desiring a career, while others may be in a position to forgo caregiving or find a way to make work-from-home or hybrid arrangements work. In some cases, there is no other choice but to forgo work. One thing that companies can do to facilitate women getting back to the workplace is to provide on-site child care. There is a cost (which could be shared between the organization and the employee), but if it helps retain valuable people and reduces the stress in their lives, it may be worth it. A lot depends on the nature of the facility. In many cases, on-site care may not work because of exposure to hazardous materials. An alternative would be to contract with a nearby facility that provides the needed services.

All of this depends on the nature of the lab’s operations (e.g., research, testing, QC, etc.) and the lab's ability to support/tolerate non-traditional working styles. Additionally, considering and planning for the points noted above have more to do with factors beyond the immediate COVID-19 pandemic.

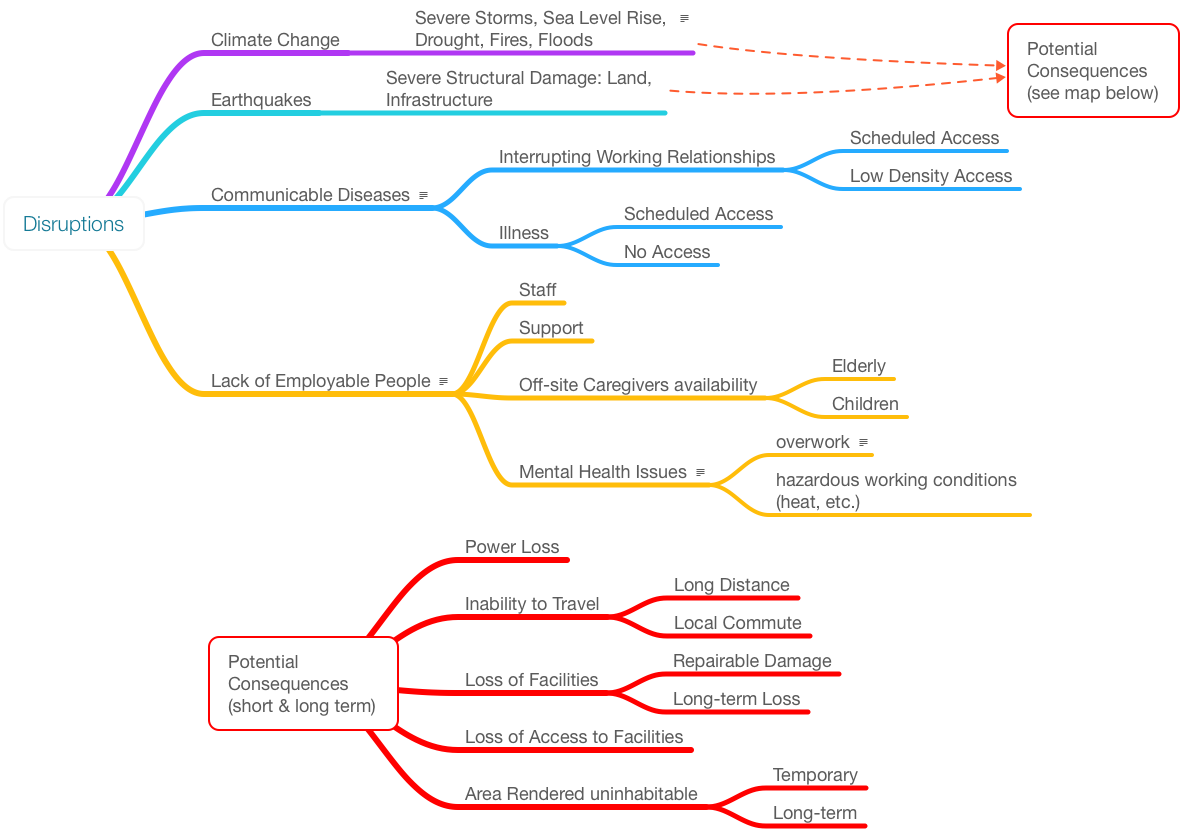

External factors disrupting lab operations

Numerous issues can affect a lab's ability to function (Figure 9). A lot depends on where you are located geographically. Climate change is a major issue since it affects wide areas and its effects can range from annoying to devastating. Many of the issues in this figure do not warrant comment; you are living through them. The issue is how we prepare for them in the near future.


Meteorological and power issues

Meteorological issues can cause problems with infrastructure. When facilities are planned and built, critical infrastructure controls may be relegated to the basement or lower levels of the facility. Consider moving them above projected high-water levels. Wiring can be protected by conduits, and steps can be taken to mitigate water damage to those wires. Control systems that people interact with may need to be moved so that controlled shutdowns and startups can occur without risk to personnel.

If an increase in severe and tornadic storms is anticipated, have plans in place so that non-essential personnel can work from home without risking themselves traveling. Those who are essential may require local housing for the same reason: eliminate travel and have them close to the site. As previously suggested, another option is to run controlled shutdowns where feasible to reduce potential damage.

Power loss due to storms, earthquakes, floods, and accidents is one of the simpler issues to address. One obvious answer is to add a generator to a facility; however, you have to take into account what happens when power is lost and then restored, and over what period of time. When power is lost, do operations fail safely or dangerously? What type of experiments and procedures are you running, and what are the consequences of losing power for each step of the process? When power is finally restored, does restoring power create an unsafe condition as a result of an unplanned shutdown? Will something jam in the automated workflow? Keep in mind that components with electronic settings (rather than mechanical) may be reset to the manufacturer's default conditions instead of your normal operating settings. Also consider whether equipment that has to be taken off-line, cleaned, and restarted can be handled safely if power drops, is quickly restored, and is then lost again before it fails completely.

Regarding generators, a couple of things must be kept in mind: the quality of the power provided and the delay in providing it. Not all generators provide the clean power needed for modern electronics. Electrical noise can adversely affect computers, instruments, and other electronic equipment.

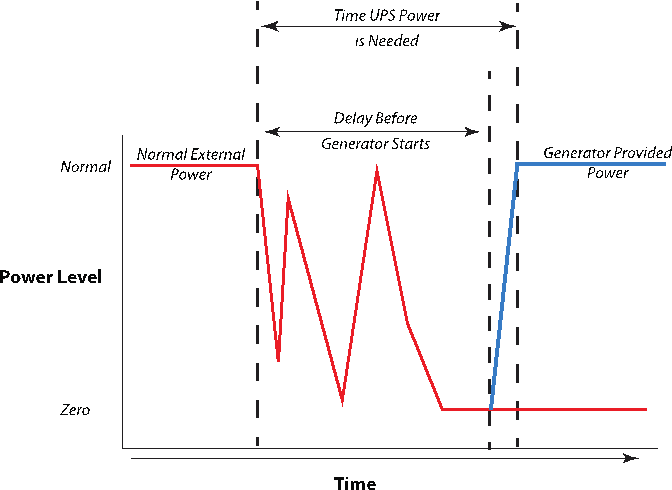

One of the characteristics of power loss is the way it is lost. It may be a single sudden event, or it may be lost, come back, and be lost again in cycles until it finally is restored or drops out entirely. In order to keep generators from chaotically starting and stopping for each of the cycles in the latter situation, you may want the generators to wait for a period of time after power is lost before starting up. Note that this may take several minutes. Your equipment needs to be protected during that phase (Figure 10) or it can sustain electrical damage or worse. An uninterruptible power supply (UPS), which acts as a temporary power source, can fill the gap by either being instantly available or quickly available (down to a two-millisecond delay, depending on model). Some can also filter electrical noise from generators and further protect equipment.


Note that Figure 10 shows the power level characteristics for typical computer workstations and home systems. The switch-over delay ranges from zero to two milliseconds. An industrial battery UPS system may provide power within 10 to 20 seconds. A diesel generator may take two minutes or longer. Our home propane-fueled generator takes 11 seconds after the last completed power loss (the long delay is to ensure that power is really out and not just on/off because of a faulty component; turning a generator on and off too frequently can damage the system). These times include built-in delays and start-up times for the generator to warm up and reach normal operation.
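The delayed-start behavior can be sketched as a simple rule: the generator starts only once mains power has been continuously off for the full delay, and any return of power resets the clock. The event format and function name below are hypothetical and do not represent any real generator controller; the 11-second delay is used only because it matches the home generator example above.

```python
def generator_start_time(events, start_delay_s):
    """Return the time at which the generator would start, or None.

    `events` is a chronologically ordered list of (time_s, mains_on) tuples
    describing when mains power changes state (a hypothetical log format).
    """
    outage_began = None
    for time_s, mains_on in events:
        # If the outage already lasted the full delay, the generator started.
        if outage_began is not None and time_s - outage_began >= start_delay_s:
            return outage_began + start_delay_s
        if mains_on:
            outage_began = None      # power returned: reset the clock
        elif outage_began is None:
            outage_began = time_s    # a new outage begins
    if outage_began is not None:
        # Power is still out after the last event.
        return outage_began + start_delay_s
    return None


# Power flickers twice, then stays off from t = 10 s.
flicker = [(0, False), (3, True), (5, False), (8, True), (10, False)]
start = generator_start_time(flicker, start_delay_s=11)
```

In this example the generator ignores the two short dropouts and starts 11 seconds after the final loss, which is the interval a UPS would have to cover.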

As labs become more dependent upon instrumentation and automation, they will become more dependent upon clean, reliable, uninterruptible power. In a fully automated procedure, power loss would compromise samples, equipment, and data. Your choice of UPS type and power capacity will depend on the equipment you have. The type used for home computers might work for a computer, pH meter, balance, etc., but higher power utilization will need correspondingly greater capacity (Table 2). In particular, anything that generates heat (e.g., laser printer, thermal analyzer, incubator, chromatographic oven) will demand lots of power. As part of your lab's planning, you need to determine how much backup power is needed. Instead of the home UPS, you may need an industrial battery backup system. How much is the integrity of your systems and data worth?
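A first-pass estimate of backup power needs is mostly arithmetic: add up the loads, convert watts to volt-amperes using a power factor, and leave some headroom. The sketch below assumes hypothetical wattages, a power factor of 0.9, 25% headroom, and 90% inverter efficiency; none of these figures come from the text or from any vendor, and real sizing should use nameplate data and be reviewed with facilities management.

```python
def required_ups_va(loads_w, power_factor=0.9, headroom=0.25):
    """Estimate the UPS rating (VA) needed for a set of loads in watts."""
    total_w = sum(loads_w.values())
    return total_w * (1 + headroom) / power_factor


def runtime_minutes(battery_wh, load_w, inverter_efficiency=0.9):
    """Rough runtime from usable battery energy at a constant load."""
    return battery_wh * inverter_efficiency / load_w * 60


# Hypothetical bench: note how the heat-generating device dominates.
loads_w = {"workstation": 300, "balance": 20, "ph_meter": 10, "incubator": 570}
needed_va = required_ups_va(loads_w)
minutes = runtime_minutes(battery_wh=1000, load_w=sum(loads_w.values()))
```

With these assumed numbers, the bench needs roughly 1,250 VA and a 1,000 Wh battery would carry it for about an hour, which is far more than is needed to bridge a generator start-up delay but far less than a prolonged outage.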


Any planning for UPS systems should be done in conjunction with facilities management and, where appropriate, information technology services. Of course, remember that internet access may be lost with power loss since the same supporting structures (e.g., poles, etc.) are used to support communications wiring and power lines.

Data storage, supply chain, and other issues

Labs are there to produce data and information. If your facility is compromised due to power loss, problems with structural integrity, or physical access issues, you need to ensure that the results of the lab's work (that could span years) are still accessible. This calls for large-scale backup storage of laboratory information and data. On-site backup is one useful tactic, but remote backup is preferred if there is likely to be infrastructure damage to a facility. The remote storage facility should be located away from your geographical area such that (1) problems that affect your facility don’t compromise its data and information as well, and (2) other facilities that need access to the data and information aren’t stopped in their work.

Supply chain interruptions also need to be considered and planned for. Can you identify alternate suppliers of materials if your primary suppliers' operations are compromised due to the issues noted above, or due to geopolitical problems? Similar concerns exist for hazardous waste disposal. If your facility is damaged, are waste materials protected? Are there alternate disposal methods pre-arranged? And of course, structural damage may cause problems. If your labs are damaged and out of service, are there alternate or back-up labs that can pick up the work?

Closing

Over the decades, laboratory work has undoubtedly shifted from a paradigm where the lab worker was in control of all aspects of executing lab procedures to one where a dependence on automation and electronic technologies is apparent. We’ve gained higher productivity and efficiency in doing work, but at the expense of being dependent upon these technologies and in turn the underlying infrastructure that supports them. The reliability of that infrastructure is being challenged by forces outside the lab and its organization. Unless we take steps to mitigate those challenges, our ability to work is going to be at risk. Once upon a time, if we were going to shift laboratory operations from one facility to another, it was a matter of packing up glassware and chemicals and shipping them. Now it is a complex process of decommissioning equipment, transferring electronic systems, and reinstalling and rebuilding networks, robotics, and data management systems. Depending on where you are located and the changing conditions around you, you may have to reconsider your lab's structure and build it around modularity and mobility.

Using automation as a means to not only improve workflows but also limit disruptions to them is likely here to stay. However, as we’ve become increasingly dependent upon computing technologies, robotics, and other electronic systems, we need to take several actions:

- Train laboratory systems engineers who can bridge the gap between scientific work and the application of automation technologies to assist in its execution.

- Work cooperatively with IT groups and facilities management to address infrastructure requirements to make those systems work, while being flexible enough to meet the challenges of potentially disruptive external environments.

- Ensure that careful planning, workflows, and communication are all used to better meet the organizational and operational goals the laboratory was put in place to accomplish.

Abbreviations, acronyms, and initialisms

BHT: butylated hydroxytoluene

CDS: chromatography data system

ELN: electronic laboratory notebook

GLP: good laboratory practice

GMP: good manufacturing practice

IT: information technology

LIMS: laboratory information management system

LSE: laboratory systems engineer

QC: quality control

TAT: turnaround time

About the author

Initially educated as a chemist, author Joe Liscouski (joe dot liscouski at gmail dot com) is an experienced laboratory automation/computing professional with over forty years of experience in the field, including the design and development of automation systems (both custom and commercial systems), LIMS, robotics and data interchange standards. He also consults on the use of computing in laboratory work. He has held symposia on validation and presented technical material and short courses on laboratory automation and computing in the U.S., Europe, and Japan. He has worked/consulted in pharmaceutical, biotech, polymer, medical, and government laboratories. His current work centers on working with companies to establish planning programs for lab systems, developing effective support groups, and helping people with the application of automation and information technologies in research and quality control environments.

References

- Colvin, G. (3 December 2021). "Why employers offering lavish work-from-home perks could be making a strategic blunder". Fortune. Archived from the original on 7 December 2021. https://web.archive.org/web/20211207025431/https://fortune.com/2021/12/03/remote-work-from-home-office-attracting-talent-productivity/. Retrieved 16 March 2022.

- Pandey, E. (7 December 2021). "Exploding demand for laboratory space". Axios. https://www.axios.com/demand-for-lab-office-space-rising-cbre-f45b20dd-8adf-48d9-a728-b43f7bb8b676.html. Retrieved 16 March 2022.

- Fox, M. (9 December 2021). "The world is unprepared for the next pandemic, study finds". CNN Health. https://www.cnn.com/2021/12/08/health/world-unprepared-pandemic-report/index.html. Retrieved 16 March 2022.

- Liscouski, J. (22 January 2021). "Considerations in the Automation of Laboratory Procedures".

- Fasihnia, Seyedeh Homa; Peighambardoust, Seyed Hadi; Peighambardoust, Seyed Jamaleddin; Oromiehie, Abdulrasoul; Soltanzadeh, Maral; Peressini, Donatella (1 August 2020). "Migration analysis, antioxidant, and mechanical characterization of polypropylene‐based active food packaging films loaded with BHA, BHT, and TBHQ" (in en). Journal of Food Science 85 (8): 2317–2328. doi:10.1111/1750-3841.15337. ISSN 0022-1147. https://onlinelibrary.wiley.com/doi/10.1111/1750-3841.15337.

- Nielson, R.C. (October 1989). "Overview of Polyolefin Additive Analysis" (PDF). Waters Chromatography Division. https://www.waters.com/webassets/cms/library/docs/940507.pdf. Retrieved 17 March 2022.

- "ELISA technical guide and protocols" (PDF). Thermo Scientific. 2010. http://tools.thermofisher.com/content/sfs/brochures/TR0065-ELISA-guide.pdf. Retrieved 17 March 2022.

- "The complete ELISA guide". Abcam plc. https://www.abcam.com/protocols/the-complete-elisa-guide. Retrieved 17 March 2022.

- "Agilent Has The Right Sample Introduction Product For Your Lab" (PDF). Agilent. 15 March 2021. https://www.agilent.com/cs/library/brochures/5991-1287EN-Autosampler_portfolio_brochure.pdf. Retrieved 17 March 2022.

- White, D. (3 December 2020). "Designing Labs for a Post-COVID-19 World". Lab Manager. https://www.labmanager.com/lab-design-and-furnishings/designing-labs-for-a-post-covid-19-world-24491. Retrieved 17 March 2022.

- "Guidelines for Environmental Infection Control in Health-Care Facilities". Centers for Disease Control and Prevention. July 2019. https://www.cdc.gov/infectioncontrol/guidelines/environmental/index.html. Retrieved 17 March 2022.

- "Guidelines for Safe Laboratory Design" (PDF). Yale University Environmental Health & Safety. June 2021. https://ehs.yale.edu/sites/default/files/files/laboratory-design-guidelines.pdf. Retrieved 17 March 2022.

- American Society of Heating, Refrigerating and Air-Conditioning Engineers, ed. (2015). ASHRAE Laboratory Design Guide: Planning and Operation of Laboratory HVAC Systems (2nd ed.). Atlanta: ASHRAE. ISBN 978-1-936504-98-5.

- "Laboratory Design Handbook" (PDF). TSI Incorporated. 2014. http://www.mtpinnacle.com/pdfs/2980330C-LabControlsHandbook.pdf. Retrieved 17 March 2022.

- "TurboTube for Baytek LIMS software". Baytek International, Inc. https://www.baytekinternational.com/products/turbotube. Retrieved 17 March 2022.

- Subbarayan, Devi; Choccalingam, Chidambharam; Lakshmi, Chittode Kodumudi Anantha (2 July 2018). "The Effects of Sample Transport by Pneumatic Tube System on Routine Hematology and Coagulation Tests" (in en). Advances in Hematology 2018: 1–4. doi:10.1155/2018/6940152. ISSN 1687-9104. PMC 6051325. PMID 30079089. https://www.hindawi.com/journals/ah/2018/6940152/.

- Genovese, D. (22 January 2022). "Hotels from New York to California deploy room service robots amid virus-related labor crunch". Fox Business. https://www.foxbusiness.com/lifestyle/hotel-room-service-robots-labor-crunch-savioke-relay. Retrieved 18 March 2022.

- Jeong-Gwan Kang; Su-Yong An; Se-Young Oh (2007). "Navigation strategy for the service robot in the elevator environment". 2007 International Conference on Control, Automation and Systems (Seoul, South Korea: IEEE): 1092–1097. doi:10.1109/ICCAS.2007.4407062. http://ieeexplore.ieee.org/document/4407062/.

- Mosley, E. (24 December 2021). "Offices in 2022: Here's What the 'New Normal' May Look Like". Inc. https://www.inc.com/eric-mosley/offices-in-2022-heres-what-new-normal-may-look-like.html. Retrieved 18 March 2022.