Journal:AI meets exascale computing: Advancing cancer research with large-scale high-performance computing


|download = [https://www.frontiersin.org/articles/10.3389/fonc.2019.00984/pdf https://www.frontiersin.org/articles/10.3389/fonc.2019.00984/pdf] (PDF)

}}

==Abstract==

The application of data science in [[cancer]] research has been boosted by major advances in three primary areas: (1) data: diversity, amount, and availability of biomedical data; (2) advances in [[artificial intelligence]] (AI) and machine learning (ML) algorithms that enable learning from complex, large-scale data; and (3) advances in computer architectures allowing unprecedented acceleration of simulation and machine learning algorithms. These advances help build ''in silico'' ML models that can provide transformative insights from data, including molecular dynamics simulations, [[Sequencing|next-generation sequencing]], omics, [[Molecular imaging|imaging]], and unstructured clinical text documents. Unique challenges persist, however, in building ML models related to cancer, including: (1) access, sharing, labeling, and integration of multimodal and multi-institutional data across different cancer types; (2) developing AI models for cancer research capable of scaling on next-generation high-performance computers; and (3) assessing robustness and reliability in the AI models. In this paper, we review the [[National Cancer Institute]] (NCI)-Department of Energy (DOE) collaboration, the Joint Design of Advanced Computing Solutions for Cancer (JDACS4C), a multi-institution collaborative effort focused on advancing computing and data technologies to accelerate cancer research on the molecular, cellular, and population levels. This collaboration integrates various types of generated data, pre-exascale compute resources, and advances in ML models to increase understanding of basic cancer biology, identify promising new treatment options, predict outcomes, and, eventually, prescribe specialized treatments for patients with cancer.

An outstanding challenge of the SEER program is how to achieve near real-time cancer surveillance. Information abstraction is a critical step to facilitate data-driven explorations. However, to ensure high-quality data, the process is fully manual. As the SEER program increases the breadth of information captured, the manual process is no longer scalable. By partnering computational and data scientists from DOE with NCI SEER domain experts, Pilot Three, ''Population Information Integration, Analysis, and Modeling for Precision Surveillance'', aims to leverage high-performance computing and artificial intelligence to meet the emerging needs of cancer surveillance. Moreover, Pilot Three envisions a fully integrated data-driven modeling and simulation framework to enable meaningful translation of big SEER data. By collecting and linking additional patient data, we can generate profiles for patients with cancer that include information about healthcare delivery system parameters and continuity of care. Such rich data will facilitate data-driven modeling and simulation of patient-specific health trajectories to support precision oncology research at the population level.

To date, Pilot Three has mainly focused on the development, scaling, and deployment of cutting-edge AI tools to semi-automate information abstraction from unstructured pathology text reports, the main source of information for cancer registries. In partnership with the Louisiana Tumor Registry and the Kentucky Cancer Registry, several AI-based natural language processing (NLP) tools have been developed and benchmarked for abstraction of fundamental cancer data elements such as cancer site, laterality, behavior, histology, and grade.<ref name="QiuScalable18">{{cite journal |title=Scalable deep text comprehension for Cancer surveillance on high-performance computing |journal=BMC Bioinformatics |author=Qiu, J.X.; Yoon, H.J.; Srivastava, K. et al. |volume=19 |issue=Suppl. 18 |page=488 |year=2018 |doi=10.1186/s12859-018-2511-9 |pmid=30577743 |pmc=PMC6302459}}</ref><ref name="GaoHeir17">{{cite journal |title=Hierarchical attention networks for information extraction from cancer pathology reports |journal=JAMIA |author=Gao, S.; Young, M.T.; Qiu, J.X. et al. |volume=25 |issue=3 |pages=321–330 |year=2017 |doi=10.1093/jamia/ocx131 |pmid=29155996}}</ref><ref name="QiuDeep18">{{cite journal |title=Deep Learning for Automated Extraction of Primary Sites From Cancer Pathology Reports |journal=IEEE Journal of Biomedical and Health Informatics |author=Qiu, J.X.; Yoon, H.J.; Fearn, P.A. et al. |volume=22 |issue=1 |pages=244–51 |year=2018 |doi=10.1109/JBHI.2017.2700722 |pmid=28475069}}</ref><ref name="TourassiDeep18">{{cite journal |title=DeepAbstractor: A scalable deep learning framework for automated information extraction from free-text pathology reports |journal=Proceedings of the 30th Anniversary AACR Special Conference Convergence: Artificial Intelligence, Big Data, and Prediction in Cancer |author=Tourassi, G. |year=2018 |url=https://www.aacr.org/Meetings/Pages/MeetingDetail.aspx?EventItemID=149&DetailItemID=772}}</ref><ref name="AlawadRetro18">{{cite journal |title=Retrofitting Word Embeddings with the UMLS Metathesaurus for Clinical Information Extraction |journal=Proceedings from the 2018 IEEE International Conference on Big Data |author=Alawad, M.; Hasan, S.M.S.; Christian, J.B. et al. |pages=2838–2846 |year=2018 |doi=10.1109/BigData.2018.8621999}}</ref><ref name="AlawadCoarse18">{{cite journal |title=Coarse-to-fine multi-task training of convolutional neural networks for automated information extraction from cancer pathology reports |journal=Proceedings from the 2018 IEEE EMBS International Conference on Biomedical & Health Informatics |author=Alawad, M.; Yoon, H.-J.; Tourassi, G.D. |pages=218–221 |year=2018 |doi=10.1109/BHI.2018.8333408}}</ref><ref name="YoonFilter18">{{cite journal |title=Filter pruning of Convolutional Neural Networks for text classification: A case study of cancer pathology report comprehension |journal=Proceedings from the 2018 IEEE EMBS International Conference on Biomedical & Health Informatics |author=Yoon, H.-J.; Robinson, S.; Christian, J.B. et al. |pages=345–348 |year=2018 |doi=10.1109/BHI.2018.8333439}}</ref> The NLP tools rely on recent AI advances, including multi-task learning and attention mechanisms. Scalable training and hyperparameter optimization of the tools are carried out on pre-exascale computing infrastructure available within the DOE [[laboratory]] complex.<ref name="YoonHPC18">{{cite journal |title=HPC-Based Hyperparameter Search of MT-CNN for Information Extraction from Cancer Pathology Reports |journal=Fourth Computational Approaches for Cancer Workshop |author=Yoon, H.-J.; Alawad, M.; Christian, J.B. et al. |year=2018 |url=https://sc18.supercomputing.org/proceedings/workshops/workshop_pages/ws_cafcw107.html}}</ref> Following an iterative optimization protocol, the most computationally efficient and clinically effective tools are deployed for evaluation across participating SEER registries. In preliminary testing, the NLP tools correctly classified all five data elements for 42.5% of cancer cases. Subsequent versions aim to improve this accuracy and to incorporate an uncertainty quantification component that can increase user confidence.
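Requiring all five data elements to be correct is a much stricter criterion than per-element accuracy, because an error in any single field makes the whole case count as wrong. The sketch below contrasts the two measures using invented labels for four hypothetical reports; the field names and labels are assumptions for illustration, not the pilot's evaluation code or data.

```python
from typing import Dict, Sequence, Tuple

# Hypothetical ordering of the five data elements named in the text.
FIELDS = ("site", "laterality", "behavior", "histology", "grade")
Case = Tuple[str, str, str, str, str]


def per_field_accuracy(preds: Sequence[Case], truth: Sequence[Case]) -> Dict[str, float]:
    """Fraction of cases in which each data element, scored on its own, is correct."""
    n = len(truth)
    return {name: sum(p[i] == t[i] for p, t in zip(preds, truth)) / n
            for i, name in enumerate(FIELDS)}


def all_fields_accuracy(preds: Sequence[Case], truth: Sequence[Case]) -> float:
    """Fraction of cases in which all five data elements are correct at once."""
    return sum(p == t for p, t in zip(preds, truth)) / len(truth)


# Invented labels for four hypothetical reports.
truth = [
    ("breast", "left", "malignant", "ductal", "2"),
    ("lung", "right", "malignant", "adenocarcinoma", "3"),
    ("colon", "n/a", "malignant", "adenocarcinoma", "2"),
    ("breast", "right", "in situ", "ductal", "1"),
]
preds = [
    ("breast", "left", "malignant", "ductal", "2"),        # all five correct
    ("lung", "left", "malignant", "adenocarcinoma", "3"),  # laterality wrong
    ("colon", "n/a", "malignant", "adenocarcinoma", "3"),  # grade wrong
    ("breast", "right", "in situ", "lobular", "1"),        # histology wrong
]

print(per_field_accuracy(preds, truth))
# → {'site': 1.0, 'laterality': 0.75, 'behavior': 1.0, 'histology': 0.75, 'grade': 0.75}
print(all_fields_accuracy(preds, truth))  # → 0.25
```

Here every field is at least 75% accurate, yet only one report in four has all five fields right.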

Although the patient information currently collected across SEER registries is mainly clinical (clin-omics), other types of -omics information are increasingly expected to become part of cancer surveillance. Specifically, radiomics (i.e., biomarkers automatically extracted from histopathological and radiological images via targeted image processing algorithms), as well as [[genomics]], will provide important insight into the effectiveness of cancer treatment choices.

Moving forward, Pilot Three will deploy the latest NLP tools in production across participating SEER registries using [[application program interface]]s (APIs) integrated into the registries' workflows, in order to determine the most effective human-AI workflow integration for broad and standardized technology adoption across registries. In addition, working collaboratively with domain experts, the team will extend information extraction to biomarkers and capture disease progression, such as metastasis and recurrence. This pilot is engaging in several partnerships with academic and commercial entities to bring in heterogeneous data sources for more effective longitudinal trajectory modeling. Future plans also include using causal inference to understand real-world impacts beyond treatment, such as social, economic, and environmental factors.

==Looking ahead: Opportunities and challenges==

Large-scale computing is a critical and necessary element for pursuing the many opportunities for AI in cancer research, but other areas must also develop for this tremendous potential to be realized. In this section, we list some of these opportunities.

First, HPC platforms provide a high-speed interconnect between compute nodes, which is integral to handling the communication required for data-parallel or model-parallel training. Although [[Cloud computing|cloud]] platforms have recently invested significantly in improving their interconnects, this remains a challenge, and it would encourage projects like Pilot Three to limit distributed training to a single node. That said, the on-demand nature of cloud platforms can allow more efficient resource utilization for AI workflows, and the modern Linux environments and familiar hardware configurations available on cloud platforms offer superior support for AI workflow software, which can increase productivity.
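To see why the interconnect matters, consider synchronous data-parallel training: each node computes a gradient on its own shard of the data, and the nodes must then average those gradients (an all-reduce) before every update. The minimal sketch below simulates this for a toy one-parameter linear model; the model, data, and function names are assumptions for illustration, and on a real cluster the averaging step would be an MPI or NCCL all-reduce across nodes rather than a Python `sum`.

```python
from typing import List, Sequence, Tuple

Shard = Tuple[Sequence[float], Sequence[float]]  # (inputs, targets) held by one worker


def local_gradient(w: float, xs: Sequence[float], ys: Sequence[float]) -> float:
    """Derivative w.r.t. w of the mean squared error of the model y = w * x on one shard."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def allreduce_mean(values: List[float]) -> float:
    """Stand-in for an all-reduce: every worker ends up with the same average.
    On a real cluster this is the step whose traffic crosses the interconnect."""
    return sum(values) / len(values)


def data_parallel_step(w: float, shards: List[Shard], lr: float) -> float:
    # Each worker computes a gradient on its own shard (in parallel on real hardware).
    grads = [local_gradient(w, xs, ys) for xs, ys in shards]
    # Workers exchange and average gradients, then all apply the identical update.
    return w - lr * allreduce_mean(grads)


# Toy data following y = 3x, split evenly across two simulated workers.
shards = [([1.0, 2.0], [3.0, 6.0]), ([3.0, 4.0], [9.0, 12.0])]
w = 0.0
for _ in range(100):
    w = data_parallel_step(w, shards, lr=0.02)
print(round(w, 6))  # → 3.0
```

With equal-sized shards the averaged gradient equals the full-batch gradient, so the result matches single-node training. The price is one all-reduce per step, which is why interconnect bandwidth and latency become limiting as the number of nodes grows.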

Second, the amount of available data currently limits the potential for AI in cancer research. Developing data resources of sufficient size, quality, and coherence will be essential for AI to develop robust models within the domain of the available data resources.

Third, evaluating and validating data-driven AI models, and quantifying the uncertainty in individual predictions, will remain important for the adoption of AI in cancer research. This poses a challenge to the community: criteria for evaluating and validating models must be developed concurrently with delivering the necessary data and large-scale computational resources.

In the next two subsections, we highlight two efforts within the JDACS4C collaboration to address these challenges. The first focuses on scaling the training of deep neural network applications on HPC systems, and the second quantifies the uncertainty in trained models to build a measure of confidence and to set limits on how they are used in production.

===CANDLE: Cancer Distributed Learning Environment===

The CANDLE project<ref name="WozniakCANDLE18" /> builds a single, scalable deep neural network application and is being used to address the challenges in each of the JDACS4C pilots.

The primary challenge for CANDLE is enabling the most challenging deep learning problems in cancer research to run on the most capable supercomputers in the DOE and NIH. Implementations of CANDLE have been tested on the DOE Titan, Cori, Theta, and Summit systems, as well as on the NIH Biowulf system using container technologies.<ref name="ZakiPort18">{{cite journal |title=Portable and Reusable Deep Learning Infrastructure with Containers to Accelerate Cancer Studies |journal=Proceedings from the 2018 IEEE/ACM 4th International Workshop on Extreme Scale Programming Models and Middleware |author=Zaki, G.F.; Wozniak, J.M.; Ozik, J. et al. |pages=54–61 |year=2018 |doi=10.1109/ESPM2.2018.00011}}</ref> The CANDLE software builds on open-source deep learning frameworks, including Keras, TensorFlow, and PyTorch. Through collaborations with DOE computing centers, HPC vendors, and the Exascale Computing Project's (ECP) co-design and software technology projects, CANDLE is being prepared for the coming DOE exascale platforms.

Features currently supported in CANDLE include feature selection, hyperparameter optimization, model training, inferencing, and UQ. Future release plans call for supporting experimental design, model acceleration, uncertainty guided inference, network architecture search, synthetic data generation, and data modality conversion. These features have been used to evaluate over 20,000 models in a single run on a DOE HPC system.

The CANDLE project also features a set of deep learning benchmarks that are aimed at solving a problem associated with each of the pilots. These benchmarks embody different deep learning approaches to problems in cancer biology, and they are implemented in compliance with CANDLE standards, making them amenable to large-scale model search and inferencing experiments.
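The text does not specify CANDLE's programming interface or search algorithm. As a hypothetical sketch of why a common benchmark contract makes large-scale model search practical, suppose every benchmark exposes a function that takes a dictionary of hyperparameters and returns a validation loss; a driver can then launch many independent trials without knowing anything else about the model. Random search is used here only because it is the simplest strategy, and `toy_benchmark`, `SEARCH_SPACE`, and the parameter names are invented for illustration.

```python
import random
from typing import Callable, Dict, Tuple

Params = Dict[str, float]


def toy_benchmark(params: Params) -> float:
    """Hypothetical stand-in for a benchmark's run function: returns a validation loss.
    A real benchmark would train a network; this loss is simply minimized at
    learning rate 10**-2 and dropout 0.2."""
    return (params["log10_lr"] + 2.0) ** 2 + (params["dropout"] - 0.2) ** 2


# Hypothetical search space: parameter name -> (low, high) bounds.
SEARCH_SPACE: Dict[str, Tuple[float, float]] = {"log10_lr": (-5.0, -1.0), "dropout": (0.0, 0.5)}


def random_search(run: Callable[[Params], float],
                  space: Dict[str, Tuple[float, float]],
                  n_trials: int, seed: int = 0) -> Tuple[float, Params]:
    """Sample hyperparameters uniformly and keep the trial with the lowest loss."""
    rng = random.Random(seed)
    results = []
    for _ in range(n_trials):
        params = {name: rng.uniform(lo, hi) for name, (lo, hi) in space.items()}
        # Trials are independent, so on an HPC system each could be a separate task.
        results.append((run(params), params))
    return min(results, key=lambda r: r[0])


best_loss, best_params = random_search(toy_benchmark, SEARCH_SPACE, n_trials=500)
print(best_loss, best_params)
```

Because the driver only depends on the `run(params) -> loss` contract, any benchmark that follows it can be swapped in, and because trials do not communicate with each other, they can be spread across as many nodes as are available.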

===Uncertainty quantification===

UQ is a critical component across all three JDACS4C pilots. It is a field of analysis that estimates accuracy under multi-modal uncertainties. UQ makes it possible to detect unreliable model predictions<ref name="HengartnerCAT18">{{cite journal |title=CAT: Computer aided triage improving upon the Bayes risk through ε-refusal triage rules |journal=BMC Bioinformatics |author=Hengartner, N.; Cuellar, L.; Wu, X.C. et al. |volume=19 |issue=Suppl. 18 |page=485 |year=2018 |doi=10.1186/s12859-018-2503-9 |pmid=30577756 |pmc=PMC6302364}}</ref> and improves the design of experiments. UQ quantifies the effects of statistical fluctuations, extrapolation, overfitting, model misspecification, and sampling biases, resulting in confidence measures for individual model predictions.
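As a minimal sketch of detecting unreliable predictions, assume several independently trained classifiers (an ensemble, one common UQ technique) score the same case. Their averaged class probabilities give the prediction, and the entropy of that average gives an uncertainty score; cases above a threshold are refused and routed to a human reviewer. This is a generic entropy-threshold rule chosen for illustration, not the ε-refusal triage method of the cited work, and the threshold and probabilities below are made up.

```python
import math
from typing import List, Optional, Sequence


def ensemble_mean(member_probs: Sequence[Sequence[float]]) -> List[float]:
    """Average the class-probability vectors predicted by the ensemble members."""
    m = len(member_probs)
    return [sum(p[c] for p in member_probs) / m for c in range(len(member_probs[0]))]


def predictive_entropy(probs: Sequence[float]) -> float:
    """Entropy (in nats) of a probability vector; higher means less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def predict_or_refuse(member_probs: Sequence[Sequence[float]], max_entropy: float) -> Optional[int]:
    """Return the predicted class index, or None to refer the case to a human."""
    probs = ensemble_mean(member_probs)
    if predictive_entropy(probs) > max_entropy:
        return None
    return max(range(len(probs)), key=probs.__getitem__)


# Hypothetical outputs of three ensemble members over three classes.
confident = [[0.90, 0.05, 0.05], [0.85, 0.10, 0.05], [0.95, 0.03, 0.02]]
disagreeing = [[0.8, 0.1, 0.1], [0.1, 0.8, 0.1], [0.1, 0.1, 0.8]]

print(predict_or_refuse(confident, max_entropy=0.5))    # → 0
print(predict_or_refuse(disagreeing, max_entropy=0.5))  # → None
```

Raising the threshold automates more cases at the cost of more errors, and lowering it sends more cases to human review; choosing that trade-off is exactly where per-prediction confidence measures become useful.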

Historically, results from computational modeling in the biological sciences did not incorporate UQ, but measures of certainty are essential for actionable predictive analytics.<ref name="BegoliTheNeed19">{{cite journal |title=The need for uncertainty quantification in machine-assisted medical decision making |journal=Nature Machine Intelligence |author=Begoli, E.; Bhattacharya, T.; Kusnezov, D. et al. |volume=1 |pages=20–23 |year=2019 |doi=10.1038/s42256-018-0004-1}}</ref> The need becomes more pressing as we begin to address problems with poorly understood causal models using large yet noisy, multimodal, and incomplete data sets. Methodological advances are allowing all three pilots to use HPC technology to estimate the uncertainty simultaneously with the results.
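The text does not say how the pilots estimate uncertainty jointly with their predictions. One widely used formulation, shown here only as an illustration, has a regression network output both a mean μ(x) and a variance σ²(x) for each input and trains them together by minimizing the Gaussian negative log-likelihood over N training pairs (xᵢ, yᵢ):

```latex
\mathcal{L}(\theta) = \frac{1}{N}\sum_{i=1}^{N}
  \left[ \frac{\bigl(y_i - \mu_\theta(x_i)\bigr)^2}{2\,\sigma_\theta^2(x_i)}
       + \frac{1}{2}\log \sigma_\theta^2(x_i) \right]
  + \frac{1}{2}\log 2\pi
```

The first term lets the model down-weight residuals where it predicts high variance, while the log term penalizes inflating σ² everywhere, so the learned σ(x) tracks the noise level at each input and can be reported as a per-prediction uncertainty. This captures noise in the data; uncertainty from the model itself (for example, under extrapolation) requires additional tools such as ensembles or Bayesian methods.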

In addition to providing confidence intervals, new UQ technology allows assessment and improvement of data quality<ref name="ThulasidasanCombat19">{{cite journal |title=Combating Label Noise in Deep Learning using Abstention |journal=Proceedings of Machine Learning Research |author=Thulasidasan, S.; Bhattacharya, T.; Bilmes, J. et al. |volume=97 |pages=6234–6243 |year=2019 |url=http://proceedings.mlr.press/v97/thulasidasan19a.html}}</ref>, evaluation and design of models appropriate to the data quality and quantity, and prioritization of further observations or experiments that can best improve model quality. These developments are currently being tested in the JDACS4C pilots and are likely to impact the wider application of large-data-driven modeling.
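Prioritizing further observations can be illustrated with uncertainty sampling, a standard active-learning heuristic: label next the items on which the current model is least certain. The sketch below, which is not claimed to be the pilots' method, ranks a hypothetical pool of unlabeled samples by the entropy of their predicted class probabilities; the sample names and probabilities are invented.

```python
import math
from typing import Dict, List, Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(pool: Dict[str, Sequence[float]], budget: int) -> List[str]:
    """Pick the `budget` unlabeled items whose predicted class distribution has the
    highest entropy, i.e. where the current model is least certain."""
    ranked = sorted(pool, key=lambda item: entropy(pool[item]), reverse=True)
    return ranked[:budget]


# Hypothetical model outputs for four unlabeled samples.
pool = {
    "sample_a": [0.98, 0.01, 0.01],
    "sample_b": [0.40, 0.35, 0.25],
    "sample_c": [0.70, 0.20, 0.10],
    "sample_d": [0.34, 0.33, 0.33],
}
print(select_for_labeling(pool, budget=2))  # → ['sample_d', 'sample_b']
```

After the selected items are labeled and the model is retrained, the pool is re-scored and the loop repeats, so labeling effort is spent where it is expected to reduce uncertainty the most.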

==Conclusion==

The JDACS4C collaboration continues to provide valuable insights into the future of AI in cancer research and the essential role that extreme-scale computing will play in shaping and informing that future. Concepts have been transformed into preliminary practice in a short period of time as a result of multidisciplinary teamwork and access to advanced computing resources. AI is being used to guide experimental design to make more effective use of valuable laboratory resources, to develop new capabilities for molecular simulation, and to streamline and improve the efficiency of clinical data acquisition.

The JDACS4C collaboration established a foundation for team science and is enabling innovation at the intersection of advanced computing technologies and cancer research. The opportunities for extreme-scale computing in AI and cancer research extend well beyond these pilots.

==Acknowledgements==

===Author contributions===

GZ made the paper plan, wrote the abstract, and assembled the manuscript. ES, EG, AG, TH, and CL contributed to the introduction. RS, JD, and YE made substantial contributions to the conception and design of the work in Pilot One. TBr and FX provided acquisition, analysis, and interpretation of data in Pilot One. FS and DN made substantial contributions to the conception, design, and execution of Pilot Two. GT and LP made substantial contributions to designing, developing, and writing the section about Pilot Three. RS made substantial contributions to the conception and design of the work in CANDLE. RS, TBr, TBh, and FX developed and optimized predictive models with UQ in Pilots One, Three, and CANDLE. GZ contributed to extending the application of CANDLE at NIH. ES and EG contributed to the opportunities and challenges. TBh wrote and developed the section related to UQ. EG, AG, TH, and CL contributed to the conclusion.

===Funding===

This work has been supported in part by the Joint Design of Advanced Computing Solutions for Cancer (JDACS4C) program established by the U.S. Department of Energy (DOE) and the National Cancer Institute (NCI) of the National Institutes of Health. This work was performed under the auspices of the U.S. Department of Energy by Argonne National Laboratory under Contract DE-AC02-06-CH11357. This work was performed under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under Contract DE-AC52-07NA27344. LLNL-JRNL-773355. This work was performed under the auspices of the U.S. Department of Energy by Los Alamos National Laboratory under Contract DE-AC52-06NA25396. Computing support for this work came in part from the Lawrence Livermore National Laboratory Institutional Computing Grand Challenge program. This project was funded in part with federal funds from the NCI, NIH, under contract no. HHSN261200800001E. This research was supported in part by the Exascale Computing Project (17-SC-20-SC), a collaborative effort of the U.S. Department of Energy Office of Science and the National Nuclear Security Administration. This research used resources of the Argonne Leadership Computing Facility and the Oak Ridge Leadership Computing Facility, which are DOE Office of Science User Facilities. This research used resources of the Lawrence Livermore Computing Facility and the Los Alamos National Laboratory supported by the DOE National Nuclear Security Administration's Advanced Simulation and Computing (ASC) Program. This manuscript has been authored in part by UT-Battelle, LLC under Contract No. DE-AC05-00OR22725 with the U.S. Department of Energy. This research used resources of the Oak Ridge Leadership Computing Facility, which is a DOE Office of Science User Facility supported under Contract DE-AC05-00OR22725.

The United States Government retains and the publisher, by accepting the article for publication, acknowledges that the United States Government retains a non-exclusive, paid-up, irrevocable, world-wide license to publish or reproduce the published form of the manuscript, or allow others to do so, for United States Government purposes. The Department of Energy will provide public access to these results of federally sponsored research in accordance with the DOE Public Access Plan (http://energy.gov/downloads/doe-public-access-plan).

===Conflict of interest===

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

==References==


| Full article title | AI meets exascale computing: Advancing cancer research with large-scale high-performance computing |
|---|---|
| Journal | Frontiers in Oncology |
| Author(s) | Bhattacharya, Tanmoy; Brettin, Thomas; Doroshow, James H.; Evrard, Yvonne A.; Greenspan, Emily J.; Gryshuk, Amy L.; Hoang, Thuc T.; Vea Lauzon, Carolyn B.; Nissley, Dwight; Penberthy, Lynne; Stahlberg, Eric; Stevens, Rick; Streitz, Fred; Tourassi, Georgia; Xia, Fangfang; Zaki, George |
| Author affiliation(s) | Los Alamos National Laboratory, Argonne National Laboratory, National Cancer Institute, Frederick National Laboratory for Cancer Research, Lawrence Livermore National Laboratory, National Nuclear Security Administration, U.S. Department of Energy Office of Science, University of Chicago, Oak Ridge National Laboratory |
| Primary contact | Email: george dot zaki at nih dot gov |
| Editors | Meerzaman, Daoud |
| Year published | 2019 |
| Volume and issue | 9 |
| Page(s) | 984 |
| DOI | 10.3389/fonc.2019.00984 |
| ISSN | 2234-943X |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://www.frontiersin.org/articles/10.3389/fonc.2019.00984/full |
| Download | https://www.frontiersin.org/articles/10.3389/fonc.2019.00984/pdf (PDF) |


Keywords: cancer research, high-performance computing, artificial intelligence, deep learning, natural language processing, multi-scale modeling, precision medicine, uncertainty quantification

Introduction

Predictive computational models for patients with cancer could, in the future, support prevention and treatment decisions by informing choices to achieve the best possible clinical outcome. Toward this vision, in 2015, the national Precision Medicine Initiative (PMI)[1] was announced, motivating efforts to target and advance precision oncology, including looking ahead to the scientific, data, and computational capabilities needed to realize this vision. At the same time, the horizon of computing was changing in the life sciences, as the capabilities and transformations enabled by exascale computing were coming into focus, driven by the accelerated growth in data volumes and anticipated new sources of information catalyzed by new technologies and initiatives such as PMI.

The National Strategic Computing Initiative (NSCI) in 2015 named the Department of Energy (DOE) as a lead agency for “advanced simulation through a capable exascale computing program” and the National Institutes of Health (NIH) as one of the deployment agencies to participate “in the co-design process to integrate the special requirements of their respective missions.” This interagency coordination structure opened the avenue for a tight collaboration between the NCI and the DOE. With shared aims to advance cancer research while shaping the future for exascale computing, the NCI and DOE established the Joint Design of Advanced Computing Solutions for Cancer (JDACS4C) in June of 2016 through a five-year memorandum of understanding with three co-designed pilot efforts to address both national priorities. The high-level goals of these three pilots were to push the frontiers of computing technologies in specific areas of cancer research:

- at the cellular level: advance the capabilities of patient-derived pre-clinical models to identify new treatments;

- at the molecular level: further understand the basic biology of undruggable targets; and

- at the population level: gain critical insights on the drivers of population cancer outcomes.

The pilots would also develop new uncertainty quantification (UQ) methods to evaluate confidence in the AI model predictions.

Using co-design principles, each of the pilots in the JDACS4C collaboration is based on—and driven by—team science, which is the hallmark of the collaboration's success. Enabled by deep learning, Pilot One (cellular-level) combines data in innovative ways to develop computationally predictive models for tumor response to novel therapeutic agents. Pilot Two (molecular-level) combines experimental data, simulation, and AI to provide new windows to understand and explore the biology of cancers related to the Ras superfamily of proteins. Pilot Three (population-level) uses AI and clinical information at unprecedented scales to enable precision cancer surveillance to transform cancer care.

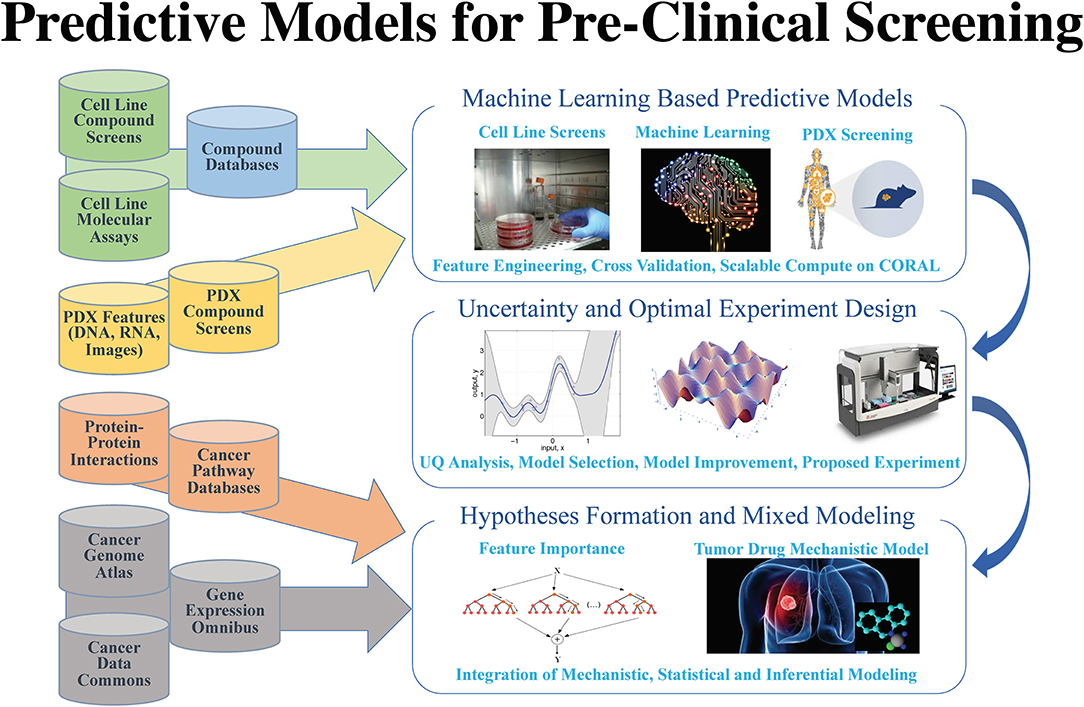

AI and large-scale computing to predict tumor treatment response

Despite years of effort within the research and pharmaceutical sectors, many patients with cancer still do not respond to standard-of-care treatments, and the emergence of therapy resistance is common. Efforts in precision medicine may someday change this through a targeted therapeutic approach, individually tailored to each patient on the basis of predictive models that use molecular and drug signatures. The Predictive Modeling for Pre-Clinical Screening Pilot (Pilot One) aims to develop predictive capabilities of drug response in pre-clinical models of cancer to improve and expedite the selection and development of new targeted therapies for patients with cancer. Highlights of the work done in Pilot One are shown in Figure 1.

As omics data continue to accumulate, computational models integrating multimodal data sources become possible. Multimodal deep learning[2] aims to enhance learned features for one task by learning features over multiple modalities. Early Pilot One work[3] measured the performance of multimodal deep neural network models of drug-pair response with five-fold cross-validation. Using the NCI-ALMANAC[4] data, the best model performance was obtained when gene expression, microRNA, proteome, and Dragon7 drug descriptors[5] were combined, yielding an R-squared value of 0.944: a model using the combined gene expression, microRNA expression, proteomics, and drug property data explains over 94% of the variation in tumor response.
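For readers less familiar with the metric, R-squared is one minus the ratio of the residual sum of squares to the total sum of squares, and five-fold cross-validation scores each sample with a model that never saw it during training. The sketch below illustrates both in plain Python, pooling the out-of-fold predictions into one R-squared. The one-variable least-squares fit (`fit_line`) is a hypothetical stand-in for the multimodal networks, and the toy data are synthetic, not NCI-ALMANAC values.

```python
import random


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def k_fold_indices(n, k=5, seed=0):
    """Shuffle sample indices and deal them into k roughly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def fit_line(xs, ys):
    """Least-squares fit of y = a*x + b (hypothetical stand-in for a real model)."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return a, my - a * mx


def cross_validated_r2(xs, ys, k=5):
    """Predict each fold with a model trained on the other k-1 folds, then
    score all out-of-fold predictions with a single R-squared."""
    preds = [0.0] * len(xs)
    for fold in k_fold_indices(len(xs), k):
        held_out = set(fold)
        train = [i for i in range(len(xs)) if i not in held_out]
        a, b = fit_line([xs[i] for i in train], [ys[i] for i in train])
        for i in fold:
            preds[i] = a * xs[i] + b
    return r_squared(ys, preds)


# Toy usage: a noisy linear relationship between one feature and the response.
rng = random.Random(1)
xs = [rng.uniform(0, 10) for _ in range(100)]
ys = [2.0 * x + 1.0 + rng.gauss(0, 1) for x in xs]
print(round(cross_validated_r2(xs, ys), 3))
```

Pooling out-of-fold predictions is one convention; averaging the R-squared of each fold is another, and the two can differ slightly on small data sets.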

Mechanistically informed feature selection is an alternative approach that has the potential to increase predictive model performance. The LINCS landmark genes,[6] for example, have been used to train deep learning models that predict the expression of non-landmark genes[7] and classify drug-target interactions.[8] Ongoing work in Pilot One is exploring how restricting the input features to gene sets such as the LINCS landmark genes, or to other mechanistically defined gene sets, affects prediction. The potential of mechanistically informed feature selection extends beyond improving prediction accuracy to building models on the basis of existing biological knowledge.
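In practice, restricting a model to a mechanistically defined gene set amounts to projecting each expression profile onto a fixed, ordered list of genes before training. A minimal sketch, assuming profiles are stored as gene-to-value dictionaries; the gene symbols in the example are hypothetical placeholders rather than the actual LINCS landmark list, and filling a gene absent from one profile with 0.0 is an illustrative choice.

```python
def restrict_to_gene_set(profiles, gene_set):
    """Project expression profiles onto a fixed, ordered gene set.

    profiles: {sample_id: {gene_symbol: expression_value}}
    gene_set: iterable of gene symbols (e.g., landmark or pathway genes)
    Returns (ordered_genes, matrix): one row per sample in the order of
    `profiles`, one column per gene in `ordered_genes`. Genes in the set that
    no profile measures are dropped; a gene missing from an individual
    profile is filled with 0.0 (an illustrative assumption).
    """
    measured = set()
    for profile in profiles.values():
        measured.update(profile)
    ordered_genes = sorted(g for g in set(gene_set) if g in measured)
    matrix = [[profile.get(g, 0.0) for g in ordered_genes] for profile in profiles.values()]
    return ordered_genes, matrix


# Hypothetical profiles and a hypothetical three-gene set.
profiles = {
    "sample_1": {"EGFR": 2.0, "MYC": 1.0, "GAPDH": 9.0},
    "sample_2": {"EGFR": 0.5, "MYC": 3.0},
}
genes, X = restrict_to_gene_set(profiles, ["MYC", "EGFR", "TP53"])
print(genes)
print(X)
```

Sorting the gene list gives every data set the same column order, which matters when a model trained on one study is applied to another.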

Transfer learning is another important area of research activity. The goal of transfer learning is to improve learning in a target task by leveraging knowledge from an existing source task.[9] Given the challenges in obtaining sufficient data for patient-derived xenografts (PDXs), in which patient tumors are grown in host mice, ongoing transfer learning work holds promise for using cell lines as the source for target PDX model predictions. Pilot One is first working on generating models that generalize across cell line studies, a precursor to transfer learning from cell lines to PDXs.
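One common way to transfer knowledge is to warm-start training on the target task from weights learned on the source task. The sketch below illustrates the idea with a linear model trained by full-batch gradient descent on synthetic data. The "cell line" and "PDX" tasks, their weight vectors, the sample sizes, and the training budgets are all hypothetical, and Pilot One's deep models are considerably more complex.

```python
import random


def make_data(w_true, n, rng):
    """Noise-free synthetic samples y = w_true . x with x uniform in [-1, 1]."""
    xs = [[rng.uniform(-1, 1) for _ in w_true] for _ in range(n)]
    ys = [sum(w * x for w, x in zip(w_true, row)) for row in xs]
    return xs, ys


def mse(w, xs, ys):
    """Mean squared error of the linear model w on (xs, ys)."""
    return sum((sum(wi * xi for wi, xi in zip(w, x)) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train_linear(xs, ys, w=None, lr=0.05, epochs=200):
    """Full-batch gradient descent on MSE; pass `w` to warm-start from it."""
    w = list(w) if w is not None else [0.0] * len(xs[0])
    n = len(xs)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in zip(xs, ys):
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for j, xj in enumerate(x):
                grad[j] += 2.0 * err * xj / n
        w = [wi - lr * gj for wi, gj in zip(w, grad)]
    return w


rng = random.Random(0)
# Hypothetical tasks: a data-rich "cell line" source and a related, data-poor "PDX" target.
src_x, src_y = make_data([1.0, -2.0, 0.5], 200, rng)
tgt_x, tgt_y = make_data([1.2, -1.8, 0.5], 8, rng)
test_x, test_y = make_data([1.2, -1.8, 0.5], 500, rng)

w_source = train_linear(src_x, src_y)                           # pretrain on the source task
w_transfer = train_linear(tgt_x, tgt_y, w=w_source, epochs=20)  # fine-tune on the target task
w_scratch = train_linear(tgt_x, tgt_y, epochs=20)               # same budget, no transfer
print(mse(w_transfer, test_x, test_y) < mse(w_scratch, test_x, test_y))
```

With the same short budget on only eight target samples, the warm-started model begins much closer to the target solution, which is the intuition behind using abundant cell line data to support scarce PDX data.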

Using data from the NCI-ALMANAC[4], NCI-60[10], GDSC[11], CTRP[12], gCSI[13], and CCLE[14], models can be constructed that generalize across cell-line studies. For example, multi-task networks that learn three auxiliary classification tasks (tumor/normal, cancer type, and cancer site) together with the drug response task may capture more of the total variance and improve precision and recall when trained on CTRP and evaluated on CCLE. Demonstrating cross-study model capability will provide additional confidence that general models can be developed for prediction tasks on cell lines, PDXs, and organoids.
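A multi-task network shares its lower layers across tasks and is trained against one objective that sums the per-task losses. The sketch below shows only that combined objective, assuming squared error for the drug response head and cross-entropy for the three auxiliary classification heads. The task names, class counts, and equal weights are hypothetical; the text does not specify how the losses were weighted.

```python
import math


def squared_error(pred, target):
    """Regression loss for the drug-response head."""
    return (pred - target) ** 2


def cross_entropy(probs, label):
    """Negative log-probability assigned to the true class (clipped to avoid log(0))."""
    return -math.log(max(probs[label], 1e-12))


def multitask_loss(outputs, targets, weights):
    """Weighted sum of the drug-response loss and auxiliary classification losses.

    outputs/targets are dicts keyed by task name. "response" holds a scalar
    prediction and target; every other task holds a list of class
    probabilities and an integer class label.
    """
    total = weights["response"] * squared_error(outputs["response"], targets["response"])
    for task, probs in outputs.items():
        if task != "response":
            total += weights[task] * cross_entropy(probs, targets[task])
    return total


# Hypothetical outputs for one sample from a four-headed network.
outputs = {
    "response": 0.8,
    "tumor_normal": [0.1, 0.9],
    "cancer_type": [0.7, 0.2, 0.1],
    "cancer_site": [0.25, 0.25, 0.5],
}
targets = {"response": 0.5, "tumor_normal": 1, "cancer_type": 0, "cancer_site": 2}
weights = {task: 1.0 for task in outputs}
print(multitask_loss(outputs, targets, weights))
```

Because gradients from all four terms flow into the shared layers, the auxiliary labels can shape the learned representation even though only the response prediction is ultimately used.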

Answering questions of how much data and which methods are suitable is a critical part of Pilot One. Although it is generally held that deep learning methods outperform traditional machine learning methods when large data sets are used, this has not yet been explored for the drug response prediction problem. Early efforts in Pilot One are exploring the relationship among sample size, deep learning methods, and traditional machine learning methods to better characterize how predictive performance depends on each. This information on sample size, together with model accuracy metrics, will be critically important to future experimental designs for those who wish to pursue deep learning approaches to the drug response prediction problem.

Such extensive deep learning and machine learning investigations require significant computational resources, such as those available at the DOE Leadership Computing Facilities (LCF) employed by Pilot One. A recent experiment searched 23,200 deep neural network models, using features selected with COXEN[15] and ideas from Bayesian optimization[16], to find the best model hyperparameters (hyperparameters generally define the choice of functions and the relationships among functions in a given deep learning model). This produced the best cross-study validation results to date, underscoring the critical need for feature selection and hyperparameter optimization when building predictive models. Further, uncertainty quantification (explained in more depth later) adds a new level of computing demand. Uncertainty quantification experiments involving over 30 billion predictions from 450 of the best models generated on the DOE Summit LCF system are ongoing, to understand the relationship between the uncertainty of the best models and the model that performs best in cross-study validation experiments.
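The search described above used ideas from Bayesian optimization; the simpler random search below is swapped in only to show the basic loop of sampling hyperparameters, scoring each configuration, and keeping the best. The search space, `toy_validation_loss`, and its optimum are hypothetical. In a real run, each evaluation would train a network and return its validation loss, which is why thousands of evaluations call for leadership-class machines.

```python
import math
import random

# Hypothetical search space; real CANDLE benchmarks define their own.
SEARCH_SPACE = {
    "learning_rate": (1e-4, 1e-1),  # sampled log-uniformly
    "dropout": (0.0, 0.5),          # sampled uniformly
    "hidden_layers": [1, 2, 3, 4],  # sampled from a discrete set
}


def sample_config(rng):
    """Draw one random hyperparameter configuration from SEARCH_SPACE."""
    lo, hi = SEARCH_SPACE["learning_rate"]
    return {
        "learning_rate": 10 ** rng.uniform(math.log10(lo), math.log10(hi)),
        "dropout": rng.uniform(*SEARCH_SPACE["dropout"]),
        "hidden_layers": rng.choice(SEARCH_SPACE["hidden_layers"]),
    }


def random_search(evaluate, n_trials, seed=0):
    """Evaluate n_trials random configurations and return (best_loss, best_config).

    `evaluate` maps a configuration to a validation loss (lower is better).
    """
    rng = random.Random(seed)
    best_loss, best_config = math.inf, None
    for _ in range(n_trials):
        config = sample_config(rng)
        loss = evaluate(config)
        if loss < best_loss:
            best_loss, best_config = loss, config
    return best_loss, best_config


def toy_validation_loss(config):
    """Hypothetical objective with its minimum near lr=1e-2, dropout=0.2, 2 layers."""
    return ((math.log10(config["learning_rate"]) + 2) ** 2
            + (config["dropout"] - 0.2) ** 2
            + 0.1 * abs(config["hidden_layers"] - 2))


loss, config = random_search(toy_validation_loss, n_trials=100)
print(loss, config)
```

Each trial is independent, so the evaluations parallelize trivially across nodes; Bayesian optimization instead uses the results so far to choose where to sample next.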

Reflecting on insights from Pilot One activities and current gaps in available literature, future work will focus on exploring new predictive models to better utilize, ground, and enrich biological knowledge. Efforts to improve drug representations for response prediction are expected to benefit from research involving training semi-supervised networks on millions of compounds. In efforts to improve understanding of trained models, mechanistic information is being incorporated into more interpretable deep learning models. Active learning in response prediction—which balances uncertainty, accuracy, and lead discovery—will be used to guide the acquisition of experimental data for animal models in a cost-effective and timely manner. And finally, a necessary step toward precision models is gaining a fine-grained understanding of prediction error, an insight enabled by the demonstrated capability in large-scale model sweeps.
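Balancing uncertainty against promising predictions is often done with an acquisition score. A minimal sketch, assuming an ensemble of models provides several predictions per candidate experiment and that a higher predicted response is more desirable. The upper-confidence-bound rule (mean plus `kappa` times standard deviation) is one common choice, not necessarily the rule Pilot One will adopt, and the candidate names and values are hypothetical.

```python
import statistics


def ucb_scores(ensemble_predictions, kappa=1.0):
    """Upper-confidence-bound acquisition score for each candidate.

    ensemble_predictions maps a candidate id to the predictions of several
    models. The mean rewards promising responses (exploitation); the standard
    deviation rewards candidates the models disagree on (exploration).
    """
    scores = {}
    for cid, preds in ensemble_predictions.items():
        scores[cid] = statistics.fmean(preds) + kappa * statistics.pstdev(preds)
    return scores


def select_batch(ensemble_predictions, batch_size, kappa=1.0):
    """Return the ids of the top-scoring candidates to test next."""
    scores = ucb_scores(ensemble_predictions, kappa)
    return sorted(scores, key=scores.get, reverse=True)[:batch_size]


# Hypothetical predictions from a three-model ensemble for three candidate experiments.
candidates = {
    "A": [0.9, 0.9, 0.9],  # confidently good
    "B": [0.2, 0.8, 0.5],  # uncertain
    "C": [0.1, 0.1, 0.1],  # confidently poor
}
print(select_batch(candidates, 2))             # ranks the confident lead first
print(select_batch(candidates, 2, kappa=3.0))  # ranks the uncertain candidate first
```

Raising `kappa` shifts the choice toward candidates the models disagree on, which is the exploration side of the trade-off; lowering it favors likely leads.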

Oncogenic mutations in Ras genes are associated with more than 30% of cancers and are particularly prevalent in those of the lung, colon, and pancreas. Though Ras mutations have been studied for decades, there are currently no Ras inhibitors, and a detailed molecular mechanism for how Ras engages and activates proximal signaling proteins (RAF) remains elusive.[17] Ras signaling takes place at and is dependent on cellular membranes, a complex cellular environment that is difficult to recapitulate using current experimental technologies.

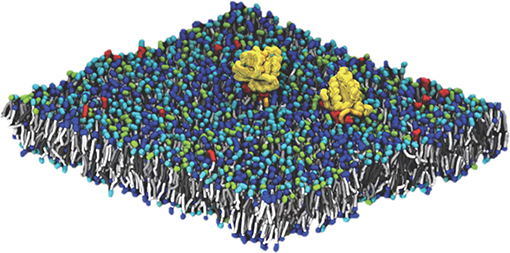

Pilot Two, Improving Outcomes for RAS-related Cancer, is focused on delivering a validated multiscale model of Ras biology on a cell membrane by combining the experimental capabilities at the Frederick National Laboratory for Cancer Research with the computational resources of the National Nuclear Security Administration (NNSA), a semi-autonomous agency of the DOE. The principal challenge in modeling this system is the diverse length and time scales involved. Lipid membranes evolve over a macroscopic scale (micrometers and milliseconds). Capturing this evolution is critical, as changes in lipid concentration define the local environment in which Ras operates. The Ras protein itself, however, binds over time and length scales which are microscopic (nanometers and microseconds). In order to elucidate the behavior of Ras proteins in the context of a realistic membrane, our modeling effort must span the multiple orders of magnitude between microscopic and macroscopic behavior. The Pilot Two team has built such a framework, developing a macroscopic model that captures the evolution of the lipid environment and which is consistent with an optimized microscopic model that captures protein-protein and protein-lipid interactions at the molecular scale. Macroscopic model components (lipid environment, lipid-lipid interactions, protein behavior, and protein-lipid interactions) were characterized through close collaboration between the experimenters at Frederick National Laboratory and the computational scientists from the DOE/NNSA. The microscopic model is based on standard Martini force fields for coarse-grained molecular dynamics (CGMD), modified to correctly capture certain details of lipid phase behavior.[18][19][20][21] A snapshot from a typical micro-scale simulation run, showing two Ras proteins on a 30 nm × 30 nm patch of lipid membrane (containing ~150,000 particles) is shown in Figure 2.

In order to bring the two scales together, the team devised a novel workflow whereby microscopic subsections of a running macroscopic model are scored for uniqueness using a machine learning algorithm operating in a reduced order space that has been trained on previous simulations. The most unique subsections in the macroscopic simulation are identified and re-created as CGMD simulations, which explore the microscopic behavior. Information from the many thousands of microscopic simulations is then fed back into the macroscopic model, so that it is continually improving even as the simulations are running.[22]
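The selection step can be pictured as novelty search in a latent space. The sketch below assumes each membrane patch has already been encoded as a low-dimensional vector and scores novelty as the distance to the nearest previously simulated patch. This nearest-neighbor rule and the 2-D encodings are illustrative assumptions, not MuMMI's published scoring method.

```python
import math


def novelty_scores(candidates, history):
    """Distance from each candidate to its nearest previously simulated patch."""
    if not history:
        return [math.inf] * len(candidates)
    return [min(math.dist(c, h) for h in history) for c in candidates]


def pick_most_novel(candidates, history, k):
    """Greedily choose k candidate indices, most novel first.

    Each pick is added to the history so that near-duplicates of a chosen
    patch lose their novelty and are not selected in the same round.
    """
    history = list(history)
    remaining = list(range(len(candidates)))
    chosen = []
    for _ in range(min(k, len(remaining))):
        scores = novelty_scores([candidates[i] for i in remaining], history)
        best = remaining[max(range(len(remaining)), key=scores.__getitem__)]
        chosen.append(best)
        history.append(candidates[best])
        remaining.remove(best)
    return chosen


# Hypothetical 2-D latent encodings of membrane patches.
simulated = [(0.0, 0.0)]
patches = [(0.1, 0.0), (5.0, 5.0), (5.1, 5.0), (-3.0, 0.0)]
print(pick_most_novel(patches, simulated, k=2))
```

Here patch 2 is chosen first; patch 1 then looks almost identical to it, so the second pick goes to patch 3 instead, spreading expensive CGMD runs across distinct membrane environments.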

This modeling infrastructure was designed to exploit the Sierra supercomputer at Lawrence Livermore National Laboratory. The scale and heterogeneous architecture of Sierra make it ideal for a workflow that combines AI technology with predictive simulation. Running on the entire machine, the team was able to simulate at the macroscopic level a 1 μm × 1 μm, 14-lipid membrane with 300 Ras proteins, generating over 100,000 microscopic simulations that together capture over 200 ms of protein behavior. This unprecedented achievement represents an improvement of almost two orders of magnitude over the previous state of the art. Even so, the space of all possible lipid mixtures is huge, requiring tens of thousands of samples for any meaningful coverage. This type of Multiple Metrics Modeling Infrastructure (MuMMI) simulation will always be limited by the available high-performance computing (HPC) resources. With an exascale machine, we can substantially increase the dimensionality of the input space and its coverage, significantly improving the applicability of future campaigns.

In the coming years, the team will exploit this capability to explore Ras behavior on lipid membranes and extend the model in three important directions. First, both the macro and micro models will be modified to incorporate the RAF kinase, which binds to Ras as the first step in the MAPK pathway that leads to growth signaling. Second, we will extend the infrastructure to include fully atomistic resolution, creating a three-level (macro/micro/atomistic) multiscale model. Third, we will incorporate membrane curvature into the dynamics of the membrane, which is currently constrained to remain flat. The improved infrastructure will enable the largest and most accurate computational exploration of Ras biology to date.

Advancing cancer surveillance using AI and high-performance computing

The Surveillance, Epidemiology, and End Results (SEER) program funded by the NCI was established in 1973 for the advancement of public health and for reducing the cancer burden in the United States. SEER currently collects and publishes cancer incidence and survival data from population-based cancer registries covering ~34.6% of the U.S. population. The curated, population-level SEER data provide a rich information source for data-driven discovery to understand drivers of cancer outcomes in the real world.

An outstanding challenge of the SEER program is how to achieve near real-time cancer surveillance. Information abstraction is a critical step to facilitate data-driven explorations. However, the process is fully manual to ensure high-quality data. As the SEER program increases the breadth of information captured, the manual process is no longer scalable. By partnering computational and data scientists from DOE with NCI SEER domain experts, Pilot Three, Population Information Integration, Analysis, and Modeling for Precision Surveillance, aims to leverage high-performance computing and artificial intelligence to meet the emerging needs of cancer surveillance. Moreover, Pilot Three envisions a fully integrated data-driven modeling and simulation framework to enable meaningful translation of big SEER data. By collecting and linking additional patient data, we can generate profiles for patients with cancer that include information about healthcare delivery system parameters and continuity of care. Such rich data will facilitate data-driven modeling and simulation of patient-specific health trajectories to support precision oncology research at the population level.

To date, Pilot Three has mainly focused on the development, scaling, and deployment of cutting-edge AI tools to semi-automate information abstraction from unstructured pathology text reports, the main source of information for cancer registries. In partnership with the Louisiana Tumor Registry and the Kentucky Cancer Registry, several AI-based natural language processing (NLP) tools have been developed and benchmarked for abstraction of fundamental cancer data elements such as cancer site, laterality, behavior, histology, and grade.[23][24][25][26][27][28][29] The NLP tools rely on the latest AI advances, including multi-task learning and attention mechanisms. Scalable training and hyperparameter optimization of the tools are carried out on the pre-exascale computing infrastructure available within the DOE laboratory complex.[30] Following an iterative optimization protocol, the most computationally efficient and clinically effective tools are deployed for evaluation across participating SEER registries. Based on preliminary testing, the NLP tools have been able to accurately classify all five data elements for 42.5% of cancer cases. Work to improve this level of accuracy is underway in subsequent versions, as is the incorporation of an uncertainty quantification component to increase user confidence.
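A case-level figure such as the one above is stricter than per-element accuracy, because a case counts only when every one of its data elements is correct. The sketch below contrasts the two metrics; the dictionary layout and the example labels are hypothetical (real registry coding uses standardized codes rather than plain words).

```python
ELEMENTS = ("site", "laterality", "behavior", "histology", "grade")


def per_element_accuracy(predictions, gold):
    """Accuracy of each data element scored on its own."""
    n = len(gold)
    return {e: sum(p[e] == g[e] for p, g in zip(predictions, gold)) / n for e in ELEMENTS}


def all_elements_correct_rate(predictions, gold):
    """Fraction of cases in which all five elements are correct at once."""
    hits = sum(all(p[e] == g[e] for e in ELEMENTS) for p, g in zip(predictions, gold))
    return hits / len(gold)


# Hypothetical labels for two pathology reports; the second prediction has a wrong grade.
gold = [
    {"site": "lung", "laterality": "left", "behavior": "malignant", "histology": "adenocarcinoma", "grade": "2"},
    {"site": "breast", "laterality": "right", "behavior": "malignant", "histology": "ductal", "grade": "3"},
]
predictions = [dict(gold[0]), dict(gold[1], grade="2")]
print(per_element_accuracy(predictions, gold))
print(all_elements_correct_rate(predictions, gold))
```

Four of the five elements are perfect here, yet only half the cases are fully correct, so per-element scores can look considerably better than the case-level rate.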

Although the patient information currently collected across SEER registries is mainly clinical (clin-omics), increasingly other -omics types of information are expected to become part of cancer surveillance. Specifically, radiomics (i.e., biomarkers automatically extracted from histopathological and radiological images via targeted image processing algorithms), as well as genomics, will provide important insight to understand the effectiveness of cancer treatment choices.

Moving forward, Pilot Three will deploy the latest NLP tools in production across participating SEER registries, integrating them into the registries' workflows through application programming interfaces (APIs), to determine the most effective human-AI workflow for broad and standardized technology integration across registries. In addition, working collaboratively with domain experts, the team will extend information extraction to biomarkers and to indicators of disease progression such as metastasis and recurrence. The pilot is engaging in several partnerships with academic and commercial entities to bring in heterogeneous data sources for more effective longitudinal trajectory modeling. Future plans also include using causal inference to understand the real-world impact of factors beyond treatment (social, economic, and environmental).

Looking ahead: Opportunities and challenges

In addition to large-scale computing as a critical and necessary element to pursue the many opportunities for AI in cancer research, other areas must also develop to realize the tremendous potential. In this section, we list some of these opportunities.

First, HPC platforms provide a high-speed interconnect between compute nodes that is integral to handling the communication required for data-parallel or model-parallel training. While cloud platforms have recently made significant investments in improving interconnects, interconnect performance remains a challenge there and would encourage projects like Pilot Three to limit distributed training on the cloud to a single node. That said, the on-demand nature of cloud platforms can allow for more efficient resource utilization of AI workflows, and the modern Linux environments and familiar hardware configurations available on cloud platforms offer superior support for AI workflow software, which can increase productivity.
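The interconnect matters because synchronous data-parallel training exchanges gradients at every step: each worker computes a gradient on its shard of the data, and an allreduce averages those gradients across all workers. The sketch below simulates that pattern in a single process for a one-parameter model; `allreduce_mean` is a hypothetical stand-in for a collective such as MPI's allreduce, which on a real system crosses the network once per step.

```python
def local_gradient(w, shard):
    """Mean-squared-error gradient for the model y = w * x on one worker's shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)


def allreduce_mean(values):
    """Hypothetical single-process stand-in for an allreduce: every worker
    ends up holding the average of all workers' values."""
    avg = sum(values) / len(values)
    return [avg] * len(values)


def data_parallel_step(w, shards, lr):
    """One synchronous data-parallel gradient step across len(shards) workers."""
    grads = [local_gradient(w, shard) for shard in shards]  # parallel on a real system
    synced = allreduce_mean(grads)  # the communication step that stresses the interconnect
    return w - lr * synced[0]  # every worker applies the same update


data = [(float(x), 3.0 * x) for x in range(1, 9)]
shards = [data[i:i + 2] for i in range(0, len(data), 2)]  # 4 workers, equal shard sizes
w = 0.0
for _ in range(100):
    w = data_parallel_step(w, shards, lr=0.01)
print(w)
```

With equal-sized shards, the averaged gradient equals the full-batch gradient, so adding workers changes how fast each step runs, not what it computes; the price is one collective exchange per step, which is where a slow interconnect hurts.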

Second, the level of available data currently limits the potential for AI in cancer research. Developing data resources of sufficient size, quality, and coherence will be essential for AI to develop robust models within the domain of the available data resources.

Third, evaluation and validation of data-driven AI models, and quantifying the uncertainty in individual predictions, will continue to be an important aspect for the adoption of AI in cancer research, posing a challenge to the community to concurrently develop criteria for evaluation and validation of models while delivering the necessary data and large-scale computational resources required.

In the next two subsections, we highlight two efforts within the JDACS4C collaboration to address these challenges. The first focuses on scaling the training of the deep neural network application on HPC systems, and the second quantifies the uncertainty in the trained models to establish a measure of confidence and limits on how they should be used in production.

CANDLE: Cancer Distributed Learning Environment

The CANDLE project[16] builds a single, scalable deep neural network application and is being used to address the challenges in each of the JDACS4C pilots.

The primary challenge for CANDLE is enabling the most challenging deep learning problems in cancer research to run on the most capable supercomputers in the DOE and NIH. Implementations of CANDLE have been tested on the DOE Titan, Cori, Theta, and Summit systems, as well as on the NIH Biowulf system using container technologies.[31] The CANDLE software builds on open-source deep learning frameworks, including Keras, TensorFlow, and PyTorch. Through collaborations with DOE computing centers, HPC vendors, and the Exascale Computing Project's (ECP) co-design and software technology projects, CANDLE is being prepared for the coming DOE exascale platforms.

Features currently supported in CANDLE include feature selection, hyperparameter optimization, model training, inferencing, and UQ. Future release plans call for supporting experimental design, model acceleration, uncertainty guided inference, network architecture search, synthetic data generation, and data modality conversion. These features have been used to evaluate over 20,000 models in a single run on a DOE HPC system.

The CANDLE project also features a set of deep learning benchmarks that are aimed at solving a problem associated with each of the pilots. These benchmarks embody different deep learning approaches to problems in cancer biology, and they are implemented in compliance with CANDLE standards, making them amenable to large-scale model search and inferencing experiments.

Uncertainty quantification

UQ is a critical component across all three JDACS4C pilots. It is a field of analysis that estimates accuracy under multi-modal uncertainties. UQ makes it possible to detect unreliable model predictions[32] and supports improved design of experiments. UQ quantifies the effects of statistical fluctuations, extrapolation, overfitting, model misspecification, and sampling biases, resulting in confidence measures for individual model predictions.
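One widely used way to attach a confidence measure to individual predictions is to train an ensemble of models and treat their disagreement as uncertainty. A minimal sketch, assuming every model scores the same samples; the predictions and the flagging threshold are hypothetical, and the text does not state that the pilots use this particular UQ method.

```python
import statistics


def ensemble_uncertainty(predictions_by_model):
    """Per-sample (mean, standard deviation) across an ensemble of models.

    predictions_by_model[m][i] is model m's prediction for sample i.
    """
    return [(statistics.fmean(p), statistics.stdev(p)) for p in zip(*predictions_by_model)]


def flag_unreliable(summary, max_std):
    """Indices of samples whose ensemble spread exceeds a chosen threshold."""
    return [i for i, (_, sd) in enumerate(summary) if sd > max_std]


# Hypothetical predictions from three models for three samples.
predictions_by_model = [
    [1.0, 2.0, 3.0],
    [1.0, 2.5, 3.0],
    [1.0, 1.5, 3.0],
]
summary = ensemble_uncertainty(predictions_by_model)
print(summary)
print(flag_unreliable(summary, max_std=0.1))
```

Ensemble spread mainly captures model (epistemic) uncertainty; noise in the data itself usually needs a separate treatment, such as models that also predict a variance.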

Historically, results from computational modeling in the biological sciences did not incorporate UQ, but measures of certainty are essential for actionable predictive analytics.[33] The problem is exacerbated as we begin to address questions with poorly understood causal models using large yet noisy, multimodal, and incomplete data sets. Methodological advances are allowing all three pilots to use HPC technology to estimate uncertainty simultaneously with the results.

In addition to providing confidence intervals, the development of new UQ technology allows assessment and improvement of data quality[34], evaluation and design of models appropriate to the data quality and quantity, and prioritization of further observations or experiments that can best improve model quality. These developments are currently being tested in the JDACS4C pilots and are likely to impact the wider application of large-data-driven modeling.

Conclusion

The JDACS4C collaboration continues to provide valuable insights into the future for AI in cancer research and the essential role that extreme-scale computing will have in shaping and informing that future. Concepts have been transformed into preliminary practice in a short period of time, as a result of multi-disciplinary teamwork and access to advanced computing resources. AI is being used to guide experimental design to make more effective use of valuable laboratory resources, to develop new capabilities for molecular simulation, and to streamline and improve efficiencies in the acquisition of clinical data.

The JDACS4C collaboration established a foundation for team science and is enabling innovation at the intersection of advanced computing technologies and cancer research. The opportunities for extreme-scale computing in AI and cancer research extend well beyond these pilots.

Acknowledgements

Author contributions

GZ made the paper plan, wrote the abstract, and assembled the manuscript. ES, EG, AG, TH, and CL contributed to the introduction. RS, JD, and YE made substantial contribution to the conception and design of the work in Pilot One. TBr and FX provided acquisition, analysis, and interpretation of data in Pilot One. FS and DN made substantial contributions to the conception, design, and execution of Pilot Two. GT and LP made substantial contribution in designing, developing, and writing the section about Pilot Three. RS made substantial contribution to the conception and design of the work in CANDLE. RS, TBr, TBh, and FX developed and optimized predictive models with UQ in Pilots One, Three, and CANDLE. GZ contributed to extending the application of CANDLE at NIH. ES and EG contributed to the opportunities and challenges. TBh wrote and developed the section related to UQ. EG, AG, TH, and CL contributed to the conclusion.

Funding

This work has been supported in part by the Joint Design of Advanced Computing Solutions for Cancer (JDACS4C) program established by the U.S. Department of Energy (DOE) and the National Cancer Institute (NCI) of the National Institutes of Health. This work was performed under the auspices of the U.S. Department of Energy by Argonne National Laboratory under Contract DE-AC02-06-CH11357. This work was performed under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under Contract DE-AC52-07NA27344. LLNL-JRNL-773355. This work was performed under the auspices of the U.S. Department of Energy by Los Alamos National Laboratory under Contract DE-AC52-06NA25396. Computing support for this work came in part from the Lawrence Livermore National Laboratory Institutional Computing Grand Challenge program. This project was funded in part with federal funds from the NCI, NIH, under contract no. HHSN261200800001E. This research was supported in part by the Exascale Computing Project (17-SC-20-SC), a collaborative effort of the U.S. Department of Energy Office of Science and the National Nuclear Security Administration. This research used resources of the Argonne Leadership Computing Facility and the Oak Ridge Leadership Computing Facility, which are DOE Office of Science User Facilities. This research used resources of the Lawrence Livermore Computing Facility and the Los Alamos National Laboratory supported by the DOE National Nuclear Security Administration's Advanced Simulation and Computing (ASC) Program. This manuscript has been authored in part by UT-Battelle, LLC under Contract No. DE-AC05-00OR22725 with the U.S. Department of Energy. This research used resources of the Oak Ridge Leadership Computing Facility, which is a DOE Office of Science User Facility supported under Contract DE-AC05-00OR22725.

The United States Government retains and the publisher, by accepting the article for publication, acknowledges that the United States Government retains a non-exclusive, paid-up, irrevocable, world-wide license to publish or reproduce the published form of the manuscript, or allow others to do so, for United States Government purposes. The Department of Energy will provide public access to these results of federally sponsored research in accordance with the DOE Public Access Plan (http://energy.gov/downloads/doe-public-access-plan).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- ↑ "What is the Precision Medicine Initiative?". Genetics Home Reference. National Institutes of Health. 2019. https://ghr.nlm.nih.gov/primer/precisionmedicine/initiative. Retrieved 20 September 2019.

- ↑ Sun, D.; Wang, M.; Li, A. (2019). "A Multimodal Deep Neural Network for Human Breast Cancer Prognosis Prediction by Integrating Multi-Dimensional Data". IEEE/ACM Transactions on Computational Biology and Bioinformatics 16 (3): 841–50. doi:10.1109/TCBB.2018.2806438.

- ↑ Xia, F.; Shukla, M.; Brettin, T. et al. (2018). "Predicting tumor cell line response to drug pairs with deep learning". BMC Bioinformatics 19 (Suppl. 18): 486. doi:10.1186/s12859-018-2509-3. PMC PMC6302446. PMID 30577754. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6302446.

- ↑ Holbeck, S.L.; Camalier, R.; Crowell, J.A. et al. (2017). "The National Cancer Institute ALMANAC: A Comprehensive Screening Resource for the Detection of Anticancer Drug Pairs with Enhanced Therapeutic Activity". Cancer Research 77 (13): 3564-3576. doi:10.1158/0008-5472.CAN-17-0489. PMC PMC5499996. PMID 28446463. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5499996.

- ↑ "Dragon". Kode Chemoinformatics srl. 2019. https://chm.kode-solutions.net/products_dragon.php. Retrieved 30 April 2019.

- ↑ Subramanian, A.; Narayan, R.; Corsello, S.M. et al. (2017). "A Next Generation Connectivity Map: L1000 Platform and the First 1,000,000 Profiles". Cell 171 (6): 1437-1452.e17. doi:10.1016/j.cell.2017.10.049. PMC PMC5990023. PMID 29195078. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5990023.

- ↑ Chen, Y.; Li, Y.; Narayan, R. et al. (2016). "Gene expression inference with deep learning". Bioinformatics 32 (12): 1832-9. doi:10.1093/bioinformatics/btw074. PMC PMC4908320. PMID 26873929. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4908320.

- ↑ Xie, L.; He, S.; Song, X. et al. (2018). "Deep learning-based transcriptome data classification for drug-target interaction prediction". BMC Genomics 19 (Suppl. 7): 667. doi:10.1186/s12864-018-5031-0. PMC PMC6156897. PMID 30255785. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6156897.

- ↑ Torrey, L.; Shavlik, J. (2010). "Chapter 11: Transfer Learning". In Olivas, E.S.; Guerrero, J.D.M.; Martinez-Sober, M. et al. Handbook of Research on Machine Learning Applications and Trends: Algorithms, Methods, and Techniques. IGI Global. pp. 242–64. doi:10.4018/978-1-60566-766-9. ISBN 9781605667669.

- ↑ "NCI-60 Human Tumor Cell Lines Screen". Developmental Therapeutics Program. National Institutes of Health. 26 August 2015. https://dtp.cancer.gov/discovery_development/nci-60/default.htm.

- ↑ Yang, W.; Soares, J.; Greninger, P. et al. (2013). "Genomics of Drug Sensitivity in Cancer (GDSC): A resource for therapeutic biomarker discovery in cancer cells". Nucleic Acids Research 41 (DB1): D955-61. doi:10.1093/nar/gks1111. PMC PMC3531057. PMID 23180760. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3531057.

- ↑ Basu, A.; Bodycombe, N.E.; Cheah, J.H. et al. (2013). "An interactive resource to identify cancer genetic and lineage dependencies targeted by small molecules". Cell 154 (5): 1151–61. doi:10.1016/j.cell.2013.08.003. PMC PMC3954635. PMID 23993102. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3954635.

- ↑ Klijn, C.; Durinck, S.; Stawiski, E.W. et al. (2015). "A comprehensive transcriptional portrait of human cancer cell lines". Nature Biotechnology 33 (3): 306–12. doi:10.1038/nbt.3080. PMID 25485619.

- ↑ Barretina, J.; Caponigro, G.; Stransky, N. et al. (2012). "The Cancer Cell Line Encyclopedia enables predictive modelling of anticancer drug sensitivity". Nature 483 (7391): 603-7. doi:10.1038/nature11003. PMC PMC3320027. PMID 22460905. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3320027.

- ↑ Smith, S.C.; Baras, A.S.; Lee, J.K. et al. (2010). "The COXEN principle: translating signatures of in vitro chemosensitivity into tools for clinical outcome prediction and drug discovery in cancer". Cancer Research 70 (5): 1753-8. doi:10.1158/0008-5472.CAN-09-3562. PMC PMC2831138. PMID 20160033. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2831138.

- ↑ Wozniak, J.M.; Jain, R.; Balaprakash, P. et al. (2018). "CANDLE/Supervisor: A workflow framework for machine learning applied to cancer research". BMC Bioinformatics 19 (Suppl. 18): 491. doi:10.1186/s12859-018-2508-4. PMC PMC6302440. PMID 30577736. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6302440.

- ↑ Simanshu, D.K.; Nissley, D.V.; McCormick, F. (2017). "RAS Proteins and Their Regulators in Human Disease". Cell 170 (1): 17–33. doi:10.1016/j.cell.2017.06.009. PMC PMC5555610. PMID 28666118. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5555610.

- ↑ Carpenter, T.S.; López, C.A.; Neale, C. et al. (2018). "Capturing Phase Behavior of Ternary Lipid Mixtures with a Refined Martini Coarse-Grained Force Field". Journal of Chemical Theory and Computation 14 (11): 6050-6062. doi:10.1021/acs.jctc.8b00496. PMID 30253091.

- ↑ Neale, C.; García, A.E. (2018). "Methionine 170 is an Environmentally Sensitive Membrane Anchor in the Disordered HVR of K-Ras4B". Journal of Physical Chemistry B 122 (44): 10086-10096. doi:10.1021/acs.jpcb.8b07919. PMID 30351122.

- ↑ Ingólfsson, H.I.; Carpenter, T.S.; Bhatia, H. et al. (2017). "Computational Lipidomics of the Neuronal Plasma Membrane". Biophysical Journal 113 (10): 2271-2280. doi:10.1016/j.bpj.2017.10.017. PMC PMC5700369. PMID 29113676. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5700369.

- ↑ Travers, T.; López, C.A.; Van, Q.N. et al. (2018). "Molecular recognition of RAS/RAF complex at the membrane: Role of RAF cysteine-rich domain". Scientific Reports 8 (1): 8461. doi:10.1038/s41598-018-26832-4. PMC PMC5981303. PMID 29855542. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5981303.

- ↑ Di Natale, F.; Bhatia, H.; Carpenter, T.S. et al. (2019). "A massively parallel infrastructure for adaptive multiscale simulations: Modeling Ras initiation pathway for cancer". Proceedings of the 2019 International Conference for High Performance Computing, Networking, Storage and Analysis: 57. doi:10.1145/3295500.3356197.

- ↑ Qiu, J.X.; Yoon, H.J.; Srivastava, K. et al. (2018). "Scalable deep text comprehension for Cancer surveillance on high-performance computing". BMC Bioinformatics 19 (Suppl. 18): 488. doi:10.1186/s12859-018-2511-9. PMC6302459. PMID 30577743. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6302459.

- ↑ Gao, S.; Young, M.T.; Qiu, J.X. et al. (2017). "Hierarchical attention networks for information extraction from cancer pathology reports". JAMIA 25 (3): 321–330. doi:10.1093/jamia/ocx131. PMID 29155996.

- ↑ Qiu, J.X.; Yoon, H.J.; Fearn, P.A. et al. (2018). "Deep Learning for Automated Extraction of Primary Sites From Cancer Pathology Reports". IEEE Journal of Biomedical and Health Informatics 22 (1): 244–51. doi:10.1109/JBHI.2017.2700722. PMID 28475069.

- ↑ Tourassi, G. (2018). "DeepAbstractor: A scalable deep learning framework for automated information extraction from free-text pathology reports". Proceedings of the 30th Anniversary AACR Special Conference Convergence: Artificial Intelligence, Big Data, and Prediction in Cancer. https://www.aacr.org/Meetings/Pages/MeetingDetail.aspx?EventItemID=149&DetailItemID=772.

- ↑ Alawad, M.; Hasan, S.M.S.; Christian, J.B. et al. (2018). "Retrofitting Word Embeddings with the UMLS Metathesaurus for Clinical Information Extraction". Proceedings from the 2018 IEEE International Conference on Big Data: 2838–2846. doi:10.1109/BigData.2018.8621999.

- ↑ Alawad, M.; Yoon, H.-J.; Tourassi, G.D. (2018). "Coarse-to-fine multi-task training of convolutional neural networks for automated information extraction from cancer pathology reports". Proceedings from the 2018 IEEE EMBS International Conference on Biomedical & Health Informatics: 218–221. doi:10.1109/BHI.2018.8333408.

- ↑ Yoon, H.-J.; Robinson, S.; Christian, J.B. et al. (2018). "Filter pruning of Convolutional Neural Networks for text classification: A case study of cancer pathology report comprehension". Proceedings from the 2018 IEEE EMBS International Conference on Biomedical & Health Informatics: 345–348. doi:10.1109/BHI.2018.8333439.

- ↑ Yoon, H.-J.; Alawad, M.; Christian, J.B. et al. (2018). "HPC-Based Hyperparameter Search of MT-CNN for Information Extraction from Cancer Pathology Reports". Fourth Computational Approaches for Cancer Workshop. https://sc18.supercomputing.org/proceedings/workshops/workshop_pages/ws_cafcw107.html.

- ↑ Zaki, G.F.; Wozniak, J.M.; Ozik, J. et al. (2018). "Portable and Reusable Deep Learning Infrastructure with Containers to Accelerate Cancer Studies". Proceedings from the 2018 IEEE/ACM 4th International Workshop on Extreme Scale Programming Models and Middleware: 54–61. doi:10.1109/ESPM2.2018.00011.

- ↑ Hengartner, N.; Cuellar, L.; Wu, X.C. et al. (2018). "CAT: Computer aided triage improving upon the Bayes risk through ε-refusal triage rules". BMC Bioinformatics 19 (Suppl. 18): 485. doi:10.1186/s12859-018-2503-9. PMC6302364. PMID 30577756. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6302364.

- ↑ Begoli, E.; Bhattacharya, T.; Kusnezov, D. et al. (2019). "The need for uncertainty quantification in machine-assisted medical decision making". Nature Machine Intelligence 1: 20–23. doi:10.1038/s42256-018-0004-1.

- ↑ Thulasidasan, S.; Bhattacharya, T.; Bilmes, J. et al. (2019). "Combating Label Noise in Deep Learning using Abstention". Proceedings of Machine Learning Research 97: 6234–6243. http://proceedings.mlr.press/v97/thulasidasan19a.html.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation. In some cases important information was missing from the references, and that information was added.