Difference between revisions of "Journal:Research on information retrieval model based on ontology"

Shawndouglas (talk | contribs) (Saving and adding more.) |


{| border="0" cellpadding="5" cellspacing="0" width="350px"

|-

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Fig. 3''' Pseudo-code for selecting the weight factor by genetic algorithm</blockquote>

|-

|}

|}

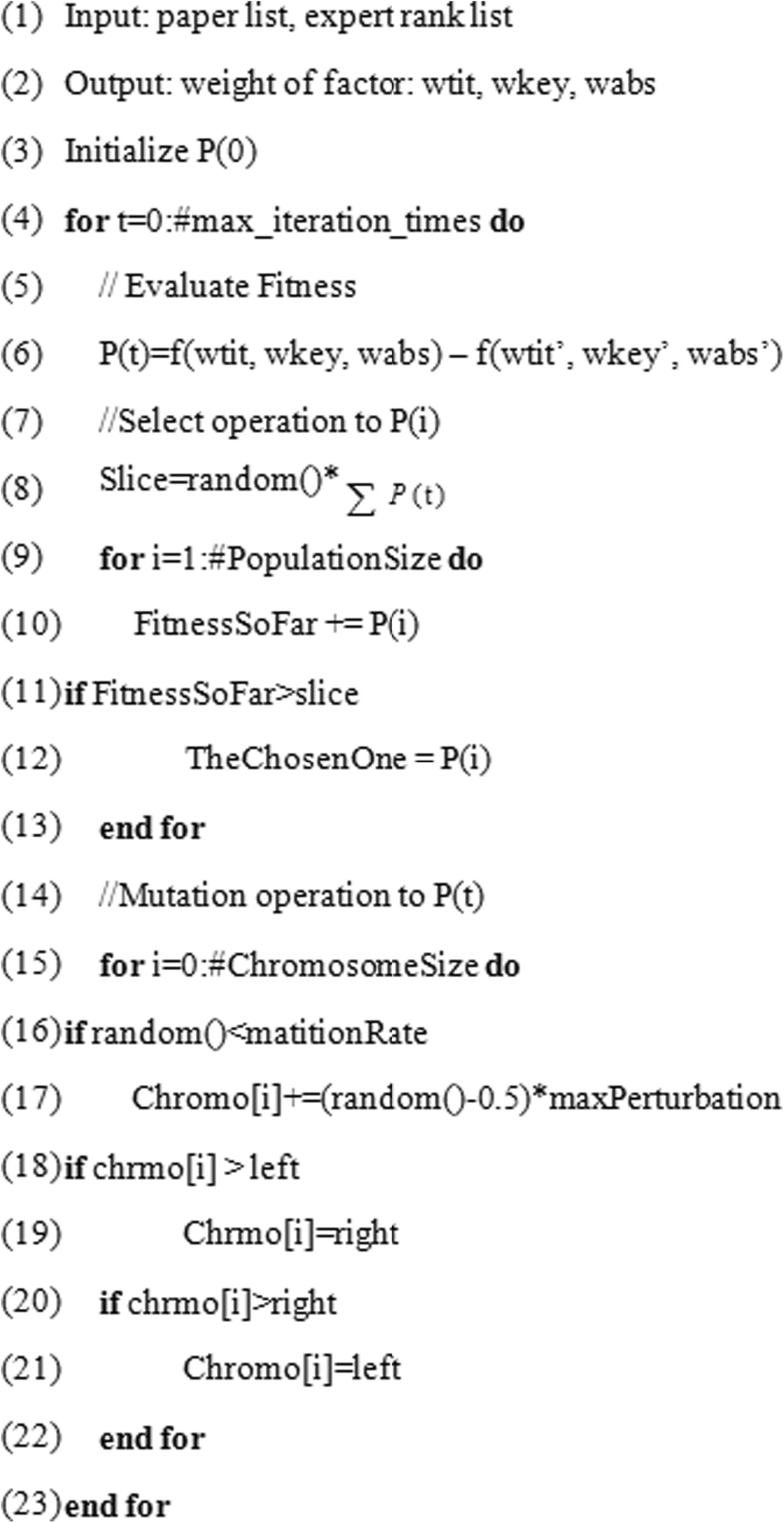

This algorithm simulates the evolution process by gradually adjusting the weight factors and eliminating factor combinations with a low fitness value. If the fitness of one combination is lower than that of another, that combination is likely to be excluded from the next generation. To avoid local optima, we start from many initial groups and discard unqualified groups step by step. In each iteration, each factor is taken from the interval [''w<sub>i</sub>'' − 0.2, ''w<sub>i</sub>'' + 0.4] to reduce the influence of negative factors. The fitness function ''P''(''t'') determines how fit an individual is under a new weight combination (''w'''<sub>tit</sub>, ''w'''<sub>key</sub>, ''w'''<sub>abs</sub>). The previous factor set is replaced by the set that scores a higher fitness under ''P''(''t''); the new set is then used to calculate the similarity of each paper to a query word, and the rank list is generated. The penalty function ''f'' measures the distance from the expert list.

Then, for each semantic meaning of an ontology term, the system checks whether it appears in the extracted characteristic vocabulary. If it does, the document and weight of the semantic term are calculated so that the text is represented with semantic information.

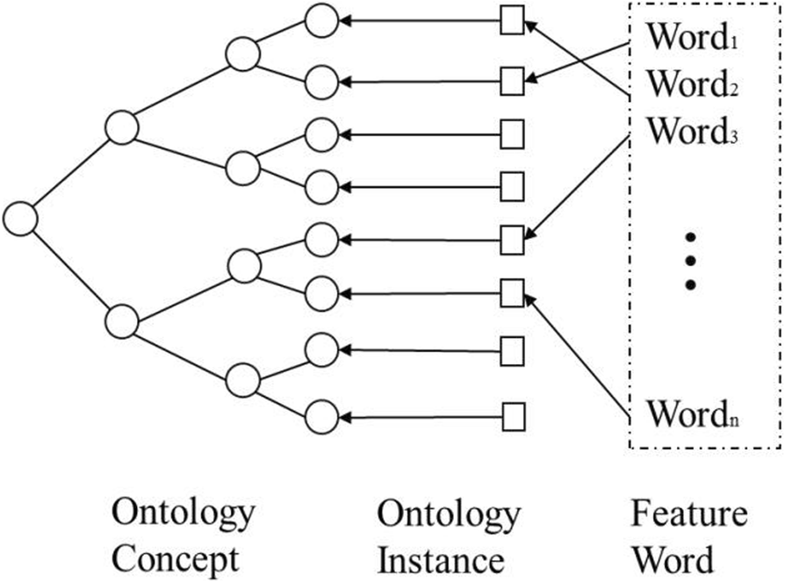

After document feature extraction, a concept-based document index is established to reflect the internal relations between text index terms, and ambiguity introduced during annotation is excluded. A concept-based index consists of feature words together with the relations given by semantic parsing. Feature words are connected through ontology instances and documents. The structure of the ontology concept index is shown in Fig. 4.

[[File:Fig4 Yu JOnWireCommNet2019 2019.png|450px]] | |||

{{clear}} | |||

{| | |||

| STYLE="vertical-align:top;"| | |||

{| border="0" cellpadding="5" cellspacing="0" width="450px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Fig. 4''' Index structure based on the ontology concept</blockquote> | |||

|- | |||

|} | |||

|} | |||

===Ontology document retrieval=== | |||

The procedure of document retrieval is listed below: | |||

#The user inputs search words or phrases in the search interface; the system then removes function words and retains the nouns and verbs. Term extraction is applied to obtain semantic conceptual words and phrases, and the result is passed to the query transition module.

#The query transition module sends the results to the ontology server to search for a corresponding semantic concept, including hypernym, hyponym, synonym, and conceptual meanings.<ref name="MesserlyInfo97">{{cite web |url=https://patents.google.com/patent/US6161084 |title=Information retrieval utilizing semantic representation of text by identifying hypernyms and indexing multiple tokenized semantic structures to a same passage of text |author=Messerly, J.J.; Heidorn, G.E.; Richardson, S.D. et al. |work=Google Patents |date=07 March 1997}}</ref> If the word is not found in the ontology database, it prompts the user to adjust the retrieval strategy. | |||

#For concepts that match the domain ontology, the query transition module performs search, semantic judgment, and query extension to add semantic information to the query, then submits the query to a retrieval agent for searching. For words whose semantic meaning is uncertain, it falls back to keyword matching.

#Handled by the custom process module, the user interface then lists query results according to exact word, synonym, hypernym, and hyponym words. | |||

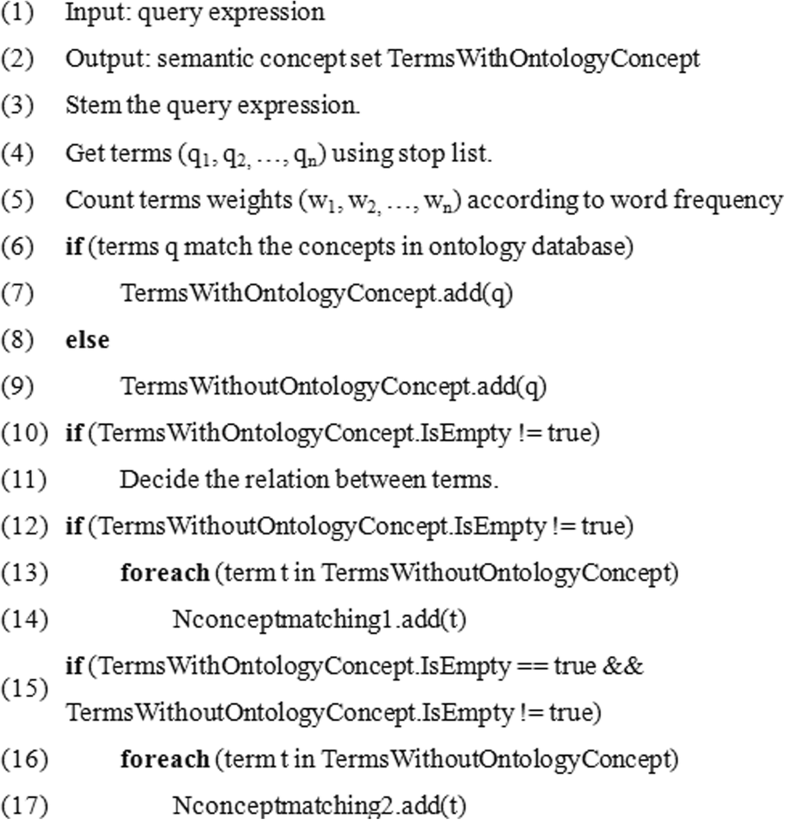

Before the retrieval process, the system performs semantic analysis on the user's query request. Keywords are extracted after stop words are removed, and the system determines whether each keyword belongs to the ontology database. By combining concepts in the ontology library, more semantic information is obtained through semantic reasoning. The pseudo-code of the query semantic analysis algorithm is shown in Fig. 5.

[[File:Fig5 Yu JOnWireCommNet2019 2019.png|450px]] | |||

{{clear}} | |||

{| | |||

| STYLE="vertical-align:top;"| | |||

{| border="0" cellpadding="5" cellspacing="0" width="450px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Fig. 5''' Pseudo-code for the query semantic analysis algorithm</blockquote> | |||

|- | |||

|} | |||

|} | |||

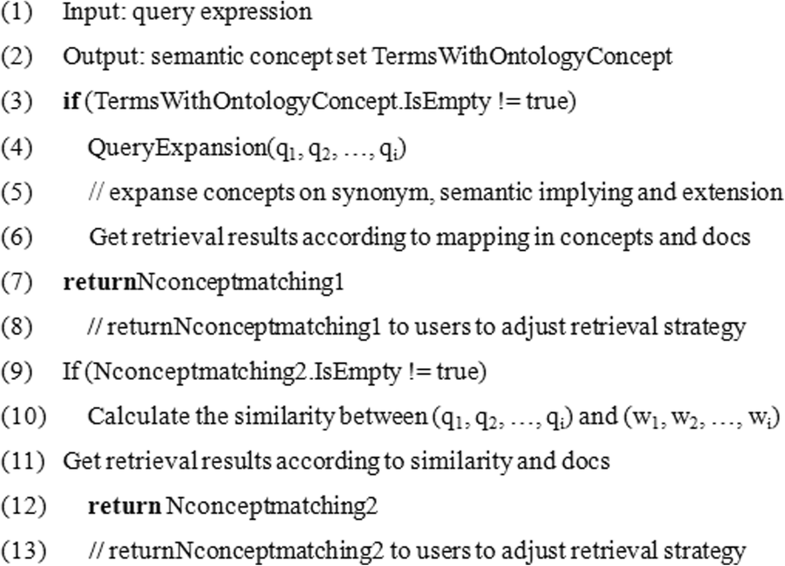

After semantic analysis has been applied to the user request, the semantic information can be used in the retrieval strategy. The pseudo-code of the information retrieval algorithm is shown in Fig. 6.

[[File:Fig6 Yu JOnWireCommNet2019 2019.png|450px]] | |||

{{clear}} | |||

{| | |||

| STYLE="vertical-align:top;"| | |||

{| border="0" cellpadding="5" cellspacing="0" width="450px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Fig. 6''' Pseudo-code for the information retrieval algorithm</blockquote> | |||

|- | |||

|} | |||

|} | |||

==Experiment and results== | |||

===The experimental design of the information retrieval model based on ontology=== | |||

To evaluate the performance of the ontology-based information retrieval model, several tools are needed: Protégé<ref name="KeßlerSemantic09">{{cite journal |title=Semantic Rules for Context-Aware Geographical Information Retrieval |journal=Proceedings from EuroSSC 2009 Smart Sensing and Context |author=Keßler, C.; Raubal, M.; Wosniok, C. |pages=77–92 |year=2009 |doi=10.1007/978-3-642-04471-7_7}}</ref> as an ontology modeling tool, ICTCLAS<ref name="CaoInfo06">{{cite journal |title=Information Retrieval Oriented Adaptive Chinese Word Segmentation System |journal=Journal of Software |author=Cao, Y.-G.; Cao, Y.-Z.; Jin, M.-Z.; Liu, C. |volume=3 |issue=17 |year=2006 |url=http://en.cnki.com.cn/Article_en/CJFDTOTAL-RJXB200603003.htm}}</ref> as a word segmentation tool, Jena [20] as a semantic parsing tool, and Lucene as a semantic indexing tool.<ref name="CastellsSelf05">{{cite journal |title=Self-tuning Personalized Information Retrieval in an Ontology-Based Framework |journal=Proceedings from On the Move to Meaningful Internet Systems 2005 |author=Castells, P.; Fernández, M.; Vallet, D. et al. |pages=977–986 |year=2005 |doi=10.1007/11575863_119}}</ref>

The data set contains 1000 scientific papers, as well as papers from the IEEE digital library, which are used to extract the core concepts in the domain ontology. Then the final conceptualization system is established. The literature is divided into 10 groups. Each group contains 100 papers related to a query subject or keywords (e.g., "computer architecture" and "operating system"). Therefore, 10 expert rank lists are available for retrieval. | |||

The evaluation criterion considers the similarity of each paper to every query word. A ranking mistake among the top papers is penalized more heavily than one among the lowest papers. The formula below measures the distance between rank lists ''R'' and ''R''':

<math>P(t) = \frac{\sum\limits_{i = 1}^{n}\left\lbrack \left( n - i \right) \times {dis}(i) \right\rbrack}{\sum\limits_{i = 1}^{\lfloor\frac{n}{2}\rfloor}\left\lbrack \left( n - i \right) \times i \right\rbrack + \sum\limits_{i = \lfloor\frac{n}{2}\rfloor}^{n}\left\lbrack \left( n - i \right)^{2} \right\rbrack}</math>

Here, ''n'' is the number of papers in the rank list, dis(''i'') is the distance between the position of paper ''i'' in the rank list and its position in the expert rank list, and ''P''(''t'') is the distance between the two rank lists, normalized by the denominator.
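As a minimal sketch of the distance formula above, the following Python function computes P(t) for two rank lists. It assumes that dis(i) is the absolute difference between a paper's position in the rank list and its position in the expert list, and that the second sum in the denominator runs from ⌊n/2⌋ to n. The function and variable names are illustrative and do not come from the paper.

```python
def rank_distance(ranked, expert):
    """Normalized distance P(t) between a rank list and an expert rank list.

    Both lists must contain the same papers and at least one entry.
    """
    n = len(ranked)
    # 1-based position of every paper in the expert list.
    expert_pos = {paper: pos for pos, paper in enumerate(expert, start=1)}

    # Numerator: position errors, weighted so that mistakes near the top
    # of the list (small i) count more than mistakes near the bottom.
    numerator = sum(
        (n - i) * abs(i - expert_pos[paper])  # dis(i) assumed to be |i - expert position|
        for i, paper in enumerate(ranked, start=1)
    )

    # Denominator: the two sums from the formula.
    half = n // 2
    denominator = sum((n - i) * i for i in range(1, half + 1))
    denominator += sum((n - i) ** 2 for i in range(half, n + 1))
    return numerator / denominator


expert_list = ["a", "b", "c", "d"]
same_order = rank_distance(["a", "b", "c", "d"], expert_list)
reverse_order = rank_distance(["d", "c", "b", "a"], expert_list)
```

Under these assumptions, a rank list identical to the expert list has distance 0, and the four-paper list in reverse order has distance 1.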

===Analysis of experimental results=== | |||

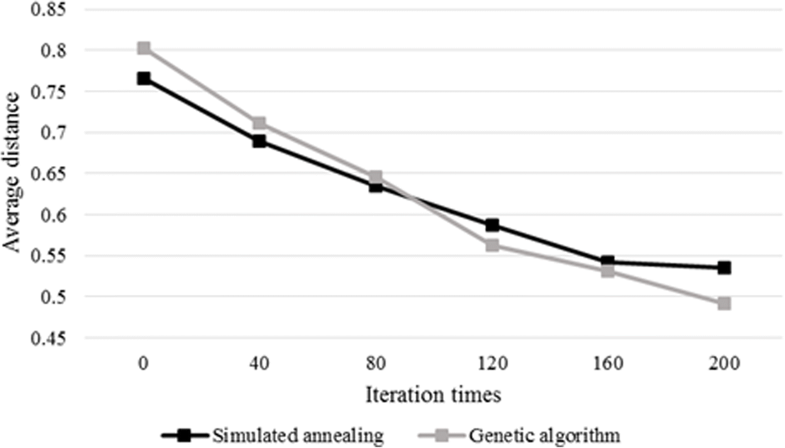

The genetic algorithm is compared with the simulated annealing method in terms of the number of iterations and the average distance of the rank list. The result is shown in Fig. 7.

[[File:Fig7 Yu JOnWireCommNet2019 2019.png|450px]] | |||

{{clear}} | |||

{| | |||

| STYLE="vertical-align:top;"| | |||

{| border="0" cellpadding="5" cellspacing="0" width="450px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Fig. 7''' Comparison of the simulated annealing and genetic algorithm in average distance and iteration times</blockquote> | |||

|- | |||

|} | |||

|} | |||

The ''X''-axis shows the number of iterations of the two algorithms, and the ''Y''-axis shows the average distance calculated by the preceding formula, which measures the difference between the ranking list and the expert list. After 200 iterations, the average distance is close to the overall optimum. The algorithm yields the optimized weight combination ''w<sub>tit</sub>'' = 3, ''w<sub>abs</sub>'' = 2, ''w<sub>key</sub>'' = 0.6.

Different values of the similarity threshold ζ are tested; for example, ζ = 0.5 means sim(''S<sub>q</sub>'', ''S<sub>j</sub>'') ≥ 0.5. Each experiment counts the retrieved document set |''A''|, the ontology-relevant documents |''B''|, and the documents relevant to the user query within the retrieved set |''A'' ∩ ''B''| to calculate the precision and recall rates. The results are shown in Table 1.

{| | |||

| STYLE="vertical-align:top;"| | |||

{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="100%" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" colspan="7"|'''Table 1.''' Precision and recall rate of ontology retrieval | |||

|- | |||

|- | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;"|Threshold | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" colspan="2"|ζ = 0.5 | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" colspan="2"|ζ = 0.55 | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" colspan="2"|ζ = 0.6 | |||

|- | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;"|Group num. | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;"|Precision | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;"|Recall | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;"|Precision | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;"|Recall | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;"|Precision | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;"|Recall | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|1 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|84.50% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|83.36% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|100.00% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|82.45% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|100.00% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|81.85% | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|2 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|38.92% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|100.00% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|93.12% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|100.00% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|100.00% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|51.00% | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|3 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|74.35% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|100.00% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|94.65% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|94.43% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|99.12% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|94.65% | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|4 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|83.23% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|100.00% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|93.68% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|100.00% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|96.34% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|45.74% | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|5 | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|51.36% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|100.00% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|95.44% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|100.00% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|100.00% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|100.00% | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|Average | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|66.47% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|96.67% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|95.38% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|95.38% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|99.09% | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"|74.65% | |||

|- | |||

|} | |||

|} | |||

==References==

Revision as of 01:03, 13 February 2019

| Full article title | Research on information retrieval model based on ontology |

|---|---|

| Journal | EURASIP Journal on Wireless Communications and Networking |

| Author(s) | Yu, Binbin |

| Author affiliation(s) | Jilin University, Beihua University |

| Primary contact | Email: yubinbin80 at sina dot com |

| Year published | 2019 |

| Volume and issue | 2019 |

| Page(s) | 30 |

| DOI | 10.1186/s13638-019-1354-z |

| ISSN | 1687-1499 |

| Distribution license | Creative Commons Attribution 4.0 International |

| Website | https://jwcn-eurasipjournals.springeropen.com/articles/10.1186/s13638-019-1354-z |

| Download | https://jwcn-eurasipjournals.springeropen.com/track/pdf/10.1186/s13638-019-1354-z (PDF) |


Abstract

An information retrieval system not only occupies an important position in the network information platform, but also plays an important role in information acquisition, query processing, and wireless sensor networks. It is a procedure that helps researchers extract documents from data sets, serving as a document retrieval tool. Classic keyword-based information retrieval models neglect semantic information and therefore cannot represent the user’s needs. How to efficiently acquire the personalized information that users need is thus of concern. Ontology-based systems, in turn, lack an expert list for obtaining accurate index term frequencies. In this paper, a domain ontology model with document processing and document retrieval is proposed, and the feasibility and superiority of the domain ontology model are demonstrated experimentally.

Keywords: ontology, information retrieval, genetic algorithm, sensor networks

Introduction

Information retrieval is the process of extracting relevant documents from large data sets. Along with the increasing accumulation of data and the rising demand of high-quality retrieval results, traditional information retrieval techniques are unable to meet the task of high-quality search results. As a newly emerged knowledge organization system, ontology is vitally important in promoting the function of information retrieval in knowledge management.

Existing information retrieval models, such as the vector space model (VSM)[1], rely on certain rules to model text in pattern recognition and other fields. For example, a VSM splits, filters, and classifies text that looks very abstract and, using certain rules, calculates statistics such as word frequency.

Probability models[2] mainly rely on probabilistic operation and Bayes rules to match data information, in which the weight values of feature words are all multivalued. The probabilistic model uses the index word to represent the user’s interest, that is, the personalized query request submitted by the user. Meanwhile, there is no vocabulary set with a standard semantic feature and document label. Traditional weighted strategies lack semantic information of the document, which is not representative for the document description. On the basis of semantic annotation results, weighted item frequency[3] and domain ontology of the semantic relation are used to express the semantics of the document.[4]

The VSM and the probability model simplify text processing into a vector space or a probability set. They use the "term frequency" property to describe the number of occurrences of query words in a paper. Because a paper is divided into sections, a word contributes differently to summarizing the paper depending on the section in which it appears, so simply counting word occurrences is not sufficient. Meanwhile, there is no vocabulary set with standard semantic features and document labels.

The introduction of ontology into the information retrieval system can query users’ semantic information based on ontology and better satisfy users’ personalized retrieval needs.[5] Short of a vocabulary set with semantic description, attempts at a logic view of user information demand are insufficient to express the semantic of the user’s requirement. In such an information retrieval model, even if we choose the appropriate sort function R (R is the reciprocal of the distance between points), the logical view cannot represent the requirements of the document and the user, and the retrieval results will be unconvincing to the user.

In order to improve the accuracy and efficiency of user retrieval, we build a model based on information retrieval and a domain ontology knowledge base. The ontology-based information retrieval system provides semantic retrieval, while the keyword-based information retrieval system calculates a better factor set in document processing, with better recall and precision results.

In order to accomplish this, a genetic algorithm was designed and implemented. A genetic algorithm is a kind of search method that refers to the evolution rule of the biological world. It mainly includes coding mechanisms and control parameters. The genetic algorithm provides a heuristic method which simulates the population evolution by searching through the solution space in each selection, crossover, and mutation to select an optimal factor set by combinations of factors. The option-weighted factor, tuned by a training set using genetic algorithms, is applied to a practical retrieval system.[6]

Domain ontology was applied as the base of semantic representation to effectually represent user requirement and document semantics. Domain ontology involves the detailed description of domain conceptualization which expresses the abstract object, relation, and class in one vocabulary set.[7]

The design and implementation of the information retrieval system comprises two parts: document processing and document retrieval. In this information retrieval model, an ontology server is added to tag and index the retrieval sources based on ontology; the query conversion module applies semantic processing to the users’ needs and expands the initial query with its synonyms, hypernyms, and senses. The retrieval agent module uses the converted queries to retrieve from the information source.

We've already provided an overview of an ontology-based information retrieval system. The next part introduces the relevant work and methods of this study. The third part discusses the design of an information retrieval model based on domain ontology. The fourth part details the experimental study and analyses of the results. The final part summarizes the full text and declares related issues that need further study.

Methods

Faced with the problem of managing a large volume of data in a network, it remains vital for users to acquire information accurately and efficiently. So far, retrieval methods have been developed using various mathematical models. The classical information retrieval models include the Boolean model[8], probability model[9], vector model[10], binary independence retrieval model, and BM25 model. These models are described below.

Suppose ki is an index term, dj is a document, and wi,j ≥ 0 is the weight of the pair (ki, dj), which expresses the significance of ki to the semantic content of dj. Let t be the number of index terms, so that K = {k1, …, kt} is the index term set. If an index term does not appear in the document, then wi,j = 0. The document dj is therefore represented by the index term vector dj = (w1,j, w2,j, …, wt,j).

The Boolean model is a classical information retrieval (IR) model based on set theory and Boolean algebra. Boolean retrieval can be effective if a query requires unambiguous selection.[11] However, it can only indicate whether a document is relevant or not; it cannot describe the situation in which the query words partially match a paper. The similarity between document dj and query q is binary, either 0 or 1. This binary value is limiting, and Boolean queries are hard to construct.

The VSM, proposed earlier by Salton, is based on vector space theory and linear algebra operations on vectors; it abstracts the query conditions and the text into vectors in a multidimensional vector space. Multi-keyword matching in this space can express the meaning of the text more fully.[1] Compared with the Boolean model, the VSM ranks relevant documents by an angle-based similarity between the vector of each document and the original query vector in the spatial representation.
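The angle-based ranking described here is commonly computed as cosine similarity. The following is a minimal sketch under that assumption; the sparse dictionary representation and the function names are illustrative choices, not part of the paper.

```python
import math


def cosine_similarity(doc_weights, query_weights):
    """Cosine of the angle between two sparse term-weight vectors.

    Each vector is a dict mapping an index term to its weight; terms that
    are absent have weight 0.
    """
    shared_terms = set(doc_weights) & set(query_weights)
    dot = sum(doc_weights[t] * query_weights[t] for t in shared_terms)
    doc_norm = math.sqrt(sum(w * w for w in doc_weights.values()))
    query_norm = math.sqrt(sum(w * w for w in query_weights.values()))
    if doc_norm == 0 or query_norm == 0:
        return 0.0
    return dot / (doc_norm * query_norm)


def rank_documents(documents, query_weights):
    """Return document names ordered from most to least similar."""
    return sorted(
        documents,
        key=lambda name: cosine_similarity(documents[name], query_weights),
        reverse=True,
    )


documents = {
    "d1": {"ontology": 2.0, "retrieval": 1.0},
    "d2": {"network": 3.0},
}
ranking = rank_documents(documents, {"ontology": 1.0})
```

Unlike the Boolean model, a document that matches only part of the query still receives a score between 0 and 1.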

The probabilistic model[2] mainly relies on probabilistic operations and Bayes' rule to match data information. It considers the internal relations between keywords and documents and retrieves texts according to probabilistic dependency. The model is usually based on a group of parameterized probability distributions and requires strong independence assumptions for tractability.

The binary independence retrieval model[12] evolved from the probabilistic model and offers better performance. Assume that document D and index term q are each described by a two-valued vector (x1, x2, … xn): if index term ki ∈ D, then xi = 1; otherwise, xi = 0. The correlation function of index term and document is shown below.

Here, pi = ri/r and qi = (fi − ri)/(f − r), where f is the number of documents in the training document set, r is the number of documents related to the user query in the training document set, fi is the number of documents in the training document set that include index term ki, and ri is the number of documents among the r related documents that include ki.
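To make the quantities pi and qi concrete, the sketch below computes them from the four counts defined above and combines them into the textbook log-odds term weight of the binary independence model. That weight is an assumption made for illustration and may differ in detail from the correlation function used by the authors; the function names are hypothetical.

```python
import math


def bir_term_weight(f, r, f_i, r_i):
    """Log-odds weight of one index term from training-set counts.

    f   : number of documents in the training set
    r   : number of documents relevant to the query
    f_i : number of documents containing the term
    r_i : number of relevant documents containing the term
    """
    p_i = r_i / r                # share of relevant documents containing the term
    q_i = (f_i - r_i) / (f - r)  # share of non-relevant documents containing it
    # Textbook form (an assumption); undefined when p_i or q_i is exactly 0 or 1.
    return math.log(p_i * (1 - q_i) / (q_i * (1 - p_i)))


def bir_score(doc_vector, query_vector, term_weights):
    """Sum the weights of the terms present in both binary vectors."""
    return sum(
        w
        for x_doc, x_query, w in zip(doc_vector, query_vector, term_weights)
        if x_doc == 1 and x_query == 1
    )


weight = bir_term_weight(f=100, r=10, f_i=20, r_i=8)
score = bir_score([1, 0, 1], [1, 1, 0], [weight, 0.5, 0.7])
```

With these example counts, pi = 0.8 and qi = 12/90, so the term occurs far more often in relevant documents and receives a positive weight.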

The Okapi BM25 model[13][14], usually called BM25, is a ranking algorithm developed from the probabilistic retrieval model that incorporates term frequency and length normalization. The local weights are computed as parameterized frequencies, including term frequency and document frequency, and the global weights are RSJ weights. Local weights are based on a 2-Poisson model, while the global weights are based on the Robertson-Spärck-Jones probabilistic model. By using these heuristic techniques to reduce the number of parameters that must be learned and approximated, BM25 often achieves better performance than TF-IDF (term frequency–inverse document frequency).
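The following sketch shows one common formulation of BM25 scoring with term frequency saturation and length normalization. The parameter values k1 = 1.5 and b = 0.75 are conventional defaults rather than values from the paper, and the smoothed IDF variant used here is only one of several in circulation.

```python
import math
from collections import Counter


def bm25_scores(query_terms, documents, k1=1.5, b=0.75):
    """Score every tokenized document against the query terms.

    documents is a non-empty list of token lists.
    """
    n_docs = len(documents)
    avg_len = sum(len(doc) for doc in documents) / n_docs

    # Document frequency: in how many documents each term occurs.
    doc_freq = Counter()
    for doc in documents:
        doc_freq.update(set(doc))

    scores = []
    for doc in documents:
        term_freq = Counter(doc)
        score = 0.0
        for term in query_terms:
            tf = term_freq[term]
            if tf == 0:
                continue
            # Global weight: smoothed inverse document frequency.
            idf = math.log((n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5) + 1.0)
            # Local weight: saturating term frequency with length normalization.
            length_norm = 1 - b + b * len(doc) / avg_len
            score += idf * tf * (k1 + 1) / (tf + k1 * length_norm)
        scores.append(score)
    return scores


corpus = [
    ["ontology", "retrieval", "ontology"],
    ["wireless", "network"],
    ["retrieval", "model"],
]
scores = bm25_scores(["ontology", "retrieval"], corpus)
```

Here the first document scores highest because it contains both query terms, while the second contains neither and scores zero.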

Information retrieval model based on domain ontology

A concept in a domain ontology is related to other concepts at the same time. The interrelations between concepts in the semantic network support synonym expansion retrieval, semantic entailment expansion, and semantic correlation expansion. We introduce a domain ontology information retrieval model that applies ontology to the traditional information retrieval model through query expansion in order to improve efficiency.

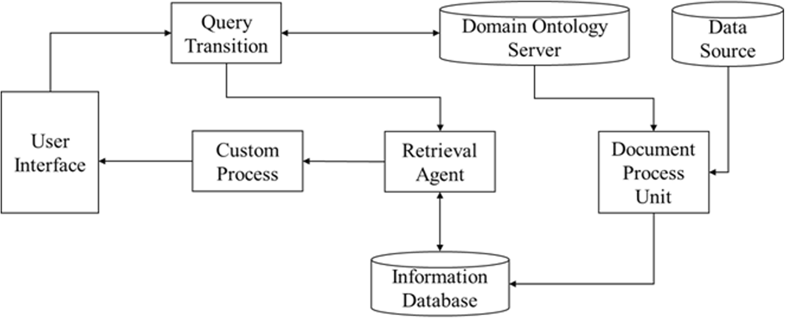

An illustration of the structure for the information retrieval model is shown in Fig. 1.


The system consists of two parts: ontology document processing (including domain ontology servers, data source, document process unit, and information database) and ontology document retrieval (including domain ontology server, query transition, custom process, and retrieval agent).

Ontology document processing

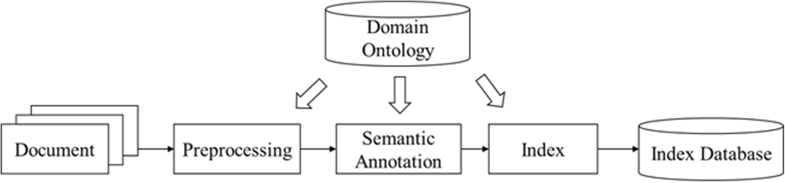

Document processing extracts useful information from an unstructured text message and establishes mapping relations between document terms and concepts based on domain ontology.[15] Document processing is shown in Fig. 2.


In the preprocessing procedure, each document in the document set is split into words, the words are analyzed, and numbers, hyphens, and punctuation are filtered out. A stop word list is used to remove function words, leaving useful words such as nouns and verbs.[16] Stemming, which removes prefixes and suffixes, improves the accuracy of retrieval. Finally, certain words are selected as index elements to express the conceptual content of the literature.
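The preprocessing steps above can be sketched as a small pipeline. The stop word list and the suffix rules below are deliberately tiny illustrative stand-ins; a real system would use a full stop word list and a proper stemmer, and prefix removal is omitted here.

```python
import re

# Illustrative stop word list (assumption, far from complete).
STOP_WORDS = {"the", "a", "an", "of", "in", "and", "is", "are", "to", "for"}

# Crude suffix rules standing in for a real stemming algorithm.
SUFFIXES = ("ing", "ed", "es", "s")


def strip_suffix(word):
    """Remove the first matching suffix, keeping at least three letters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess(text):
    """Tokenize, drop numbers and punctuation, remove stop words, and stem."""
    # Keeping only alphabetic runs filters numbers, hyphens, and punctuation.
    tokens = re.findall(r"[a-z]+", text.lower())
    return [strip_suffix(token) for token in tokens if token not in STOP_WORDS]


index_terms = preprocess("The indexing of 3 documents is semi-automatic.")
# index_terms == ["index", "document", "semi", "automatic"]
```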

Semantic annotation of a retrieved object analyzes its characteristic vocabulary to build the mapping between words and concepts. First, characteristic words are extracted and the weight of each word is calculated by counting word frequency, which distinguishes the importance of words. In this paper, a genetic algorithm is used to calculate the best weighting factor, which is then applied to the actual retrieval system.

The system automatically learns the weighting factors with a genetic algorithm. This is a heuristic method that simulates biological evolution: through factor mutation it eliminates non-ideal factor sets and keeps the optimal factor set. The algorithm tries to maximize the fitness function, treating the task as parameter estimation, by searching a population for its fittest individual; in our case, the individuals are the parameters of the weighted terms used in retrieval. The pseudo-code of the genetic algorithm for weighted term frequency is given in Fig. 3.


This algorithm simulates the evolution process by gradually adjusting the weight factors and eliminating factor combinations with a low fitness value. If the fitness of one combination is lower than that of another, that combination is likely to be excluded from the next generation. To avoid local optima, we start from many initial groups and discard unqualified groups step by step. In each iteration, each factor is taken from the interval [wi − 0.2, wi + 0.4] to reduce the influence of negative factors. The fitness function P(t) determines how fit an individual is under a new weight combination (w'tit, w'key, w'abs). The previous factor set is replaced by the set that scores a higher fitness under P(t); the new set is then used to calculate the similarity of each paper to a query word, and the rank list is generated. The penalty function f measures the distance from the expert list.
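A minimal sketch of this evolutionary loop is given below. It is not the pseudo-code of Fig. 3: the selection rule (keep the better half), the uniform crossover, and the toy objective are all assumptions made for illustration. The objective is expressed as a distance to be minimized, standing in for the rank-list distance to the expert list, and only the mutation interval [w − 0.2, w + 0.4] is taken from the text.

```python
import random


def crossover(parent_a, parent_b, rng):
    """Each factor is inherited from one of the two parents at random."""
    return tuple(rng.choice(pair) for pair in zip(parent_a, parent_b))


def mutate(weights, rng):
    """Move each factor within [w - 0.2, w + 0.4], the interval given in the text."""
    return tuple(w + rng.uniform(-0.2, 0.4) for w in weights)


def evolve_weights(distance, population, generations, rng):
    """Return the weight triple with the smallest distance after evolution."""
    for _ in range(generations):
        ranked = sorted(population, key=distance)
        # Selection: the better half survives unchanged (assumed rule).
        survivors = ranked[: max(2, len(ranked) // 2)]
        # The rest of the population is refilled by crossover plus mutation.
        children = [
            mutate(crossover(rng.choice(survivors), rng.choice(survivors), rng), rng)
            for _ in range(len(population) - len(survivors))
        ]
        population = survivors + children
    return min(population, key=distance)


def toy_distance(weights):
    """Hypothetical objective; a real run would rank papers and compare
    the result with the expert list."""
    target = (3.0, 0.6, 2.0)  # (w_tit, w_key, w_abs), used only as a toy optimum
    return sum((w - t) ** 2 for w, t in zip(weights, target))


rng = random.Random(0)
initial = [tuple(rng.uniform(0.0, 5.0) for _ in range(3)) for _ in range(20)]
best = evolve_weights(toy_distance, initial, generations=200, rng=rng)
```

Because the survivors are carried over unchanged, the best distance in the population never gets worse from one generation to the next.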

Then, for each semantic meaning of an ontology term, the system checks whether it appears in the extracted characteristic vocabulary. If it does, the document and weight of the semantic term are calculated so that the text is represented with semantic information.

After document feature extraction, a concept-based document index is established to reflect the internal relations between text index terms, and ambiguity introduced during annotation is excluded. A concept-based index consists of feature words together with the relations given by semantic parsing. Feature words are connected through ontology instances and documents. The structure of the ontology concept index is shown in Fig. 4.
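One way to picture such a concept-based index is a nested mapping from concept to feature word to documents. The sketch below assumes that layout; it is not the structure of Fig. 4, and the term-to-concept mapping is a hypothetical stand-in for the result of semantic annotation.

```python
from collections import defaultdict


def build_concept_index(doc_terms, term_to_concept):
    """Build concept -> feature word -> set of document ids.

    doc_terms maps a document id to its extracted feature words;
    term_to_concept maps a feature word to an ontology concept.
    Words without a concept are left out of the index.
    """
    index = defaultdict(lambda: defaultdict(set))
    for doc_id, terms in doc_terms.items():
        for term in terms:
            concept = term_to_concept.get(term)
            if concept is not None:
                index[concept][term].add(doc_id)
    return index


doc_terms = {
    "d1": ["laptop", "kernel"],
    "d2": ["server", "antenna"],
}
term_to_concept = {
    "laptop": "computer",
    "server": "computer",
    "kernel": "operating system",
}
concept_index = build_concept_index(doc_terms, term_to_concept)
docs_about_computer = set().union(*concept_index["computer"].values())
```

A query for the concept "computer" then reaches both documents, even though they share no feature word.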


Ontology document retrieval

The procedure of document retrieval is listed below:

- The user inputs search words or phrases in the search interface; the system then removes function words and retains the nouns and verbs. Term extraction is applied to obtain semantic conceptual words and phrases, and the result is passed to the query transition module.

- The query transition module sends the results to the ontology server to search for a corresponding semantic concept, including hypernym, hyponym, synonym, and conceptual meanings.[17] If the word is not found in the ontology database, it prompts the user to adjust the retrieval strategy.

- For concepts that match the domain ontology, the query transition module performs search, semantic judgment, and query extension to add semantic information to the query, then submits the query to a retrieval agent for searching. For words whose semantic meaning is uncertain, it falls back to keyword matching.

- Handled by the custom process module, the user interface then lists query results according to exact word, synonym, hypernym, and hyponym words.
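The query transition steps listed above can be sketched as follows. The toy ontology, its relation names, and the function name are hypothetical; a real system would query the ontology server instead of a dictionary.

```python
# Toy ontology (assumption): concept -> related words by relation type.
TOY_ONTOLOGY = {
    "computer": {
        "synonym": ["computing machine"],
        "hypernym": ["machine"],
        "hyponym": ["laptop", "server"],
    },
}


def expand_query(terms, ontology):
    """Expand query terms with ontology relations.

    Returns (expanded, unmatched): expanded is a list of (relation, word)
    pairs in the order exact, synonym, hypernym, hyponym; unmatched holds
    the terms with no ontology concept, which fall back to keyword matching.
    """
    expanded = []
    unmatched = []
    for term in terms:
        concept = ontology.get(term)
        if concept is None:
            unmatched.append(term)
            continue
        expanded.append(("exact", term))
        for relation in ("synonym", "hypernym", "hyponym"):
            expanded.extend((relation, word) for word in concept.get(relation, []))
    return expanded, unmatched


expanded, unmatched = expand_query(["computer", "throughput"], TOY_ONTOLOGY)
```

Keeping the relation label next to each word lets the result list be grouped by exact word, synonym, hypernym, and hyponym, as in the last step.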

Before the retrieval process, the system performs semantic analysis on the user's query request. Keywords are extracted after stop words are removed, and the system determines whether each keyword belongs to the ontology database. By combining concepts in the ontology library, more semantic information is obtained through semantic reasoning. The pseudo-code of the query semantic analysis algorithm is shown in Fig. 5.


After semantic analysis has been applied to the user request, the semantic information can be used in the retrieval strategy. The pseudo-code of the information retrieval algorithm is shown in Fig. 6.


Experiment and results

The experimental design of the information retrieval model based on ontology

To evaluate the performance of the ontology-based information retrieval model, several tools are needed: Protégé[18] as an ontology modeling tool, ICTCLAS[19] as a word segmentation tool, Jena [20] as a semantic parsing tool, and Lucene as a semantic indexing tool.[20]

The data set contains 1000 scientific papers, as well as papers from the IEEE digital library, which are used to extract the core concepts in the domain ontology. Then the final conceptualization system is established. The literature is divided into 10 groups. Each group contains 100 papers related to a query subject or keywords (e.g., "computer architecture" and "operating system"). Therefore, 10 expert rank lists are available for retrieval.

The evaluation criterion considers the similarity of each paper to every query word. For example, a ranking error among the top-ranked papers counts as a larger distance than one among the lowest-ranked papers. The formula below is used to compute the distance between rank lists R and R':

Here, n represents the number of papers in the rank list, dis(i) represents the position distance of paper i between the rank list and the expert rank list, and P(t) represents the distance between the two rank lists, normalized by the denominator.
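One simple way to realize a normalized position distance of this kind is the Spearman footrule, sketched below. This is a stand-in chosen for illustration and should not be read as the article's formula: the function name, the use of |position difference| for dis(i), and the normalization by the maximum footrule value (the integer part of n²/2) are all assumptions, and the sketch does not weight errors near the top of the list more heavily.

```python
def rank_distance(ranked, expert):
    """Normalized Spearman footrule between a rank list and an expert rank list.

    dis(i) is taken to be |position in ranked - position in expert| (assumption).
    The sum is divided by floor(n^2 / 2), the largest value the footrule can
    reach for n items, so the result lies in [0, 1].
    """
    if sorted(ranked) != sorted(expert):
        raise ValueError("both lists must contain the same papers")
    n = len(expert)
    if n < 2:
        return 0.0
    expert_pos = {paper: i for i, paper in enumerate(expert)}
    total = sum(abs(i - expert_pos[paper]) for i, paper in enumerate(ranked))
    return total / ((n * n) // 2)
```

Identical lists give 0.0 and a fully reversed list gives 1.0, which makes values comparable across lists of different lengths.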

Analysis of experimental results

The genetic algorithm is compared with the simulated annealing method in terms of the number of iterations and the average distance of the rank list. The result is shown in Fig. 7.

The X-axis shows the number of iterations of the two algorithms, and the Y-axis shows the average distance calculated by the distance formula above, which measures the difference between the generated rank list and the expert list. After 200 iterations, the average distance is close to the overall optimum. The algorithm yields the optimized weight combination w_tit = 3, w_abs = 2, w_key = 0.6.
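To illustrate how such a weight combination could be applied when ranking papers, the sketch below scores each paper as a weighted sum of query-term counts in its title, abstract, and keywords. The linear combination of raw counts, the field names, and the function names are assumptions made for this example; only the three weight values come from the text.

```python
# Weights reported above; the mapping to field names is an assumption.
WEIGHTS = {"title": 3.0, "abstract": 2.0, "keywords": 0.6}


def weighted_score(paper, query, weights=WEIGHTS):
    """Weighted sum of occurrences of the query string in each field."""
    q = query.lower()
    return sum(w * paper.get(field, "").lower().count(q) for field, w in weights.items())


def rank_papers(papers, query, weights=WEIGHTS):
    """Return paper ids sorted by descending weighted score."""
    return sorted(papers, key=lambda pid: weighted_score(papers[pid], query, weights), reverse=True)
```

A rank list produced this way could then be compared against an expert rank list to judge how well a candidate weight combination performs.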

Different values of the similarity threshold ζ are tested, in which ζ = 0.5 means sim(Sq, Sj) ≥ 0.55. Each experiment counts the retrieved document set |A|, the ontology-relevant documents |B|, and the user-query-relevant documents in the retrieved set |A ∩ B| to calculate the precision and recall rates. The result is shown in Table 1.
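Using the standard definitions, precision is |A ∩ B| / |A| and recall is |A ∩ B| / |B|. The sketch below shows the calculation for one threshold value; the function names and the shape of the similarity dictionary are assumptions made for illustration.

```python
def retrieve(similarities, zeta):
    """Documents whose similarity to the query reaches the threshold zeta."""
    return {doc for doc, sim in similarities.items() if sim >= zeta}


def precision_recall(retrieved, relevant):
    """Precision = |A ∩ B| / |A|, recall = |A ∩ B| / |B|."""
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall
```

Raising ζ shrinks the retrieved set, which typically trades recall for precision.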

References

- Tang, M.; Bian, Y.; Tao, F. (2010). "The Research of Document Retrieval System Based on the Semantic Vector Space Model". Journal of Intelligence 5 (29): 167–77. http://en.cnki.com.cn/Article_en/CJFDTOTAL-QBZZ201005036.htm.

- Ma, C.; Liang, W.; Zheng, M. et al. (2016). "A Connectivity-Aware Approximation Algorithm for Relay Node Placement in Wireless Sensor Networks". IEEE Sensors Journal 16 (2): 515–528. doi:10.1109/JSEN.2015.2456931.

- Yang, X.Q.; Yang, D.; Yuan, M. (2014). "Scientific Literature Retrieval Model Based on Weighted Term Frequency". Proceedings of the 2014 Tenth International Conference on Intelligent Information Hiding and Multimedia Signal Processing: 427–430. doi:10.1109/IIH-MSP.2014.113.

- Xu, M.; Yang, Q.; Kwak, K.S. (2016). "Distributed Topology Control With Lifetime Extension Based on Non-Cooperative Game for Wireless Sensor Networks". IEEE Sensors Journal 16 (9): 3332–3342. doi:10.1109/JSEN.2016.2527056.

- Yang, Y.; Du, J.P.; Ping, Y. (2015). "Ontology-based intelligent information retrieval system". Journal of Software 26 (7): 1675–87. https://mathscinet.ams.org/mathscinet-getitem?mr=3408856.

- Lu, T.; Liang, M. (2014). "Improvement of Text Feature Extraction with Genetic Algorithm". New Technology of Library and Information Service 30 (4): 48–57. doi:10.11925/infotech.1003-3513.2014.04.08.

- Vallet, D.; Fernández, M.; Castells, P. (2005). "An Ontology-Based Information Retrieval Model". Proceedings from ESWC 2005, The Semantic Web: Research and Applications: 455–70. doi:10.1007/11431053_31.

- Manning, C.D.; Raghavan, P.; Schütze, H. (2008). Introduction to Information Retrieval. Cambridge University Press. doi:10.1017/CBO9780511809071. ISBN 9780511809071.

- Jones, K.S.; Walker, S.; Robertson, S.E. (2000). "A probabilistic model of information retrieval: Development and comparative experiments: Part 1". Information Processing & Management: 779–808. doi:10.1016/S0306-4573(00)00015-7.

- Wong, S.K.M.; Ziarko, W.; Wong, P.C.N. (1985). "Generalized vector spaces model in information retrieval". Proceedings of the 8th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval: 18–25. doi:10.1145/253495.253506.

- Baeza-Yates, R.; Ribeiro-Neto, B. (1999). Modern Information Retrieval. Addison Wesley. pp. 544. ISBN 9780201398298.

- Premalatha, R.; Srinivasan, S. (2014). "Text processing in information retrieval system using vector space model". Proceedings from the 2014 International Conference on Information Communication and Embedded Systems: 1–6. doi:10.1109/ICICES.2014.7033837.

- Voorhees, E.M.; Harman, D.K., ed. (2005). TREC: Experiment and Evaluation in Information Retrieval. MIT Press. pp. 368. ISBN 9780262220736.

- Pereira, R.A.M.; Molinari, A.; Pasi, G. (2005). "Contextual weighted representations and indexing models for the retrieval of HTML documents". Soft Computing 9 (7): 481–92. doi:10.1007/s00500-004-0361-z.

- Zhang, K.; Nan, K.; Ma, Y. (2008). "Research on ontology-based information retrieval system models". Application Research of Computers 8 (25): 2241–49. https://www.oriprobe.com/journals/jsjyyyj/2008_8.html.

- Kim, H.; Han, S.-W. (2015). "An Efficient Sensor Deployment Scheme for Large-Scale Wireless Sensor Networks". IEEE Communications Letters 19 (1): 98–101. doi:10.1109/LCOMM.2014.2372015.

- Messerly, J.J.; Heidorn, G.E.; Richardson, S.D. et al. (7 March 1997). "Information retrieval utilizing semantic representation of text by identifying hypernyms and indexing multiple tokenized semantic structures to a same passage of text". Google Patents. https://patents.google.com/patent/US6161084.

- Keßler, C.; Raubal, M.; Wosniok, C. (2009). "Semantic Rules for Context-Aware Geographical Information Retrieval". Proceedings from EuroSSC 2009 Smart Sensing and Context: 77–92. doi:10.1007/978-3-642-04471-7_7.

- Cao, Y.-G.; Cao, Y.-Z.; Jin, M.-Z.; Liu, C. (2006). "Information Retrieval Oriented Adaptive Chinese Word Segmentation System". Journal of Software 3 (17). http://en.cnki.com.cn/Article_en/CJFDTOTAL-RJXB200603003.htm.

- Castells, P.; Fernández, M.; Vallet, D. et al. (2005). "Self-tuning Personalized Information Retrieval in an Ontology-Based Framework". Proceedings from On the Move to Meaningful Internet Systems 2005: 977–986. doi:10.1007/11575863_119.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation. Grammar and punctuation were edited to American English, and in some cases additional context was added to the text when necessary. In some cases important information was missing from the references, and that information was added.