Data center

[Image: A team from Intel reviews the inner workings of Facebook's first built-from-scratch data center in 2013.]

Latest revision as of 22:37, 27 August 2014

A data center is a facility used to house computer systems and associated components such as telecommunications and storage systems. A data center generally includes redundant or backup power supplies, redundant data communications connections, environmental controls (e.g. air conditioning and fire suppression), and various security devices. Data centers vary in size[1], with some large data centers capable of using as much electricity as a "medium-size town."[2]

The main purpose of many data centers is to run applications that handle the core business and operational data of one or more organizations. Such applications are often composed of multiple hosts, each running a single component. Common components of such applications include databases, file servers, application servers, and middleware. These systems may be proprietary and developed internally by the organization or bought from enterprise software vendors. However, a data center may also be concerned solely with operations architecture or other services.

Data centers are also used for off-site backups. Companies may subscribe to backup services provided by a data center, often in conjunction with backup tapes. Backups can be taken from servers locally onto tapes, but tapes stored on site pose a security threat and are also susceptible to fire and flooding. Larger companies may therefore send their backups off site for added security, for example by backing up to a data center.[3] Encrypted backups can also be sent over the Internet to another data center, where they can be stored securely.

History

Data centers have their roots in the huge computer rooms of the early days of the computing industry. Early computer systems were complex to operate and maintain, and required a special environment in which to operate. Many cables were necessary to connect all the components, and methods to accommodate and organize these were devised, such as standard racks to mount equipment, raised floors, and cable trays (installed overhead or under the elevated floor). Also, a single mainframe required a great deal of power and had to be cooled to avoid overheating. Security was important; computers were expensive, and were often used for military purposes.[4]

During the boom of the microcomputer industry, especially during the 1980s, computers started to be deployed everywhere, in many cases with little or no care about operating requirements. However, as information technology (IT) operations started to grow in complexity, companies became aware of the need to control IT resources.[5] With the advent of Linux and the subsequent proliferation of freely available Unix-compatible PC operating systems during the 1990s, as well as MS-DOS finally giving way to a multitasking-capable Windows operating system, personal computers started to replace the older systems found in computer rooms.[4][6] These were called "servers," as time-sharing operating systems like Unix rely heavily on the client-server model to facilitate sharing unique resources between multiple users. The availability of inexpensive networking equipment, coupled with new standards for structured network cabling, made it possible to use a hierarchical design that put the servers in a specific room inside the company. The use of the term "data center," as applied to specially designed computer rooms, started to gain popular recognition.[7]

The dot-com boom of the late '90s and early '00s brought about significant investment into what would be called Internet data centers (IDCs). As these grew in size, new technologies and practices were designed to better handle the scale and operational requirements of these facilities. These practices eventually migrated toward private data centers and were adopted largely because of their practical results.[8][4] As cloud computing became more prominent in the 2000s, business and government organizations scrutinized data centers to a higher degree in areas such as security, availability, environmental impact, and adherence to standards.[8]

Standards and design guidelines

IT operations are a crucial aspect of business continuity for most organizations, which often rely on their information systems to run business operations. Organizations thus depend on reliable infrastructure for IT operations in order to minimize any chance of disruption caused by power failure and/or security breach. That reliable infrastructure is normally built on a sound set of widely accepted standards and design guidelines.

The Telecommunications Industry Association's (TIA's) Telecommunications Infrastructure Standard for Data Centers (ANSI/TIA-942) is one such example, specifying "the minimum requirements for the telecommunications infrastructure" of organizations large and small, from large "multi-tenant Internet hosting data centers" to smaller "single-tenant enterprise data centers."[9] Until early 2014, TIA also ranked data centers from Tier 1, essentially a server room, to Tier 4, which hosts mission-critical computer systems with fully redundant subsystems and compartmentalized security zones controlled by biometric access control methods.[9]

The Uptime Institute, "an unbiased, third-party data center research, education, and consulting organization,"[10] also has a four-tier standard (described in its document Tier Standard: Operational Sustainability) that describes the availability of data from the hardware at a data center, with the higher tiers offering greater availability.[11] The institute, however, has at times voiced discontent with TIA's use of tiers in its standard, and in March 2014 TIA announced it would remove the word "tier" from its ANSI/TIA-942 standard.[12]

Other standards and guidelines for data center planning, installation, and operation include:

- BICSI's (Building Industry Consulting Service International's) ANSI/BICSI 002-2011: a data center standard that integrates information and standards from other entities[13]

- OVE's ÖVE/ÖNORM EN 50600-1: a developing European standard for "data centre facilities and infrastructures"[14][15]

- Telcordia's NEBS (Network Equipment-Building System) documents: telecommunication and environmental design guidelines for data center spaces[16]

- eco's Datacenter Star Audit (DCSA): auditing documents that allow IT personnel to assess the functionality of planned or operational data centers[17]

- American Institute of CPAs' (AICPA's) SOC (Service Organization Control) Reports: auditing reports which provide "a standard benchmark by which two data center audits can be compared against the same set of criteria"[18][19]

- International Organization for Standardization's (ISO's) various standards: several ISO standards address the optimal operation of a data center, including ISO 14001:2004 Environmental Management System Standard, ISO/IEC 27001:2005 Information Security Management System Standard, and ISO 50001:2011 Energy Management System Standard[20][21]

Design considerations

A data center can occupy one room of a building, one or more floors, or an entire building. Much of the equipment is typically servers mounted in 19-inch rack cabinets, which are usually placed in single rows forming corridors or aisles between them. This allows people access to the front and rear of each cabinet. Servers vary greatly in size, from a single rack unit to large freestanding storage silos that occupy many square feet of floor space. Some equipment, such as mainframe computers and storage devices, is often as big as the racks themselves and is placed alongside them. Local building codes may govern minimum ceiling heights.
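Rack-mounted equipment is measured in rack units (U), where 1U is 1.75 inches (44.45 mm) of vertical mounting space. The following is a minimal sketch of checking whether planned equipment fits in one cabinet; the device list is hypothetical, and the 42U cabinet height is an assumed (common, but not universal) default.

```python
# One rack unit (1U) is 1.75 inches (44.45 mm) of vertical mounting space
# in a 19-inch rack.
RACK_UNIT_INCHES = 1.75


def rack_units_used(devices: dict) -> int:
    """Total height, in rack units, of the devices planned for one cabinet."""
    return sum(devices.values())


def fits_in_rack(devices: dict, rack_height_u: int = 42) -> bool:
    """True if the devices fit; 42U is an assumed cabinet height, not a requirement."""
    return rack_units_used(devices) <= rack_height_u


# Hypothetical cabinet plan: device description -> height in rack units.
plan = {
    "top-of-rack switch": 1,
    "ten 1U web servers": 10,
    "database server": 2,
    "storage shelf": 4,
}

used = rack_units_used(plan)
print(used, "U =", used * RACK_UNIT_INCHES, "inches; fits:", fits_in_rack(plan))
```

Real cabinet planning also budgets power, weight, and airflow per cabinet; this sketch checks vertical space only.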

The following table lists other considerations addressed during the design and implementation phases.

| Data center design considerations | |

|---|---|

| Consideration | Description |

| Design programming | Architecture of the building aside, three additional considerations to design programming data centers are facility topology design (space planning), engineering infrastructure design (mechanical systems such as cooling and electrical systems including power), and technology infrastructure design (cable plant). Each is influenced by performance assessments and modelling to identify gaps pertaining to the operator's performance desires over time.[1] |

| Design modeling and recommendation | Modeling criteria are used to develop future-state scenarios for space, power, cooling, and costs.[22] Based on previous design reviews and the modeling results, recommendations on power, cooling capacity, and resiliency level can be made. Additionally, availability expectations may also be reviewed. |

| Conceptual and detail design | A conceptual design combines the results of design recommendation with "what if" scenarios to ensure all operational outcomes are met in order to future-proof the facility, including the addition of modular expansion components that can be constructed, moved, or added to quickly as needs change. This process yields a proof of concept, which is then incorporated into a detail design that focuses on creating the facility schematics, construction documents, and IT infrastructure design and documentation.[1] |

| Mechanical and electrical engineering infrastructure design | Mechanical engineering infrastructure design addresses mechanical systems involved in maintaining the interior environment of a data center, while electrical engineering infrastructure design focuses on designing electrical configurations that accommodate the data center's various services and reliability requirements.[23]

Both phases involve recognizing the need to save space, energy, and costs. Availability expectations are fully considered as part of these savings. For example, if the estimated cost of downtime within a specified time unit exceeds the amortized capital costs and operational expenses, a higher level of availability should be factored into the design. If, however, the cost of avoiding downtime greatly exceeds the cost of downtime itself, a lower level of availability will likely get factored into the design.[24] |

| Technology infrastructure design | Numerous cabling systems for data center environments exist, including horizontal cabling; voice, modem, and facsimile telecommunications services; premises switching equipment; computer and telecommunications management connections; monitoring station connections; and data communications. Technology infrastructure design addresses all of these systems.[1] |

| Site selection | When choosing a location for a data center, aspects such as proximity to available power grids, telecommunications infrastructure, networking services, transportation lines, and emergency services must be considered. Another consideration is climatic conditions, which may dictate what cooling technologies should be deployed.[25] |

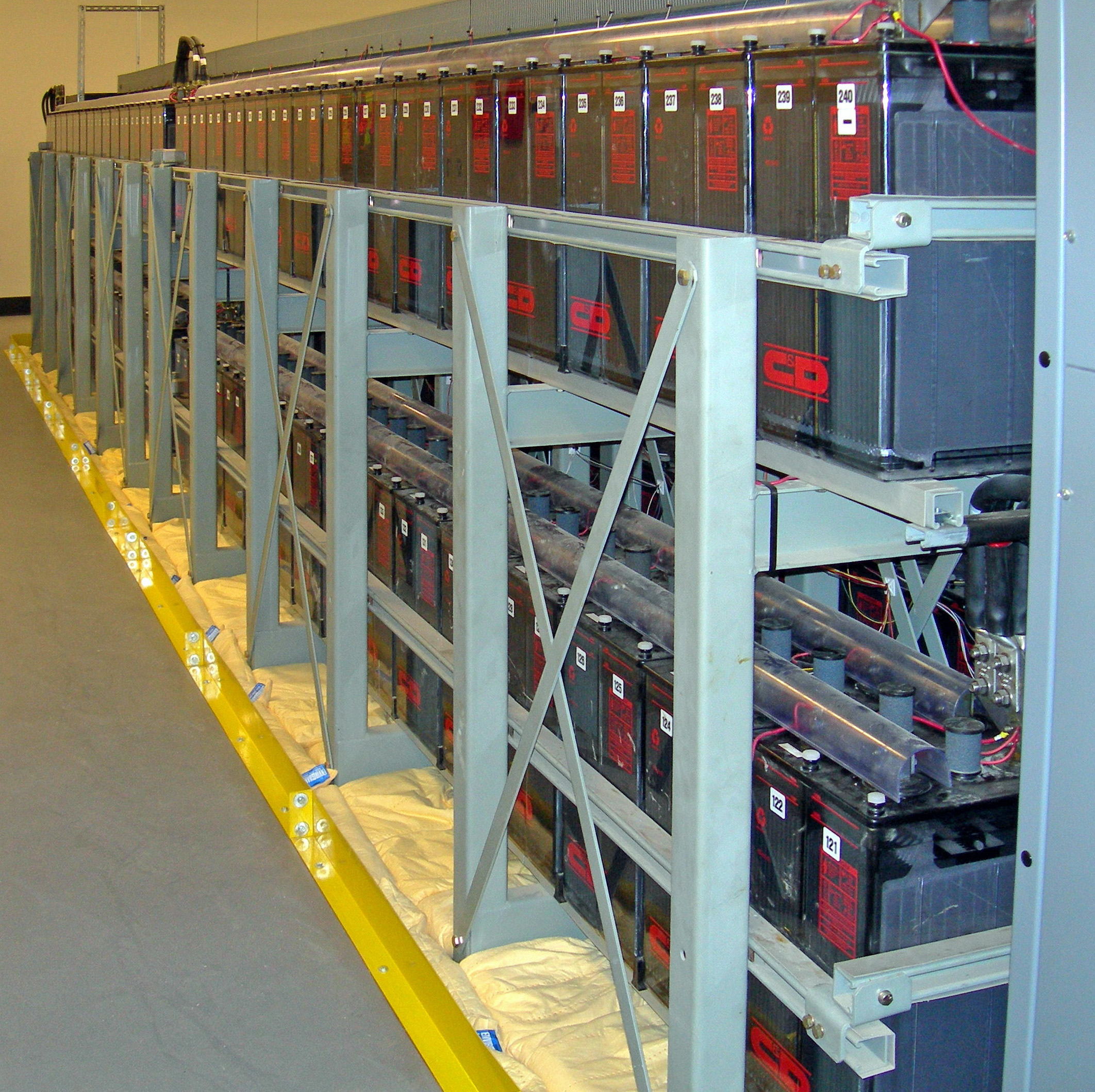

| Power management | The power supply to a data center must be uninterrupted. To achieve this, designers will use a combination of uninterruptible power supplies, battery banks, and/or fueled turbine generators. Static transfer switches are typically used to ensure instantaneous switchover from one supply to the other in the event of a power failure. |

| Environmental control | As heat is a byproduct of supplying power to electrical components, appropriate cooling mechanisms like air conditioning or economizer cooling — which uses outside air to cool components — must be used. In addition to cooling the air, humidity levels must be controlled to prevent both condensation from too much humidity and static electricity discharge from too little humidity.[27] Additional considerations are made for using raised flooring to cater for better and uniform air distribution and circulation as well as providing cabling space.[28] However, the concern of zinc whisker build-up on those raised floor tiles and other metal racks must also be addressed; they risk short circuiting electrical components after being dislodged during maintenance and installs.[29] |

| Fire protection | Like most other buildings, fire protection and prevention systems are required. However, due to the investment in and criticality of the data center, extra measures are designed into the facility and its operation. High-sensitivity aspirating smoke detectors can be connected to several types of alarms, allowing for a soft warning alarm for technicians to investigate at one threshold and the activation of fire suppression systems at another threshold. Aside from manual fire extinguishers, gas-based or clean agent fire suppression systems provide fire suppression without the possibility of ruining more equipment than necessary using water-based systems, which are still used for the most critical of situations.[30] Passive fire wall materials are also installed to restrict fire to only a portion of the facility. |

| Physical security | Depending on the sensitivity of information contained in the data center, physical access controls are used to prevent unauthorized entry into the facility. Small posts of bollards may be placed to prevent vehicles or carts above a certain size from passing. Access control vestibules or "man traps" with two sets of interlocking doors may be used to force verification of credentials before admittance into the center. Video cameras, security guards, and fingerprint recognition devices may also be applied as part of a security protocol.[31] |
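The availability trade-off described in the mechanical and electrical engineering row can be expressed as a simple cost comparison. This is a minimal sketch, assuming downtime cost scales linearly with expected hours offline (real outages rarely behave so neatly); all availability figures and dollar amounts are hypothetical.

```python
HOURS_PER_YEAR = 8760  # hours in a non-leap year


def expected_downtime_hours(availability: float) -> float:
    """Expected hours offline per year for an availability fraction such as 0.999."""
    return (1.0 - availability) * HOURS_PER_YEAR


def total_annual_cost(availability: float, annual_cost: float,
                      downtime_cost_per_hour: float) -> float:
    """Amortized capital plus operating cost, plus the expected cost of downtime."""
    return annual_cost + expected_downtime_hours(availability) * downtime_cost_per_hour


def choose_design(options: dict, downtime_cost_per_hour: float) -> str:
    """Pick the option whose combined yearly cost is lowest."""
    return min(
        options,
        key=lambda name: total_annual_cost(*options[name], downtime_cost_per_hour),
    )


# Hypothetical options: name -> (availability, amortized capex + opex per year, USD).
options = {
    "baseline": (0.999, 1_000_000),
    "redundant": (0.9999, 1_400_000),
}

print(choose_design(options, downtime_cost_per_hour=100_000))  # redundant
print(choose_design(options, downtime_cost_per_hour=10_000))   # baseline
```

When an hour of downtime is expensive, the extra spending on redundancy pays for itself; when it is cheap, the lower-availability design wins, mirroring the two cases in the table.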
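In the Power management row, UPS batteries carry the load during the gap between a utility failure and the moment generators can take over. The following is a minimal sketch of checking that bridge. The energy, load, generator start time, and 2x safety factor are hypothetical, the idealized runtime formula is a simplification rather than a vendor sizing method, and transfer-switch behavior itself is not modeled.

```python
def battery_runtime_minutes(usable_kwh: float, load_kw: float) -> float:
    """Idealized runtime: stored energy divided by load.

    Ignores inverter losses, battery aging, temperature, and the reduced
    capacity batteries deliver at high discharge rates.
    """
    if load_kw <= 0:
        raise ValueError("load must be positive")
    return usable_kwh / load_kw * 60.0


def ups_bridges_to_generator(usable_kwh: float, load_kw: float,
                             generator_ready_minutes: float,
                             safety_factor: float = 2.0) -> bool:
    """True if the batteries can carry the load until the generators are
    started and accepting load, with a hypothetical safety margin."""
    runtime = battery_runtime_minutes(usable_kwh, load_kw)
    return runtime >= generator_ready_minutes * safety_factor


# Hypothetical site: 50 kWh of usable battery energy, 300 kW critical load.
print(round(battery_runtime_minutes(50, 300), 1))  # 10.0
print(ups_bridges_to_generator(50, 300, 1))        # True
print(ups_bridges_to_generator(50, 300, 6))        # False
```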
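The Environmental control row ties humidity to two opposite failure modes. The following is a minimal sketch that flags both. It assumes the Magnus approximation for dew point and hypothetical relative-humidity limits of 20 and 80 percent, which are placeholders rather than ASHRAE recommendations.

```python
import math

# Magnus-formula coefficients over water (Sonntag 1990), roughly valid -45..60 °C.
MAGNUS_B = 17.62
MAGNUS_C = 243.12  # °C


def dew_point_c(temp_c: float, rh_percent: float) -> float:
    """Approximate dew point (°C) from air temperature and relative humidity."""
    gamma = math.log(rh_percent / 100.0) + MAGNUS_B * temp_c / (MAGNUS_C + temp_c)
    return MAGNUS_C * gamma / (MAGNUS_B - gamma)


def humidity_risks(temp_c: float, rh_percent: float, coldest_surface_c: float,
                   min_rh: float = 20.0, max_rh: float = 80.0) -> list:
    """Flag condensation and static-discharge risk; RH limits are hypothetical."""
    risks = []
    # Moisture condenses on any surface at or below the air's dew point,
    # and very humid air is flagged even before that point is reached.
    if coldest_surface_c <= dew_point_c(temp_c, rh_percent) or rh_percent > max_rh:
        risks.append("condensation")
    # Very dry air makes electrostatic discharge more likely.
    if rh_percent < min_rh:
        risks.append("static discharge")
    return risks


print(round(dew_point_c(25, 50), 1))  # 13.9
print(humidity_risks(24, 50, 18))     # []
print(humidity_risks(24, 50, 10))     # ['condensation']
print(humidity_risks(24, 10, 18))     # ['static discharge']
```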
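The two-threshold alarm scheme in the Fire protection row can be sketched as a simple classifier. The threshold values and units below are hypothetical. Real releasing panels typically also add time delays and often require confirmation from more than one detector before releasing agent, which this sketch omits.

```python
from enum import Enum


class FireResponse(Enum):
    NORMAL = "normal"
    INVESTIGATE = "soft warning: technicians investigate"
    SUPPRESS = "activate fire suppression"


def classify_smoke_reading(obscuration: float, warn_threshold: float,
                           suppress_threshold: float) -> FireResponse:
    """Map one aspirating-detector reading to a response using two thresholds.

    Units (e.g. percent obscuration per metre) and threshold values are
    site-specific; the ones used below are hypothetical.
    """
    if suppress_threshold <= warn_threshold:
        raise ValueError("suppression threshold must exceed the warning threshold")
    if obscuration >= suppress_threshold:
        return FireResponse.SUPPRESS
    if obscuration >= warn_threshold:
        return FireResponse.INVESTIGATE
    return FireResponse.NORMAL


for reading in (0.01, 0.08, 0.5):
    print(reading, classify_smoke_reading(reading, warn_threshold=0.05,
                                          suppress_threshold=0.2).name)
```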
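The interlocking-door behavior described in the Physical security row can be sketched as a small state machine. This is a minimal illustration, not any access-control product's logic; the `verify` callback and badge identifiers are hypothetical.

```python
from typing import Callable


class Mantrap:
    """Two-door access vestibule: at most one door open at a time, and the
    inner door opens only after a credential is verified inside the vestibule."""

    def __init__(self, verify: Callable[[str], bool]):
        self.verify = verify  # hypothetical hook into an access-control system
        self.outer_open = False
        self.inner_open = False
        self.verified = False

    def open_outer(self) -> bool:
        if self.inner_open:      # interlock: never both doors open
            return False
        self.outer_open = True
        self.verified = False    # anyone entering must authenticate afresh
        return True

    def close_outer(self) -> None:
        self.outer_open = False

    def present_credential(self, credential: str) -> bool:
        # Only accept credentials while both doors are shut.
        if self.outer_open or self.inner_open:
            return False
        self.verified = self.verify(credential)
        return self.verified

    def open_inner(self) -> bool:
        if self.outer_open or not self.verified:
            return False
        self.inner_open = True
        return True

    def close_inner(self) -> None:
        self.inner_open = False
        self.verified = False    # next passage requires a new credential


trap = Mantrap(verify=lambda badge: badge == "badge-1234")
trap.open_outer()
print(trap.open_inner())                 # False: outer door still open
trap.close_outer()
print(trap.present_credential("guest"))  # False: unknown badge
print(trap.present_credential("badge-1234"), trap.open_inner())  # True True
```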

Carbon footprint

Energy use is a central issue for data centers. Power draw for data centers ranges from a few kW for a rack of servers in a closet to several tens of MW for large facilities. Some facilities have power densities more than 100 times that of a typical office building.[32] For power-dense facilities, electricity costs are a dominant operating expense; in 2007, electricity purchases accounted for over 10 percent of a data center's total cost of ownership.[33] On a national scale, the U.S. Environmental Protection Agency (EPA) found that data centers used 1.5 percent of the country's power supply (61 billion kilowatt-hours) in 2006. The following year, data centers in Western Europe consumed 56 terawatt-hours of energy, a figure the European Union estimated would nearly double by 2020.[34]
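Annual energy totals are easier to compare with facility power draws once converted to an average continuous load. The following is a short worked conversion of the figures quoted above, assuming 8,760 hours per year.

```python
HOURS_PER_YEAR = 8760  # 2006 and 2007 were not leap years


def average_power_gw(annual_energy_kwh: float) -> float:
    """Constant power draw (GW) that would use the given energy over one year."""
    average_kw = annual_energy_kwh / HOURS_PER_YEAR  # kWh / h = kW
    return average_kw / 1e6                          # 1 GW = 1,000,000 kW


us_2006_kwh = 61e9              # 61 billion kWh (EPA figure above)
western_europe_2007_kwh = 56e9  # 56 TWh = 56 billion kWh

print(round(average_power_gw(us_2006_kwh), 2))              # 6.96
print(round(average_power_gw(western_europe_2007_kwh), 2))  # 6.39
# Total U.S. supply implied by "61 billion kWh = 1.5 percent", in billion kWh:
print(round(us_2006_kwh / 0.015 / 1e9))                     # 4067
```

In other words, U.S. data centers in 2006 drew the equivalent of roughly 7 GW around the clock.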

As with any other power consumption, a data center's power consumption has an associated carbon footprint, generally defined as "the carbon emissions equivalent of the total amount of electricity a particular data center consumes."[34] Data centers that use numerous diesel generators for backup power stand to increase that carbon footprint even further.[35] However, in the U.S. at least, new non-mobile diesel generators will need to comply with the EPA's Tier 4 Final requirements for reduced emissions and toxins by 2015.[36]
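Under the definition quoted above, the electrical footprint is consumption multiplied by the grid's emission factor, and burning generator fuel adds to it. The following is a minimal sketch in which every quantity and emission factor is a hypothetical placeholder; real factors vary by grid, year, and fuel.

```python
def electricity_footprint_tonnes(annual_kwh: float, grid_kg_co2e_per_kwh: float) -> float:
    """Carbon-equivalent emissions of the electricity consumed, in tonnes."""
    return annual_kwh * grid_kg_co2e_per_kwh / 1000.0


def generator_footprint_tonnes(diesel_litres: float, kg_co2e_per_litre: float) -> float:
    """Additional emissions from burning diesel in backup generators, in tonnes."""
    return diesel_litres * kg_co2e_per_litre / 1000.0


# Hypothetical facility and emission factors (illustrative only).
annual_kwh = 10_000_000   # 10 GWh of electricity per year
grid_factor = 0.4         # kg CO2e per kWh for the local grid
diesel_litres = 20_000    # fuel burned in generator testing and outages
diesel_factor = 2.7       # kg CO2e per litre of diesel

grid_t = electricity_footprint_tonnes(annual_kwh, grid_factor)
gen_t = generator_footprint_tonnes(diesel_litres, diesel_factor)
print(round(grid_t), round(gen_t), round(grid_t + gen_t))  # 4000 54 4054
```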

One way designers and operators of data centers can track energy use is through an energy efficiency analysis, which measures factors such as a data center's power usage effectiveness (PUE) against industry standards, identifies mechanical and electrical sources of inefficiency, and identifies air-management metrics.[37] PUE is the ratio of total facility power to the power delivered to IT equipment. The average U.S. data center had a PUE of 2.0 according to the EPA's 2007 report,[38] meaning that the facility used one watt of overhead power for every watt delivered to IT equipment. Energy efficiency can also be analyzed through a power and cooling analysis, which can help identify hot spots, over-cooled areas that can handle greater power use density, the breakpoint of equipment loading, the effectiveness of a raised-floor strategy, and optimal equipment positioning (such as AC units) to balance temperatures across the data center.
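Because PUE divides total facility power by the power delivered to IT equipment, a PUE of 2.0 means one watt of overhead per IT watt. The following is a minimal sketch of the calculation and the overhead energy it implies. The facility figures are hypothetical, and steady loads are assumed; in practice PUE is often computed from metered energy over a period such as a year rather than from a single power reading.

```python
HOURS_PER_YEAR = 8760


def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT load must be positive")
    if total_facility_kw < it_equipment_kw:
        raise ValueError("facility total cannot be below the IT load")
    return total_facility_kw / it_equipment_kw


def annual_overhead_kwh(it_equipment_kw: float, pue_value: float) -> float:
    """Energy spent per year on non-IT overhead (cooling, power conversion, lighting)."""
    return it_equipment_kw * (pue_value - 1.0) * HOURS_PER_YEAR


# Hypothetical facility drawing 2,000 kW in total to deliver 1,000 kW to IT equipment.
p = pue(2000, 1000)
print(p)                             # 2.0: one watt of overhead per IT watt
print(annual_overhead_kwh(1000, p))  # 8760000.0
# Energy saved per year if the same IT load ran at a PUE of 1.5 instead:
print(annual_overhead_kwh(1000, 2.0) - annual_overhead_kwh(1000, 1.5))  # 4380000.0
```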

The building's location also affects the energy consumption and environmental effects of a data center. In areas where the climate favors cooling and abundant renewable electricity is available, the environmental footprint will be more moderate. Thus countries with such favorable conditions, such as Canada,[39] Finland,[40] Sweden,[41] and Switzerland,[42] are trying to attract cloud computing data centers. Meanwhile, companies like Apple have tried to lessen their data centers' carbon footprints by siting new data centers in places like the more arid U.S. state of Nevada.[43]

Efficiency ratings

In 2010, the U.S. EPA began offering an Energy Star rating for standalone or large data centers. To qualify for the ecolabel, a data center had to rank within the top quartile of energy efficiency among all reported facilities.[44] The European Union developed a similar initiative, the European Code of Conduct on Data Centre Energy Efficiency.[45]
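The top-quartile criterion is a percentile cut. The following is a minimal sketch using Python's `statistics.quantiles`, with hypothetical reported values and PUE (lower is better) standing in as the efficiency metric. It does not reproduce Energy Star's actual scoring methodology.

```python
import statistics


def top_quartile_cutoff(pue_values: list) -> float:
    """First-quartile PUE of the reported facilities (lower PUE = more efficient)."""
    q1, _median, _q3 = statistics.quantiles(pue_values, n=4)
    return q1


def in_top_quartile(candidate_pue: float, pue_values: list) -> bool:
    """True if the candidate is at least as efficient as the quartile cutoff."""
    return candidate_pue <= top_quartile_cutoff(pue_values)


# Hypothetical PUEs reported by ten facilities.
reported = [1.2, 1.4, 1.5, 1.7, 1.8, 2.0, 2.1, 2.4, 2.6, 3.0]
print(round(top_quartile_cutoff(reported), 3))                          # 1.475
print(in_top_quartile(1.4, reported), in_top_quartile(1.5, reported))  # True False
```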

Notes

This article reuses numerous content elements from the Wikipedia article.

References

- ↑ 1.0 1.1 1.2 1.3 Bruno, Anthony; Jordan, Steve (2011). Official Cert Guide: CCDA 640-864 (4th ed.). Cisco Press. p. 130. ISBN 9780132372145. http://books.google.com/books?id=cuOoV5u3WCUC&pg=PA130. Retrieved 26 August 2014.

- ↑ Glanz, James (22 September 2012). "Power, Pollution and the Internet". The New York Times. http://www.nytimes.com/2012/09/23/technology/data-centers-waste-vast-amounts-of-energy-belying-industry-image.html. Retrieved 26 August 2014.

- ↑ Geer, David (25 July 2012). "4 Steps For Secure Tape Backups". InformationWeek. UBM Tech. http://www.informationweek.com/4-steps-for-secure-tape-backups/d/d-id/1105506?. Retrieved 27 August 2014.

- ↑ 4.0 4.1 4.2 Bartels, Angela (31 August 2011). "[INFOGRAPHIC] Data Center Evolution: 1960 to 2000". The Rackspace Blog! & Newsroom. Rackspace, US Inc. http://www.rackspace.com/blog/datacenter-evolution-1960-to-2000/. Retrieved 26 August 2014.

- ↑ Murray, John P. (26 October 1981). "Data Center Problems: You're Not Alone". Computerworld. http://books.google.com/books?id=1REkdf3I86oC&pg=PA35. Retrieved 26 August 2014.

- ↑ Barton, Jim (1 October 2003). "From Server Room to Living Room". Queue. Association for Computing Machinery. http://queue.acm.org/detail.cfm?id=945076. Retrieved 26 August 2014.

- ↑ Axelrod, C. Warren; Blanding, Steve (ed.) (1998). "Enterprise Operations Management Handbook". CRC Press. pp. 75–84. ISBN 9781420052169. http://books.google.com/books?id=XrEwl7VWXXMC&pg=PA75. Retrieved 26 August 2014.

- ↑ 8.0 8.1 Katz, Randy H (1 February 2009). "Tech Titans Building Boom". IEEE Spectrum. IEEE. http://spectrum.ieee.org/green-tech/buildings/tech-titans-building-boom. Retrieved 26 August 2014.

- ↑ 9.0 9.1 "TIA-942: Telecommunications Infrastructure Standard for Data Centers". Telecommunications Industry Association. 1 March 2014. https://global.ihs.com/doc_detail.cfm?&rid=TIA&input_doc_number=TIA-942&item_s_key=00414811&item_key_date=860905&input_doc_number=TIA-942&input_doc_title=#abstract. Retrieved 26 August 2014.

- ↑ "About Uptime Institute". 451 Group, Inc. http://uptimeinstitute.com/about-us. Retrieved 26 August 2014.

- ↑ "Uptime Institute Publications". 451 Group, Inc. http://uptimeinstitute.com/publications. Retrieved 26 August 2014.

- ↑ "TIA to remove the word ‘Tier’ from its 942 Data Center standards". Cabling Installation & Maintenance. PennWell Corporation. 18 March 2014. http://www.cablinginstall.com/articles/2014/03/tia-942-tiers.html. Retrieved 26 August 2014.

- ↑ "ANSI/BICSI 002-2011, Data Center Design and Implementation Best Practices". BICSI. https://www.bicsi.org/book_details.aspx?Book=BICSI-002-CM-11-v5&d=0. Retrieved 26 August 2014.

- ↑ "The European Standard EN 50600". CIS International. http://www.cis-cert.com/Pages/com/System-Zertifizierung/Data-Centers/Certification/European-Standard-EN-50600.aspx. Retrieved 26 August 2014.

- ↑ "ÖVE/ÖNORM EN 50600-1:2013-06-01". Österreichischer Verband für Elektrotechnik. https://www.ove.at/webshop/artikel/9f5a9db895-ove-onorm-en-50600-1-2013-06-01.html. Retrieved 26 August 2014.

- ↑ "NEBS Documents and Technical Services". Telcordia Technologies, Inc. http://telecom-info.telcordia.com/site-cgi/ido/docs2.pl?ID=239065400&page=nebs. Retrieved 26 August 2014.

- ↑ "About DCSA". eco. http://www.dcaudit.com/about-dcsa.html. Retrieved 26 August 2014.

- ↑ Klein, Mike (3 March 2011). "SAS 70, SSAE 16, SOC and Data Center Standards". Data Center Knowledge. iNET Interactive. http://www.datacenterknowledge.com/archives/2011/03/03/sas-70-ssae-16-soc-and-data-center-standards/. Retrieved 26 August 2014.

- ↑ "SOC Reports Information for CPAs". AICPA. http://www.aicpa.org/interestareas/frc/assuranceadvisoryservices/pages/cpas.aspx. Retrieved 26 August 2014.

- ↑ "ISO Certified Data Centers". Equinix, Inc. http://www.equinix.com/solutions/by-services/colocation/standards-and-compliance/iso-certified-data-centers/. Retrieved 26 August 2014.

- ↑ "Inside our data centers". Google Data Centers. Google. https://www.google.com/about/datacenters/inside/. Retrieved 26 August 2014.

- ↑ Mullins, Robert (29 June 2011). "Romonet Offers Predictive Modelling Tool For Data Center Planning". Information Week Network Computing. UBM Tech. http://www.networkcomputing.com/data-centers/romonet-offers-predictive-modeling-tool-for-data-center-planning/d/d-id/1232857?. Retrieved 26 August 2014.

- ↑ Jew, Jonathan (May/June 2010). "BICSI Data Center Standard: A Resource for Today’s Data Center Operators and Designers". BICSI News Magazine. BICSI. pp. 26–30. http://www.nxtbook.com/nxtbooks/bicsi/news_20100506/#/26. Retrieved 26 August 2014.

- ↑ Clark, Jeffrey (12 October 2011). "The Price of Data Center Availability". The Data Center Journal. http://www.datacenterjournal.com/design/the-price-of-data-center-availability/. Retrieved 26 August 2014.

- ↑ Tucci, Linda (7 May 2008). "Five tips on selecting a data center location". SearchCIO. TechTarget. http://searchcio.techtarget.com/news/1312614/Five-tips-on-selecting-a-data-center-location. Retrieved 26 August 2014.

- ↑ "Evaluating the Economic Impact of UPS Technology" (PDF). Liebert Corporation. 2004. Archived from the original on 22 November 2010. https://web.archive.org/web/20101122074817/http://emersonnetworkpower.com/en-US/Brands/Liebert/Documents/White%20Papers/Evaluating%20the%20Economic%20Impact%20of%20UPS%20Technology.pdf. Retrieved 27 August 2014.

- ↑ ASHRAE Technical Committee 9.9 (2012). Thermal Guidelines for Data Processing Environments (3rd ed.). American Society of Heating, Refrigerating and Air-Conditioning Engineers. p. 136. ISBN 9781936504336. http://books.google.com/books?id=AgWcMQEACAAJ. Retrieved 27 August 2014.

- ↑ "GR-2930, NEBS: Raised Floor Generic Requirements for Network and Data Centers". Telcordia Technologies, Inc. July 2012. http://telecom-info.telcordia.com/site-cgi/ido/docs.cgi?ID=SEARCH&DOCUMENT=GR-2930&. Retrieved 27 August 2014.

- ↑ "Other Metal Whiskers". Tin Whisker (and Other Metal Whisker) Homepage. NASA. 24 January 2011. http://nepp.nasa.gov/whisker/other_whisker/index.htm. Retrieved 27 August 2014.

- ↑ Tubbs, Jeffrey; DiSalvo, Garr; Neviackas, Andrew (December 2013). "Data Center Fire Protection". FacilitiesNet. Trade Press. http://www.facilitiesnet.com/datacenters/article/A-Comprehensive-Approach-To-Data-Center-Fire-Safety--14593. Retrieved 27 August 2014.

- ↑ "Effective Data Center Physical Security Best Practices for SAS 70 Compliance". NDB LLP. 2008. http://www.sas70.us.com/industries/data-center-colocations.php. Retrieved 27 August 2014.

- ↑ "Data Center Energy Consumption Trends". U.S. Department of Energy. 30 May 2009. Archived from the original on 22 February 2012. https://web.archive.org/web/20120222115559/http://www1.eere.energy.gov/femp/program/dc_energy_consumption.html. Retrieved 27 August 2014.

- ↑ Koomey, Jonathan G.; Belady, Christian; Patterson, Michael; Santos, Anthony; Lange, Klaus-Dieter (17 August 2009). "Assessing Trends Over Time in Performance, Costs, and Energy Use for Servers" (PDF). Intel Corporation. http://www.intel.com/assets/pdf/general/servertrendsreleasecomplete-v25.pdf. Retrieved 27 August 2014.

- ↑ 34.0 34.1 Bouley, Dennis (2010). "Estimating a Data Center's Electrical Carbon Footprint" (PDF). Schneider Electric. http://ensynch.com/content/dam/insight/en_US/pdfs/apc/apc-estimating-data-centers-carbon-footprint.pdf. Retrieved 27 August 2014.

- ↑ Glanz, James (23 September 2012). "Data Barns in a Farm Town, Gobbling Power and Flexing Muscle". The New York Times. http://www.nytimes.com/2012/09/24/technology/data-centers-in-rural-washington-state-gobble-power.html. Retrieved 27 August 2014.

- ↑ "Answers to Your Tier 4 Questions". Cummins Power Generation, Inc. http://tier4answers.com/tier-4-answers. Retrieved 27 August 2014.

- ↑ "Efficiency: How we do it". Google Data Centers. Google. http://www.google.com/about/datacenters/efficiency/internal/. Retrieved 27 August 2014.

- ↑ "Report to Congress on Server and Data Center Energy Efficiency" (PDF). ENERGY STAR Program, EPA. 7 August 2007. http://www.energystar.gov/ia/partners/prod_development/downloads/EPA_Datacenter_Report_Congress_Final1.pdf. Retrieved 27 August 2014.

- ↑ Ladurantaye, Steve (22 June 2011). "Canada Called Prime Real Estate for Massive Data Computers". The Globe & Mail. http://www.theglobeandmail.com/report-on-business/canada-called-prime-real-estate-for-massive-data-computers/article2071677/. Retrieved 27 August 2014.

- ↑ "Finland - First Choice for Siting Your Cloud Computing Data Center". Invest in Finland. Archived from the original on 10 January 2013. https://web.archive.org/web/20130110111357/http://www.fincloud.freehostingcloud.com/. Retrieved 27 August 2014.

- ↑ "Stockholm sets sights on data center customers". Stockholm Business Region. 19 August 2010. Archived from the original on 19 August 2010. https://web.archive.org/web/20100819190918/http://www.stockholmbusinessregion.se/templates/page____41724.aspx?epslanguage=EN. Retrieved 27 August 2014.

- ↑ Wheeland, Matthew (30 June 2010). "Swiss Carbon-Neutral Servers Hit the Cloud". GreenBiz Group. http://www.greenbiz.com/news/2010/06/30/swiss-carbon-neutral-servers-hit-cloud. Retrieved 27 August 2014.

- ↑ Levy, Steven (21 April 2014). "Apple Aims to Shrink Its Carbon Footprint With New Data Centers". Wired. Condé Nast. http://www.wired.com/2014/04/green-apple/. Retrieved 27 August 2014.

- ↑ Pouchet, Jack (15 June 2010). "Introducing EPA ENERGY STAR for Data Centers". Efficient Data Centers. Emerson Electric Co. Archived from the original on 29 February 2012. https://web.archive.org/web/20120229143352/http://www.efficientdatacenters.com/post/2010/06/15/Introducing-EPA-ENERGY-STARc2ae-for-Data-Centers.aspx. Retrieved 27 August 2014.

- ↑ "Data Centres Energy Efficiency". Joint Research Centre, Institute for Energy and Transport. 22 August 2014. http://iet.jrc.ec.europa.eu/energyefficiency/ict-codes-conduct/data-centres-energy-efficiency. Retrieved 27 August 2014.