Difference between revisions of "Journal:Histopathology image classification: Highlighting the gap between manual analysis and AI automation"


|download = [https://www.frontiersin.org/journals/oncology/articles/10.3389/fonc.2023.1325271/pdf?isPublishedV2=false https://www.frontiersin.org/journals/oncology/articles/10.3389/fonc.2023.1325271/pdf] (PDF)

}}

{{Ombox math}}

==Abstract==

The field of [[Histopathology|histopathological]] [[Medical imaging|image]] analysis has evolved significantly with the advent of [[digital pathology]], leading to the development of [[Laboratory automation|automated]] models capable of classifying tissues and structures within diverse [[Pathology|pathological]] images. [[Artificial intelligence]] (AI) algorithms, such as [[convolutional neural network]]s (CNNs), have shown remarkable capabilities in pathology image analysis tasks, including tumor identification, metastasis detection, and patient prognosis assessment. However, traditional manual analysis methods have generally shown low accuracy in diagnosing colorectal [[cancer]] using histopathological images.

==Introduction==

[[Histopathology|Histopathological]] [[Medical imaging|image]] analysis is a fundamental method for diagnosing and screening [[cancer]], especially in disorders affecting the digestive system. [[Pathology|Pathologists]] physically and visually examine complex images, which often have resolutions of up to 100,000 x 100,000 pixels. Pathological image analysis has long depended on this manual approach, which is time-consuming and labor-intensive.
New approaches are needed to increase the efficiency and accuracy of pathological image analysis. [[Digital pathology]] has seen significant progress toward this goal. Digitization of high-resolution histopathology images allows comprehensive analysis using complex computational methods. As a result, interest has grown significantly in medical image analysis for creating [[Laboratory automation|automatic]] models that can precisely categorize relevant tissues and structures in various clinical images. Early research in this area focused on predicting the malignancy of colon lesions and distinguishing between malignant and normal tissue by extracting features from [[Microscope|microscopic]] images. Esgiar ''et al.''<ref>{{Cite journal |last=Nasser Esgiar |first=A. |last2=Naguib |first2=R.N.G. |last3=Sharif |first3=B.S. |last4=Bennett |first4=M.K. |last5=Murray |first5=A. |date=1998-09 |title=Microscopic image analysis for quantitative measurement and feature identification of normal and cancerous colonic mucosa |url=http://ieeexplore.ieee.org/document/735785/ |journal=IEEE Transactions on Information Technology in Biomedicine |volume=2 |issue=3 |pages=197–203 |doi=10.1109/4233.735785}}</ref> analyzed 44 healthy and 58 cancerous samples obtained from microscope images. Their analysis, which relied on co-occurrence matrices, reached a reported accuracy of about 90 percent. These first steps form the basis for more complex procedures that integrate rapid image processing techniques and the functions of visualization software. Digital pathology has recently emerged as a widespread diagnostic tool, primarily through [[artificial intelligence]] (AI) algorithms.<ref>{{Cite journal |last=Shafi |first=Saba |last2=Parwani |first2=Anil V. 
|date=2023-10-03 |title=Artificial intelligence in diagnostic pathology |url=https://diagnosticpathology.biomedcentral.com/articles/10.1186/s13000-023-01375-z |journal=Diagnostic Pathology |language=en |volume=18 |issue=1 |pages=109 |doi=10.1186/s13000-023-01375-z |issn=1746-1596 |pmc=PMC10546747 |pmid=37784122}}</ref><ref>{{Cite journal |last=Zuraw |first=Aleksandra |last2=Aeffner |first2=Famke |date=2022-01 |title=Whole-slide imaging, tissue image analysis, and artificial intelligence in veterinary pathology: An updated introduction and review |url=http://journals.sagepub.com/doi/10.1177/03009858211040484 |journal=Veterinary Pathology |language=en |volume=59 |issue=1 |pages=6–25 |doi=10.1177/03009858211040484 |issn=0300-9858}}</ref> It has demonstrated impressive capability in processing pathology images in an advanced manner.<ref>{{Cite journal |last=Mobadersany |first=Pooya |last2=Yousefi |first2=Safoora |last3=Amgad |first3=Mohamed |last4=Gutman |first4=David A. |last5=Barnholtz-Sloan |first5=Jill S. |last6=Velázquez Vega |first6=José E. |last7=Brat |first7=Daniel J. |last8=Cooper |first8=Lee A. D. 
|date=2018-03-27 |title=Predicting cancer outcomes from histology and genomics using convolutional networks |url=https://pnas.org/doi/full/10.1073/pnas.1717139115 |journal=Proceedings of the National Academy of Sciences |language=en |volume=115 |issue=13 |doi=10.1073/pnas.1717139115 |issn=0027-8424 |pmc=PMC5879673 |pmid=29531073}}</ref><ref>{{Cite journal |last=Yi |first=Faliu |last2=Yang |first2=Lin |last3=Wang |first3=Shidan |last4=Guo |first4=Lei |last5=Huang |first5=Chenglong |last6=Xie |first6=Yang |last7=Xiao |first7=Guanghua |date=2018-12 |title=Microvessel prediction in H&E Stained Pathology Images using fully convolutional neural networks |url=https://bmcbioinformatics.biomedcentral.com/articles/10.1186/s12859-018-2055-z |journal=BMC Bioinformatics |language=en |volume=19 |issue=1 |pages=64 |doi=10.1186/s12859-018-2055-z |issn=1471-2105 |pmc=PMC5828328 |pmid=29482496}}</ref> Advanced techniques are now used regularly for tumor identification, metastasis detection, and assessment of patient prognosis. These techniques are intended to enable automatic segmentation of pathological images, generation of predictions, and the use of relevant observations from this complex visual data.<ref name=":0">{{Cite journal |last=Kather |first=Jakob Nikolas |last2=Weis |first2=Cleo-Aron |last3=Bianconi |first3=Francesco |last4=Melchers |first4=Susanne M. |last5=Schad |first5=Lothar R. 
|last6=Gaiser |first6=Timo |last7=Marx |first7=Alexander |last8=Zöllner |first8=Frank Gerrit |date=2016-06-16 |title=Multi-class texture analysis in colorectal cancer histology |url=https://www.nature.com/articles/srep27988 |journal=Scientific Reports |language=en |volume=6 |issue=1 |pages=27988 |doi=10.1038/srep27988 |issn=2045-2322 |pmc=PMC4910082 |pmid=27306927}}</ref><ref>{{Cite journal |last=Kim |first=Inho |last2=Kang |first2=Kyungmin |last3=Song |first3=Youngjae |last4=Kim |first4=Tae-Jung |date=2022-11-15 |title=Application of Artificial Intelligence in Pathology: Trends and Challenges |url=https://www.mdpi.com/2075-4418/12/11/2794 |journal=Diagnostics |language=en |volume=12 |issue=11 |pages=2794 |doi=10.3390/diagnostics12112794 |issn=2075-4418 |pmc=PMC9688959 |pmid=36428854}}</ref>

[[Convolutional neural network]]s (CNNs) have received significant attention among the various [[machine learning]] (ML) techniques in AI research. Following the application of deep learning in earlier biological research, ML has been widely accepted and used.<ref>{{Cite journal |last=Le |first=Nguyen Quoc Khanh |date=2022-01 |title=Potential of deep representative learning features to interpret the sequence information in proteomics |url=https://analyticalsciencejournals.onlinelibrary.wiley.com/doi/10.1002/pmic.202100232 |journal=PROTEOMICS |language=en |volume=22 |issue=1-2 |pages=2100232 |doi=10.1002/pmic.202100232 |issn=1615-9853}}</ref><ref>{{Cite journal |last=Yuan |first=Qitong |last2=Chen |first2=Keyi |last3=Yu |first3=Yimin |last4=Le |first4=Nguyen Quoc Khanh |last5=Chua |first5=Matthew Chin Heng |date=2023-01-19 |title=Prediction of anticancer peptides based on an ensemble model of deep learning and machine learning using ordinal positional encoding |url=https://academic.oup.com/bib/article/doi/10.1093/bib/bbac630/6987656 |journal=Briefings in Bioinformatics |language=en |volume=24 |issue=1 |pages=bbac630 |doi=10.1093/bib/bbac630 |issn=1467-5463}}</ref><ref name=":1">{{Cite journal |last=Anari |first=Shokofeh |last2=Tataei Sarshar |first2=Nazanin |last3=Mahjoori |first3=Negin |last4=Dorosti |first4=Shadi |last5=Rezaie |first5=Amirali |date=2022-08-25 |editor-last=Darba |editor-first=Araz |title=Review of Deep Learning Approaches for Thyroid Cancer Diagnosis |url=https://www.hindawi.com/journals/mpe/2022/5052435/ |journal=Mathematical Problems in Engineering |language=en |volume=2022 |pages=1–8 |doi=10.1155/2022/5052435 |issn=1563-5147}}</ref> CNNs distinguish themselves from other ML methods through their high accuracy, generalization capacity, and computational efficiency. Each patient’s histopathology images, obtained from hematoxylin-eosin (H&E) stained tissue slides, contain important quantitative data. 
Notably, Kather ''et al.''<ref name=":2">{{Cite journal |last=Kather |first=Jakob Nikolas |last2=Krisam |first2=Johannes |last3=Charoentong |first3=Pornpimol |last4=Luedde |first4=Tom |last5=Herpel |first5=Esther |last6=Weis |first6=Cleo-Aron |last7=Gaiser |first7=Timo |last8=Marx |first8=Alexander |last9=Valous |first9=Nektarios A. |last10=Ferber |first10=Dyke |last11=Jansen |first11=Lina |date=2019-01-24 |editor-last=Butte |editor-first=Atul J. |title=Predicting survival from colorectal cancer histology slides using deep learning: A retrospective multicenter study |url=https://dx.plos.org/10.1371/journal.pmed.1002730 |journal=PLOS Medicine |language=en |volume=16 |issue=1 |pages=e1002730 |doi=10.1371/journal.pmed.1002730 |issn=1549-1676 |pmc=PMC6345440 |pmid=30677016}}</ref> explored the potential of CNN-based approaches to predict disease progression directly from the available H&E images. In a retrospective study, their findings underscored the remarkable ability of CNNs to assess the human tumor microenvironment and prognosticate outcomes based on the analysis of histopathological images. This breakthrough showcases the transformative potential of such AI-based methodologies for [[Medical image computing|medical image analysis]], offering new avenues for efficient and objective diagnostic and prognostic assessments.

On the other hand, manual analysis methods are also available in the literature to classify and predict disease outcomes using H&E images. Compared to AI-based algorithms, traditional manual analysis generally performs worse. The literature highlights that the performance of traditional methods like local binary pattern (LBP) and Haralick features is poor.<ref>{{Cite journal |last=Nanni |first=Loris |last2=Lumini |first2=Alessandra |last3=Brahnam |first3=Sheryl |date=2012-02 |title=Survey on LBP based texture descriptors for image classification |url=https://linkinghub.elsevier.com/retrieve/pii/S0957417411013637 |journal=Expert Systems with Applications |language=en |volume=39 |issue=3 |pages=3634–3641 |doi=10.1016/j.eswa.2011.09.054}}</ref><ref>{{Cite journal |last=Alhindi |first=Taha J. |last2=Kalra |first2=Shivam |last3=Ng |first3=Ka Hin |last4=Afrin |first4=Anika |last5=Tizhoosh |first5=Hamid R. |date=2018-07 |title=Comparing LBP, HOG and Deep Features for Classification of Histopathology Images |url=https://ieeexplore.ieee.org/document/8489329/ |journal=2018 International Joint Conference on Neural Networks (IJCNN) |publisher=IEEE |place=Rio de Janeiro |pages=1–7 |doi=10.1109/IJCNN.2018.8489329 |isbn=978-1-5090-6014-6}}</ref> These studies emphasized that deep learning is more effective in diagnosing colorectal cancer using histopathology images, and that traditional ML methods perform poorly. The reported accuracy is 0.76 for LBP and 0.75 for Haralick features. Because LBP and Haralick features showed low accuracy in the literature, we decided to adopt a different approach and carried out this study with the [[histogram of oriented gradients]] (HoG) method. Unlike other studies in the literature, this study performs the analysis using HoG features for the first time. Our choice offers an alternative perspective to traditional methods and deep learning studies. 
The results obtained using HoG features make a new contribution to the literature by highlighting the value of HoG-based analysis on a specific data set.

Table 1 provides an overview of manual analysis and AI-based studies from various literature sources. Jiang ''et al.''<ref name=":3">{{Cite journal |last=Jiang |first=Liwen |last2=Huang |first2=Shuting |last3=Luo |first3=Chaofan |last4=Zhang |first4=Jiangyu |last5=Chen |first5=Wenjing |last6=Liu |first6=Zhenyu |date=2023-11-13 |title=An improved multi-scale gradient generative adversarial network for enhancing classification of colorectal cancer histological images |url=https://www.frontiersin.org/articles/10.3389/fonc.2023.1240645/full |journal=Frontiers in Oncology |volume=13 |pages=1240645 |doi=10.3389/fonc.2023.1240645 |issn=2234-943X |pmc=PMC10679330 |pmid=38023227}}</ref> achieved a high accuracy of 0.89 using InceptionV3 and a multi-scale gradient [[generative adversarial network]] (GAN) for classifying colorectal cancer histopathological images. Kather ''et al.''<ref name=":0" /> achieved an accuracy of 0.87 using texture-based approaches, decision trees, and support vector machines (SVMs) to analyze multiple tissue classes in colorectal cancer histology. 
Other studies include Popovici ''et al.''<ref name=":4">{{Cite journal |last=Popovici |first=Vlad |last2=Budinská |first2=Eva |last3=Čápková |first3=Lenka |last4=Schwarz |first4=Daniel |last5=Dušek |first5=Ladislav |last6=Feit |first6=Josef |last7=Jaggi |first7=Rolf |date=2016-05-11 |title=Joint analysis of histopathology image features and gene expression in breast cancer |url=https://bmcbioinformatics.biomedcentral.com/articles/10.1186/s12859-016-1072-z |journal=BMC Bioinformatics |language=en |volume=17 |issue=1 |pages=209 |doi=10.1186/s12859-016-1072-z |issn=1471-2105}}</ref> (0.84 with VGG-f from the MatConvNet library for the prediction of molecular subtypes), Xu ''et al.''<ref name=":5">{{Cite journal |last=Xu |first=Jun |last2=Luo |first2=Xiaofei |last3=Wang |first3=Guanhao |last4=Gilmore |first4=Hannah |last5=Madabhushi |first5=Anant |date=2016-05 |title=A Deep Convolutional Neural Network for segmenting and classifying epithelial and stromal regions in histopathological images |url=https://linkinghub.elsevier.com/retrieve/pii/S0925231216001004 |journal=Neurocomputing |language=en |volume=191 |pages=214–223 |doi=10.1016/j.neucom.2016.01.034 |pmc=PMC5283391 |pmid=28154470}}</ref> (0.84 using a CNN for the classification of stromal and epithelial regions in histopathology images), and Mossotto ''et al.''<ref name=":6">{{Cite journal |last=Mossotto |first=E. |last2=Ashton |first2=J. J. |last3=Coelho |first3=T. |last4=Beattie |first4=R. M. |last5=MacArthur |first5=B. D. |last6=Ennis |first6=S. |date=2017-05-25 |title=Classification of Paediatric Inflammatory Bowel Disease using Machine Learning |url=https://www.nature.com/articles/s41598-017-02606-2 |journal=Scientific Reports |language=en |volume=7 |issue=1 |pages=2427 |doi=10.1038/s41598-017-02606-2 |issn=2045-2322 |pmc=PMC5445076 |pmid=28546534}}</ref> (0.83 using an optimized SVM for the classification of inflammatory bowel disease). 
Tsai and Tao<ref name=":7">{{Cite journal |last=Tsai |first=Min-Jen |last2=Tao |first2=Yu-Han |date=2019-12 |title=Machine Learning Based Common Radiologist-Level Pneumonia Detection on Chest X-rays |url=https://ieeexplore.ieee.org/document/9008684/ |journal=2019 13th International Conference on Signal Processing and Communication Systems (ICSPCS) |publisher=IEEE |place=Gold Coast, Australia |pages=1–7 |doi=10.1109/ICSPCS47537.2019.9008684 |isbn=978-1-7281-2194-9}}</ref> reported an accuracy of 0.80 with a CNN for detecting pneumonia in chest X-rays. These results show that AI-based classification studies generally achieve high accuracy rates. The primary emphasis of these studies is on AI methods for analyzing histopathological images, with a particular focus on CNNs. These networks have demonstrated high precision in a wide range of medical applications and remarkable outcomes in cancer diagnosis and screening. CNNs provide substantial benefits compared to conventional approaches, owing to their ability to handle and evaluate intricate histological data. They also excel at detecting patterns, textures, and structures in high-resolution images, thereby complementing or, in certain instances, even substituting for the human review processes of pathologists. The promise of these AI-based techniques to change the field of medical image processing is well acknowledged.

{|
| style="vertical-align:top;" |
{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="100%"
|-
| colspan="4" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 1.''' Overview of the literature on manual analysis and AI-based studies.
|-
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Author
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Aim of research
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Method
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Accuracy metric
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |Jiang ''et al.''<ref name=":3" />
| style="background-color:white; padding-left:10px; padding-right:10px;" |Colorectal cancer histopathological images classification
| style="background-color:white; padding-left:10px; padding-right:10px;" |InceptionV3 multi-scale gradient generative adversarial network
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.89
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |Kather ''et al.''<ref name=":0" />
| style="background-color:white; padding-left:10px; padding-right:10px;" |Analysis of multiple classes of textures in colorectal cancer histology
| style="background-color:white; padding-left:10px; padding-right:10px;" |Texture-based approaches, decision trees, and SVMs
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.87
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |Popovici ''et al.''<ref name=":4" />
| style="background-color:white; padding-left:10px; padding-right:10px;" |Prediction of molecular subtypes
| style="background-color:white; padding-left:10px; padding-right:10px;" |VGG-f (MatConvNet library)
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.84
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |Xu ''et al.''<ref name=":5" />
| style="background-color:white; padding-left:10px; padding-right:10px;" |Classifying the stromal and epithelial sections of histopathology pictures
| style="background-color:white; padding-left:10px; padding-right:10px;" |CNN
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.84
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |Mossotto ''et al.''<ref name=":6" />
| style="background-color:white; padding-left:10px; padding-right:10px;" |Classification of inflammatory bowel disease
| style="background-color:white; padding-left:10px; padding-right:10px;" |Optimized SVM
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.83
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |Sena ''et al.''<ref>{{Cite journal |last=Sena |first=Paola |last2=Fioresi |first2=Rita |last3=Faglioni |first3=Francesco |last4=Losi |first4=Lorena |last5=Faglioni |first5=Giovanni |last6=Roncucci |first6=Luca |date=2019-09-27 |title=Deep learning techniques for detecting preneoplastic and neoplastic lesions in human colorectal histological images |url=http://www.spandidos-publications.com/10.3892/ol.2019.10928 |journal=Oncology Letters |doi=10.3892/ol.2019.10928 |issn=1792-1074}}</ref>
| style="background-color:white; padding-left:10px; padding-right:10px;" |Tumor tissue classification
| style="background-color:white; padding-left:10px; padding-right:10px;" |Custom CNN (4CL, 3FC)
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.81
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |Tsai and Tao<ref name=":7" />
| style="background-color:white; padding-left:10px; padding-right:10px;" |Chest X-ray pneumonia detection
| style="background-color:white; padding-left:10px; padding-right:10px;" |CNN
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.80
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |Shapcott ''et al.''<ref>{{Cite journal |last=Shapcott |first=Mary |last2=Hewitt |first2=Katherine J. |last3=Rajpoot |first3=Nasir |date=2019-03-27 |title=Deep Learning With Sampling in Colon Cancer Histology |url=https://www.frontiersin.org/article/10.3389/fbioe.2019.00052/full |journal=Frontiers in Bioengineering and Biotechnology |volume=7 |pages=52 |doi=10.3389/fbioe.2019.00052 |issn=2296-4185 |pmc=PMC6445856 |pmid=30972333}}</ref>
| style="background-color:white; padding-left:10px; padding-right:10px;" |Classification of nuclei
| style="background-color:white; padding-left:10px; padding-right:10px;" |CNN based on Tensorflow "ciFar" model
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.76
|-
|}
|}

==Materials and methods==

===Dataset===

Our research used two separate datasets, one for training and one for testing. The training dataset (NCT-CRC-HE-100K) was compiled from the pathology archives of the NCT Biobank (National Center for Tumor Diseases, Germany) and includes records from 86 patients. The testing dataset (NCT-VAL-HE-7K) was generated by the University Medical Center Mannheim (UMM), Germany<ref name=":2" /><ref>{{Citation |last=Kather |first=Jakob Nikolas |last2=Halama |first2=Niels |last3=Marx |first3=Alexander |date=2018-04-07 |title=100,000 Histological Images Of Human Colorectal Cancer And Healthy Tissue |url=https://zenodo.org/record/1214456 |work=Zenodo |publisher= |doi=10.5281/zenodo.1214456 |accessdate=2024-05-21}}</ref>, and includes data from 50 patients. Both datasets are open-source image collections derived from formalin-fixed paraffin-embedded tissues of colorectal cancer. The training set consists of 100,000 high-resolution H&E (hematoxylin and eosin) images.

The test set comprises 7,180 non-overlapping sub-images (image patches). Each sub-image is 224x224 pixels at a resolution of 0.5 microns per pixel. The dataset covers nine distinct tissue classes, ranging from adipose tissue to lymphocytes, mucus, and cancer epithelial cells. Table 2 presents the distribution of images within the training and test datasets by tissue class. For instance, the training dataset contains 14,317 samples in the colorectal adenocarcinoma epithelium (TUM) class, while the testing dataset contains 1,233 samples for this class. These statistics show the data distribution and the relative size of each class within the study, and they form the foundation for our subsequent analyses and model development.

{|
| style="vertical-align:top;" |
{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="100%"
|-
| colspan="3" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 2.''' The number of H&E images in the training and test sets used in the study. ADI - adipose; BACK - background; DEB - debris; LYM - lymphocytes; MUC - mucus; MUS - smooth muscle; NORM - normal colonic mucosa; STR - cancer-associated stroma; TUM - colorectal adenocarcinoma epithelium.
|-
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Classes
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |# of images in training set
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |# of images in test set
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |ADI
| style="background-color:white; padding-left:10px; padding-right:10px;" |10,407
| style="background-color:white; padding-left:10px; padding-right:10px;" |1,338
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |BACK
| style="background-color:white; padding-left:10px; padding-right:10px;" |10,566
| style="background-color:white; padding-left:10px; padding-right:10px;" |847
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |DEB
| style="background-color:white; padding-left:10px; padding-right:10px;" |11,512
| style="background-color:white; padding-left:10px; padding-right:10px;" |339
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |LYM
| style="background-color:white; padding-left:10px; padding-right:10px;" |11,557
| style="background-color:white; padding-left:10px; padding-right:10px;" |634
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |MUC
| style="background-color:white; padding-left:10px; padding-right:10px;" |8,896
| style="background-color:white; padding-left:10px; padding-right:10px;" |1,035
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |MUS
| style="background-color:white; padding-left:10px; padding-right:10px;" |13,536
| style="background-color:white; padding-left:10px; padding-right:10px;" |592
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |NORM
| style="background-color:white; padding-left:10px; padding-right:10px;" |8,763
| style="background-color:white; padding-left:10px; padding-right:10px;" |741
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |STR
| style="background-color:white; padding-left:10px; padding-right:10px;" |10,446
| style="background-color:white; padding-left:10px; padding-right:10px;" |421
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |TUM
| style="background-color:white; padding-left:10px; padding-right:10px;" |14,317
| style="background-color:white; padding-left:10px; padding-right:10px;" |1,233
|-
|}
|}
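
As a quick consistency check, the per-class counts in Table 2 can be tallied to confirm the overall dataset sizes and to see how unevenly the classes are represented. The sketch below only copies the counts from Table 2; the variable and function names are illustrative and are not part of the original study.

```python
# Per-class image counts copied from Table 2: (training set, test set).
counts = {
    "ADI": (10407, 1338),
    "BACK": (10566, 847),
    "DEB": (11512, 339),
    "LYM": (11557, 634),
    "MUC": (8896, 1035),
    "MUS": (13536, 592),
    "NORM": (8763, 741),
    "STR": (10446, 421),
    "TUM": (14317, 1233),
}

train_total = sum(train for train, _ in counts.values())
test_total = sum(test for _, test in counts.values())


def class_shares(split_index):
    """Return each class's fraction of one split (0 = training, 1 = test)."""
    total = sum(c[split_index] for c in counts.values())
    return {name: c[split_index] / total for name, c in counts.items()}


if __name__ == "__main__":
    print("training images:", train_total)
    print("test images:", test_total)
    for name, share in class_shares(1).items():
        print(f"{name}: {share:.1%} of the test set")
```

The tallies come to 100,000 training images and 7,180 test images. The per-class shares also show that the test set is imbalanced (ADI is the largest test class and DEB the smallest), which is worth keeping in mind when reading a single overall accuracy figure.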

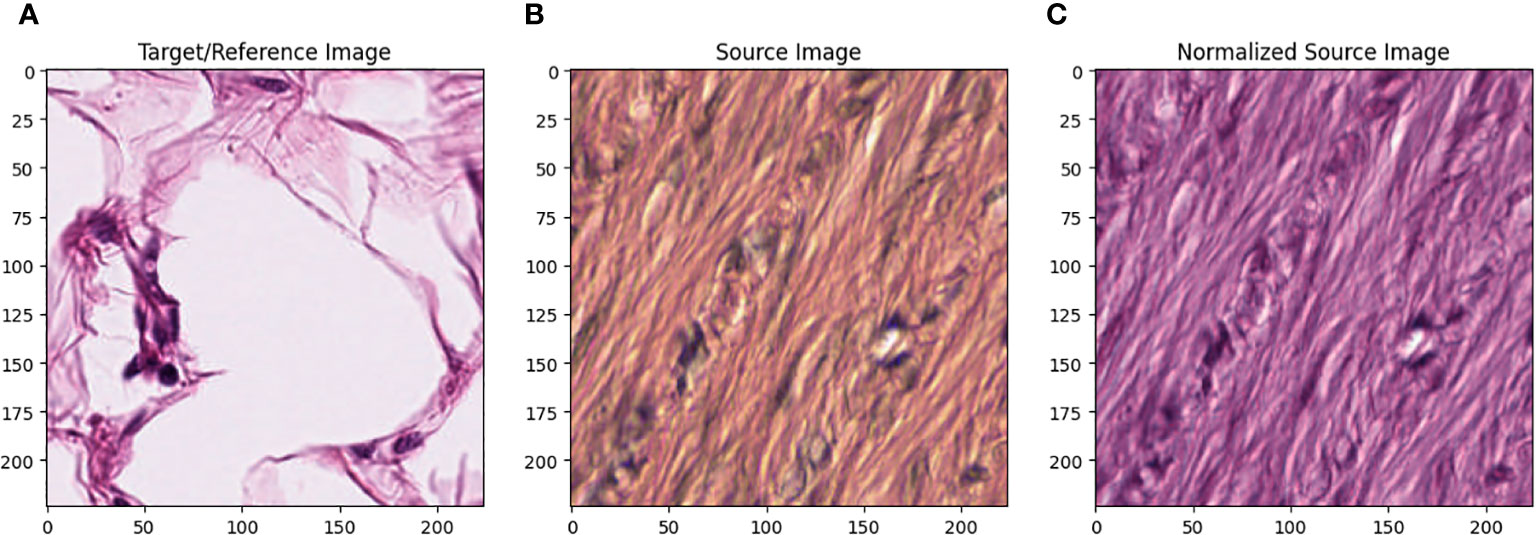

All images in the training set were normalized using the Macenko method.<ref>{{Cite web |title=A method for normalizing histology slides for quantitative analysis {{!}} IEEE Conference Publication {{!}} IEEE Xplore |work=ieeexplore.ieee.org |url=https://ieeexplore.ieee.org/document/5193250/ |doi=10.1109/isbi.2009.5193250 |accessdate=2024-05-21}}</ref> Figure 1 illustrates the effect of Macenko normalization on sample images. The torchstain library<ref>{{Cite web |last=Barbano, C.A.; Pedersen, A. |date=02 March 2023 |title=torchstain 1.3.0 |work=pypi.org |url=https://pypi.org/project/torchstain/ |accessdate=16 November 2023}}</ref>, which supports a PyTorch-based approach, is available for color normalization of images using the Macenko method.

[[File:Fig1 Dogan FrontOnc2024 13.jpg|1100px]]

{{clear}}

{|
| style="vertical-align:top;" |
{| border="0" cellpadding="5" cellspacing="0" width="1100px"
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 1.''' '''(A)''' Target/reference image, '''(B)''' source image, and '''(C)''' normalized source image.</blockquote>
|-
|}
|}

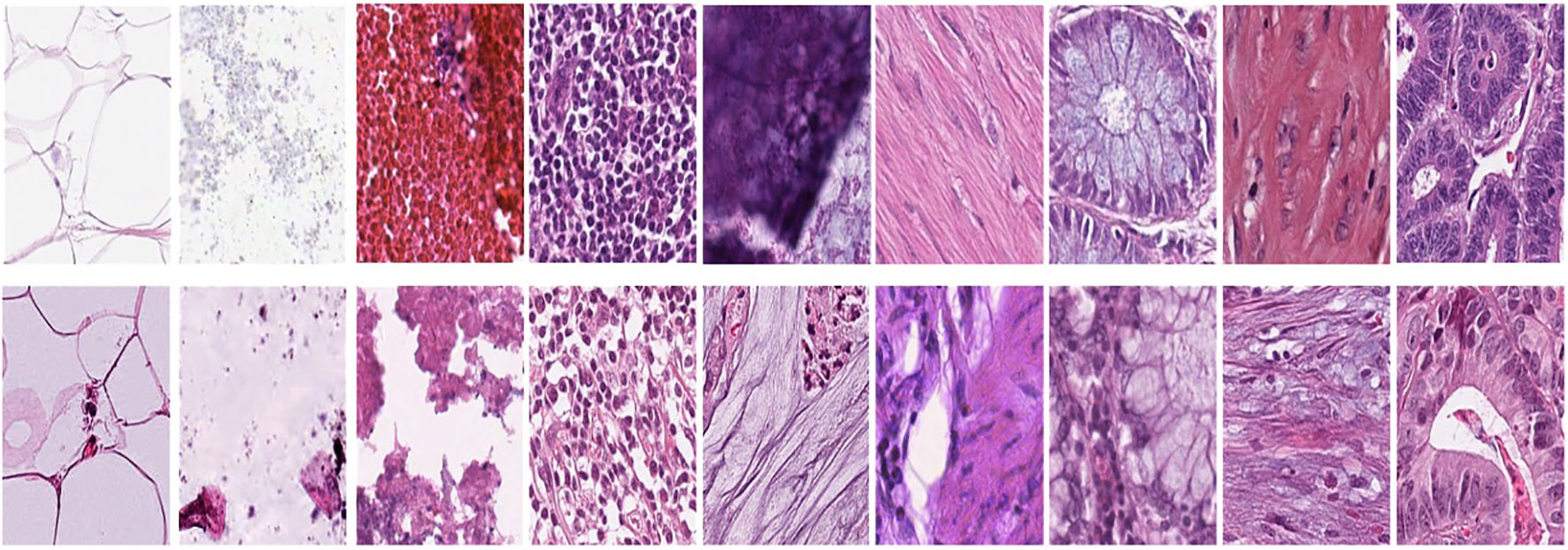

Figure 1A shows the target/reference image for this method, while Figure 1B shows a source image. Macenko normalization aims to make the color distribution of the source images compatible with that of the target image. In the example shown, normalizing the source image (Figure 1B) with the target image (Figure 1A) as a reference reduces color mismatches and yields a more consistent color profile, as seen in Figure 1C. This makes it possible to obtain more reliable results in machine learning and image analysis applications. Normalization was performed on the dataset on which the model was trained, and applying the same normalization to the test set could increase the model’s generalization ability. However, the test set represents real-world conditions and consists of images routinely obtained in the pathology department. Because we wanted a clinically meaningful model that copes with different color conditions, normalization was not applied to the test set. In this way, we also investigated the effect of color normalization on classifying different types of tissue. The original images of the nine tissue classes, shown in the first row of Figure 2, differ substantially in stain color, whereas the second row of Figure 2 shows their normalized versions, which are transformed to the same average intensity level.
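
The color mapping behind this kind of stain normalization can be summarized in a few lines of notation. The following is a sketch of the general approach as it is commonly described, not a specification of the settings used in this study; all symbols are introduced here for illustration. Each RGB pixel intensity <math>I</math> is first converted to optical density relative to a background intensity <math>I_0</math>, and the optical density is modeled as a mixture of two stain vectors (hematoxylin and eosin):

:<math>\mathrm{OD} = -\ln\left(\frac{I}{I_0}\right), \qquad \mathrm{OD} \approx V_{\mathrm{src}} \, C</math>

Here <math>V_{\mathrm{src}}</math> is the 3 x 2 matrix of stain vectors estimated from the source image and <math>C</math> holds the two per-pixel stain concentrations. The normalized image is then rebuilt with the stain vectors of the reference image, after rescaling each concentration channel so that its robust maximum (for example, a high percentile) matches that of the reference:

:<math>I_{\mathrm{norm}} = I_0 \exp\left(-V_{\mathrm{ref}} \, \tilde{C}\right), \qquad \tilde{C}_k = C_k \, \frac{c^{\mathrm{ref}}_k}{c^{\mathrm{src}}_k}, \quad k \in \{\mathrm{H}, \mathrm{E}\}</math>

where <math>c^{\mathrm{ref}}_k</math> and <math>c^{\mathrm{src}}_k</math> denote the robust maximum concentrations of stain <math>k</math> in the reference and source images. The base of the logarithm and the exact percentile are implementation conventions and are assumptions in this sketch.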

[[File:Fig2 Dogan FrontOnc2024 13.jpg|1100px]] | |||

{{clear}} | |||

{| | |||

| style="vertical-align:top;" | | |||

{| border="0" cellpadding="5" cellspacing="0" width="1100px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 2.''' First row represents Adipose (ADI), background (BACK), debris (DEB), lymphocytes (LYM), mucus (MUC), smooth muscle (MUS), normal colonic mucosa (NORM), cancer-associated stroma (STR), and colorectal adenocarcinoma epithelium (TUM). The second row data set was obtained by applying normalization to the same tissue examples in the first row.</blockquote> | |||

|- | |||

|} | |||

|} | |||
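
Macenko normalization works on optical density rather than raw RGB values. The following is a minimal sketch of only that first step (the Beer-Lambert conversion and its inverse), not of the full method or of the torchstain implementation. The background intensity of 240 and the sample pixel are assumptions chosen for illustration.

```python
import math

IO = 240  # assumed background (transmitted light) intensity; a common default, not from the paper

def rgb_to_od(pixel, io=IO):
    """Convert one 8-bit RGB pixel to optical density (Beer-Lambert law)."""
    return tuple(-math.log((c + 1) / io) for c in pixel)

def od_to_rgb(od, io=IO):
    """Invert rgb_to_od, clipping the result to the valid 8-bit range."""
    return tuple(min(255, max(0, round(io * math.exp(-d) - 1))) for d in od)

pink = (230, 150, 190)      # hypothetical eosin-like pixel
od = rgb_to_od(pink)        # darker channels give larger optical densities
restored = od_to_rgb(od)    # round trip back to RGB
```

In the full method, the stain vectors are estimated in this optical density space, and the source image's stain concentrations are rescaled to match those of the target image before converting back to RGB.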

===Manual analysis algorithm=== | |||

In traditional manual analysis, the classification process was emphasized by extracting HoG features. HoG features represent a class of local descriptors that extract crucial characteristics from images and videos. They have found typical applications across various domains, encompassing tasks such as image classification, object detection, and object tracking.<ref>{{Cite journal |last=Tanjung |first=Juliansyah Putra |last2=Muhathir |first2=Muhathir |date=2020-01-20 |title=Classification of facial expressions using SVM and HOG |url=https://ojs.uma.ac.id/index.php/jite/article/view/3182 |journal=JOURNAL OF INFORMATICS AND TELECOMMUNICATION ENGINEERING |volume=3 |issue=2 |pages=210–215 |doi=10.31289/jite.v3i2.3182 |issn=2549-6255}}</ref><ref>{{Cite journal |last=Dept. of Computer Science, College of Science, University of Duhok, Duhok, Kurdistan Region, Iraq |last2=Mohammed |first2=Mohammed G. |last3=Melhum |first3=Amera I. |date=2020-10-05 |title=Implementation of HOG Feature Extraction with Tuned Parameters for Human Face Detection |url=http://www.ijmlc.org/index.php?m=content&c=index&a=show&catid=109&id=1160 |journal=International Journal of Machine Learning and Computing |volume=10 |issue=5 |pages=654–661 |doi=10.18178/ijmlc.2020.10.5.987}}</ref> | |||

The following parameters were used to extract HoG features: | |||

*Number of orientations: nine; this is the number of gradient directions calculated in each cell. | |||

*Pixels per cell: each cell consists of 10x10 pixels.

*Cells per block: each block contains 2x2 cells.

*Square-root compression and block normalization: Using the `transform_sqrt=True` and `block_norm="L1"` options, square-root (power-law) compression and L1 norm-based block normalization were performed to reduce lighting and shading effects. The resulting features are more robust and amenable to comparison, especially under variable lighting conditions. This can improve the model's overall performance in image recognition and classification tasks.

Using these parameters increases the efficiency and accuracy of the HoG feature extraction process, thus ensuring high performance in colorectal cancer tissue classification. | |||
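
To illustrate why these two options reduce lighting effects, here is a minimal pure-Python sketch of square-root compression and L1 block normalization. It is not the library code used in the study. The block values and the small constant `eps` are assumptions for illustration.

```python
import math

def power_law_compress(image):
    """Square-root (gamma) compression of non-negative pixel intensities."""
    return [[math.sqrt(p) for p in row] for row in image]

def l1_normalize(block, eps=1e-5):
    """Scale a block's concatenated histogram so its absolute values sum to ~1."""
    total = sum(abs(v) for v in block) + eps
    return [v / total for v in block]

bright = [4.0, 8.0, 0.0, 4.0]          # hypothetical block histogram
dim = [v * 0.25 for v in bright]       # same pattern under weaker lighting

norm_bright = l1_normalize(bright)
norm_dim = l1_normalize(dim)           # nearly identical to norm_bright
```

After normalization, the bright and dim blocks give almost the same descriptor, which is the robustness to lighting described above.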

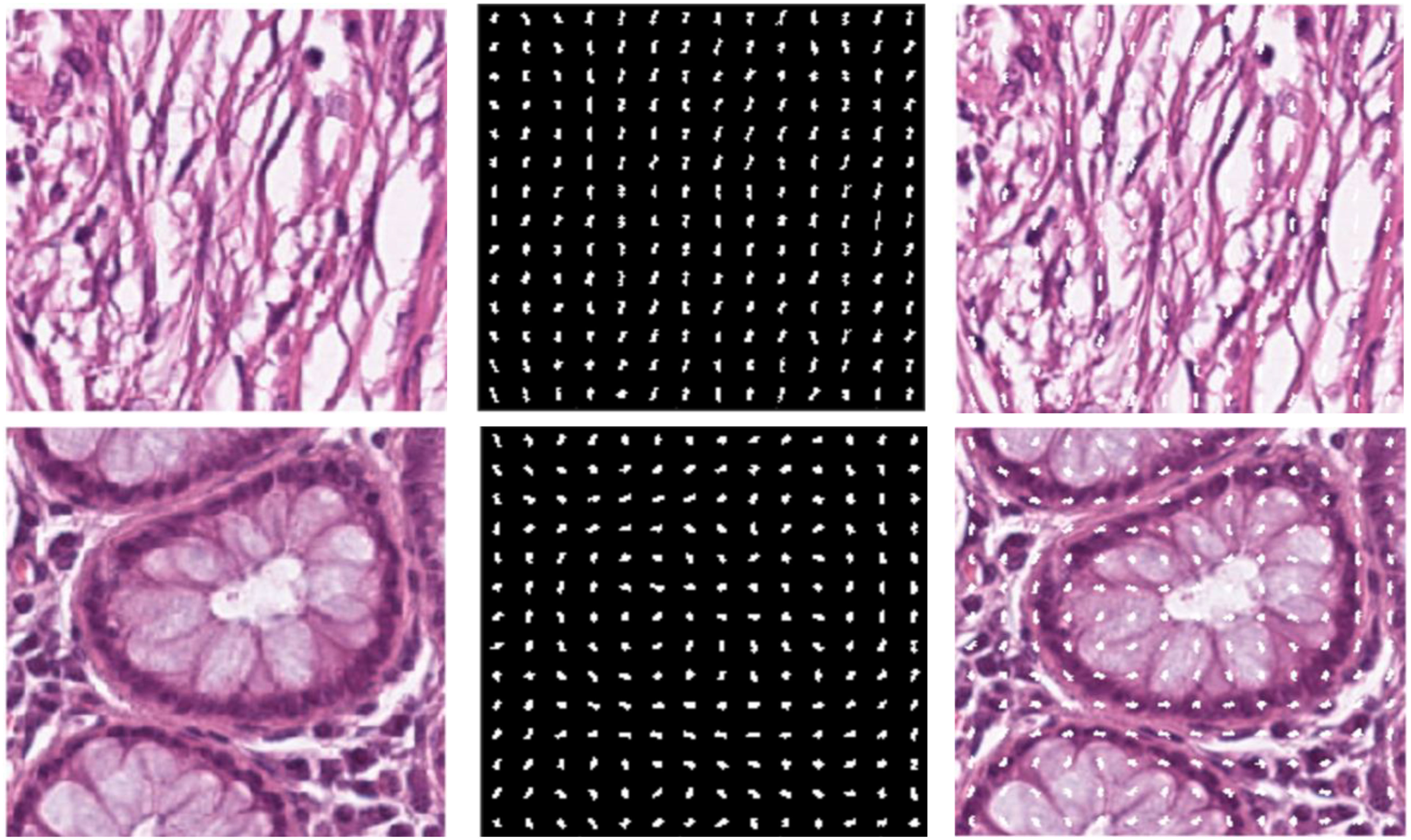

We chose HoG features, one of the local descriptors, because HoG processes the image by dividing it into small regions called cells. As illustrated in Figure 3, the cells are created from the original images. In the created cells, the gradients of the pixels in the x-direction (S<sub>x</sub>) and the y-direction (S<sub>y</sub>) are calculated, and the gradient magnitude S is obtained as such:

:<math>S = \sqrt{{S_{x}}^{2} + {S_{y}}^{2}}</math> | |||

The gradient direction θ is computed using the computed gradients as such<ref>{{Cite journal |last=Dalal |first=N. |last2=Triggs |first2=B. |date=2005 |title=Histograms of Oriented Gradients for Human Detection |url=http://ieeexplore.ieee.org/document/1467360/ |journal=2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR'05) |publisher=IEEE |place=San Diego, CA, USA |volume=1 |pages=886–893 |doi=10.1109/CVPR.2005.177 |isbn=978-0-7695-2372-9}}</ref>: | |||

:<math>\theta = \tan^{-1}\left(\frac{S_{y}}{S_{x}}\right)</math>

[[File:Fig3 Dogan FrontOnc2024 13.jpg|800px]] | |||

{{clear}} | |||

{| | |||

| style="vertical-align:top;" | | |||

{| border="0" cellpadding="5" cellspacing="0" width="800px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 3.''' '''(A)''' Original image. '''(B)''' Extracted HoG features from the image. '''(C)''' HoG features shown on the original image.</blockquote> | |||

|- | |||

|} | |||

|} | |||
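
The gradient computation for a single pixel can be sketched as follows. This is an illustrative sketch that assumes central differences, the common convention of measuring the angle as arctan2(S<sub>y</sub>, S<sub>x</sub>), unsigned orientations over 0 to 180 degrees, and hard assignment to one of nine bins without interpolation. The 3x3 patch is hypothetical.

```python
import math

def gradients(image, r, c):
    """Central-difference gradients at pixel (r, c) of a 2-D list of intensities."""
    sx = image[r][c + 1] - image[r][c - 1]   # horizontal change
    sy = image[r + 1][c] - image[r - 1][c]   # vertical change
    return sx, sy

def magnitude_and_bin(sx, sy, orientations=9):
    """Return gradient magnitude and the unsigned-orientation bin (0..orientations-1)."""
    magnitude = math.hypot(sx, sy)
    angle = math.degrees(math.atan2(sy, sx)) % 180.0   # unsigned gradient: 0 <= angle < 180
    bin_width = 180.0 / orientations
    return magnitude, int(angle // bin_width) % orientations

patch = [
    [10, 10, 10],
    [10, 20, 40],
    [10, 50, 40],
]
sx, sy = gradients(patch, 1, 1)        # sx = 40 - 10, sy = 50 - 10
mag, idx = magnitude_and_bin(sx, sy)   # magnitude 50, angle of about 53 degrees
```

Each pixel then votes for its orientation bin, typically weighted by its magnitude, to build the histogram of its cell.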

After calculating the gradients, a histogram is computed for each cell, and the cell histograms are combined to form blocks. Normalization is performed on the blocks to reduce lighting and shading effects. All images in the set were initially standardized to a dimension of 224x224. To enhance the classification performance of the derived features and to optimize the calculation time, the images were then resized to 200x200 pixels using bilinear interpolation. In particular, a 224x224 image produces a feature vector of 7,200 elements, while a 200x200 image processed the same way produces a feature vector of 5,832 elements. The feature vector size is given by:

:<math>\text{Feature vector size} = N \times \left( \frac{W}{C_{W}} - CpB_{x} + 1 \right) \times \left( \frac{H}{C_{H}} - CpB_{y} + 1 \right) \times \text{Orientations} \times CpB_{x} \times CpB_{y}</math>

... where N is the number of pixels in the image; W and H are the image's width and height, respectively; C<sub>W</sub> (cell width) and C<sub>H</sub> (cell height) define the cell dimensions; CpB<sub>x</sub> and CpB<sub>y</sub> are the number of cells per block in each direction; and Orientations is the number of gradient directions calculated for each cell in the HoG feature vector. Dimensionality reduction not only reduces computational time; smaller feature vectors can also reduce memory usage and the overall complexity of the model. This shortens model training and prediction times, especially when working on large data sets.
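
The formula can be evaluated with a few lines of Python. In this sketch, N is treated as a plain multiplier and set to 1 (an assumption), and cell counts are taken as whole numbers. The check uses the classic 64x128 detection window of Dalal and Triggs, not the images from this study.

```python
def hog_feature_length(width, height, cell_w, cell_h, cpb_x, cpb_y, orientations, n=1):
    """Evaluate the feature-vector-size formula above (integer cell counts assumed)."""
    blocks_x = width // cell_w - cpb_x + 1    # block positions along the width
    blocks_y = height // cell_h - cpb_y + 1   # block positions along the height
    return n * blocks_x * blocks_y * orientations * cpb_x * cpb_y

# Classic 64x128 window: 8x8-pixel cells, 2x2-cell blocks, 9 orientation bins.
length = hog_feature_length(64, 128, 8, 8, 2, 2, 9)   # 7 * 15 * 9 * 2 * 2
```

Because the block count grows with both image dimensions, even a modest reduction in image size noticeably shortens the feature vector.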

This study preferred the [[random forest]] (RF) algorithm as the ML model for colorectal cancer tissue classification. RF is an ensemble learning method that creates a robust and generalizable model by combining multiple decision trees. The main reasons for this choice are that RF performs well on different data sets, works effectively on large, complex, and high-dimensional data, and is resilient to noise and anomalies in the data set. It can also evaluate relationships between variables, increasing the stability of the model, and it helps prevent the model from overfitting the training data. These features support the suitability of the RF algorithm for colorectal cancer tissue classification. Based on preliminary tests and analyses, RF was judged to be the most suitable model for obtaining reliable results.

RF applications are practical tools for biomedical imaging and tissue analysis, offering high-dimensional data processing ability, accuracy, and robustness. Challenges to this implementation, such as computational efficiency, potential overfitting, and especially interpretability and explainability, are also significant. The literature states that the algorithm is feasible and considers it a way to increase security, especially for the future of medicine and clinical research. Notably, comprehensive feature selection plays a critical role in learning and makes the consolidated results more accurate and robust, which is extremely important for increasing efficiency in medicine and clinical research.<ref>{{Cite journal |last=Plass |first=Markus |last2=Kargl |first2=Michaela |last3=Kiehl |first3=Tim‐Rasmus |last4=Regitnig |first4=Peter |last5=Geißler |first5=Christian |last6=Evans |first6=Theodore |last7=Zerbe |first7=Norman |last8=Carvalho |first8=Rita |last9=Holzinger |first9=Andreas |last10=Müller |first10=Heimo |date=2023-07 |title=Explainability and causability in digital pathology |url=https://pathsocjournals.onlinelibrary.wiley.com/doi/10.1002/cjp2.322 |journal=The Journal of Pathology: Clinical Research |language=en |volume=9 |issue=4 |pages=251–260 |doi=10.1002/cjp2.322 |issn=2056-4538 |pmc=PMC10240147 |pmid=37045794}}</ref><ref>{{Citation |last=Pfeifer |first=Bastian |last2=Holzinger |first2=Andreas |last3=Schimek |first3=Michael G. |date=2022-05-25 |editor-last=Séroussi |editor-first=Brigitte |editor2-last=Weber |editor2-first=Patrick |editor3-last=Dhombres |editor3-first=Ferdinand |editor4-last=Grouin |editor4-first=Cyril |editor5-last=Liebe |editor5-first=Jan-David |title=Robust Random Forest-Based All-Relevant Feature Ranks for Trustworthy AI |url=https://ebooks.iospress.nl/doi/10.3233/SHTI220418 |work=Studies in Health Technology and Informatics |publisher=IOS Press |doi=10.3233/shti220418 |isbn=978-1-64368-284-6 |accessdate=2024-05-21}}</ref>

===AI-based automation=== | |||

In this study, a CNN architecture was developed for the image classification problem. The model aimed to achieve high performance with low complexity through specific parameters and layers. We used a simple CNN-based architecture and trained it on the same H&E images used in the manual analysis part. We aim to compare the performance of manual analysis and AI-based automation methods in classifying colorectal cancer tissue images. Table 3 shows the parameter and structure information of the CNN model.

{| | |||

| style="vertical-align:top;" | | |||

{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="100%" | |||

|- | |||

| colspan="3" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 3.''' Details, complexity, and hyperparameters of the simple CNN architecture we built for the AI automation approach. ReLU - rectified linear unit. | |||

|- | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |CNN layers | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Parameters and explanations | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Complexity | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |1. Image input | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |28x28x3 images with "zscore" normalization | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Low (preprocessing step) | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |2. Convolution | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Eight 3x3 convolutions with stride [1 1] and padding "same" | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Moderate (only eight filters) | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |3. Batch normalization | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Batch normalization | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Low to moderate | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |4. ReLU | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |ReLU | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Low | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |5. Max pooling | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |2x2 max pooling with stride [2 2] and padding [0 0 0 0] | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Low | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |6. Convolution | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Sixteen 3x3 convolutions with stride [1 1] and padding "same" | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Moderate | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |7. Batch normalization | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Batch normalization | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Low to moderate | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |8. ReLU | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |ReLU | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Low | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |9. Max pooling | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |2x2 max pooling with stride [2 2] and padding [0 0 0 0] | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Low | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |10. Convolution | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Thirty-two 3x3 convolutions with stride [1 1] and padding "same" | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Higher due to the increased number of filters | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |11. Batch normalization | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Batch normalization | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Low to moderate | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |12. ReLU | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |ReLU | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Low | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |13. Fully connected | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Three fully connected layers | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |High | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |14. Softmax | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |softmax | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Low | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |15. Loss function | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |crossentropyex

| style="background-color:white; padding-left:10px; padding-right:10px;" |Low | |||

|- | |||

|} | |||

|} | |||
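
As a rough check on the layer order listed in Table 3, the following sketch traces how the feature-map shape changes through the network. It assumes that batch normalization and ReLU leave the shape unchanged and that pooling follows only the first two convolution blocks, as the table lists. The helper names are hypothetical.

```python
def conv_same(shape, filters):
    """3x3 convolution, stride 1, "same" padding: spatial size kept, channels -> filters."""
    h, w, _ = shape
    return (h, w, filters)

def max_pool_2x2(shape):
    """2x2 max pooling, stride 2, no padding: spatial size halved (floor)."""
    h, w, c = shape
    return (h // 2, w // 2, c)

shape = (28, 28, 3)                  # image input layer
trace = [shape]
for filters, pooled in [(8, True), (16, True), (32, False)]:
    shape = conv_same(shape, filters)        # batch norm and ReLU keep the shape
    if pooled:
        shape = max_pool_2x2(shape)
    trace.append(shape)

flattened = shape[0] * shape[1] * shape[2]   # inputs seen by the first fully connected layer
```

Under these assumptions, the 28x28x3 input shrinks to a 7x7x32 feature map before the fully connected layers.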

In the manual analysis section, features were first extracted from the images, and the model was trained on these features. In contrast, in the AI automation part we used the images directly as input, then created a model suitable for the purpose and carried out the training process. In the AI automation approach, we used local filters, intermediate steps, and a multilayer artificial neural network model to train the base CNN model (Table 3).<ref name=":1" /> This table explains the layers, structures, and hyperparameters of the CNN model used in the AI automation section. The model takes images directly as input and applies essential operations such as sequential convolution, batch normalization, and rectified linear unit (ReLU) activation. Finally, it classifies the results with softmax activation and a cross-entropy-based loss function. This model is aimed at classifying colorectal cancer tissue images, and it has played an important role in comparing the performance of manual analysis and AI automation methods.

This AI automation model is designed to extract and classify features in histopathological images. In the first layer, the model takes color (RGB) images of 28x28 pixels as input. Input images are processed with "zscore" normalization, which brings the mean of the data to zero and its standard deviation to one. The model's architecture then includes a series of convolutional layers, batch normalization, and ReLU activation functions. Convolution layers move over the image to extract feature maps and highlight important features. Batch normalization helps train the network faster and provides more stable performance. ReLU activation functions set negative values to zero, increasing the learning ability of the model. Maximum pooling layers shrink the feature maps and increase the model's scalability. Through these layers, the model learns features of increasing complexity, up to high-level features. Finally, the model uses fully connected layers to assign learned features to specific classes and applies the softmax activation function.

The softmax function provides a probability distribution over the classes. The cross-entropy loss function optimizes the learning of the model against the correct classification labels, and it is one example of the techniques that can be used to optimize the training process. As a result, this AI automation model can improve feature extraction and classification capabilities in histopathology image classification tasks.
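
The two ends of the network described above, input normalization and the softmax output, can be sketched in plain Python. This is an illustration only. It assumes the population standard deviation for the z-score, and the input values and class scores are hypothetical.

```python
import math

def zscore(values):
    """Shift to zero mean and scale to unit (population) standard deviation."""
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    return [(v - mean) / std for v in values]

def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    shift = max(scores)                       # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

pixels = zscore([10.0, 20.0, 30.0, 40.0])     # hypothetical intensities
probs = softmax([2.0, 1.0, 0.1])              # hypothetical scores for three classes
```

The largest score receives the largest probability, and the probabilities can be read as the model's confidence in each class.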

The parameters used to train the model were selected based on starting values commonly accepted in the literature.<ref>{{Cite journal |last=Fardad |first=Mohammad |last2=Mianji |first2=Elham Mohammadzadeh |last3=Muntean |first3=Gabriel-Miro |last4=Tal |first4=Irina |date=2022-06-15 |title=A Fast and Effective Graph-Based Resource Allocation and Power Control Scheme in Vehicular Network Slicing |url=https://ieeexplore.ieee.org/document/9828750/ |journal=2022 IEEE International Symposium on Broadband Multimedia Systems and Broadcasting (BMSB) |publisher=IEEE |place=Bilbao, Spain |pages=1–6 |doi=10.1109/BMSB55706.2022.9828750 |isbn=978-1-6654-6901-2}}</ref> In the early stages of the training process, we tried varying initial learning rates to achieve gradual improvement. Maximum epoch counts between 50 and 100 were considered, allowing the model to follow the training data over a long period. At each epoch, the training data were shuffled so that learning was not affected by the order in which samples had previously been presented. We tried batch sizes ranging from 32 to 64 to ensure uniform processing of the samples. We also set aside validation data and determined the evaluation frequency, so that the model was evaluated continuously throughout the training process. By detecting and limiting overfitting in this way, the model achieved greater generalizability and more reliable results. These parameters were determined through rigorous testing and a trial-and-error approach while monitoring the model's performance. Our findings show that the selected parameters yielded the best results among those tested and that the model learns effectively from the dataset.

During the last training session, we fixed the exact parameter values that led to the successful training of the model. We set the initial learning rate to 0.01 and the maximum number of epochs to 50, where an epoch is one complete pass across the entire dataset. The training data were shuffled after each epoch. The batch size was set to 64, meaning that 64 data samples are processed together. Validation was performed every 20 epochs. These values reflect the specific scenario in which the model was trained. We carefully chose these parameters for the model to achieve the necessary level of success and assure the best possible fit to the data set.

In this study, we developed a CNN model to extract essential features from histopathology images and classify them. The model takes 28x28x3 RGB images as input, processing them with "zscore" normalization. The model structure includes three 3x3 convolution layers containing 8, 16, and 32 filters. Batch normalization and the ReLU activation function are applied after each convolution layer. Additionally, 2x2 max pooling layers follow the first two convolution blocks (Table 3). In the final stages of the model, there are three fully connected layers, followed by the softmax activation function. In terms of training parameters, explained in Table 4, the model is trained with an initial learning rate of 0.01 and works on the data set for a maximum of 50 epochs. Data shuffling is applied at the end of each epoch, and 64 is selected as the batch size. The performance and generalization ability of the model are monitored with validation dataset evaluations performed every 20 epochs. These parameters were chosen so that the model adapts well to the data set and reaches the desired level of success while avoiding problems such as overfitting during training. With this configuration, the model has the feature extraction and classification capabilities needed to produce effective and accurate results in histopathological image classification.

{| | |||

| style="vertical-align:top;" | | |||

{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="100%" | |||

|- | |||

| colspan="2" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 4.''' The CNN-based automation model training parameters. | |||

|- | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Training parameter | |||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Value | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Initial learning rate | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |0.01 | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Maximum number of epochs | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |50 | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Data shuffle | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |After each epoch | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Batch size | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |64 | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Validation data and evaluation frequency

| style="background-color:white; padding-left:10px; padding-right:10px;" |Every 20 epochs | |||

|- | |||

|} | |||

|} | |||

The cross-entropy loss calculates the difference between the probability distribution that the model predicts and the probability distribution of the actual labels. The formula for binary classification is as follows: | |||

:<math>L(y, p) = -\left( y \log(p) + (1 - y) \log(1 - p) \right)</math>

... where L represents the loss function, y represents the actual label value (1 or 0), and p represents the probability predicted by the model. | |||

The formula for multiclass classification is usually: | |||

:<math>L = -\sum\limits_{c = 1}^{M} y_{o,c} \log(p_{o,c})</math>

... where M denotes the number of classes, and y<sub>o,c</sub> is the binary indicator (1 or 0) of whether or not instance o belongs to class c: if the instance belongs to class c, this value is 1; otherwise, it is 0. p<sub>o,c</sub> is the probability predicted by the model that sample o belongs to class c.

One of the reasons for choosing cross-entropy loss is direct probability evaluation: cross-entropy directly evaluates how close the probabilities produced by the model are to the actual labels, making it a natural choice for classification problems. Faster convergence is another reason. Thanks mainly to its logarithmic component, this function helps the model converge faster and more efficiently during gradient-based optimization. Because cross-entropy operates on the predicted class probabilities, minimizing it directly improves the model's ability to predict class labels accurately. Cross-entropy loss also imposes a significant penalty on incorrect predictions, especially in cases where the model is very confident in its incorrect prediction. This discourages the model from making overconfident incorrect predictions.
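
The penalty on confident mistakes can be seen by evaluating both loss formulas directly. The sketch below is illustrative only, and the probabilities in it are hypothetical values, not outputs of the study's model.

```python
import math

def binary_cross_entropy(y, p):
    """L(y, p) = -(y*log(p) + (1 - y)*log(1 - p)) for a label y in {0, 1}."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def categorical_cross_entropy(one_hot, probs):
    """L = -sum_c y_c * log(p_c) for one sample with a one-hot label."""
    return -sum(y * math.log(p) for y, p in zip(one_hot, probs) if y)

confident_right = binary_cross_entropy(1, 0.99)   # true label 1, predicted 0.99
confident_wrong = binary_cross_entropy(1, 0.01)   # true label 1, predicted 0.01
multiclass = categorical_cross_entropy([0, 0, 1], [0.2, 0.1, 0.7])
```

The confidently wrong prediction incurs a loss several hundred times larger than the confidently right one, which is the asymmetry described above.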

The CNN model developed in this study was evaluated in terms of computational complexity depending on the model’s architecture and learning process. Our model is analyzed for time and space complexity, considering factors such as interlayer transitions and filter sizes. Each convolution layer has O (k.n<sup>2</sup>) time complexity to extract features between adjacent pixels. Here k represents the filter size and n<sup>2</sup> represents the size of the image. Our model has a time complexity of O (k.n<sup>2</sup>.d) for one training epoch, where d is expressed as depth (number of layers). Space complexity is directly related to weight matrices and feature maps and specifies the amount of memory the model requires. As a result of this study, it was observed that the model can scale effectively in large data sets and exhibit high performance in practical applications. | |||

The number of parameters for the first convolutional layer can be calculated using the following equation: | |||

:<math>{\# parameters = {({FilterHeight\ *\ FilterWidth\ *\ InputChannels + 1})}\ *\ NumberofFilters~~~}</math> | |||

Plugging in our parameters, we get (3 * 3 * 3 + 1) * 8 = 224. The convolution operation is computationally intensive, but with only eight filters, the complexity is moderate.
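
The same equation can be applied to all three convolution layers. This short sketch assumes that each layer's input channels equal the previous layer's filter count, following the 8, 16, and 32 filters listed in Table 3.

```python
def conv_params(filter_h, filter_w, in_channels, num_filters):
    """(FilterHeight * FilterWidth * InputChannels + 1) * NumberOfFilters; the +1 is the bias."""
    return (filter_h * filter_w * in_channels + 1) * num_filters

in_channels = 3                           # RGB input
counts = []
for num_filters in (8, 16, 32):           # filter counts of the three convolution layers
    counts.append(conv_params(3, 3, in_channels, num_filters))
    in_channels = num_filters             # output channels feed the next layer
```

The parameter count grows with each layer because both the number of filters and the number of input channels increase.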

===Model evaluation=== | |||

This study used two essential methods to evaluate model performance and obtain reliable results. First, the metrics used to evaluate the model’s performance and the reasons for choosing these metrics are stated. Then, it details why the 10-fold stratified cross-validation method was preferred during the training of the manual analysis model. | |||

It is crucial to choose the right metrics to evaluate model performance. In this study, commonly used metrics such as accuracy, precision, recall, and F1 score were preferred to measure the model’s classification performance, explained in the following equations, respectively: | |||

:<math>{Accuracy = \ \frac{TP + \ TN}{TP + \ TN + FP + FN\ }~~~}</math> | |||

:<math>{Precision~{(P)} = ~\frac{TP}{TP + FP}~~~~~~~~~~~}</math> | |||

:<math>{Recall~{(R)} = ~\frac{TP}{TP + FN}~~~~~~~~~~~~~~}</math> | |||

:<math>{F1~score = 2 \times \frac{P \times R}{P + R}~~~~~~~~~~~~~~~}</math> | |||

Accuracy is the ratio of correctly classified samples (true positives and true negatives) to the total number of samples. Precision shows the proportion of samples predicted to be positive that are actually positive, i.e., the ratio of true positives to total positive predictions. Recall (sensitivity) shows the ratio of true positives to the total number of positive samples. While accuracy refers to overall correct predictions, precision and recall evaluate the model's performance in more detail, especially in unbalanced class distributions. F1 score is a performance measure calculated as the harmonic mean of precision and recall values. In unbalanced class distributions, especially in cases where the majority class has more samples, the model must make true positive and true negative predictions in a balanced way, and the F1 score is used to evaluate the model's performance on both classes in a balanced way.
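
The four metrics follow directly from the confusion counts, as this minimal sketch shows. The counts used in the example are hypothetical and are not results from this study.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall, and F1 score from binary confusion counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return accuracy, precision, recall, f1

# Hypothetical counts: 80 true positives, 90 true negatives, 10 false positives, 20 false negatives.
acc, prec, rec, f1 = classification_metrics(tp=80, tn=90, fp=10, fn=20)
```

For a multiclass problem such as the nine tissue types, these quantities are usually computed per class in a one-versus-rest fashion and then averaged.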

For the model to generalize reliably and to avoid overfitting problems, the 10-fold stratified cross-validation method was preferred. This method divides the dataset into 10 equal folds and uses each fold as validation data while training the model using the remaining nine folds as training data. This process continues until each fold has been used as validation data. Stratification preserves the proportions of the tissue types in every fold, allowing the model to learn and be evaluated equally in each class. This ensures the model can generalize over various data samples, allowing us to obtain reliable results.
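
The fold assignment described above can be sketched without any library. This simplified version assigns samples round-robin within each class and does not shuffle, which is an assumption made for brevity. scikit-learn offers a full implementation as `sklearn.model_selection.StratifiedKFold`, although the source does not state which routine was used. The toy label list is hypothetical.

```python
from collections import defaultdict

def stratified_folds(labels, k=10):
    """Assign each sample index to one of k folds, spreading every class evenly."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        for position, index in enumerate(indices):
            folds[position % k].append(index)    # round-robin within each class
    return folds

labels = ["TUM"] * 30 + ["NORM"] * 20            # hypothetical toy label list
folds = stratified_folds(labels, k=10)
for held_out in range(10):
    validation = folds[held_out]                 # one fold validates
    training = [i for f, fold in enumerate(folds) if f != held_out for i in fold]
```

Every fold here holds three TUM and two NORM samples, so each validation fold mirrors the class proportions of the whole set.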

In this study, a paired t-test was used to determine whether the classification results of the proposed approach could have been obtained by chance. The significance of the difference in the overall accuracy achieved by the models was assessed by calculating paired t-test p-values. Statistical analysis was performed using the stats module of the SciPy library (version 1.11.3)<ref>{{Cite web |date=27 September 2023 |title=SciPy |url=https://scipy.org/ |publisher=NumFOCUS, Inc |accessdate=17 November 2023}}</ref> of [[Python (programming language)|Python]] (version 3.8). P-values less than 0.05 were considered statistically significant. The p-values of the paired t-test are reported in the results section.
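
The statistic behind a paired t-test can be computed by hand, as in this sketch. The accuracy values are hypothetical and are not results from this study. The p-value is omitted here; in SciPy, `scipy.stats.ttest_rel` returns both the statistic and the p-value.

```python
import math
import statistics

def paired_t_statistic(a, b):
    """t statistic and degrees of freedom for a paired t-test on matched samples."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)             # sample standard deviation (n - 1)
    return mean_diff / (sd_diff / math.sqrt(n)), n - 1

# Hypothetical per-fold accuracies for two models (not values from the study).
model_a = [0.90, 0.92, 0.91, 0.89, 0.93]
model_b = [0.85, 0.88, 0.86, 0.86, 0.87]
t_stat, dof = paired_t_statistic(model_a, model_b)
```

With four degrees of freedom, a t statistic above roughly 2.776 in absolute value corresponds to p < 0.05 in a two-sided test.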

Within the scope of this research, a CNN model was developed using MATLAB R2023a and AI automation Toolbox 16.3 versions for AI automation tasks. MATLAB was used for data manipulation, training, and evaluation of results. The manual analysis classification model was built using Python 3.8 along with the deep learning model. This model is integrated with scikit-learn (v0.24.2) and NumPy (v1.20.3) libraries. Python has been used in feature extraction and classification tasks. In terms of hardware, the study was run on a personal computer, MacBook Air, with an Apple M1 chip with eight cores and 8 GB of memory. macOS Big Sur (v11.2.3) was used as the operating system. These hardware and software configurations increase the understandability of the methodology, ensure the reproducibility of results, and facilitate comparability of similar studies. | |||

Our research used a large dataset of H&E images of various classes. Specifically, the dataset contains 10,407 ADI, 10,566 BACK, 11,512 DEB, 11,557 LYM, 8,896 MUC, 13,536 MUS, 8,763 NORM, 10,446 STR, and 14,317 TUM images for training, and the test sets are detailed in Table 2. Remarkably, training our CNN model on a dataset of approximately 100,000 images was completed in 200 seconds, demonstrating that our approach is practical even with large-scale data. | |||

==Results== | |||

Our classification study started with a comprehensive examination aimed at distinguishing normal and tumor tissues selected from a diverse collection of nine distinct tissue types. This initial phase of our research involved utilizing HoG features extracted from the images. We employed the random forest classifier model to assess the effectiveness of this approach. | |||

For a more visual representation of our results, the confusion matrices derived from the CNN model and the manual analysis are provided as figures in the supplementary material. This visual insight provides a comprehensive view of the classification performance.

These confusion matrices provide in-depth information about the model's ability to classify tissues. In particular, the ADI class has a high accuracy rate in both normalized and non-normalized scenarios. The LYM class exhibits low sensitivity when the data is not normalized. Furthermore, the TUM class demonstrates high accuracy and sensitivity, especially in the normalized scenario. It is clear from this that the model can accurately identify cancerous tumors.

On the other hand, the STR class has low accuracy and sensitivity, particularly in the non-normalized scenario. This may indicate that this tissue is more challenging to categorize than the others. These matrices are therefore an essential instrument for assessing the performance of the model on various types of tissue and for understanding the model’s strengths and shortcomings. They also shed light on the challenges associated with classifying uncommon classes and on the potential for normalization to ease these challenges. These findings may provide valuable direction for future work to improve the model’s performance.

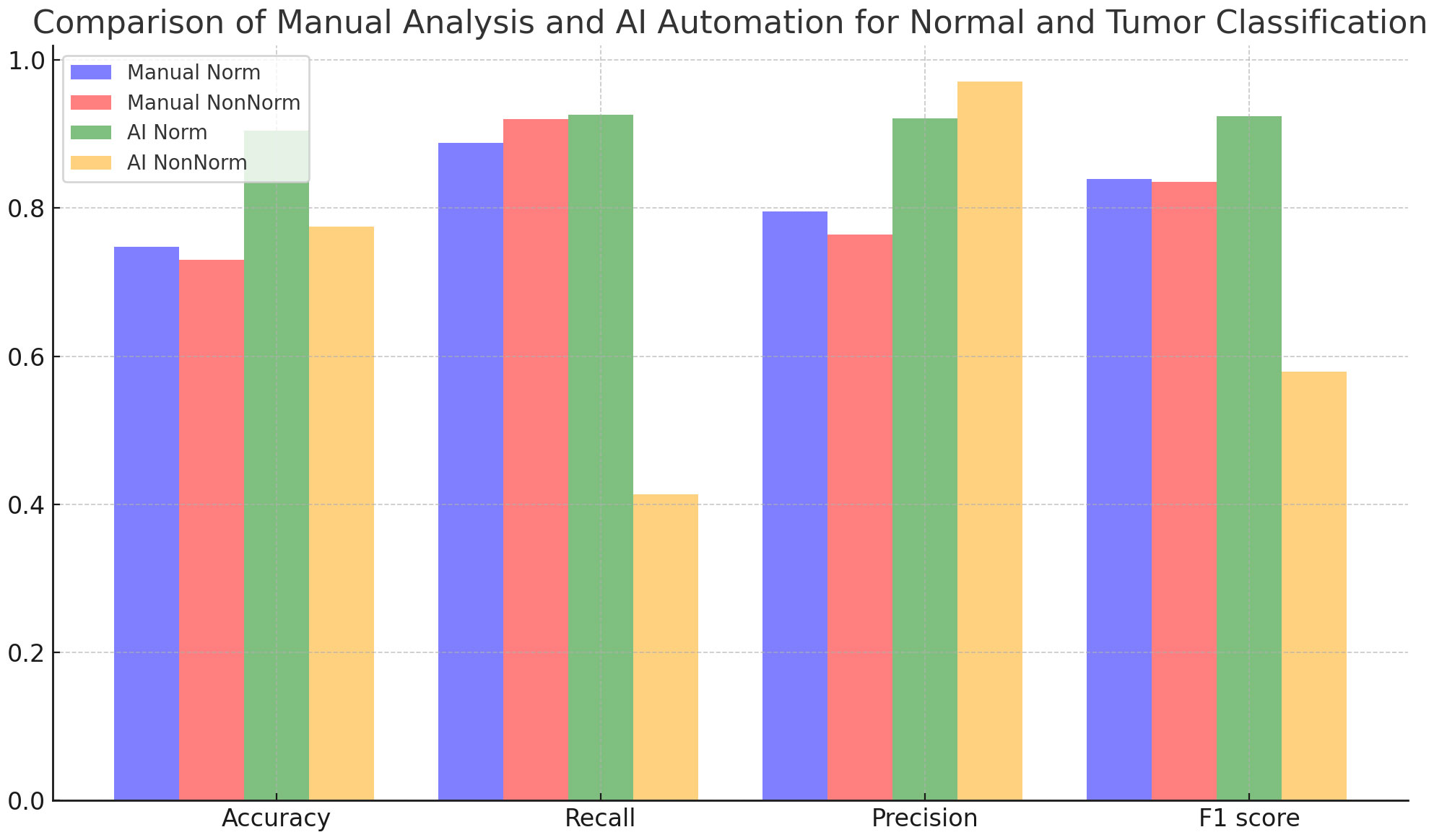

Figure 4 and Table 5 evaluate the effect of normalization in four cases, covering both tissue groupings with and without normalization, and include the accuracy results obtained with the manual analysis and AI automation models. With normalization, accuracy for N-T tissues rose from 0.75 with manual analysis to 0.91 with AI automation, a highly significant difference (p = 7.72 × 10<sup>-41</sup>). Without normalization, accuracy rose from 0.74 to 0.78 for the same comparison, which was also statistically significant (p = 0.0033). The effect of normalization also varies between tissues, with significant changes in accuracy depending on the tissue (p < 0.05). Because these p-values are below the 0.05 significance threshold, the differences between the analyzed results are unlikely to be coincidental, and the reliability of the findings is statistically supported.

[[File:Fig4 Dogan FrontOnc2024 13.jpg|800px]]
{{clear}}
{|
| style="vertical-align:top;" |
{| border="0" cellpadding="5" cellspacing="0" width="800px"
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 4.''' Comparison of manual analysis and AI automation for normal and tumor classification.</blockquote>
|-
|}
|}

{|
| style="vertical-align:top;" |
{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="100%"
|-
| colspan="5" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 5.''' Accuracy results (N, Normal; T, Tumor).
|-
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Normalization
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Tissue type
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Manual analysis
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |AI automation
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Significance
|-
| rowspan="2" style="background-color:white; padding-left:10px; padding-right:10px;" |No
| style="background-color:white; padding-left:10px; padding-right:10px;" |N-T
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.74
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.78
| style="background-color:white; padding-left:10px; padding-right:10px;" |Yes
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |Nine tissues
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.41
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.86
| style="background-color:white; padding-left:10px; padding-right:10px;" |Yes
|-
| rowspan="2" style="background-color:white; padding-left:10px; padding-right:10px;" |Yes
| style="background-color:white; padding-left:10px; padding-right:10px;" |N-T
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.75
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.91
| style="background-color:white; padding-left:10px; padding-right:10px;" |Yes
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |Nine tissues
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.44
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.97
| style="background-color:white; padding-left:10px; padding-right:10px;" |Yes
|-
|}
|}
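The text does not state which statistical test produced the p-values reported for Table 5. As one common way to compare two accuracies, the sketch below applies a two-proportion z-test. The choice of test, the function name, and the test-set size of 1,000 images per model are assumptions for illustration only (the actual test-set sizes are given in Table 2), so the printed p-value is not expected to match the reported one.

```python
import math


def two_proportion_z_test(correct_a, n_a, correct_b, n_b):
    """Two-sided z-test for the difference between two accuracies.

    Returns (z, p_value). Assumes the two sets of predictions are
    independent samples.
    """
    acc_a = correct_a / n_a
    acc_b = correct_b / n_b
    pooled = (correct_a + correct_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("pooled accuracy is 0 or 1; the test is undefined")
    z = (acc_b - acc_a) / std_err
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: accuracies of 0.75 and 0.91 on 1,000 images each.
z, p = two_proportion_z_test(750, 1000, 910, 1000)
print(f"z = {z:.2f}, p = {p:.3g}")
```

When both models are evaluated on the same test images, a paired test such as McNemar's test is generally the more appropriate choice, because the two sets of predictions are then not independent.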

Table 6 compares the normal and tumor classification results of manual analysis with and without normalization. Although normalization increased accuracy, the increase was not statistically significant (p = 0.2108). Recall decreased slightly with normalization, and this decrease was statistically significant (p = 0.0005). Precision increased with normalization, which was also statistically significant (p = 0.0162). For the F1 score, the effect of normalization was not statistically significant (p = 0.7144).

{|
| style="vertical-align:top;" |
{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="100%"
|-
| colspan="4" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 6.''' Normal and tumor classification evaluation metrics with and without normalization using manual analysis.
|-
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Metric
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Normalized
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Non-normalized
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Significance
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |Accuracy
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.7475
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.7300
| style="background-color:white; padding-left:10px; padding-right:10px;" |No
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |Recall
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.8879
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.9204
| style="background-color:white; padding-left:10px; padding-right:10px;" |Yes
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |Precision
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.7958
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.7641
| style="background-color:white; padding-left:10px; padding-right:10px;" |Yes
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |F1 score
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.8393
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.8350
| style="background-color:white; padding-left:10px; padding-right:10px;" |No
|-
|}
|}

Table 7 evaluates the normal and tumor classifications performed by AI automation with and without normalization. Normalization caused statistically significant changes in accuracy, recall, precision, and F1 score (p = 1.08 × 10<sup>-28</sup>, p = 1.66 × 10<sup>-26</sup>, p = 3.87 × 10<sup>-12</sup>, and p = 2.01 × 10<sup>-14</sup>, respectively): accuracy, recall, and F1 score improved, while precision fell from 0.9714 to 0.9217. Overall, these results show that normalization is effective in improving AI automation-based classification performance.

{|
| style="vertical-align:top;" |
{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="100%"
|-
| colspan="4" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 7.''' Normal and tumor classification evaluation metrics with and without normalization using AI automation.
|-
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Metric
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Normalized
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Non-normalized
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Significance
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |Accuracy
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.9048
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.7751
| style="background-color:white; padding-left:10px; padding-right:10px;" |Yes
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |Recall
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.9262
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.4130
| style="background-color:white; padding-left:10px; padding-right:10px;" |Yes
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |Precision
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.9217
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.9714
| style="background-color:white; padding-left:10px; padding-right:10px;" |Yes
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |F1 score
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.9239
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.5795
| style="background-color:white; padding-left:10px; padding-right:10px;" |Yes
|-
|}
|}
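The F1 scores in Tables 6 and 7 can be cross-checked against the reported precision and recall, since F1 is the harmonic mean of the two. The sketch below recomputes F1 from the rounded table values; the dictionary layout and names are illustrative. Small differences in the last digit are expected, because the tables presumably report F1 computed from unrounded precision and recall.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) taken from Tables 6 and 7.
reported = {
    ("manual analysis", "normalized"): (0.7958, 0.8879, 0.8393),
    ("manual analysis", "non-normalized"): (0.7641, 0.9204, 0.8350),
    ("AI automation", "normalized"): (0.9217, 0.9262, 0.9239),
    ("AI automation", "non-normalized"): (0.9714, 0.4130, 0.5795),
}

for (model, setting), (precision, recall, f1_reported) in reported.items():
    f1 = f1_score(precision, recall)
    print(f"{model}, {setting}: recomputed {f1:.4f}, reported {f1_reported:.4f}")
```

The non-normalized AI automation row shows why F1 is informative here: precision is very high (0.9714), but the low recall (0.4130) pulls the harmonic mean down to about 0.58.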

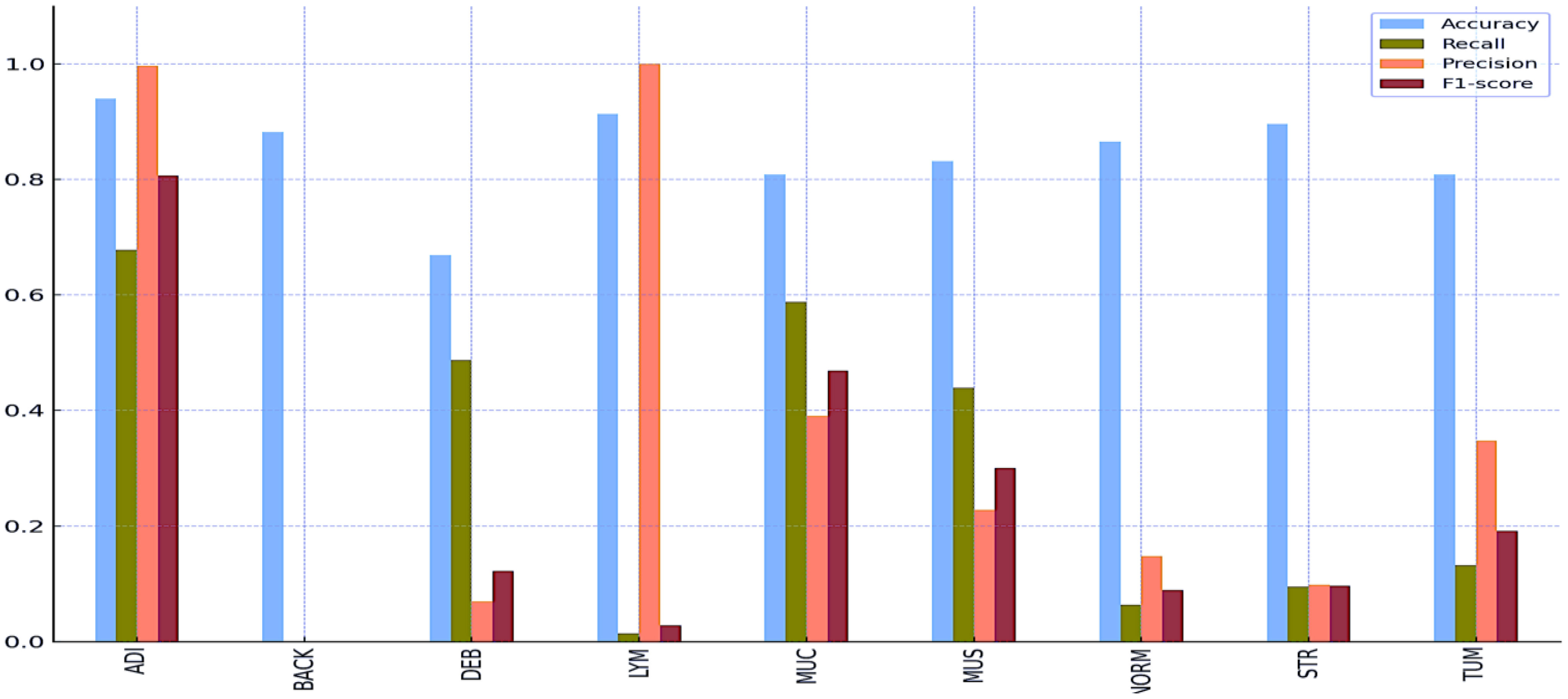

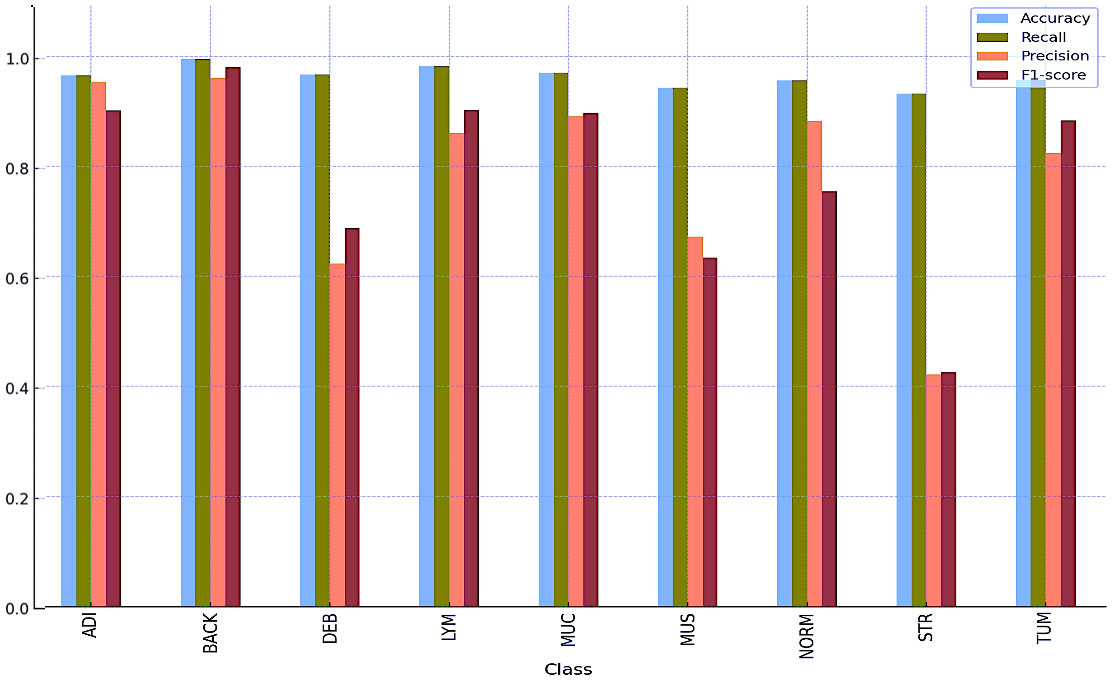

Tables 8 and 9 and Figures 5 and 6 present the multi-class classification results using AI automation for all classes before and after normalization was applied. With normalization, the increases in the evaluation metrics, especially for low-performing classes, show that the model classifies considerably better. Table 8 contains two values of particular note within the “BACK” category: 0 and NaN (not a number). These can indicate that classification failed for this class or that specific metrics could not be calculated. As shown in Table 9, an overall performance improvement was observed once these NaN and 0 values were resolved. As a result of normalization, performance across classes became more consistent and balanced. This suggests that normalization makes the model's output more robust and consistent.
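One plausible way for a class to show a recall of 0 together with a NaN precision is that the model never predicts that class: there are then no true positives (recall 0) and no predicted positives at all, so precision becomes 0/0. The source does not confirm that this is what happened for “BACK”. The sketch below illustrates the mechanism with a hypothetical three-class confusion matrix; the counts and the function name are assumptions.

```python
import math


def per_class_metrics(confusion, labels):
    """One-vs-rest metrics per class.

    confusion[i][j] is the number of images of true class i that were
    predicted as class j. Undefined ratios (0/0) are returned as NaN.
    """
    total = sum(sum(row) for row in confusion)
    metrics = {}
    for k, label in enumerate(labels):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp
        fp = sum(row[k] for row in confusion) - tp
        tn = total - tp - fn - fp
        recall = tp / (tp + fn) if (tp + fn) else math.nan
        precision = tp / (tp + fp) if (tp + fp) else math.nan
        if math.isnan(precision) or math.isnan(recall) or precision + recall == 0:
            f1 = math.nan
        else:
            f1 = 2 * precision * recall / (precision + recall)
        metrics[label] = {
            "accuracy": (tp + tn) / total,
            "recall": recall,
            "precision": precision,
            "f1": f1,
        }
    return metrics


# Hypothetical counts: the model never predicts "BACK" (middle column is zero).
labels = ["ADI", "BACK", "TUM"]
confusion = [
    [90, 0, 10],
    [40, 0, 60],
    [5, 0, 95],
]

metrics = per_class_metrics(confusion, labels)
for label in labels:
    print(label, metrics[label])
```

In this toy case, the one-vs-rest accuracy for “BACK” is still about 0.67, because every image from the other classes counts as a true negative. This is why a class can report a seemingly high accuracy alongside a recall of 0.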

{|
| style="vertical-align:top;" |
{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="60%"
|-
| colspan="5" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 8.''' Multi-class classification results without normalization using AI automation. ADI - adipose; BACK - background; DEB - debris; LYM - lymphocytes; MUC - mucus; MUS - smooth muscle; NORM - normal colonic mucosa; STR - cancer-associated stroma; TUM - colorectal adenocarcinoma epithelium; NaN - not a number.
|-
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |H&E image class
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Accuracy
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Recall
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Precision
! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |F1 score
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |ADI
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.9396
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.6779
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.9967
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.8069
|-
| style="background-color:white; padding-left:10px; padding-right:10px;" |BACK
| style="background-color:white; padding-left:10px; padding-right:10px;" |0.8820