Journal:The NOMAD Artificial Intelligence Toolkit: Turning materials science data into knowledge and understanding

|website = [https://www.nature.com/articles/s41524-022-00935-z https://www.nature.com/articles/s41524-022-00935-z]

|download = [https://www.nature.com/articles/s41524-022-00935-z.pdf https://www.nature.com/articles/s41524-022-00935-z.pdf] (PDF)

}}

==Abstract==

==Introduction==

Data-centric science has been identified as the fourth paradigm of scientific research. We observe that the novelty introduced by this paradigm is twofold. First, we have seen the creation of large, interconnected [[database]]s of scientific data, which are increasingly expected to comply with the so-called [[Journal:The FAIR Guiding Principles for scientific data management and stewardship|FAIR principles]]<ref>{{Cite journal |last=Wilkinson |first=Mark D. |last2=Dumontier |first2=Michel |last3=Aalbersberg |first3=IJsbrand Jan |last4=Appleton |first4=Gabrielle |last5=Axton |first5=Myles |last6=Baak |first6=Arie |last7=Blomberg |first7=Niklas |last8=Boiten |first8=Jan-Willem |last9=da Silva Santos |first9=Luiz Bonino |last10=Bourne |first10=Philip E. |last11=Bouwman |first11=Jildau |date=2016-03-15 |title=The FAIR Guiding Principles for scientific data management and stewardship |url=https://www.nature.com/articles/sdata201618 |journal=Scientific Data |language=en |volume=3 |issue=1 |pages=160018 |doi=10.1038/sdata.2016.18 |issn=2052-4463 |pmc=PMC4792175 |pmid=26978244}}</ref> of scientific [[Information management|data management]] and stewardship, meaning that the data and related [[metadata]] need to be findable, accessible, interoperable, and reusable (or repurposable, or recyclable). Second, we have seen growing use of [[artificial intelligence]] (AI) algorithms, applied to scientific data, in order to find patterns and trends that would be difficult, if not impossible, to identify by unassisted human observation and intuition.

In the last few years, [[materials science]] has experienced both of these novelties. Databases, in particular from computational materials science, have been created via high-throughput screening initiatives, mainly boosted by the U.S.-based Materials Genome Initiative (MGI), starting in the early 2010s, e.g., AFLOW<ref>{{Cite journal |last=Curtarolo |first=Stefano |last2=Setyawan |first2=Wahyu |last3=Wang |first3=Shidong |last4=Xue |first4=Junkai |last5=Yang |first5=Kesong |last6=Taylor |first6=Richard H. |last7=Nelson |first7=Lance J. |last8=Hart |first8=Gus L.W. |last9=Sanvito |first9=Stefano |last10=Buongiorno-Nardelli |first10=Marco |last11=Mingo |first11=Natalio |date=2012-06 |title=AFLOWLIB.ORG: A distributed materials properties repository from high-throughput ab initio calculations |url=https://linkinghub.elsevier.com/retrieve/pii/S0927025612000687 |journal=Computational Materials Science |language=en |volume=58 |pages=227–235 |doi=10.1016/j.commatsci.2012.02.002}}</ref>, the Materials Project<ref>{{Cite journal |last=Jain |first=Anubhav |last2=Ong |first2=Shyue Ping |last3=Hautier |first3=Geoffroy |last4=Chen |first4=Wei |last5=Richards |first5=William Davidson |last6=Dacek |first6=Stephen |last7=Cholia |first7=Shreyas |last8=Gunter |first8=Dan |last9=Skinner |first9=David |last10=Ceder |first10=Gerbrand |last11=Persson |first11=Kristin A. |date=2013-07-01 |title=Commentary: The Materials Project: A materials genome approach to accelerating materials innovation |url=https://doi.org/10.1063/1.4812323 |journal=APL Materials |volume=1 |issue=1 |doi=10.1063/1.4812323 |issn=2166-532X}}</ref>, and OQMD.<ref>{{Cite journal |last=Saal |first=James E. |last2=Kirklin |first2=Scott |last3=Aykol |first3=Muratahan |last4=Meredig |first4=Bryce |last5=Wolverton |first5=C. 
|date=2013-11 |title=Materials Design and Discovery with High-Throughput Density Functional Theory: The Open Quantum Materials Database (OQMD) |url=http://link.springer.com/10.1007/s11837-013-0755-4 |journal=JOM |language=en |volume=65 |issue=11 |pages=1501–1509 |doi=10.1007/s11837-013-0755-4 |issn=1047-4838}}</ref> At the end of 2014, the NOMAD (Novel Materials Discovery) Laboratory launched the NOMAD Repository & Archive<ref>{{Cite journal |last=Draxl |first=Claudia |last2=Scheffler |first2=Matthias |date=2018-09 |title=NOMAD: The FAIR concept for big data-driven materials science |url=http://link.springer.com/10.1557/mrs.2018.208 |journal=MRS Bulletin |language=en |volume=43 |issue=9 |pages=676–682 |doi=10.1557/mrs.2018.208 |issn=0883-7694}}</ref><ref>{{Cite journal |last=Draxl |first=Claudia |last2=Scheffler |first2=Matthias |date=2019-07-01 |title=The NOMAD laboratory: from data sharing to artificial intelligence |url=https://iopscience.iop.org/article/10.1088/2515-7639/ab13bb |journal=Journal of Physics: Materials |volume=2 |issue=3 |pages=036001 |doi=10.1088/2515-7639/ab13bb |issn=2515-7639}}</ref><ref>{{Citation |last=Draxl |first=Claudia |last2=Scheffler |first2=Matthias |date=2020 |editor-last=Andreoni |editor-first=Wanda |editor2-last=Yip |editor2-first=Sidney |title=Big Data-Driven Materials Science and Its FAIR Data Infrastructure |url=http://link.springer.com/10.1007/978-3-319-44677-6_104 |work=Handbook of Materials Modeling |language=en |publisher=Springer International Publishing |place=Cham |pages=49–73 |doi=10.1007/978-3-319-44677-6_104 |isbn=978-3-319-44676-9 |accessdate=2023-11-20}}</ref>, the first FAIR storage infrastructure for computational materials science data. NOMAD’s servers and storage are hosted by the Max Planck Computing and Data Facility (MPCDF) in Garching (Germany). The NOMAD Repository stores, as of today, input and output files from more than 50 different atomistic (''ab initio'' and molecular mechanics) codes. 
In total, more than 100 million total-energy calculations have been uploaded by various materials scientists, from their local storage or from other public databases. The NOMAD Archive stores the same [[information]], but converted, normalized, and characterized by means of a metadata schema, the NOMAD Metainfo<ref name=":0">{{Cite journal |last=Ghiringhelli |first=Luca M. |last2=Carbogno |first2=Christian |last3=Levchenko |first3=Sergey |last4=Mohamed |first4=Fawzi |last5=Huhs |first5=Georg |last6=Lüders |first6=Martin |last7=Oliveira |first7=Micael |last8=Scheffler |first8=Matthias |date=2017-11-06 |title=Towards efficient data exchange and sharing for big-data driven materials science: metadata and data formats |url=https://www.nature.com/articles/s41524-017-0048-5 |journal=npj Computational Materials |language=en |volume=3 |issue=1 |pages=46 |doi=10.1038/s41524-017-0048-5 |issn=2057-3960}}</ref>, which allows most of the data to be labeled in a code-independent representation. Translating the content of raw input and output files into the code-independent NOMAD Metainfo format makes the data ready for AI analysis.
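
The value of a code-independent representation is easiest to see with two codes that report the same quantity under different names and units. The following is a minimal, hypothetical sketch. The code names (<code>code_a</code>, <code>code_b</code>), the raw keys, the shared key <code>energy_total</code>, and the choice of joules as the common unit are all illustrative assumptions. They are not the actual NOMAD Metainfo definitions or parser logic.

```python
# Hypothetical sketch of normalization into a code-independent record.
# Key names and the common unit (joules) are illustrative, NOT NOMAD Metainfo.

EV_TO_J = 1.602176634e-19           # 1 eV in joules (exact SI value)
HARTREE_TO_J = 4.3597447222071e-18  # 1 hartree in joules (CODATA 2018)

# For each hypothetical code: the raw output key and its factor to joules.
RAW_ENERGY_KEYS = {
    "code_a": ("TOTEN", EV_TO_J),
    "code_b": ("total_energy_hartree", HARTREE_TO_J),
}


def normalize_energy(code: str, raw_output: dict) -> dict:
    """Return a code-independent record with the total energy in joules."""
    key, to_joule = RAW_ENERGY_KEYS[code]
    return {"program": code, "energy_total": raw_output[key] * to_joule}


# The same energy (-1 hartree) as reported by two different codes
a = normalize_energy("code_a", {"TOTEN": -27.211386245988})
b = normalize_energy("code_b", {"total_energy_hartree": -1.0})
```

After normalization, both records carry <code>energy_total</code> in the same unit. Downstream analysis therefore no longer needs to know which code produced a given value.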

Besides the above-mentioned databases, other platforms for the open-access storage of, and access to, materials science data have appeared in recent years, such as the Materials Data Facility<ref>{{Cite journal |last=Blaiszik |first=B. |last2=Chard |first2=K. |last3=Pruyne |first3=J. |last4=Ananthakrishnan |first4=R. |last5=Tuecke |first5=S. |last6=Foster |first6=I. |date=2016-08 |title=The Materials Data Facility: Data Services to Advance Materials Science Research |url=http://link.springer.com/10.1007/s11837-016-2001-3 |journal=JOM |language=en |volume=68 |issue=8 |pages=2045–2052 |doi=10.1007/s11837-016-2001-3 |issn=1047-4838}}</ref><ref>{{Cite journal |last=Blaiszik |first=Ben |last2=Ward |first2=Logan |last3=Schwarting |first3=Marcus |last4=Gaff |first4=Jonathon |last5=Chard |first5=Ryan |last6=Pike |first6=Daniel |last7=Chard |first7=Kyle |last8=Foster |first8=Ian |date=2019-12 |title=A data ecosystem to support machine learning in materials science |url=http://link.springer.com/10.1557/mrc.2019.118 |journal=MRS Communications |language=en |volume=9 |issue=4 |pages=1125–1133 |doi=10.1557/mrc.2019.118 |issn=2159-6859}}</ref> and Materials Cloud.<ref>{{Cite journal |last=Talirz |first=Leopold |last2=Kumbhar |first2=Snehal |last3=Passaro |first3=Elsa |last4=Yakutovich |first4=Aliaksandr V. |last5=Granata |first5=Valeria |last6=Gargiulo |first6=Fernando |last7=Borelli |first7=Marco |last8=Uhrin |first8=Martin |last9=Huber |first9=Sebastiaan P. |last10=Zoupanos |first10=Spyros |last11=Adorf |first11=Carl S.
|date=2020-09-08 |title=Materials Cloud, a platform for open computational science |url=https://www.nature.com/articles/s41597-020-00637-5 |journal=Scientific Data |language=en |volume=7 |issue=1 |pages=299 |doi=10.1038/s41597-020-00637-5 |issn=2052-4463 |pmc=PMC7479138 |pmid=32901046}}</ref> Furthermore, many groups have been storing their materials science data on Zenodo<ref>{{Cite web |date=2022 |title=Zenodo |url=https://zenodo.org/ |publisher=CERN}}</ref> and citing the corresponding digital object identifiers (DOIs) in their publications so that the data can be openly accessed. What distinguishes the NOMAD Repository & Archive is that users upload the full input and output files of their calculations to the Repository. This information is then mapped onto the Archive, which (other) users can access via a unified [[application programming interface]] (API).

Materials science has also embraced the second aspect of the fourth paradigm, i.e., AI-driven analysis. The applications of AI to materials science span two main classes of methods. One is the modeling of potential-energy surfaces by means of statistical models that promise to yield ''ab initio'' accuracy at a fraction of the evaluation time<ref>{{Cite journal |last=Lorenz |first=Sönke |last2=Groß |first2=Axel |last3=Scheffler |first3=Matthias |date=2004-09 |title=Representing high-dimensional potential-energy surfaces for reactions at surfaces by neural networks |url=https://linkinghub.elsevier.com/retrieve/pii/S000926140401125X |journal=Chemical Physics Letters |language=en |volume=395 |issue=4-6 |pages=210–215 |doi=10.1016/j.cplett.2004.07.076}}</ref><ref>{{Cite journal |last=Behler |first=Jörg |last2=Parrinello |first2=Michele |date=2007-04-02 |title=Generalized Neural-Network Representation of High-Dimensional Potential-Energy Surfaces |url=https://link.aps.org/doi/10.1103/PhysRevLett.98.146401 |journal=Physical Review Letters |language=en |volume=98 |issue=14 |pages=146401 |doi=10.1103/PhysRevLett.98.146401 |issn=0031-9007}}</ref><ref>{{Cite journal |last=Bartók |first=Albert P. |last2=Payne |first2=Mike C. |last3=Kondor |first3=Risi |last4=Csányi |first4=Gábor |date=2010-04-01 |title=Gaussian Approximation Potentials: The Accuracy of Quantum Mechanics, without the Electrons |url=https://link.aps.org/doi/10.1103/PhysRevLett.104.136403 |journal=Physical Review Letters |language=en |volume=104 |issue=13 |pages=136403 |doi=10.1103/PhysRevLett.104.136403 |issn=0031-9007}}</ref><ref>{{Cite journal |last=Bartók |first=Albert P.
|last2=Kondor |first2=Risi |last3=Csányi |first3=Gábor |date=2013-05-28 |title=On representing chemical environments |url=https://link.aps.org/doi/10.1103/PhysRevB.87.184115 |journal=Physical Review B |language=en |volume=87 |issue=18 |pages=184115 |doi=10.1103/PhysRevB.87.184115 |issn=1098-0121}}</ref><ref>{{Cite journal |last=Schütt |first=Kristof T. |last2=Arbabzadah |first2=Farhad |last3=Chmiela |first3=Stefan |last4=Müller |first4=Klaus R. |last5=Tkatchenko |first5=Alexandre |date=2017-01-09 |title=Quantum-chemical insights from deep tensor neural networks |url=https://www.nature.com/articles/ncomms13890 |journal=Nature Communications |language=en |volume=8 |issue=1 |pages=13890 |doi=10.1038/ncomms13890 |issn=2041-1723 |pmc=PMC5228054 |pmid=28067221}}</ref><ref>{{Cite journal |last=Xie |first=Tian |last2=Grossman |first2=Jeffrey C. |date=2018-04-06 |title=Crystal Graph Convolutional Neural Networks for an Accurate and Interpretable Prediction of Material Properties |url=https://link.aps.org/doi/10.1103/PhysRevLett.120.145301 |journal=Physical Review Letters |language=en |volume=120 |issue=14 |pages=145301 |doi=10.1103/PhysRevLett.120.145301 |issn=0031-9007}}</ref> (not counting the CPU time needed to produce the training data set).
The other class is so-called [[materials informatics]], i.e., the statistical modeling of materials aimed at predicting their physical, often technologically relevant properties<ref>{{Cite journal |last=Rajan |first=Krishna |date=2005-10 |title=Materials informatics |url=https://linkinghub.elsevier.com/retrieve/pii/S1369702105711238 |journal=Materials Today |language=en |volume=8 |issue=10 |pages=38–45 |doi=10.1016/S1369-7021(05)71123-8}}</ref><ref>{{Cite journal |last=Pilania |first=Ghanshyam |last2=Wang |first2=Chenchen |last3=Jiang |first3=Xun |last4=Rajasekaran |first4=Sanguthevar |last5=Ramprasad |first5=Ramamurthy |date=2013-09-30 |title=Accelerating materials property predictions using machine learning |url=https://www.nature.com/articles/srep02810 |journal=Scientific Reports |language=en |volume=3 |issue=1 |pages=2810 |doi=10.1038/srep02810 |issn=2045-2322 |pmc=PMC3786293 |pmid=24077117}}</ref><ref>{{Cite journal |last=Ghiringhelli |first=Luca M. |last2=Vybiral |first2=Jan |last3=Levchenko |first3=Sergey V. |last4=Draxl |first4=Claudia |last5=Scheffler |first5=Matthias |date=2015-03-10 |title=Big Data of Materials Science: Critical Role of the Descriptor |url=https://link.aps.org/doi/10.1103/PhysRevLett.114.105503 |journal=Physical Review Letters |language=en |volume=114 |issue=10 |pages=105503 |doi=10.1103/PhysRevLett.114.105503 |issn=0031-9007}}</ref><ref>{{Cite journal |last=Isayev |first=Olexandr |last2=Fourches |first2=Denis |last3=Muratov |first3=Eugene N.
|last4=Oses |first4=Corey |last5=Rasch |first5=Kevin |last6=Tropsha |first6=Alexander |last7=Curtarolo |first7=Stefano |date=2015-02-10 |title=Materials Cartography: Representing and Mining Materials Space Using Structural and Electronic Fingerprints |url=https://pubs.acs.org/doi/10.1021/cm503507h |journal=Chemistry of Materials |language=en |volume=27 |issue=3 |pages=735–743 |doi=10.1021/cm503507h |issn=0897-4756}}</ref><ref name=":1">{{Cite journal |last=Ouyang |first=Runhai |last2=Curtarolo |first2=Stefano |last3=Ahmetcik |first3=Emre |last4=Scheffler |first4=Matthias |last5=Ghiringhelli |first5=Luca M. |date=2018-08-07 |title=SISSO: A compressed-sensing method for identifying the best low-dimensional descriptor in an immensity of offered candidates |url=https://link.aps.org/doi/10.1103/PhysRevMaterials.2.083802 |journal=Physical Review Materials |language=en |volume=2 |issue=8 |pages=083802 |doi=10.1103/PhysRevMaterials.2.083802 |issn=2475-9953}}</ref><ref>{{Cite journal |last=Jha |first=Dipendra |last2=Ward |first2=Logan |last3=Paul |first3=Arindam |last4=Liao |first4=Wei-keng |last5=Choudhary |first5=Alok |last6=Wolverton |first6=Chris |last7=Agrawal |first7=Ankit |date=2018-12-04 |title=ElemNet: Deep Learning the Chemistry of Materials From Only Elemental Composition |url=https://www.nature.com/articles/s41598-018-35934-y |journal=Scientific Reports |language=en |volume=8 |issue=1 |pages=17593 |doi=10.1038/s41598-018-35934-y |issn=2045-2322 |pmc=PMC6279928 |pmid=30514926}}</ref>, from limited input information about them, often just their stoichiometry. Materials informatics aims at identifying the minimal set of descriptors (the materials’ genes) that correlate with the properties of interest. This approach, together with the observation that only a very small fraction of the almost infinite number of possible materials is known today, may lead to the identification of undiscovered materials with properties (e.g., conductivity, plasticity, or elasticity) superior to those of the known ones.
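
Many materials-informatics models start from nothing more than the chemical formula. The following minimal, hypothetical illustration turns a formula into atomic fractions that can serve as simple stoichiometry-based input features. It is not the featurization used in any of the works cited above, and, as a simplifying assumption, it handles only plain formulas without parentheses, hydrates, or charges.

```python
from __future__ import annotations

import re
from collections import defaultdict

# An element symbol followed by an optional integer or decimal count.
_TOKEN = re.compile(r"([A-Z][a-z]?)(\d+(?:\.\d+)?)?")


def element_fractions(formula: str) -> dict[str, float]:
    """Convert a plain formula such as 'Bi2Te3' into atomic fractions.

    Assumption: no parentheses, hydrates or charges; unmatched
    characters are silently ignored.
    """
    counts: dict[str, float] = defaultdict(float)
    for symbol, number in _TOKEN.findall(formula):
        counts[symbol] += float(number) if number else 1.0
    total = sum(counts.values())
    return {element: n / total for element, n in counts.items()}
```

For example, <code>element_fractions("Bi2Te3")</code> yields 0.4 for Bi and 0.6 for Te. Such a fraction vector is one of the simplest fingerprints that can be fed to a statistical model.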

The NOMAD Centre of Excellence (CoE) has recognized the importance of enabling the AI analysis of the stored FAIR data and has launched the NOMAD AI Toolkit. This web-based infrastructure allows users to run [[Electronic laboratory notebooks|computational notebooks]] (i.e., interactive documents that freely mix code, results, graphics, and text, supported by a suitable virtual environment) in a web browser, in order to perform complex queries as well as AI-based exploratory analysis and predictive modeling on the data contained in the NOMAD Archive. In this respect, the AI Toolkit takes the concept of FAIR data to its next, necessary step, by recognizing that the most promising purpose of the FAIR principles is enabling AI analysis of the stored data. As a mnemonic, this next step starts by upgrading the meaning of FAIR to "findable and AI-ready data."<ref>{{Cite journal |last=Scheffler |first=Matthias |last2=Aeschlimann |first2=Martin |last3=Albrecht |first3=Martin |last4=Bereau |first4=Tristan |last5=Bungartz |first5=Hans-Joachim |last6=Felser |first6=Claudia |last7=Greiner |first7=Mark |last8=Groß |first8=Axel |last9=Koch |first9=Christoph T. |last10=Kremer |first10=Kurt |last11=Nagel |first11=Wolfgang E. |date=2022-04-28 |title=FAIR data enabling new horizons for materials research |url=https://www.nature.com/articles/s41586-022-04501-x |journal=Nature |language=en |volume=604 |issue=7907 |pages=635–642 |doi=10.1038/s41586-022-04501-x |issn=0028-0836}}</ref>

The mission of the NOMAD AI Toolkit is threefold, as reflected in the access points shown on its home page (Fig. 1):

*Providing an API and libraries for accessing and analyzing the NOMAD Archive data via state-of-the-art (and beyond) AI tools;

*Providing a set of tutorials with a gentle learning curve, from a hands-on introduction to the mastery of AI techniques; and

*Maintaining a community-driven, growing collection of computational notebooks, each dedicated to an AI-based materials science publication. (By providing both the annotated data and the scripts for their analysis, these notebooks enable students and scholars worldwide to retrace all the steps that the original researchers followed to reach publication-level results. Furthermore, users can modify the existing notebooks and quickly check alternative ideas.)

[[File:Fig1 Sbailò npjCompMat22 8.png|700px]]

{{clear}}

{|

| style="vertical-align:top;" |

{| border="0" cellpadding="5" cellspacing="0" width="700px"

|-

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 1.''' The NOMAD AI Toolkit homepage showcases the three purposes of the NOMAD AI Toolkit: querying (and analyzing) the content of the NOMAD Archive, providing tutorials for AI tools, and accessing the AI workflows of published work. The fourth access point, "Get to work," is for experienced users, who can create and manage their own workspace.</blockquote>

|-

|}

|}

The data science community has introduced several platforms for performing AI-based [[Data analysis|analysis]] of scientific data, typically by providing rich libraries for [[machine learning]] (ML) and AI, and often offering users online resources for running electronic notebooks. General-purpose frameworks such as Binder<ref>{{Cite journal |last=Jupyter |first=Project |last2=Bussonnier |first2=Matthias |last3=Forde |first3=Jessica |last4=Freeman |first4=Jeremy |last5=Granger |first5=Brian |last6=Head |first6=Tim |last7=Holdgraf |first7=Chris |last8=Kelley |first8=Kyle |last9=Nalvarte |first9=Gladys |last10=Osheroff |first10=Andrew |last11=Pacer |first11=M |date=2018 |title=Binder 2.0 - Reproducible, interactive, sharable environments for science at scale |url=https://conference.scipy.org/proceedings/scipy2018/project_jupyter.html |place=Austin, Texas |pages=113–120 |doi=10.25080/Majora-4af1f417-011}}</ref> and Google Colab<ref>{{Cite web |date=2022 |title=Welcome to Colaboratory |url=https://colab.research.google.com/ |publisher=Google}}</ref>, as well as dedicated materials science frameworks such as nanoHUB<ref>{{Cite journal |last=Klimeck |first=Gerhard |last2=McLennan |first2=Michael |last3=Brophy |first3=Sean P. |last4=Adams III |first4=George B. |last5=Lundstrom |first5=Mark S.
|date=2008-09 |title=nanoHUB.org: Advancing Education and Research in Nanotechnology |url=http://ieeexplore.ieee.org/document/4604500/ |journal=Computing in Science & Engineering |volume=10 |issue=5 |pages=17–23 |doi=10.1109/MCSE.2008.120 |issn=1521-9615}}</ref>, pyiron<ref>{{Cite journal |last=Janssen |first=Jan |last2=Surendralal |first2=Sudarsan |last3=Lysogorskiy |first3=Yury |last4=Todorova |first4=Mira |last5=Hickel |first5=Tilmann |last6=Drautz |first6=Ralf |last7=Neugebauer |first7=Jörg |date=2019-06 |title=pyiron: An integrated development environment for computational materials science |url=https://linkinghub.elsevier.com/retrieve/pii/S0927025618304786 |journal=Computational Materials Science |language=en |volume=163 |pages=24–36 |doi=10.1016/j.commatsci.2018.07.043}}</ref>, AiiDAlab<ref>{{Cite journal |last=Yakutovich |first=Aliaksandr V. |last2=Eimre |first2=Kristjan |last3=Schütt |first3=Ole |last4=Talirz |first4=Leopold |last5=Adorf |first5=Carl S. |last6=Andersen |first6=Casper W. |last7=Ditler |first7=Edward |last8=Du |first8=Dou |last9=Passerone |first9=Daniele |last10=Smit |first10=Berend |last11=Marzari |first11=Nicola |date=2021-02 |title=AiiDAlab – an ecosystem for developing, executing, and sharing scientific workflows |url=https://linkinghub.elsevier.com/retrieve/pii/S092702562030656X |journal=Computational Materials Science |language=en |volume=188 |pages=110165 |doi=10.1016/j.commatsci.2020.110165}}</ref>, and Matbench<ref>{{Cite journal |last=Dunn |first=Alexander |last2=Wang |first2=Qi |last3=Ganose |first3=Alex |last4=Dopp |first4=Daniel |last5=Jain |first5=Anubhav |date=2020-09-15 |title=Benchmarking materials property prediction methods: the Matbench test set and Automatminer reference algorithm |url=https://www.nature.com/articles/s41524-020-00406-3 |journal=npj Computational Materials |language=en |volume=6 |issue=1 |pages=138 |doi=10.1038/s41524-020-00406-3 |issn=2057-3960}}</ref>, are the most widely used by the community.
In all these cases, great effort is devoted to education via online and in-person tutorials. What chiefly distinguishes the NOMAD AI Toolkit is that it connects the data stored in the NOMAD Archive to their AI analysis within the same infrastructure. Moreover, as detailed below, users have all available AI tools, as well as access to the NOMAD data, in the same environment, without needing to install anything.

The rest of this paper is structured as follows. In the “Results” section, we describe the technology of the AI Toolkit. In the “Discussion” and “Data Availability” sections, we describe two exemplary notebooks. The first is a tutorial introduction to the interactive querying and exploratory analysis of the NOMAD Archive data. The second demonstrates how publication-level materials science results<ref name=":2">{{Cite journal |last=Cao |first=Guohua |last2=Ouyang |first2=Runhai |last3=Ghiringhelli |first3=Luca M. |last4=Scheffler |first4=Matthias |last5=Liu |first5=Huijun |last6=Carbogno |first6=Christian |last7=Zhang |first7=Zhenyu |date=2020-03-23 |title=Artificial intelligence for high-throughput discovery of topological insulators: The example of alloyed tetradymites |url=https://link.aps.org/doi/10.1103/PhysRevMaterials.4.034204 |journal=Physical Review Materials |language=en |volume=4 |issue=3 |pages=034204 |doi=10.1103/PhysRevMaterials.4.034204 |issn=2475-9953}}</ref> can be reported while letting users work hands-on with the [[workflow]], by modifying the input parameters and observing the impact of their changes.

==Results==

===Technology===

We provide a user-friendly infrastructure for applying the latest AI developments and the most popular ML methods to materials science data. The NOMAD AI Toolkit aims at facilitating the deployment of sophisticated AI algorithms by means of an intuitive interface that is accessible from a webpage, thereby transferring AI-powered methodologies to materials science. The most recent advances in AI are usually available as software stored in web repositories. However, such software needs to be installed in a local environment, which requires specific bindings and environment variables. This installation can be a tedious process. It limits the diffusion of these computational methods and also makes published results harder to reproduce. The NOMAD AI Toolkit addresses this by providing the software, which we install and maintain, in an environment that is accessible directly from the web.

Docker<ref>{{Cite web |date=2022 |title=Docker |url=https://www.docker.com/ |publisher=Docker, Inc}}</ref> allows software to be installed in a container that is isolated from the host machine on which it runs. In the NOMAD AI Toolkit, we maintain such a container. We install therein the software that has been used to produce recently published results, and we take care of the versioning of all required packages. [[Jupyter Notebook]]s are then used inside the container to interact with the underlying computational engine. Interactions include executing code, displaying the results of computations, and writing comments or explanations in a markup language. We opted for Jupyter Notebooks because such interactivity is ideal for combining computation and the analysis of results in a single framework. The kernel of the notebooks, i.e., the computational engine that runs the code, is set to [[Python (programming language)|Python]]. Python supports scientific computing through the SciPy ecosystem. It is also highly extensible, because it allows the wrapping of code written in compiled languages such as C or C++. This technological infrastructure is built using JupyterHub<ref>{{Cite web |date=2022 |title=JupyterHub |url=https://jupyter.org/hub |publisher=Jupyter Software Steering Council}}</ref>. It deploys servers that are orchestrated by Kubernetes on computing facilities offered by the MPCDF in Garching, Germany. Users of the AI Toolkit can currently run their analyses on up to eight CPU cores, with up to 10 GB of RAM.
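
Every notebook in a shared container depends on the pinned package versions. It can therefore help to record, inside the notebook, the environment in which a result was produced. The following is a minimal sketch using only the Python standard library. The default package list is an arbitrary example, not the actual content of the NOMAD AI Toolkit image.

```python
import os
import platform
from importlib import metadata  # standard library since Python 3.8


def environment_report(packages=("numpy", "scipy", "scikit-learn")):
    """Collect the Python version, visible CPU count and package versions."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # not installed in this kernel
    return {
        "python": platform.python_version(),
        "cpu_count": os.cpu_count(),
        "packages": versions,
    }
```

Note that <code>os.cpu_count()</code> reports the CPUs visible to the process. Inside a container, this number can exceed the share actually granted (here, up to eight cores). The value should therefore be read as informational rather than as the allocation.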

A key feature of the NOMAD AI Toolkit is that users can create, modify, and store computational notebooks in which original AI workflows are developed. The “Get to work” button at https://nomad-lab.eu/aitoolkit redirects registered users to a personal space with 10 GB of [[Cloud computing|cloud storage]], where work can be saved. Jupyter Notebooks created inside the “work” directory in the user's personal space are stored on our servers and can be accessed and edited over time. These notebooks run in the NOMAD AI Toolkit environment, which means that all software and methods demonstrated in other tutorials can be deployed therein. The versatility of Jupyter Notebooks facilitates an interactive and instantaneous combination of different methods. This is useful if one aims at, e.g., combining methods available in the NOMAD AI Toolkit in an original manner, or at applying a specific algorithm to a dataset retrieved from the NOMAD Archive. If an original notebook developed in the “work” directory leads to a publication, it can then be added to the “Published results” section of the AI Toolkit.
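
The personal space offers 10 GB of storage. A notebook that writes intermediate results to the “work” directory may therefore want to check its usage. The following is a minimal sketch. Reading the quota as decimal gigabytes and the example path are assumptions, and the actual quota enforcement on the NOMAD servers is not modeled here.

```python
import os

QUOTA_BYTES = 10 * 10**9  # assumption: "10 GB" read as decimal gigabytes


def directory_size(path: str) -> int:
    """Sum the sizes of all regular files below `path`, skipping symlinks."""
    total = 0
    for root, _dirs, files in os.walk(path):  # does not follow symlinked dirs
        for name in files:
            full_path = os.path.join(root, name)
            if not os.path.islink(full_path):
                total += os.path.getsize(full_path)
    return total


def quota_fraction(path: str, quota: int = QUOTA_BYTES) -> float:
    """Fraction of the quota occupied by the files below `path`."""
    return directory_size(path) / quota
```

In a notebook, this might be called as <code>quota_fraction(os.path.expanduser("~/work"))</code>, where the path is a hypothetical location of the “work” directory.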

===Contributing===

The NOMAD AI Toolkit aims to promote reproducibility of published results. Researchers working in the field of AI applied to materials science are invited to share their software and install it in the NOMAD AI Toolkit. The shared software can be used in citeable Jupyter Notebooks, which are accessible online, to reproduce results that have been recently published in scientific journals. Sharing software and methods in a user-friendly infrastructure such as the NOMAD AI Toolkit can also promote the visibility of research and boost interdisciplinary collaborations.

All Jupyter Notebooks currently available in the NOMAD AI Toolkit are located in the same Docker container, which allows methods and pipelines to be transferred between different notebooks. This also means that all notebooks must use the same package versions. However, to facilitate a faster and more robust integration of external contributions to the NOMAD AI Toolkit, we allow the creation of separate Docker containers, which can have their own [[Version control|versioning]]. A separate Docker container minimizes the maintenance a notebook requires and spares it from further updates when, e.g., package versions are updated in the main Docker container.
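
A small check makes concrete the constraint that all notebooks in the main container share package versions. Given the pinned requirements of several notebooks, it lists the packages that could not coexist in one image. Such conflicts are what would motivate a separate container. The notebook names and version numbers below are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict


def version_conflicts(
    requirements: dict[str, dict[str, str]],
) -> dict[str, dict[str, list[str]]]:
    """Return {package: {version: [notebooks]}} for packages pinned to >1 version."""
    by_package: dict[str, dict[str, list[str]]] = defaultdict(lambda: defaultdict(list))
    for notebook, pins in requirements.items():
        for package, version in pins.items():
            by_package[package][version].append(notebook)
    return {
        package: dict(versions)
        for package, versions in by_package.items()
        if len(versions) > 1  # only packages that cannot share one image
    }


# Hypothetical pins for two notebooks
pins = {
    "notebook_a": {"numpy": "1.21.0", "scikit-learn": "0.24.2"},
    "notebook_b": {"numpy": "1.21.0", "scikit-learn": "1.0.2"},
}
conflicts = version_conflicts(pins)
# conflicts == {"scikit-learn": {"0.24.2": ["notebook_a"], "1.0.2": ["notebook_b"]}}
```

Here, <code>numpy</code> can be shared, but the two <code>scikit-learn</code> pins cannot be satisfied in a single image.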

Contributing to the NOMAD AI Toolkit is straightforward and involves the following steps:

*Data must be uploaded to the NOMAD Repository & Archive, either to the public server (https://nomad-lab.eu/prod/rae/gui/uploads) or to the local, self-contained variant.

*Software needs to be installed in the base image of the NOMAD AI Toolkit.

*The whole workflow of a (published) project, from importing the data to generating results, has to be placed in a Jupyter Notebook. The package(s) and notebook are then uploaded to a public GitLab repository (https://gitlab.mpcdf.mpg.de/nomad-lab/analytics), where the back-end code is stored.

*A DOI is generated for the notebook, which is versioned in GitLab. In the spirit of Cornell University’s arXiv.org, the DOI links to the latest version of the notebook, but all previous versions are maintained.
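
The versioning policy in the last step can be sketched as a small registry: a DOI resolves to the latest notebook version, while earlier versions stay retrievable. This is a hypothetical data structure for illustration only. It does not describe how GitLab or DOI registration is actually wired in NOMAD, and the DOI string and commit labels are placeholders.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class NotebookRecord:
    """Hypothetical registry entry: one DOI, many notebook versions."""

    doi: str
    versions: list[str] = field(default_factory=list)  # e.g. commit hashes, oldest first

    def add_version(self, ref: str) -> None:
        """Register a new version; earlier versions are kept, never overwritten."""
        self.versions.append(ref)

    def resolve(self, version: int | None = None) -> str:
        """Return the latest version by default, or the 1-based `version` if given."""
        if not self.versions:
            raise LookupError(f"no versions registered for {self.doi}")
        if version is None:
            return self.versions[-1]
        if not 1 <= version <= len(self.versions):
            raise IndexError(f"{self.doi} has no version {version}")
        return self.versions[version - 1]


record = NotebookRecord("10.0000/placeholder-notebook")  # placeholder DOI
record.add_version("commit-1")
record.add_version("commit-2")
```

Calling <code>record.resolve()</code> returns the newest version, while <code>record.resolve(1)</code> still reaches the first one.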

Researchers interested in contributing to the NOMAD AI Toolkit are invited to contact us for further details.

===Data management policy===

For maintenance reasons, NOMAD keeps anonymous access logs of API calls for a limited time. These logs are not associated with NOMAD users; in fact, users do not need to authenticate to use the NOMAD APIs. We also note that the query commands used to extract the data analyzed in a given notebook are part of the notebook itself and are hence stored. This guarantees the reproducibility of the AI analysis, as the same query commands will always yield the same outcome, e.g., the same data points for the AI analysis. Publicly shared notebooks on the AI Toolkit platform are required to adopt the Apache License, Version 2.0. Finally, we note that the overall NOMAD infrastructure, including the AI Toolkit, will be maintained for at least 10 years after the last data upload.
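
Because the query commands live inside the notebook, a derived dataset can be tied to the exact query that produced it. One simple way is to store a fingerprint of the query next to the results. The following is a minimal sketch. Hashing the query is our illustration, not a feature of the NOMAD API, and the query dictionaries are made-up examples.

```python
import hashlib
import json


def query_fingerprint(query: dict) -> str:
    """SHA-256 of the query serialized with sorted keys (key order is irrelevant)."""
    canonical = json.dumps(query, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Two spellings of the same (made-up) query give the same fingerprint.
q1 = {"program": "VASP", "n_elements": 3}
q2 = {"n_elements": 3, "program": "VASP"}
```

The fingerprint identifies the query itself. Storing it together with, e.g., the retrieval date and the number of returned entries documents precisely what was analyzed.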

===AI Toolkit app===

In addition to the web-based toolkit, we also maintain an app that allows the NOMAD AI Toolkit environment<ref>{{Cite web |date=2022 |title=NOMAD AI-Toolkit App |url=https://gitlab.mpcdf.mpg.de/nomad-lab/aitoolkit-app |publisher=NOMAD Laboratory}}</ref> to be deployed on a local machine. This app employs the same graphical user interface (GUI) as the online version; in particular, the user accesses it via a normal web browser. However, the browser does not need access to the web and can therefore run behind firewalls. Software and methods installed in the NOMAD AI Toolkit then use the user's own computational resources. This can be useful when calculations are particularly demanding, and also when AI methods are applied to private data that should not be exposed to the web. Through the local app, both the data on the NOMAD server and locally stored data can be accessed. The latter access is supported by NOMAD OASIS, the stand-alone version of the NOMAD infrastructure.<ref>{{Cite web |date=2022 |title=Operating an OASIS |work=NOMAD Documentation |url=https://nomad-lab.eu/prod/v1/docs/oasis/install.html |publisher=NOMAD Laboratory}}</ref>
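
With the local app, the same analysis code can target either the public NOMAD server or a local NOMAD OASIS. One way to keep notebooks portable is to read the API root from configuration. In the following sketch, the public URL reflects our understanding of the NOMAD v1 API and should be checked against the NOMAD documentation. The OASIS address and the environment-variable name <code>NOMAD_API_BASE</code> are hypothetical and should be adapted to the local installation.

```python
import os

# Assumed API roots; verify against your NOMAD / OASIS installation.
PUBLIC_API = "https://nomad-lab.eu/prod/v1/api/v1"
LOCAL_OASIS_API = "http://localhost/nomad-oasis/api/v1"


def api_base(use_local: bool = False) -> str:
    """Pick the API root; the hypothetical NOMAD_API_BASE variable overrides both."""
    override = os.environ.get("NOMAD_API_BASE")
    if override:
        return override.rstrip("/")
    return LOCAL_OASIS_API if use_local else PUBLIC_API
```

Keeping the choice in one function means that a notebook developed against the public server can be rerun on private OASIS data by changing a single setting.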

===Querying the NOMAD Archive and performing AI modeling on retrieved data===

The NOMAD AI Toolkit features the tutorial notebook “Querying the archive and performing artificial intelligence modeling”<ref name=":3">{{Cite web |last=Sbailò, L.; Scheffler, M.; Ghiringhelli, L.M. |date=14 April 2021 |title=query_nomad_archive |url=https://nomad-lab.eu/aitutorials/query_nomad_archive |publisher=NOMAD Laboratory}}</ref> (also accessible from the “Query the archive” button at https://nomad-lab.eu/aitoolkit), which demonstrates all the steps required to perform AI analysis on data stored in the NOMAD Archive. These steps are: (i) querying the data via the RESTful API (see below), which is built on the NOMAD Metainfo; (ii) loading the needed AI packages, including the library of features used to fingerprint the data points (materials) in the AI analysis; and (iii) performing the AI training and [[Data visualization|visualizing]] the results.

The NOMAD Laboratory has developed the NOMAD Python package, which includes a client module for querying the Archive through the NOMAD API. All functionalities of the NOMAD Repository & Archive are offered through a RESTful API, i.e., an API that uses HTTP methods to access data. In other words, each item in the Archive (typically a JSON data file) is reachable via a URL that can be opened in any web browser.
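
To make the RESTful access concrete, here is a minimal sketch, using only the Python standard library, of how a query similar to the one in the example notebook could be packaged as an HTTP POST request. The endpoint URL and the quantity names (<tt>results.material.elements</tt>, <tt>results.material.n_elements</tt>, <tt>results.method.simulation.program_name</tt>, <tt>results.method.simulation.dft.xc_functional_type</tt>) are assumptions modeled on the public NOMAD v1 API, not details taken from this paper. The geometry-convergence criterion used in the notebook is omitted, and the request is only built, not sent.

```python
import json
import urllib.request

# Assumed endpoint of the public NOMAD v1 API; verify against the API docs.
NOMAD_QUERY_URL = "https://nomad-lab.eu/prod/v1/api/v1/entries/query"


def build_ternary_oxide_query(page_size: int = 100) -> dict:
    """Request body for oxygen-containing ternaries computed with VASP and a GGA functional.

    The quantity names are assumptions modeled on the NOMAD v1 "results" section.
    The geometry-convergence criterion of the notebook is deliberately left out.
    """
    return {
        "query": {
            "results.material.elements": {"all": ["O"]},  # must contain oxygen
            "results.material.n_elements": 3,  # ternary compounds only
            "results.method.simulation.program_name": "VASP",
            "results.method.simulation.dft.xc_functional_type": "GGA",
        },
        "pagination": {"page_size": page_size},
    }


def make_request(body: dict, url: str = NOMAD_QUERY_URL) -> urllib.request.Request:
    """Wrap the JSON body in an HTTP POST request; nothing is sent here."""
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = make_request(build_ternary_oxide_query(page_size=10))
print(req.get_method(), req.full_url)
# With network access, the response could be read via:
# json.load(urllib.request.urlopen(req))
```

Because the body is plain JSON, the same query can be stored verbatim in a notebook. This is what allows the retrieval step to be re-run exactly.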

In the example notebook<ref name=":3" />, we use the NOMAD Python client library to retrieve ternary compounds containing oxygen. We also require that the ''ab initio'' calculations were carried out with the VASP code, using exchange-correlation (xc) functionals from the generalized gradient approximation (GGA) family. In addition, to ensure that the calculations are converged, we require that the energy difference during the geometry optimization has converged. As of April 2022, this query retrieves almost 8,000 entries, which are the results of simulations carried out at different laboratories. We emphasize that this notebook shows how data of heterogeneous origin can be used consistently for ML analyses.

Here, the target property is the atomic density, obtained from a geometrically converged DFT calculation. The client module in the NOMAD Python package establishes the client-server connection in a so-called lazy manner, i.e., the data are not fetched all at once, but through an iterative query. Entries are then retrieved iteratively, and each entry gives access to the data and metadata of the uploaded simulation results. In this example, the queried materials are composed of three different elements, one of which is required to be oxygen. From each entry of the query, we retrieve the converged value of the atomic density and the names and stoichiometric ratio of the other two chemical elements. During the query, we use the atomic features library (see below) to add further atomic features to the dataframe built from the retrieved data. Before discussing the actual analysis performed in the notebook, let us briefly comment on the NOMAD Metainfo and the libraries of input (atomic) features.
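
The lazy, iterative retrieval described above can be illustrated with a generator that requests a new page of results only after the previous page has been consumed. This is a minimal sketch, not the NOMAD client's implementation. The page format (a list of entries plus a cursor), the entry fields <tt>composition</tt> and <tt>atomic_density</tt>, and the helper names are hypothetical, and a fake two-page server stands in for the real API.

```python
from typing import Callable, Dict, Iterator, List, Optional, Tuple

# A page is (entries, cursor of the next page); the cursor is None on the last page.
Page = Tuple[List[dict], Optional[str]]


def iterate_entries(fetch_page: Callable[[Optional[str]], Page]) -> Iterator[dict]:
    """Yield entries lazily: a new page is requested only after the previous one is exhausted."""
    cursor: Optional[str] = None
    while True:
        entries, cursor = fetch_page(cursor)
        yield from entries
        if cursor is None:
            return


def to_row(entry: dict) -> Dict[str, object]:
    """Keep the target and the two non-oxygen species with their stoichiometric ratio."""
    counts = entry["composition"]  # hypothetical field, e.g. {"Sr": 1, "Ti": 1, "O": 3}
    a, b = sorted(el for el in counts if el != "O")  # exactly two for ternary oxides
    return {
        "A": a,
        "B": b,
        "ratio_A_B": counts[a] / counts[b],
        "atomic_density": entry["atomic_density"],  # hypothetical field name
    }


# Fake two-page "server" with toy values (not real data).
FAKE_PAGES: Dict[Optional[str], Page] = {
    None: ([{"composition": {"Sr": 1, "Ti": 1, "O": 3}, "atomic_density": 0.1}], "page-2"),
    "page-2": ([{"composition": {"Mg": 2, "Si": 1, "O": 4}, "atomic_density": 0.2}], None),
}

rows = [to_row(entry) for entry in iterate_entries(lambda cursor: FAKE_PAGES[cursor])]
print(rows[1])  # → {'A': 'Mg', 'B': 'Si', 'ratio_A_B': 2.0, 'atomic_density': 0.2}
```

Because <tt>iterate_entries</tt> is a generator, a consumer that stops early never triggers requests for the remaining pages.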

===NOMAD Metainfo===

The NOMAD API has access to the data in the NOMAD Archive, which are organized by means of the NOMAD Metainfo.<ref name=":0" /><ref>{{Cite journal |last=Ghiringhelli |first=Luca M. |last2=Baldauf |first2=Carsten |last3=Bereau |first3=Tristan |last4=Brockhauser |first4=Sandor |last5=Carbogno |first5=Christian |last6=Chamanara |first6=Javad |last7=Cozzini |first7=Stefano |last8=Curtarolo |first8=Stefano |last9=Draxl |first9=Claudia |last10=Dwaraknath |first10=Shyam |last11=Fekete |first11=Ádám |date=2022 |title=Shared Metadata for Data-Centric Materials Science |url=https://arxiv.org/abs/2205.14774 |doi=10.48550/ARXIV.2205.14774}}</ref> Here, we only mention that it is a hierarchical and modular schema, in which each piece of information contained in an input/output file of an atomistic simulation code has its own metadata entry. The metadata are organized in sections (akin to tables in a relational database) such as "System," containing information on the geometry and composition of the simulated system, and "Method," containing information on the physical model (e.g., type of xc functional, type of relativistic treatment, and basis set). Crucially, each item in any section (i.e., a column, in the relational database analogy, where each data object is a row) has a unique name. Such a name (e.g., “atoms,” which is a list of the atomic symbols of all chemical species present in a simulation cell) is associated with values that can be searched via the API. In practice, one can search for all compounds containing oxygen by specifying <tt>query={'atoms': ['O']}</tt> as the argument of the <tt>query_archive()</tt> function, which is the backbone of the NOMAD API.
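
The search semantics of a query such as <tt>query={'atoms': ['O']}</tt> can be mimicked on in-memory records. The sketch below assumes, for illustration only, that a list value means "all of these species must be present" and that any other value must match exactly. The function <tt>matches</tt> and the <tt>code</tt> field are hypothetical and do not reproduce the server-side implementation.

```python
from typing import Any, Dict


def matches(entry: Dict[str, Any], query: Dict[str, Any]) -> bool:
    """True if the entry satisfies every key of the query.

    Assumed semantics, for illustration only: a list value means "the entry's list
    must contain all of these items"; any other value must match exactly.
    """
    for key, wanted in query.items():
        value = entry.get(key)
        if isinstance(wanted, list):
            if not isinstance(value, list) or not set(wanted) <= set(value):
                return False
        elif value != wanted:
            return False
    return True


entries = [
    {"atoms": ["Sr", "Ti", "O", "O", "O"], "code": "VASP"},
    {"atoms": ["Ga", "As"], "code": "VASP"},
    {"atoms": ["Mg", "O"], "code": "FHI-aims"},
]
oxides = [e for e in entries if matches(e, {"atoms": ["O"]})]
print(len(oxides))  # → 2
```

Adding a second key, e.g. <tt>{"atoms": ["O"], "code": "VASP"}</tt>, narrows the result further. This mirrors how several named Metainfo quantities can be combined in one query.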

===Libraries of input features===

Together with the materials data, the other important ingredient of an AI analysis is the representation of each data point. A possible choice, useful for exploratory analysis but also for training predictive models, is to represent the atoms in the simulation cell by means of their periodic-table properties (also called atomic features), e.g., atomic number, row and column in the periodic table, ionic or covalent radii, and electronegativity. To facilitate access to these features, we maintain the <tt>atomic_collections</tt> library, containing features for all atoms in the periodic table (up to ''Z'' = 100), calculated via DFT with a selection of xc functionals. Furthermore, we have also installed the MATMINER package<ref>{{Cite journal |last=Ward |first=Logan |last2=Dunn |first2=Alexander |last3=Faghaninia |first3=Alireza |last4=Zimmermann |first4=Nils E.R. |last5=Bajaj |first5=Saurabh |last6=Wang |first6=Qi |last7=Montoya |first7=Joseph |last8=Chen |first8=Jiming |last9=Bystrom |first9=Kyle |last10=Dylla |first10=Maxwell |last11=Chard |first11=Kyle |date=2018-09 |title=Matminer: An open source toolkit for materials data mining |url=https://linkinghub.elsevier.com/retrieve/pii/S0927025618303252 |journal=Computational Materials Science |language=en |volume=152 |pages=60–69 |doi=10.1016/j.commatsci.2018.05.018}}</ref>, a recently introduced, rich library of atomic properties from calculations and experiment. In this way, all atomic properties defined in the various sources are available within the toolkit environment.
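
As an illustration of representing data points by atomic features, the sketch below maps the two non-oxygen species of a ternary oxide to a vector of their atomic numbers and Pauling electronegativities. The small lookup table is a hypothetical stand-in for <tt>atomic_collections</tt> or MATMINER, whose actual interfaces are not shown here. The listed values are standard tabulated ones.

```python
from typing import Dict, List

# Stand-in excerpt of periodic-table properties: atomic number Z and Pauling
# electronegativity chi (standard tabulated values). In the AI Toolkit such data
# would come from a feature library; this dict is only a placeholder for it.
ATOMIC_FEATURES: Dict[str, Dict[str, float]] = {
    "O": {"Z": 8, "chi": 3.44},
    "Mg": {"Z": 12, "chi": 1.31},
    "Si": {"Z": 14, "chi": 1.90},
    "Ti": {"Z": 22, "chi": 1.54},
    "Sr": {"Z": 38, "chi": 0.95},
}
FEATURE_NAMES = ["Z", "chi"]


def featurize(a: str, b: str) -> List[float]:
    """Represent a ternary oxide A-B-O by the atomic features of A and B, in that order."""
    return [float(ATOMIC_FEATURES[el][name]) for el in (a, b) for name in FEATURE_NAMES]


print(featurize("Sr", "Ti"))  # → [38.0, 0.95, 22.0, 1.54]
```

Concatenating such vectors with the retrieved stoichiometric ratio gives one row per material. This is a representation of the kind on which the clustering discussed next operates.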

===Example of exploratory analysis: Clustering===

We now proceed with the discussion of the showcase notebook, which performs an unsupervised learning analysis called "clustering." The evolved human ability to recognize patterns in empirical data has led to the most diverse scientific findings, from Kepler’s laws to the Lorenz attractor. However, finding patterns in high-dimensional data requires automated tools. Here, we would like to understand whether the data retrieved from the NOMAD Archive can be grouped into clusters, i.e., subsets of data points that share a similar representation. Data points within the same cluster are similar to each other while being different from data points belonging to other clusters. The notion of similarity in this unsupervised learning task is strictly tied to the representation of the data, here a set of atomic properties of the material's constituents.

A plethora of clustering algorithms has been developed over the years, each suited to different applications (see, e.g., our tutorial notebook introducing the most popular clustering algorithms<ref>{{Cite web |last=Sbailò, L.; Ghiringhelli, L. M. |date=21 January 2021 |title=clustering_tutorial |url=https://nomad-lab.eu/aitutorials/clustering_tutorial |publisher=NOMAD Laboratory}}</ref>). Among the various algorithms currently available, we chose a recent one, briefly outlined below, that stands out for its simplicity, the quality of its results, and its robustness.

The clustering algorithm employed in this notebook is hierarchical density-based spatial clustering of applications with noise (HDBSCAN)<ref>{{Cite journal |last=McInnes |first=Leland |last2=Healy |first2=John |last3=Astels |first3=Steve |date=2017-03-21 |title=hdbscan: Hierarchical density based clustering |url=http://joss.theoj.org/papers/10.21105/joss.00205 |journal=The Journal of Open Source Software |volume=2 |issue=11 |pages=205 |doi=10.21105/joss.00205 |issn=2475-9066}}</ref>, a recent extension of the popular DBSCAN algorithm.<ref>{{Cite journal |last=Ester, M.; Kriegel, H.-P.; Sander, J. |year=1996 |title=A Density-Based Algorithm for Discovering Clusters in Large Spatial Databases with Noise |url=https://dl.acm.org/doi/10.5555/3001460.3001507 |journal=Proceedings of the Second International Conference on Knowledge Discovery and Data Mining |pages=226–31 |doi=10.5555/3001460.3001507}}</ref> As a density-based algorithm, HDBSCAN relies on the idea that clusters are islands of high-density points separated by a sea of low-density points. The data points in the low-density regions are labeled as "outliers" and are not assigned to any cluster. Outlier identification is at the core of the HDBSCAN algorithm, which uses the mutual reachability distance, i.e., a specific distance metric that distorts the space so as to “push” outliers away from the high-density regions.
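
The mutual reachability distance used by HDBSCAN is defined, following McInnes ''et al.'', as d<sub>mreach</sub>(''a'', ''b'') = max{core<sub>''k''</sub>(''a''), core<sub>''k''</sub>(''b''), ''d''(''a'', ''b'')}, where core<sub>''k''</sub>(''x'') is the distance from ''x'' to its ''k''-th nearest neighbor. A point in a sparse region has a large core distance, so all of its mutual reachability distances are inflated. This is what "pushes" outliers away. The minimal pure-Python sketch below computes this matrix. Here the core distance excludes the point itself, a convention that may differ from library implementations.

```python
import math
from typing import List, Sequence

Point = Sequence[float]


def core_distance(points: List[Point], i: int, k: int) -> float:
    """Distance from points[i] to its k-th nearest *other* point (k >= 1)."""
    dists = sorted(math.dist(points[i], p) for j, p in enumerate(points) if j != i)
    return dists[k - 1]


def mutual_reachability(points: List[Point], k: int) -> List[List[float]]:
    """Matrix of d_mreach(a, b) = max(core_k(a), core_k(b), d(a, b)); zero on the diagonal."""
    n = len(points)
    core = [core_distance(points, i, k) for i in range(n)]
    return [
        [
            0.0 if a == b else max(core[a], core[b], math.dist(points[a], points[b]))
            for b in range(n)
        ]
        for a in range(n)
    ]


# Three nearby points and one distant outlier.
pts = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (10.0, 10.0)]
M = mutual_reachability(pts, k=2)
# Within the dense group, M[0][1] is raised from 1.0 to core_2(point 1) = sqrt(2);
# every distance involving the outlier is at least its own large core distance.
```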

Defining clusters is somewhat subtle, as many different partitions of the data can be acceptable. One of the main challenges is posed by nested clusters, where it is not always obvious whether a relatively large cluster should be decomposed into several subclusters or kept as a single supercluster. The HDBSCAN algorithm performs a hierarchical exploration that evaluates possible subdivisions of the data into clusters. Initially, for large values of the distance threshold, there is only one large cluster that includes all points. As the threshold is lowered, this cluster can eventually split into smaller subclusters. The algorithm automatically decides whether to split a supercluster, based on how robust the new subclusters would be with respect to further divisions. If, for example, a cluster division would shortly be followed by many further splits as the threshold distance is lowered, then the larger supercluster is kept. If, instead, the subclusters do not immediately undergo further subdivisions, they are selected in place of the large supercluster.
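
The threshold sweep described above can be sketched with the single-linkage hierarchy that underlies HDBSCAN. First, build a minimum spanning tree (here with Prim's algorithm) over a distance matrix. Then count the connected components that remain after cutting every edge longer than the threshold. This is a simplified illustration under stated assumptions. It uses plain Euclidean distances on a one-dimensional toy data set, whereas HDBSCAN uses mutual reachability distances, condenses the tree with a minimum cluster size, and selects clusters by their stability. None of those steps is shown.

```python
from typing import List, Tuple

Edge = Tuple[float, int, int]  # (weight, i, j)


def minimum_spanning_tree(dist: List[List[float]]) -> List[Edge]:
    """Prim's algorithm on a dense, symmetric distance matrix."""
    n = len(dist)
    in_tree = [False] * n
    best = [float("inf")] * n  # cheapest known link from each node to the tree
    parent = [-1] * n
    best[0] = 0.0
    edges: List[Edge] = []
    for _ in range(n):
        u = min((i for i in range(n) if not in_tree[i]), key=lambda i: best[i])
        in_tree[u] = True
        if parent[u] >= 0:
            edges.append((best[u], parent[u], u))
        for v in range(n):
            if not in_tree[v] and dist[u][v] < best[v]:
                best[v] = dist[u][v]
                parent[v] = u
    return edges


def n_clusters(edges: List[Edge], n: int, threshold: float) -> int:
    """Connected components left after cutting all tree edges longer than threshold."""
    root = list(range(n))

    def find(x: int) -> int:
        while root[x] != x:
            root[x] = root[root[x]]  # path halving
            x = root[x]
        return x

    components = n
    for w, i, j in edges:
        if w <= threshold:
            ri, rj = find(i), find(j)
            if ri != rj:
                root[ri] = rj
                components -= 1
    return components


# One-dimensional toy data: two groups and an isolated point.
xs = [0.0, 1.0, 2.0, 10.0, 11.0, 12.0, 13.0, 40.0]
dist = [[abs(a - b) for b in xs] for a in xs]
tree = minimum_spanning_tree(dist)
for t in (30.0, 10.0, 5.0, 0.5):
    print(t, n_clusters(tree, len(xs), t))
```

Lowering the threshold from 30 to 0.5 takes the toy data from one cluster to two, then three, and finally eight singletons. This cascade of splits is what HDBSCAN examines when it weighs a supercluster against its subclusters.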

===Dimension reduction: the Visualizer===

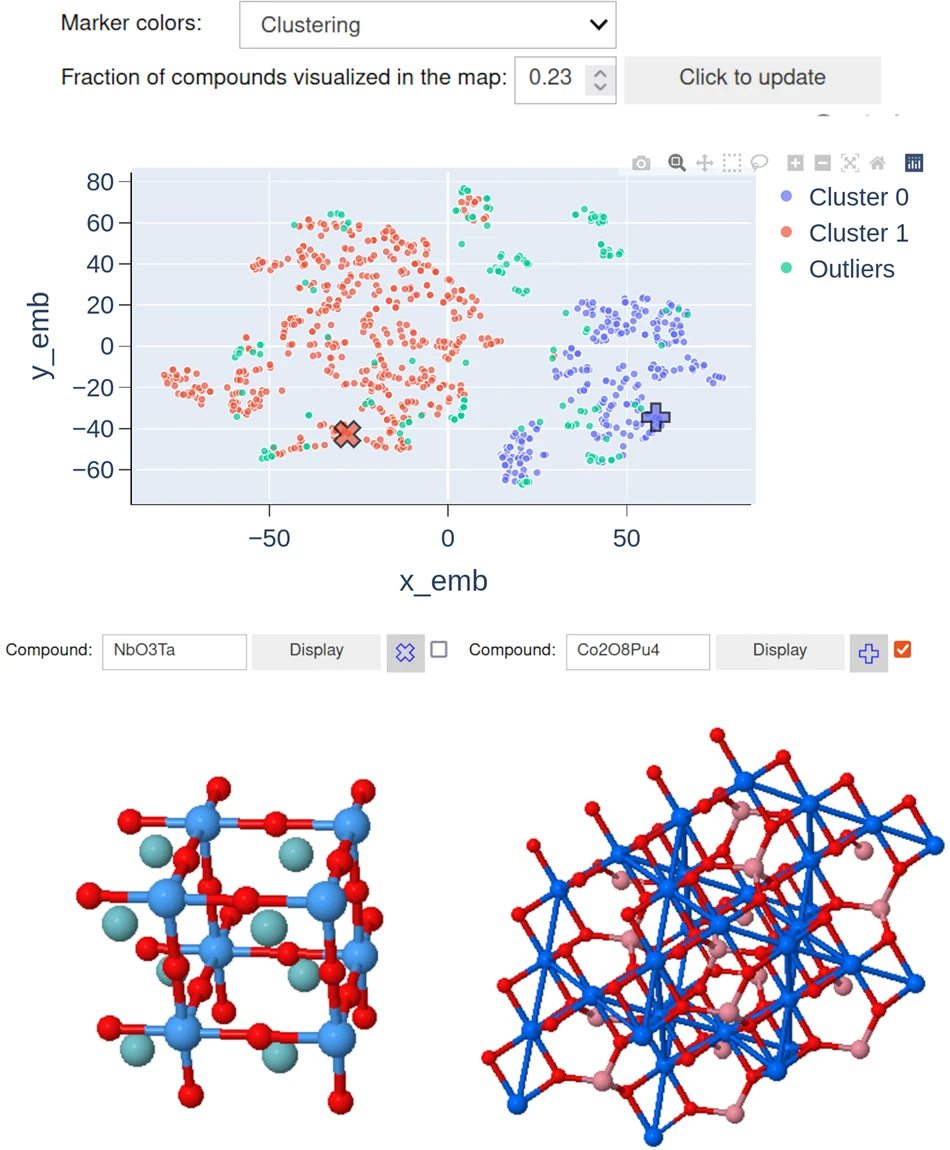

The NOMAD AI Toolkit also comes with Visualizer, a package that allows a straightforward analysis of tabulated data containing materials structures and is optimized for data retrieved from the NOMAD Archive. Visualizer is built on the PLOTLY package<ref>{{Cite web |date=2022 |title=Plotly |url=https://plotly.com/ |publisher=Plotly Technologies, Inc}}</ref>, which allows the creation of interactive maps, whose usability is further improved with ipywidgets. An example is shown in Fig. 2. The map shows, in distinct colors, different clusters of materials that were embedded into a two-dimensional plane using the dimension-reduction algorithm t-SNE.<ref>{{Cite journal |last=van der Maaten, L.; Hinton, G. |year=2008 |title=Visualizing Data using t-SNE |url=https://jmlr.org/papers/v9/vandermaaten08a.html |journal=Journal of Machine Learning Research |volume=9 |issue=86 |pages=2579–2605}}</ref> Note that the axes of this embedding have no intrinsic meaning and cannot be expressed as a global function of the features spanning the original space. This embedding algorithm, like many nonlinear embedding algorithms, finds a low-dimensional representation that approximately preserves the local neighborhood of each data point (i.e., points that are close in the original space remain close in the embedding). This makes it possible to visualize clusters of points in a two-dimensional plot.
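
For reference, t-SNE (van der Maaten and Hinton) works in three steps. It first converts distances between the original feature vectors ''x''<sub>''i''</sub> of the ''N'' data points into neighborhood probabilities with Gaussian kernels, whose widths σ<sub>''i''</sub> are tuned to a user-chosen perplexity. It then models the corresponding similarities between the embedded points ''y''<sub>''i''</sub> with a heavy-tailed Student-t kernel. Finally, it positions the ''y''<sub>''i''</sub> by minimizing the Kullback–Leibler divergence between the two distributions:

```latex
p_{j\mid i} = \frac{\exp\!\left(-\lVert x_i - x_j \rVert^2 / 2\sigma_i^2\right)}
                   {\sum_{k \neq i} \exp\!\left(-\lVert x_i - x_k \rVert^2 / 2\sigma_i^2\right)},
\qquad
p_{ij} = \frac{p_{j\mid i} + p_{i\mid j}}{2N},
\\[6pt]
q_{ij} = \frac{\left(1 + \lVert y_i - y_j \rVert^2\right)^{-1}}
              {\sum_{k \neq l} \left(1 + \lVert y_k - y_l \rVert^2\right)^{-1}},
\qquad
C = \mathrm{KL}(P \,\Vert\, Q) = \sum_{i \neq j} p_{ij} \log \frac{p_{ij}}{q_{ij}}.
```

Because only these relative similarities enter the cost, the embedding axes carry no physical meaning. Distances between well-separated clusters should therefore not be read quantitatively.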

[[File:Fig2 Sbailò npjCompMat22 8.png|700px]]

{{clear}}

{|

| style="vertical-align:top;" |

{| border="0" cellpadding="5" cellspacing="0" width="700px"

|-

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 2.''' Snapshot of Visualizer in the “Querying the Archive and performing artificial intelligence modeling” notebook. The two-dimensional map makes it possible to identify subsets (in AI nomenclature: clusters) of materials with similar properties. Two windows at the bottom of the map allow viewing the structures of the compounds in the map. Clicking a point shows the structure of the selected material. Ticking the box on top of the windows selects which of the two windows is used for the next visualization. The two windows have different types of symbols (here, crosses) to mark the position on the map. It is also possible to choose a specific material in the "Compound" text box to display its structure and its position on the map, which is then labeled with a cross. In this figure, two compounds are visualized, and their positions on the map can be spotted.</blockquote>

|-

|}

|}

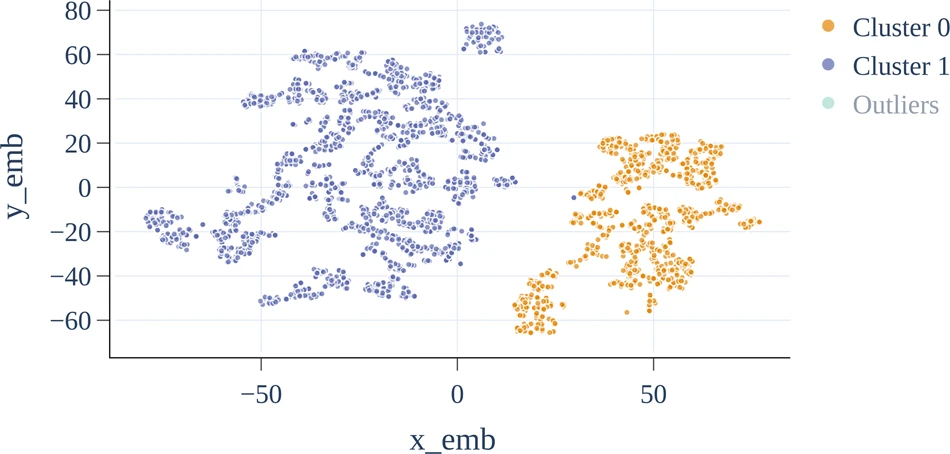

Clicking on any of the points in the map displays the atomic structure of the material in one of the windows at the bottom of the map. The position of the displayed compound is marked with a cross on the map. There are two display windows to facilitate the comparison of different structures, and the window for the next visualization is selected with a tick box on top of Visualizer. Alternatively, after a compound is selected in the “Compound” text box, clicking “Display” shows the structure of the material and its position on the map. We also provide some plotting utilities to generate high-quality plots (see Fig. 3). Controls for fine-tuning the print quality and appearance are displayed by clicking the “For a high-quality print …” button.

[[File:Fig3 Sbailò npjCompMat22 8.png|700px]]

{{clear}}

{|

| style="vertical-align:top;" |

{| border="0" cellpadding="5" cellspacing="0" width="700px"

|-

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 3.''' An example of a high-quality plot that can be produced using Visualizer. The “Toggle on/off plot appearance utils” button displays a number of controls that can be used to modify and generate the plots. It is possible to change the resolution, file format, color palette of the markers, text format and size, and marker size.</blockquote>

|-

|}

|}

===Discovery of new topological insulators: Application of SISSO to alloyed tetradymites===

As a second, complementary example, we discuss a notebook that addresses an analysis of topological semiconductors.<ref name=":2" /> The employed AI method is SISSO (sure independence screening and sparsifying operator<ref name=":1" />), which combines symbolic regression with compressed sensing. In practice, for a given target property of a class of materials, SISSO identifies a low-dimensional descriptor out of a huge number of candidates (billions, or more). The candidate descriptors, the materials genes, are constructed as algebraic expressions by combining mathematical operators (e.g., sums, products, exponentials, powers) with basic physical quantities, called primary features. These features are properties of the materials, or of their constituents (e.g., the atomic species in the material’s composition), that are (much) easier to evaluate (or measure) than the target property. The target property is then modeled using the SISSO-selected features as input, through a mathematical relationship that is also identified by SISSO. In the work of Cao ''et al.''<ref name=":2" />, the materials property of interest was the classification of materials into topological vs. trivial insulators.
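
The two ingredients named above, building a space of candidate features from primary features and screening it, can be illustrated with a toy sketch. It performs a single round of pairwise combinations with +, −, ×, ÷. It then applies sure independence screening, i.e., it keeps the candidates with the largest absolute Pearson correlation with a continuous target. This is a simplification made for illustration. Actual SISSO applies many operators over several rounds and follows the screening with a sparsifying (ℓ<sub>0</sub>) search for the best low-dimensional descriptor. In its classification variant, used for the tetradymites, it scores descriptors by how much the class convex hulls overlap rather than by correlation. The feature names and toy data are hypothetical.

```python
import itertools
import math
from typing import Dict, List, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient (0.0 for constant inputs)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy) if sxx > 0 and syy > 0 else 0.0


def build_feature_space(primary: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """Primary features plus one round of pairwise +, -, *, / combinations."""
    space = dict(primary)
    for (na, a), (nb, b) in itertools.combinations(primary.items(), 2):
        space[f"({na}+{nb})"] = [u + v for u, v in zip(a, b)]
        space[f"({na}-{nb})"] = [u - v for u, v in zip(a, b)]  # (b-a) only flips the sign
        space[f"({na}*{nb})"] = [u * v for u, v in zip(a, b)]
        if all(v != 0 for v in b):
            space[f"({na}/{nb})"] = [u / v for u, v in zip(a, b)]
        if all(u != 0 for u in a):
            space[f"({nb}/{na})"] = [v / u for u, v in zip(a, b)]
    return space


def sure_independence_screening(
    space: Dict[str, List[float]], target: Sequence[float], k: int
) -> List[Tuple[str, float]]:
    """Keep the k candidates with the largest |Pearson r| against the target."""
    scored = [(name, abs(pearson(values, target))) for name, values in space.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Toy primary features and a target that is secretly x1 * x2.
primary = {
    "x1": [1.0, 2.0, 3.0, 4.0, 5.0],
    "x2": [2.0, 1.0, 4.0, 3.0, 5.0],
    "x3": [5.0, 3.0, 1.0, 4.0, 2.0],
}
target = [a * b for a, b in zip(primary["x1"], primary["x2"])]
for name, score in sure_independence_screening(build_feature_space(primary), target, k=3):
    print(f"{name}: |r| = {score:.3f}")
```

The screening recovers <tt>(x1*x2)</tt> as the top candidate. In real applications, the feature space is far too large to fit a model on every candidate, which is why screening precedes the sparse search.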

The addressed class of materials was the tetradymite family, i.e., materials with the general chemical formula ''AB-LMN'', where the cations ''A'', ''B'' ∈ {As, Sb, Bi} and the anions ''L'', ''M'', ''N'' ∈ {S, Se, Te}, with trigonal (R3m) symmetry. Some of these materials are known to be topological insulators. The data-driven task was to predict, from their formula alone, the classification into topological vs. trivial insulators of all possible such materials, using as training data a set of 152 tetradymites for which the topological invariant ''Z''<sub>2</sub> was calculated via DFT for the optimized geometries.

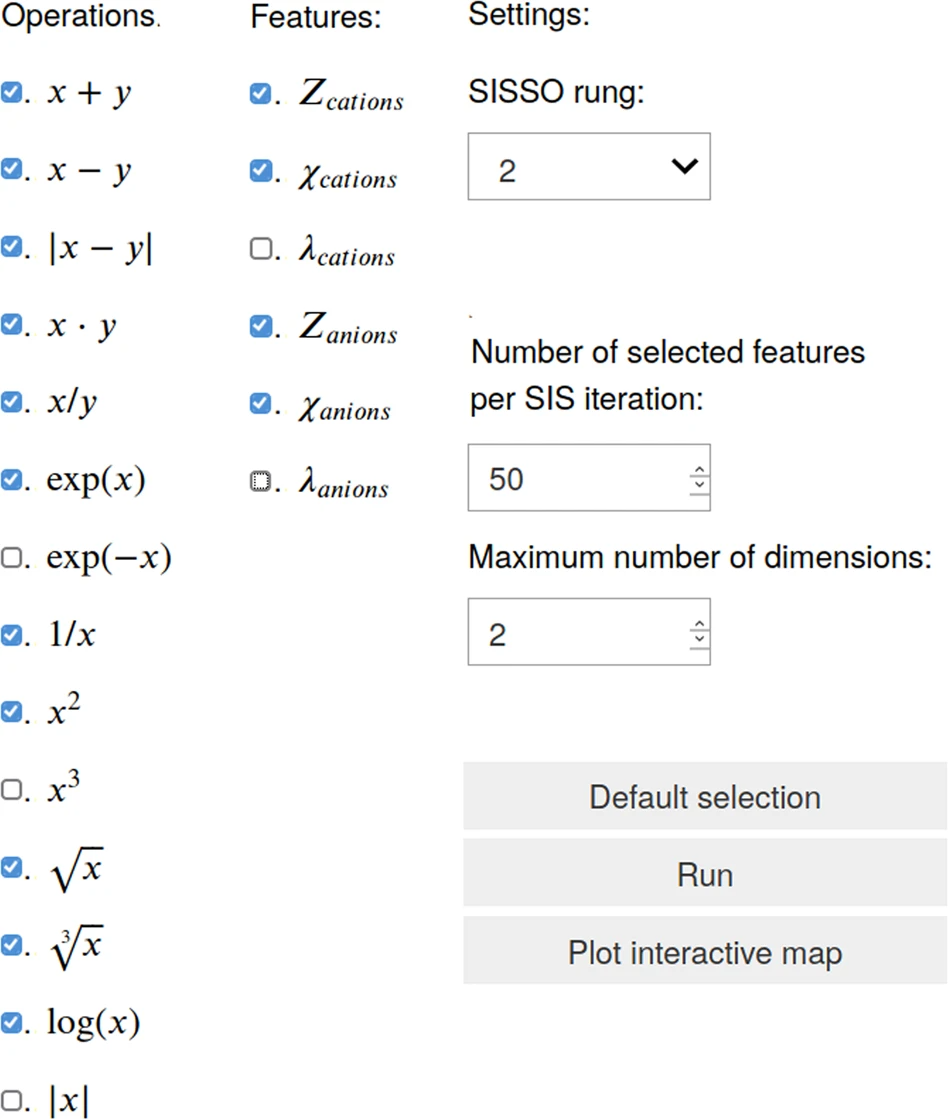

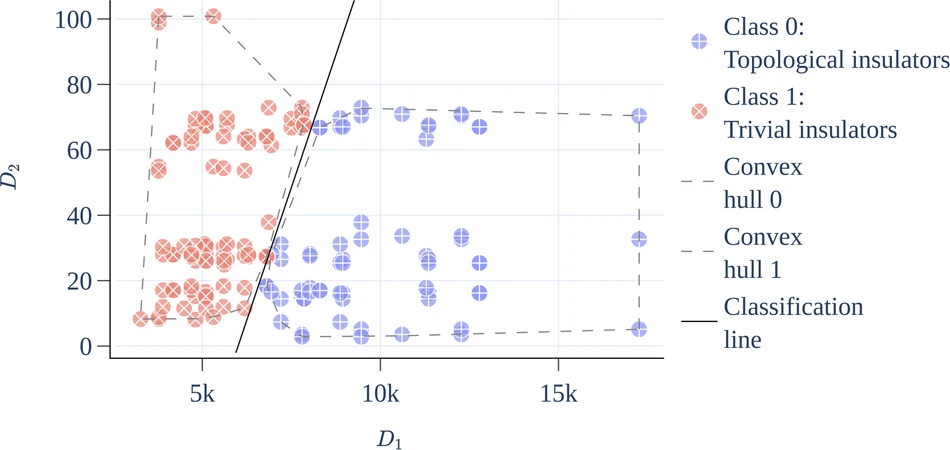

In the notebook “Discovery of new topological insulators in alloyed tetradymites”<ref name=":4">{{Cite web |last=Sbailò, L.; Purcell, T.A.R.; Ghiringhelli, L.M. et al. |date=15 September 2020 |title=tetradymite_PRM2020 |url=https://nomad-lab.eu/aitutorials/tetradymite_prm2020 |publisher=NOMAD Laboratory}}</ref>, we invite the user to interactively reproduce the results of Cao ''et al.''<ref name=":2" />, namely the materials property map shown in Fig. 5. The map is obtained within the notebook after selecting, as input settings, the same primary features and other SISSO parameters as used for the publication. In Figure 4, we show a snapshot of the input widget, where users can select features, operators, and SISSO parameters according to their preference and test alternative results. When the user clicks “Run,” the SISSO code runs within the container created for that user on the NOMAD server. In the notebook, the map shown in Fig. 5 is handled by the same Visualizer as in the query-and-analyze notebook. This means that hovering the mouse over a marker shows the chemical formula of the corresponding compound in a tooltip, and clicking a marker shows the crystal structure of the corresponding material in a box below the plot.

[[File:Fig4 Sbailò npjCompMat22 8.png|600px]]

{{clear}}

{|

| style="vertical-align:top;" |

{| border="0" cellpadding="5" cellspacing="0" width="600px"

|-

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 4.''' Graphical input interface for the SISSO training of tetradymite-materials classification. The snapshot is taken from the "Discovery of new topological insulators in alloyed tetradymites" notebook.</blockquote>

|-

|}

|}

[[File:Fig5 Sbailò npjCompMat22 8.png|700px]]

{{clear}}

{|

| style="vertical-align:top;" |

{| border="0" cellpadding="5" cellspacing="0" width="600px"

|-

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 5.''' Interactive map of tetradymite materials, as produced with the AI Toolkit's Visualizer. The topological (trivial) insulator training points are marked in red (blue). All materials falling in the convex hull delimited by the dashed line enveloping the red (blue) points are predicted to be topological (trivial) insulators. The axes, D1 and D2, are the components of the descriptor identified by SISSO, expressed as analytical functions of the selected input parameters (see Cao ''et al.''<ref name=":2" /> and the AI Toolkit notebook<ref name=":4" /> for more details).</blockquote>

|-

|}

|}
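
The prediction rule stated in the Figure 5 caption classifies a material according to the convex hull of training points in which it falls. This rule can be sketched with standard computational geometry: Andrew's monotone chain algorithm builds the hulls, and an orientation test checks point inclusion. This is a minimal illustration in a hypothetical two-dimensional descriptor space (D1, D2) with made-up training points, not the notebook's implementation. Here, points outside both hulls, or inside their overlap, receive no prediction.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def cross(o: Point, a: Point, b: Point) -> float:
    """z-component of (a - o) x (b - o); positive if o -> a -> b turns counter-clockwise."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points: List[Point]) -> List[Point]:
    """Andrew's monotone chain; returns the hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower: List[Point] = []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: List[Point] = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def inside(hull: List[Point], p: Point) -> bool:
    """True if p lies in (or on the boundary of) a counter-clockwise convex polygon."""
    if len(hull) < 3:
        return False  # degenerate hulls are ignored in this sketch
    return all(cross(hull[i], hull[(i + 1) % len(hull)], p) >= 0 for i in range(len(hull)))


def classify(p: Point, topological: List[Point], trivial: List[Point]) -> Optional[str]:
    """Label p by the class hull it falls in; None if outside both or in their overlap."""
    in_top = inside(convex_hull(topological), p)
    in_triv = inside(convex_hull(trivial), p)
    if in_top != in_triv:
        return "topological" if in_top else "trivial"
    return None


# Made-up training points in a hypothetical (D1, D2) descriptor plane.
topological_pts = [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0), (1.0, 0.5)]
trivial_pts = [(5.0, 5.0), (7.0, 5.0), (7.0, 7.0), (5.0, 7.0)]
for candidate in [(1.0, 1.0), (6.0, 6.0), (3.5, 3.5)]:
    print(candidate, classify(candidate, topological_pts, trivial_pts))
```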

In summary, with the notebook “Discovery of new topological insulators in alloyed tetradymites,” we provide interactive, complementary support to Cao ''et al.''<ref name=":2" />. Here, the user can reproduce the results of the paper starting from the same input and using the same code, going as far as re-obtaining exactly the same main result plot (apart from the graphical style). Beyond what can be found in the paper, the user can change the input settings of the SISSO learning, explore the results by changing the visualization settings, and browse the structures of the individual data points. The user can also use the notebook as a template and start from other data, retrieved from the NOMAD Archive, to perform an analysis with the same method.

==Discussion==

We presented the NOMAD AI Toolkit, a web-browser-based platform for performing AI analysis of materials science data, both online on NOMAD servers and locally on the user's own computational resources, even behind firewalls. The purpose of the AI Toolkit is to provide the tools for exploiting the "findable and AI-ready" (F-AIR) materials science data contained in the NOMAD Repository & Archive, as well as in several other databases in the field. The platform provides integrated access, via Jupyter Notebooks, to state-of-the-art AI methods and concepts. Hands-on tutorials with a shallow learning curve are provided, in the form of interactive Jupyter Notebooks, for all the available tools. A particular focus is on the reproducibility of AI-based workflows associated with high-profile publications. The AI Toolkit offers a selection of notebooks demonstrating such workflows, so that users can understand step by step what was done in a publication and readily modify and adapt the workflows to their own needs. We hope these examples will inspire authors to augment future publications with similar hands-on notebooks. This will enhance the reproducibility of data-driven materials science papers and lower the barrier to entry for newcomers to the field. The community is invited to contribute more notebooks in order to share cutting-edge knowledge in an efficient and scientifically robust way.

==Acknowledgements==

We would like to acknowledge Fawzi Mohammed, Angelo Ziletti, Markus Scheidgen, and Lauri Himanen for inspiring discussions. This work received funding from the European Union’s Horizon 2020 research and innovation program under the grant agreement No. 951786 (NOMAD CoE), the ERC Advanced Grant TEC1P (No. 740233), and the German Research Foundation (DFG) through the NFDI consortium “FAIRmat”, project 460197019.

===Author contributions===

L.M.G. and M.S. initiated and supervised the project. L.S. and A.F. implemented the web-based version of the Toolkit. L.S. implemented the local-app version of the AI Toolkit and coded the notebooks discussed in this paper. L.S. and L.M.G. wrote the initial version of the manuscript. All authors contributed to the final version of the manuscript.

===Funding===

Open Access funding enabled and organized by Projekt DEAL.

===Data and code availability===

Data and code used in this study are openly accessible on the NOMAD AI Toolkit at https://nomad-lab.eu/aitoolkit. See Sbailò ''et al.''<ref name=":3" /> and Sbailò ''et al.''<ref name=":4" /> for the code (in notebook form) of the specific examples discussed in this paper.

===Competing interests===

The authors declare no competing interests.

==References==

<!--Place all category tags here-->

[[Category:LIMSwiki journal articles (added in 2023)]]

[[Category:LIMSwiki journal articles (all)]]

[[Category:LIMSwiki journal articles on FAIR data principles]]

[[Category:LIMSwiki journal articles on laboratory informatics]]

[[Category:LIMSwiki journal articles on materials informatics]]

Latest revision as of 16:30, 29 April 2024

| Full article title | The NOMAD Artificial Intelligence Toolkit: Turning materials science data into knowledge and understanding |
|---|---|
| Journal | npj Computational Materials |
| Author(s) | Sbailò, Luigi; Fekete, Ádám; Ghiringhelli, Luca M.; Scheffler, Matthias |
| Author affiliation(s) | Humboldt-Universität zu Berlin, Max-Planck-Gesellschaft |
| Primary contact | Email: ghiringhelli at fhi dash berlin dot mpg dot de |
| Year published | 2022 |
| Volume and issue | 8 |
| Article # | 250 |
| DOI | 10.1038/s41524-022-00935-z |
| ISSN | 2057-3960 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://www.nature.com/articles/s41524-022-00935-z |
| Download | https://www.nature.com/articles/s41524-022-00935-z.pdf (PDF) |

Abstract

We present the Novel Materials Discovery (NOMAD) Artificial Intelligence (AI) Toolkit, a web-browser-based infrastructure for the interactive AI-based analysis of materials science data under FAIR (findable, accessible, interoperable, and reusable) data principles. The AI Toolkit readily operates on FAIR data stored in the central server of the NOMAD Archive, the largest database of materials science data worldwide, as well as on locally stored, user-owned data. The NOMAD Oasis, a local, stand-alone server, can also be used to run the AI Toolkit. By using Jupyter Notebooks that run in a web browser, the NOMAD data can be queried and accessed; data mining, machine learning (ML), and other AI techniques can then be applied to analyze them. This infrastructure brings the concept of reproducibility in materials science to the next level by allowing researchers to share not only the data contributing to their scientific publications, but also all the developed methods and analytics tools. Besides reproducing published results, users of the NOMAD AI Toolkit can adapt the Jupyter Notebooks to their own research work.

Keywords: computational methods, theory and computation, artificial intelligence, materials science, FAIR principles

Introduction

Data-centric science has been identified as the fourth paradigm of scientific research. We observe that the novelty introduced by this paradigm is twofold. First, we have seen the creation of large, interconnected databases of scientific data, which are increasingly expected to comply with the so-called FAIR principles[1] of scientific data management and stewardship, meaning that the data and related metadata need to be findable, accessible, interoperable, and reusable (or repurposable, or recyclable). Second, we have seen growing use of artificial intelligence (AI) algorithms, applied to scientific data, in order to find patterns and trends that would be difficult, if not impossible, to identify by unassisted human observation and intuition.

In the last few years, materials science has experienced both of these novelties. Databases, in particular from computational materials science, have been created via high-throughput screening initiatives, mainly boosted by the U.S.-based Materials Genome Initiative (MGI) starting in the early 2010s, e.g., AFLOW[2], the Materials Project[3], and OQMD.[4] At the end of 2014, the NOMAD (Novel Materials Discovery) Laboratory launched the NOMAD Repository & Archive[5][6][7], the first FAIR storage infrastructure for computational materials science data. NOMAD’s servers and storage are hosted by the Max Planck Computing and Data Facility (MPCDF) in Garching (Germany). The NOMAD Repository stores, as of today, input and output files from more than 50 different atomistic (ab initio and molecular mechanics) codes. In total, more than 100 million total-energy calculations have been uploaded by various materials scientists from their local storage or from other public databases. The NOMAD Archive stores the same information, but converted, normalized, and characterized by means of a metadata schema, the NOMAD Metainfo[8], which allows most of the data to be labeled in a code-independent representation. This translation of the content of raw input and output files into the code-independent NOMAD Metainfo format makes the data ready for AI analysis.

Besides the above-mentioned databases, other platforms for the open-access storage of and access to materials science data have appeared in recent years, such as the Materials Data Facility[9][10] and Materials Cloud.[11] Furthermore, many groups have been storing their materials science data on Zenodo[12] and citing the corresponding digital object identifiers (DOIs) in their publications to provide open access to the data. What distinguishes the NOMAD Repository & Archive is that users upload the full input and output files of their calculations into the Repository. This information is then mapped onto the Archive, which (other) users can access via a unified application programming interface (API).

Materials science has also embraced the second aspect of the fourth paradigm, i.e., AI-driven analysis. The applications of AI to materials science span two main classes of methods. One is the modeling of potential-energy surfaces by means of statistical models that promise ab initio accuracy at a fraction of the evaluation time[13][14][15][16][17][18] (if the CPU time needed to produce the training data set is not considered). The other is so-called materials informatics, i.e., the statistical modeling of materials aimed at predicting their physical, often technologically relevant properties[19][20][21][22][23][24] from limited input information, often just their stoichiometry. The latter aims at identifying the minimal set of descriptors (the materials’ genes) that correlate with the properties of interest. Only a tiny fraction of the almost infinite number of possible materials is known today. Together with this observation, the descriptor-based approach may lead to the identification of undiscovered materials with properties (e.g., conductivity, plasticity, elasticity) superior to those of the known ones.

The NOMAD CoE has recognized the importance of enabling AI analysis of the stored FAIR data and has launched the NOMAD AI Toolkit. This web-based infrastructure allows users to run computational notebooks (i.e., interactive documents that freely mix code, results, graphics, and text, supported by a suitable virtual environment) in a web browser. With these notebooks, users can perform complex queries as well as AI-based exploratory analysis and predictive modeling on the data contained in the NOMAD Archive. In this respect, the AI Toolkit takes the concept of FAIR data to the next, necessary step, by recognizing that the most promising purpose of the FAIR principles is to enable AI analysis of the stored data. As a mnemonic, this next step starts by upgrading the meaning of FAIR to "findable and AI-ready data."[25]

The mission of the NOMAD AI Toolkit is threefold, as reflected in the access points shown in its home page (Fig. 1):

- Providing an API and libraries for accessing and analyzing the NOMAD Archive data via state-of-the-art (and beyond) AI tools;

- Providing a set of tutorials with a shallow learning curve, from the hands-on introduction to the mastering of AI techniques; and

- Maintaining a community-driven, growing collection of computational notebooks, each dedicated to an AI-based materials science publication. (By providing both the annotated data and the scripts for their analysis, students and scholars worldwide are enabled to retrace all the steps that the original researchers followed to reach publication-level results. Furthermore, the users can modify the existing notebooks and quickly check alternative ideas.)

The data science community has introduced several platforms for performing AI-based analysis of scientific data. These platforms typically provide rich libraries for machine learning (ML) and AI, and often offer users online resources for running electronic notebooks. General-purpose frameworks such as Binder[26] and Google Colab[27], as well as dedicated materials science frameworks such as nanoHUB[28], pyIron[29], AiidaLab[30], and MatBench[31], are among the most widely used in the community. In all these cases, great effort is devoted to education via online and in-person tutorials. The main distinguishing feature of the NOMAD AI Toolkit is that it connects, within the same infrastructure, the data stored in the NOMAD Archive with their AI analysis. Moreover, as detailed below, users have all available AI tools, as well as access to the NOMAD data, in the same environment, without the need to install anything.

The rest of this paper is structured as follows. In the “Results” section, we describe the technology of the AI Toolkit. In the “Discussion” and “Data Availability” sections, we describe two exemplary notebooks: one is a tutorial introduction to interactive querying and exploratory analysis of the NOMAD Archive data, and the other demonstrates how publication-level materials science results[32] can be reported while letting users work hands-on with the workflow, by modifying the input parameters and observing the impact of their interventions.

Results

Technology

We provide a user-friendly infrastructure for applying the latest AI developments and the most popular ML methods to materials science data. The NOMAD AI Toolkit aims to facilitate the deployment of sophisticated AI algorithms through an intuitive interface accessible from a webpage, thereby transferring AI-powered methodologies to materials science. The most recent advances in AI are usually available as software stored in web repositories. However, this software must be installed in a local environment, which requires specific bindings and environment variables. Such an installation can be tedious, which limits the diffusion of these computational methods and also raises the problem of reproducibility of published results. The NOMAD AI Toolkit solves this by providing the software, which we install and maintain, in an environment that is accessible directly from the web.

Docker[33] allows software to be installed in a container that is isolated from the host machine on which it runs. In the NOMAD AI Toolkit, we maintain such a container, installing in it the software that has been used to produce recently published results and taking care of the versioning of all required packages. Jupyter Notebooks are then used inside the container to interact with the underlying computational engine. Interactions include executing code, displaying the results of computations, and writing comments or explanations in a markup language. We opted for Jupyter Notebooks because such interactivity is ideal for combining computation and analysis of the results in a single framework. The kernel of the notebooks, i.e., the computational engine that runs the code, is set to Python. Python supports scientific computing through the SciPy ecosystem, and it is highly extensible because it allows wrapping code written in compiled languages such as C or C++. This technological infrastructure is built using JupyterHub[34] and deploys servers that are orchestrated by Kubernetes on computing facilities offered by the MPCDF in Garching, Germany. Users of the AI Toolkit can currently run their analyses on up to eight CPU cores, with up to 10 GB of RAM.

A key feature of the NOMAD AI Toolkit is that users can create, modify, and store computational notebooks in which original AI workflows are developed. Via the “Get to work” button at https://nomad-lab.eu/aitoolkit, registered users are redirected to a personal space, where we provide 10 GB of cloud storage and where work can be saved. Jupyter Notebooks created inside the “work” directory of the user's personal space are stored on our servers and can be accessed and edited over time. These notebooks run in the NOMAD AI Toolkit environment, which means that all software and methods demonstrated in other tutorials can be deployed in them. The versatility of Jupyter Notebooks facilitates an interactive and instantaneous combination of different methods. This is useful if one aims, e.g., to combine different methods available in the NOMAD AI Toolkit in an original manner, or to apply a specific algorithm to a dataset retrieved from the NOMAD Archive. An original notebook developed in the “work” directory might then lead to a publication, and the notebook can then be added to the “Published results” section of the AI Toolkit.

Contributing

The NOMAD AI Toolkit aims to promote reproducibility of published results. Researchers working in the field of AI applied to materials science are invited to share their software and install it in the NOMAD AI Toolkit. The shared software can be used in citeable Jupyter Notebooks, which are accessible online, to reproduce results that have been recently published in scientific journals. Sharing software and methods in a user-friendly infrastructure such as the NOMAD AI Toolkit can also promote the visibility of research and boost interdisciplinary collaborations.

All Jupyter Notebooks currently available in the NOMAD AI Toolkit are located in the same Docker container, which allows methods and pipelines to be transferred between notebooks. This also implies that the software employed must be installed with the same package versions for every notebook. However, to facilitate faster and more robust integration of external contributions, we allow the creation of separate Docker containers with their own versioning. Having a separate Docker container for a notebook minimizes its maintenance and avoids further updates when, e.g., package versions are updated in the main Docker container.

Contributing to the NOMAD AI Toolkit is straightforward and involves the following steps:

- Data must be uploaded to the NOMAD Archive & Repository, either on the public server (https://nomad-lab.eu/prod/rae/gui/uploads) or in the local, self-contained variant.

- Software needs to be installed in the base image of the NOMAD AI Toolkit.

- The whole workflow of a (published) project, from importing the data to generating results, has to be placed in a Jupyter Notebook. The package(s) and notebook are then uploaded to GitLab in a public repository (https://gitlab.mpcdf.mpg.de/nomad-lab/analytics), where the back-end code is stored.

- A DOI is generated for the notebook, which is versioned in GitLab. In the spirit of, e.g., Cornell University’s arXiv.org, the latest version of the notebook is linked to the DOI, but all previous versions are maintained.
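The versioning scheme in the last step, where a DOI always resolves to the newest notebook version while earlier versions remain available, can be modeled in a few lines. The sketch below is purely illustrative: the NotebookRecord class, the placeholder DOI, and the commit identifiers are hypothetical and do not reflect how NOMAD or GitLab actually store versions.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class NotebookRecord:
    """Hypothetical model of a DOI attached to a versioned notebook."""

    doi: str
    versions: List[str] = field(default_factory=list)  # oldest first

    def publish(self, commit_id: str) -> None:
        # New versions are appended; earlier ones are never removed.
        self.versions.append(commit_id)

    def resolve(self) -> str:
        # The DOI points at the most recent version.
        if not self.versions:
            raise LookupError(f"{self.doi} has no published version yet")
        return self.versions[-1]

    def history(self) -> List[str]:
        # All previous versions remain retrievable.
        return list(self.versions)


record = NotebookRecord(doi="10.0000/hypothetical-notebook")
record.publish("v1-commit")
record.publish("v2-commit")
print(record.resolve())   # v2-commit
print(record.history())   # ['v1-commit', 'v2-commit']
```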

Researchers interested in contributing to the NOMAD AI Toolkit are invited to contact us for further details.

Data management policy

For maintenance reasons, NOMAD keeps anonymous access logs of API calls for a limited amount of time. These logs are not associated with NOMAD users; in fact, users do not need to authenticate to use the NOMAD APIs. We also note that the query commands used to extract the data analyzed in a given notebook are part of the notebook itself and are hence stored. This guarantees reproducibility of the AI analysis, as the same query commands will always yield the same outcome, e.g., the same data points for the AI analysis. Publicly shared notebooks on the AI Toolkit platform are required to adopt the Apache License, Version 2.0. Finally, the overall NOMAD infrastructure, including the AI Toolkit, will be maintained for at least 10 years after the last data upload.
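Because the query commands live inside the notebook, a reader can check whether two notebooks ran the same query. A minimal sketch, assuming queries are plain Python dictionaries (the keys below are hypothetical, not NOMAD Metainfo names): serializing the dictionary canonically and hashing it gives an identifier that does not depend on key order. The fingerprint identifies the query itself, not the data returned.

```python
import hashlib
import json


def query_fingerprint(query: dict) -> str:
    """Return a SHA-256 hex digest that identifies a query dictionary."""
    # sort_keys and fixed separators make the text independent of key order
    # and whitespace, so logically identical queries hash identically.
    canonical = json.dumps(query, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical query keys, written in two different orders.
q1 = {"elements": ["O"], "n_elements": 3, "code": "VASP"}
q2 = {"code": "VASP", "n_elements": 3, "elements": ["O"]}
print(query_fingerprint(q1) == query_fingerprint(q2))  # True
```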

AI Toolkit app

In addition to the web-based toolkit, we maintain an app that allows the NOMAD AI Toolkit environment[35] to be deployed on a local machine. This app employs the same graphical user interface (GUI) as the online version; in particular, the user accesses it via a normal web browser. However, the browser does not need web access and can therefore run behind firewalls. Software and methods installed in the NOMAD AI Toolkit then use the user's own computational resources. This can be useful when calculations are particularly demanding, and also when AI methods are applied to private data that should not be exposed to the web. Through the local app, both the data on the NOMAD server and locally stored data can be accessed. Access to the latter is supported by NOMAD OASIS, the stand-alone version of the NOMAD infrastructure.[36]

Querying the NOMAD Archive and performing AI modeling on retrieved data

The NOMAD AI Toolkit features the tutorial “Querying the archive and performing artificial intelligence modeling” notebook[37] (also accessible from the “Query the archive” button at https://nomad-lab.eu/aitoolkit), which demonstrates all steps required to perform AI analysis on data stored in the NOMAD Archive. These steps are the following: (i) querying the data by using the RESTful API (see below) that is built on the NOMAD Metainfo; (ii) loading the needed AI packages, including the library of features that are used to fingerprint the data points (materials) in the AI analysis; and (iii) performing the AI training and visualizing the results.

The NOMAD Laboratory has developed the NOMAD Python package, which includes a client module to query the Archive using the NOMAD API. All functionalities of the NOMAD Repository & Archive are offered through a RESTful API, i.e., an API that uses HTTP methods to access data. In other words, each item in the Archive (typically a JSON data file) is reachable via a URL accessible from any web browser.
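To make the RESTful idea concrete, the sketch below uses only Python's standard library to build two kinds of request: a GET URL for a single archive item and a POST request carrying a JSON query. The base URL, the endpoint paths, and the payload layout are assumptions for illustration; the NOMAD API documentation defines the real ones. The request is built but not sent.

```python
import json
import urllib.request

# Assumed placeholder; the real base URL and paths are in the NOMAD API docs.
BASE_URL = "https://nomad.example/api/v1"


def entry_url(entry_id: str) -> str:
    """URL of a single archive item, retrievable with a plain HTTP GET."""
    return f"{BASE_URL}/entries/{entry_id}/archive"


def build_query_request(query: dict, page_size: int = 100) -> urllib.request.Request:
    """Build, but do not send, a POST request whose body is a JSON query."""
    payload = {"query": query, "pagination": {"page_size": page_size}}
    return urllib.request.Request(
        url=f"{BASE_URL}/entries/query",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_query_request({"elements": ["O"]})
print(req.get_method(), req.full_url)  # POST https://nomad.example/api/v1/entries/query
print(entry_url("abc123"))            # https://nomad.example/api/v1/entries/abc123/archive
# Actually sending it would be: urllib.request.urlopen(req)
```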

In the example notebook[37], we use the NOMAD Python client library to retrieve ternary compounds containing oxygen. We also require that the ab initio calculations were carried out with the VASP code, using exchange-correlation (xc) functionals from the generalized gradient approximation (GGA) family. In addition, to ensure that the calculations are converged, we require that the energy difference during geometry optimization has converged. As of April 2022, this query retrieves almost 8,000 entries, which are the results of simulations carried out in different laboratories. We emphasize that this notebook shows how data of heterogeneous origin can be used consistently for ML analyses.
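The selection criteria above can be written down as a single predicate. In the notebook, these criteria are sent as part of the query through the NOMAD client; the sketch below, which assumes a hypothetical flattened Entry record rather than the actual archive schema, only spells out the logic applied to each entry.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Entry:
    """Hypothetical flattened view of one archive entry."""

    elements: Tuple[str, ...]
    code: str
    xc_family: str
    geometry_converged: bool


def matches(entry: Entry) -> bool:
    """Apply the criteria from the text to a single entry."""
    return (
        len(set(entry.elements)) == 3      # ternary compound
        and "O" in entry.elements          # one element is oxygen
        and entry.code == "VASP"           # computed with VASP
        and entry.xc_family == "GGA"       # GGA-type xc functional
        and entry.geometry_converged       # converged geometry optimization
    )


entries = [
    Entry(("Sr", "Ti", "O"), "VASP", "GGA", True),    # kept
    Entry(("Sr", "Ti", "O"), "other", "GGA", True),   # different code
    Entry(("Mg", "O"), "VASP", "GGA", True),          # binary, not ternary
    Entry(("Ba", "Ti", "O"), "VASP", "GGA", False),   # not converged
]
selected = [e for e in entries if matches(e)]
print(len(selected))  # 1
```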

Here, we target the atomic density, which is obtained from a geometrically converged DFT calculation. The client module in the NOMAD Python package establishes a client-server connection in a so-called lazy manner, i.e., data are not fetched all at once but iteratively. Entries are retrieved one after another, and each entry provides access to the data and metadata of the uploaded simulation results. In this example, the queried materials are composed of three different elements, one of which is required to be oxygen. From each entry, we retrieve the converged value of the atomic density and the names and stoichiometric ratio of the other two chemical elements. During the query, we use the atomic features library (see below) to add further atomic features to the dataframe built from the retrieved data. Before discussing the actual analysis performed in the notebook, let us briefly comment on NOMAD Metainfo and the libraries of input (atomic) features.
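The lazy, page-by-page retrieval and the per-entry extraction can be sketched with a Python generator. This is not the NOMAD client: fetch_page is a hypothetical stand-in for one server round trip, the entry layout is assumed, and the numbers are toy values rather than NOMAD data. A new page is requested only when the consumer has exhausted the previous one.

```python
from itertools import islice
from typing import Callable, Dict, Iterator, List


def iterate_entries(fetch_page: Callable[[int], List[dict]]) -> Iterator[dict]:
    """Yield entries one by one, requesting a new page only when needed."""
    page = 0
    while True:
        batch = fetch_page(page)
        if not batch:  # an empty page marks the end of the result set
            return
        yield from batch
        page += 1


def to_row(entry: dict) -> Dict[str, object]:
    """Keep the target and the two non-oxygen elements with their ratio."""
    comp = entry["composition"]  # element symbol -> count in the formula
    a, b = sorted(el for el in comp if el != "O")
    return {"A": a, "B": b, "ratio_A_B": comp[a] / comp[b],
            "atomic_density": entry["atomic_density"]}


# Toy pages standing in for server responses (values are made up).
PAGES = [
    [{"composition": {"Sr": 1, "Ti": 1, "O": 3}, "atomic_density": 0.1},
     {"composition": {"Sr": 2, "Ti": 1, "O": 4}, "atomic_density": 0.2}],
    [{"composition": {"Ba": 1, "Zr": 1, "O": 3}, "atomic_density": 0.3}],
]
calls: List[int] = []


def fake_fetch(page: int) -> List[dict]:
    calls.append(page)  # record every simulated round trip
    return PAGES[page] if page < len(PAGES) else []


first_two = list(islice(iterate_entries(fake_fetch), 2))
print(calls)  # [0]: the second page was never requested
rows = [to_row(e) for e in iterate_entries(fake_fetch)]
print([r["ratio_A_B"] for r in rows])  # [1.0, 2.0, 1.0]
```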

NOMAD Metainfo

The NOMAD API has access to the data in the NOMAD Archive, which are organized by means of NOMAD Metainfo.[8][38] Here, we mention only that it is a hierarchical and modular schema, in which each piece of information contained in an input/output file of an atomistic simulation code has its own metadata entry. The metadata are organized in sections (akin to tables in a relational database) such as "System," containing information on the geometry and composition of the simulated system, and "Method," containing information on the physical model (e.g., type of xc functional, type of relativistic treatment, and basis set). Crucially, each item in any section (i.e., a column, in the relational database analogy, where each data object is a row) has a unique name. Such a name (e.g., “atoms,” a list of the atomic symbols of all chemical species present in a simulation cell) is associated with values that can be searched via the API. In practice, one can search for all compounds containing oxygen by specifying query={'atoms': ['O']} as the argument of the query_archive() function, which is the backbone of the NOMAD API.
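A simplified illustration of the idea: an entry organized into named sections, and a lookup that relies on quantity names being unique across sections, so that a query can refer to a quantity by name alone. The section contents and the matching rule (every listed value must be present) are assumptions for this sketch, not the actual Metainfo schema or the semantics of query_archive().

```python
from typing import Any, Dict

Entry = Dict[str, Dict[str, Any]]

# Hypothetical, heavily simplified entry grouped into named sections.
sample_entry: Entry = {
    "System": {"atoms": ["Sr", "Ti", "O", "O", "O"]},
    "Method": {"xc_functional": "PBE", "basis_set": "plane waves"},
}


def find_quantity(entry: Entry, name: str) -> Any:
    """Locate a quantity by name; unique names make the section implicit."""
    for section in entry.values():
        if name in section:
            return section[name]
    raise KeyError(name)


def entry_matches(entry: Entry, query: Dict[str, Any]) -> bool:
    """Assumed semantics: list values must all be present, scalars must be equal."""
    for name, wanted in query.items():
        value = find_quantity(entry, name)
        if isinstance(wanted, list):
            if not set(wanted) <= set(value):
                return False
        elif value != wanted:
            return False
    return True


print(entry_matches(sample_entry, {"atoms": ["O"]}))                              # True
print(entry_matches(sample_entry, {"atoms": ["N"]}))                              # False
print(entry_matches(sample_entry, {"atoms": ["O"], "basis_set": "plane waves"}))  # True
```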

Libraries of input features

Together with the materials data, the other important ingredient of an AI analysis is the representation of each data point. One possible choice, useful for exploratory analysis but also for training predictive models, is to represent the atoms in the simulation cell by their periodic-table properties (also called atomic features), e.g., atomic number, row and column in the periodic table, ionic or covalent radii, and electronegativity. To facilitate access to these features, we maintain the atomic_collections library, containing features for all atoms in the periodic table (up to Z = 100), calculated via DFT with a selection of xc functionals. Furthermore, we have installed the MATMINER package[39], a recently introduced rich library of atomic properties from calculations and experiments. In this way, all atomic properties defined in the various sources are available within the toolkit environment.
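As an illustration of attaching atomic features to each compound, the sketch below uses a small hand-typed table (atomic number, period, group, and Pauling electronegativity) in place of atomic_collections or MATMINER, whose actual interfaces are not shown here. The featurize helper and its column names are hypothetical.

```python
from typing import Dict

# Hand-typed reference values: atomic number Z, period (row), group (column),
# and Pauling electronegativity. A stand-in for a real feature library.
ATOMIC_FEATURES: Dict[str, Dict[str, float]] = {
    "O":  {"Z": 8,  "period": 2, "group": 16, "en_pauling": 3.44},
    "Mg": {"Z": 12, "period": 3, "group": 2,  "en_pauling": 1.31},
    "Ti": {"Z": 22, "period": 4, "group": 4,  "en_pauling": 1.54},
    "Sr": {"Z": 38, "period": 5, "group": 2,  "en_pauling": 0.95},
    "Zr": {"Z": 40, "period": 5, "group": 4,  "en_pauling": 1.33},
    "Ba": {"Z": 56, "period": 6, "group": 2,  "en_pauling": 0.89},
}


def featurize(a: str, b: str) -> Dict[str, float]:
    """Concatenate the features of the two non-oxygen elements (hypothetical)."""
    row: Dict[str, float] = {}
    for prefix, symbol in (("A", a), ("B", b)):
        for name, value in ATOMIC_FEATURES[symbol].items():
            row[f"{prefix}_{name}"] = value
    return row


print(featurize("Sr", "Ti"))
```

Each compound from the query thus becomes one fixed-length row of numbers, which is the input format expected by most clustering and regression methods.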

Example of exploratory analysis: Clustering

We now proceed with the discussion of the showcase notebook, which performs an unsupervised learning analysis called "clustering." The human ability, honed by evolution, to recognize patterns in empirical data has led to the most disparate scientific findings, from Kepler’s laws to the Lorenz attractor. However, finding patterns in high-dimensional data requires automated tools. Here, we want to understand whether the data retrieved from the NOMAD Archive can be grouped into clusters of data points that share a similar representation, where points within the same cluster are similar to each other and different from points in other clusters. The notion of similarity in this unsupervised learning task is strictly tied to the representation of the data, here a set of atomic properties of each material's constituent elements.
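To make the notion of clustering concrete, the sketch below standardizes the features and then runs plain k-means (Lloyd's algorithm). k-means is used here only because it is short and widely known; it is not the algorithm chosen in the notebook. Standardization matters because, as noted above, similarity is defined by the representation: without it, the feature with the largest numeric range would dominate the distances.

```python
import math
import random
from typing import List

Point = List[float]


def standardize(points: List[Point]) -> List[Point]:
    """Rescale every feature to zero mean and unit variance."""
    n, d = len(points), len(points[0])
    means = [sum(p[j] for p in points) / n for j in range(d)]
    stds = [math.sqrt(sum((p[j] - means[j]) ** 2 for p in points) / n) or 1.0
            for j in range(d)]  # guard against constant features
    return [[(p[j] - means[j]) / stds[j] for j in range(d)] for p in points]


def sq_dist(p: Point, q: Point) -> float:
    return sum((x - y) ** 2 for x, y in zip(p, q))


def kmeans(points: List[Point], k: int, max_iter: int = 100, seed: int = 0) -> List[int]:
    """Lloyd's algorithm: alternate assignment and center updates until stable."""
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(points, k)]  # random initial centers
    labels: List[int] = []
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest center.
        new_labels = [min(range(k), key=lambda c: sq_dist(p, centers[c])) for p in points]
        if new_labels == labels:
            break  # assignments unchanged, so the centers are stable
        labels = new_labels
        # Update step: move each center to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


# Toy 2D features on very different scales (not NOMAD data).
raw = [[1.0, 10.0], [1.1, 10.5], [0.9, 9.8],
       [5.0, 40.0], [5.2, 41.0], [4.9, 39.5]]
labels = kmeans(standardize(raw), k=2)
print(labels)
```

The cluster labels themselves are arbitrary integers; only the grouping is meaningful. Swapping in a different feature set, or skipping standardization, changes the distances and can therefore change which materials end up together.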

A plethora of different clustering algorithms has been developed in recent years, each suited to different applications (see, e.g., our tutorial notebook introducing the most popular clustering algorithms[40]). Among the various algorithms currently available, we chose a recent algorithm, briefly outlined below, that stands out for its simplicity, the quality of its results, and its robustness.