Difference between revisions of "Journal:Establishing reliable research data management by integrating measurement devices utilizing intelligent digital twins"

Shawndouglas (talk | contribs) (Saving and adding more.) |

Shawndouglas (talk | contribs) (→Notes: Cats) |

||

| (5 intermediate revisions by the same user not shown) | |||

| Line 18: | Line 18: | ||

|website = [https://www.mdpi.com/1424-8220/23/1/468 https://www.mdpi.com/1424-8220/23/1/468] | |website = [https://www.mdpi.com/1424-8220/23/1/468 https://www.mdpi.com/1424-8220/23/1/468] | ||

|download = [https://www.mdpi.com/1424-8220/23/1/468/pdf?version=1672904970 https://www.mdpi.com/1424-8220/23/1/468/pdf] (PDF) | |download = [https://www.mdpi.com/1424-8220/23/1/468/pdf?version=1672904970 https://www.mdpi.com/1424-8220/23/1/468/pdf] (PDF) | ||

}} | }} | ||

==Abstract== | ==Abstract== | ||

| Line 31: | Line 25: | ||

==Introduction== | ==Introduction== | ||

Initiated through the ongoing efforts of digitization, one of the new fields of activity within [[research]] concerns the [[Information management|management of research data]]. New technologies and the related increase in computing power can now generate large amounts of data, providing new paths to scientific knowledge. | Initiated through the ongoing efforts of digitization, one of the new fields of activity within [[research]] concerns the [[Information management|management of research data]]. New technologies and the related increase in computing power can now generate large amounts of data, providing new paths to scientific knowledge.<ref name=":0">{{Cite journal |last=Raptis |first=Theofanis P. |last2=Passarella |first2=Andrea |last3=Conti |first3=Marco |date=2019 |title=Data Management in Industry 4.0: State of the Art and Open Challenges |url=https://ieeexplore.ieee.org/document/8764545/ |journal=IEEE Access |volume=7 |pages=97052–97093 |doi=10.1109/ACCESS.2019.2929296 |issn=2169-3536}}</ref> Research is increasingly adopting toolsets and techniques raised by Industry 4.0 while gearing itself up for Research 4.0.<ref>{{Cite web |last=Jones, E.; Kalantery, N.; Glover, B. |date=01 October 2019 |title=Research 4.0: Interim Report |url=https://apo.org.au/node/262636 |publisher=Analysis & Policy Observatory |accessdate=06 December 2022}}</ref> The requirement for reliable research data management (RDM) can be managed by [[Journal:The FAIR Guiding Principles for scientific data management and stewardship|FAIR]] data management principles, which indicate that data must be findable, accessible, interoperable, and reusable through the entire data lifecycle in order to provide value to researchers.<ref name=":1">{{Cite journal |last=Wilkinson |first=Mark D. 
|last2=Dumontier |first2=Michel |last3=Aalbersberg |first3=IJsbrand Jan |last4=Appleton |first4=Gabrielle |last5=Axton |first5=Myles |last6=Baak |first6=Arie |last7=Blomberg |first7=Niklas |last8=Boiten |first8=Jan-Willem |last9=da Silva Santos |first9=Luiz Bonino |last10=Bourne |first10=Philip E. |last11=Bouwman |first11=Jildau |date=2016-03-15 |title=The FAIR Guiding Principles for scientific data management and stewardship |url=https://www.nature.com/articles/sdata201618 |journal=Scientific Data |language=en |volume=3 |issue=1 |pages=160018 |doi=10.1038/sdata.2016.18 |issn=2052-4463 |pmc=PMC4792175 |pmid=26978244}}</ref> In practice, however, implementation often fails due to the high heterogeneity of hardware and software, as well as outdated or decentralized data [[backup]] mechanisms.<ref name=":2">{{Cite book |last=Mons |first=Barend |date=2018-03-09 |title=Data Stewardship for Open Science: Implementing FAIR Principles |url=https://www.taylorfrancis.com/books/9781498753180 |language=en |edition=1 |publisher=Chapman and Hall/CRC |doi=10.1201/9781315380711 |isbn=978-1-315-38071-1}}</ref><ref name=":3">{{Cite book |last=Diepenbroek, M.; Glöckner, F.O.; Grobe, P. et al. |year=2014 |title=Informatik 2014 |url=https://dl.gi.de/items/618c1a92-fff2-423e-8c60-a0d6a65fe04f |chapter=Towards an integrated biodiversity and ecological research data management and archiving platform: the German federation for the curation of biological data (GFBio) |publisher=Gesellschaft für Informatik e.V |pages=1711–21 |isbn=978-3-88579-626-8}}</ref> This experience can be confirmed by the work at the Center for Mass Spectrometry and Optical Spectroscopy (CeMOS), a research institute at the Mannheim University of Applied Sciences which employs approximately 80 interdisciplinary scientific staff. 
In the various fields within the institute’s research landscape, including medical technology, [[biotechnology]], [[artificial intelligence]] (AI), and digital transformation, a wide variety of hardware and software is required to collect and process the generated data, which poses a significant challenge for initial efforts toward holistic [[data integration]]. | ||

To cater to the respective disciplines, researchers of the institute develop experimental equipment such as middle infrared (MIR) scanners for the rapid detection and [[imaging]] of biochemical substances in medical tissue sections, multimodal imaging systems generating hyperspectral images of tissue slices, or photometrical measurement devices for detection of particle concentration. Nevertheless, they also use non-customizable equipment such as [[Mass spectrometry|mass spectrometers]], [[microscope]]s, and cell imagers for their experiments. These appliances provide great benefits for further development within the respective research disciplines, which is why the data are of immense value and must be brought together accordingly in a reliable RDM system. | To cater to the respective disciplines, researchers of the institute develop experimental equipment such as middle infrared (MIR) scanners for the rapid detection and [[imaging]] of biochemical substances in medical tissue sections, multimodal imaging systems generating hyperspectral images of tissue slices, or photometrical measurement devices for detection of particle concentration. Nevertheless, they also use non-customizable equipment such as [[Mass spectrometry|mass spectrometers]], [[microscope]]s, and cell imagers for their experiments. These appliances provide great benefits for further development within the respective research disciplines, which is why the data are of immense value and must be brought together accordingly in a reliable RDM system. | ||

Research practice shows that the step into the digital world seems to be associated with obstacles. As an innovative technology, the [[digital twin]] (DT) can be seen as a secure data source, as it mirrors a physical device (also called a physical twin or PT) into the digital world through a bilateral communication stream. | Research practice shows that the step into the digital world seems to be associated with obstacles. As an innovative technology, the [[digital twin]] (DT) can be seen as a secure data source, as it mirrors a physical device (also called a physical twin or PT) into the digital world through a bilateral communication stream.<ref name=":8">{{Cite journal |last=Grieves |first=Michael |date=2016 |title=Origins of the Digital Twin Concept |url=http://rgdoi.net/10.13140/RG.2.2.26367.61609 |doi=10.13140/RG.2.2.26367.61609}}</ref> DTs are key actors for the implementation of Industry 4.0 prospects.<ref>{{Cite journal |last=Mihai |first=Stefan |last2=Yaqoob |first2=Mahnoor |last3=Hung |first3=Dang V. |last4=Davis |first4=William |last5=Towakel |first5=Praveer |last6=Raza |first6=Mohsin |last7=Karamanoglu |first7=Mehmet |last8=Barn |first8=Balbir |last9=Shetve |first9=Dattaprasad |last10=Prasad |first10=Raja V. |last11=Venkataraman |first11=Hrishikesh |date=24/2022 |title=Digital Twins: A Survey on Enabling Technologies, Challenges, Trends and Future Prospects |url=https://ieeexplore.ieee.org/document/9899718/ |journal=IEEE Communications Surveys & Tutorials |volume=24 |issue=4 |pages=2255–2291 |doi=10.1109/COMST.2022.3208773 |issn=1553-877X}}</ref> Consequently, additional reconfigurability of hardware and software of the digitally imaged devices becomes a reality. The data mapped by the DT thus enable the bridge to the digital world and hence to the digital use and management of the data.<ref name=":0" /> Depending on the domain and use case, industry and research are creating new types of standardization-independent DTs. 
In most cases, only a certain part of the twin’s life cycle is reflected. Only when utilized over the entire life cycle of the physical entity does the DT become a powerful tool of digitization.<ref name=":9">{{Cite journal |last=Massonet |first=Angela |last2=Kiesel |first2=Raphael |last3=Schmitt |first3=Robert H. |date=2020-04-07 |title=Der Digitale Zwilling über den Produktlebenszyklus: Das Konzept des Digitalen Zwillings verstehen und gewinnbringend einsetzen |url=https://www.degruyter.com/document/doi/10.3139/104.112324/html |journal=Zeitschrift für wirtschaftlichen Fabrikbetrieb |language=en |volume=115 |issue=s1 |pages=97–100 |doi=10.3139/104.112324 |issn=0947-0085}}</ref><ref>{{Cite journal |last=Semeraro |first=Concetta |last2=Lezoche |first2=Mario |last3=Panetto |first3=Hervé |last4=Dassisti |first4=Michele |date=2021-09 |title=Digital twin paradigm: A systematic literature review |url=https://linkinghub.elsevier.com/retrieve/pii/S0166361521000762 |journal=Computers in Industry |language=en |volume=130 |pages=103469 |doi=10.1016/j.compind.2021.103469}}</ref> With the development of semantic modeling, hardware, and communication technology, there are more degrees of freedom to leverage the semantic representation of DTs, improving their usability.<ref>{{Cite journal |last=Bao |first=Qiangwei |last2=Zhao |first2=Gang |last3=Yu |first3=Yong |last4=Dai |first4=Sheng |last5=Wang |first5=Wei |date=2021-11 |title=The ontology-based modeling and evolution of digital twin for assembly workshop |url=https://link.springer.com/10.1007/s00170-021-07773-1 |journal=The International Journal of Advanced Manufacturing Technology |language=en |volume=117 |issue=1-2 |pages=395–411 |doi=10.1007/s00170-021-07773-1 |issn=0268-3768}}</ref> For the internal interconnection in particular, the referencing of knowledge correlations distinguishes intelligent DTs.<ref name=":10">{{Cite journal |last=Sahlab |first=Nada |last2=Kamm |first2=Simon |last3=Muller |first3=Timo |last4=Jazdi 
|first4=Nasser |last5=Weyrich |first5=Michael |date=2021-05-10 |title=Knowledge Graphs as Enhancers of Intelligent Digital Twins |url=https://ieeexplore.ieee.org/document/9468219/ |journal=2021 4th IEEE International Conference on Industrial Cyber-Physical Systems (ICPS) |publisher=IEEE |place=Victoria, BC, Canada |pages=19–24 |doi=10.1109/ICPS49255.2021.9468219 |isbn=978-1-7281-6207-2}}</ref> The analysis of relevant literature reveals a research gap in the combination of both approaches (RDM and DTs), which the authors intend to address with this work. | ||

In this paper, a centralized solution-based approach for data processing and storage is chosen, which is in contrast to the decentralized practice in RDM. Common problems of data management include having many locally, decentrally distributed research data; missing access authorizations; and missing experimental references, which is why the results become unusable over long periods of time. The resulting replication of data is followed by inconsistencies and interoperability issues. | In this paper, a centralized solution-based approach for data processing and storage is chosen, which is in contrast to the decentralized practice in RDM. Common problems of data management include having many locally, decentrally distributed research data; missing access authorizations; and missing experimental references, which is why the results become unusable over long periods of time. The resulting replication of data is followed by inconsistencies and interoperability issues.<ref>{{Citation |last=Pang |first=Candy |last2=Szafron |first2=Duane |date=2014 |editor-last=Franch |editor-first=Xavier |editor2-last=Ghose |editor2-first=Aditya K. |editor3-last=Lewis |editor3-first=Grace A. |editor4-last=Bhiri |editor4-first=Sami |title=Single Source of Truth (SSOT) for Service Oriented Architecture (SOA) |url=http://link.springer.com/10.1007/978-3-662-45391-9_50 |work=Service-Oriented Computing |language=en |publisher=Springer Berlin Heidelberg |place=Berlin, Heidelberg |volume=8831 |pages=575–589 |doi=10.1007/978-3-662-45391-9_50 |isbn=978-3-662-45390-2 |accessdate=2023-09-12}}</ref> Furthermore, these circumstances were also determined by empirical surveys at the authors’ institute. Therefore, a holistic infrastructure for data management is introduced, starting with the collection of the measurement series of the physical devices, up to the final reliable reusability of the data. 
Relevant requirements for a sustainable RDM leveraged by intelligent DTs are elaborated based on the related work. Enhancing a reliable RDM with DT paradigms can further increase its efficiency. This forms the basis for an architectural concept for reliable data integration into the infrastructure with the DTs of the fully mapped physical devices. | ||

Due to the broad spectrum and interdisciplinarity of the institution, myriad data of different origins, forms, and quantities are created. The generic concept of DT allows evaluation units to be created agnostically from their specific use cases. Not only do the physical measuring devices and apparatuses benefit in the form of flexible reconfiguration through the possibilities of providing their virtual representation with intelligent functions, but also directly through the great variety of harmonized data structures and interfaces made possible by DTs. The bidirectional communication stream between the twins enables the physical devices to be directly influenced. Accordingly, parameterization of the physical device takes place dynamically using the DT, instead of statically using firmware as is usually the case. In addition, due to the real-time data transmission and the seamless integration of the DT, an immediate and reliable response to outliers is possible. Both data management and DTs as disruptive technology are mutual enablers in terms of their realization. | Due to the broad spectrum and interdisciplinarity of the institution, myriad data of different origins, forms, and quantities are created. The generic concept of DT allows evaluation units to be created agnostically from their specific use cases. Not only do the physical measuring devices and apparatuses benefit in the form of flexible reconfiguration through the possibilities of providing their virtual representation with intelligent functions, but also directly through the great variety of harmonized data structures and interfaces made possible by DTs. The bidirectional communication stream between the twins enables the physical devices to be directly influenced. Accordingly, parameterization of the physical device takes place dynamically using the DT, instead of statically using firmware as is usually the case. 
In addition, due to the real-time data transmission and the seamless integration of the DT, an immediate and reliable response to outliers is possible. Both data management and DTs as disruptive technology are mutual enablers in terms of their realization.<ref name=":0" /> Therefore, the designed infrastructure is based on the interacting functionality of both technologies to leverage their synergies providing sustainable and reliable data management. In order to substantiate the feasibility and practicability, a demo implementation of a measuring device within the realized infrastructure is carried out using a photometrical measuring device developed at the institute. This also forms the basis for the proof of concept and the evaluation of the overall system. | ||
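The dynamic parameterization and outlier response described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the class name <code>PhotometerTwin</code>, the parameter names <code>sample_interval_s</code> and <code>max_abs</code>, and the simple threshold rule are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class PhotometerTwin:
    """Hypothetical DT of a photometer; names and parameters are assumptions."""
    # Parameters live in the DT rather than in static device firmware.
    params: dict = field(
        default_factory=lambda: {"sample_interval_s": 1.0, "max_abs": 2.0}
    )
    history: list = field(default_factory=list)

    def reconfigure(self, **changes):
        """Update parameters at runtime and return the set pushed to the device."""
        self.params.update(changes)
        return dict(self.params)

    def ingest(self, absorbance):
        """Store a reading and answer immediately with an instruction for the PT."""
        self.history.append(absorbance)
        if absorbance > self.params["max_abs"]:
            # Assumed outlier rule: a plain upper bound on the reading.
            return {"action": "pause", "reason": "outlier"}
        return {"action": "continue"}


twin = PhotometerTwin()
print(twin.ingest(0.5))            # reading is within the default bound
twin.reconfigure(max_abs=0.4)      # tighten the bound without touching firmware
print(twin.ingest(0.5))            # the same reading is now flagged
```

In this sketch the threshold is changed on the twin alone; a real system would additionally have to transmit the updated parameters to the device over the bidirectional stream.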

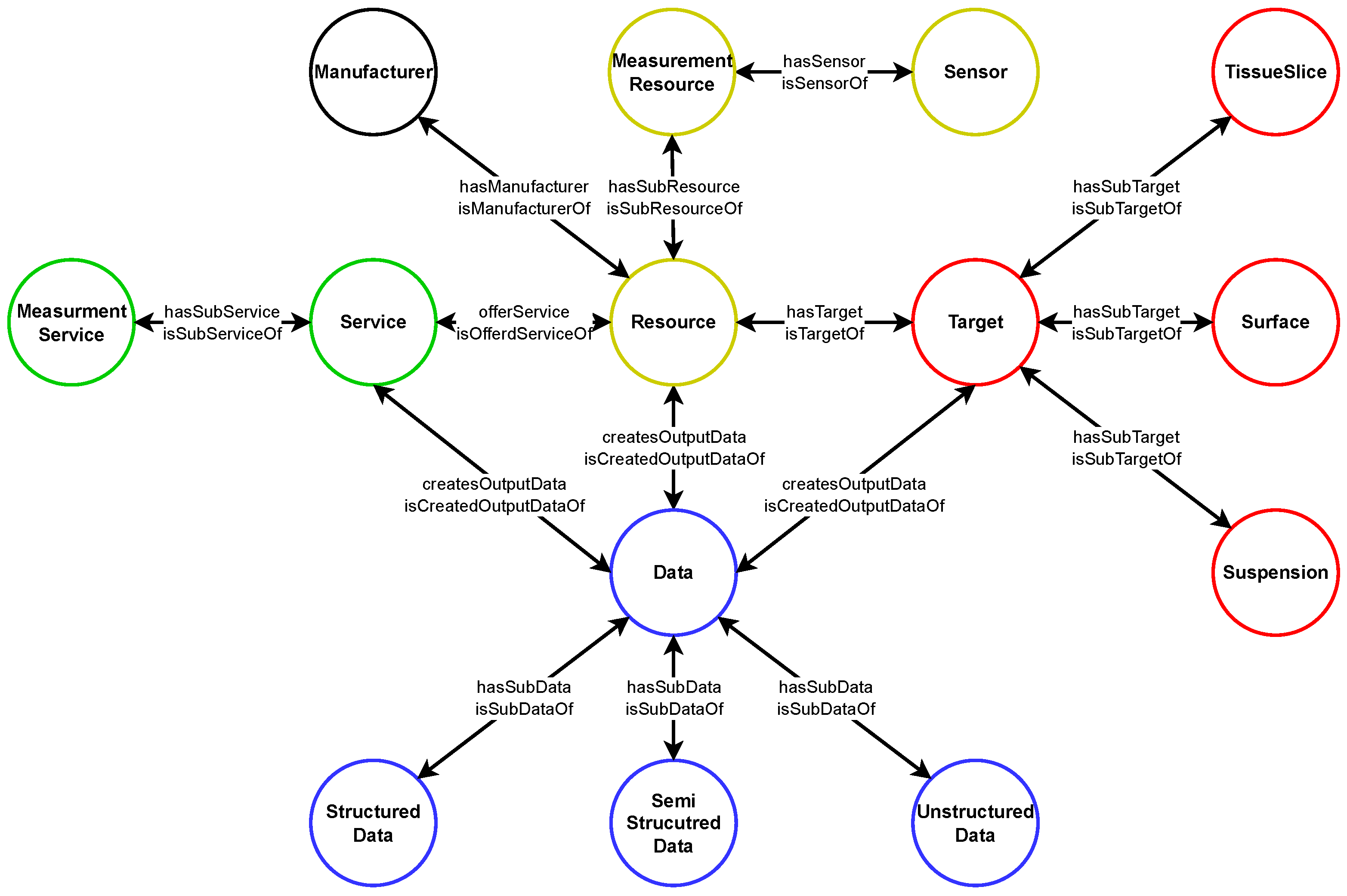

As main contributions, the paper (1) presents a new type of approach for dealing with large amounts of research data according to FAIR principles; (2) identifies the need for the use of DTs to break down barriers for the digital transformation in research institutes in order to arm them for Research 4.0; (3) elaborates a high-level knowledge graph that addresses the pending issues of interoperability and meta-representation of experimental data and associated devices; (4) devises an implementation variant for reactive DTs as a basis for later proactive realizations going beyond DTs as pure, passive state representations; and (5) works out a design approach that is highly reconfigurable, using the example of a photometer, which opens up completely new possibilities with less development effort in hardware and software engineering by using the DT rather than the physical device itself. | As main contributions, the paper (1) presents a new type of approach for dealing with large amounts of research data according to FAIR principles; (2) identifies the need for the use of DTs to break down barriers for the digital transformation in research institutes in order to arm them for Research 4.0; (3) elaborates a high-level knowledge graph that addresses the pending issues of interoperability and meta-representation of experimental data and associated devices; (4) devises an implementation variant for reactive DTs as a basis for later proactive realizations going beyond DTs as pure, passive state representations; and (5) works out a design approach that is highly reconfigurable, using the example of a photometer, which opens up completely new possibilities with less development effort in hardware and software engineering by using the DT rather than the physical device itself. | ||

| Line 49: | Line 43: | ||

===Research data management=== | ===Research data management=== | ||

The motivating force for reliable RDM should not be the product per se, but rather the necessity to build a body of knowledge enabling the subsequent integration and reuse of data and knowledge by the research community through a reliable RDM process. | The motivating force for reliable RDM should not be the product per se, but rather the necessity to build a body of knowledge enabling the subsequent integration and reuse of data and knowledge by the research community through a reliable RDM process.<ref name=":1" /> Therefore, the primary objective of RDM is to capture data in order to pave the way for new scientific knowledge in the long term. | ||

To bridge the gap from simple [[information]] to actual knowledge generation in order to bring greater value to researchers, data are the fundamental resource that enables the integration of the physical world with the virtual world, and finally, the interaction with each other. | To bridge the gap from simple [[information]] to actual knowledge generation in order to bring greater value to researchers, data are the fundamental resource that enables the integration of the physical world with the virtual world, and finally, the interaction with each other.<ref>{{Cite journal |last=Miksa |first=Tomasz |last2=Cardoso |first2=Joao |last3=Borbinha |first3=Jose |date=2018-12 |title=Framing the scope of the common data model for machine-actionable Data Management Plans |url=https://ieeexplore.ieee.org/document/8622618/ |journal=2018 IEEE International Conference on Big Data (Big Data) |publisher=IEEE |place=Seattle, WA, USA |pages=2733–2742 |doi=10.1109/BigData.2018.8622618 |isbn=978-1-5386-5035-6}}</ref> The DT as an innovative concept of Industry 4.0 enables the convergence of the physical world with the virtual world through its definition-given bilateral data exchange. Data from physical reality are seamlessly transferred into virtual reality, allowing developed applications and services to influence the behavior and impact on the physical reality. Data are the underlying structure that enables the DT; as such, having good data management practices in place provides the realization of the concept.<ref name=":0" /> | ||

Specifically, in the context of the ongoing advances in innovative technologies, data have evolved from being merely static in nature to being a continuous stream of information. | Specifically, in the context of the ongoing advances in innovative technologies, data have evolved from being merely static in nature to being a continuous stream of information.<ref name=":4">{{Cite journal |last=Gray |first=Jim |last2=Liu |first2=David T. |last3=Nieto-Santisteban |first3=Maria |last4=Szalay |first4=Alex |last5=DeWitt |first5=David J. |last6=Heber |first6=Gerd |date=2005-12 |title=Scientific data management in the coming decade |url=https://dl.acm.org/doi/10.1145/1107499.1107503 |journal=ACM SIGMOD Record |language=en |volume=34 |issue=4 |pages=34–41 |doi=10.1145/1107499.1107503 |issn=0163-5808}}</ref> In practice, the data generated in research activities are commonly stored in a decentralized manner on the computers of individual researchers or on local data mediums.<ref name=":3" /> A recent study showed that only 12 percent of research data is stored in reliable repositories accessible by others. The far greater part, the so-called “shadow data,” remains in the hands of the researchers, resulting in the loss of non-reproducible data sets, devoid of the possibility of extracting further knowledge from this data.<ref name=":2" /> In addition, the [[Backup|backed-up]] data may become inconsistent and lose significance without the entire measurement series being available. According to Schadt ''et al.''<ref>{{Cite journal |last=Schadt |first=Eric E. |last2=Linderman |first2=Michael D. |last3=Sorenson |first3=Jon |last4=Lee |first4=Lawrence |last5=Nolan |first5=Garry P. 
|date=2010-09 |title=Computational solutions to large-scale data management and analysis |url=https://www.nature.com/articles/nrg2857 |journal=Nature Reviews Genetics |language=en |volume=11 |issue=9 |pages=647–657 |doi=10.1038/nrg2857 |issn=1471-0056 |pmc=PMC3124937 |pmid=20717155}}</ref>, the most efficient method currently available for transmitting large amounts of data to collaborative partners entails copying the data to a sufficiently large storage drive, which is then sent to the intended recipient. This observation can also be confirmed within CeMOS, where this practice of data transfer prevails. Not only is this method inefficient and a barrier to data sharing, but it can also become a security issue when dealing with sensitive data. With such an abundance of data flows, large amounts of data need to be processed and reliably stored, causing RDM to gain momentum within the research community.<ref name=":4" /> | ||

Based on the increasing awareness and the initiated ambition towards a reformation of publishing and communication systems in research, the international coalition of Wilkinson ''et al.'' | Based on the increasing awareness and the initiated ambition towards a reformation of publishing and communication systems in research, the international coalition of Wilkinson ''et al.''<ref name=":1" /> proposed the FAIR Data Principles in 2016. These principles are intended to serve as a guide for those seeking to improve the reusability of their data assets, according to which data are expected to be findable, accessible, interoperable, and reusable (FAIR) throughout the data lifecycle. The FAIR principles are briefly outlined below within the context of the technical requirements, as modeled by Wilkinson ''et al.''<ref name=":1" />: | ||

* '''Findable''': Data are described with extensive [[metadata]], which are given a globally unique and persistent identifier and are stored in a searchable resource. | *'''Findable''': Data are described with extensive [[metadata]], which are given a globally unique and persistent identifier and are stored in a searchable resource. | ||

* '''Accessible''': Metadata are retrievable by their individual identifiers through a standardized protocol, which is publicly free and universally implementable, as well as enabling an authentication procedure. The metadata must remain accessible even if the data are no longer available. | *'''Accessible''': Metadata are retrievable by their individual identifiers through a standardized protocol, which is publicly free and universally implementable, as well as enabling an authentication procedure. The metadata must remain accessible even if the data are no longer available. | ||

* '''Interoperable''': (Meta)-data utilize a formal, broadly applicable language and follow FAIR principles; moreover, references exist between (meta)-data. | *'''Interoperable''': (Meta)-data utilize a formal, broadly applicable language and follow FAIR principles; moreover, references exist between (meta)-data. | ||

* '''Reusable''': (Meta)-data are characterized by relevant attributes and released on the basis of clear data usage licenses. The origin of the (meta)-data is clearly referenced. In addition, (meta)-data comply with domain-relevant community standards. | *'''Reusable''': (Meta)-data are characterized by relevant attributes and released on the basis of clear data usage licenses. The origin of the (meta)-data is clearly referenced. In addition, (meta)-data comply with domain-relevant community standards. | ||
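As a rough illustration of how the four principles translate into technical requirements, the following Python sketch models a metadata record and a searchable index. All class and field names are hypothetical, the in-memory dictionary merely stands in for a real repository, and a UUID-based URN is used only as a placeholder for a persistent identifier scheme.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class MetadataRecord:
    """Hypothetical metadata record; field names are illustrative only."""
    title: str
    license: str        # Reusable: clear data usage license
    provenance: str     # Reusable: origin of the (meta)data
    related_ids: list = field(default_factory=list)  # Interoperable: references
    # Findable: globally unique identifier (placeholder scheme)
    identifier: str = field(default_factory=lambda: f"urn:uuid:{uuid.uuid4()}")


class MetadataIndex:
    """In-memory stand-in for a searchable resource."""

    def __init__(self):
        self._records = {}
        self._data_available = {}

    def register(self, record):
        self._records[record.identifier] = record
        self._data_available[record.identifier] = True
        return record.identifier

    def search(self, term):
        """Findable: simple case-insensitive title search."""
        return [r for r in self._records.values()
                if term.lower() in r.title.lower()]

    def get(self, identifier):
        """Accessible: retrieve metadata by its identifier."""
        return self._records[identifier]

    def withdraw_data(self, identifier):
        """Mark the data as gone; the metadata record is kept."""
        self._data_available[identifier] = False

    def data_available(self, identifier):
        return self._data_available[identifier]


index = MetadataIndex()
pid = index.register(MetadataRecord(
    title="Photometer series 01", license="CC-BY-4.0",
    provenance="photometer-01"))
index.withdraw_data(pid)
print(index.get(pid).title)   # metadata is still retrievable
```

The sketch omits the standardized access protocol and authentication that the Accessible principle also calls for; those depend on the concrete repository.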

While the FAIR principles define the core foundation for a reliable RDM, there is also a need to ensure that the necessary scientific infrastructure is in place to support RDM. | While the FAIR principles define the core foundation for a reliable RDM, there is also a need to ensure that the necessary scientific infrastructure is in place to support RDM.<ref name=":5">{{Cite journal |last=Andreas |first=Fürholz |last2=Martin |first2=Jaekel |date=2021 |title=Data life cycle management pilot projects and implications for research data management at universities of applied sciences |url=https://digitalcollection.zhaw.ch/handle/11475/23073 |language=en |doi=10.21256/ZHAW-23073}}</ref> In addition to the FAIR criteria, the concept of a data management plan (DMP) has a significant impact on the success of any RDM effort. The DMP is a comprehensive document that details the management of a research project’s data throughout its entire lifecycle.<ref name=":6">{{Cite journal |last=Redkina |first=N. S. |date=2019-04 |title=Current Trends in Research Data Management |url=http://link.springer.com/10.3103/S0147688219020035 |journal=Scientific and Technical Information Processing |language=en |volume=46 |issue=2 |pages=53–58 |doi=10.3103/S0147688219020035 |issn=0147-6882}}</ref> A standard DMP in fact does not exist, as it must be individually tailored to the requirements of the respective research project. This requires an extensive understanding of the individual research project and an awareness of the complexity and project-specific research data. The actual implementation of a DMP often creates additional work for researchers, such as data preparation or documentation.<ref name=":5" /> With the aim of providing researchers with a useful instrument, a number of web-based collaborative tools for creating DMPs have since emerged, such as DMPTool, DMPonline, and Research Data Management Organizer (RDMO).<ref>{{Cite journal |last=Engelhardt, C.; Enke, H.; Klar, J. et al. 
|year=2017 |title=Research Data Management Organiser |url=http://www-archive.cseas.kyoto-u.ac.jp/ipres2017.jp/wp-content/uploads/27Claudia-Engelhardt.pdf |format=PDF |journal=Proceedings of the 14th International Conference on Digital Preservation |pages=25–29}}</ref> | ||

In addition to the benefits already mentioned, the use of a research data infrastructure facilitates the visibility of scientists’ research as well as identifying new collaboration partners in industry, research, or funding bodies. | In addition to the benefits already mentioned, the use of a research data infrastructure facilitates the visibility of scientists’ research as well as identifying new collaboration partners in industry, research, or funding bodies.<ref name=":3" /><ref name=":5" /> In the meantime, funding bodies in particular have recognized the necessity of effective RDM, making it a prerequisite for the submission of research proposals.<ref name=":5" /><ref name=":6" /> | ||

===Digital twins=== | ===Digital twins=== | ||

The first pioneering principles for twinning systems can be dated back to training and simulation facilities of the National Aeronautics and Space Administration (NASA). In 1970, these facilities gained particular prominence during the thirteenth mission of the Apollo lunar landing program. Using a full-scale simulation environment of the command and lunar landing capsule, NASA engineers on Earth mirrored the condition of the seriously damaged spacecraft and tested all necessary operations for a successful return of the astronauts. All the possibilities could thus be simulated and validated before executing the real protocol to avoid the potential fatal outcome of a mishandling. | The first pioneering principles for twinning systems can be dated back to training and simulation facilities of the National Aeronautics and Space Administration (NASA). In 1970, these facilities gained particular prominence during the thirteenth mission of the Apollo lunar landing program. Using a full-scale simulation environment of the command and lunar landing capsule, NASA engineers on Earth mirrored the condition of the seriously damaged spacecraft and tested all necessary operations for a successful return of the astronauts. All the possibilities could thus be simulated and validated before executing the real protocol to avoid the potential fatal outcome of a mishandling.<ref>{{Cite journal |last=Rosen |first=Roland |last2=von Wichert |first2=Georg |last3=Lo |first3=George |last4=Bettenhausen |first4=Kurt D. 
|date=2015 |title=About The Importance of Autonomy and Digital Twins for the Future of Manufacturing |url=https://linkinghub.elsevier.com/retrieve/pii/S2405896315003808 |journal=IFAC-PapersOnLine |language=en |volume=48 |issue=3 |pages=567–572 |doi=10.1016/j.ifacol.2015.06.141}}</ref><ref>{{Citation |last=Wang |first=Zongyan |date=2020-03-18 |editor-last=Bányai |editor-first=Tamás |editor2-last=Petrillo |editor2-first=Antonella |editor3-last=De Felice |editor3-first=Fabio |title=Digital Twin Technology |url=https://www.intechopen.com/books/industry-4-0-impact-on-intelligent-logistics-and-manufacturing/digital-twin-technology |work=Industry 4.0 - Impact on Intelligent Logistics and Manufacturing |language=en |publisher=IntechOpen |doi=10.5772/intechopen.80974 |isbn=978-953-51-6996-3 |accessdate=2023-09-12}}</ref> The actual paradigm of a virtual representation of physical entities was initiated later in 2002. After the first introduction, Michael Grieves further developed his product life cycle (PLC) model, which was later given the term "digital twin" by NASA engineer John Vickers. The mirrored systems approach was popularized in 2010 when it was incorporated into NASA’s technical road map.<ref>{{Cite journal |last=Barricelli |first=Barbara Rita |last2=Casiraghi |first2=Elena |last3=Fogli |first3=Daniela |date=2019 |title=A Survey on Digital Twin: Definitions, Characteristics, Applications, and Design Implications |url=https://ieeexplore.ieee.org/document/8901113/ |journal=IEEE Access |volume=7 |pages=167653–167671 |doi=10.1109/ACCESS.2019.2953499 |issn=2169-3536}}</ref><ref>{{Cite web |last=Shafto, M.; Conroy, M.; Doyle, R. et al. 
|date=April 2012 |title=Modeling, Simulation, Information Technology & Processing Roadmap |url=https://www.nasa.gov/sites/default/files/501321main_TA11-ID_rev4_NRC-wTASR.pdf |format=PDF |publisher=National Aeronautics and Space Administration}}</ref><ref name=":7">{{Cite journal |last=Grieves |first=Michael |date=2022-05-25 |title=Intelligent digital twins and the development and management of complex systems |url=https://digitaltwin1.org/articles/2-8/v1 |journal=Digital Twin |language=en |volume=2 |pages=8 |doi=10.12688/digitaltwin.17574.1 |issn=2752-5783}}</ref> | ||

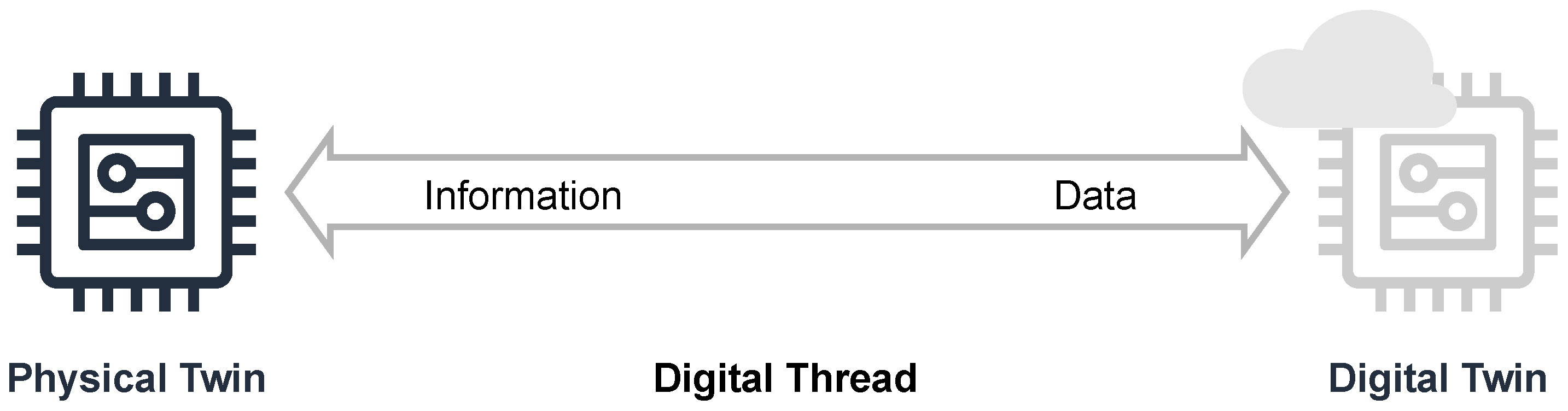

The fundamental concept can be divided into the duality of the physical and virtual world. According to Figure 1, the physical world or space contains tangible components, i.e., machines, apparatuses, production assets, measurement devices, or even physical processes, the so-called PTs. In this context, the illustration shows a stylized device of arbitrary complexity on the left-hand side. On the right side, its virtual counterpart is shown in the virtual world or space. The coexistence of both is ensured by the bilateral stream of data and information, which is introduced as a digital thread. All raw data accumulated from the physical world are sent by the PT to its DT, which aggregates them and provides accessibility. Vice versa, by processing these data, the DT provides the PT with refined analytical information. Each PT is allocated to precisely one DT. One of the goals is to transfer work activities from the physical world to the virtual world so that efficiency and resources are preserved. | The fundamental concept can be divided into the duality of the physical and virtual world. According to Figure 1, the physical world or space contains tangible components, i.e., machines, apparatuses, production assets, measurement devices, or even physical processes, the so-called PTs. In this context, the illustration shows a stylized device of arbitrary complexity on the left-hand side. On the right side, its virtual counterpart is shown in the virtual world or space. The coexistence of both is ensured by the bilateral stream of data and information, which is introduced as a digital thread. All raw data accumulated from the physical world are sent by the PT to its DT, which aggregates them and provides accessibility. Vice versa, by processing these data, the DT provides the PT with refined analytical information. Each PT is allocated to precisely one DT. 
One of the goals is to transfer work activities from the physical world to the virtual world so that efficiency and resources are preserved.<ref name=":7" /> Systems with a multitude of devices especially require flexible approaches for orchestration. It must be possible to vary, reschedule, and reconfigure processes and devices.<ref>{{Cite journal |last=Dorofeev |first=Kirill |last2=Zoitl |first2=Alois |date=2018-07 |title=Skill-based Engineering Approach using OPC UA Programs |url=https://ieeexplore.ieee.org/document/8471978/ |journal=2018 IEEE 16th International Conference on Industrial Informatics (INDIN) |publisher=IEEE |place=Porto |pages=1098–1103 |doi=10.1109/INDIN.2018.8471978 |isbn=978-1-5386-4829-2}}</ref> Twin technologies act as enablers for this, providing the greatest possible degree of freedom. | ||

| Line 78: | Line 72: | ||

{| border="0" cellpadding="5" cellspacing="0" width="700px" | {| border="0" cellpadding="5" cellspacing="0" width="700px" | ||

|- | |- | ||

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 1.''' Concept of digital twins according to Grieves. | | style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 1.''' Concept of digital twins according to Grieves.<ref name=":8" /><ref name=":7" /></blockquote> | ||

|- | |- | ||

|} | |} | ||

|} | |} | ||

In the evolution of DTs, gradations concerning integration depth can be identified. A distinction is made between "digital model," "digital shadow," and the "digital twin" itself. Sepasgozar | In the evolution of DTs, gradations concerning integration depth can be identified. A distinction is made between "digital model," "digital shadow," and the "digital twin" itself. Sepasgozar<ref>{{Cite journal |last=Sepasgozar |first=Samad M. E. |date=2021-04-02 |title=Differentiating Digital Twin from Digital Shadow: Elucidating a Paradigm Shift to Expedite a Smart, Sustainable Built Environment |url=https://www.mdpi.com/2075-5309/11/4/151 |journal=Buildings |language=en |volume=11 |issue=4 |pages=151 |doi=10.3390/buildings11040151 |issn=2075-5309}}</ref> investigates this coherence and elaborates that digital models are created before the actual physical life cycle of a DT, whereas digital shadows have a unidirectional mirroring of a physical entity. To meet the characteristics of a real twin, communication must be in a bivalent way. Van der Valk ''et al.''<ref>{{Cite journal |last=van der Valk |first=Hendrik |last2=Haße |first2=Hendrik |last3=Möller |first3=Frederik |last4=Otto |first4=Boris |date=2022-06 |title=Archetypes of Digital Twins |url=https://link.springer.com/10.1007/s12599-021-00727-7 |journal=Business & Information Systems Engineering |language=en |volume=64 |issue=3 |pages=375–391 |doi=10.1007/s12599-021-00727-7 |issn=2363-7005}}</ref> deduce DT archetypes from characteristics as well as industry interviews. Starting from basic digital twins which, similar to a digital shadow, just represent the state of a physical object, up to increasingly complex twin variations, the following archetypes are further differentiated: enriched digital twin, autonomous control twin, enhanced autonomous control twin, exhaustive twin. 
Starting with the autonomous control twin, the DT emerges from its passive role and receives autonomous, intelligent features, which reach their completion in the exhaustive twin. While the first three archetypes can already be found in industry, the more advanced approaches are rather domain-limited or limited to research activities. Grieves<ref name=":7" /> also criticizes this and argues that intelligent DTs must shift from their passive role and become active, online, goal-seeking, and anticipatory. DT technologies still need a long time to reveal their full potential. Just by identifying and focusing on the domain-specific challenges, this lack of utilizing the opportunities can be tackled.<ref name=":9" /><ref>{{Cite journal |last=Singh |first=Maulshree |last2=Fuenmayor |first2=Evert |last3=Hinchy |first3=Eoin |last4=Qiao |first4=Yuansong |last5=Murray |first5=Niall |last6=Devine |first6=Declan |date=2021-05-24 |title=Digital Twin: Origin to Future |url=https://www.mdpi.com/2571-5577/4/2/36 |journal=Applied System Innovation |language=en |volume=4 |issue=2 |pages=36 |doi=10.3390/asi4020036 |issn=2571-5577}}</ref> | ||

To address some of these problems, semantic web technologies are needed to label the required data streams. | To address some of these problems, semantic web technologies are needed to label the required data streams.<ref>{{Cite journal |last=Zehnder |first=Philipp |last2=Riemer |first2=Dominik |date=2018-12 |title=Representing Industrial Data Streams in Digital Twins using Semantic Labeling |url=https://ieeexplore.ieee.org/document/8622400/ |journal=2018 IEEE International Conference on Big Data (Big Data) |publisher=IEEE |place=Seattle, WA, USA |pages=4223–4226 |doi=10.1109/BigData.2018.8622400 |isbn=978-1-5386-5035-6}}</ref> Lehmann ''et al.''<ref name=":11">{{Cite journal |last=Lehmann |first=Joel |last2=Lober |first2=Andreas |last3=Rache |first3=Alessa |last4=Baumgärtel |first4=Hartwig |last5=Reichwald |first5=Julian |date=2022 |title=Collaboration of Semantically Enriched Digital Twins based on a Marketplace Approach: |url=https://www.scitepress.org/DigitalLibrary/Link.aspx?doi=10.5220/0011141200003286 |journal=Proceedings of the 19th International Conference on Wireless Networks and Mobile Systems |publisher=SCITEPRESS - Science and Technology Publications |place=Lisbon, Portugal |pages=35–45 |doi=10.5220/0011141200003286 |isbn=978-989-758-592-0}}</ref> show that a knowledge-based approach for the representation of DTs is indispensable. Only then can interaction between intelligent DTs take place, allowing them to proactively negotiate with one another so that, for example, optimal process flows emerge. Sahlab ''et al.''<ref name=":10" /> use knowledge graphs to refine intelligent DTs. Particularly in industrial applications, these approaches are distinguished by the management of dynamically emerging DTs. Only through reasoning over the knowledge graphs do opportunities for self-adaptation emerge. 
Göppert ''et al.''<ref>{{Cite journal |last=Göppert |first=Amon |last2=Grahn |first2=Lea |last3=Rachner |first3=Jonas |last4=Grunert |first4=Dennis |last5=Hort |first5=Simon |last6=Schmitt |first6=Robert H. |date=2023-06 |title=Pipeline for ontology-based modeling and automated deployment of digital twins for planning and control of manufacturing systems |url=https://link.springer.com/10.1007/s10845-021-01860-6 |journal=Journal of Intelligent Manufacturing |language=en |volume=34 |issue=5 |pages=2133–2152 |doi=10.1007/s10845-021-01860-6 |issn=0956-5515}}</ref> develop a reference architecture for the development of DTs based on an end-to-end workflow that addresses definition, modeling, and deployment for the description of a pipeline for ontology-based DT creation. Zhang ''et al.''<ref>{{Cite journal |last=Zhang |first=Chao |last2=Zhou |first2=Guanghui |last3=He |first3=Jun |last4=Li |first4=Zhi |last5=Cheng |first5=Wei |date=2019 |title=A data- and knowledge-driven framework for digital twin manufacturing cell |url=https://linkinghub.elsevier.com/retrieve/pii/S2212827119306985 |journal=Procedia CIRP |language=en |volume=83 |pages=345–350 |doi=10.1016/j.procir.2019.04.084}}</ref> combine DTs, dynamic knowledge bases, and knowledge-based intelligent skills to realize an autonomous framework for manufacturing cells. Due to the manufacturing context, other ontologies are relevant for the definition phase. Therefore, various other requirements and constraints are needed for different applications. | ||

Finally, Segovia and Garcia-Alfaro | Finally, Segovia and Garcia-Alfaro<ref>{{Cite journal |last=Segovia |first=Mariana |last2=Garcia-Alfaro |first2=Joaquin |date=2022-07-20 |title=Design, Modeling and Implementation of Digital Twins |url=https://www.mdpi.com/1424-8220/22/14/5396 |journal=Sensors |language=en |volume=22 |issue=14 |pages=5396 |doi=10.3390/s22145396 |issn=1424-8220 |pmc=PMC9318241 |pmid=35891076}}</ref> investigate DTs in terms of design, modeling, and implementation and derive functional specifications. Lober ''et al.''<ref>{{Cite journal |last=Lober |first=Andreas |last2=Lehmann |first2=Joel |last3=Hausermann |first3=Tim |last4=Reichwald |first4=Julian |last5=Baumgartel |first5=Hartwig |date=2022-11-22 |title=Improving the Engineering Process of Control Systems Based on Digital Twin Specifications |url=https://ieeexplore.ieee.org/document/9965259/ |journal=2022 4th International Conference on Emerging Trends in Electrical, Electronic and Communications Engineering (ELECOM) |publisher=IEEE |place=Mauritius |pages=1–6 |doi=10.1109/ELECOM54934.2022.9965259 |isbn=978-1-6654-6697-4}}</ref> also elaborate general specifications for DTs in their work on improving control systems based on them. Introducing a general framework and use case studies, Onaji ''et al.''<ref>{{Cite journal |last=Onaji |first=Igiri |last2=Tiwari |first2=Divya |last3=Soulatiantork |first3=Payam |last4=Song |first4=Boyang |last5=Tiwari |first5=Ashutosh |date=2022-08-03 |title=Digital twin in manufacturing: conceptual framework and case studies |url=https://www.tandfonline.com/doi/full/10.1080/0951192X.2022.2027014 |journal=International Journal of Computer Integrated Manufacturing |language=en |volume=35 |issue=8 |pages=831–858 |doi=10.1080/0951192X.2022.2027014 |issn=0951-192X}}</ref> show important characteristics that DTs must fulfil. | ||

In accordance with the insights outlined above and the specifically developed guidelines, the following requirements have to be considered in the present work to realize proper virtual representations of physical entities: | In accordance with the insights outlined above and the specifically developed guidelines, the following requirements have to be considered in the present work to realize proper virtual representations of physical entities: | ||

# '''Replication, representation, and interoperability''': The virtual counterpart of a physical entity should be as detailed as possible, but at the same time only as complex as required, without violating the fidelity of the replicated device. A representation should not only include the data of a device but also describe the meaning of this data to lay the foundations for autonomous interoperability. | #'''Replication, representation, and interoperability''': The virtual counterpart of a physical entity should be as detailed as possible, but at the same time only as complex as required, without violating the fidelity of the replicated device. A representation should not only include the data of a device but also describe the meaning of this data to lay the foundations for autonomous interoperability. | ||

# '''Interconnectivity and data acquisition''': All physical devices must be connected bi-directionally via suitable communication standards. The incoming data must be processed in a time-appropriate manner and reflected in the twin. The data forms to be taken into account can be of a descriptive, static, or dynamic nature and must be considered accordingly during processing. Processed information from the DT must also be reflected back into the PT. | #'''Interconnectivity and data acquisition''': All physical devices must be connected bi-directionally via suitable communication standards. The incoming data must be processed in a time-appropriate manner and reflected in the twin. The data forms to be taken into account can be of a descriptive, static, or dynamic nature and must be considered accordingly during processing. Processed information from the DT must also be reflected back into the PT. | ||

# '''Data storage''': All aggregated data must be stored immediately, regardless of format. For reusability, the data must be stored with references and labels in suitable storage forms. Tracking not only time but also versions and changes is a useful option here. | #'''Data storage''': All aggregated data must be stored immediately, regardless of format. For reusability, the data must be stored with references and labels in suitable storage forms. Tracking not only time but also versions and changes is a useful option here. | ||

# '''Synchronization''': Whenever possible, the bidirectional data connection should operate in real time and under adequate latency conditions. Each twin should replicate the condition of its counterpart if possible. | #'''Synchronization''': Whenever possible, the bidirectional data connection should operate in real time and under adequate latency conditions. Each twin should replicate the condition of its counterpart if possible. | ||

# '''Interface and interaction''': To enable collaboration and interaction between and with the twins, suitable interfaces are required. On the one hand, it must be possible for machines to exchange and access data; on the other hand, data must be readable and interpretable by humans through suitable interaction modes. | #'''Interface and interaction''': To enable collaboration and interaction between and with the twins, suitable interfaces are required. On the one hand, it must be possible for machines to exchange and access data; on the other hand, data must be readable and interpretable by humans through suitable interaction modes. | ||

# '''Optimization, analytics, simulation, and decision-making''': To gain further advantages, additional features should be accessible through the DTs. Thus, real-time analyses and optimizations, as well as independent algorithms for data evaluation, can be applied to the data basis of the DT. It should be possible to use AI technologies, establish decision making, or use far-reaching simulations, for example. The DT is intended to create context awareness and to facilitate collaborative approaches to reliably choreograph the twins. | #'''Optimization, analytics, simulation, and decision-making''': To gain further advantages, additional features should be accessible through the DTs. Thus, real-time analyses and optimizations, as well as independent algorithms for data evaluation, can be applied to the data basis of the DT. It should be possible to use AI technologies, establish decision making, or use far-reaching simulations, for example. The DT is intended to create context awareness and to facilitate collaborative approaches to reliably choreograph the twins. | ||

# '''Security''': Each entity must comply with current security standards, i.e., authorization, policies, and [[encryption]]. Both privacy and integrity must be preserved. Optionally, the DT could monitor the current security through "what-if" scenarios and initiate countermeasures. | #'''Security''': Each entity must comply with current security standards, i.e., authorization, policies, and [[encryption]]. Both privacy and integrity must be preserved. Optionally, the DT could monitor the current security through "what-if" scenarios and initiate countermeasures. | ||
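Several of the requirements above (a representation that carries the meaning of the data, bidirectional exchange, and immediate, versioned storage) can be illustrated with a small data structure. The following Python sketch is not taken from the described implementation; the class names, fields, and the label <code>example:Photocurrent</code> are hypothetical and serve only to make the requirements concrete.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any


@dataclass
class LabeledValue:
    """A measured value together with the metadata that gives it meaning."""
    value: Any
    unit: str            # e.g. "A" for a photocurrent
    semantic_label: str  # hypothetical label identifying the measured quantity
    timestamp: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


@dataclass
class DigitalTwin:
    """Hypothetical twin: mirrors the PT state and keeps a versioned history."""
    device_id: str
    state: dict[str, LabeledValue] = field(default_factory=dict)
    history: list[tuple[int, str, LabeledValue]] = field(default_factory=list)
    pending_commands: list[dict[str, Any]] = field(default_factory=list)

    def ingest(self, key: str, reading: LabeledValue) -> int:
        """PT -> DT: store the reading immediately and update the mirrored state."""
        version = len(self.history) + 1
        self.history.append((version, key, reading))
        self.state[key] = reading
        return version

    def request(self, parameter: str, value: Any) -> None:
        """DT -> PT: queue refined information or a new setting for the device."""
        self.pending_commands.append({"parameter": parameter, "value": value})


twin = DigitalTwin("photometer-01")
version = twin.ingest(
    "photocurrent", LabeledValue(1.2e-9, "A", "example:Photocurrent")
)
twin.request("integration_time_ms", 64)
```

Keeping the append-only <code>history</code> separate from the current <code>state</code> is one simple way to combine synchronization with time and version tracking; a real deployment would persist both in suitable storage rather than in memory.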

After a detailed examination of both concepts (RDM and DTs), it is evident that a symbiosis of the two offers certain advantages. In particular, the requirements for reliable and sustainable RDM align well with the DT characteristics described above. Data management and DTs, the latter as a disruptive technology, are mutual enablers in terms of their realization. | After a detailed examination of both concepts (RDM and DTs), it is evident that a symbiosis of the two offers certain advantages. In particular, the requirements for reliable and sustainable RDM align well with the DT characteristics described above. Data management and DTs, the latter as a disruptive technology, are mutual enablers in terms of their realization.<ref name=":0" /> The works analyzed in this section reveal a gap in research, which this paper attempts to address. The synthesized architecture built out of these pillars is presented subsequently. | ||

==Concept architecture== | ==Concept architecture== | ||

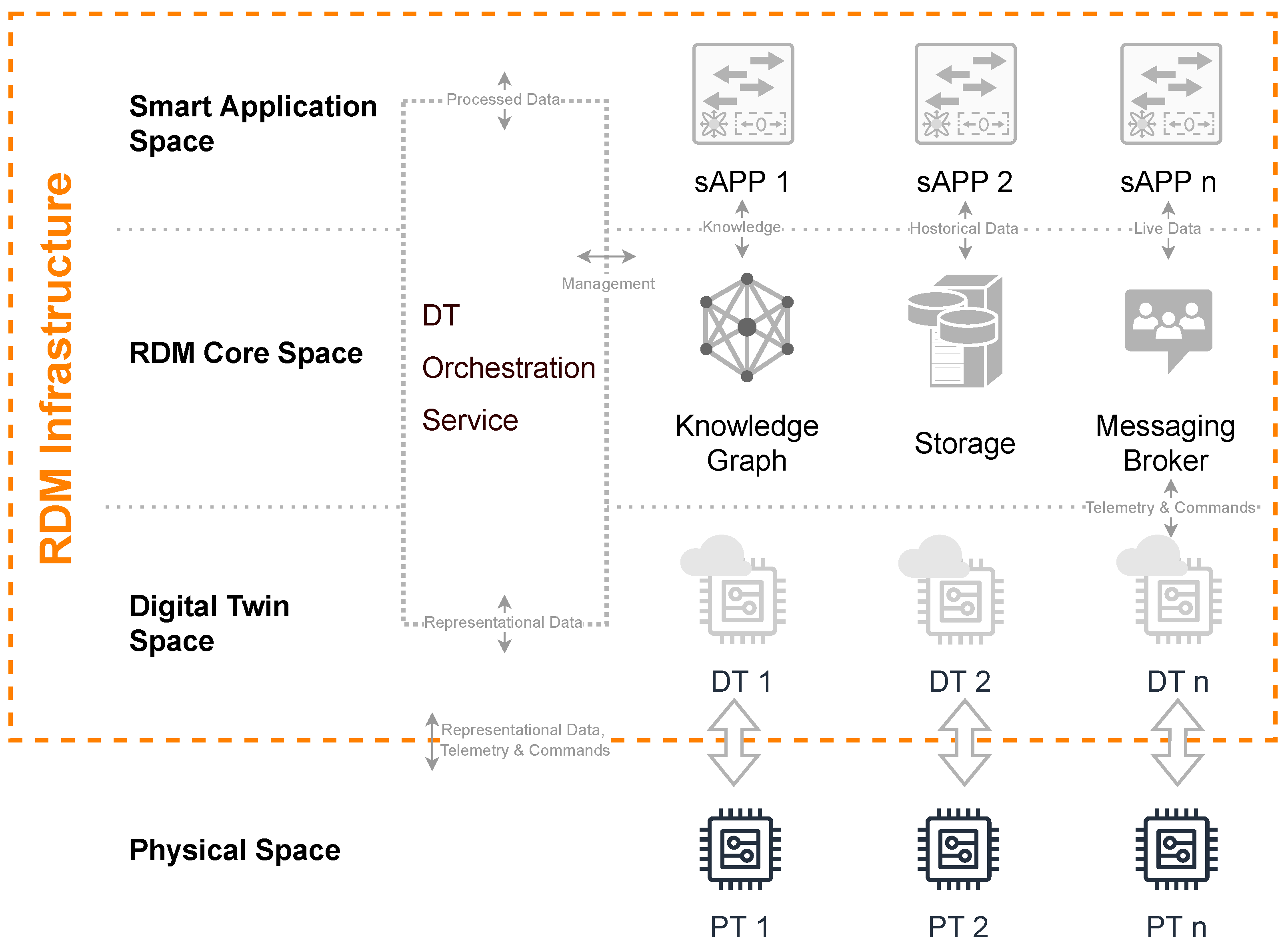

| Line 132: | Line 126: | ||

The top-level Smart Application Space allows arbitrary services to consume data via the interfaces of the messaging broker or the DTOS and use it for their purposes. It would also be conceivable for smart applications to proactively offer their capabilities as a service to the DTs of the devices, or even as a service twin. Realizing this, they could also be represented in the knowledge graph and the Digital Twin Space and get into contact with other DTs. | The top-level Smart Application Space allows arbitrary services to consume data via the interfaces of the messaging broker or the DTOS and use it for their purposes. It would also be conceivable for smart applications to proactively offer their capabilities as a service to the DTs of the devices, or even as a service twin. Realizing this, they could also be represented in the knowledge graph and the Digital Twin Space and get into contact with other DTs. | ||

Due to the nature of the research devices, a partly decentralized (data acquisition and preprocessing are commonly facilitated decentrally), mostly asynchronous event-based architecture is needed. Instead of choosing a monolithic software approach, which makes perfect sense on a central system, independent microservices are utilized here. A microservice-based architecture offers the greatest possible advantages in the context of RDM through separate areas of responsibility, independence, autarky, scalability, and fault tolerance through modularity | Due to the nature of the research devices, a partly decentralized (data acquisition and preprocessing are commonly facilitated decentrally), mostly asynchronous event-based architecture is needed. Instead of choosing a monolithic software approach, which makes perfect sense on a central system, independent microservices are utilized here. A microservice-based architecture offers the greatest possible advantages in the context of RDM through separate areas of responsibility, independence, autarky, scalability, and fault tolerance through modularity.<ref>{{Cite journal |last=Blinowski |first=Grzegorz |last2=Ojdowska |first2=Anna |last3=Przybylek |first3=Adam |date=2022 |title=Monolithic vs. Microservice Architecture: A Performance and Scalability Evaluation |url=https://ieeexplore.ieee.org/document/9717259/ |journal=IEEE Access |volume=10 |pages=20357–20374 |doi=10.1109/ACCESS.2022.3152803 |issn=2169-3536}}</ref> In order to make the later implementation approaches more comprehensible, a typical use case of the authors’ research institute is presented subsequently. | ||

==Research landscape and use case description of a photometrical measurement device | ==Research landscape and use case description of a photometrical measurement device== | ||

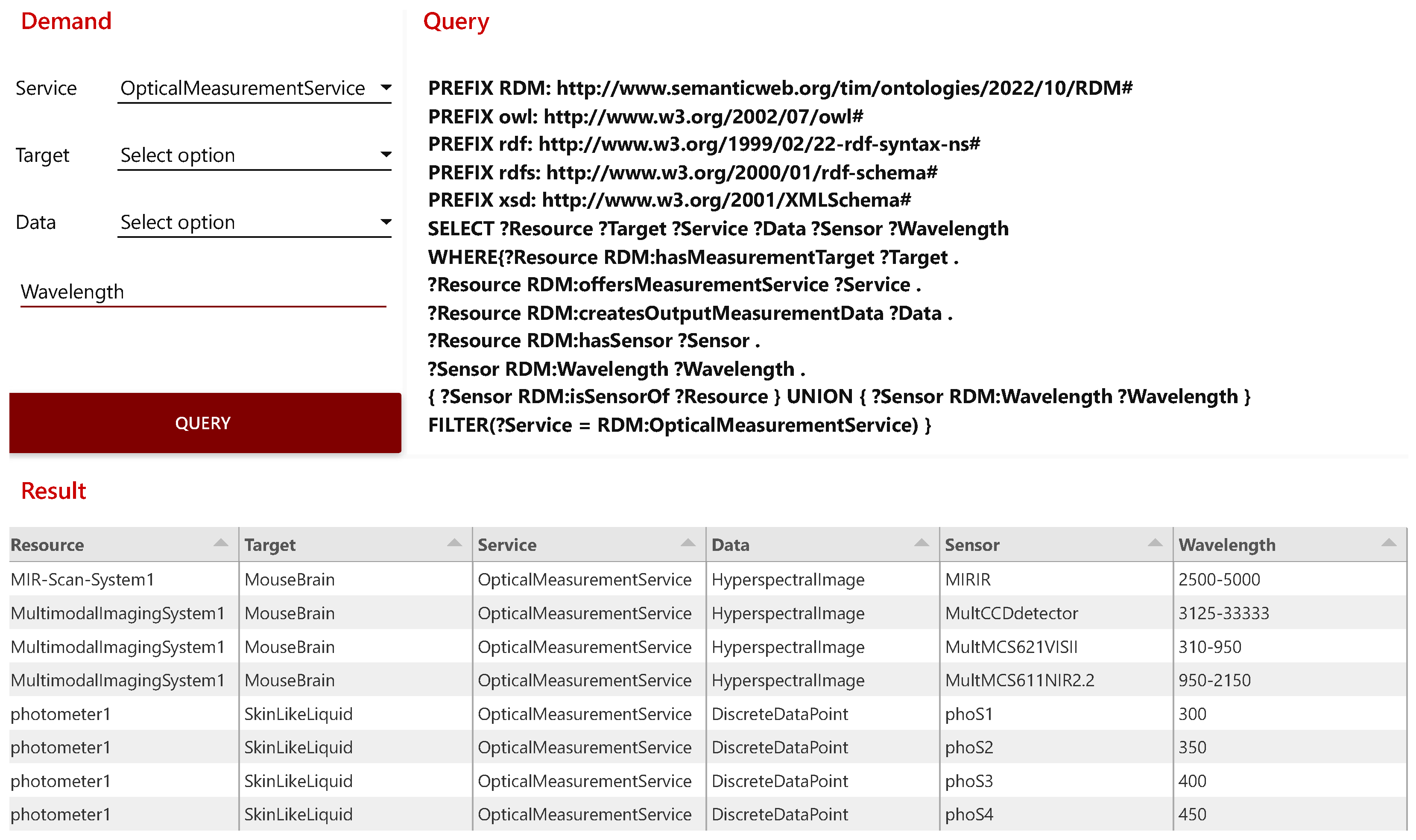

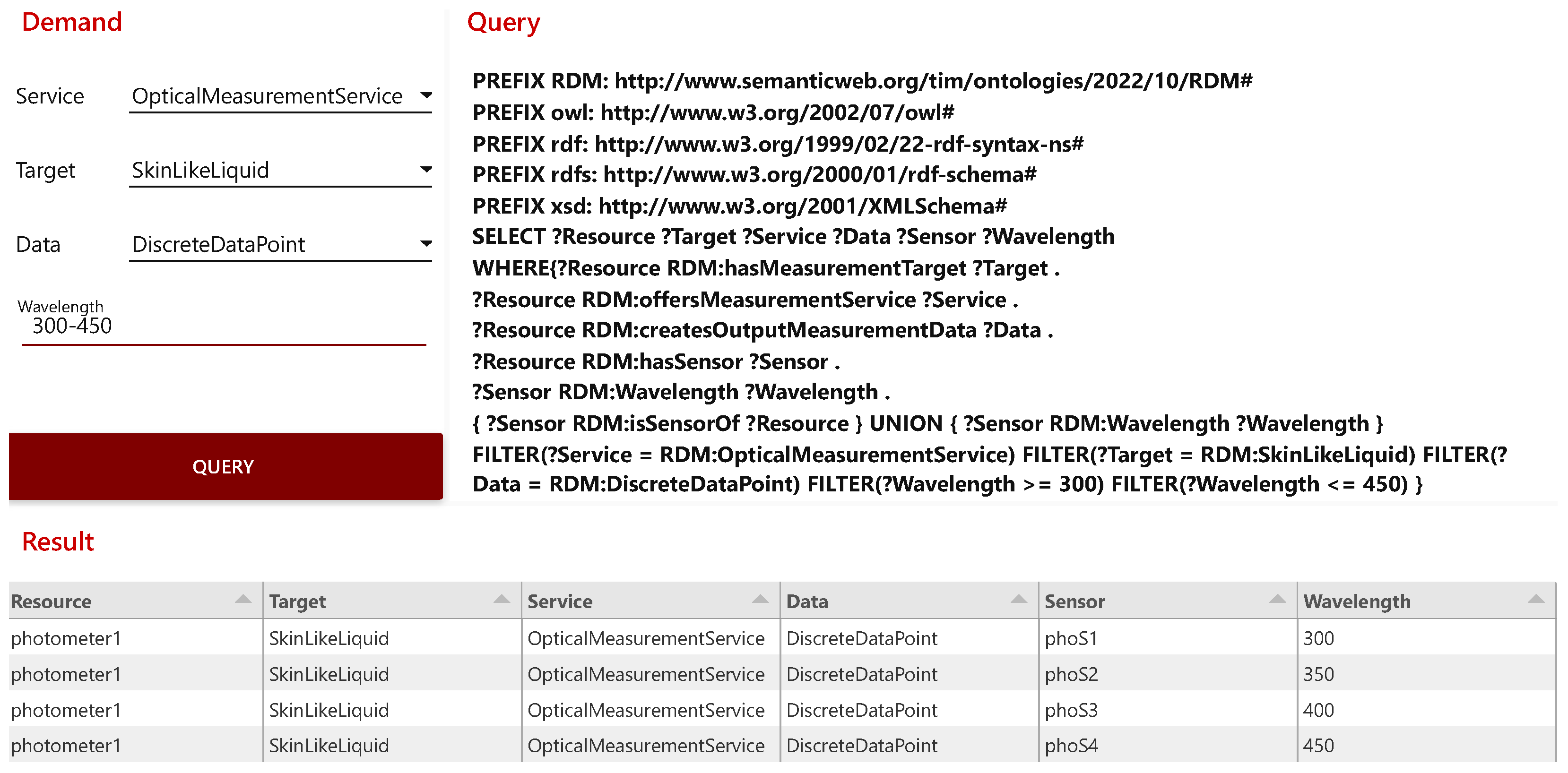

After the basic concept architecture for RDM has been presented, the subsequent implementation of a PT will take place on the basis of a specific measuring device and its use cases in order to integrate it prototypically within the RDM architecture as a first application example. Therefore, the research and device landscape of the institute will be considered first. The CeMOS conducts interdisciplinary research in the fields of medical biotechnology or medical technology and intelligent sensor technology in order to create synergies between mass spectrometry and optical device development. Based on a variety of covered research areas, several devices from different manufacturers, as well as self-built ones, are used to create a wide-ranging heterogeneous equipment landscape. It includes microscopes, cell imagers, and various mass spectrometers for generating hyperspectral images, as well as other hyperspectral imagers for specific use cases and optical measuring devices. Some of these imagers and measuring devices were developed, built, and are currently operating at the institute itself. | After the basic concept architecture for RDM has been presented, the subsequent implementation of a PT will take place on the basis of a specific measuring device and its use cases in order to integrate it prototypically within the RDM architecture as a first application example. Therefore, the research and device landscape of the institute will be considered first. The CeMOS conducts interdisciplinary research in the fields of medical biotechnology or medical technology and intelligent sensor technology in order to create synergies between mass spectrometry and optical device development. Based on a variety of covered research areas, several devices from different manufacturers, as well as self-built ones, are used to create a wide-ranging heterogeneous equipment landscape. 
It includes microscopes, cell imagers, and various mass spectrometers for generating hyperspectral images, as well as other hyperspectral imagers for specific use cases and optical measuring devices. Some of these imagers and measuring devices were developed, built, and are currently operating at the institute itself. | ||

Representatives of these self-developed and manufactured measuring devices are the MIR scanner | Representatives of these self-developed and manufactured measuring devices are the MIR scanner<ref name=":12">{{Cite journal |last=Kümmel |first=Tim |last2=van Marwick |first2=Björn |last3=Rittel |first3=Miriam |last4=Ramallo Guevara |first4=Carina |last5=Wühler |first5=Felix |last6=Teumer |first6=Tobias |last7=Wängler |first7=Björn |last8=Hopf |first8=Carsten |last9=Rädle |first9=Matthias |date=2021-05-28 |title=Rapid brain structure and tumour margin detection on whole frozen tissue sections by fast multiphotometric mid-infrared scanning |url=https://www.nature.com/articles/s41598-021-90777-4 |journal=Scientific Reports |language=en |volume=11 |issue=1 |pages=11307 |doi=10.1038/s41598-021-90777-4 |issn=2045-2322 |pmc=PMC8163866 |pmid=34050224}}</ref>, the Multimodal Imaging System<ref name=":13">{{Cite journal |last=Heintz |first=Annabell |last2=Sold |first2=Sebastian |last3=Wühler |first3=Felix |last4=Dyckow |first4=Julia |last5=Schirmer |first5=Lucas |last6=Beuermann |first6=Thomas |last7=Rädle |first7=Matthias |date=2021-05-23 |title=Design of a Multimodal Imaging System and Its First Application to Distinguish Grey and White Matter of Brain Tissue. A Proof-of-Concept-Study |url=https://www.mdpi.com/2076-3417/11/11/4777 |journal=Applied Sciences |language=en |volume=11 |issue=11 |pages=4777 |doi=10.3390/app11114777 |issn=2076-3417}}</ref>, and a multipurpose, multichannel photometer. The MIR scanner is used for generating hyperspectral images of tissue sections and consists of a laser unit with four lasers with different wavenumbers, a detector unit, a focusing unit, an agile mirror unit, and a movable object slide. 
It is used for frozen section analysis in tumor detection for the identification of tissue morphologies or tumor margins.<ref name=":12" /> The Multimodal Imaging System also generates hyperspectral data for tissue sections with various procedures and consists of a modular upright light microscope combined with a [[Raman spectroscopy|Raman spectrometer]], a visible (VIS) / [[Near-infrared spectroscopy|near-Infrared]] (NIR) reflectance spectrometer, and a detector unit. Its applications are in brightfield, darkfield, and polarization microscopy of normal mouse brain tissue, and an exemplary application provides the ability to make a distinction between white and grey matter.<ref name=":13" /> The majority of all devices currently do not use a network interface. The resulting measurement data are mostly stored locally and manually collected for analysis purposes. Due to the decentralized processing of the data, no problems arose with regard to security and confidentiality. Likewise, due to the low level of automation, no problems occurred with regard to emerging experimental errors or technical failures. Manual intervention could directly mitigate these errors. In the future, with an increased degree of automation of the RDM infrastructure, issues regarding security, confidentiality, and functional safety have to be taken into account. In addition to the previously mentioned devices, the photometer also serves as an essential component of the institute’s research. For the subsequent implementation of the presented architecture, the feasibility is to be proven on the basis of the photometer. | ||

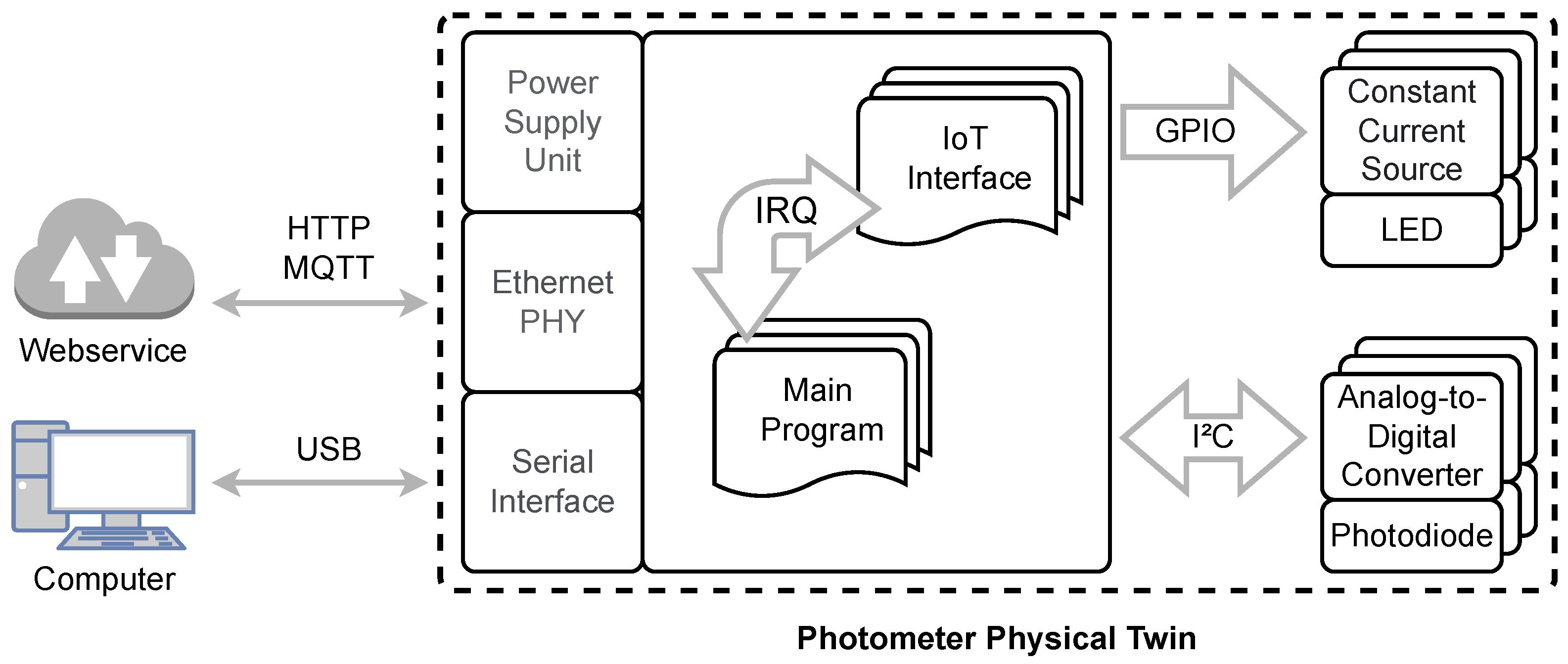

The developed photometer system, schematically shown in Figure 3, essentially consists of three main components: the parts for digitizing the analog sensors, the driver for controlling the light sources, and a powerful microcontroller. | The developed photometer system, schematically shown in Figure 3, essentially consists of three main components: the parts for digitizing the analog sensors, the driver for controlling the light sources, and a powerful microcontroller.<ref>{{Cite web |date=April 2019 |title=i.MX RT1060 Crossover Processors for Consumer Products |url=https://www.pjrc.com/teensy/IMXRT1060CEC_rev0_1.pdf |format=PDF |publisher=NXP B.V |accessdate=08 December 2022}}</ref> These components and their interaction are described in the following. Depending on the application and measuring principle (transmission, reflection), photodiodes with different spectral sensitivities are used. Ideally, these sensors should have high photon sensitivity, fast response time, and low capacitance. Since these criteria are in mutual interaction, an application case-individual consideration is necessary. Exposure of the photodiode causes electrons to be released from the photocathode, resulting in a slight change in the diode’s dark current. A current-to-voltage converter integrated circuit (IC)<ref>{{Cite web |date=2017 |title=AS89010 Current-to-Digital Converter |url=https://ams.com/as89010 |publisher=ams-OSRAM AG |accessdate=06 December 2022}}</ref> senses this photocurrent from the diode. The application-specific integrated circuit (ASIC) is a low-noise sensor interface and is suitable for coupling optical sensors with current output. These input currents are quantized into a digital output signal (with up to 16 bits, depending on the integration time). The integration time can be varied between 1 ms and 1024 ms, and the current sensitivity can be varied in steps from 20 fA/Least Significant Bit (LSB) to 5000 pA/LSB. Measurements can be continuous or manually triggered. 
An advantage of the integration of the input signals performed by the device is the resulting significant increase in the dynamic range. Furthermore, high-frequency components are filtered, and periodic disturbances are suppressed when the integration time is a multiple of their period. | ||
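The relationship between raw counts, current sensitivity, and measurable range can be made concrete with a short calculation. The Python sketch below assumes that the photocurrent is simply the digital count multiplied by the selected sensitivity per LSB and that a sinusoidal disturbance is averaged over the integration window; it does not reproduce the register interface or exact transfer function of the converter, and all function names are illustrative.

```python
import math


def counts_to_current(counts: int, sensitivity_a_per_lsb: float) -> float:
    """Convert a raw 16-bit count into a photocurrent in amperes."""
    if not 0 <= counts <= 0xFFFF:
        raise ValueError("counts must fit into 16 bits")
    return counts * sensitivity_a_per_lsb


def full_scale_current(sensitivity_a_per_lsb: float, bits: int = 16) -> float:
    """Largest current representable at the given sensitivity and resolution."""
    return (2 ** bits - 1) * sensitivity_a_per_lsb


def mean_of_sine_disturbance(period_s: float, integration_time_s: float) -> float:
    """Average of sin(2*pi*t/period) over the window [0, integration_time].

    The result vanishes whenever the integration time is an integer
    multiple of the disturbance period.
    """
    ratio = integration_time_s / period_s
    return (1.0 - math.cos(2.0 * math.pi * ratio)) / (2.0 * math.pi * ratio)


# Sensitivity limits quoted in the text: 20 fA/LSB and 5000 pA/LSB.
finest = full_scale_current(20e-15)
coarsest = full_scale_current(5000e-12)

# A 16 ms disturbance integrated over 64 ms (four full periods) averages out.
residual = mean_of_sine_disturbance(period_s=0.016, integration_time_s=0.064)
```

Under these assumptions, switching between the finest and coarsest sensitivity moves the full-scale current from the nanoampere range to a few hundred microamperes, which illustrates how the configurable sensitivity extends the usable dynamic range.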

| Line 153: | Line 147: | ||

|} | |} | ||

The microcontroller, connected via an inter-integrated circuit (I²C) interface, is configured in 400 kHz fast mode to communicate with the sensor interfaces. The parameters for writing and reading the analog-to-digital | The microcontroller, connected via an inter-integrated circuit (I²C) interface, is configured in 400 kHz fast mode to communicate with the sensor interfaces. The parameters for writing and reading the analog-to-digital converters (ADCs) must follow a format specified by the manufacturer. The current state of each ADC (i.e., measurement running, measurement finished) can also be queried by reading special registers. Likewise, dedicated general purpose input/output (GPIO) pins can be used to signal the status of the ADCs to the controller as an interrupt request (IRQ). This avoids permanent polling of the corresponding register or GPIO pin and saves resources. After a completed measurement, the controller reads the corresponding data packet via the internal interface. Subsequently, a new measurement can be initiated. | ||
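The interrupt-driven readout described above can be sketched as a small simulation. The following Python code is not firmware for the actual device: <code>SimulatedAdc</code> and <code>Controller</code> are hypothetical stand-ins, and the callback plays the role of the IRQ line so that the controller never has to poll a status register.

```python
from typing import Callable, Optional


class SimulatedAdc:
    """Stand-in for one sensor interface; not the real device's register map."""

    def __init__(self) -> None:
        self.busy = False
        self._result = 0
        self.on_ready: Optional[Callable[["SimulatedAdc"], None]] = None

    def start_measurement(self) -> None:
        self.busy = True

    def complete(self, counts: int) -> None:
        """Called by the simulation once the integration time has elapsed."""
        self._result = counts
        self.busy = False
        if self.on_ready is not None:
            self.on_ready(self)  # plays the role of the IRQ signal

    def read_result(self) -> int:
        return self._result


class Controller:
    """Reads each finished measurement and immediately starts the next one."""

    def __init__(self, adc: SimulatedAdc, max_samples: int) -> None:
        self.adc = adc
        self.max_samples = max_samples
        self.samples: list[int] = []
        adc.on_ready = self._handle_irq

    def run(self) -> None:
        self.adc.start_measurement()

    def _handle_irq(self, adc: SimulatedAdc) -> None:
        self.samples.append(adc.read_result())
        if len(self.samples) < self.max_samples:
            adc.start_measurement()


adc = SimulatedAdc()
controller = Controller(adc, max_samples=3)
controller.run()
for counts in (100, 200, 300):
    adc.complete(counts)
```

The controller only does work when a measurement has actually finished, which is the resource saving the text attributes to using interrupt requests instead of permanent polling.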

In addition to communication with the ADCs, the controller has the task of controlling the LEDs. These light sources are controlled via switchable constant current sources. The selection of the light-emitting diodes used is again very much dependent on the selected measuring principle and the detector. In addition to the wavelength and power of the light source, the rise times (𝑡<sub>𝑟𝑖𝑠𝑒</sub>) and fall times (𝑡<sub>𝑓𝑎𝑙𝑙</sub>) in particular must be taken into account in the LED selection. These times can be stored in the controller as parameters of each light source individually. Before starting and after finishing each measurement, these specific times are taken into account. Especially for complex measurement setups, low detection limits, and/or short measurement duration, these parameters have a significant influence on the results and the maximum possible scanning speed. Via an integrated USB connection, all parameters can be configured between the photometer and the computer, and the raw measurement data can be sent. By means of the built-in ethernet PHY IC DP83825 from Texas Instruments | In addition to communication with the ADCs, the controller has the task of controlling the LEDs. These light sources are controlled via switchable constant current sources. The selection of the light-emitting diodes used is again very much dependent on the selected measuring principle and the detector. In addition to the wavelength and power of the light source, the rise times (𝑡<sub>𝑟𝑖𝑠𝑒</sub>) and fall times (𝑡<sub>𝑓𝑎𝑙𝑙</sub>) in particular must be taken into account in the LED selection. These times can be stored in the controller as parameters of each light source individually. Before starting and after finishing each measurement, these specific times are taken into account. 
Especially for complex measurement setups, low detection limits, and/or short measurement duration, these parameters have a significant influence on the results and the maximum possible scanning speed. Via an integrated USB connection, all parameters can be configured between the photometer and the computer, and the raw measurement data can be sent. By means of the built-in ethernet PHY IC DP83825 from Texas Instruments<ref>{{Cite web |date=August 2019 |title=DP83825I: Smallest form factor (3-mm by 3-mm), low-power 10/100-Mbps Ethernet PHY transceiver with 50-MHz c |url=https://www.ti.com/product/DP83825I |publisher=Texas Instruments |accessdate=30 November 2022}}</ref>, 10/100 MBit communication via Ethernet is also possible. An [[internet of things]] (IoT) interface implemented on the software side, consisting of a Hypertext Transfer Protocol (HTTP) server and Message Queueing Telemetry Transport (MQTT) client, enables the connection to further IT infrastructure or web services. | ||
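The influence of the rise and fall times on the achievable scanning speed can be estimated with a simple timing budget. The Python sketch below assumes that the light sources are measured one after another and that each measurement waits for the rise time, integrates, and then waits for the fall time; readout and communication overhead are ignored, and the names and example values are illustrative only.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class LightSource:
    """Per-source timing parameters, as they might be stored in the controller."""
    name: str
    t_rise_s: float
    t_fall_s: float


def cycle_time_s(source: LightSource, integration_time_s: float) -> float:
    """Time for one measurement: settle, integrate, then let the source decay."""
    return source.t_rise_s + integration_time_s + source.t_fall_s


def max_scan_rate_hz(
    sources: Iterable[LightSource], integration_time_s: float
) -> float:
    """Upper bound on complete scans per second for sequential measurements."""
    total = sum(cycle_time_s(s, integration_time_s) for s in sources)
    return 1.0 / total


# Hypothetical example values, not taken from the described device.
led_a = LightSource("led_a", t_rise_s=10e-6, t_fall_s=10e-6)
led_b = LightSource("led_b", t_rise_s=50e-6, t_fall_s=80e-6)

single = cycle_time_s(led_a, integration_time_s=1e-3)
rate = max_scan_rate_hz([led_a, led_b], integration_time_s=1e-3)
```

With short integration times, the rise and fall times make up a growing share of each cycle, which is why they become significant for fast scans and low detection limits.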

The design of the photometer is highly flexible given its configurability and modularity. According to the selected configuration of the individual components, measurements can be performed in wavelength ranges of ultraviolet (UV), VIS, NIR, and infrared (IR). Furthermore, measurements of, e.g., particle sizes can be performed with special probe designs adapted to the task. It is even possible to conduct Raman measurements with probes that are extended by additional optical components. Some specific examples are listed below:

#'''Use of a scattered light sensor for monitoring the dispersed surface in crystallization''': The specific surface area of the dispersed phase in suspensions, emulsions, bubble columns, and aerosols plays a decisive role in enhancing heat and mass transfer processes. This has a direct effect on the space-time yield in large-scale chemical/process engineering production plants. An easy-to-install optical backscatter sensor outputs the dispersed surface area as a direct primary signal under certain boundary conditions. The sensor works even in highly concentrated suspensions and emulsions, where conventional nephelometry already fails. Several trends and limitations have been found so far for the sensor, which can be used in-line in batch and continuously operated crystallizers, even in harsh production environments, and in potentially explosive zones. The specific dispersed surface is directly detected as the primary measurand.<ref name=":14">{{Cite journal |last=Schmitt |first=Lukas |last2=Meyer |first2=Conrad |last3=Schorz |first3=Stefan |last4=Manser |first4=Steffen |last5=Scholl |first5=Stephan |last6=Rädle |first6=Matthias |date=2022-08 |title=Use of a Scattered Light Sensor for Monitoring the Dispersed Surface in Crystallization |url=https://onlinelibrary.wiley.com/doi/10.1002/cite.202200076 |journal=Chemie Ingenieur Technik |language=de |volume=94 |issue=8 |pages=1177–1184 |doi=10.1002/cite.202200076 |issn=0009-286X}}</ref>

#'''Development and application of optical sensors and measurement devices for the detection of deposits during reaction fouling''': In many chemical/pharmaceutical processes, the technically viable efficiencies and throughputs have not been achieved yet because of the reduction in heat transfer (e.g., in heat transfer units, reactors, etc.) due to the formation of wall deposits. Considerable amounts of energy can be saved by reducing or entirely preventing these deposits. Therefore, a measurement device and its optical and electronic parts were developed for the detection and measurement of deposits in polymerization reactors, with in-line monitoring as a simultaneous aim. The design strategy was carried out systematically via theoretical calculations (such as optical ray tracing and photon flux analysis), test designs, [[laboratory]] investigations, and then industrial use. The developed sensors are based on fiber-optic technology and thus can be integrated into the smallest and most complex apparatus, even in explosion-hazardous areas. Critical product and process states in the reactant are detected at an early stage by combining several multi-spectral backscattering technologies. Thus, the formation of deposits can be prevented by changing process parameters.<ref name=":15">{{Cite journal |last=Teumer |first=Tobias |last2=Medina |first2=Isabel |last3=Strischakov |first3=Johann |last4=Schorz |first4=Stefan |last5=Kumari |first5=Pooja |last6=Hohlen |first6=Annika |last7=Scholl |first7=Stephan |last8=Schwede |first8=Christian |last9=Melchin |first9=Timo |last10=Welzel |first10=Stefan |last11=K. Hungenberg |date=2021 |title=Development and application of optical sensors and measurement devices for the detection of deposits during reaction fouling 13th ECCE and 6th ECAB -post 31762 |url=http://rgdoi.net/10.13140/RG.2.2.25284.55681 |language=en |doi=10.13140/RG.2.2.25284.55681}}</ref>

#'''Photometric inline monitoring of the pigment concentration of highly filled coatings''': This involves inline monitoring of particle concentration in highly filled dispersions and paint systems using fiber-optic backscatter sensors. Due to the miniaturization of the distance between emitter and receiver fiber to <600 µm, the transmitted light can also penetrate high dispersion phase fractions of up to 60%. Due to the measurement setup, both transmission and scattering influences are found in the resulting signal. In this setup, the photometer is configured with detectors and light sources for the red wavelength range (660 nm). The measurement interval of 128 ms is sufficiently small to allow very close monitoring of the measured values.<ref name=":16">{{Cite journal |last=Guffart |first=Julia |last2=Bus |first2=Yannick |last3=Nachtmann |first3=Marcel |last4=Lettau |first4=Markus |last5=Schorz |first5=Stefan |last6=Nieder |first6=Helmut |last7=Repke |first7=Jens‐Uwe |last8=Rädle |first8=Matthias |date=2020-06 |title=Photometric Inline Monitoring of Pigment Concentration in Highly Filled Lacquers |url=https://onlinelibrary.wiley.com/doi/10.1002/cite.201900186 |journal=Chemie Ingenieur Technik |language=en |volume=92 |issue=6 |pages=729–735 |doi=10.1002/cite.201900186 |issn=0009-286X}}</ref>

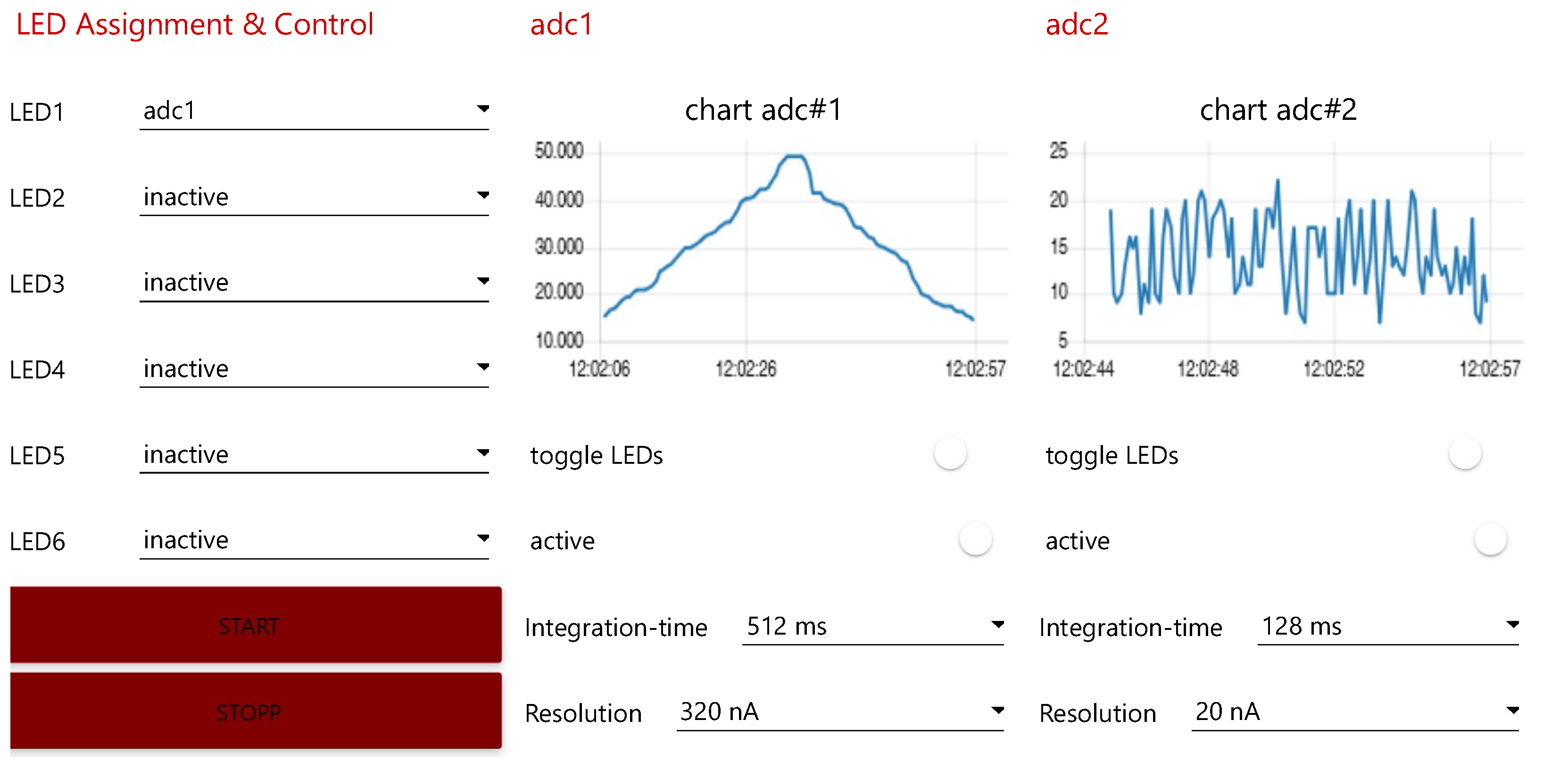

As described above, a number of hardware and software settings and modifications have to be made, especially during the pre-test phase, in order to fulfil the intended task. This usually requires a modification of the firmware of the photometer with the corresponding parameters of the installed components. This time-consuming step, which cannot be performed by every end user, can be eliminated by utilizing the DT of the physical device. Hardware-specific settings can be made comfortably via a graphical user interface (GUI). At the same time, a plausibility check of the selected parameters can be realized in a simple way. Misconfiguring the device thus becomes more difficult, and the end user has the option to check the settings again.
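A plausibility check of this kind could look like the following minimal Python sketch. It is only an illustration under stated assumptions: the parameter names and the limit values are hypothetical and do not describe the authors' GUI or firmware. The example configuration reuses the 660 nm wavelength and 128 ms measurement interval mentioned above; the remaining values are invented.

```python
from typing import Dict, List

# Hypothetical limits, chosen only for illustration.
WAVELENGTH_RANGE_NM = (200.0, 2500.0)
MAX_CURRENT_MA = 100.0


def check_led_config(cfg: Dict[str, float]) -> List[str]:
    """Return a list of human-readable problems; an empty list means
    the configuration passed all checks in this sketch."""
    problems: List[str] = []

    wavelength = cfg.get("wavelength_nm")
    if wavelength is None:
        problems.append("wavelength_nm is missing")
    elif not WAVELENGTH_RANGE_NM[0] <= wavelength <= WAVELENGTH_RANGE_NM[1]:
        problems.append("wavelength_nm is outside the supported range")

    current = cfg.get("current_ma", 0.0)
    if not 0.0 < current <= MAX_CURRENT_MA:
        problems.append("current_ma must be positive and within the limit")

    # Rise and fall times must fit into one measurement interval.
    settle_ms = (cfg.get("t_rise_us", 0.0) + cfg.get("t_fall_us", 0.0)) / 1000.0
    if settle_ms >= cfg.get("interval_ms", 0.0):
        problems.append("rise and fall times do not fit into interval_ms")

    return problems


good = {"wavelength_nm": 660.0, "current_ma": 20.0,
        "t_rise_us": 10.0, "t_fall_us": 15.0, "interval_ms": 128.0}
bad = {"wavelength_nm": 50.0, "current_ma": 0.0,
       "t_rise_us": 90000.0, "t_fall_us": 90000.0, "interval_ms": 128.0}
```

Returning all problems at once, rather than stopping at the first, lets a user interface show the end user every setting that needs a second look.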


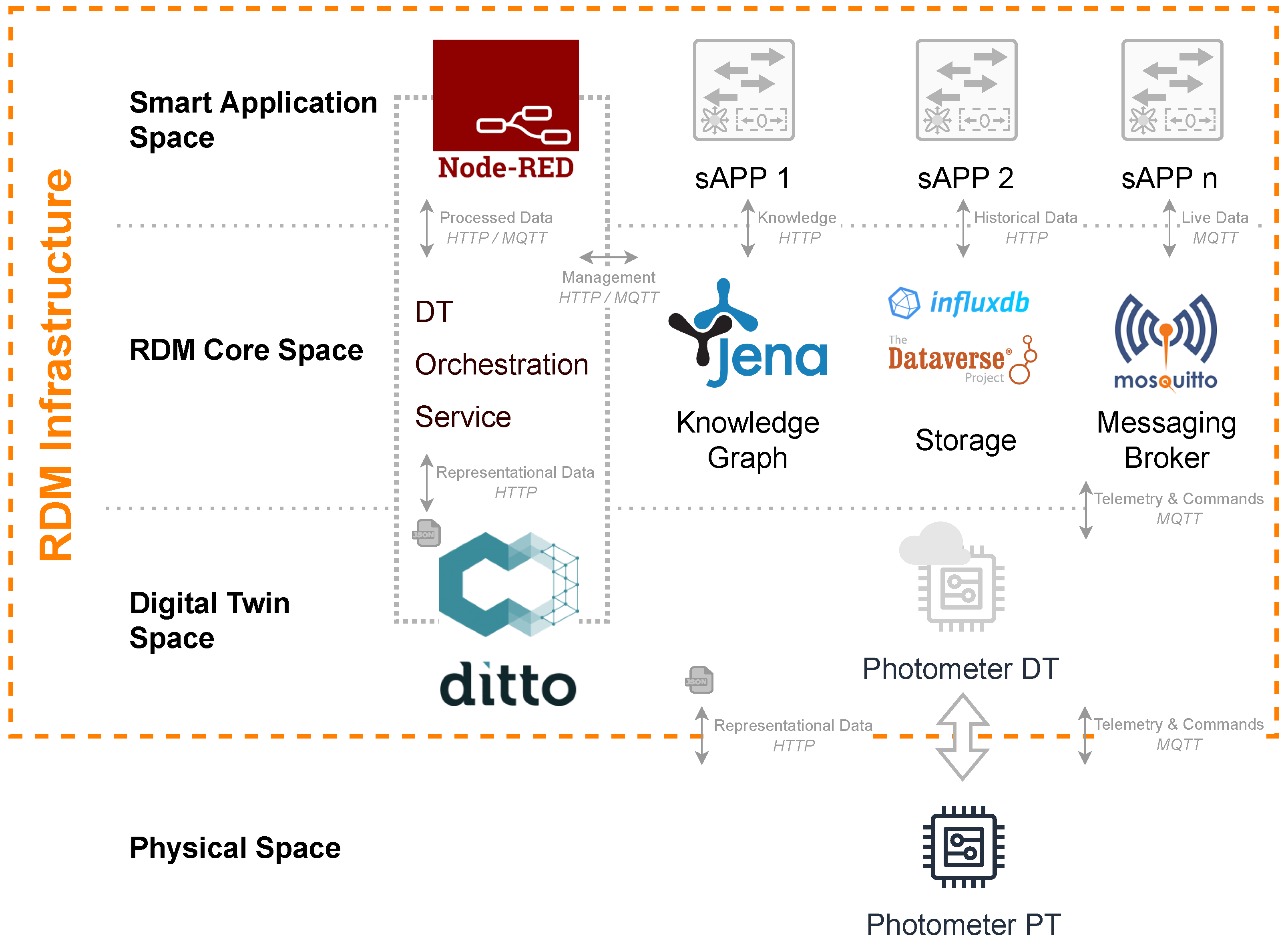

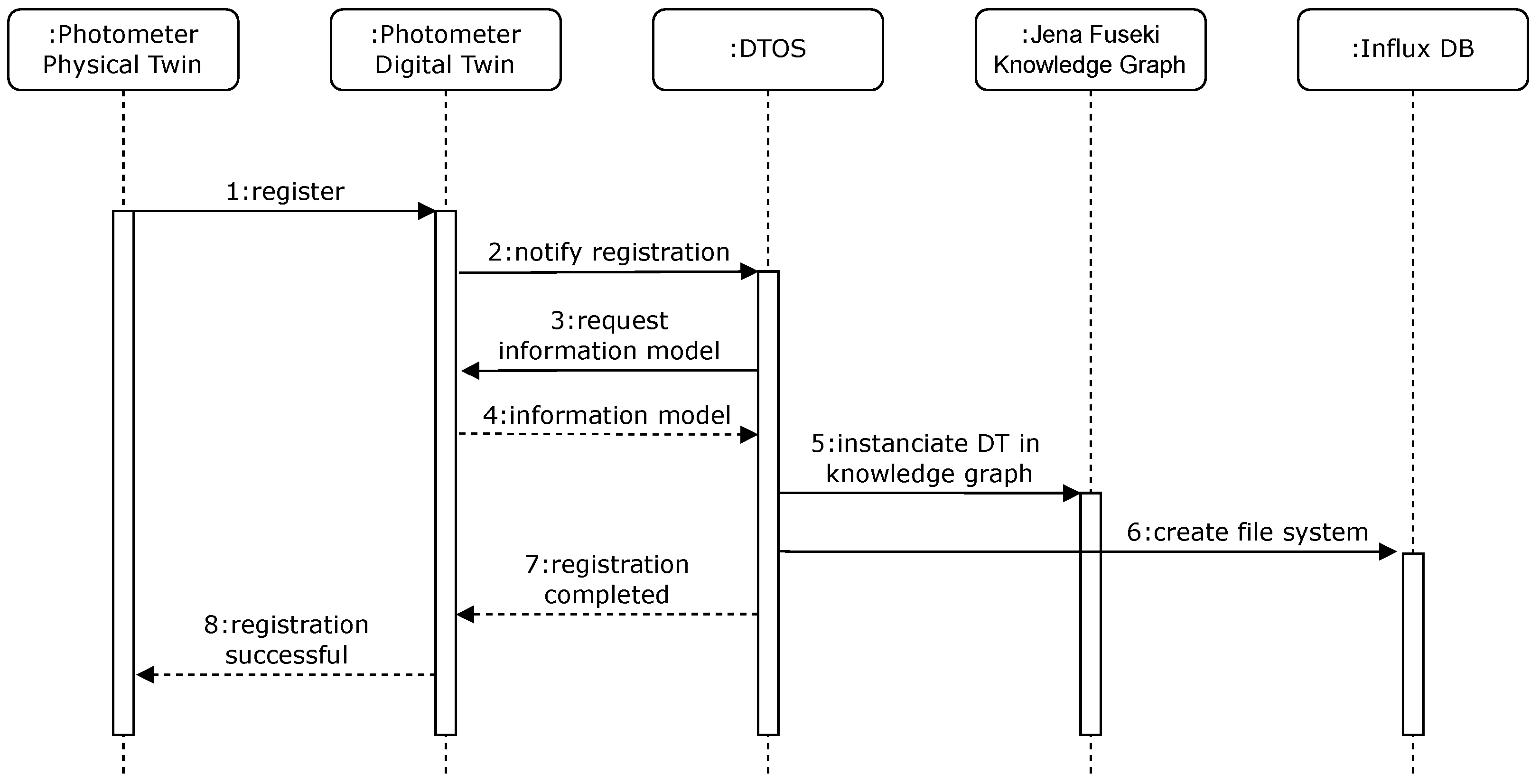

===Digital Twin Space===

At the base of the RDM infrastructure itself, the Digital Twin Space contains the DTs of the physical measuring devices. It is based on the open-source project Eclipse Ditto<ref>{{Cite web |title=Eclipse Ditto documentation overview |work=Eclipse Ditto Documentation |url=https://eclipse.dev/ditto/intro-overview.html |publisher=Eclipse Foundation |accessdate=09 November 2022}}</ref>, which aims to cope with representations of DTs. With a scalable basis, the Ditto project offers the possibility of integrating physical devices and their digital representations at a high abstraction level. Not only the organization but also the entire physical-virtual interaction is thus made possible for further back-end applications in a simplified manner. The PT and its DT can be accessed bi-directionally via the provided [[application programming interface]] (API). As a result, the Physical Space can be influenced by changes within the Digital Twin Space. Like the rest of the authors’ infrastructure, Eclipse Ditto is built on various microservices. Individual scalability, space-saving deployment, and the separation of different task areas within a robust, distributed system make the project a universal [[middleware]] for the provision of DTs. The essential system components of Eclipse Ditto are briefly outlined below.

*'''Connectivity service''': Ensuring frictionless communication between physical devices, their virtual counterparts, and data-consuming back-end applications, the Connectivity service provides a direct interface for various protocols and communication standards such as HTTP, WebSockets, MQTT, or Advanced Message Queuing Protocol (AMQP). A specially developed, unified JSON-based Ditto Protocol, used as the payload of messages in the listed communication standards, opens up numerous interaction possibilities. For example, messages can also be mapped via scripts for preprocessing, post-processing, and structuring. Furthermore, the entire Ditto instance can be managed by using the Ditto Protocol, which establishes a complete interface to interact efficiently with the DTs and their physical counterparts.

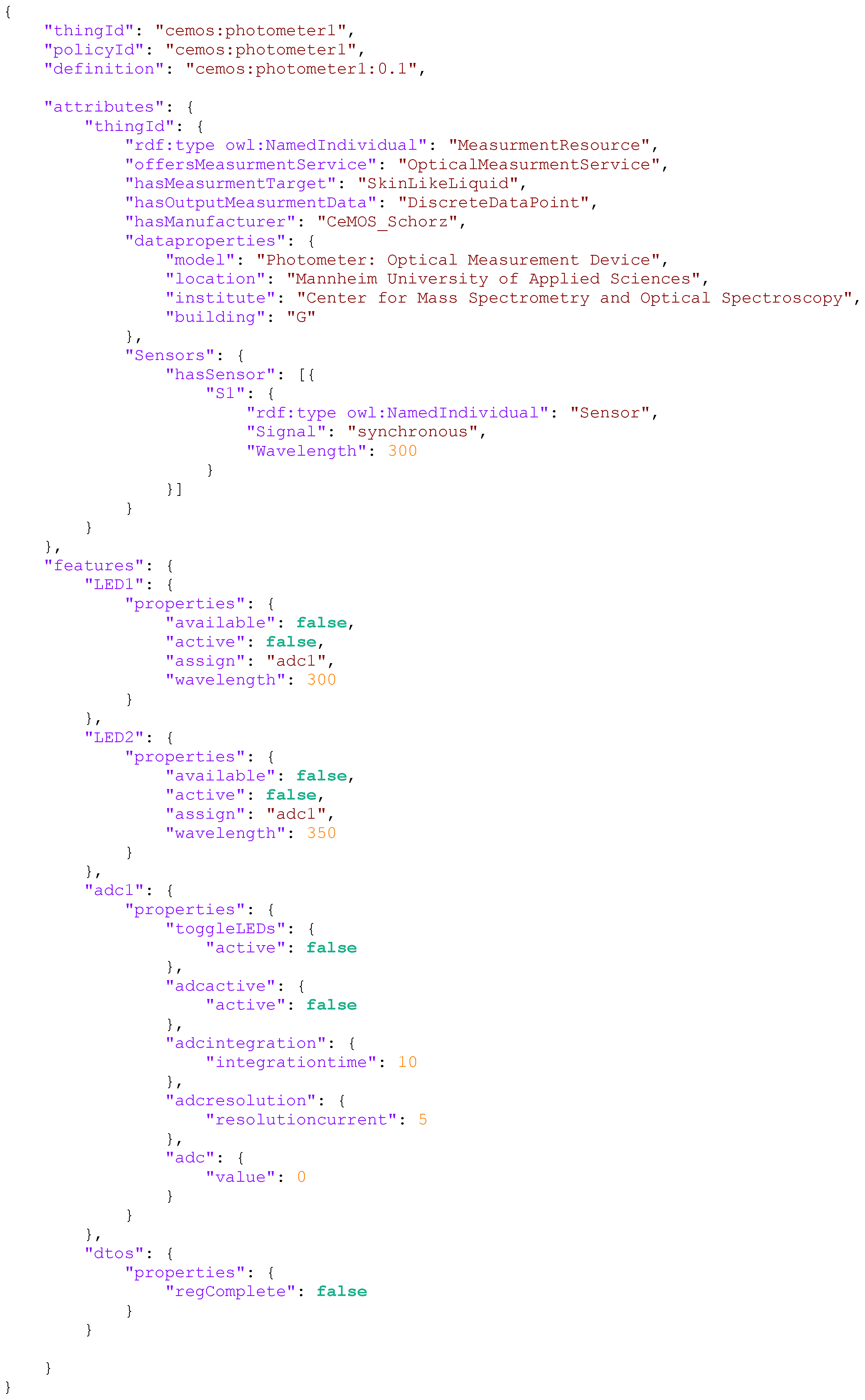

*'''Things service''': The Things service contains the actual structure and telemetry representation of the PTs. This abstract representation consists of a simple JSON file. While the first part of the JSON includes the static describing attributes of a DT, such as a unique identifier, the assigned policy, or other semantically describing properties, the second part contains the dynamic features to which all telemetry data belong. These mirror the constantly changing status of the PTs.
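The two-part structure of such a JSON representation can be sketched in Python as follows. The top-level keys (<code>thingId</code>, <code>policyId</code>, <code>attributes</code>, <code>features</code> with nested <code>properties</code>) follow the general Eclipse Ditto thing model; the namespace, the feature names, and all values are hypothetical and do not reproduce the authors' actual DT definition.

```python
import copy
import json

# Static part: identifier, assigned policy, and describing attributes.
# Dynamic part: features holding the telemetry data of the PT.
thing = {
    "thingId": "org.example:photometer-01",   # hypothetical identifier
    "policyId": "org.example:photometer-01",  # hypothetical policy
    "attributes": {
        "deviceType": "photometer",
        "wavelength_nm": 660,
    },
    "features": {
        "detector": {
            "properties": {"intensity": 0.0},
        },
    },
}


def with_telemetry(dt: dict, feature_id: str, properties: dict) -> dict:
    """Return a copy of the DT in which the given feature's properties
    are updated; the static attributes are left untouched."""
    updated = copy.deepcopy(dt)
    feature = updated["features"].setdefault(feature_id, {"properties": {}})
    feature["properties"].update(properties)
    return updated


updated_thing = with_telemetry(thing, "detector", {"intensity": 0.42})
payload = json.dumps(updated_thing, indent=2)
```

In this sketch only the <code>features</code> section changes when new telemetry arrives, which mirrors the split between static attributes and dynamic features described above.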