Difference between revisions of "Journal:Handling metadata in a neurophysiology laboratory"


|download = [https://www.frontiersin.org/articles/10.3389/fninf.2016.00026/pdf https://www.frontiersin.org/articles/10.3389/fninf.2016.00026/pdf] (PDF)

}}

==Abstract==

To date, the non-reproducibility of neurophysiological research is a matter of intense discussion in the scientific community. A crucial component to enhance reproducibility is to comprehensively collect and store metadata, that is, all information about the experiment, the data, and the preprocessing steps applied to the data, such that they can be accessed and shared in a consistent and simple manner. However, the complexity of experiments, the highly specialized analysis workflows, and a lack of knowledge on how to make use of supporting software tools often overburden researchers and keep them from documenting their work in such detail. For this reason, the collected metadata are often incomplete, incomprehensible to outsiders, or ambiguous. Based on our research experience in dealing with diverse datasets, we here provide conceptual and technical guidance to overcome the challenges associated with the collection, organization, and storage of metadata in a neurophysiology [[laboratory]]. Through the concrete example of managing the metadata of a complex experiment that yields multi-channel recordings from monkeys performing a behavioral motor task, we practically demonstrate the implementation of these approaches and solutions, with the intention that they may be generalized to other projects. Moreover, we detail five use cases that demonstrate the benefits of a well-organized metadata collection when processing or analyzing the recorded data, in particular when these are shared between laboratories in a modern scientific collaboration. Finally, we suggest an adaptable workflow to accumulate, structure, and store metadata from different sources using, by way of example, the odML metadata framework.


==Organizing metadata in neurophysiology==

Metadata are generally defined as data describing data.<ref name="BacaIntro08">{{cite book |url=http://www.getty.edu/publications/intrometadata/ |title=Introduction to Metadata |editor=Baca, M. |publisher=Getty Publications |edition=3rd |year=2016}}</ref><ref name="MWMetadata">{{cite web |url=https://www.merriam-webster.com/dictionary/metadata |title=Metadata |work=Merriam-Webster.com |publisher=Merriam-Webster |accessdate=06 July 2016}}</ref> More specifically, metadata are information that describe the conditions under which a certain dataset has been recorded.<ref name="GreweABottom11" /> Ideally, all metadata would be machine-readable and available at a single location that is linked to the corresponding recorded dataset. That such central, comprehensive metadata collections are not yet common practice is by no means a sign of negligence on the part of scientists. Rather, in the absence of conceptual guidelines and software support, such collections are extremely difficult to achieve, given the high complexity of the task. For one, an electrophysiological setup is composed of several hardware and software components, often from different vendors, which by itself imposes the need to handle multiple files in different formats. Some files may even contain metadata that are neither machine-readable nor machine-interpretable. Furthermore, performing an experiment requires the full attention of the experimenters, which limits the amount of metadata that can be captured manually while the experiment is running. Metadata that arise unexpectedly during an experiment, e.g., the cause of a sudden noise artifact, are commonly documented as handwritten notes in the laboratory notebook. In fact, handwritten notes are often unavoidable, because the legal regulations of some countries, e.g., [http://www.cnrs.fr/infoslabos/cahier-laboratoire/docs/cahierlabo.pdf France], require experiments to be documented in a handwritten laboratory notebook.

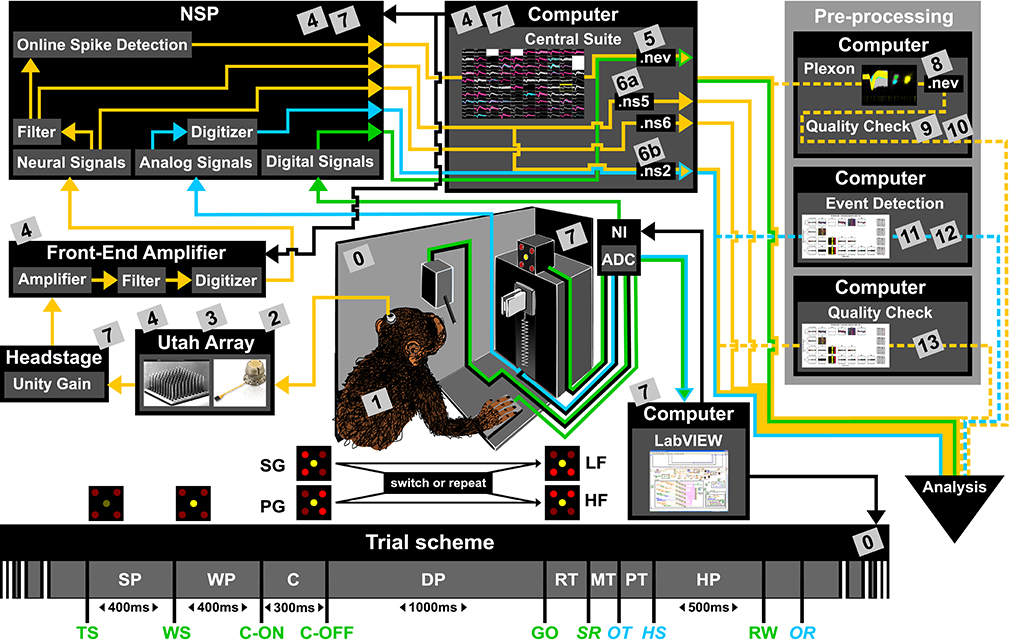

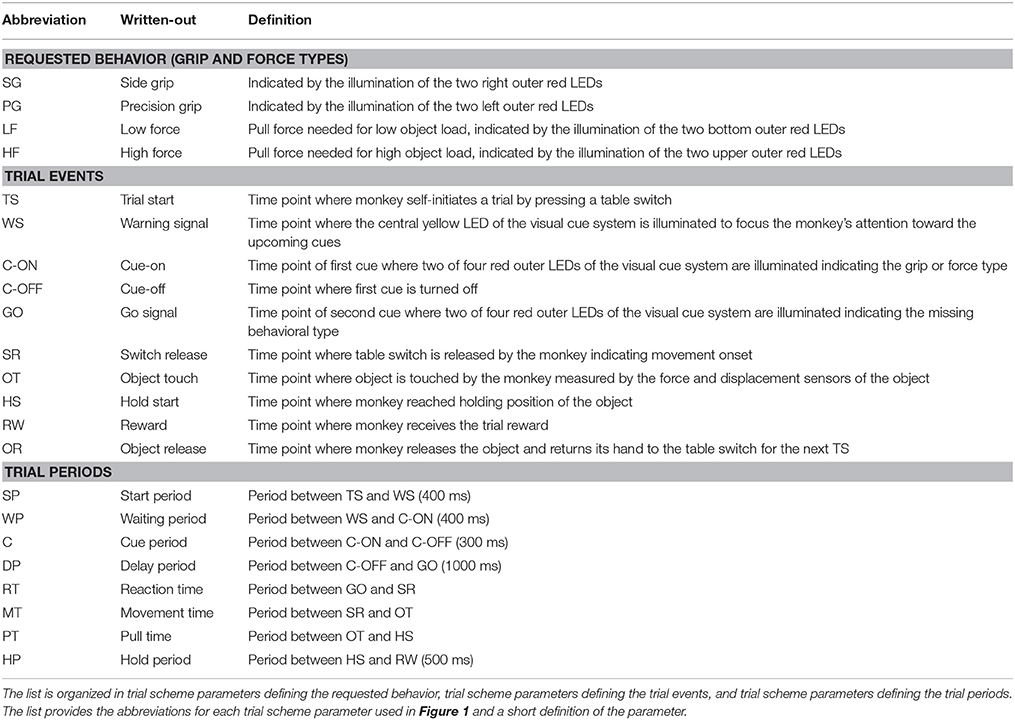

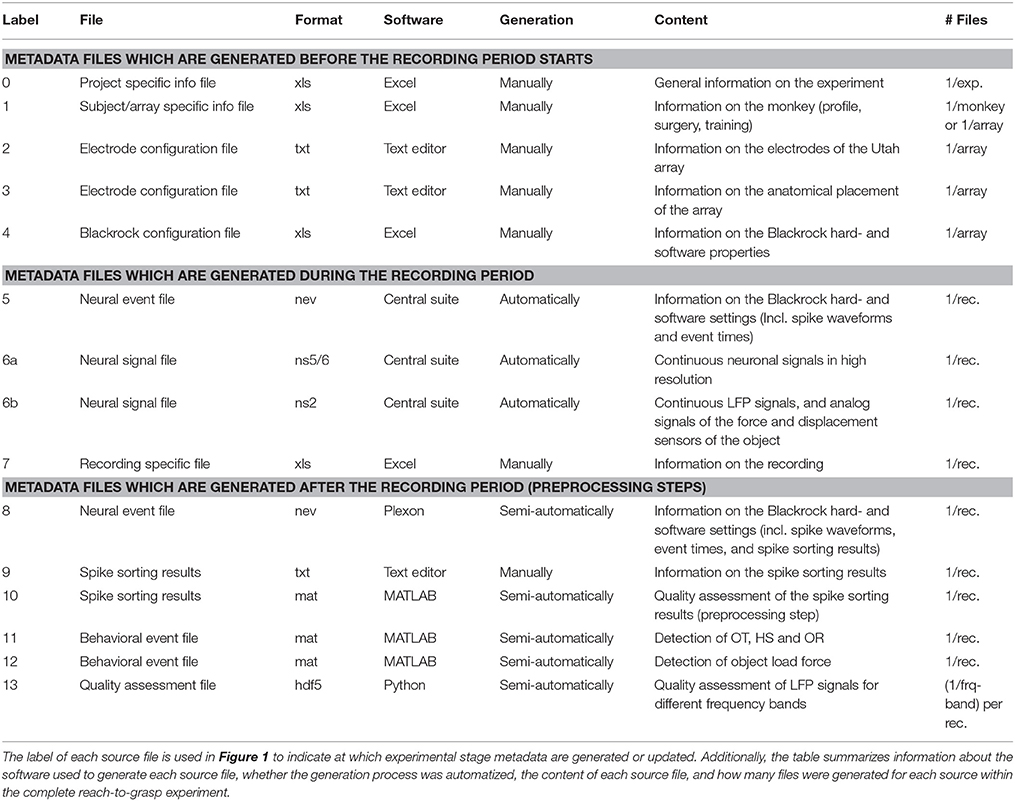

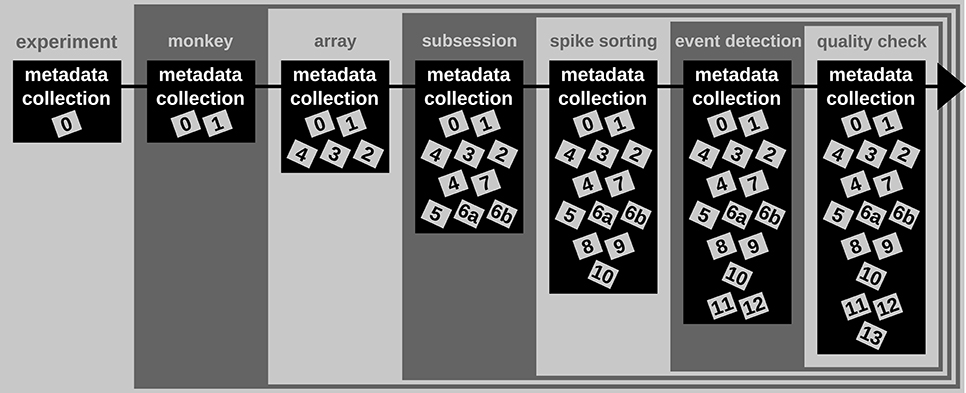

To present the concept of metadata management in a practical context, we introduce in the following a selected electrophysiological experiment. For clarity, a graphical summary of the behavioral task and the recording setup is provided in Figure 1, and task-related abbreviations (e.g., behavioral conditions and events) are listed in Table 1. First described in Riehle ''et al.''<ref name="RiehleMapping13" /> and Milekovic ''et al.''<ref name="MilekovicLocal15">{{cite journal |title=Local field potentials in primate motor cortex encode grasp kinetic parameters |journal=Neuroimage |author=Milekovic, T.; Truccolo, W.; Grün, S. et al. |volume=114 |pages=338–55 |year=2015 |doi=10.1016/j.neuroimage.2015.04.008 |pmid=25869861 |pmc=PMC4562281}}</ref>, the experiment employs high-density multi-electrode recordings to study the modulation of spiking and LFP activities in the motor/pre-motor cortex of monkeys performing an instructed delayed reach-to-grasp task. In total, three monkeys (''Macaca mulatta''; two females, L and T; one male, N) were trained to grasp an object using one of two different grip types (side grip, SG, or precision grip, PG) and to pull it against one of two possible loads requiring either a high (HF) or low (LF) pulling force (cf. Figure 1). In each trial, instructions for the requested behavior were provided to the monkeys through two consecutive visual cues (C and GO), which were separated by a one-second delay and generated by the illumination of specific combinations of five LEDs positioned above the object (cf. Figure 1).

The complexity of this study makes it particularly suitable for exposing the difficulty of collecting, organizing, and storing metadata. Moreover, the metadata were first organized according to the laboratory's internal documentation procedures and practices, and were only converted into a comprehensive machine-readable format a posteriori, after the experiment was completed. To work with the data and metadata of this experiment, one has to handle on average 300 recording sessions per monkey, with data distributed over three files per session, metadata distributed over five files per implant, at least 10 files per recording, and one file with general information on the experiment (cf. Table 2). This situation made reorganizing the various metadata sources additionally complex.
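The task conditions described above combine two grip types with two force levels, giving four trial types. The following minimal Python sketch enumerates them; it assumes that a trial-type label is formed by concatenating the grip and force acronyms (e.g., "SGHF"), and the constant and function names are illustrative only.

```python
from itertools import product

# Acronyms as defined in the text
GRIP_TYPES = ("SG", "PG")   # side grip, precision grip
FORCE_TYPES = ("HF", "LF")  # high pulling force, low pulling force


def trial_types():
    """Return every grip/force combination as a concatenated label (assumed naming scheme)."""
    return [grip + force for grip, force in product(GRIP_TYPES, FORCE_TYPES)]


print(trial_types())  # ['SGHF', 'SGLF', 'PGHF', 'PGLF']
```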


In summary, compared to Scenario 1, Scenario 2 improves the workflow of selecting datasets according to certain criteria in two respects: (i) to check the selection criteria, only one metadata file per recording needs to be loaded, and (ii) a loading routine is already available to extract metadata stored in a standardized format. Thus, in Scenario 2 the scripts for automated data selection are simpler, which improves the reproducibility of the operation.
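With one comprehensive metadata file per recording, selecting datasets reduces to filtering key-value pairs. The Python sketch below assumes each file has already been loaded into a flat dictionary by some standard loading routine; the keys "subject" and "weekday" and the recording names are hypothetical.

```python
from collections import defaultdict

# Hypothetical stand-in: one comprehensive metadata record per recording,
# e.g. as returned by a standard loading routine. Keys and values are illustrative.
recordings = {
    "rec_001": {"subject": "L", "weekday": "Monday"},
    "rec_002": {"subject": "L", "weekday": "Tuesday"},
    "rec_003": {"subject": "L", "weekday": "Monday"},
    "rec_004": {"subject": "N", "weekday": "Monday"},
}


def select(recordings, **criteria):
    """Return sorted names of recordings whose metadata match all key-value criteria."""
    return sorted(
        name for name, meta in recordings.items()
        if all(meta.get(key) == value for key, value in criteria.items())
    )


def group_by(recordings, key, **criteria):
    """Group the selected recording names by the value of one metadata key."""
    groups = defaultdict(list)
    for name in select(recordings, **criteria):
        groups[recordings[name][key]].append(name)
    return dict(groups)


print(group_by(recordings, "weekday", subject="L"))
# {'Monday': ['rec_001', 'rec_003'], 'Tuesday': ['rec_002']}
```

Because every criterion is checked against the same single record per recording, the selection script stays short and its result does not depend on file names.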

===Use Case 4: Metadata screening===

<blockquote>Sometimes it can be helpful to gain an overview of the metadata of an entire experiment. Such a screening process is often hampered by the following aspects: (i) some metadata are stored along with the actual electrophysiological data, (ii) metadata are often distributed over several files and formats, and (iii) some metadata need to be computed from other metadata. All three aspects slow down the screening procedure and complicate the corresponding code. Use Case 4 demonstrates how a comprehensive metadata collection improves the speed and reproducibility of a metadata screening procedure.</blockquote>

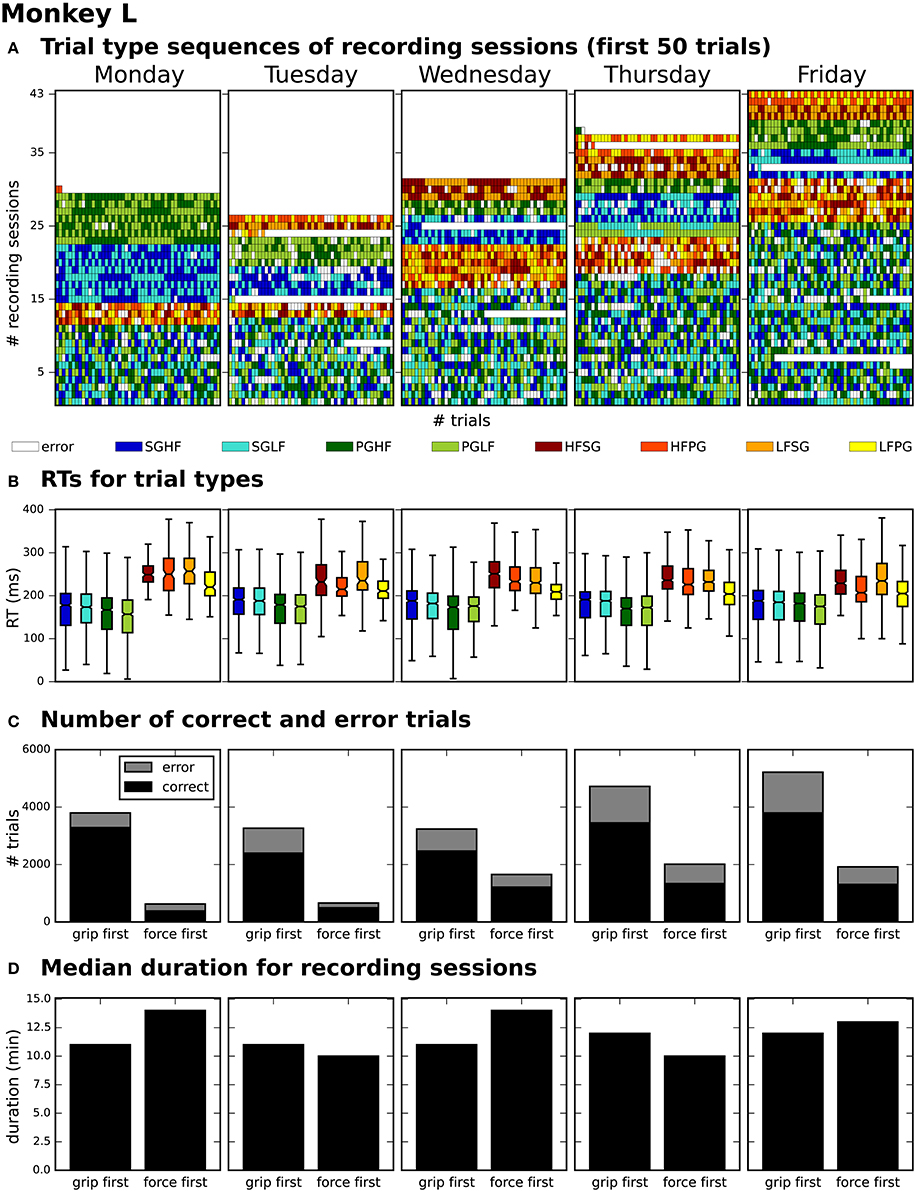

After generating a list of recording file names for each weekday (see Use Case 3, Section 3.3), Bob would like to create an overview figure summarizing the metadata that best reflect the performance of the monkey during the selected recordings (Figure 3). These metadata include the RTs of the trials of each recording (Figure 3B), the number of correct vs. error trials (Figure 3C), and the total duration of the recording (Figure 3D). To rule out a bias due to an uneven distribution of the different task conditions, Bob also wants to include the trial type combinations and their sequential order (Figure 3A).

[[File:Fig3 Zehl FrontInNeuro2016 10.jpg|922px]]

{{clear}}

{|

| STYLE="vertical-align:top;"|

{| border="0" cellpadding="5" cellspacing="0" width="922px"

|-

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 3.''' Overview of reach-to-grasp metadata summarizing the performance of monkey L on each weekday. '''(A)''' displays the trial-type sequence of the first 50 trials (small bars along the x-axis) for each session (rows along the y-axis) recorded on the corresponding weekday. Each small bar corresponds to one trial, and its color indicates the requested trial type (see color legend below subplot (A); for trial type acronyms see main text). '''(B)''' summarizes the median reaction times (RTs) of all trials of the same trial type (see color legend) across all sessions recorded on the corresponding weekday. '''(C)''' shows for each weekday the total number of trials of all sessions, distinguishing between correct and error trials (colored in black and gray) and between sessions in which the first cue informed the monkey about the grip type (grip first) and sessions in which it informed about the force type (force first). '''(D)''' displays for each weekday the median recording duration of the grip-first and force-first sessions.</blockquote>

|-

|}

|}

To create the overview figure in Scenario 1, Bob has to load the .nev data file (Label 5 in Figure 1 and Table 2), in which most of the required metadata are stored, and additionally the .ns2 data file (Label 6b in Figure 1 and Table 2) to extract the duration of the recording. For both files, Bob can use available loading routines, but to access the desired metadata he always has to load the complete data files. Depending on the data size, this can be very time-consuming. In Scenario 2, Bob can directly and efficiently extract all metadata from one comprehensive metadata file per recording without having to load the neuronal data as well.
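Once the relevant metadata sit in one file per recording, the quantities shown in Figure 3B to 3D can be computed without touching the neuronal data. The Python sketch below assumes a hypothetical per-recording record with a weekday, a duration, and a list of trials (type, RT, outcome); the field names and numbers are invented for illustration, and the real layout would follow the project's metadata structure.

```python
from collections import Counter, defaultdict
from statistics import median

# Hypothetical per-recording metadata, as it might be extracted from one
# comprehensive metadata file per recording (no neuronal data loaded).
recordings = [
    {"weekday": "Monday", "duration_s": 900.0,
     "trials": [{"type": "SGHF", "rt_ms": 310, "correct": True},
                {"type": "PGLF", "rt_ms": 290, "correct": False}]},
    {"weekday": "Monday", "duration_s": 1100.0,
     "trials": [{"type": "SGHF", "rt_ms": 330, "correct": True}]},
    {"weekday": "Friday", "duration_s": 800.0,
     "trials": [{"type": "PGLF", "rt_ms": 270, "correct": True}]},
]


def screen(recordings):
    """Summarize RTs, trial outcomes, and durations per weekday from metadata only."""
    rts = defaultdict(lambda: defaultdict(list))  # weekday -> trial type -> RTs
    outcomes = defaultdict(Counter)               # weekday -> correct/error counts
    durations = defaultdict(list)                 # weekday -> recording durations
    for rec in recordings:
        day = rec["weekday"]
        durations[day].append(rec["duration_s"])
        for trial in rec["trials"]:
            rts[day][trial["type"]].append(trial["rt_ms"])
            outcomes[day]["correct" if trial["correct"] else "error"] += 1
    return {
        day: {
            "median_rt_ms": {t: median(v) for t, v in rts[day].items()},
            "outcomes": dict(outcomes[day]),
            "median_duration_s": median(durations[day]),
        }
        for day in durations
    }


summary = screen(recordings)
print(summary["Monday"])
# {'median_rt_ms': {'SGHF': 320.0, 'PGLF': 290}, 'outcomes': {'correct': 2, 'error': 1}, 'median_duration_s': 1000.0}
```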

In summary, compared to Scenario 1, Scenario 2 improves the workflow of creating an overview of certain metadata of an entire experiment by reducing the number of metadata files that need to be screened to one per recording, and by drastically lowering the run time needed to collect the criteria used in the figure. In addition, Bob benefits from better reproducibility, as in Use Case 3.

===Use Case 5: Metadata queries for data selection===

<blockquote>It is common that datasets are analyzed not only by members of the experimenter's lab but also by collaborators. Two difficulties may arise in this context. First, the partners will often base their work on different workflow strategies and software technologies, making it difficult to share code, in particular code that is used to access data objects. Second, geographical separation is a communication barrier: communication by telephone, chat, or email tends to be infrequent and impersonal, so extra care is required to convey relevant information to the partner precisely. Use Case 5 demonstrates how a standard format for the comprehensive metadata collection improves cross-lab collaborations by formalizing the communication process through queries on the metadata.</blockquote>

Alice has detected a systematic noise artifact in the LFP signals of some channels in recording sessions performed in July (possibly due to additional air conditioning). As a consequence, Alice decided to exclude recordings performed in July from her LFP spectral analyses. Alice collaborates with Carol to perform complementary spike correlation analyses on an identical subset of recordings in order to find out whether the network correlation structure is affected by task performance. To ensure that they analyze exactly the same datasets, the most reliable way to select the data is to rely on metadata stored in the data files, rather than on error-prone proxies such as the file name or the file creation date.

In Scenario 1, since Alice and Carol use different programming languages (MATLAB and Python), they need to ensure that their routines extract the same recording date. This requires cross-validating their MATLAB and Python routines to confirm that both extract identical dates.

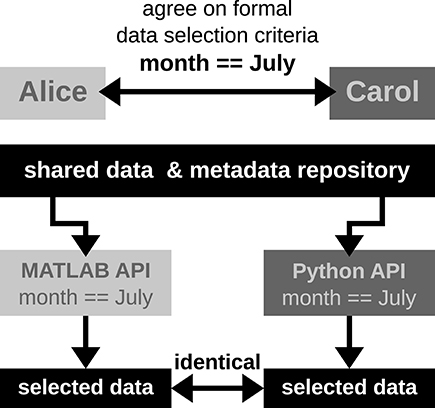

In Scenario 2, Alice and Carol agree, via the comprehensive metadata collection, on a concrete formal specification of the dataset selection (Figure 4). In this collection, metadata are stored in a defined format built on key-value pairs: in our example, Alice would specify the data selection by telling Carol to allow only those recording sessions in which the key ''month'' has the exact value "July." Because the collection uses a standardized format, the metadata query can be handled by an application programming interface (API) available in both MATLAB and Python, used by Alice and Carol, respectively. Therefore, the query is guaranteed to produce the same result for both scientists. Formalizing metadata queries in this way results in a more coherent and less error-prone synchronization of the work in the two laboratories.
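The key idea of Scenario 2 is that the selection is written down once, as data, and evaluated identically everywhere. The Python sketch below illustrates this with a hypothetical flat key-value view of each session's metadata and a query expressed as (key, operator, value) triples. It does not use the odML MATLAB or Python APIs, and all names and values are illustrative.

```python
# Hypothetical metadata: one flat key-value view per recording session.
sessions = {
    "s01": {"month": "June", "subject": "L"},
    "s02": {"month": "July", "subject": "L"},
    "s03": {"month": "July", "subject": "N"},
}

# The agreed selection, written down as data rather than as code:
# (key, operator, value) triples that each lab's implementation evaluates.
QUERY = [("month", "==", "July")]

OPERATORS = {
    "==": lambda actual, expected: actual == expected,
    "!=": lambda actual, expected: actual != expected,
}


def run_query(sessions, query):
    """Return the sorted names of sessions that satisfy every condition in the query."""
    return sorted(
        name for name, meta in sessions.items()
        if all(key in meta and OPERATORS[op](meta[key], value)
               for key, op, value in query)
    )


print(run_query(sessions, QUERY))                        # ['s02', 's03']
# Excluding July recordings is the same query with "!=":
print(run_query(sessions, [("month", "!=", "July")]))    # ['s01']
```

Sharing the query triples instead of the code that extracts dates is what removes the need to cross-validate two independent implementations.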

[[File:Fig4 Zehl FrontInNeuro2016 10.jpg|400px]]

{{clear}}

{|

| STYLE="vertical-align:top;"|

{| border="0" cellpadding="5" cellspacing="0" width="400px"

|-

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 4.''' Schematic workflow of selecting data across laboratories based on the APIs available for a comprehensive metadata collection. In this optimized workflow, Alice and Carol can both select identical data from the common repository by screening the comprehensive metadata collection for the selection criterion (month == July) via the available APIs for MATLAB and Python. Such a formalized query for data selection supports a well-defined collaborative workflow across laboratories.</blockquote>

|-

|}

|}

==Guidelines for creating a comprehensive metadata collection==

The five use cases illustrate the importance and usefulness of a comprehensive metadata collection in a standardized format. One effort to develop such a standardized format is the odML project, which implements a metadata model proposed by Grewe ''et al.''<ref name="GreweABottom11" /> The odML project provides a software library (the odML library) for reading, writing, and handling odML files through an API, with language support for Python, Java, and MATLAB. The remainder of this paper complements the original technical publication of the software<ref name="GreweABottom11" /> by illustrating, in a tutorial-like style, its practical use in creating a comprehensive metadata collection. We demonstrate this process using the metadata of the described experiment as a practical example. We show how to generate a comprehensive metadata file and outline a workflow to enter and maintain metadata in a collection of such files. Although we are convinced that the odML library is particularly well designed for this goal, the concepts and guidelines are generally applicable and could be implemented with other suitable technologies as well.

===The odML metadata model===

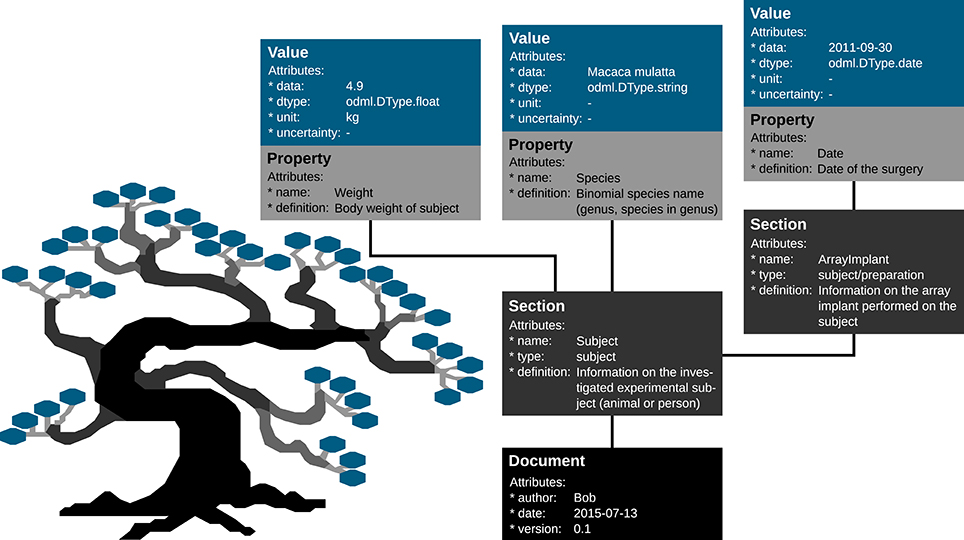

Metadata can be of arbitrary type and describe various aspects of the recording and preprocessing steps of the experiment (cf. Figure 1 and Table 2). Nevertheless, all metadata can be represented by a key-value pair, where the key indicates the type of metadata, and the value contains the metadata or points to a file or a remote location where the metadata can be obtained (e.g., for image files). Accordingly, the odML metadata model uses Properties (keys) paired with Values as the structural foundation of any metadata file. A Property may contain one or several Values. Its name is a short identifier by which the metadata can be addressed, and its definition can be used to give a textual description of the metadata stored in the Value(s). Properties that belong to the same context (e.g., the description of an experimental subject) are grouped in so-called Sections, which are specified by their name, type, and definition. Sections can further be nested, i.e., contain sub-Sections, and thus form a tree-like structure. At the root of the tree is the Document, which contains information about the author, the date of the file, and a version. Figure 5 illustrates how a subset of the experimental metadata is organized in an odML file. The design concepts presented here can be transferred to many other software solutions for metadata handling. We chose the odML library because it is comparatively generic and flexible, which makes it well suited for a broad variety of experimental scenarios and enabled us to introduce it as the metadata framework in all our collaborations. For further details on the odML metadata model beyond Grewe ''et al.''<ref name="GreweABottom11" />, we provide a tutorial of the odML API as part of the [https://github.com/G-Node/python-odml odML Python library].
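To make the Document/Section/Property/Value hierarchy concrete, the following is a minimal Python sketch of the model using plain dataclasses. It is not the odML library's API (the real library defines its own classes and methods); the class fields mirror only the attributes named in the text, and the helper `find_property` and the example Property names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, List, Optional, Union


@dataclass
class Property:
    """A key (name) holding one or several Values, plus a textual definition."""
    name: str
    values: List[Any]
    definition: str = ""
    unit: Optional[str] = None


@dataclass
class Section:
    """Groups Properties of one context; may contain nested sub-Sections."""
    name: str
    type: str
    definition: str = ""
    properties: List[Property] = field(default_factory=list)
    sections: List["Section"] = field(default_factory=list)


@dataclass
class Document:
    """Root of the tree: file-level information plus the top-level Sections."""
    author: str
    date: str
    version: str
    sections: List[Section] = field(default_factory=list)


def find_property(node: Union[Document, Section], path: str) -> Property:
    """Resolve a path such as 'Subject/Species' (Sections first, Property last)."""
    *section_names, prop_name = path.split("/")
    for sec_name in section_names:
        node = next(s for s in node.sections if s.name == sec_name)
    return next(p for p in node.properties if p.name == prop_name)


# Illustrative content only; these Property names are not taken from Figure 5.
subject = Section(
    name="Subject", type="subject",
    definition="Information on the experimental subject",
    properties=[
        Property("Species", ["Macaca mulatta"]),
        Property("Identifier", ["L"]),
        Property("Gender", ["female"]),
    ],
)
doc = Document(author="hypothetical author", date="hypothetical date",
               version="0.1", sections=[subject])

print(find_property(doc, "Subject/Species").values)  # ['Macaca mulatta']
```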

[[File:Fig5 Zehl FrontInNeuro2016 10.jpg|964px]]

{{clear}}

{|

| STYLE="vertical-align:top;"|

{| border="0" cellpadding="5" cellspacing="0" width="964px"

|-

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 5.''' Example of the structural design of an odML file. The displayed Sections, Properties, and Values are a subset of the odML Subject branch of the reach-to-grasp experiment (Subject_Demo.odml). Metadata are stored in the “data” attributes of odML Value objects, which also provide additional attributes to describe the datatype (dtype), the unit, or the uncertainty of the Value. Which metadata are stored in the Values is defined by the connected Property object via its “name” and “definition” attributes. Property-Value(s) pairs that contain metadata of a similar context are grouped into a Section object, which is given a “name” and a “definition.” If a sub-context needs to be described in more detail, a Section can also contain other Sections, which again hold the corresponding metadata in Property-Value(s) pairs. To complete the tree structure, the Sections highest in the hierarchy are grouped into a Document object, which can state additional information on the odML file, such as who created it (“author”), when it was created (“date”), or which code or template version was used to create it.</blockquote>

|-

|}

|}

===Metadata strategy: Distribution of information===

In preparing a metadata strategy for an experiment, one has to decide whether and how information should be distributed over multiple files. For example, one could generate (i) several files for each recording, (ii) a single file per recording, or (iii) a single file that comprises a series of recordings. The appropriate approach depends on both the complexity of the metadata and the users' specific needs for accessing them. In the following, we give examples of situations that could lead to each of the three approaches:

(i) If the metadata of a preprocessing step are complex, combining them with the metadata related to the initial data acquisition could be confusing. One could instead generate separate files for the preprocessing and the recording. The downside of this approach is that the availability of both files must be ensured.

(ii) If each recording in an experiment covers a certain behavioral condition, the metadata describing each condition are complex, and recordings are performed independently of each other, a single comprehensive metadata file per recording should be used. This approach was chosen for the example experiment described in Section 2.

(iii) If one recording is strongly related to, or even directly influences, future recordings (e.g., learning of a certain behavior in several training sessions), then one single comprehensive metadata file should cover all related recordings. Even metadata of preprocessing steps could be attached to this single file.

===Metadata strategy: Structuring information===

Organizing metadata in a hierarchical structure facilitates navigation through possibly complex and extensive metadata files. How to structure this hierarchy depends strongly on the experiment, the metadata content, and the individual demands resulting from how the metadata collection is to be used. Nevertheless, there are some general guidelines to consider (based on Grewe ''et al.''<ref name="GreweABottom11" />):

:(i) Keep the structure as flat as possible and as deep as necessary. Avoid Sections without any Properties.

:(ii) Try to keep the structure and content as generic as possible. This enables the reuse of parts of the structure for other recording situations or even different experiments. Design a common structure for the entire experiment, or even across related studies, so that the same set of metadata filters can be used as queries in upcoming analyses. If this is not possible and multiple structures are introduced, for instance, because of very different task designs, create a Property that identifies which structure was used in a particular file (e.g., Property “UsedTaskDesign”: Value “TaskDesign_01”).

:(iii) Create a structure that clearly categorizes metadata into different branches. Make use of the general components of an electrophysiological experiment (e.g., subject, setup, hardware, software, etc.), but also classify metadata according to context or time (e.g., previous knowledge, pre- and postprocessing steps).

:(iv) To describe repeating entities, such as an experimental trial or an electrode, it is advisable to separate constant properties from those that change individually. Generate one Section for the constant features and a unique Section per repetition for the metadata that may change.
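Guidelines (ii) and (iv) can be illustrated with trials: one Section holds the settings shared by all trials, one uniquely named Section per trial holds what varies, and a Property records which task design the structure follows. The Python sketch below represents Sections as nested dictionaries rather than odML objects; the Section and Property names ("TrialSettings", "Trial_001", "DelayDuration_ms", and so on) are hypothetical, apart from “UsedTaskDesign” and “TaskDesign_01”, which come from the text.

```python
def build_trials_section(constant, per_trial, task_design="TaskDesign_01"):
    """Return a 'Trials' Section as nested dicts (hypothetical layout).

    constant: Properties shared by all trials, stored once in 'TrialSettings'.
    per_trial: one dict per trial with the Properties that change between trials.
    """
    trials = {
        # Guideline (ii): record which structure/task design this file follows
        "properties": {"UsedTaskDesign": task_design, "NumberOfTrials": len(per_trial)},
        "sections": {"TrialSettings": {"properties": dict(constant), "sections": {}}},
    }
    for i, props in enumerate(per_trial, start=1):
        # Guideline (iv): one uniquely named Section per repetition
        trials["sections"][f"Trial_{i:03d}"] = {"properties": dict(props), "sections": {}}
    return trials


trials = build_trials_section(
    constant={"DelayDuration_ms": 1000},  # the one-second delay between C and GO
    per_trial=[{"TrialType": "SGHF", "Correct": True},
               {"TrialType": "PGLF", "Correct": False}],
)
print(sorted(trials["sections"]))  # ['TrialSettings', 'Trial_001', 'Trial_002']
```

Storing the delay once avoids repeating an identical Value in every trial Section, while the per-trial Sections share the same Property names and can therefore be queried uniformly.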

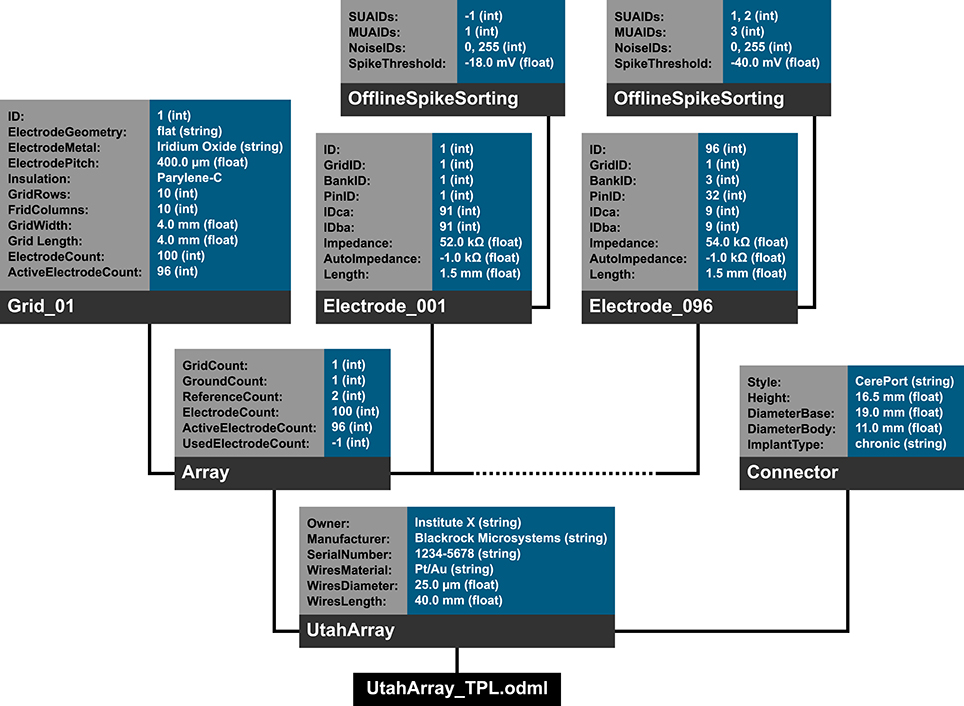

In the following, we illustrate these principles with a subset of the metadata related to our example experiment (Section 2). Using the nomenclature of the odML metadata framework, we structured the metadata of a Utah array into the hierarchy of Sections shown schematically in Figure 6.

[[File:Fig6 Zehl FrontInNeuro2016 10.jpg|964px]]

{{clear}}

{|

| STYLE="vertical-align:top;"|

{| border="0" cellpadding="5" cellspacing="0" width="964px"

|-

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 6.''' Schematic view of the tree structure of the odML template for a Utah array. The color code of the boxes matches the color code of the odML objects in Figure 5. To simplify the schema, odML Properties and their Values are listed in blocks for each odML Section, and additional attributes of the odML objects (e.g., definitions) are not displayed. Likewise, the remaining 94 odML Sections of the Utah array electrodes (“Electrode_002” to “Electrode_095”) are left out and only indicated by the dashed line. The size of the odML template for a Utah array, which is only one branch of a final odML file of the reach-to-grasp study, conveys the complexity of a complete reach-to-grasp odML file.</blockquote>

|-

|}

|}

The top level is called “UtahArray.” A Utah array is a silicon-based multi-electrode array that is wired to a connector. General metadata of the Utah array, such as the serial number (cf. “SerialNo” in Figure 6), are attached directly as Properties to the main Section (guideline i).

The other components of the Utah array require more detailed descriptions. The hierarchy is thus extended with a Section for the actual electrode array and a Section for the connector component. Both components are available in different fabrication types, which differ only slightly in their metadata configuration. For this reason, we named the Sections generically “Array” and “Connector” (guideline ii) and specified their actual fabrication type via their attached Properties (e.g., “Style”).

The fabrication type of the “Array” is defined by the number and configuration of its electrodes. In our experiment, we used a Utah array with 100 electrodes (96 connected, 4 inactive) arranged on a 10 × 10 grid and supplied with wires for two references and one ground. It is, however, possible to have a different total number of electrodes and even an array split into several grids with different electrode arrangements. Nevertheless, all electrodes or grids can be defined via a fixed set of Properties that describe the individual setting of each electrode or grid. To keep the structure as generic as possible, we registered, besides the total numbers of electrodes, references, and grounds, the number of active electrodes and the number of grids as Properties of the (level-2) Section “Array” (guideline ii). Within the “Array” Section, (level-3) Sections named “Electrode_XXX” are attached for each electrode and grid, each containing the same Properties but with individual Values for that particular electrode. This design makes it possible to maintain the structure for other experiments in which the number and arrangement of electrodes and grids might differ (guidelines ii and iv). The electrode IDs of a Utah array are numbered consecutively, independent of the number of grids. In order not to increase the hierarchy depth further, a Property “Grid_ID” in each “Electrode_XXX” Section identifies the grid to which the electrode belongs, instead of attaching the electrodes as (level-4) Sections to their Grid and Array parent Sections (guideline i).
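The Utah array layout described above can be sketched as a small builder function. The Python sketch below uses nested dictionaries as a stand-in for odML Sections; “UtahArray,” “Array,” “Connector,” “SerialNo,” “Style,” “Electrode_XXX,” and “Grid_ID” come from the text, whereas the remaining Property names, the placeholder serial number and styles, and the assignment of IDs 1 to 96 to the connected electrodes are assumptions for illustration.

```python
def electrode_section(electrode_id, grid_id):
    """Level-3 Section: identical Property names for every electrode, individual Values."""
    return {"properties": {"ID": electrode_id, "Grid_ID": grid_id}, "sections": {}}


def build_utah_array(serial_no, n_total, grid_of, n_references=2, n_grounds=1,
                     array_style="hypothetical", connector_style="hypothetical"):
    """Build the 'UtahArray' branch as nested dicts (hypothetical layout).

    n_total: total number of electrodes on the array, connected or not.
    grid_of: maps each connected electrode ID (numbered consecutively,
             independent of the grids) to the ID of its grid.
    """
    electrodes = {
        f"Electrode_{eid:03d}": electrode_section(eid, gid)
        for eid, gid in sorted(grid_of.items())
    }
    array = {
        "properties": {
            "Style": array_style,
            "TotalElectrodes": n_total,
            "ActiveElectrodes": len(grid_of),
            "References": n_references,
            "Grounds": n_grounds,
            "Grids": len(set(grid_of.values())),
        },
        # Electrodes hang directly below "Array"; the Grid_ID Property
        # replaces an extra Grid level in the hierarchy (guideline i).
        "sections": electrodes,
    }
    connector = {"properties": {"Style": connector_style}, "sections": {}}
    return {
        "UtahArray": {
            "properties": {"SerialNo": serial_no},
            "sections": {"Array": array, "Connector": connector},
        }
    }


# Configuration from the text: 100 electrodes, 96 connected, one grid.
# The serial number and the mapping of IDs 1-96 to grid 1 are placeholders.
branch = build_utah_array("SN-0000", n_total=100,
                          grid_of={eid: 1 for eid in range(1, 97)})
array = branch["UtahArray"]["sections"]["Array"]
print(array["properties"]["ActiveElectrodes"])  # 96
print(list(array["sections"])[:2])             # ['Electrode_001', 'Electrode_002']
```

A different array, for example one split into two grids, only needs a different `grid_of` mapping; the Section and Property names stay the same, which is the point of guideline (ii).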

The example of the Utah array demonstrates the advantage of a meaningful naming scheme for Sections and Properties. To prevent ambiguity, any Section and Property name at the same level of the hierarchy must be unique. However, a given name can be reused at different hierarchy depths. Such reuse can improve the readability of the structure and make its interpretation more intuitive. The “UtahArray” Section (Figure 6) demonstrates both a situation in which recurring Section and Property names are useful and one in which they must be avoided. The Sections for the individual electrodes of the array are all on the same hierarchy level, so their names need to be unique; this is guaranteed by including the electrode ID in the Section name (e.g., “Electrode_001”). In contrast, the Property names within each individual electrode Section, such as “ID,” “Grid_ID,” etc., can be reused. Similarly, the results of the offline spike sorting can be stored in a (level-4-) Section “OfflineSpikeSorting” below each electrode Section. Using the identical name for this Section under every electrode is helpful, because its content represents the same type of information. In the Supplementary Material, we demonstrate hands-on for the odML framework how recurring Section or Property names can be used to quickly extract metadata from large and complex hierarchies.
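Recurring names pay off when querying. The following sketch, again using nested dictionaries instead of the odML library (the Supplementary Material shows the odML way), collects one Property from every Section with a given name, regardless of where that Section sits in the tree. The functions `iter_sections`, `collect`, and `electrode` and the Property `SNR` are hypothetical; the values are made up.

```python
from typing import Iterator, Tuple


def iter_sections(section: dict, path: str = "") -> Iterator[Tuple[str, dict]]:
    """Depth-first walk yielding (path, section) for every nested Section."""
    here = f"{path}/{section['name']}"
    yield here, section
    for child in section.get("sections", []):
        yield from iter_sections(child, here)


def collect(root: dict, section_name: str, prop: str) -> dict:
    """Map path -> value of `prop` for all Sections named `section_name`."""
    return {
        path: sec["properties"][prop]
        for path, sec in iter_sections(root)
        if sec["name"] == section_name and prop in sec["properties"]
    }


def electrode(eid: int, snr: float) -> dict:
    """Toy electrode Section with a recurring "OfflineSpikeSorting" child."""
    return {"name": f"Electrode_{eid:03d}", "properties": {"ID": eid},
            "sections": [{"name": "OfflineSpikeSorting",
                          "properties": {"SNR": snr}, "sections": []}]}


root = {"name": "UtahArray", "properties": {}, "sections": [
    {"name": "Array", "properties": {}, "sections": [
        electrode(1, 2.5), electrode(2, 4.1)]}]}

# One query reaches the same kind of information under every electrode.
snr_by_path = collect(root, "OfflineSpikeSorting", "SNR")
for path, snr in snr_by_path.items():
    print(path, snr)
```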

===Metadata strategy: Workflow=== | |||

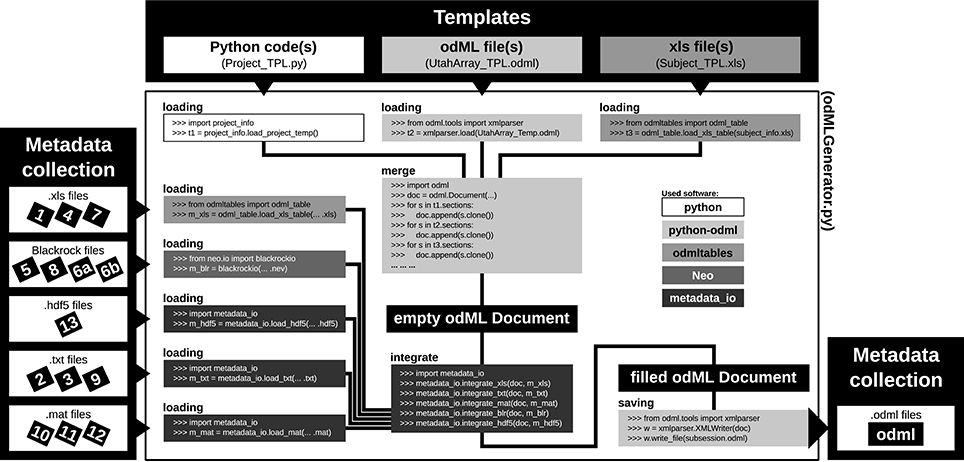

For most experiments, it is unavoidable that metadata are distributed across various files and formats (Figure 1 and Table 2). To combine them into one or multiple file(s) of one standard format, one needs not only to design a meaningful structure for organizing the metadata of the experiment, but also to write routines that load the metadata and integrate them into the corresponding file(s). For reasons of clarity and comprehensibility, we argue for keeping these two processes separate. Figure 7 illustrates and summarizes the corresponding workflow for the example experiment using the odML library.

[[File:Fig7 Zehl FrontInNeuro2016 10.jpg|964px]] | |||

{{clear}} | |||

{| | |||

| STYLE="vertical-align:top;"| | |||

{| border="0" cellpadding="5" cellspacing="0" width="964px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 7.''' Schematic workflow for generating odML files in the reach-to-grasp experiment. The Templates box (top) illustrates three of the six template parts that are used to build the complete but (mostly) empty odML Document combining the metadata of one recording session in the experiment. The three possible template formats (script, odML file, and spreadsheet) are indicated within the gray shaded boxes (left to right, respectively). The Metadata collection box (left) illustrates all metadata sources of a recording session (black labeled small boxes) ordered according to file formats (white boxes). Labels of metadata sources are listed in Table 2. The central large white box shows the workflow of the odML generator routine (odMLGenerator.py). Code snippets used at the different steps of this workflow are illustrated in the smaller gray-scaled boxes, whose colors represent the software used for the code (see legend right of center). The black boxes indicate files or file stages. The workflow consists of five steps: (i) loading all template parts, (ii) merging all template parts into one empty odML Document, (iii) loading all metadata sources of a session, partially with custom-made routines (metadata_io), (iv) integrating all metadata sources into the empty odML Document using custom-made routines (metadata_io), and (v) saving the filled odML Document as the odML file of the corresponding recording session. The Metadata collection box (right) illustrates the reduction of all metadata sources of one recording session to one metadata source (odML file).</blockquote>

|- | |||

|} | |||

|} | |||
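The five steps in Figure 7 can be mimicked in a few lines to show how the generator separates structure (templates) from content (sources). This is a toy sketch under several assumptions: template parts are already-loaded dictionaries, each source is a zero-argument loader paired with a hypothetical integration routine under the same key, and JSON replaces the odML file format. It is not the authors' odMLGenerator.py.

```python
import copy
import json
import tempfile
from pathlib import Path
from typing import Callable, Dict, List


def run_generator(template_parts: List[dict],
                  sources: Dict[str, Callable[[], dict]],
                  integrators: Dict[str, Callable[[dict, dict], None]],
                  out_file: Path) -> dict:
    """Toy version of the five-step generator workflow."""
    # (i) load all template parts: here they are passed in already loaded.
    # (ii) merge the parts into one "empty" document (copies keep parts intact).
    doc = {"sections": [copy.deepcopy(part) for part in template_parts]}
    # (iii) load every metadata source of the session.
    loaded = {key: load() for key, load in sources.items()}
    # (iv) integrate each source with its dedicated routine.
    for key, data in loaded.items():
        integrators[key](doc, data)
    # (v) save the filled document; JSON stands in for the odML format.
    out_file.write_text(json.dumps(doc, indent=2))
    return doc


def fill_subject(doc: dict, data: dict) -> None:
    """Hypothetical integration routine for one metadata source."""
    subject = next(s for s in doc["sections"] if s["name"] == "Subject")
    subject["properties"].update(data)


with tempfile.TemporaryDirectory() as tmp:
    out = Path(tmp) / "session.json"
    result = run_generator(
        template_parts=[{"name": "Project", "properties": {"Type": "DUMMY"}},
                        {"name": "Subject", "properties": {"Name": "DUMMY"}}],
        sources={"subject_sheet": lambda: {"Name": "subject-A"}},
        integrators={"subject_sheet": fill_subject},
        out_file=out,
    )
    saved = json.loads(out.read_text())

print(saved == result)  # True
```

Keeping loaders and integrators as separate, named routines mirrors the split between generic file access and experiment-specific integration code.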

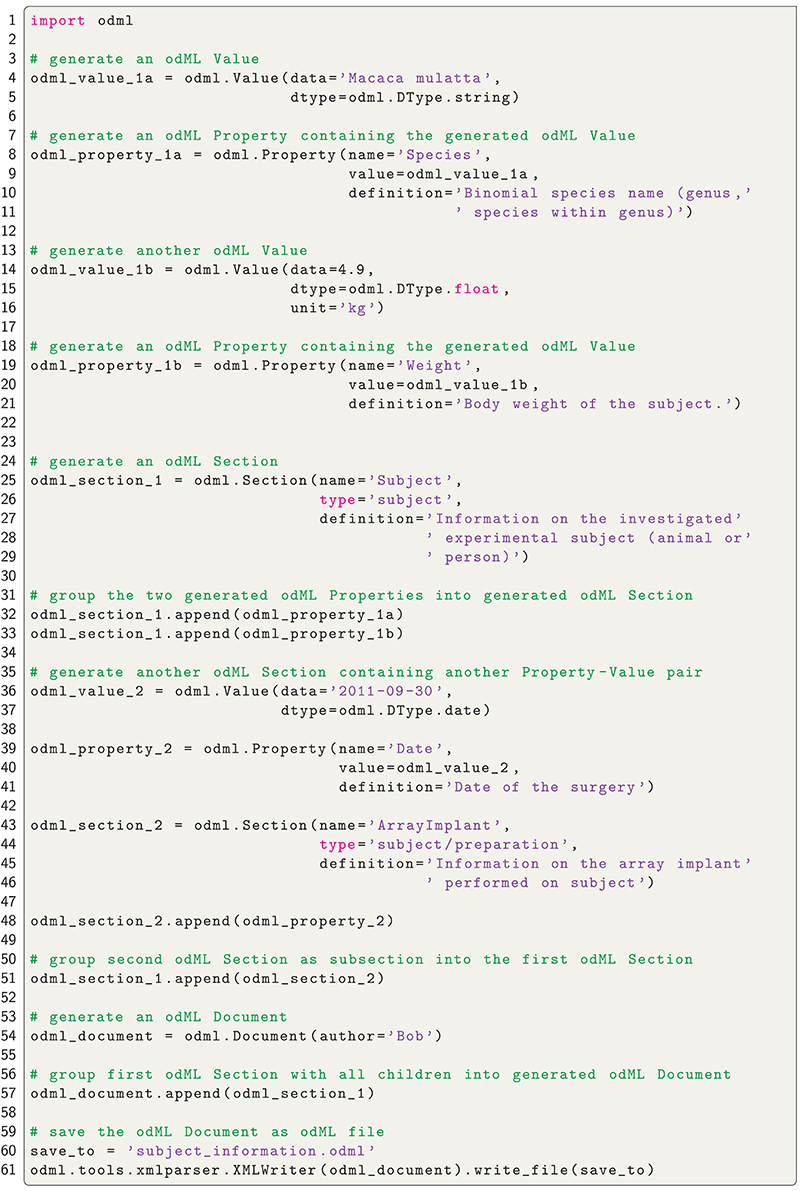

As a first step, it is best to write a template in order to develop and maintain the structure of the files of a comprehensive metadata collection. In the odML metadata model, a template is defined as an “empty” odML file in which the user has determined the structure and attributes of the Sections and Properties that organize the metadata, but has filled in only dummy Values. To create an odML template, one can use (i) the odML Editor, a graphical user interface that is part of the odML Python library (see supplement), (ii) a custom-written program based on the odML library (Figure 8), or (iii) spreadsheet software (see Figure 9), e.g., Excel (Microsoft Corporation). Especially for large and complex structures, (ii) and (iii) are the more flexible approaches to generating a template. For (iii), we developed the [https://github.com/INM-6/python-odmltables odML-tables package], which provides a framework to convert between odML and spreadsheets saved in the Excel or CSV format. To simplify editing, it is advisable to split a template into multiple smaller templates (e.g., one per top-level Section). These parts can then be handled independently and later easily merged back into the final structure, which facilitates the development and editing of even large odML structures. The top of Figure 7 illustrates how three templates of the example experiment (Project_Temp, UtahArray_Temp, and Subject_Temp), representing the three possible template formats (i-iii), are merged into one large “empty” odML file via the Python odML library.
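A scripted template (option ii) and the later merge of template parts can be approximated as follows. The sketch assumes a dictionary representation, a hypothetical placeholder `DUMMY` for unfilled Values, and hypothetical Property names; it is not the odML library's API. Merging rejects duplicate top-level names, mirroring the rule that names on one hierarchy level must be unique.

```python
from typing import List, Optional

DUMMY = "-"  # hypothetical placeholder meaning "value not filled in yet"


def template_section(name: str, definition: str, prop_names: List[str],
                     children: Optional[List[dict]] = None) -> dict:
    """Build an "empty" Section: structure and definitions, dummy Values."""
    return {"name": name, "definition": definition,
            "properties": {p: DUMMY for p in prop_names},
            "sections": list(children or [])}


def merge_templates(parts: List[dict]) -> dict:
    """Merge independently maintained top-level template parts."""
    names = [p["name"] for p in parts]
    duplicates = sorted({n for n in names if names.count(n) > 1})
    if duplicates:
        # Names must be unique on the same hierarchy level.
        raise ValueError(f"duplicate top-level Sections: {duplicates}")
    return {"sections": list(parts)}


project = template_section("Project", "Information on the project",
                           ["Name", "Type"])
subject = template_section("Subject",
                           "Information on the investigated experimental subject",
                           ["Species", "Birthday"])
utah = template_section("UtahArray", "Information on the Utah array",
                        ["SerialNo"],
                        [template_section("Array", "The electrode array",
                                          ["ElectrodeCount"])])
doc = merge_templates([project, subject, utah])
print([s["name"] for s in doc["sections"]])  # ['Project', 'Subject', 'UtahArray']
```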

[[File:Fig8 Zehl FrontInNeuro2016 10.jpg|807px]] | |||

{{clear}} | |||

{| | |||

| STYLE="vertical-align:top;"| | |||

{| border="0" cellpadding="5" cellspacing="0" width="807px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 8.''' Python code to create an odML file. Python code to create the Subject_Demo.odml which is schematically shown in Figure 5.</blockquote> | |||

|- | |||

|} | |||

|} | |||

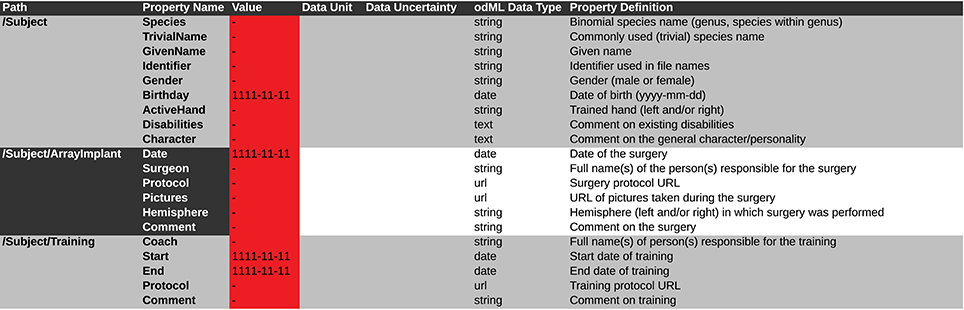

Once a template has been created, the second step is to write or reuse a set of routines that compile metadata from the various sources, integrate them into a copy of the template, and save the result as files of the comprehensive metadata collection (see Figure 7, left part). Although it would be desirable to fully automate the loading and integration of metadata into the file(s) of a comprehensive metadata collection, in most experiments some metadata unavoidably need to be entered by hand (see Section 2). Importantly, we avoid direct manual modification of the comprehensive metadata files. Instead, the final metadata collection is always created from a separate file containing the manually entered metadata. This approach has the advantage that the manual entries remain intact if the structure needs to be changed at some point. In practice, manual metadata entries are collected in a source file in a machine-readable format, such as plain text, CSV, Excel, [https://www.hdfgroup.org/ HDF<sub>5</sub>], [http://www.json.org/ JSON], or directly the format of the chosen metadata management software (e.g., odML), and the corresponding file-access libraries are used to load them and integrate them into the file(s) of the comprehensive metadata collection. In the example experiment, we stored manual metadata in spreadsheets that are accessible by the odML-tables package. In the special case that these manual metadata entries are constant across the collection (e.g., project information, cf. Table 2), one can even reduce the number of metadata sources by entering the corresponding metadata directly into the templates (e.g., Project_Temp in Figure 7). This avoids unnecessary clutter in the later compilation of metadata and promotes consistency across odML files.
Metadata that are not constant across odML files should preferably be stored in source files that adhere to standard file formats, which can be loaded via generic routines. For this reason, all spreadsheet source files in the presented experiment were designed to be compatible with the odML-tables package (see Subject_Temp in Figure 7 and the example in Figure 9). Furthermore, the files generated by the Blackrock data acquisition system as well as the results of the LFP quality assessment can be loaded via the file interfaces of the Neo Python library<ref name="GarciaNeo14">{{cite journal |title=Neo: An object model for handling electrophysiology data in multiple formats |journal=Frontiers in Neuroinformatics |author=Garcia, S.; Guarino, D.; Jaillet, F. et al. |volume=8 |pages=10 |year=2014 |doi=10.3389/fninf.2014.00010 |pmid=24600386 |pmc=PMC3930095}}</ref>, which provides standardized access to electrophysiological data. Nevertheless, for the example experiment it was still necessary to write custom loading and integration routines from scratch to cover all metadata from the various sources (e.g., .hdf5 and .mat files for the results of the various preprocessing steps in Figure 7).
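The rule of never editing the generated files by hand can be sketched as a function that always fills a fresh copy of the template from a separate manual-entry file. The CSV layout (`property,value`), the function `fill_from_csv`, the template content, and the values are all made up for illustration.

```python
import copy
import csv
import io

# Hypothetical template part with dummy values ("-").
TEMPLATE = {"name": "Subject",
            "properties": {"Name": "-", "Gender": "-", "Birthday": "-"}}

# Manually entered metadata live in their own machine-readable file;
# the values below are invented for illustration.
MANUAL_CSV = """property,value
Name,subject-A
Birthday,2000-01-01
"""


def fill_from_csv(template: dict, csv_text: str) -> dict:
    """Fill a copy of the template; the template itself is never modified."""
    filled = copy.deepcopy(template)
    for row in csv.DictReader(io.StringIO(csv_text)):
        name = row["property"]
        if name not in filled["properties"]:
            raise KeyError(f"{name!r} is not defined in the template")
        filled["properties"][name] = row["value"]
    return filled


session = fill_from_csv(TEMPLATE, MANUAL_CSV)

# Later the structure changes: a new Property is added to the template.
# Regenerating from scratch keeps the manual entries, because they were
# never typed into the generated file.
extended = copy.deepcopy(TEMPLATE)
extended["properties"]["Weight"] = "-"
regenerated = fill_from_csv(extended, MANUAL_CSV)
print(regenerated["properties"])
# {'Name': 'subject-A', 'Gender': '-', 'Birthday': '2000-01-01', 'Weight': '-'}
```

Raising on unknown Property names is a design choice: it surfaces typos in the manual file instead of silently dropping them.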

[[File:Fig9 Zehl FrontInNeuro2016 10.jpg|964px]] | |||

{{clear}} | |||

{| | |||

| STYLE="vertical-align:top;"| | |||

{| border="0" cellpadding="5" cellspacing="0" width="964px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 9.''' Screenshot of a spreadsheet that can be automatically transferred to a corresponding odML template. This example is generated from the template of the Subject branch (Subject_TPL). The .xls file representation is used to fill the template part with the corresponding metadata of the reach-to-grasp experiment (compare to Figure 5). Default values that should be replaced manually with actual metadata are marked in red. To use such an .xls file directly as a template and later translate it directly into the odML format, one should at least include the Section Definition as an additional column [e.g., for “Path: /Subject” add “Section Definition: Information on the investigated experimental subject (animal or person)”].</blockquote>

|- | |||

|} | |||

|} | |||
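The core idea behind converting such a spreadsheet into a hierarchy can be sketched as below: each row names the Section path, its definition, and one Property with its Value. The column names are modeled loosely on Figure 9 and do not reproduce the exact odML-tables format; the “/Subject/Training” Section and the helpers `new_node` and `rows_to_tree` are hypothetical.

```python
import csv
import io

# Columns loosely modeled on Figure 9; not the exact odML-tables layout.
SHEET = """Path,Section Definition,Property Name,Value
/Subject,Information on the investigated experimental subject (animal or person),Species,-
/Subject,Information on the investigated experimental subject (animal or person),Gender,-
/Subject/Training,Information on the training of the subject,Duration,-
"""


def new_node(name: str) -> dict:
    return {"name": name, "definition": "", "properties": {}, "sections": {}}


def rows_to_tree(csv_text: str) -> dict:
    """Rebuild a Section hierarchy from one row per Property."""
    root = new_node("")
    for row in csv.DictReader(io.StringIO(csv_text)):
        node = root
        # Walk (and create as needed) the Sections named in the path.
        for part in row["Path"].strip("/").split("/"):
            # Keying children by name enforces unique sibling names.
            node = node["sections"].setdefault(part, new_node(part))
        node["definition"] = row["Section Definition"]
        node["properties"][row["Property Name"]] = row["Value"]
    return root


tree = rows_to_tree(SHEET)
subject = tree["sections"]["Subject"]
print(list(subject["properties"]))  # ['Species', 'Gender']
print(list(subject["sections"]))    # ['Training']
```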

In summary, this two-stage workflow of first generating templates and then filling them from multiple source files ensures flexibility and consistency of the metadata collection over time. In particular, if the structure needs to be changed at a later time (e.g., because new metadata sources need to be integrated), one only needs to adapt or extend the template and the code that fills it with metadata in order to regenerate a consistent, updated metadata collection from scratch. If the metadata management can be planned in advance, one should attempt to optimize the corresponding workflow in the following respects:

* Use existing templates (e.g., the Utah array odML template) to increase consistency with other experimental studies. | |||

* Keep the number of metadata sources at a minimum. | |||

* Avoid hidden knowledge in the form of handwritten notes or implicit knowledge of the experimenter by transferring such information into a machine-readable format (e.g., standardized Excel sheets compatible with odML-tables) early on. | |||

* Automate the saving of metadata as much as possible.
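In the spirit of the red-marked defaults in Figure 9, a simple completeness check can catch entries that were never transferred from notes into the collection. This sketch assumes a hypothetical `DUMMY` placeholder and the nested-dictionary representation used for illustration; the function `unfilled` is not part of any odML tool.

```python
from typing import List

DUMMY = "-"  # hypothetical placeholder left in the template


def unfilled(section: dict, path: str = "") -> List[str]:
    """List "path:Property" for every Property still holding the dummy value."""
    here = f"{path}/{section['name']}"
    missing = [f"{here}:{name}" for name, value in section["properties"].items()
               if value == DUMMY]
    for child in section.get("sections", []):
        missing.extend(unfilled(child, here))
    return missing


subject = {"name": "Subject",
           "properties": {"Name": "subject-A", "Gender": DUMMY},
           "sections": [{"name": "Training",
                         "properties": {"Duration": DUMMY}, "sections": []}]}
print(unfilled(subject))  # ['/Subject:Gender', '/Subject/Training:Duration']
```

Running such a check at the end of each generator run turns forgotten manual entries into an explicit report rather than silent gaps.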

==Discussion== | |||

We have outlined how to structure, collect, and distribute metadata of electrophysiological experiments. In particular, we demonstrated the importance of comprehensive metadata collections (i) to facilitate the enrichment of data with additional information, including post-processing steps, (ii) to make the metadata accessible by pooling information from various sources, (iii) to allow for a simple and well-defined selection of data based on metadata using standard query mechanisms, (iv) to quickly create textual and graphical representations of sets of related metadata in order to screen data across the experiment, and (v) to formalize communication in collaborations by means of metadata queries. We illustrated how to create a metadata collection in practice, using the odML framework as an example, and how to utilize existing metadata collections in the context of the five use cases.
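Use case (iii), selecting data by metadata, boils down to filtering sessions on Property values. The sketch below is a stand-in for a real query mechanism: the function `select`, the session names, the flattened `Section/Property` keys, and the values are invented for illustration.

```python
from typing import Any, Callable, Dict, List, Union

Criterion = Union[Any, Callable[[Any], bool]]


def select(sessions: Dict[str, Dict[str, Any]],
           criteria: Dict[str, Criterion]) -> List[str]:
    """Return names of sessions whose metadata satisfy every criterion.

    A criterion is either a literal (compared with ==) or a predicate.
    Sessions lacking a queried key never match.
    """
    def matches(metadata: Dict[str, Any], key: str, want: Criterion) -> bool:
        if key not in metadata:
            return False
        have = metadata[key]
        return want(have) if callable(want) else have == want

    return sorted(name for name, md in sessions.items()
                  if all(matches(md, k, w) for k, w in criteria.items()))


# Made-up sessions; keys are flattened "Section/Property" paths.
sessions = {
    "session_1": {"Subject/Name": "subject-A", "Recording/Duration_s": 900},
    "session_2": {"Subject/Name": "subject-A", "Recording/Duration_s": 300},
    "session_3": {"Subject/Name": "subject-B", "Recording/Duration_s": 1200},
}
print(select(sessions, {"Subject/Name": "subject-A",
                        "Recording/Duration_s": lambda d: d >= 600}))
# ['session_1']
```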

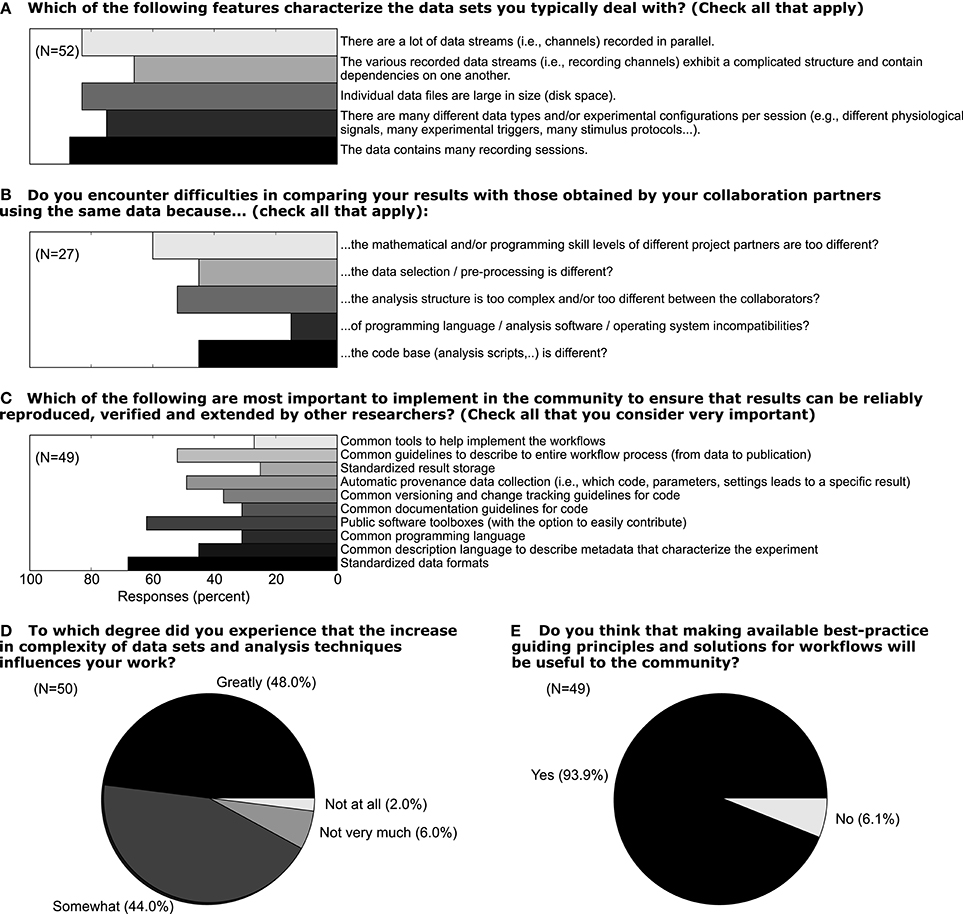

In light of the increasing volume of data generated in complex experiments, neuroscientists, in particular in the field of electrophysiology, face the need to improve the workflows of their daily scientific life.<ref name="DenkerDesigning16">{{cite book |chapter=Designing Workflows for the Reproducible Analysis of Electrophysiological Data |title=Brain-Inspired Computing |author=Denker, M.; Grün, S. |editor=Amunts, K.; Grandinetti, L.; Lippert, T.; Petkov, N. |series=Lecture Notes in Computer Science |volume=10087 |publisher=Springer |year=2016 |doi=10.1007/978-3-319-50862-7_5 |isbn=9783319508627}}</ref> In this context, the aspects of metadata handling described above should be considered part of such workflows. In 2011, we conducted a survey among members of the electrophysiology and modeling community (N = 52) to better understand how scientists view the current status of their workflows, which aspects of their work could be improved, and to what extent they would embrace efforts to improve workflows. Figure 10 shows a selection of survey responses that are closely related to the role of metadata in setting up such workflows. Forty-eight percent of respondents reported that the increased complexity of data sets greatly influences their work (Question D). When asked which features characterize their data, respondents indicated that multiple factors of complexity come into play, including the number of sessions, data size, and dependencies between different data records (Question A). In total, 93% believed that making best-practice guidelines and workflow solutions available would benefit the community (Question E). Handling metadata in the odML framework represents one option for establishing best practice in building such a workflow (Question C).
In fact, 46% of respondents believed that a common description of metadata would be required to ensure that scientific work can be reproduced, verified, and extended by other researchers. Moreover, 44% of researchers stated that they find it difficult to compare their results to those obtained by other researchers working on the same or similar data, due to differences in preprocessing steps and data selection (Question B; cf. Use Case 5). The results of the full survey can be found at http://www.csn.fz-juelich.de/survey.

[[File:Fig10 Zehl FrontInNeuro2016 10.jpg|963px]] | |||

{{clear}} | |||

{| | |||

| STYLE="vertical-align:top;"| | |||

{| border="0" cellpadding="5" cellspacing="0" width="963px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 10.''' Responses to five selected questions taken from a survey among scientists who deal with electrophysiological data sets. The online survey was hosted on “Google Drive,” and a total of 52 respondents filled in the questionnaire between June 12 and October 17, 2011 (Associate/full professor: 18%, Assistant professor: 22%, Post-doc: 28%, PhD student: 30%, no response: 2%; self-reported). For questions A–C, a single person could give multiple answers. The number of respondents ''N'' is provided separately for each question.</blockquote>

|- | |||

|} | |||

|} | |||

While storing metadata to improve the overall experimental and analysis workflow is technically feasible, this alone cannot solve the intrinsic problem of identifying the metadata in an experiment, collecting the individual metadata, and pooling them automatically. None of these steps is trivial, and all are time-consuming by nature, in particular when metadata are organized for a particular experiment for the first time. Currently, no funding is granted for such tasks, and software tools that support the automated generation and usage of metadata are largely missing. It is therefore fair to ask whether the effort is truly worthwhile. Through the use cases, we hope to have convinced the reader that numerous advantages are indeed associated with a well-maintained metadata collection that accompanies the data. For the individual researcher, these are perhaps best summarized as the ease of organizing, searching, and selecting datasets based on the metadata. Even more advantages are gained for collaborative work. First, an easily accessible central source of metadata ensures that all researchers access exactly the same information. This is particularly important if metadata are difficult to access, for example because they reside in source files or hand-written notebooks or require specialized program code, or if they need to be collected from different locations. Second, communication between collaborators becomes more precise through the strict use of defined property-value pairs, lowering the probability of unintended confusion. Last but not least, once the experimenter has defined the structure, content, and method of creation of the metadata collection, metadata entry and compilation will in general run very smoothly, be less error-prone, and even save time compared to traditional methods.
Thus, we believe that a number of significant advantages justify the initial investment of including automated metadata handling in the workflow of an electrophysiological experiment. The survey results presented above indicate that these advantages are increasingly recognized by the community. Furthermore, as scientists we are obliged to document our scientific work clearly and in a way that enables the highest degree of reproducibility. Reproducibility is increasingly recognized by publishers and funding agencies<ref name="MorrisonTime14">{{cite journal |title=Time to do something about reproducibility |journal=eLife |author=Morrison, S.J. |volume=3 |pages=e03981 |year=2014 |doi=10.7554/eLife.03981 |pmid=25493617 |pmc=PMC4260475}}</ref><ref name="CandelaData15">{{cite journal |title=Data journals: A survey |journal=Journal of the Association for Information Science and Technology |author=Candela, L.; Castelli, D.; Manghi, P.; Tani, A. |volume=66 |issue=9 |pages=1747–1762 |year=2015 |doi=10.1002/asi.23358}}</ref><ref name="OSCPsychology15" /><ref name="PulvererRepro15">{{cite journal |title=Reproducibility blues |journal=EMBO Journal |author=Pulverer, B. |volume=34 |issue=22 |pages=2721-4 |year=2015 |doi=10.15252/embj.201570090 |pmid=26538323 |pmc=PMC4682652}}</ref>, such that allocating appropriate time and manpower to more sophisticated data management will become a necessity. It is thus important not only to gain experience in documenting data properly but also to produce better tools that reduce the time investment these steps require.

As a result of our experience with complex analyses of experiments in systems neuroscience, as reported in this paper, one of our recommendations is to record as much information about the experiment as possible. This may seem to contradict efforts to specify minimal information guidelines in the life sciences (MIBBI<ref name="TaylorPromoting08">{{cite journal |title=Promoting coherent minimum reporting guidelines for biological and biomedical investigations: The MIBBI project |journal=Nature Biotechnology |author=Taylor, C.F.; Field, D.; Sansone, S.A. et al. |volume=26 |issue=8 |pages=889-96 |year=2008 |doi=10.1038/nbt.1411 |pmid=18688244 |pmc=PMC2771753}}</ref> and MINI<ref name="GibsonMinimum09">{{cite journal |title=Minimum Information about a Neuroscience Investigation (MINI): Electrophysiology |journal=Nature Precedings |author=Gibson, F.; Overton, P.G.; Smulders, T.V. et al. |year=2009 |url=http://precedings.nature.com/documents/1720/version/2}}</ref>). However, those initiatives target the use case in which data are uploaded to a public database, and their goal is to strike a balance in information detail such that the minimally sufficient information is provided to make the data potentially useful for the community. They are not meant as guidelines for procedures in the laboratory. To ensure reproducibility of the primary analysis, but also in the interest of future reuse of the data, we argue that it is highly desirable to store all potentially relevant information about an experiment.

We have exemplified the practical issues of metadata management using the odML metadata framework as one particular method. Similar results could be obtained using other formats. [https://www.w3.org/RDF/ RDF] is a powerful standard approach specifically designed for the semantic annotation of data. Many libraries and tools are available for this format, and in combination with ontologies it is highly suitable for standardization. However, using this format efficiently requires elaborate technology that is not easy to use. Simpler formats like [http://www.json.org/ JSON] or [http://yaml.org/ YAML] are in many respects similar to the XML schema used for odML, and we expect that they have been used in individual labs to realize approaches similar to the one presented here. We are, however, not aware of specific tools for the collection of metadata that use these formats. In addition, none of these alternative formats provides specific support for storing measured quantities as odML does. A combination of XML and [https://www.hdfgroup.org/HDF5/ HDF<sub>5</sub>] has been proposed to define a format for scientific data<ref name="MillardAdapt11">{{cite journal |title=Adaptive informatics for multifactorial and high-content biological data |journal=Nature Methods |author=Millard, B.L.; Niepel, M.; Menden, M.P. et al. |volume=8 |issue=6 |pages=487-93 |year=2011 |doi=10.1038/nmeth.1600 |pmid=21516115 |pmc=PMC3105758}}</ref>, which could in principle be used for metadata collection. However, because it requires extensive schema definitions, this approach is much less flexible and lightweight than odML.
Solutions for the management of scientific workflows, like [http://www.taverna.org.uk/ Taverna], [https://kepler-project.org/ Kepler], [http://www.vistrails.org/ VisTrails], [https://www.knime.org/knime/ KNIME], and [http://www.wings-workflows.org/ Wings]<ref name="INCF13">{{cite web |url=https://space.incf.org/index.php/s/kuFRRGBezreEYPJ#pdfviewer |title=INCF Program on Standards for Data Sharing: New perspectives on workflows and data management for the analysis of electrophysiological data |author=INCF |date=4 December 2013}}</ref> are targeted toward standardized and reproducible data processing workflows. We are focusing here on the management of experimental metadata, and a consideration of data processing would go beyond this scope. Workflow management systems could be utilized for managing metadata, but their suitability for the collection of metadata during the experiment seems limited. Nevertheless, the approach we have described here must ultimately be combined with such systems, as the development of automated workflows depends critically on the availability of a metadata collection. Likewise, our approach is suitable for combination with provenance tracking solutions like Sumatra.<ref name="DavisonSumatra14">{{cite book |chapter=Sumatra: A toolkit for reproducible research |title=Implementing Reproducible Research |author=Davison, A.P.; Mattioni, M.; Samarkanov, D.; Teleńczuk, B. |editor=Stodden, V.; Leisch, F.; Peng, R.D. |publisher=CRC Press |pages=57–79 |year=2014 |isbn=9781466561595}}</ref> | |||

Indeed, while we believe that using a metadata framework such as odML represents an important step toward better data and metadata management, this approach clearly leaves room for a number of improvements. A natural step would be for odML to become an intrinsic file format of commercially available data acquisition systems, such that their metadata are instantly available for inclusion in the user's hierarchical tree. In this context, the odML format has so far been adopted in the [https://www.nitrc.org/projects/cff/ Connectome] file format, the [http://www.relacs.net/ Relacs] data acquisition and stimulation software, and the [https://eegdatabase.kiv.zcu.cz/ EEGBase] database for EEG/ERP data.

Likewise, interfaces for easy odML export in popular experiment control suites, vendor-specific hardware, or generic software such as LabVIEW would greatly speed up metadata generation. Additionally, the [http://portal.g-node.org/odml/terminologies/v1.0/terminologies.xml German Neuroinformatics Node] has initiated a repository for popular terminologies and hardware devices, which is open to extensions by the community. More importantly, in conditions where the details of recording or post-processing steps may change over time, it is essential to also keep track of the versions of the code that generated a certain post-processing result, including the libraries used by the code and installed on the computer executing it. Popular solutions for version control (e.g., Git) or provenance tracking (e.g., Sumatra<ref name="DavisonSumatra14" />) offer mechanisms to keep track of this information. However, it is up to the user to make sure that the version numbers or hash values provided by these systems are saved to the file containing the metadata collection, to guarantee that the metadata can be linked to their provenance trail. More direct integration of metadata recording into the various tools performing the post-processing would make it possible to automate this process, leading to a more robust metadata collection paired with enhanced usability.
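One way to save such version information alongside the metadata is sketched below, assuming the analysis code lives in a Git repository and runs under Python 3.8 or later. The functions `git_commit` and `provenance` and the key names (e.g., `CodeCommit`) are hypothetical; where the resulting dictionary is stored in the metadata hierarchy is left to the user.

```python
import platform
import subprocess
from datetime import datetime, timezone
from importlib import metadata as importlib_metadata
from typing import Iterable, Optional


def git_commit(repo_dir: str = ".") -> Optional[str]:
    """Return the current Git commit hash, or None if it is unavailable."""
    try:
        out = subprocess.run(["git", "rev-parse", "HEAD"], cwd=repo_dir,
                             capture_output=True, text=True, check=True)
    except (OSError, subprocess.CalledProcessError):
        # Git not installed, directory missing, or not inside a repository.
        return None
    return out.stdout.strip()


def provenance(packages: Iterable[str], repo_dir: str = ".") -> dict:
    """Collect code and environment versions to store with the metadata."""
    versions = {}
    for pkg in packages:
        try:
            versions[pkg] = importlib_metadata.version(pkg)
        except importlib_metadata.PackageNotFoundError:
            versions[pkg] = None  # record the absence instead of failing
    return {
        "CodeCommit": git_commit(repo_dir),
        "PythonVersion": platform.python_version(),
        "Platform": platform.platform(),
        "PackageVersions": versions,
        "CreatedUTC": datetime.now(timezone.utc).isoformat(),
    }


info = provenance(["surely-not-a-real-package-xyz"])
print(info["PythonVersion"], info["PackageVersions"])
```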

A perhaps more challenging problem is devising mechanisms that link the metadata to the actual data objects they refer or relate to. This issue becomes particularly clear in the context of the Neo library<ref name="GarciaNeo14" />, an open-source Python package that provides data objects for storing electrophysiological data, along with file input/output for common file formats. The Neo library could be used to read a particular spike train that relates to a certain unit ID in the recording, while the corresponding odML file would contain, for each unit ID, the assigned unit type (SUA, MUA, or noise) and the signal-to-noise ratio (SNR) obtained in the spike sorting preprocessing step. A common task would then be to link these two pieces of information in a generic way. Currently, it is up to the user to manually extract the SNR of each unit ID from the metadata and then annotate the spike train data with this particular piece of metadata. This procedure is time-consuming and, again, may be performed differently by partners in a collaboration, causing incoherence in the workflow. Recently, the NWB format<ref name="TeetersNeurodata15">{{cite journal |title=Neurodata Without Borders: Creating a Common Data Format for Neurophysiology |journal=Neuron |author=Teeters, J.L.; Godfrey, K.; Young, R. et al. |volume=88 |issue=4 |pages=629-34 |year=2015 |doi=10.1016/j.neuron.2015.10.025 |pmid=26590340}}</ref> was proposed as a file format to store electrophysiological data with a detailed, use-case specific data and metadata organization. In contrast, the [http://www.g-node.org/nix NIX] file format<ref name="StoewerFile14">{{cite journal |title=File format and library for neuroscience data and metadata |journal=Frontiers in Neuroinformatics |author=Stoewer, A.; Keller, C.J.; Benda, J. et al. |year=2014 |doi=10.3389/conf.fninf.2014.18.00027}}</ref> was proposed as a more general solution to link data and metadata already at the file level.
With this approach, metadata are organized hierarchically, as in odML, but can be linked to the respective data stored in the same file. This enables relating data and metadata meaningfully to facilitate and automate data retrieval and analysis. | |||
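The manual linking step described above, attaching sorting results to spike trains by unit ID, can be sketched as follows. To stay dependency-free, the sketch uses a stand-in `SpikeTrain` dataclass with an `annotations` dictionary rather than Neo's own class; the function `annotate_from_metadata`, the keys `UnitType` and `SNR`, and all values are illustrative.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Sequence


@dataclass
class SpikeTrain:
    """Stand-in for a data object carrying free-form annotations."""
    unit_id: int
    times_s: List[float]
    annotations: Dict[str, object] = field(default_factory=dict)


def annotate_from_metadata(trains: List[SpikeTrain],
                           sorting: Dict[int, Dict[str, object]],
                           keys: Sequence[str] = ("UnitType", "SNR")) -> List[int]:
    """Copy selected spike-sorting metadata onto each train by unit ID.

    Returns the unit IDs for which no metadata entry was found, so that
    gaps are reported instead of silently ignored.
    """
    missing = []
    for train in trains:
        entry = sorting.get(train.unit_id)
        if entry is None:
            missing.append(train.unit_id)
            continue
        train.annotations.update({k: entry[k] for k in keys if k in entry})
    return missing


sorting = {1: {"UnitType": "SUA", "SNR": 3.2}, 2: {"UnitType": "MUA", "SNR": 1.4}}
trains = [SpikeTrain(1, [0.01, 0.35]), SpikeTrain(2, [0.2]), SpikeTrain(3, [0.5])]
missing = annotate_from_metadata(trains, sorting)
print(trains[0].annotations)  # {'UnitType': 'SUA', 'SNR': 3.2}
print(missing)                # [3]
```

Writing this step once as a shared function is one way to keep collaborators from each linking data and metadata slightly differently.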

Finally, convenient manual metadata entry is an important requirement for collecting the metadata of an experiment. In the case of odML, while the existing editor enables researchers to fill in odML files, a number of convenience features would not only reduce the time required to collect the information but could also provide further incentives to store metadata in the odML format. An example of the former could be editor support for templates, so that new files with default values can be created quickly; an example of the latter could be more flexible ways to display metadata in the editor. The odML-tables library, which can transform the hierarchical structure of an odML file into an editable flat table in the Excel or CSV format, is one current attempt to address these issues within the odML framework. Converting odML to commonly used spreadsheets increases the accessibility of odML for collaborators with little programming knowledge. Another particularly notable project aimed at improving manual metadata entry during an experiment is the odML mobile app<ref name="LeFrancMobile14">{{cite journal |title=Mobile metadata: bringing Neuroinformatics tools to the bench |journal=Frontiers in Neuroinformatics |author=Le Franc, Y.; Gonzalez, D.; Mylyanyk, I. et al. |year=2014 |doi=10.3389/conf.fninf.2014.18.00053}}</ref>, which runs on mobile devices that are easy to carry around in the lab.
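The reverse direction, flattening a hierarchy into an editable table, is what makes spreadsheet editing possible. A minimal sketch, using a hypothetical three-column layout (`Path`, `Property Name`, `Value`) and helper names `flatten` and `to_csv` rather than the actual odML-tables format or API:

```python
import csv
import io
from typing import List, Tuple


def flatten(section: dict, path: str = "") -> List[Tuple[str, str, object]]:
    """Turn a Section hierarchy into (path, property, value) rows."""
    here = f"{path}/{section['name']}"
    rows = [(here, name, value) for name, value in section["properties"].items()]
    for child in section.get("sections", []):
        rows.extend(flatten(child, here))
    return rows


def to_csv(top_sections: List[dict]) -> str:
    """Write all rows of all top-level Sections as CSV text."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["Path", "Property Name", "Value"])
    for section in top_sections:
        writer.writerows(flatten(section))
    return buf.getvalue()


subject = {"name": "Subject", "properties": {"Name": "subject-A"},
           "sections": [{"name": "Training", "properties": {"Duration": "-"},
                         "sections": []}]}
print(to_csv([subject]), end="")
# Path,Property Name,Value
# /Subject,Name,subject-A
# /Subject/Training,Duration,-
```

Because the full path is repeated on every row, the table can be sorted or filtered in a spreadsheet without losing where each Property belongs.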

The odML framework by itself is of a general nature and can easily be used in other domains of neuroscience than electrophysiology, or even other fields of science. An obvious use case would be to store metadata of neuroscientific simulation experiments. As we witness a similar increase in the complexity of ''in silico'' data, providing adequate metadata records to describe these data gains importance. However, datasets emerging from simulations differ in the composition of their metadata in terms of the more advanced technical descriptions required to capture the mathematical details of the employed models (e.g., descriptions using NeuroML<ref name="GleesonNeuroML10" /><ref name="CrookCreating12" />), and by the fact that simulations are often described on a procedural rather than a declarative level (e.g., descriptions based on PyNN<ref name="DavisonPyNN09">{{cite journal |title=PyNN: A Common Interface for Neuronal Network Simulators |journal=Frontiers in Neuroinformatics |author=Davison, A.P.; Brüderle, D.; Eppler, J. et al. |volume=2 |pages=11 |year=2009 |doi=10.3389/neuro.11.011.2008 |pmid=19194529 |pmc=PMC2634533}}</ref>). How these can be best linked to more generic standards for metadata capture and representation, and what level of description of metadata is adequate in this scenario, remains a matter of investigation supported by use-cases, as performed here for experimental data. A common storage mechanism for metadata of experimental and simulated data would simplify their comparison, a task that is bound to become increasingly important for the future of computational neuroscience. Using a well-defined, machine-readable format for metadata brings the potential for integration of the information across heterogeneous datasets, for example in larger databases or data repositories. | |||

In summary, the complexity of current electrophysiological experiments forces the scientific community to reorganize its workflows of data handling, including metadata management, to ensure reproducibility in research.<ref name="StoddenImplem14">{{cite book |title=Implementing Reproducible Research |editor=Stodden, V.; Leisch, F.; Peng, R.D. |publisher=CRC Press |pages=448 |year=2014 |isbn=9781466561595}}</ref> Readily available tools to support metadata management, such as odML, are a vital component in constructing such workflows. It is our responsibility to propagate and incorporate these tools into our daily routines in order to improve workflows through the principle of co-design between scientists and software engineers.

==Author contributions==

LZ, MD, and SG designed the research. LZ, TB, and AR performed the research. LZ, MD, JG, FJ, ASo, ASt, and TW contributed to unpublished software tools. All authors participated in writing the paper.

==Conflict of interest statement==

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

==Acknowledgments==

We commemorate Paul Chorley and thank him for his valuable input during the development of the metadata structures and templates. We thank Benjamin Weyers and Christian Kellner for valuable discussions. This work was partly supported by Helmholtz Portfolio Supercomputing and Modeling for the Human Brain (SMHB), Human Brain Project (HBP, EU Grant 604102), German Neuroinformatics Node (G-Node, BMBF Grant 01GQ1302), BrainScaleS (EU Grant 269912), DFG SPP Priority Program 1665 (GR 1753/4-1 and DE 2175/1-1), ANR-GRASP, CNRS, and the Riken-CNRS Research Agreement.

==Supplementary material==

The supplementary material for this article can be found [https://www.frontiersin.org/articles/file/downloadfile/195830_supplementary-materials_presentations_1_pdf/octet-stream/Presentation%201.pdf/2/195830 here].

==References==

Latest revision as of 18:41, 22 December 2017

| Full article title | Handling metadata in a neurophysiology laboratory |
|---|---|
| Journal | Frontiers in Neuroinformatics |
| Author(s) | Zehl, Lyuba; Jaillet, Florent; Stoewer, Adrian; Grewe, Jan; Sobolev, Andrey; Wachtler, Thomas; Brochier, Thomas G.; Riehle, Alexa; Denker, Michael; Grün, Sonja |
| Author affiliation(s) | Jülich Research Centre, Aix-Marseille Université, Ludwig-Maximilians-Universität München, Eberhard-Karls-Universität Tübingen, Aachen University |
| Primary contact | Email: l dot zehl at fz-juelich dot de |
| Editors | Luo, Qingming |
| Year published | 2016 |
| Volume and issue | 10 |
| Page(s) | 26 |
| DOI | 10.3389/fninf.2016.00026 |
| ISSN | 1662-5196 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://www.frontiersin.org/articles/10.3389/fninf.2016.00026/full |
| Download | https://www.frontiersin.org/articles/10.3389/fninf.2016.00026/pdf (PDF) |

Abstract

To date, non-reproducibility of neurophysiological research is a matter of intense discussion in the scientific community. A crucial component to enhance reproducibility is to comprehensively collect and store metadata, that is, all information about the experiment, the data, and the applied preprocessing steps on the data, such that they can be accessed and shared in a consistent and simple manner. However, the complexity of experiments, the highly specialized analysis workflows, and a lack of knowledge on how to make use of supporting software tools often make such detailed documentation an overwhelming burden for researchers. As a result, the collected metadata are often incomplete, incomprehensible to outsiders, or ambiguous. Based on our research experience in dealing with diverse datasets, we here provide conceptual and technical guidance to overcome the challenges associated with the collection, organization, and storage of metadata in a neurophysiology laboratory. Through the concrete example of managing the metadata of a complex experiment that yields multi-channel recordings from monkeys performing a behavioral motor task, we demonstrate in practice how these approaches and solutions are implemented, with the intention that they may be generalized to other projects. Moreover, we detail five use cases that demonstrate the resulting benefits of constructing a well-organized metadata collection when processing or analyzing the recorded data, in particular when these are shared between laboratories in a modern scientific collaboration. Finally, we suggest an adaptable workflow to accumulate, structure, and store metadata from different sources using, by way of example, the odML metadata framework.

Introduction

Technological advances in neuroscience during the last decades have led to methods that now enable researchers to record the activity of tens to hundreds of neurons simultaneously, in vitro or in vivo, using a variety of techniques[1][2][3] in combination with sophisticated stimulation methods, such as optogenetics.[4][5] In addition, recordings can be performed in parallel from multiple brain areas, together with behavioral measures such as eye or limb movements.[6][7] Such recordings enable researchers to study network interactions and cross-area coupling, and to relate neuronal processing to the behavioral performance of the subject.[8][9][10] These approaches lead to increasingly complex experimental designs that are difficult to parameterize, e.g., due to the multidimensional characterization of natural stimuli[11] or high-dimensional movement parameters for almost freely behaving subjects.[12] It is a serious challenge for researchers to keep track of the overwhelming amount of metadata generated at each experimental step and to precisely extract all the information relevant for data analysis and the interpretation of results. Various aspects need to be considered, such as the parameterization of the experimental task, the filter settings and sampling rates of the setup, the quality of the recorded data, broken electrodes, preprocessing steps (e.g., spike sorting), or the condition of the subject. Nevertheless, organizing these metadata is of utmost importance for conducting research in a reproducible manner, i.e., for being able to faithfully reproduce the experimental procedures and subsequent analysis steps.[13][14][15] Moreover, detailed knowledge of the complete recording and analysis processes is crucial for the correct interpretation of results, and is a minimal requirement for researchers to verify published results and build their own research on previous findings.

To achieve reproducibility, experimenters have typically developed their own lab procedures and practices for performing and documenting experiments. Within the lab, crucial information about the experiment is often transmitted by personal communication, through handwritten laboratory notebooks, or implicitly through trained experimental procedures. However, at least when data are shared across labs, essential information is often lost in the exchange.[16][17] Moreover, if collaborating groups have different scientific backgrounds, for example experimenters and theoreticians, implicit domain-specific knowledge is often not communicated, or is communicated ambiguously, which leads to misunderstandings. To avoid such scenarios, the general principle should be to keep as much information about an experiment as possible from the outset, even if the information seems trivial or irrelevant at the time. Furthermore, one should annotate the data with these metadata in a clear and concise fashion.

In order to provide metadata in an organized, easily accessible, and machine-readable way, Grewe et al.[18] introduced odML (open metadata Markup Language) as a simple file format, in analogy to SBML in systems biology[19] or NeuroML in neuroscientific simulation studies.[20][21] What has been lacking to date, however, is a detailed investigation of how to incorporate metadata management into the daily lab routine in terms of (i) organizing the metadata in a comprehensive collection, (ii) practically gathering and entering the metadata, and (iii) profiting from the resulting comprehensive metadata collection when analyzing the data. Here we address these points, both conceptually and practically, in the context of a complex behavioral experiment that involves neuronal recordings from a large number of electrodes, yielding massively parallel spike and local field potential (LFP) data.[22] To illustrate how to organize a comprehensive metadata collection (i), we introduce the concept of metadata in the next section and demonstrate the rich diversity of metadata that arise in the example experiment. To show why the effort of creating a comprehensive metadata collection is time well spent, we then describe five use cases that summarize where access to metadata becomes relevant when working with the data (iii). Afterwards, we provide detailed guidelines and assistance on how to create, structure, and hierarchically organize comprehensive metadata collections (i and ii). Complementing these guidelines, the supplementary material provides a thorough practical introduction on how to embed a metadata management tool, such as the odML library, into the experimental and analysis workflow. Finally, we critically weigh the importance of proper metadata handling against its difficulties and derive future challenges.
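The core idea behind odML is to store metadata as key-value properties grouped into hierarchically nested sections. The following Python sketch mimics that structure with the standard library only and serializes it to XML. It is deliberately not the odML library: the classes `Section` and `Property`, their attributes, and the XML layout are simplified assumptions, and the real odML file format and Python API differ in detail. The values used (species, monkey identifiers, grip types, force levels, and the one-second cue delay) are taken from the example experiment described in the next section.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field


@dataclass
class Property:
    """A single metadata entry: a name, one or more values, and an optional unit."""
    name: str
    values: list
    unit: str = ""


@dataclass
class Section:
    """A named, typed group of properties that may contain nested subsections."""
    name: str
    type: str
    properties: list[Property] = field(default_factory=list)
    sections: list["Section"] = field(default_factory=list)

    def to_xml(self) -> ET.Element:
        elem = ET.Element("section", name=self.name, type=self.type)
        for prop in self.properties:
            p = ET.SubElement(elem, "property", name=prop.name)
            if prop.unit:
                p.set("unit", prop.unit)
            for value in prop.values:
                ET.SubElement(p, "value").text = str(value)
        for sub in self.sections:
            elem.append(sub.to_xml())  # XML nesting mirrors the section hierarchy
        return elem


subject = Section("Subject", "subject", properties=[
    Property("Species", ["Macaca mulatta"]),
    Property("Identifiers", ["L", "T", "N"]),
])
task = Section("Task", "task", properties=[
    Property("GripTypes", ["SG", "PG"]),
    Property("ForceLevels", ["HF", "LF"]),
    Property("CueDelay", [1], unit="s"),
])
root = Section("Experiment", "experiment", sections=[subject, task])

xml_text = ET.tostring(root.to_xml(), encoding="unicode")
print(xml_text)
```

Because every value sits at a fixed place in the hierarchy, an analysis script can look up, for example, the grip types by their section and property names instead of parsing free-text notes.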

Organizing metadata in neurophysiology

Metadata are generally defined as data describing data.[23][24] More specifically, metadata are information that describe the conditions under which a certain dataset has been recorded.[18] Ideally, all metadata would be machine-readable and available at a single location that is linked to the corresponding recorded dataset. That such central, comprehensive metadata collections are not yet common practice is by no means a sign of negligence on the part of the scientists; rather, in the absence of conceptual guidelines and software support, such collections are extremely difficult to achieve given the high complexity of the task. The mere fact that an electrophysiological setup is composed of several hardware and software components, often from different vendors, imposes the need to handle multiple files of different formats. Some files may even contain metadata that are neither machine-readable nor machine-interpretable. Furthermore, performing an experiment requires the full attention of the experimenters, which limits the amount of metadata that can be captured manually while the experiment is running. Metadata that arise unexpectedly during an experiment, e.g., the cause of a sudden noise artifact, are commonly documented as handwritten notes in the laboratory notebook. In fact, handwritten notes are often unavoidable, because the legal regulations of some countries, e.g., France, require experiments to be documented in a handwritten laboratory notebook.

To present the concept of metadata management in a practical context, we introduce in the following a selected electrophysiological experiment. For clarity, a graphical summary of the behavioral task and the recording setup is provided in Figure 1, and task-related abbreviations (e.g., for behavioral conditions and events) are listed in Table 1. First described in Riehle et al.[22] and Milekovic et al.[25], the experiment employs high-density multi-electrode recordings to study the modulation of spiking and LFP activity in the motor/premotor cortex of monkeys performing an instructed delayed reach-to-grasp task. In total, three monkeys (Macaca mulatta; two females, L and T; one male, N) were trained to grasp an object using one of two grip types (side grip, SG, or precision grip, PG) and to pull it against one of two possible loads requiring either a high (HF) or low (LF) pulling force (cf. Figure 1). In each trial, instructions for the requested behavior were provided to the monkeys through two consecutive visual cues (C and GO), which were separated by a one-second delay and generated by the illumination of specific combinations of five LEDs positioned above the object (cf. Figure 1). The complexity of this study makes it particularly suitable for exposing the difficulty of collecting, organizing, and storing metadata. Moreover, the metadata were first organized according to the laboratory-internal procedures and practices for documentation, while their organization into a comprehensive machine-readable format was done a posteriori, after the experiment was completed. To work with the data and metadata of this experiment, one has to handle on average 300 recording sessions per monkey, with data distributed over three files per session, and metadata distributed over five files per implant, at least 10 files per recording, and one file of general information on the experiment (cf. Table 2). This dispersion across many files added further complexity to the reorganization of the various metadata sources.
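The scale of the bookkeeping problem follows from simple arithmetic on the numbers quoted above. The hypothetical helper below estimates the number of files per monkey. It assumes one implant per monkey and reads "at least 10 files per recording" as a per-session minimum; neither assumption is stated in the text, so the metadata count is only a rough lower bound.

```python
def estimate_file_counts(sessions: int,
                         implants: int = 1,
                         data_files_per_session: int = 3,
                         metadata_files_per_implant: int = 5,
                         min_metadata_files_per_session: int = 10,
                         general_files: int = 1) -> tuple[int, int]:
    """Return (data files, minimum number of metadata files).

    Defaults follow the figures given in the text; the default of one
    implant and the per-session reading of the metadata minimum are
    assumptions.
    """
    data_files = sessions * data_files_per_session
    metadata_files = (implants * metadata_files_per_implant
                      + sessions * min_metadata_files_per_session
                      + general_files)
    return data_files, metadata_files


# About 300 sessions per monkey on average:
print(estimate_file_counts(300))  # prints (900, 3006)
```

When summing over several monkeys, the general-information file should be counted only once, since the text describes a single such file for the whole experiment.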


To give a more concrete impression of the painstaking detail that needs to be considered when planning and organizing a comprehensive metadata collection, we provide an exhaustive description of the experiment in the supplementary material. To illustrate the complexity of metadata management in this example, Figure 1 outlines the different components of the experimental setup, the signal flow, the task, and the trial scheme. In addition, Table 2 lists and describes the heterogeneous pieces of metadata and the files that contain them in the absence of a comprehensive metadata collection. All metadata source files are labeled by numbers and appear in Figure 1 wherever they were generated. If a label appears multiple times, the corresponding file was iteratively enriched with information obtained from the respective components of the setup.
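Iterative enrichment of this kind, where numbered sources each contribute part of the metadata, can be sketched as a merge that records which source supplied each entry. The function `merge_sources`, the source labels, and the keys below are hypothetical. Raising an error on conflicting values, rather than silently letting a later file overwrite an earlier one, is one reasonable policy among several.

```python
from collections import defaultdict
from typing import Any, Iterable


def merge_sources(sources: Iterable[tuple[int, dict[str, Any]]]
                  ) -> tuple[dict[str, Any], dict[str, list[int]]]:
    """Merge (label, entries) pairs in reading order.

    Returns the merged metadata and, for each key, the labels of all
    sources that supplied it. Conflicting values raise ValueError.
    """
    merged: dict[str, Any] = {}
    provenance: dict[str, list[int]] = defaultdict(list)
    for label, entries in sources:
        for key, value in entries.items():
            if key in merged and merged[key] != value:
                raise ValueError(
                    f"source {label} sets {key!r} to {value!r}, "
                    f"but it is already {merged[key]!r}"
                )
            merged[key] = value
            provenance[key].append(label)
    return merged, dict(provenance)


# Hypothetical sources: source 2 confirms one entry and adds another.
merged, provenance = merge_sources([
    (1, {"Subject/Species": "Macaca mulatta"}),
    (2, {"Subject/Species": "Macaca mulatta", "Task/CueDelay_s": 1}),
])
print(provenance)  # prints {'Subject/Species': [1, 2], 'Task/CueDelay_s': [2]}
```

Keeping the provenance alongside the merged values means that a questionable entry can be traced back to the numbered source file, and thus to the setup component, that produced it.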