Difference between revisions of "Journal:Recommended versus certified repositories: Mind the gap"


|website = [https://datascience.codata.org/article/10.5334/dsj-2017-042/ https://datascience.codata.org/article/10.5334/dsj-2017-042/]

|download = [https://datascience.codata.org/articles/10.5334/dsj-2017-042/galley/710/download/ https://datascience.codata.org/articles/10.5334/dsj-2017-042/galley/710/download/] (PDF)

}}

==Abstract==


Data sharing and data management are topics that are becoming increasingly important. More information is appearing about their benefits, such as increased citation rates for research papers with associated shared datasets.<ref name="PiwowarDataReuse13">{{cite journal |title=Data reuse and the open data citation advantage |journal=PeerJ |author=Piwowar, H.A.; Vision, T.J. |volume=1 |pages=e175 |year=2013 |doi=10.7717/peerj.175 |pmid=24109559 |pmc=PMC3792178}}</ref><ref name="PiwowarSharing07">{{cite journal |title=Sharing detailed research data is associated with increased citation rate |journal=PLoS One |author=Piwowar, H.A.; Day, R.S.; Fridsma, D.B. |volume=2 |issue=3 |pages=e308 |year=2007 |doi=10.1371/journal.pone.0000308 |pmid=17375194 |pmc=PMC1817752}}</ref> A growing number of funding bodies such as the NIH and the Wellcome Trust<ref name="NIHGrants15">{{cite web |url=https://grants.nih.gov/grants/policy/nihgps/nihgps.pdf |format=PDF |title=NIH Grants Policy Statement |publisher=National Institutes of Health |date=2015 |accessdate=27 January 2017}}</ref><ref name="WellcomePolicy">{{cite web |url=https://wellcome.ac.uk/funding/managing-grant/policy-data-software-materials-management-and-sharing |title=Policy on data, software and materials management and sharing |publisher=Wellcome Trust |accessdate=27 January 2017}}</ref>, as well as several journals<ref name="BorgmanTheConun12">{{cite journal |title=The conundrum of sharing research data |journal=Journal of the American Society for Information Science and Technology |author=Borgman, C.L. |volume=63 |issue=6 |pages=1059-1078 |year=2012 |doi=10.1002/asi.22634}}</ref>, have installed policies that require research data to be shared.<ref name="MayernikPeer14">{{cite journal |title=Peer Review of Datasets: When, Why, and How |journal=Bulletin of the American Meteorological Society |author=Mayernik, M.S.; Callaghan, S.; Leigh, R. et al. |volume=96 |issue=2 |pages=191–201 |year=2014 |doi=10.1175/BAMS-D-13-00083.1}}</ref> To be able to share data, both now and in the future, datasets not only need to be preserved but also need to be comprehensible and usable for others. To ensure these qualities, research data needs to be managed<ref name="DobratzTheUse10">{{cite journal |title=The Use of Quality Management Standards in Trustworthy Digital Archives |journal=International Journal of Digital Curation |author=Dobratz, S.; Rödig, P.; Borghoff, U.M. et al. |volume=5 |issue=1 |pages=46–63 |year=2010 |doi=10.2218/ijdc.v5i1.143}}</ref>, and data repositories can play a role in maintaining the data in a usable structure.<ref name="AssanteAreSci16">{{cite journal |title=Are Scientific Data Repositories Coping with Research Data Publishing? |journal=Data Science Journal |author=Assante, M.; Candela, L.; Castelli, D. et al. |volume=15 |issue=6 |pages=1–24 |year=2016 |doi=10.5334/dsj-2016-006}}</ref> However, using a data repository does not guarantee that the data is usable, since not every repository uses the same procedures and quality metrics, such as applying proper metadata tags.<ref name="MersonAvoid16">{{cite journal |title=Avoiding Data Dumpsters--Toward Equitable and Useful Data Sharing |journal=New England Journal of Medicine |author=Merson, L.; Gaye, O.; Guerin, P.J. |volume=374 |issue=25 |pages=2414-5 |year=2016 |doi=10.1056/NEJMp1605148 |pmid=27168351}}</ref> As many repositories have not yet adopted generally accepted standards, it can be difficult for researchers to choose the right repository for their dataset.<ref name="DobratzTheUse10" />

Several organizations, including funding agencies, academic publishers, and data organizations, provide researchers with lists of supported or recommended repositories, e.g., BioSharing.<ref name="McQuiltonBioSharing16">{{cite journal |title=BioSharing: Curated and crowd-sourced metadata standards, databases and data policies in the life sciences |journal=Database |author=McQuilton, P.; Gonzalez-Beltran, A.; Rocca-Serra, P. et al. |volume=2016 |pages=baw075 |year=2016 |doi=10.1093/database/baw075 |pmid=27189610 |pmc=PMC4869797}}</ref> These lists vary in length, in the number and type of repositories they list, and in their selection criteria for recommendation. In addition, recommendations for data and data sharing are emerging, such as the [[Journal:The FAIR Guiding Principles for scientific data management and stewardship|FAIR Data Principles]], guidelines to establish a common ground for all data to be findable, accessible, interoperable, and reusable.<ref name="WilkinsonTheFAIR16">{{cite journal |title=The FAIR Guiding Principles for scientific data management and stewardship |journal=Scientific Data |author=Wilkinson, M.D.; Dumontier, M.; Aalbersberg, I.J. et al. |volume=3 |pages=160018 |year=2016 |doi=10.1038/sdata.2016.18 |pmid=26978244 |pmc=PMC4792175}}</ref> Some data repositories, such as the UK Data Service<ref name="UKDSTheFair16">{{cite web |url=https://www.ukdataservice.ac.uk/news-and-events/newsitem/?id=4615 |title=The 'FAIR' principles for scientific data management |publisher=UK Data Service |date=08 June 2016 |accessdate=28 October 2016}}</ref>, are beginning to incorporate the FAIR principles into their policies, as are several funders such as the EU Horizon 2020 program and the NIH.<ref name="ECGuide16">{{cite web |url=http://ec.europa.eu/research/participants/data/ref/h2020/grants_manual/hi/oa_pilot/h2020-hi-oa-data-mgt_en.pdf |format=PDF |title=Guidelines on FAIR Data Management in Horizon 2020 |publisher=European Commission |date=26 July 2016 |accessdate=27 October 2016}}</ref><ref name="NIHBigData17">{{cite web |url=https://commonfund.nih.gov/bd2k |title=Big Data to Knowledge |publisher=National Institutes of Health |date=2017}}</ref> Lists of recommended repositories and guidelines such as these can help researchers decide how and where to store and share their data.

Next to lists of recommended repositories, there are a number of schemes which specifically certify the quality of data repositories. One of the first of these certification schemes is the Data Seal of Approval (DSA), with an objective "to safeguard data, to ensure high quality and to guide reliable management of data for the future without requiring the implementation of new standards, regulations or high costs."<ref name="DSAAbout">{{cite web |url=https://www.datasealofapproval.org/en/information/about/ |title=Data Seal of Approval: About |publisher=DSA Board |accessdate=18 January 2017}}</ref> Building upon the DSA certification, but with more elaborate and detailed guidelines<ref name="DilloTen15">{{cite journal |title=Ten Years Back, Five Years Forward: The Data Seal of Approval |journal=International Journal of Digital Curation |author=Dillo, I.; de Leeuw, L. |volume=10 |issue=1 |pages=230–239 |year=2015 |doi=10.2218/ijdc.v10i1.363}}</ref>, are the Network of Expertise in Long-Term Storage of Digital Resources (NESTOR) and the ISO 16363 standard/Trusted Data Repository (TDR). DSA, NESTOR, and TDR form a three-step framework for data repository certification.<ref name="DilloTen15" /> The ICSU-WDS membership incorporates guidelines from DSA, NESTOR and Trustworthy Repositories Audit & Certification (TRAC), among others, for its data repository framework.<ref name="ICSUCert12">{{cite web |url=https://www.icsu-wds.org/files/wds-certification-summary-11-june-2012.pdf |format=PDF |title=Certification of WDS Members |publisher=ICSU World Data System |date=11 June 2012 |accessdate=28 October 2016}}</ref> Furthermore, the TRAC guidelines were used as a basis for the ISO 16363/TDR guidelines.<ref name="CCSDSRecomm11">{{cite web |url=https://public.ccsds.org/pubs/652x0m1.pdf |format=PDF |title=Audit and Certification of Trustworthy Digital Repositories |publisher=CCSDS |date=September 2011 |accessdate=28 October 2016}}</ref>

Given the multitude of recommendations and certification schemes, we set out to map the current landscape to compare criteria and analyze which repositories are recommended and certified by different parties. This paper is structured as follows: first, we investigate which repositories have been recommended and certified by different organizations. Next, we provide an analysis of the criteria used by organizations recommending repositories and the criteria used by certification schemes, and then derive a set of shared criteria for recommendation and certification. Lastly, we explore what this tells us about the overlap between recommendations and certifications.

==Methods==

===Lists of repositories===

====Recommended repositories====

To examine which repositories are being recommended, we looked at the recommendations of 17 different organizations, including academic publishers, funding agencies, and data organizations. These lists of recommended repositories include all the available recommendation lists currently found on the BioSharing (now "FAIRsharing") website under the Recommendations tab<ref name="FAIRsharingRecomm">{{cite web |url=https://fairsharing.org/recommendations/ |title=Recommendations |work=FAIRsharing.org |publisher=University of Oxford}}</ref> and those found in a web search by using the term “recommended data repositories.” These lists have been compiled by the American Geophysical Union, BBSRC<ref name="BBSRCResources">{{cite web |url=http://www.bbsrc.ac.uk/research/resources/ |title=Resources |publisher=BBSRC |accessdate=27 January 2017}}</ref>, BioSharing<ref name="FAIRsharingDatabases">{{cite web |url=https://fairsharing.org/databases/?q=&selected_facets=recommended:true |title=Databases |work=FAIRsharing.org |publisher=University of Oxford}}</ref>, COPDESS<ref name="COPDESSSearch">{{cite web |url=https://copdessdirectory.osf.io/search/ |title=Search for Repositories |publisher=COPDESS |accessdate=27 January 2017}}</ref>, DataMed<ref name="DataMedRepos">{{cite web |url=https://datamed.org/repository_list.php |title=Repository List |publisher=bioCADDIE |accessdate=27 January 2017}}</ref>, Elsevier<ref name="ElsevierSupp">{{cite web |url=https://www.elsevier.com/authors/author-services/research-data/data-base-linking/supported-data-repositories |title=Supported Data Repositories |publisher=Elsevier |accessdate=27 January 2017}}</ref>, EMBO Press<ref name="EMBOAuthor">{{cite web |url=http://msb.embopress.org/authorguide#datadeposition |title=Data Deposition |work=Author Guidelines |publisher=EMBO Press |accessdate=27 January 2017}}</ref>, F1000Research<ref name="F1000Data">{{cite web |url=https://f1000research.com/for-authors/data-guidelines |title=Data Guidelines 
|work=How to Publish |publisher=F1000 Research |accessdate=27 January 2017}}</ref>, GigaScience<ref name="GigsScienceEditor">{{cite web |url=https://academic.oup.com/gigascience/pages/editorial_policies_and_reporting_standards |title=Editorial Policies & Reporting Standards |publisher=Oxford University Press |accessdate=27 January 2017}}</ref>, NIH<ref name="NIHDataSharing">{{cite web |url=https://www.nlm.nih.gov/NIHbmic/nih_data_sharing_repositories.html |title=NIH Data Sharing Repositories |publisher=U.S. National Library of Medicine |accessdate=27 January 2017}}</ref>, PLOS<ref name="PLOSDataAvail">{{cite web |url=http://journals.plos.org/plosbiology/s/data-availability |title=Data Availability |publisher=PLOS |accessdate=27 January 2017}}</ref>, Scientific Data<ref name="SciDataRecommended">{{cite web |url=https://www.nature.com/sdata/policies/repositories |title=Recommended Data Repositories |work=Scientific Data |publisher=Macmillan Publishers Limited |accessdate=27 January 2017}}</ref>, Springer Nature/BioMed Central (both share the same list)<ref name="SpringerNatRecomm">{{cite web |url=http://www.springernature.com/gp/authors/research-data-policy/repositories/12327124?countryChanged=true |title=Recommended Repositories |work=Springer Nature |publisher=Springer-Verlag GmbH |accessdate=27 January 2017}}</ref>, Web of Science<ref name="WebofScienceMaster">{{cite web |url=http://wokinfo.com/cgi-bin/dci/search.cgi |title=Master Data Repository List |work=Web of Science |publisher=Clarivate Analytics |accessdate=27 January 2017}}</ref>, Wellcome Trust<ref name="WellcomeDataRepos">{{cite web |url=https://wellcome.ac.uk/funding/managing-grant/data-repositories-and-database-resources |title=Data repositories and database resources |publisher=Wellcome Trust |accessdate=27 January 2017}}</ref>, and Wiley. All lists, including links to the online lists, were compiled into one list to compare recommendations (http://dx.doi.org/10.17632/zx2kcyvvwm.1). 
Not all data repositories indexed by the Web of Science’s Data Citation Index (DCI) were included: because there is no publicly available list of all repositories indexed by the DCI, recommended repositories were retrieved through individual searches. The repositories indexed by Re3Data were not included in our list of recommended repositories, as Re3Data functions as “a global registry of research data repositories”<ref name="RE3DATAAbout">{{cite web |url=https://www.re3data.org/about |title=About |work=re3data.org |publisher=Karlsruhe Institute of Technology |accessdate=27 January 2017}}</ref> and thus does not recommend repositories. However, Re3Data was used to verify each repository’s status, persistent identifiers, and obtained certifications.

====Certified repositories====

For our analysis of data repository certification schemes, we examined five certification schemes: the DSA, ICSU-WDS, NESTOR, TRAC, and ISO 16363/TDR. These schemes were chosen because they are used for certification (DSA, ICSU-WDS, NESTOR and ISO 16363/TDR) or as a self-assessment check for repositories (TRAC). The DSA<ref name="DSAAss">{{cite web |url=https://www.datasealofapproval.org/en/assessment/ |title=Assessment |work=Data Seal of Approval |publisher=DSA Board |accessdate=20 February 2017}}</ref>, ICSU-WDS<ref name="ICSUMember">{{cite web |url=https://www.icsu-wds.org/community/membership/regular-members |title=Membership |publisher=ICSU World Data System |accessdate=20 February 2017}}</ref>, and NESTOR<ref name="NestorSeal">{{cite web |url=http://www.langzeitarchivierung.de/Subsites/nestor/EN/Siegel/siegel_node.html |title=Nestor Seal for Trustworthy Digital Archives |publisher=nestor-Geschäftsstelle |accessdate=20 February 2017}}</ref> provide lists of certified repositories on their respective websites. ISO 16363/TDR certification has not yet been awarded.<ref name="LarrimerAccred16">{{cite web |url=https://anab.qualtraxcloud.com/ShowDocument.aspx?ID=4446 |format=PDF |title=Accreditation Program for ISO 16363 Trustworthy Digital Repositories Management Systems |work=ANAB Heads Up |author=Larrimer, N. |date=20 January 2016 |accessdate=28 October 2016}}</ref> We consulted the websites of the DSA, ICSU-WDS, NESTOR, TRAC, and ISO 16363/TDR certification schemes to see which repositories they certified. The results were compiled into one list (https://doi.org/10.17632/zx2kcyvvwm.1).

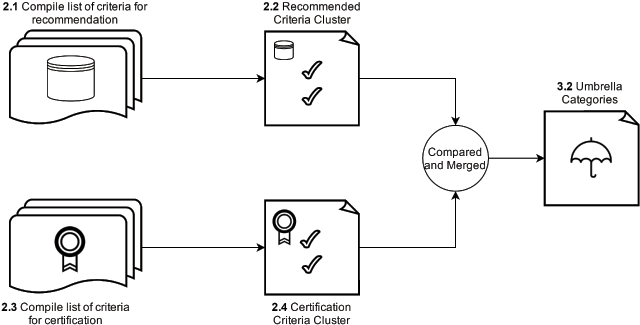

After composing the list of recommended repositories, we investigated which criteria are used to determine a recommendation or certification, and whether overlap exists both between recommended and certified repositories and between the criteria used (Figure 1).

* 2.1 Compiled list of criteria for recommendation
* 2.2 Clustered criteria into the “Recommended Criteria Cluster”
* 2.3 Compiled list of criteria for certification
* 2.4 Clustered criteria into the “Certification Criteria Cluster”
* 3.2 Compared and merged steps 2.2 and 2.4 to create the umbrella categories

[[File:Fig1 Husen DataSciJourn2017 16-1.png|640px]]

{{clear}}

{|
| STYLE="vertical-align:top;"|
{| border="0" cellpadding="5" cellspacing="0" width="640px"
|-
| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 1.''' Flowchart of the methodology used to create the umbrella categories</blockquote>
|-
|}
|}

These steps will be discussed in turn.

===Criteria used for recommendation and certification===

====Criteria for recommendation====

To understand the motivation behind specific recommendations, we looked at the organizations’ selection criteria for their lists of recommended repositories. Four of the 17 organizations published their selection criteria online alongside their lists: bioCADDIE, F1000Research, Scientific Data/Springer Nature (SD/SN), and Web of Science (WoS). The Research Data Alliance’s (RDA)<ref name="RDAReposBundle">{{cite web |url=https://www.rd-alliance.org/group/data-fabric-ig/wiki/repository-bundle.html |title=Repository Bundle |publisher=Research Data Alliance |accessdate=27 January 2017}}</ref> criteria for recommended repositories were also included in this analysis. Although the RDA does not maintain a list of recommended repositories, we included their criteria to balance the number of organizations that recommend repositories against the number of organizations that provide criteria for certification. The criteria of the five organizations were then compiled into one list (https://doi.org/10.17632/zx2kcyvvwm.1).

====Recommended Criteria Cluster====

We categorized the criteria into 15 subheadings: Recognition, Mission, Transparency, Certification, Interface, Legal, Access, Structure, Retrievability, Preservation/Persistence, Curation, Persistent Identifier, Citability, Language, and Diversity of Data. These subheadings were derived from recurring and shared subjects throughout the different criteria lists. We then filtered out repetitions or criteria unique to one organization, namely Language and Diversity of Data, to create the “Recommended Criteria Cluster.”
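
The filtering step described above can be sketched in code. The following Python snippet is only a minimal illustration and not the authors' procedure: it assumes each criterion has already been tagged by hand with a subheading, and the <code>tagged_criteria</code> pairs, the function name <code>shared_subheadings</code>, and the assignment of subheadings to particular organizations are all hypothetical.

```python
from collections import defaultdict

# Hypothetical (organization, subheading) pairs; the real tagging was done
# manually by the authors and is not reproduced here.
tagged_criteria = [
    ("F1000Research", "Access"),
    ("Web of Science", "Access"),
    ("Web of Science", "Language"),
    ("RDA", "Curation"),
    ("bioCADDIE", "Curation"),
]


def shared_subheadings(pairs, min_orgs=2):
    """Return subheadings used by at least `min_orgs` different organizations."""
    orgs_by_heading = defaultdict(set)
    for org, heading in pairs:
        orgs_by_heading[heading].add(org)
    return sorted(h for h, orgs in orgs_by_heading.items() if len(orgs) >= min_orgs)


# "Language" is dropped because only one (hypothetical) organization uses it.
cluster = shared_subheadings(tagged_criteria)
```

Collecting organizations in a set per subheading means that a criterion repeated by the same organization is counted once, which also covers the removal of repetitions.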

====Criteria for certification====

We consulted relevant websites to obtain the criteria used by the DSA, ICSU-WDS, NESTOR, TRAC and ISO 16363/TDR certification schemes and compiled a list of all certification criteria of the five schemes. In the case of the DSA<ref name="DSAReqs">{{cite web |url=https://www.datasealofapproval.org/en/information/requirements/ |title=Requirements |work=Data Seal of Approval |publisher=DSA Board |accessdate=07 April 2017}}</ref>, ICSU-WDS<ref name="ICSUCert12" />, and NESTOR<ref name="NESTORExplan13">{{cite web |url=https://d-nb.info/1047613859/34 |title=Explanatory notes on the nestor Seal for Trustworthy Digital Archives |publisher=nestor Certification Working Group |date=July 2013 |accessdate=07 April 2017}}</ref>, these were found through their respective websites. The criteria for TRAC were found through the website of the Center for Research Libraries (CRL)<ref name="OCLCCRLTrust07">{{cite web |url=http://www.crl.edu/sites/default/files/d6/attachments/pages/trac_0.pdf |format=PDF |title=Trustworthy Repositories Audit & Certification: Criteria and Checklist |author=OCLC and CRL |publisher=Center for Research Libraries |date=February 2007 |accessdate=07 April 2017}}</ref>, and the criteria for the ISO 16363/TDR were found through the Primary Trustworthy Digital Repository Authorisation Body, on the website of the Consultative Committee for Space Data Systems (CCSDS).<ref name="CCSDSRecomm11" />

====Certification Criteria Cluster====

The criteria found were categorized into 14 subheadings: Recognition, Mission, Transparency, Certification, Interface, Legal, Access, Indexation, Structure, Retrievability, Preservation/Persistence, Curation, Persistent Identifier, and Citability. These subheadings were derived from recurring and shared subjects throughout the different lists. Criteria that did not match any of the 14 subheadings, due to being too specific for one scheme, were categorized under a “miscellaneous” subheading. These criteria were then reorganized and filtered to remove repetitions and criteria unique to one certification scheme, into 11 reworded categories: Community, Mission, Providence, Organization, Technical Structure, Legal and Contractual Compliance, Accessibility, Data Quality, Retrievability, Responsiveness, and Preservation. We named this list the “Certification Criteria Cluster.”

===Overlap between recommended and certified repositories===

====Recommended versus certified repositories====

To see whether there was overlap between the lists of recommended and certified repositories, we gathered the lists of repositories issued by the certifying organizations and compiled these results into one list (available here: https://doi.org/10.17632/zx2kcyvvwm.1). We then calculated the number of times a repository was recommended by the different organizations as well as the percentage of recommended repositories with and without certification.
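
The two calculations described above can be illustrated with a short Python sketch. It is a hedged example rather than the authors' actual workflow: the organization names, the small repository sets, and the function names <code>recommendation_counts</code> and <code>certified_share</code> are assumptions made for illustration, and the real lists are those in the linked dataset.

```python
from collections import Counter

# Hypothetical recommendation lists: organization -> repositories it recommends.
recommendations = {
    "Org A": {"ArrayExpress", "PANGAEA", "Dryad"},
    "Org B": {"ArrayExpress", "PANGAEA"},
    "Org C": {"ArrayExpress", "ICPSR"},
}

# Hypothetical certification lists: scheme -> repositories it has certified.
certifications = {
    "DSA": {"ICPSR"},
    "ICSU-WDS": {"PANGAEA", "ICPSR"},
}


def recommendation_counts(recs):
    """Count how many organizations recommend each repository."""
    return Counter(repo for repos in recs.values() for repo in repos)


def certified_share(recs, certs):
    """Percentage of recommended repositories that hold any certification."""
    recommended = set().union(*recs.values())
    certified = set().union(*certs.values())
    return 100 * len(recommended & certified) / len(recommended)


counts = recommendation_counts(recommendations)
share = certified_share(recommendations, certifications)
```

Because each organization's list is a set, a repository is counted at most once per organization, and the share without certification is simply <code>100 - share</code>.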

====Criteria of recommendation and certification====

We compared the Recommended Criteria Cluster and the Certification Criteria Cluster by looking at commonalities and recurrences between the two sets of broader headings and their constituents. We matched headings such as Community together with Recognition, Access with Accessibility, and Technical Structure with Interface to derive a higher-order cluster of categories, which we call “Umbrella Categories.” This was done to create broader terms that are relevant to both recommendation and certification criteria, to further ease the process of qualifying repositories. In the process of matching terms, those that were identical were left as is, e.g., Mission and Retrievability.
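
The matching of headings can be pictured as a lookup table. The Python sketch below covers only the pairings named in the paragraph above, with umbrella labels written as they appear in Table 1; the data structure, the cluster keys <code>"recommended"</code> and <code>"certification"</code>, and the function name <code>build_lookup</code> are illustrative assumptions, not part of the original method.

```python
# Umbrella label -> (heading in the Recommended Criteria Cluster,
#                    heading in the Certification Criteria Cluster).
# Only the pairings named in the text are listed here.
UMBRELLA = {
    "Mission": ("Mission", "Mission"),
    "Community/Recognition": ("Recognition", "Community"),
    "Access/Accessibility": ("Access", "Accessibility"),
    "Technical Structure/Interface": ("Interface", "Technical Structure"),
    "Retrievability": ("Retrievability", "Retrievability"),
}


def build_lookup(umbrella):
    """Map (cluster, heading) pairs to their shared umbrella category."""
    lookup = {}
    for label, (recommended, certification) in umbrella.items():
        lookup[("recommended", recommended)] = label
        lookup[("certification", certification)] = label
    return lookup


lookup = build_lookup(UMBRELLA)
# Headings from either cluster resolve to the same umbrella category.
same = lookup[("recommended", "Recognition")] == lookup[("certification", "Community")]
```

Keeping the cluster name in the key avoids collisions if the same word were ever used with different meanings in the two clusters.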

====Repositories by discipline====

Next, we classified every recommended repository into one of five disciplines to analyze whether a relationship exists between certification, recommendation, and discipline. The disciplines we identified (General/Interdisciplinary, Health and Medicine, Life Sciences, Physical Sciences, and Social Sciences and Economics) were based on the focus disciplines provided on the websites of Scientific Data/Springer Nature, PLOS, Elsevier, and Web of Science, as these organizations provided comparable lists of disciplines.

==Results==

===Lists of repositories===

The lists of recommended repositories of all 17 organizations were compiled into one list together with the lists of certified repositories (https://doi.org/10.17632/zx2kcyvvwm.1) to allow for further analysis.

===Criteria used for recommendation and certification===

Based on the list of criteria that were used by the different organizations to recommend repositories, criteria were reorganized into 15 subheadings. After initial creation of the subheadings, we found some repetitions and organization-specific criteria, so we refined the list to 13 subheadings. The resulting list is the “Recommended Criteria Cluster” (Supplementary Table 1).

Similarly, based on the list of criteria of certification schemes, we were able to create 14 subheadings. These were re-examined and after refining, 11 subheadings remained. These resulting 11 subheadings and their criteria form the “Certification Criteria Cluster” (Supplementary Table 2).

===Overlap between recommendation and certification===

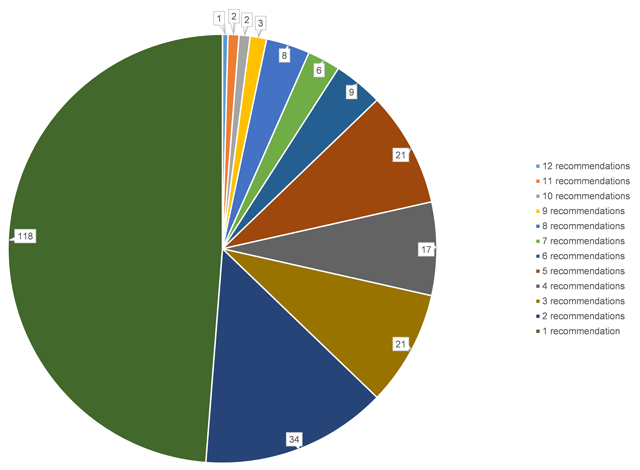

In total, we found 242 repositories that were recommended by publishers, funding agencies, and/or community organizations, and the distribution of recommendations is depicted in Figure 2. A majority (88) were only recommended by a single organization; ArrayExpress was mentioned most frequently, being recommended by 12 out of 17 organizations.

[[File:Fig2 Husen DataSciJourn2017 16-1.png|640px]]

{{clear}}

{|
| STYLE="vertical-align:top;"|
{| border="0" cellpadding="5" cellspacing="0" width="640px"
|-
| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 2.''' Pie chart showing the number of repositories that are recommended by 1 to 17 organizations. The highest number of recommendations received is 12.</blockquote>
|-
|}
|}

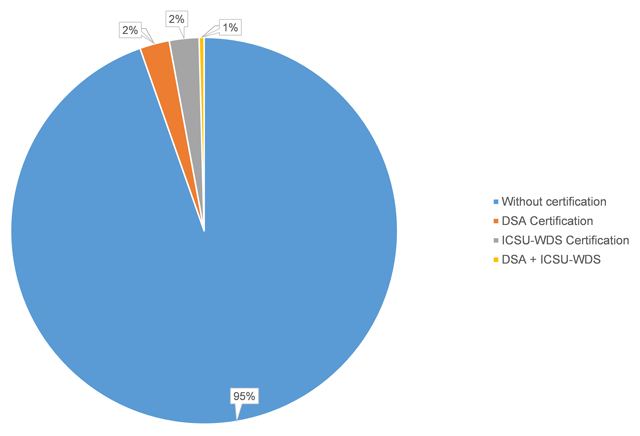

When we look at recommended repositories with certification, only 13 out of 242 recommended repositories had any kind of certification: six repositories were certified by the DSA; six by the ICSU-WDS; none by NESTOR, TRAC, or TDR (Figure 3). Only one repository was certified by both the DSA and ICSU-WDS: the Inter-University Consortium for Political and Social Research (ICPSR). Of the 50 repositories that were recommended most often, only PANGAEA was certified.

[[File:Fig3 Husen DataSciJourn2017 16-1.png|640px]]

{{clear}}

{|
| STYLE="vertical-align:top;"|
{| border="0" cellpadding="5" cellspacing="0" width="640px"
|-
| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Figure 3.''' Pie chart showing the percentage of recommended repositories that have obtained one of the following certifications: DSA, ICSU-WDS, and both DSA and ICSU-WDS.</blockquote>
|-
|}
|}
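
The certification counts reported above can be checked with simple arithmetic. The short Python sketch below assumes, as the total of 13 suggests, that the six DSA and six ICSU-WDS repositories are those certified by one scheme only, with ICPSR counted separately as the single repository holding both; the variable names are illustrative.

```python
# Counts taken from the text; the "only" reading is an assumption
# inferred from the reported total of 13 certified repositories.
total_recommended = 242
dsa_only = 6
wds_only = 6
both = 1  # ICPSR, certified by both the DSA and the ICSU-WDS

certified = dsa_only + wds_only + both
uncertified = total_recommended - certified

# Shares of all recommended repositories, rounded to one decimal place.
certified_pct = round(100 * certified / total_recommended, 1)
uncertified_pct = round(100 * uncertified / total_recommended, 1)
```

Under this reading, roughly 5.4% of the recommended repositories hold a certification and about 94.6% do not.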

To arrive at broadly applicable terms, we grouped the criteria and the broader categories of the Recommended Criteria Cluster and the Certification Criteria Cluster into seven recurring umbrella categories, listed in Table 1. | |||

{|
 | STYLE="vertical-align:top;"|
{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="70%"
 |-
  | style="background-color:white; padding-left:10px; padding-right:10px;" colspan="4"|'''Table 1.''' Table listing seven common terms, referred to as “umbrella categories,” based on a comparison between the Recommended Criteria Cluster and the Certification Criteria Cluster. The second column describes the shared meaning of the umbrella category, followed by differences in characteristics of recommended repository criteria and repository certification scheme criteria.
 |-
  ! style="background-color:#dddddd; padding-left:10px; padding-right:10px;"|Umbrella Categories
  ! style="background-color:#dddddd; padding-left:10px; padding-right:10px;"|Shared
  ! style="background-color:#dddddd; padding-left:10px; padding-right:10px;"|Recommended Repository Criteria
  ! style="background-color:#dddddd; padding-left:10px; padding-right:10px;"|Repository Certification Scheme Criteria
 |-
  | style="background-color:white; padding-left:10px; padding-right:10px;"|''Mission''
  | style="background-color:white; padding-left:10px; padding-right:10px;"|Explicit mission statement in providing long-term responsibility, persistence, and management of data(sets)
  | style="background-color:white; padding-left:10px; padding-right:10px;"|
  | style="background-color:white; padding-left:10px; padding-right:10px;"|
 |-
  | style="background-color:white; padding-left:10px; padding-right:10px;"|''Community/Recognition''
  | style="background-color:white; padding-left:10px; padding-right:10px;"|
  | style="background-color:white; padding-left:10px; padding-right:10px;"|Evidence of use by downloads or citations from an identifiable and active user community
  | style="background-color:white; padding-left:10px; padding-right:10px;"|Understand and meet the needs of the designated and defined target community
 |-
  | style="background-color:white; padding-left:10px; padding-right:10px;"|''Legal and Contractual Compliance''
  | style="background-color:white; padding-left:10px; padding-right:10px;"|Repository operates within a legal framework/Ensures compliance with legal regulations
  | style="background-color:white; padding-left:10px; padding-right:10px;"|When applicable, have contractual regulations governing the protection of human subjects
  | style="background-color:white; padding-left:10px; padding-right:10px;"|Contracts and agreements maintained with relevant parties on relevant subjects
 |-
  | style="background-color:white; padding-left:10px; padding-right:10px;"|''Access/Accessibility''
  | style="background-color:white; padding-left:10px; padding-right:10px;"|Public access to the scientific/repository designated community
  | style="background-color:white; padding-left:10px; padding-right:10px;"|Anonymous referees (including peer-reviewers) have access to the data before public release as indicated by policies
  | style="background-color:white; padding-left:10px; padding-right:10px;"|
 |-
  | style="background-color:white; padding-left:10px; padding-right:10px;"|''Technical Structure/Interface''
  | style="background-color:white; padding-left:10px; padding-right:10px;"|
  | style="background-color:white; padding-left:10px; padding-right:10px;"|The software system supports data organisation and searchability by both humans and computers. The interface is intuitive and mobile user-friendly
  | style="background-color:white; padding-left:10px; padding-right:10px;"|The technical (infra)structure is appropriate, protective, and secure
 |-
  | style="background-color:white; padding-left:10px; padding-right:10px;"|''Retrievability''
  | style="background-color:white; padding-left:10px; padding-right:10px;"|Data need to have enough metadata. All data receive a persistent identifier
  | style="background-color:white; padding-left:10px; padding-right:10px;"|
  | style="background-color:white; padding-left:10px; padding-right:10px;"|
 |-
  | style="background-color:white; padding-left:10px; padding-right:10px;"|''Preservation''
  | style="background-color:white; padding-left:10px; padding-right:10px;"|Long-term and formal preservation/succession plan for the data, even if the repository ceases to exist
  | style="background-color:white; padding-left:10px; padding-right:10px;"|If the data are retracted, the persistent identifier needs to be maintained
  | style="background-color:white; padding-left:10px; padding-right:10px;"|Preservation of data information properties and metadata
 |-
|}
|}

When we looked at the discipline that each recommended repository covers, we found that the most common focus discipline is Life Sciences with 115 repositories, followed by Physical Sciences with 80, Health and Medicine with 26, General/Interdisciplinary with 12, and Social Sciences and Economics with 9 (https://doi.org/10.17632/zx2kcyvvwm.1).

==Discussion==

In the analyses of criteria, we identified seven categories for criteria based on the shared aspects between the criteria for recommendation and the criteria for certification. These categories give an indication of the common requirements observed in this study. These suggest that a repository needs:

#To have an explicit mission statement;
#To have a defined user community;
#To operate within a legal framework;
#To be accessible to the designated user community;
#To have an adequate technical structure;
#To ensure data retrievability; and
#To ensure long-term preservation of data.

In comparing lists of recommended and certified repositories, we found a strong discrepancy: only 13 out of the 242 repositories that were recommended have obtained any form of certification. This gap between recommendation and certification can also be found in the fact that of the 50 most often recommended repositories, only one is certified. There are several possible explanations for the existence of a gap between recommended and certified repositories.

Firstly, repository certifications find their origins in specific disciplines/domains. For instance, the DSA has a background in social sciences and humanities; NESTOR is formed by a network of museums, archives, and libraries; and the ICSU-WDS mainly operates in the earth and space science domain.<ref name="DilloTen15" /> When looking at the different repository domains, we found that 50 of the most recommended repositories are linked to the natural sciences (life/physical/health sciences); exceptions in the top 50 are three interdisciplinary repositories. This depicts a contrast between repository domains and certifications’ target domains. Repositories operating within the life sciences domain might be less aware of certifications while, conversely, certifiers might be tailoring standards that are less focused on the requirements for life science repositories.

A second reason might be the dynamics in the field of data sharing; guidelines and practices are constantly evolving and being evaluated. While data repositories such as FlyBase have been active since the early '90s<ref name="GelbartFlyBase97">{{cite journal |title=FlyBase: A Drosophila database - The FlyBase consortium |journal=Nucleic Acids Research |author=Gelbart, W.M.; Crosby, M.; Matthews, B. et al. |volume=25 |issue=1 |pages=63–66 |year=1997 |pmid=9045212 |pmc=PMC146418}}</ref>, certification schemes tend to be much more recent. The DSA is one of the oldest certifications, created in 2005 and becoming an internationally applicable scheme in 2009.<ref name="DilloTen15" /> Changing data sharing dynamics are also reflected in the collaboration between certifying organizations and the merger of different guidelines. For instance, the partnership between the DSA and ICSU-WDS has brought together two certification schemes from diverse backgrounds and disciplines.<ref name="DilloTen15" /> Today, these schemes are still being developed and improved.

The only certifications that have been obtained by the repositories in our analysis are DSA and ICSU-WDS. NESTOR has certified two repositories at the time of writing, neither of which was included in the examined recommendation lists. Neither TRAC nor ISO 16363/TDR has officially certified any repositories. In the case of the TRAC guidelines, this is because they are "meant primarily for those responsible for auditing digital repositories […] seeking objective measurement of the trustworthiness of their repository,"<ref name="CCSDSRecomm11" /> and are intended as a self-assessment check for repositories. The ISO 16363/TDR is a follow-up based on TRAC: it is an audit standard supported by the Primary Trustworthy Digital Repository Authorisation Body. Next to being considered a detailed way to evaluate a digital repository together with DSA and NESTOR<ref name="DilloTen15" />, repositories can be ISO 16363/TDR accredited by the ANSI-ASQ National Accreditation Board, although so far this has not yet happened.<ref name="LarrimerAccred16" />

Another possible cause of the gap between certification and recommendation is that most organizations do not ask repositories for certification. Of the five organizations used to create our Recommended Criteria Cluster analysis, only the RDA requires repositories to have a certification. The RDA states that a “trustworthy” repository is one "that untertake [sic] regularly quality assessments successfully such as Data Seal of Approval/World Data Systems."<ref name="RDAReposBundle" /> Organizations not asking for certification might provide repositories with little incentive to work towards the requirements of certification schemes.

A limitation of this study is that we clustered the lists of all 17 organizations under the header of recommended repositories, but the way in which this recommendation happens differs. In some cases repositories are referred to by these lists as “supported” or “approved,” encouraged for their ability to make data reusable (NIH), meeting access, preservation, and stability requirements (Scientific Data/Springer Nature), having received funding or investment (BBSRC, Wellcome), or the list contains generally recognized repositories (PLOS). This can explain why, in certain cases, overlap between the lists is limited: different considerations might have played a role, depending on the intention of the organization. We note that there might be additional lists available that were not found using our search methodology.

==Conclusion==

The umbrella categories that we identified for data repositories indicate that publishers, community organizations and certification schemes largely agree on quality. Yet this is not reflected in the relationship between recommended and certified repositories. Out of the entire list, less than six percent of recommended repositories obtained some form of certification. Programs such as the Horizon 2020 program of the European Union tell their grantees that when choosing a repository "[p]reference should be given to certified repositories which support open access where possible,"<ref name="ECGuide16" /> increasing the focus on certified repositories. This focus could create an incentive for repositories, in particular uncertified recommended repositories, to become certified.

To ensure certification schemes are known to repositories, we suggest domains collaborate in creating or maintaining certification schemes. While we see the value in having different levels of certification, the recent DSA–WDS partnership provides a good example of a collaboration between domains. If repositories and certification schemes work together towards common standards, this can provide clarity and improve data management and quality. It would improve the data landscape if collectively we make sure that recommended repositories are certified, and certifications are obtained by repositories that are being recommended.

Further research into this area could involve looking at whether the criteria for repository certification have actually contributed to better data. It would be of interest to study if certification leads to more “FAIR” data, and whether data in a certified repository is more highly used and cited. Another possible topic of study is whether these criteria can be applied at the level of the dataset. This could also lead to the development of (semi-)automated tools to check whether datasets comply with the certification standards, before or during the process of submission of datasets to repositories. Our umbrella categories might help to develop the categories that datasets are judged against.

As a final conclusion, we suggest that the seven common umbrella categories we identified could form a common ground for the standardization of data repository requirements. These categories are multifaceted in meaning and could therefore support and improve data management, data quality, and transparency of the services provided by the data repository. Our results complement previous work by Dobratz ''et al.''<ref name="DobratzTheUse10" />, who conclude that general standards for repositories are complex, that standards are used as guidelines instead, and that there is a need for a specific standard. Our umbrella categories could provide the basis for this standard. Therefore, we suggest that researchers take these criteria into account when choosing a repository and suggest that recommending organizations consider converging on these standards.

==Additional files==

The additional files for this article can be found as follows:

Supplementary Table 1: Table “Recommended Criteria Cluster” showing 13 main criteria used by organizations recommending repositories, with separate individual characteristics for each. The occurrence of criteria in the information provided by organizations is represented by an “X.” DOI: https://doi.org/10.5334/dsj-2017-042.s1

Supplementary Table 2: Table “Certified Criteria Cluster” showing 11 main criteria used by organizations certifying repositories, with separate individual characteristics for each. The occurrence of criteria in the information provided by organizations is represented by an “X.” DOI: https://doi.org/10.5334/dsj-2017-042.s2

==Competing interests==

The authors are affiliated with one of the organizations keeping a list of supported repositories.

==Authors contribution==

Sean Edward Husen and Zoë G. de Wilde have contributed equally to this paper.

==References==

==Notes==

This presentation is faithful to the original, with only a few minor changes to presentation. In some cases important information was missing from the references, and that information was added. The original article lists references alphabetically, but this version, by design, lists them in order of appearance. The BioSharing website has since become the "FAIRsharing" website, and as such the original BioSharing links point to the new website. Several other website URLs have also changed, and the updated URL is used here. The original includes several inline citations that are not listed in the references section; they have been omitted here.

<!--Place all category tags here-->

[[Category:LIMSwiki journal articles (added in 2017)]]

[[Category:LIMSwiki journal articles (all)]]

[[Category:LIMSwiki journal articles on big data]]

[[Category:LIMSwiki journal articles on FAIR data principles]]

[[Category:LIMSwiki journal articles on informatics]]

Latest revision as of 16:38, 29 April 2024

| Full article title | Recommended versus certified repositories: Mind the gap |
|---|---|
| Journal | Data Science Journal |
| Author(s) | Husen, Sean Edward; de Wilde, Zoë G.; de Waard, Anita; Cousijn, Helena |
| Author affiliation(s) | Leiden University, Elsevier |
| Primary contact | Email: s dot e dot husen at hum dot leidenuniv dot nl |
| Year published | 2017 |
| Volume and issue | 16(1) |
| Page(s) | 42 |
| DOI | 10.5334/dsj-2017-042 |
| ISSN | 1683-1470 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://datascience.codata.org/article/10.5334/dsj-2017-042/ |
| Download | https://datascience.codata.org/articles/10.5334/dsj-2017-042/galley/710/download/ (PDF) |

Abstract

Researchers are increasingly required to make research data publicly available in data repositories. Although several organizations propose criteria to recommend and evaluate the quality of data repositories, there is no consensus of what constitutes a good data repository. In this paper, we investigate, first, which data repositories are recommended by various stakeholders (publishers, funders, and community organizations) and second, which repositories are certified by a number of organizations. We then compare these two lists of repositories, and the criteria for recommendation and certification. We find that criteria used by organizations recommending and certifying repositories are similar, although the certification criteria are generally more detailed. We distill the lists of criteria into seven main categories: “Mission,” “Community/Recognition,” “Legal and Contractual Compliance,” “Access/Accessibility,” “Technical Structure/Interface,” “Retrievability,” and “Preservation.” Although the criteria are similar, the lists of repositories that are recommended by the various agencies are very different. Out of all of the recommended repositories, less than six percent obtained certification. As certification is becoming more important, steps should be taken to decrease this gap between recommended and certified repositories, and ensure that certification standards become applicable, and applied, to the repositories which researchers are currently using.

Keywords: data repositories, data management, certification, data quality, data fitness

Introduction

Data sharing and data management are topics that are becoming increasingly important. More information is appearing about their benefits, such as increased citation rates for research papers with associated shared datasets.[1][2] A growing number of funding bodies such as the NIH and the Wellcome Trust[3][4], as well as several journals[5], have installed policies that require research data to be shared.[6] To be able to share data, both now and in the future, datasets not only need to be preserved but also need to be comprehensible and usable for others. To ensure these qualities, research data needs to be managed[7], and data repositories can play a role in maintaining the data in a usable structure.[8] However, using a data repository does not guarantee that the data is usable, since not every repository uses the same procedures and quality metrics, such as applying proper metadata tags.[9] As many repositories have not yet adopted generally accepted standards, it can be difficult for researchers to choose the right repository for their dataset.[7]

Several organizations, including funding agencies, academic publishers, and data organizations provide researchers with lists of supported or recommended repositories, e.g., BioSharing.[10] These lists vary in length, in the number and type of repositories they list, and in their selection criteria for recommendation. In addition, recommendations for data and data sharing are emerging, such as the FAIR Data Principles, guidelines to establish a common ground for all data to be findable, accessible, interoperable, and reusable.[11] Some data repositories are beginning to incorporate the FAIR principles into their policies, such as the UK Data Service[12] and several funders such as the EU Horizon 2020 program and the NIH.[13][14] Lists of recommended repositories and guidelines such as these can help researchers decide how and where to store and share their data.

Next to lists of recommended repositories, there are a number of schemes which specifically certify the quality of data repositories. One of the first of these certification schemes is the Data Seal of Approval (DSA), with an objective "to safeguard data, to ensure high quality and to guide reliable management of data for the future without requiring the implementation of new standards, regulations or high costs."[15] Building upon the DSA certification, but with more elaborate and detailed guidelines[16], are the Network of Expertise in Long-Term Storage of Digital Resources (NESTOR) and the ISO 16363 standard/Trusted Data Repository (TDR). DSA, NESTOR, and TDR form a three-step framework for data repository certification.[16] The ICSU-WDS membership incorporates guidelines from DSA, NESTOR and Trustworthy Repositories Audit & Certification (TRAC), among others, for its data repository framework.[17] Furthermore, the TRAC guidelines were used as a basis for the ISO 16363/TDR guidelines.[18]

Given the multitude of recommendations and certification schemes, we set out to map the current landscape to compare criteria and analyze which repositories are recommended and certified by different parties. This paper is structured as follows: first, we investigate which repositories have been recommended and certified by different organizations. Next, we provide an analysis of the criteria used by organizations recommending repositories and the criteria used by certification schemes, and then derive a set of shared criteria for recommendation and certification. Lastly, we explore what this tells us about the overlap between recommendations and certifications.

Methods

Lists of repositories

Recommended repositories

To examine which repositories are being recommended, we looked at the recommendations of 17 different organizations, including academic publishers, funding agencies, and data organizations. These lists of recommended repositories include all the available recommendation lists currently found on the BioSharing (now "FAIRsharing") website under the Recommendations tab[19] and those found in a web search by using the term “recommended data repositories.” These lists have been compiled by the American Geophysical Union, BBSRC[20], BioSharing[21], COPDESS[22], DataMed[23], Elsevier[24], EMBO Press[25], F1000Research[26], GigaScience[27], NIH[28], PLOS[29], Scientific Data[30], Springer Nature/BioMed Central (both share the same list)[31], Web of Science[32], Wellcome Trust[33], and Wiley. All lists, including links to the online lists, were compiled into one list to compare recommendations (http://dx.doi.org/10.17632/zx2kcyvvwm.1). Not all data repositories indexed by the Web of Science’s Data Citation Index (DCI) were included as there is no publicly available list with all repositories indexed by the DCI, so retrieval of recommended repositories was done through an individual search. The repositories indexed by Re3Data were not included in our list of recommended repositories as Re3data functions as “a global registry of research data repositories”[34] and thus does not recommend repositories. However, Re3Data was used to verify the repository’s status, persistent identifiers, and obtained certifications.

Certified repositories

For our analysis of data repository certification schemes, we examined five certification schemes. These were the DSA, ICSU-WDS, NESTOR, TRAC, and ISO 16363/TDR. These schemes were chosen due to being used for certification (DSA, ICSU-WDS, NESTOR and ISO 16363/TDR) or as a self-assessment check for repositories (TRAC). The DSA[35], ICSU-WDS[36], and NESTOR[37] provide lists of certified repositories on their respective websites. ISO 16363/TDR certification has not yet been awarded.[38] We consulted the websites of the DSA, ICSU-WDS, NESTOR, TRAC, and ISO 16363/TDR certification schemes to see which repositories they certified. The results were compiled into one list (https://doi.org/10.17632/zx2kcyvvwm.1).

After composing the list of recommended repositories, we investigated which criteria are being used to determine a recommendation or certification, and whether an overlap exists between recommended and certified repositories, and the criteria used (Figure 1).

- 2.1 Compiled list of criteria for recommendation

- 2.2 Clustered criteria into the “Recommended Criteria Cluster”

- 2.3 Compiled list of criteria for certification

- 2.4 Clustered criteria into the “Certification Criteria Cluster”

- 3.2 Compared and merged steps 2.2 and 2.4 to create the umbrella categories


These steps will be discussed in turn.

Criteria used for recommendation and certification

Criteria for recommendation

To understand the motivation behind specific recommendations, we looked at the organizations’ selection criteria for their lists of recommended repositories. Four of the 17 organizations supplied criteria for this online alongside their lists: BioCADDIE, F1000Research, Scientific Data/Springer Nature (SD/SN), and Web of Science (WoS). The Research Data Alliance’s (RDA)[39] criteria for recommended repositories were also included in this analysis. Although the RDA does not maintain a list of recommended repositories, we included their criteria to balance out the weight between the number of organizations that recommend repositories and the number of organizations that provide criteria for certification. The criteria of the five organizations were then compiled into one list (https://doi.org/10.17632/zx2kcyvvwm.1).

Recommended Criteria Cluster

We categorized the criteria into 15 subheadings: Recognition, Mission, Transparency, Certification, Interface, Legal, Access, Structure, Retrievability, Preservation/Persistence, Curation, Persistent Identifier, Citability, Language, and Diversity of Data. These subheadings were derived from recurring and shared subjects throughout the different criteria lists. We then filtered out repetitions or criteria unique to one organization, namely Language and Diversity of Data, to create the “Recommended Criteria Cluster.”

Criteria for certification

We consulted relevant websites to obtain the criteria used by the DSA, ICSU-WDS, NESTOR, TRAC and ISO 16363/TDR certification schemes and compiled a list of all certification criteria of the five schemes. In the case of the DSA[40], ICSU-WDS[17], and NESTOR[41], these were found through their respective websites. The criteria for TRAC were found through the website of the Center for Research Libraries (CRL)[42], and the criteria for the ISO 16363/TDR were found through the Primary Trustworthy Digital Repository Authorisation Body, on the website of the Consultative Committee for Space Systems.[18]

Certification Criteria Cluster

The criteria found were categorized into 14 subheadings: Recognition, Mission, Transparency, Certification, Interface, Legal, Access, Indexation, Structure, Retrievability, Preservation/Persistence, Curation, Persistent Identifier, and Citability. These subheadings were derived from recurring and shared subjects throughout the different lists. Criteria that did not match any of the 14 subheadings, due to being too specific for one scheme, were categorized under a “miscellaneous” subheading. These criteria were then reorganized and filtered to remove repetitions and criteria unique to one certification scheme, into 11 reworded categories: Community, Mission, Providence, Organization, Technical Structure, Legal and Contractual Compliance, Accessibility, Data Quality, Retrievability, Responsiveness, and Preservation. We named this list the “Certification Criteria Cluster.”

Overlap between recommended and certified repositories

Recommended versus certified repositories

To see whether there was overlap between the lists of recommended and certified repositories, we gathered the lists of repositories issued by the certifying organizations and compiled these results into one list (available here: https://doi.org/10.17632/zx2kcyvvwm.1). We then calculated the number of times a repository was recommended by the different organizations as well as the percentage of recommended repositories with and without certification.
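The two calculations described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' actual procedure: the function names, the organization labels, and the repository labels are hypothetical, and the toy input is not the study's data.

```python
from collections import Counter


def recommendation_counts(lists_by_org):
    """Count how many organizations recommend each repository."""
    counts = Counter()
    for repos in lists_by_org.values():
        # set() ensures a repository is counted once per organization,
        # even if it appears twice on the same list.
        counts.update(set(repos))
    return counts


def certified_share(recommended, certified):
    """Percentage of recommended repositories that also appear as certified."""
    recommended = set(recommended)
    if not recommended:
        return 0.0
    return 100.0 * len(recommended & set(certified)) / len(recommended)


# Hypothetical toy input (assumption for illustration only).
toy_lists = {
    "Org A": ["Repo 1", "Repo 2"],
    "Org B": ["Repo 1", "Repo 3"],
    "Org C": ["Repo 1"],
}
toy_certified = ["Repo 3", "Repo 9"]

counts = recommendation_counts(toy_lists)
share = certified_share(counts.keys(), toy_certified)
```

Matching on exact names is a simplification; in practice repository names would likely need normalizing before the lists can be compared.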

Criteria of recommendation and certification

We compared the Recommended Criteria Cluster and the Certification Criteria Cluster by looking at commonalities and recurrences between the two sets of broader headings and their constituents. We matched headings such as Community together with Recognition, Access with Accessibility, and Technical Structure with Interface to derive a higher-order cluster of categories, which we are calling “Umbrella Categories.” This was done to create broader terms that are relevant to both recommendation and certification criteria, to further ease the process of qualifying repositories. In the process of matching terms, those that were identical were left as is, e.g., Mission and Retrievability.
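As a rough illustration, the heading matches named in this paragraph can be written as a lookup table. The sketch below is an assumption-laden simplification: it encodes only the pairings the text states explicitly, the matching in the study was done by hand, and `NAMED_MATCHES` and `umbrella_for` are hypothetical names.

```python
# (recommended heading, certification heading) -> umbrella category.
# Only the pairings named in the text are listed; this is not the full mapping.
NAMED_MATCHES = {
    ("Recognition", "Community"): "Community/Recognition",
    ("Access", "Accessibility"): "Access/Accessibility",
    ("Interface", "Technical Structure"): "Technical Structure/Interface",
    ("Mission", "Mission"): "Mission",  # identical terms left as is
    ("Retrievability", "Retrievability"): "Retrievability",
}


def umbrella_for(recommended_heading, certification_heading):
    """Return the umbrella category for a heading pair, or None if not listed."""
    return NAMED_MATCHES.get((recommended_heading, certification_heading))
```

For example, `umbrella_for("Access", "Accessibility")` returns `"Access/Accessibility"`, while a pair that the text does not name returns `None`.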

Repositories by discipline

Next, we classified every recommended repository into one of five disciplines, to analyze if a relationship exists between certification, recommendation, and discipline. The disciplines we identified — General/Interdisciplinary, Health and Medicine, Life Sciences, Physical Sciences, and Social Sciences and Economics — were based on the focus disciplines provided on the websites of Scientific Data/Springer Nature, PLOS, Elsevier, and Web of Science, as these organizations provided comparable lists of disciplines.

Results

Lists of repositories

The lists of recommended repositories of all 17 organizations were compiled into one list together with the lists of certified repositories (https://doi.org/10.17632/zx2kcyvvwm.1) to allow for further analysis.

Criteria used for recommendation and certification

Based on the list of criteria that were used by the different organizations to recommend repositories, criteria were reorganized into 15 subheadings. After initial creation of the subheadings, we found some repetitions or scheme-specific criteria which we refined into 13 subheadings. The resulting list is the “Recommended Criteria Cluster” (Supplementary Table 1).

Similarly, based on the list of criteria of certification schemes, we were able to create 14 subheadings. These were re-examined and after refining, 11 subheadings remained. These resulting 11 subheadings and their criteria form the “Certification Criteria Cluster” (Supplementary Table 2).

Overlap between recommendation and certification

In total, we found 242 repositories that were recommended by publishers, funding agencies, and/or community organizations, and the distribution of recommendations is depicted in Figure 2. A majority (88) were only recommended by a single organization; ArrayExpress was mentioned most frequently, being recommended by 12 out of 17 organizations.


When we look at recommended repositories with certification, only 13 out of 242 recommended repositories had any kind of certification: six repositories were certified by the DSA; six by the ICSU-WDS; none by NESTOR, TRAC, or TDR (Figure 3). Only one repository was certified by both the DSA and ICSU-WDS: the Inter-University Consortium for Political and Social Research (ICPSR). Of the 50 repositories that were recommended most often, only PANGAEA was certified.


To arrive at broadly applicable terms, we grouped the criteria and the broader categories of the Recommended Criteria Cluster and the Certification Criteria Cluster into seven recurring umbrella categories, listed in Table 1.

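Table 1. Table listing seven common terms, referred to as “umbrella categories,” based on a comparison between the Recommended Criteria Cluster and the Certification Criteria Cluster. The second column describes the shared meaning of the umbrella category, followed by differences in characteristics of recommended repository criteria and repository certification scheme criteria.

| Umbrella Categories | Shared | Recommended Repository Criteria | Repository Certification Scheme Criteria |
|---|---|---|---|
| Mission | Explicit mission statement in providing long-term responsibility, persistence, and management of data(sets) | | |
| Community/Recognition | | Evidence of use by downloads or citations from an identifiable and active user community | Understand and meet the needs of the designated and defined target community |
| Legal and Contractual Compliance | Repository operates within a legal framework/Ensures compliance with legal regulations | When applicable, have contractual regulations governing the protection of human subjects | Contracts and agreements maintained with relevant parties on relevant subjects |
| Access/Accessibility | Public access to the scientific/repository designated community | Anonymous referees (including peer-reviewers) have access to the data before public release as indicated by policies | |
| Technical Structure/Interface | | The software system supports data organisation and searchability by both humans and computers. The interface is intuitive and mobile user-friendly | The technical (infra)structure is appropriate, protective, and secure |
| Retrievability | Data need to have enough metadata. All data receive a persistent identifier | | |
| Preservation | Long-term and formal preservation/succession plan for the data, even if the repository ceases to exist | If the data are retracted, the persistent identifier needs to be maintained | Preservation of data information properties and metadata |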

When we looked at the discipline that each recommended repository covers, we found that the most common focus discipline is Life Sciences with 115 repositories, followed by Physical Sciences with 80, Health and Medicine with 26, General/Interdisciplinary with 12, and Social Sciences and Economics with 9 (https://doi.org/10.17632/zx2kcyvvwm.1).
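A short arithmetic check, using only the figures reported in this section, shows that the five discipline counts add up to the 242 recommended repositories and that 13 certified repositories correspond to a little over five percent of that total. The variable names are illustrative assumptions.

```python
# Discipline counts as reported in the text.
discipline_counts = {
    "Life Sciences": 115,
    "Physical Sciences": 80,
    "Health and Medicine": 26,
    "General/Interdisciplinary": 12,
    "Social Sciences and Economics": 9,
}

total_recommended = sum(discipline_counts.values())

# Share of each discipline among all recommended repositories, in percent.
discipline_share = {
    name: 100.0 * count / total_recommended
    for name, count in discipline_counts.items()
}

# 13 of the recommended repositories were reported as certified.
certified_pct = 100.0 * 13 / total_recommended
```

Here `total_recommended` comes to 242 and `certified_pct` to roughly 5.4, which agrees with the "less than six percent" figure quoted in the abstract.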

Discussion

In the analyses of criteria, we identified seven categories for criteria based on the shared aspects between the criteria for recommendation and the criteria for certification. These categories give an indication of the common requirements observed in this study. These suggest that a repository needs:

- To have an explicit mission statement;

- To have a defined user community;

- To operate within a legal framework;

- To be accessible to the designated user community;

- To have an adequate technical structure;

- To ensure data retrievability; and

- To ensure long-term preservation of data.

In comparing lists of recommended and certified repositories, we found a strong discrepancy: only 13 out of the 242 repositories that were recommended have obtained any form of certification. This gap between recommendation and certification can also be found in the fact that of the 50 most often recommended repositories, only one is certified. There are several possible explanations for the existence of a gap between recommended and certified repositories.

Firstly, repository certifications find their origins in specific disciplines/domains. For instance, the DSA has a background in social sciences and humanities; NESTOR is formed by a network of museums, archives, and libraries; and the ICSU-WDS mainly operates in the earth and space science domain.[16] When looking at the different repository domains, we found that 50 of the most recommended repositories are linked to the natural sciences (life/physical/health sciences); exceptions in the top 50 are three interdisciplinary repositories. This depicts a contrast between repository domains and certifications’ target domains. Repositories operating within the life sciences domain might be less aware of certifications while, conversely, certifiers might be tailoring standards that are less focused on the requirements for life science repositories.

A second reason might be the dynamics in the field of data sharing; guidelines and practices are constantly evolving and being evaluated. While data repositories such as FlyBase have been active since the early '90s[43], certification schemes tend to be much more recent. The DSA is one of the oldest certifications, created in 2005 and becoming an internationally applicable scheme in 2009.[16] Changing data sharing dynamics are also reflected in the collaboration between certifying organizations and the merger of different guidelines. For instance, the partnership between the DSA and ICSU-WDS has brought together two certification schemes from diverse backgrounds and disciplines.[16] Today, these schemes are still being developed and improved.

The only certifications that have been obtained by the repositories in our analysis are DSA and ICSU-WDS. NESTOR has certified two repositories at the time of writing, neither of which was included in the examined recommendation lists. Neither TRAC nor ISO 16363/TDR has officially certified any repositories. In the case of the TRAC guidelines, this is because they are "meant primarily for those responsible for auditing digital repositories […] seeking objective measurement of the trustworthiness of their repository,"[18] and are intended as a self-assessment check for repositories. The ISO 16363/TDR is a follow-up based on TRAC: it is an audit standard supported by the Primary Trustworthy Digital Repository Authorisation Body. It is considered, together with DSA and NESTOR, a detailed way to evaluate a digital repository[16]; in addition, repositories can be ISO 16363/TDR accredited by the ANSI-ASQ National Accreditation Board, although this has not yet happened.[38]

Another possible cause of the gap between certification and recommendation is that most organizations do not ask repositories for certification. Of the five organizations used to create our Recommended Criteria Cluster analysis, only the RDA requires repositories to have a certification. The RDA states that a “trustworthy” repository is one "that untertake [sic] regularly quality assessments successfully such as Data Seal of Approval/World Data Systems."[39] Organizations that do not ask for certification might give repositories little incentive to work towards the requirements of certification schemes.

A limitation of this study is that we clustered the lists of all 17 organizations under the header of recommended repositories, although the nature of the recommendation differs between lists. In some cases repositories are referred to as “supported” or “approved”; in others they are encouraged for their ability to make data reusable (NIH), listed for meeting access, preservation, and stability requirements (Scientific Data/Springer Nature), listed for having received funding or investment (BBSRC, Wellcome), or listed as generally recognized repositories (PLOS). Because different considerations may have played a role depending on the intention of the organization, this can explain why the overlap between the lists is limited in certain cases. We note that there might be additional lists available that were not found using our search methodology.

Conclusion

The umbrella categories which we identified for data repositories indicate that publishers, community organizations and certification schemes largely agree on quality. Yet this is not reflected in the relationship between recommended and certified repositories. Out of the entire list, less than six percent of recommended repositories obtained some form of certification. Programs such as the Horizon 2020 program of the European Union tell their grantees that when choosing a repository "[p]reference should be given to certified repositories which support open access where possible,"[13] increasing the focus on certified repositories. This focus could create an incentive for repositories, in particular uncertified recommended repositories, to become certified.

To ensure certification schemes are known to repositories, we suggest domains collaborate in creating or maintaining certification schemes. While we see the value in having different levels of certification, the recent DSA – WDS partnership provides a good example of a collaboration between domains. If repositories and certification schemes work together towards common standards, this can provide clarity and improve data management and quality. It would improve the data landscape if collectively we make sure that recommended repositories are certified, and certifications are obtained by repositories that are being recommended.

Further research into this area could involve looking at whether the criteria for repository certification have actually contributed to better data. It would be of interest to study if certification leads to more “FAIR” data, and whether data in a certified repository is more highly used and cited. Another possible topic of study is whether these criteria can be applied at the level of the dataset. This could also lead to the development of (semi-)automated tools to check whether datasets comply with the certification standards, before or during the process of submission of datasets to repositories. Our umbrella categories might help to develop the categories that datasets are judged against.
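As a rough illustration of what a (semi-)automated check against the umbrella categories might look like, the sketch below reports which of the seven categories from the Discussion are not yet marked as satisfied. It is only a sketch under stated assumptions: the category labels are shortened paraphrases of the list above, and `unmet_categories` and the example assessment are hypothetical, not part of any existing certification tool.

```python
# Shortened labels for the seven umbrella categories listed in the Discussion.
UMBRELLA_CATEGORIES = (
    "explicit mission statement",
    "defined user community",
    "legal framework",
    "accessible to designated user community",
    "adequate technical structure",
    "data retrievability",
    "long-term preservation",
)


def unmet_categories(assessment):
    """Return the categories not marked True in `assessment`,
    in the order used in the article. Missing keys count as unmet."""
    return [c for c in UMBRELLA_CATEGORIES if not assessment.get(c, False)]


# Hypothetical assessment: every category satisfied except preservation.
example_assessment = {c: True for c in UMBRELLA_CATEGORIES}
example_assessment["long-term preservation"] = False

missing = unmet_categories(example_assessment)
print(missing)
```

A real tool would need evidence-based checks behind each flag; here the flags are simply supplied by hand, so the sketch covers only the reporting step.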

As a final conclusion, we suggest that the seven common umbrella categories we identified could form a common ground for the standardization of data repository requirements. These categories are multifaceted in meaning and could therefore support and improve data management, data quality, and transparency of the services provided by the data repository. Our results complement previous work by Dobratz et al.[7], in which the authors conclude that general standards for repositories are complex, that standards are used as guidelines instead, and that there is a need for a specific standard. Our umbrella categories could provide the basis for this standard. Therefore, we suggest that researchers take these criteria into account when choosing a repository and that recommending organizations consider converging on these standards.

Additional files

The additional files for this article can be found as follows:

Supplementary Table 1: Table “Recommended Criteria Cluster” showing 13 main criteria used by organizations recommending repositories, with separate individual characteristics for each. The occurrence of criteria in the information provided by organizations is represented by an “X.” DOI: https://doi.org/10.5334/dsj-2017-042.s1

Supplementary Table 2: Table “Certified Criteria Cluster” showing 11 main criteria used by organizations certifying repositories, with separate individual characteristics for each. The occurrence of criteria in the information provided by organizations is represented by an “X.” DOI: https://doi.org/10.5334/dsj-2017-042.s2

Competing interests

The authors are affiliated with one of the organizations keeping a list of supported repositories.

Authors' contributions

Sean Edward Husen and Zoë G. de Wilde have contributed equally to this paper.

References

- ↑ Piwowar, H.A.; Vision, T.J. (2013). "Data reuse and the open data citation advantage". PeerJ 1: e175. doi:10.7717/peerj.175. PMC 3792178. PMID 24109559. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3792178.

- ↑ Piwowar, H.A.; Day, R.S.; Fridsma, D.B. (2007). "Sharing detailed research data is associated with increased citation rate". PLoS One 2 (3): e308. doi:10.1371/journal.pone.0000308. PMC 1817752. PMID 17375194. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1817752.

- ↑ "NIH Grants Policy Statement" (PDF). National Institutes of Health. 2015. https://grants.nih.gov/grants/policy/nihgps/nihgps.pdf. Retrieved 27 January 2017.

- ↑ "Policy on data, software and materials management and sharing". Wellcome Trust. https://wellcome.ac.uk/funding/managing-grant/policy-data-software-materials-management-and-sharing. Retrieved 27 January 2017.

- ↑ Borgman, C.L. (2012). "The conundrum of sharing research data". Journal of the American Society for Information Science and Technology 63 (6): 1059–1078. doi:10.1002/asi.22634.

- ↑ Mayernik, M.S.; Callaghan, S.; Leigh, R. et al. (2014). "Peer Review of Datasets: When, Why, and How". Bulletin of the American Meteorological Society 96 (2): 191–201. doi:10.1175/BAMS-D-13-00083.1.

- ↑ 7.0 7.1 7.2 Dobratz, S.; Rödig, P.; Borghoff, U.M. et al. (2010). "The Use of Quality Management Standards in Trustworthy Digital Archives". International Journal of Digital Curation 5 (1): 46–63. doi:10.2218/ijdc.v5i1.143.

- ↑ Assante, M.; Candela, L.; Castelli, D. et al. (2016). "Are Scientific Data Repositories Coping with Research Data Publishing?". Data Science Journal 15 (6): 1–24. doi:10.5334/dsj-2016-006.

- ↑ Merson, L.; Gaye, O.; Guerin, P.J. (2016). "Avoiding Data Dumpsters: Toward Equitable and Useful Data Sharing". New England Journal of Medicine 374 (25): 2414–2415. doi:10.1056/NEJMp1605148. PMID 27168351.

- ↑ McQuilton, P.; Gonzalez-Beltran, A.; Rocca-Serra, P. et al. (2016). "BioSharing: Curated and crowd-sourced metadata standards, databases and data policies in the life sciences". Database 2016: baw075. doi:10.1093/database/baw075. PMC 4869797. PMID 27189610. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4869797.

- ↑ Wilkinson, M.D.; Dumontier, M.; Aalbersberg, I.J. et al. (2016). "The FAIR Guiding Principles for scientific data management and stewardship". Scientific Data 3: 160018. doi:10.1038/sdata.2016.18. PMC 4792175. PMID 26978244. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4792175.

- ↑ "The 'FAIR' principles for scientific data management". UK Data Service. 8 June 2016. https://www.ukdataservice.ac.uk/news-and-events/newsitem/?id=4615. Retrieved 28 October 2016.

- ↑ 13.0 13.1 "Guidelines on FAIR Data Management in Horizon 2020" (PDF). European Commission. 26 July 2016. http://ec.europa.eu/research/participants/data/ref/h2020/grants_manual/hi/oa_pilot/h2020-hi-oa-data-mgt_en.pdf. Retrieved 27 October 2016.

- ↑ "Big Data to Knowledge". National Institutes of Health. 2017. https://commonfund.nih.gov/bd2k.

- ↑ "Data Seal of Approval: About". DSA Board. https://www.datasealofapproval.org/en/information/about/. Retrieved 18 January 2017.

- ↑ 16.0 16.1 16.2 16.3 16.4 16.5 Dillo, I.; de Leeuw, L. (2015). "Ten Years Back, Five Years Forward: The Data Seal of Approval". International Journal of Digital Curation 10 (1): 230–239. doi:10.2218/ijdc.v10i1.363.

- ↑ 17.0 17.1 "Certification of WDS Members" (PDF). ICSU World Data System. 11 June 2012. https://www.icsu-wds.org/files/wds-certification-summary-11-june-2012.pdf. Retrieved 28 October 2016.

- ↑ 18.0 18.1 18.2 "Audit and Certification of Trustworthy Digital Repositories" (PDF). CCSDS. September 2011. https://public.ccsds.org/pubs/652x0m1.pdf. Retrieved 28 October 2016.

- ↑ "Recommendations". FAIRsharing.org. University of Oxford. https://fairsharing.org/recommendations/.

- ↑ "Resources". BBSRC. http://www.bbsrc.ac.uk/research/resources/. Retrieved 27 January 2017.

- ↑ "Databases". FAIRsharing.org. University of Oxford. https://fairsharing.org/databases/?q=&selected_facets=recommended:true.

- ↑ "Search for Repositories". COPDESS. https://copdessdirectory.osf.io/search/. Retrieved 27 January 2017.

- ↑ "Repository List". bioCADDIE. https://datamed.org/repository_list.php. Retrieved 27 January 2017.

- ↑ "Supported Data Repositories". Elsevier. https://www.elsevier.com/authors/author-services/research-data/data-base-linking/supported-data-repositories. Retrieved 27 January 2017.

- ↑ "Data Deposition". Author Guidelines. EMBO Press. http://msb.embopress.org/authorguide#datadeposition. Retrieved 27 January 2017.

- ↑ "Data Guidelines". How to Publish. F1000 Research. https://f1000research.com/for-authors/data-guidelines. Retrieved 27 January 2017.

- ↑ "Editorial Policies & Reporting Standards". Oxford University Press. https://academic.oup.com/gigascience/pages/editorial_policies_and_reporting_standards. Retrieved 27 January 2017.

- ↑ "NIH Data Sharing Repositories". U.S. National Library of Medicine. https://www.nlm.nih.gov/NIHbmic/nih_data_sharing_repositories.html. Retrieved 27 January 2017.

- ↑ "Data Availability". PLOS. http://journals.plos.org/plosbiology/s/data-availability. Retrieved 27 January 2017.

- ↑ "Recommended Data Repositories". Scientific Data. Macmillan Publishers Limited. https://www.nature.com/sdata/policies/repositories. Retrieved 27 January 2017.

- ↑ "Recommended Repositories". Springer Nature. Springer-Verlag GmbH. http://www.springernature.com/gp/authors/research-data-policy/repositories/12327124?countryChanged=true. Retrieved 27 January 2017.

- ↑ "Master Data Repository List". Web of Science. Clarivate Analytics. http://wokinfo.com/cgi-bin/dci/search.cgi. Retrieved 27 January 2017.

- ↑ "Data repositories and database resources". Wellcome Trust. https://wellcome.ac.uk/funding/managing-grant/data-repositories-and-database-resources. Retrieved 27 January 2017.

- ↑ "About". re3data.org. Karlsruhe Institute of Technology. https://www.re3data.org/about. Retrieved 27 January 2017.

- ↑ "Assessment". Data Seal of Approval. DSA Board. https://www.datasealofapproval.org/en/assessment/. Retrieved 20 February 2017.

- ↑ "Membership". ICSU World Data System. https://www.icsu-wds.org/community/membership/regular-members. Retrieved 20 February 2017.

- ↑ "Nestor Seal for Trustworthy Digital Archives". nestor-Geschäftsstelle. http://www.langzeitarchivierung.de/Subsites/nestor/EN/Siegel/siegel_node.html. Retrieved 20 February 2017.