Difference between revisions of "Journal:Generalized procedure for screening free software and open-source software applications/In-depth evaluation"

Shawndouglas (talk | contribs) (Removing test code.) |

Shawndouglas (talk | contribs) m (→Community: Internal link) |

||

| (4 intermediate revisions by the same user not shown) | |||

| Line 16: | Line 16: | ||

|issn = | |issn = | ||

|license = [https://creativecommons.org/licenses/by-sa/4.0/ Creative Commons Attribution-ShareAlike 4.0 International] | |license = [https://creativecommons.org/licenses/by-sa/4.0/ Creative Commons Attribution-ShareAlike 4.0 International] | ||

|website = | |website = [[Journal:Generalized procedure for screening free software and open-source software applications/Print version|Print-friendly version]] | ||

|download = | |download = [https://drive.google.com/file/d/0B1hujIvHpfUwQjR0UlgtcGNCYU0/view?usp=sharing PDF] (Note: Inline references fail to load in PDF version) | ||

}} | }} | ||

==Abstract== | ==Abstract== | ||

Free software and [[:Category:Open-source software|open-source software projects]] have become a popular alternative tool in both scientific research and other fields. However, selecting the optimal application for use in a project can be a major task in itself, as the list of potential applications must first be identified and screened to determine promising candidates before an in-depth analysis of systems can be performed. To simplify this process, we have initiated a project to generate a library of in-depth reviews of free software and open-source software applications. Preliminary to beginning this project, a review of evaluation methods available in the literature was performed. As we found no one method that stood out, we synthesized a general procedure using a variety of available sources for screening a designated class of applications to determine which ones to evaluate in more depth. In this paper, we examine a number of currently published processes to identify their strengths and weaknesses. By selecting from these processes we synthesize a proposed screening procedure to triage available systems and identify those most promising of pursuit. To illustrate the functionality of this technique, this screening procedure is executed against a selected class of applications. | Free software and [[:Category:Open-source software|open-source software projects]] have become a popular alternative tool in both scientific research and other fields. However, selecting the optimal application for use in a project can be a major task in itself, as the list of potential applications must first be identified and screened to determine promising candidates before an in-depth analysis of systems can be performed. To simplify this process, we have initiated a project to generate a library of in-depth reviews of [[Free and open-source software|free software and open-source software]] applications. 
Preliminary to beginning this project, a review of evaluation methods available in the literature was performed. As we found no one method that stood out, we synthesized a general procedure using a variety of available sources for screening a designated class of applications to determine which ones to evaluate in more depth. In this paper, we examine a number of currently published processes to identify their strengths and weaknesses. By selecting from these processes we synthesize a proposed screening procedure to triage available systems and identify those most promising of pursuit. To illustrate the functionality of this technique, this screening procedure is executed against a selected class of applications. | ||

==Introduction== | ==Introduction== | ||

| Line 110: | Line 110: | ||

====Community==== | ====Community==== | ||

Many of the researchers I've encountered have indicated that community is the most critical factor of a FLOSS project. There are a number of reasons for this. First, the health and sustainability of a FLOSS project is indicated by a large and diverse community that is both active and responsive.<ref name="Silva10Quest09">{{cite web |url=http://www.techrepublic.com/blog/10-things/10-questions-to-ask-when-selecting-open-source-products-for-your-enterprise/ |title=10 questions to ask when selecting open source products for your enterprise |author=Silva, Chamindra de |work=TechRepublic |publisher=CBS Interactive |date=20 December 2009 |accessdate=13 April 2015}}</ref> Additionally, the core programmers in a FLOSS project are generally few, and it is the size of the project community that determines how well-reviewed the application is, ensuring quality control of the projects code. Finally, the size of the project community correlates with the lifetime of the project. | Many of the researchers I've encountered have indicated that community is the most critical factor of a [[Free and open-source software#FLOSS|FLOSS]] project. There are a number of reasons for this. First, the health and sustainability of a FLOSS project is indicated by a large and diverse community that is both active and responsive.<ref name="Silva10Quest09">{{cite web |url=http://www.techrepublic.com/blog/10-things/10-questions-to-ask-when-selecting-open-source-products-for-your-enterprise/ |title=10 questions to ask when selecting open source products for your enterprise |author=Silva, Chamindra de |work=TechRepublic |publisher=CBS Interactive |date=20 December 2009 |accessdate=13 April 2015}}</ref> Additionally, the core programmers in a FLOSS project are generally few, and it is the size of the project community that determines how well-reviewed the application is, ensuring quality control of the projects code. Finally, the size of the project community correlates with the lifetime of the project. 
| ||

Suggested ratings are: | Suggested ratings are: | ||

| Line 315: | Line 315: | ||

:[[File:Fig3 Joyce 2015.png|700px]] | :[[File:Fig3 Joyce 2015.png|700px]] | ||

{{clear}} | {{clear}} | ||

<blockquote>'''Illustration 3.''': License compatibility between common FOSS software licenses according to David A. Wheeler (2007): | {| | ||

| STYLE="vertical-align:top;"| | |||

{| border="0" cellpadding="5" cellspacing="0" width="700px" | |||

|- | |||

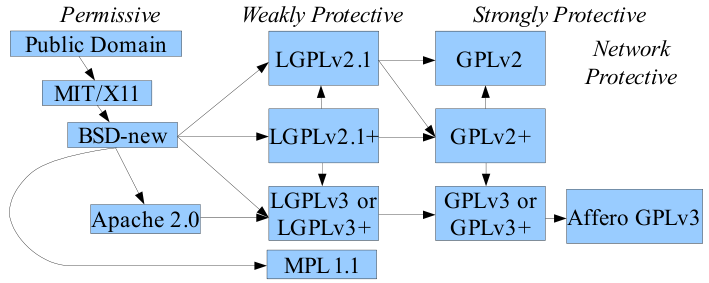

| style="background-color:white; padding-left:10px; padding-right:10px;"| <blockquote>'''Illustration 3.''': License compatibility between common FOSS software licenses according to David A. Wheeler (2007): the vector arrows denote an one directional compatibility, therefore better compatibility on the left side than on the right side.<ref name="WheelerTheFree07">{{cite web |url=http://www.dwheeler.com/essays/floss-license-slide.html |title=The Free-Libre / Open Source Software (FLOSS) License Slide |author=Wheeler, David A. |work=dwheeler.com |date=27 September 2007 |accessdate=28 May 2015}}</ref></blockquote> | |||

|- | |||

|} | |||

|} | |||

Just to be absolutely clear, the license of FLOSS and proprietary applications generally disavows any type of warranty that the program will work and disclaims any liability for any damage or injuries that result from the programs use. Now, having said that, you may be able to purchase a warranty separately; just don't anticipate any legal recourse in the event of a system failure. | Just to be absolutely clear, the license of FLOSS and proprietary applications generally disavows any type of warranty that the program will work and disclaims any liability for any damage or injuries that result from the programs use. Now, having said that, you may be able to purchase a warranty separately; just don't anticipate any legal recourse in the event of a system failure. | ||

Latest revision as of 16:18, 19 January 2016

| Full article title | Generalized Procedure for Screening Free Software and Open Source Software Applications |
|---|---|
| Author(s) | Joyce, John |
| Author affiliation(s) | Arcana Informatica; Scientific Computing |
| Primary contact | Email: jrjoyce@gmail.com |
| Year published | 2015 |
| Distribution license | Creative Commons Attribution-ShareAlike 4.0 International |
| Website | Print-friendly version |
| Download | PDF (Note: Inline references fail to load in PDF version) |

Abstract

Free software and open-source software projects have become a popular alternative tool in both scientific research and other fields. However, selecting the optimal application for use in a project can be a major task in itself, as the list of potential applications must first be identified and screened to determine promising candidates before an in-depth analysis of systems can be performed. To simplify this process, we have initiated a project to generate a library of in-depth reviews of free software and open-source software applications. Before beginning this project, a review of evaluation methods available in the literature was performed. As we found no one method that stood out, we synthesized a general procedure using a variety of available sources for screening a designated class of applications to determine which ones to evaluate in more depth. In this paper, we examine a number of currently published processes to identify their strengths and weaknesses. By selecting from these processes we synthesize a proposed screening procedure to triage available systems and identify those most promising for pursuit. To illustrate the functionality of this technique, this screening procedure is executed against a selected class of applications.

Introduction

Results

Literature review

Initial evaluation and selection recommendations

In-depth evaluation

While in-depth analysis of the screened systems will require a more detailed examination and comparison, for the purpose of this initial survey a much simpler assessment protocol will serve. While there is no single "correct" evaluation protocol, something in the nature of the three-leaf scoring criteria described for QSOS[1] should be suitable. Keep in mind that for this quick assessment we are using broad criteria, so both the criteria and the scoring will be more ambiguous than that required for an in-depth assessment. Do not be afraid to split any of these criteria up into multiple finer classes of criteria if the survey requires it. This need would most likely appear under "system functionality," as that is where most people's requirements greatly diverge.

In this process, we will assign one of three numeric values to each of the listed criteria. A score of zero indicates that the system does not meet the specified criteria. A score of one indicates that the system marginally meets the specified criteria. You can think of this as the feature being present and workable, but not to the degree you'd like. Finally, a score of two indicates that the system fully meets or exceeds the specified requirement. In the sections below I will list some possible criteria for this table. However, you can adjust these descriptions or add a weighting factor, as many other protocols do, to adjust for the criticality of a given requirement.
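As a rough illustration of the zero/one/two scoring with an optional weighting factor, here is a minimal Python sketch. The function name `weighted_total`, the criterion names, and the example weights are all hypothetical choices for illustration; they are not part of QSOS or any other published protocol.

```python
from typing import Mapping, Optional

# The three allowed scores: 0 = not met, 1 = marginally met, 2 = fully met.
VALID_SCORES = {0, 1, 2}


def weighted_total(
    scores: Mapping[str, int],
    weights: Optional[Mapping[str, float]] = None,
) -> float:
    """Sum the 0/1/2 scores, multiplying each by its weight (default 1.0).

    Hypothetical helper: the weighting scheme is an assumption, chosen only
    to show how criticality could be factored in.
    """
    weights = weights or {}
    total = 0.0
    for criterion, score in scores.items():
        if score not in VALID_SCORES:
            raise ValueError(f"{criterion}: score must be 0, 1, or 2, got {score!r}")
        total += score * weights.get(criterion, 1.0)
    return total


# Illustrative values only; they do not describe any real application.
example_scores = {"system functionality": 2, "community": 1, "system cost": 2}
example_weights = {"system functionality": 2.0}  # functionality counts double
```

With the example values, the unweighted total is 5 and the weighted total is 7, so the weighting only changes the ranking when candidates differ on the criteria you have marked as more critical.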

Realistically, for some of your potential evaluation criteria, you can compensate for a missing factor in some other way. For other criteria, their presence or absence can be a drop-dead issue. That is, if the particular criterion or feature isn't present, then it doesn't matter how well any of the other criteria are ranked: that particular system is out of consideration. Which criteria, if any, are drop-dead items should ideally be decided before you start your survey. This will not only be more efficient, in that it will allow you to cut off data collection at any failure point, but it will also help dampen the psychological temptation to fudge your criteria, retroactively deciding that a given criterion was not that important after all.

At this stage we just want to reduce the number of systems for in-depth evaluation from potentially dozens to perhaps three or four. As such, we will be refining our review criteria later, so if something isn't really a drop-dead criterion, don't mark it so. It's amazing the variety of feature tradeoffs people tend to make further down the line.
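The idea of fixing the drop-dead criteria up front and cutting off data collection at the first failure can be sketched as follows. This is only one possible arrangement, assuming scores are gathered one criterion at a time; `screen_candidate` and `collect_score` are hypothetical names introduced for illustration.

```python
from typing import Callable, Dict, Optional, Sequence


def screen_candidate(
    criteria: Sequence[str],
    drop_dead: Sequence[str],
    collect_score: Callable[[str], int],
) -> Optional[Dict[str, int]]:
    """Score the drop-dead criteria first; return None as soon as one scores zero.

    `collect_score` stands in for whatever research is needed to rate one
    criterion (an assumption for this sketch). It is only called for the
    remaining criteria if every drop-dead criterion passes.
    """
    scores: Dict[str, int] = {}
    for criterion in drop_dead:
        scores[criterion] = collect_score(criterion)
        if scores[criterion] == 0:
            return None  # out of consideration; stop collecting data
    for criterion in criteria:
        if criterion not in scores:
            scores[criterion] = collect_score(criterion)
    return scores
```

Because the drop-dead list is an argument fixed before any scores are collected, there is no convenient place to decide after the fact that a failed criterion "was not that important."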

System functionality

While functionality is a key aspect of selecting a system, its assessment must be used with care. Depending on how a system is designed, a key function that you are looking for might not have been implemented, but in one system it can easily be added, while in another it would take a complete redesign of the application. Also consider whether this function must be intrinsic to the application or if you can pair the application being evaluated with another application to cover the gap.

In most cases, you can obtain much of the functionality information from the project's web site or, occasionally, web sites. Some projects have multiple web sites, usually with one focused on project development and another targeting general users or application support. There are two different types of functionality to be tested. The first might be termed "general functionality" that would apply to almost any system. Examples of this could include the following:

- User authentication

- Audit trail (I'm big on detailed audit trails, as they can make the difference between being in regulatory compliance and having a plant shut down. Even if you aren't required to have them, they are generally a good idea, as the records they maintain may be the only way for you to identify and correct a problem.)

- Sufficient system status display that the user can understand the state of the system

- Ability to store data in a secure fashion

We might term the second as "application functionality." This is functionality specifically required to be able to perform your job. As the subject matter expert, you will be the one to create this list. Items might be as diverse as the following:

For a laboratory information management system...

- Can it print bar coded labels?

- Can it track the location of samples through the laboratory, as well as maintain the chain-of-custody for the sample?

- Can it track the certification of analysts for different types of equipment, as well as monitor the preventive maintenance on the instruments?

- Can it generate and track aliquots of the original sample?

For a geographic information system (GIS)[2]...

- What range of map projections is supported?

- Does the system allow you to create custom parameters?

- Does it allow import of alternate system formats?

- Can it directly interface with global positioning system (GPS) devices?

For a library management software system (LMSS) (or integrated library system [ILS], if you prefer, or even library information management system [LIMS]; have you ever noticed how scientists love to reuse acronyms, even within the same field?)...

- Can you identify the location of any item in the collection at a given time?

- Can you identify any items that were sent out for repair and when?

- Can it distinguish between multiple copies of an item and between same-named items on different types of media?

- Can it handle input from RFID tags?

- Can it handle and differentiate different clients residing at the same address?

- If needed, can it correlate the client's age with the particular item they are requesting, in case you have to deal with any type of age-appropriate restrictions?

- If so, can it be overridden where necessary (maintaining the appropriate records in the audit trail as to why the rule was overridden)?

For an archival record manager (This classification can cover a lot of ground due to all of the different ways that "archival" and "record" are interpreted.)...

- In some operations, a record can be any information about a sample, including the sample itself. By regulation, some types of information must be maintained essentially forever. In others, you might have to keep one type of information for five years, while you have to maintain another type for 50 years.

- Can the application handle tracking records for different amounts of time?

- Does the system automatically delete these records at the end of the retention period or does it ask for confirmation from a person?

- Can overrides on a particular sample be applied so that records are not allowed to be deleted, either manually or because they are past their holding date, such as those that might be related to any litigation, while again maintaining all information about the override and who applied the override in the audit trail?

- In other operations, an archival record manager may actually refer to the management of archival records, be these business plans, architectural plans, memos, art work, etc.

- Does the system keep track of the type of each record?

- Does the system support appropriate metadata on different types of records?

- Does it just record the item's location and who is responsible for it, such as a work of art?

- If a document, does it just maintain an electronic representation of a document, such as a PDF file, or does it record the location of the original physical document, or can it do both?

- Can it manage both permanently archived items, such as a work of art or a historically significant document, and more transitory items, where your record retention rules say to save it for five, 10, 15 years, etc., and then destroy it?

- In the latter case, does the system require human approval before destroying any electronic documents or flagging a physical item for disposal? Does it require a single human sign-off or must a chain of people sign-off on it, just to confirm that it is something to be discarded by business rules and not an attempt to hide anything?

- This is a challenging quagmire, with frequently changing regulations and requirements. Depending on how you want to break it down, this heading can be segmented into two classes: electronic medical records (EMR), which can constitute an electronic version of the tracking of the patient's health, and electronic health records (EHR), which contain extensive information on the patient, including test results, diagnostic information, and other observations by the medical staff. For those who want to get picky, you can also subdivide the heading into imaging systems, such as X-rays and CAT scans, and other specialized systems.

- Can all records be accessed quickly and completely under emergency situations?

- What functionality is in place to minimize the risk of a Health Insurance Portability and Accountability Act (HIPAA) violation?

- What functionality exists for automated data transfer from instruments or laboratory data systems to minimize transcription errors?

- How are these records integrated with any billing or other medical practice system?

- If integrated with the EMR and EHR record systems, does this application apply granular control over who can access these records and what information they are able to see?

For an enterprise resource planning (ERP) system...

- Davis has indicated that a generally accepted definition of an ERP system is a "complex, modular software integrated across the company with capabilities for most functions in the organization."[5] I believe this translates as "good luck," considering the complexity of the systems an ERP is designed to model and all of the functional requirements that go into that. It is perhaps for that reason that successful ERP implementations generally take several years.

- ERP systems generally must be integrated with other informatics systems within the organization. What types of interfaces does this system support?

- Is their definition of plug-and-play that you just have to configure the addresses and fields to exchange?

- Is their definition of interface that the system can read in information in a specified format and export the same, leaving you to write the middleware program to translate the formats between the two systems?


From the above, it is easy to see why researchers have encountered difficulty in developing a fixed method that can be used to evaluate anything. At this point, it is quite acceptable to group similar functions together, as this is a high-level survey to identify which systems will definitely not be suitable, so we can focus our research on those that might be. Researchers such as Sarrab and Rehman summarize system functionality as "achieving the user's requirements, correct output as user's expectations and verify that the software functions appropriately as needed."[6]

Suggested ratings are:

- Zero – Application does not support required functionality.

- One – Application supports the majority of functionality to at least a usable extent.

- Two – Application meets or exceeds all functional requirements.

Community

Many of the researchers I've encountered have indicated that community is the most critical factor of a FLOSS project. There are a number of reasons for this. First, the health and sustainability of a FLOSS project is indicated by a large and diverse community that is both active and responsive.[7] Additionally, the core programmers in a FLOSS project are generally few, and it is the size of the project community that determines how well-reviewed the application is, ensuring quality control of the project's code. Finally, the size of the project community correlates with the lifetime of the project.

Suggested ratings are:

- Zero – No community exists. No development activity is observable. Project is dead.

- One – Community is small and perhaps insular. May consist of just one or two programmers with perhaps a small number of satellite users.

- Two – Community is large and dynamic, with many contributors and active communication between the core developers and the rest of the community. Community is responsive to outside inquiries.

System cost

Since the main goal of this survey procedure is to evaluate FLOSS products, the base system cost for the software will normally be low, frequently $0.00. However, this is not the only cost you need to consider. Many of these costs could potentially be placed under multiple headings depending on how your organization is structured. No matter how it itemizes them, there will be additional costs. Typical items to consider include the following:

- Cost of supporting software – e.g. Does it require a commercial database such as Oracle or some other specialized commercial software component?

- Cost of additional hardware – e.g. Does the system require the purchase of additional servers or storage systems? Custom hardware interfaces?

- Cost of training – e.g. How difficult or intuitive is the system to operate? This will impact the cost of training that users must receive. Keep in mind this cost will exist whether you are dealing with an Open Source or proprietary system. Are costs for system manuals and other required training material included? Some proprietary vendors don't include them, or might send only a single hard copy of the manuals. Who will perform the training? Whether you hire someone from outside or have some of your own people do it, there will be a cost, as you would be pulling people away from their regular jobs.

- Cost of support – e.g. Is support through a commercial organization or the Open Source development group? If the former, what are their contract costs? While harder to evaluate, what is the turnaround time from when you request the support? Immediate? Days? Sometime? The amount of time you have to wait for a problem to be fixed is definitely a "cost," whether it means your system is dead in the water or just not as efficient and productive as it could be.

The primary issue here is to be realistic in your evaluation. It's hard to believe that anyone would assume that there are no costs associated with using FLOSS, or even proprietary software for that matter, but apparently some people do. Foote does a good job of exploring and disproving this belief, showing all of the items that go into figuring the total cost of ownership (TCO), which should be representative of FLOSS applications.[8] I wouldn't call them hidden costs, at least not with the FLOSS systems, but rather costs that are overlooked, as are so many other things when people focus on a single central item. To quote Robert Heinlein, "TANSTAAFL!" (There ain't no such thing as a free lunch!)[9]

The important thing here is to pay attention to all of the interactions taking place. For example, if you wished to interface a piece of equipment to your FLOSS application, remember to factor in the cost of the interface. Despite what some advertisers think, data bits don't just disappear from one place and magically appear in another. It is very easy to lose track of where the costs are. Keep in mind that for many items, such as training, you will incur a cost whichever way you go. If a proprietary vendor says they will provide free training, you can be assured that the cost for it is included in the contract. But if you take that route, be very careful to read the contract thoroughly, as not all vendors include any training at all. It would be very easy to end up having to pay for "optional" training.
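One way to keep every cost category visible is to write them all down in one place, even when the software itself costs $0.00. The sketch below is a hypothetical tally, not Foote's TCO model: the class name `CostEstimate`, its fields, and the split between one-time and yearly costs are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class CostEstimate:
    """Hypothetical cost tally for one candidate system (all fields assumed)."""

    license: float = 0.0              # frequently 0.00 for FLOSS
    supporting_software: float = 0.0  # e.g. a commercial database
    additional_hardware: float = 0.0  # servers, storage, custom interfaces
    training: float = 0.0             # outside trainers or staff time
    support_per_year: float = 0.0     # support contract, if any

    def total(self, years: int = 1) -> float:
        """One-time costs plus recurring support over the given number of years."""
        one_time = (
            self.license
            + self.supporting_software
            + self.additional_hardware
            + self.training
        )
        return one_time + self.support_per_year * years
```

For example, `CostEstimate(training=4000, support_per_year=1500).total(years=3)` comes to 8500 with these made-up figures, despite a license cost of zero.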

Suggested ratings are:

- Zero – Installation and support costs are excessive and greatly exceed any available budget.

- One – System may require purchase of additional hardware or customization. These costs, along with training costs, are within potential budget.

- Two – Installation and support costs are relatively minor, with no additional hardware required. System design is relatively intuitive with in-depth documentation and active support from the community.

Popularity

This heading can be somewhat confusing in terms of how it is interpreted, even though most of the recommendations we looked at include it. Popularity is sometimes considered to be similar to market share. That is, of the number of people using a specific application in a given class of open-source applications, what percentage do they represent out of all people running applications in the class? If the majority of people are using a single system, this might indicate that it is the better system, or it might just indicate that other systems are newer and, even if potentially better, people haven't migrated over to them yet. An alternate approach to examining it is to ask how many times the application has been downloaded. In general, the larger the market share or the number of downloads, the more likely a given product is to be usable. This is not an absolute, as people may have downloaded the application for testing and then rejected it or downloaded it simply to game the system, but it is a place to start. The point of this question usually isn't to determine how popular a particular application is, but rather to ensure that it is being used and that it is a living (as opposed to an abandoned) project. Be leery of those applications with just a few downloads. If there is a large group of people using the application, there is a higher probability that the application works as claimed.

At the same time, learning who some of the other users of this application are can give you some insight into how well it actually works. As Silva reminds us, "the best insight you can get into a product is from another user who has been using it for a while."[7]

Suggested ratings are:

- Zero – No other discernible users or reality of listed users is questionable.

- One – Application is being used by multiple groups, but represents only a small fraction of its "market." Appears to be little 'buzz' about the application.

- Two – Application appears to be widely used and represents a significant fraction of its "market." This rating is enhanced if listed users include major commercial organizations.

Product support

Product support can be a critically important topic for any application. Whether you are selecting a proprietary application or a FLOSS one, it is vitally important to ensure that you will have reliable support available. Just because you purchased a proprietary program will not ensure that you have the support you need. Some vendors include at least limited support in their contracts, others don't. However, over the years I've found that even purchasing a separate support contract doesn't ensure that the people who answer the phone will be able to help you. When making the final decision, don't make assumptions: research!

Support can be broken down into several different sub-categories:

- User manuals – Do they exist? What is their quality?

- System manager's manuals – Do they exist? What is their quality?

- Application developer's manuals – Do they exist? What is their quality?

- System design manuals – Do they exist? What degree of detail do they provide?

- For database-related projects, is an accurate and detailed entity relationship diagram (ERD) included?

- Have any third-party books been written about this application? Are they readily available, readable, and easy to interpret?

- Is product support provided directly by the application development community?

- Is product support provided by an independent user group, separate from the development group?

- Is commercial product support available?

- If so, what are their rates?

- Are on-site classes available?

- Are online training classes available?

- A frequently overlooked type of product support is how well documented the program code is. Are embedded comments sparse or frequent and meaningful? Are the names of program variables and functions arbitrary or meaningful?

Another factor in evaluating product support is whether you have anyone on your team with the expertise to understand it. That is not a derogatory statement: depending on the issue, someone might have to modify an associated database, the application code, or the code in a required library, whether to correct an error or add functionality. Do any of your people have expertise in that language? Would someone have to start from scratch or do you have the budget to hire an outside consultant? Even if you have no desire to modify the code, having someone on the project that understands the language used can be a big help in discerning how the program works, as well as determining how meaningful the program comments are.

Suggested ratings are:

- Zero – Limited or no support available. Documentation essentially non-existent, source code minimally documented, no user group support, and erratic response from the developers.

- One – Documentation scattered and of poor quality. Support from user group discouraged and no commercial support options exist.

- Two – Excellent documentation, including user, system manager, and developer documentation. Enthusiastic support from the user community and developers. Commercial third-party support available for those desiring it. Third-party books may also have been released documenting the use of this product.

Maintenance

This is another item that can be interpreted in multiple ways. One way to look at it is how quickly the developers respond to any bug report. Depending on the particular FLOSS project, you may actually be able to review the problem logs for the system and see what the average response time was between a bug being reported and the problem resolved. In some cases this might be hours or days, in others it is never resolved. To be fair, don't base your decision on a single instance in the log file, as some bugs are much easier to find and fix than others. However, a constant stream of open bugs or bugs that have only been closed after months or years should make you leery.
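If a project's problem log can be exported, a quick summary of resolution times might look like the sketch below. The `(reported, resolved)` tuple layout and the function name `resolution_summary` are hypothetical; real issue trackers each have their own export formats. I use the median rather than the mean here so that a single unusually hard bug does not dominate the result, which is my own choice and not something the sources prescribe.

```python
from datetime import date
from statistics import median
from typing import Iterable, Optional, Tuple

# Hypothetical record layout: (date reported, date resolved or None if still open).
Issue = Tuple[date, Optional[date]]


def resolution_summary(issues: Iterable[Issue]) -> Tuple[Optional[float], int]:
    """Return (median days to resolve closed issues, number of still-open issues)."""
    closed_days = []
    open_count = 0
    for reported, resolved in issues:
        if resolved is None:
            open_count += 1
        else:
            closed_days.append((resolved - reported).days)
    return (median(closed_days) if closed_days else None, open_count)


# Made-up sample log for illustration only.
sample_log = [
    (date(2015, 1, 1), date(2015, 1, 3)),
    (date(2015, 1, 10), date(2015, 1, 20)),
    (date(2015, 2, 1), None),
]
```

A large open count alongside a long median is the pattern that should make you leery; either number alone says much less.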

To others, this question is about determining whether development is still taking place on the project or if it is dead. Alternately, it is like asking if anybody is maintaining the system and correcting bugs when they are discovered. There are several ways of addressing this issue. Examining the problem logs described above is one way of checking for project activity, while another is to check the release dates for different versions of the application. Are releases random or on a temporal schedule? How long has it been since the application was last updated? If the last release date was over a year or two ago, this is cause for concern and should trigger a closer look. Just because there hasn't been a recent release does not mean that the project has been abandoned. If the development of the app has advanced to the point where it is stable and no other changes need to be made, you may not see a recent release because none is needed. However, the latter is very rare, both because bugs can be so insidious and because a lot of programmers can't resist just tweaking things to make them a wee bit better. If a project is inactive, but everything else regarding the project looks good, it might be possible to work with the developers to revitalize it. While this course of action is feasible, it is important to realize that it is taking on a great deal of responsibility and an unknown amount of expense. The latter is particularly true as you may have to assign one or more developers to work on the project full time.

Suggested ratings are:

- Zero – No releases, change log activity, or active development discussion in message forums in over two years.

- One – No releases, change log activity or active development discussions in message forums for between one and two years.

- Two – A new version has been released within the year, change logs show recent development activity, and there is active development discussion in the message forums.
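The ratings above could be applied mechanically along the following lines. This is only a sketch: the function name and its arguments are hypothetical, a year is approximated as 365 days, and the handling of mixed cases (for example, an old release but recent forum discussion) is an assumption, since the ratings do not spell it out.

```python
from datetime import date

def activity_rating(last_release, last_changelog, last_discussion, today):
    """Map the dates of the three activity signals to a 0-2 rating."""
    ages = [(today - d).days for d in (last_release, last_changelog, last_discussion)]
    if max(ages) <= 365:
        # Release, change log, and discussion are all within the past year.
        return 2
    if min(ages) > 2 * 365:
        # No signal of any kind in over two years.
        return 0
    # Assumption: everything in between, including mixed signals, rates as One.
    return 1

print(activity_rating(date(2015, 6, 1), date(2015, 7, 1), date(2015, 7, 15), date(2015, 8, 1)))
```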

Reliability

Reliability is the degree to which you can rely on the application to function properly. Of course, the exact definition becomes somewhat more involved. The reliability of a system is defined as the ability of an application to operate properly under a specified set of conditions for a specified period of time. Fleming states that "[o]ne aspect of this characteristic is fault tolerance that is the ability of a system to withstand component failure. For example if the network goes down for 20 seconds then comes back the system should be able to recover and continue functioning."[10]

Because of its nature, the reliability of a system is hard to measure, as you are basically waiting to see how frequently it goes down. While we'd like to aim for never, one should probably be satisfied if the system recovers properly after a failure. In most instances, unless you are actually testing a system under load, the best that you can hope for is to observe indicators from which you can infer its reliability. As a generality, the more mature a given code base is, the more reliable it is, but keep in mind that this is a generality; there are always incidents that can occur to destabilize everything. Unfortunately, it frequently feels as if the problem turns out to be something that you would swear was totally unrelated. Face it, Murphy is just cleverer than you.[11]

Wheeler also reminds us that "[p]roblem reports are not necessarily a sign of poor reliability - people often complain about highly reliable programs, because their high reliability often leads both customers and engineers to extremely high expectations."[12] One thing that can be very reassuring is to see that the community takes reliability seriously by continually testing the system during development.

Suggested ratings are:

- Zero – Error or bug tracking logs show a high incidence of serious system problems. Perhaps worse, no logs of reported problems are kept at all, particularly for systems that have been in release for less than a year.

- One – Error logs show relatively few repeating or serious problems, particularly if these entries correlate with entries in the change logs indicating that a particular problem has been corrected. System has been in release for over a year.

- Two – Error logs are maintained, but show relatively few bug reports, with the majority of them being minor. A version of the system, using the same code base, has been in release for over two years. Developers both distribute and run a test suite to confirm proper system operation.

Performance

Performance of an application is always a concern. Depending on what the application is trying to do and how the developers coded the functions, you may encounter a program that works perfectly but is just too unresponsive to use. Sometimes this is a matter of hardware, other times it is just inefficient coding, such as making sequential calls to a database to return part of a block of data rather than making a single call to return all of the block at once. Performance and scalability are usually closely linked.
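The database example can be made concrete with a small sketch using Python's built-in `sqlite3` module. The `results` table, its columns, and both function names are hypothetical. With an in-memory database the difference is small, but against a networked database each of the per-row queries would typically add its own round trip.

```python
import sqlite3

# Hypothetical table of 1,000 results held in an in-memory database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE results (sample_id INTEGER, value REAL)")
conn.executemany(
    "INSERT INTO results VALUES (?, ?)", [(i, i * 0.5) for i in range(1000)]
)

def fetch_one_at_a_time(conn, ids):
    """Inefficient pattern: one query per row of the block."""
    rows = []
    for sample_id in ids:
        cur = conn.execute(
            "SELECT sample_id, value FROM results WHERE sample_id = ?", (sample_id,)
        )
        rows.append(cur.fetchone())
    return rows

def fetch_block(conn, first_id, last_id):
    """Single query that returns the whole block at once."""
    cur = conn.execute(
        "SELECT sample_id, value FROM results "
        "WHERE sample_id BETWEEN ? AND ? ORDER BY sample_id",
        (first_id, last_id),
    )
    return cur.fetchall()

slow = fetch_one_at_a_time(conn, range(100, 200))  # 100 separate queries
fast = fetch_block(conn, 100, 199)                 # 1 query
print(slow == fast)
```

Both functions return the same rows; only the number of calls to the database differs.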

You might be able to obtain some information on the system's actual performance from the project web site, but it is hard to tell if this is for representative or selected data. Reviewing the project mailing list may provide a more accurate indication of the system's performance or any performance problems encountered. Testing the system under your working conditions is the only way to make certain what the system's actual performance is. Unfortunately, the steps involved in setting up such a test system require much more effort than a high-level survey will allow. If any user reviews exist, they may give an insight into the system's performance. Locating other users through the project message board might be a very useful resource as well, particularly if they handle the same projected work loads that you are expecting.

It is difficult to define performance ratings without having knowledge of what the application is supposed to do. However, for systems that interact with a human operator, the time lapse between when a function is initiated and when the system responds can be suggestive. If the project maintains a test suite, particularly one containing sample data, reviewing its processing time can give an insight into the system's performance as well. Response delays of even a few seconds in frequently executed functions will not only kill the overall process performance but also leave users reluctant to use the system.

Suggested ratings are:

- Zero – A system designed to be interactive fails to respond in an acceptable time frame. For many types of applications it is reasonable to expect an almost instantaneous response, particularly for screen management functions. It is not reasonable for a system to take over a minute, or even five seconds, to switch screens or acknowledge an input, particularly in regard to frequently executed functions such as results entry, modification, or review. A system that batch processes data maxes out under data loads below that of your current system.

- One – A system designed to be interactive appears to lag behind human entry for peripheral functions, but frequently accessed functions, such as results entry, modification, or review appear to respond almost instantaneously. A system that batch processes data maxes out under your existing data loads.

- Two – System is highly responsive, showing no annoying delayed responses. A system that batch processes data can process several times your current data load before maxing out.

Scalability

Scalability ensures that the application will operate over the data scale range you will be working with. In general, it means that if you test the system functionality with a low data load, the application will "scale up" to handle larger data loads. This may be handled by expanding from a single processor to a larger cluster or parallel processing system. Note that for a given application, throwing more hardware at it may not resolve the problem, as the application needs to be designed to take advantage of that additional hardware. Another caveat is to carefully examine the flow of data through your system. The processor is not the only place you can encounter roadblocks limiting scalability. Other possibilities include how quickly the system can access the needed data. If the system is processing the data faster than it can access it, adding more computer power will not resolve the problem. The limiting issues might be the bandwidth of your communication lines, the access speed of the devices that the data is stored on, or contention for needed resources with other applications. As with many aspects of selecting a system and getting it up and running, making assumptions is the real killer.
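The point about data flow can be illustrated with a deliberately simplified model in which records pass through each stage in turn and the slowest stage sets the pace. The stage names and rates below are invented for illustration, and real systems have queuing and contention effects that this sketch ignores.

```python
def effective_throughput(stage_rates):
    """Return (limiting stage, rate) for stages measured in records per second."""
    name = min(stage_rates, key=stage_rates.get)
    return name, stage_rates[name]

# Hypothetical sustained rates for each stage of the data path.
stages = {"network": 800, "storage": 500, "processing": 2000}
print(effective_throughput(stages))

# Quadrupling processing power leaves the limiting stage unchanged.
stages["processing"] *= 4
print(effective_throughput(stages))
```

In this model the storage stage remains the limit in both cases, which is the situation the paragraph above warns about.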

Silva indicates that many open-source applications are built on the LAMP (Linux, Apache, MySQL, PHP/Perl/Python) technology stack and that this is one of the most scalable configurations available.[7] However, you should ensure that there is evidence that the application has been successfully tested that way; the performance survey and test phases are never a good time to start making assumptions. A look at the application's user base will likely identify someone who can provide this feedback.

Suggested ratings are:

- Zero – System does not support scaling, whether due to application design or restriction of critical resources, such as rate of data access.

- One – System supports limited scaling, but overhead or resource contention, such as a data bottleneck, results in a quick performance fall off.

- Two – System is balanced and scales well, supporting large processing clusters or cloud operations without any restrictive resource pinch-points.

Usability

Usability means pretty much what it says. The concern here is not how well the program works but rather how easy it is to learn and use. The interface should be clear, intuitive, and help guide the user through the program's operation. Despite Steve Jobs, there is a limit to how intuitive an interface can be, thus the operation of the interface should be clearly documented in the user manual. Ideally the system will support a good context-sensitive help system as well. The best help systems may also provide multimedia support so that the system can actually show you how something should be done, rather than trying to tell you. I've found that frequently a good video can be worth well more than a thousand words! No matter how much time is spent writing the text for a manual or help system, it will always be unclear to somebody, if only because of the diversity of the backgrounds of people using it.

The interface between the operator and the computer may vary with the purpose of the application. While with new applications you are more likely to encounter a graphic user interface (GUI), there are still instances where you may encounter a command-line interface. Both types of interfaces have their advantages, and there are many times when something is actually easier to do with a command-line interface. The important thing to remember is that it is your interface with the system. It should be easy to submit commands to the system and interpret its response without having to hunt through a lot of extraneous information. This is normally best done by keeping the interface as clean and uncluttered as possible. As Abran et al. have pointed out, the usability of a given interface varies with "the nature of the user, the task and the environment."[13]

If you would prefer a somewhat drier set of definitions, Abran et al. also extracted the definitions of usability from a variety of ISO standards, and they are included in the following table:

Table 3. ISO Usability Definitions[13]

Fleming translates this into a somewhat more colloquial statement: “Usability only exists with regard to functionality and refers to the ease of use for a given function.” For those interested in learning more about the usability debate, I suggest that you check out Andreasen et al.[14] and especially Saxena and Dubey.[15]

In addition to usability, it has been strongly recommended to me that, if the budget allows, a user experience (UX) specialist be included on the review team.[16][17]

Suggested ratings are:

- Zero – System is difficult to use, frequently requiring switching between multiple screens or menus to perform a simple function. Operation of system discourages use and can actively antagonize users.

- One – System is usable but relatively unintuitive regarding how to perform a function. Both control and output displays tend to be cluttered, increasing the effort required to operate the system and interpret its output.

- Two – System is relatively intuitive and designed to help guide the user through its operation. Ideally, this is complemented with a context-sensitive help system to minimize any uncertainties in operation, particularly for any rarely used functions.

Security

This heading overlaps with functionality and is usually difficult to assess from a high-level evaluation. While it is unlikely that you will observe any obvious security issues during a survey of this type, there are indicators that can provide a hint as to how much the application's designers and developers were concerned with security.

The simplest approach is to simply take a look at whether they've done anything that shows a concern for possible security vulnerabilities. The following are a few potential indicators that you can look for, but just being observant when seeing a demonstration can also tell you a lot.

- Is there any mention of security in the system's documentation? Does it describe any potential holes that you need to guard against or configuration changes you might make to your system environment to reduce any risks?

- Do the manuals describe any type of procedure for reporting bugs or observed system issues?

- If you have access to the developers, discuss any existing process for reporting and tracking security issues.

- Check their error logs and see if any security-related issues are listed. If they are, what was the turnaround time to have them repaired, or were they repaired?

- If the developers are security-conscious, they will almost certainly want to prove to whoever receives the program that it hasn't been modified by a third party. The basic way of doing this is by separately sending you what is known as an MD5 hash. This is a distinctive number generated by another program from the application's code. If you generate a new MD5 hash from the code you receive, these numbers should match. If they don't match, something in the code has been altered. Developers with greater concern might generate a cryptographic signature incorporating the code. This will tell you who sent the code as well as indicate whether the program was altered.

- Depending on the type of program, does it allow a 21 CFR Part 11-compliant implementation or conform to a similar standard?

- Depending on the type of application, does it include a detailed audit trail and security logs?
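The hash comparison described in the list above might look something like the following sketch, which uses Python's standard `hashlib` module. The function names are hypothetical, and the file path and published hash would come from the project's download page. The algorithm is left as a parameter so that the same check works if a project publishes a different kind of digest, such as SHA-256, instead of MD5.

```python
import hashlib

def file_digest(path, algorithm="md5", chunk_size=65536):
    """Compute the hex digest of a file, reading it in chunks to limit memory use."""
    h = hashlib.new(algorithm)
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()

def matches_published(path, published_hex, algorithm="md5"):
    """Return True if the downloaded file's digest equals the published one."""
    return file_digest(path, algorithm) == published_hex.strip().lower()
```

A mismatch only tells you that the file differs from the one the hash was generated from; it does not tell you who changed it, which is where the cryptographic signature mentioned above comes in.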

Suggested ratings are:

- Zero – System shows no concern with security or operator tracking. Anyone can walk up to it and execute a function without having to log in. System doesn't support even a minimal audit trail. Any intermediate files are easily accessible and modifiable outside of the system.

- One – System shows some attempt at user control but supports only a minimal audit trail. It may support a user table, but it fails to follow best practices by allowing user records to be deleted. Audit trail is modifiable by power users.

- Two – Maintaining system security is emphasized in the user documentation. A detailed audit trail is maintained that logs all system changes and user activities. Application is distributed along with an MD5 hash or incorporated into an electronic signature by the developer.

Flexibility/Customizability

The goal of this topic is to identify how easily the functionality of this application can be altered or how capable it is of handling situations outside of its design parameters. Systems are generally designed to be either configurable or customizable, sometimes with a combination of both.

- Configurability – This refers to how much or how easily the functionality of the system can be altered by changing configuration settings. Configurable changes do not require any changes to the application code and generally simplify future application upgrades.

- Customizability – This refers to whether the functionality of the system must be altered by modifying the application's code. As we are targeting open-source systems, the initial assumption might be that they are all customizable; however, this can be affected by the type of license that the application is released under. More practically, how easily an application can be customized depends on how well it is designed and documented. While in theory you might be able to customize a system, if it is a mass of spaghetti code and poorly compartmentalized, it might be a nightmare to do. In any case, if you customize the system code, you may not be able to take advantage of any system upgrades without having to recreate the customizations in them.

- Extendability – While you won't find this term in most definitions, it describes a hybrid system that is both configurable and customizable. It is normally configured using the same approaches as a standard configurable system. However, the ability to be upgraded is retained by feeding any code customizations through an application programming interface (API). As long as this API is maintained between upgrades, any extension modules should continue to work.

In addition, a well designed application is usually modular, which makes program changes easier. In an ideal world, any application that you may have to customize will be specifically designed to make customization simple. There are a variety of ways of doing this. Perhaps the easiest, for an application that is designed to be modular, would be to support optional software plug-in modules that added extra functionality. Unless these were "off-the-shelf" modules, you would need to confirm that there was appropriate documentation regarding their design and use. This would most likely be done via an API, as discussed above. Depending on the system, you could transfer data through the API or have one system control another, the caveat again being that you need to have thorough documentation of the API and its capabilities.
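To make the plug-in idea more concrete, here is a minimal sketch of how an application might accept extension modules through a versioned API. Everything in it (`API_VERSION`, `register`, `run_plugins`, and the sample plug-in) is hypothetical and not drawn from any particular FLOSS project. The point is only that extensions which go through a stable interface need not touch the core code.

```python
from typing import Callable, Dict

API_VERSION = 1  # hypothetical version of the application's extension API

_plugins: Dict[str, Callable[[dict], dict]] = {}

def register(name, api_version):
    """Decorator that registers an extension built against the current API version."""
    def wrap(func):
        if api_version != API_VERSION:
            raise ValueError(f"{name} targets API v{api_version}, not v{API_VERSION}")
        _plugins[name] = func
        return func
    return wrap

def run_plugins(record):
    """Pass a record through every registered extension, in name order."""
    for name in sorted(_plugins):
        record = _plugins[name](record)
    return record

# A sample extension module: adds a derived field without modifying core code.
@register("celsius_to_kelvin", api_version=1)
def celsius_to_kelvin(record):
    out = dict(record)
    out["temp_k"] = record["temp_c"] + 273.15
    return out

print(run_plugins({"temp_c": 25.0}))
```

As long as the `register` and `run_plugins` interface stays the same between upgrades, an extension like this one would keep working, which is the property the Extendability item describes.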

In the majority of situations, I strongly encourage you to stick with a configurable system, assuming you can find one that meets your needs. Customizing a system is rarely justified unless you are working situationally. While almost everyone feels that their needs are unique, the reality is that a well-designed configurable system can generally meet your needs.

Suggested ratings are:

- Zero – Application does not support configuration and shows evidence of being difficult to customize, usually indicated by use of spaghetti code rather than modular design, poorly named variables and functions, along with cryptic or no embedded comments. In a worst-case situation, the source code has been deliberately obfuscated to make the system even less customizable.

- One – Application supports minor configuration capabilities or is moderately difficult to modify. The latter might be due to minimal application documentation or poor programming practices, but not deliberate obfuscation.

- Two – Application is highly configurable and accompanied by detailed documentation guiding the user through its configuration. Code is clearly documented and commented. It also follows good programming practices with highly modularized functionality, simplifying customization of the program's source code, ideally via an API.

Interoperability/Integration

Determine whether this software will work with the rest of the systems that you plan to use. Exactly what to check for is up to you, as you are the only one who has any idea what you will be doing with it. The following is a list of possible items that might conceivably fall under this heading:

- Does it understand the data and control protocols to talk to and control external equipment such as a drill press, telescope, or sewing machine (as appropriate)?

- Is it designed to conform to both electronic and physical standards to avoid being locked into a single supplier?

- Does it handle localization to avoid conflicts with local systems?

Suggested ratings are:

- Zero – System provides no support for integration with other applications. File formats and communication protocols used are not documented.

- One – System is not optimized for either interoperability or integration with other systems. However, it does use standard protocols so that other applications can interpret its activities. Application likely does not include an API or any existing API is undocumented.

- Two – Application is optimized for interoperability and integration with other systems. All interfaces and protocols, particularly for any existing API, are clearly documented and accompanied with sample code.

Legal/License issues

This section refers to the type of license that the application was released under and the associated legal and functional implications. With proprietary software, many people never bother to read the software license, either because they don't care or because they think they have no choice but to accept it. Be that as it may, when selecting a FLOSS application, it is wise to take the time to read the accompanying license: it can make a big difference in what you can do with the software. First, if the software has no license, legally you have no right to even download the software, let alone run it.[18][19]

While you have no control over which license the application was released under, you definitely control whether you wish to use it under the terms of the license. Which types of licenses are acceptable strongly depends on what you plan to do with the application. Do you intend to use the application as is or do you plan to modify it? If the latter, what do you plan to do with the modified code? Do you want to integrate this FLOSS application with another, either proprietary or open-source? Does this license clash with theirs? Your right to do any of these things is controlled by the license, so it must be considered very carefully, both in the light of what you want to do now and what you might want to do in the future.

One of the first things to do is to confirm that the application is even open-source; just being able to see the source code is insufficient. To qualify as open-source the license must comply with the 10 points listed in the Open Source Definition maintained by the Open Source Initiative (OSI).[20] Pulling just the headers, their web site lists these as the required criteria:

- Free redistribution

- Source code

- Derived works

- Integrity of the author's source code

- No discrimination against persons or groups

- No discrimination against fields of endeavor

- Distribution of licenses

- License must not be specific to a product

- License must not restrict other software

- License must be technology-neutral

While somewhat cryptic to look at cold, each of the headings is associated with a longer definition, which primarily boils down to the freedom to use, modify, and redistribute the software. For those wanting to know the justification for each item, there is also an annotated version of this definition.[21] At present, OSI recognizes 71 distinct Open Source licenses, not counting the WTFPL.[22]

One of the functions of the OSI is to review prospective licenses to determine whether they meet these criteria and are indeed open-source. The OSI web site maintains a list of popular licenses, along with links to all approved licenses[23], sorted by name or category. These lists include full copies of the licenses. While clearer than most legal documents, they can still be somewhat confusing, particularly if you are trying to select one. If the definitions seem to blur, you might want to check out the Software Licenses Explained in Plain English web page maintained by TL;DRLegal.[24] As long as the license is classified as an open-source license and you aren't planning to modify the application yourself or integrate it into other systems, you probably won't have any problems. However, if you have any uncertainty at all, it might be worth making the investment to discuss the license with an intellectual property lawyer who is familiar with OSS/FS before you inadvertently commit your organization to terms that conflict with its plans.

If your plans include the possible creation of complementary software, I suggest a quick read of Wheeler's essay on selecting a license.[25] The potential problem here is that most open-source and proprietary applications contain multiple libraries or sub-applications, each with their own license. Depending on which licenses the original developers used, they may be compatible with other applications you wish to use, or they may be incompatible. The following figure illustrates some of these complications:


Just to be absolutely clear, the licenses of FLOSS and proprietary applications generally disavow any type of warranty that the program will work and disclaim any liability for any damage or injuries that result from the program's use. Now, having said that, you may be able to purchase a warranty separately; just don't anticipate any legal recourse in the event of a system failure.

Suggested ratings are:

- Zero – Application does not include a license or license terms are unacceptable or incompatible with those of other applications being used.

- One – Application includes a license, but it contains potential conflicts with other licenses or allowable use that will need to be carefully reviewed.

- Two – License is fully open, allowing you to freely use the software.

Completing the evaluation

Summary

Glossary

References

- ↑ Deprez, Jean-Christophe; Alexandre, Simon (2008). "Comparing Assessment Methodologies for Free/Open Source Software: OpenBRR and QSOS". In Jedlitschka, Andreas; Salo, Outi. Product-Focused Software Process Improvement. Springer. pp. 189–203. doi:10.1007/978-3-540-69566-0_17. ISBN 9783540695660.

- ↑ Neteler, Markus; Bowman, M. Hamish; Landa, Martin; Metz, Markus (May 2012). "GRASS GIS: A multi-purpose open source GIS". Environmental Modelling & Software 31: 124–130. doi:10.1016/j.envsoft.2011.11.014.

- ↑ Khanine, Dmitri (7 May 2015). ""Meaningful Use" Regulations of Medical Information in Health IT". Toad World - Oracle Community. Dell Software, Inc. http://www.toadworld.com/platforms/oracle/b/weblog/archive/2015/05/07/quot-meaningful-use-quot-regulations-of-medical-information-in-health-it. Retrieved 12 June 2015.

- ↑ Khanine, Dmitri (6 May 2015). "Open-Source Medical Record Systems of 2015". Toad World - Oracle Community. Dell Software, Inc. http://www.toadworld.com/platforms/oracle/b/weblog/archive/2015/05/06/open-source-medical-record-systems-of-2015. Retrieved 28 May 2015.

- ↑ Davis, Ashley (2008). "Enterprise Resource Planning Under Open Source Software". In Ferran, Carlos; Salim, Ricardo. Enterprise Resource Planning for Global Economies: Managerial Issues and Challenges. Hershey, PA: IGI Global. doi:10.4018/978-1-59904-531-3.ch004. ISBN 9781599045313.

- ↑ Sarrab, Mohamed; Rehman, Osama M. Hussain (2013). "Selection Criteria of Open Source Software: First Stage for Adoption". International Journal of Information Processing and Management 4 (4): 51–58. doi:10.4156/ijipm.vol4.issue4.6.

- ↑ 7.0 7.1 7.2 Silva, Chamindra de (20 December 2009). "10 questions to ask when selecting open source products for your enterprise". TechRepublic. CBS Interactive. http://www.techrepublic.com/blog/10-things/10-questions-to-ask-when-selecting-open-source-products-for-your-enterprise/. Retrieved 13 April 2015.

- ↑ Foote, Amanda (2010). "The Myth of Free: The Hidden Costs of Open Source Software". Dalhousie Journal of Interdisciplinary Management 6 (Spring 2010): 1–9. doi:10.5931/djim.v6i1.31.

- ↑ Heinlein, Robert A. (1997) [1966]. The Moon Is a Harsh Mistress. New York, NY: Tom Doherty Associates. pp. 8–9. ISBN 9780312863555.

- ↑ Fleming, Ian (2014). "ISO 9126 Software Quality Characteristics". SQA Definition. http://www.sqa.net/iso9126.html. Retrieved 18 June 2015.

- ↑ Taylor, Dave (2015). "Murphy's Laws". Dave Taylor's Educational & Guidance Counseling Services. http://davetgc.com/Murphys_Law.html. Retrieved 08 August 2015.

- ↑ Wheeler, David A. (5 August 2011). "How to Evaluate Open Source Software / Free Software (OSS/FS) Programs". dwheeler.com. http://www.dwheeler.com/oss_fs_eval.html. Retrieved 19 March 2015.

- ↑ 13.0 13.1 Abran, Alain; Khelifi, Adel; Suryn, Witold; Seffah, Ahmed (2003). "Usability Meanings and Interpretations in ISO Standards". Software Quality Journal 11 (4): 325–338. doi:10.1023/A:1025869312943.

- ↑ Andreasen, M.S.; Nielsen, H.V.; Schrøder, S.O.; Stage, J. (2006). "Usability in open source software development: Opinions and practice" (PDF). Information Technology and Control 35 (3A): 303-312. http://itc.ktu.lt/itc353/Stage353.pdf.

- ↑ Saxena, S.; Dubey, S.K. (January 2013). "Impact of Software Design Aspects on Usability". International Journal of Computer Applications 61 (22): 48-53. doi:10.5120/10233-5043. http://www.ijcaonline.org/archives/volume61/number22/10233-5043.

- ↑ AllAboutUX.org volunteers (8 October 2010). "User experience definitions". All About UX. http://www.allaboutux.org/ux-definitions. Retrieved 08 August 2015.

- ↑ Gube, Jacob (5 October 2010). "What Is User Experience Design? Overview, Tools And Resources". Smashing Magazine. Smashing Magazine GmbH. http://www.smashingmagazine.com/2010/10/what-is-user-experience-design-overview-tools-and-resources/. Retrieved 08 August 2015.

- ↑ Reitz, Kenneth (2014). "Choosing a License". The Hitchhiker's Guide to Python!. http://docs.python-guide.org/en/latest/writing/license/. Retrieved 13 May 2015.

- ↑ Atwood, Jeff (3 April 2007). "Pick a License, Any License". Coding Horror: Programming and Human Factors. http://blog.codinghorror.com/pick-a-license-any-license/. Retrieved 13 May 2015.

- ↑ "The Open Source Definition". Open Source Initiative. 2015. http://opensource.org/osd. Retrieved 17 June 2015.

- ↑ "The Open Source Definition (Annotated)". Open Source Initiative. 2015. http://opensource.org/docs/definition.php. Retrieved 28 May 2015.

- ↑ Hocevar, Sam (2015). "WTFPL – Do What the Fuck You Want to Public License". WTFPL.net. http://www.wtfpl.net/. Retrieved 16 June 2015.

- ↑ "Licenses & Standards". Open Source Initiative. 2015. http://opensource.org/licenses. Retrieved 13 May 2015.

- ↑ "TL;DRLegal - Software Licenses Explained in Plain English". FOSSA, Inc. 2015. https://tldrlegal.com/. Retrieved 16 June 2015.

- ↑ Wheeler, David A. (16 February 2014). "Make Your Open Source Software GPL-Compatible. Or Else". dwheeler.com. http://www.dwheeler.com/essays/gpl-compatible.html. Retrieved 20 March 2015.

- ↑ Wheeler, David A. (27 September 2007). "The Free-Libre / Open Source Software (FLOSS) License Slide". dwheeler.com. http://www.dwheeler.com/essays/floss-license-slide.html. Retrieved 28 May 2015.

Notes

This article has not officially been published in a journal. However, this presentation is largely faithful to the original paper. The content has been edited for grammar, punctuation, and spelling. Additional error correction of a few reference URLs and types as well as cleaning up of the glossary also occurred. Redundancies and references to entities that don't offer open-source software were removed from the FLOSS examples in Table 2. DOIs and other identifiers have been added to the references to make them more useful. This article is being made available for the first time under the Creative Commons Attribution-ShareAlike 4.0 International license, the same license used on this wiki.