Difference between revisions of "Journal:Critical analysis of the impact of AI on the patient–physician relationship: A multi-stakeholder qualitative study"

Shawndouglas (talk | contribs) |

Shawndouglas (talk | contribs) (Finished adding rest of content.) |

||

| (One intermediate revision by the same user not shown) | |||

| Line 19: | Line 19: | ||

|download = [https://journals.sagepub.com/doi/reader/10.1177/20552076231220833 https://journals.sagepub.com/doi/reader/10.1177/20552076231220833] (PDF) | |download = [https://journals.sagepub.com/doi/reader/10.1177/20552076231220833 https://journals.sagepub.com/doi/reader/10.1177/20552076231220833] (PDF) | ||

}} | }} | ||

==Abstract== | ==Abstract== | ||

'''Objective''': This qualitative study aims to present the aspirations, expectations, and critical analysis of the potential for [[artificial intelligence]] (AI) to transform the patient–physician relationship, according to multi-stakeholder insight. | '''Objective''': This qualitative study aims to present the aspirations, expectations, and critical analysis of the potential for [[artificial intelligence]] (AI) to transform the patient–physician relationship, according to multi-stakeholder insight. | ||

| Line 38: | Line 31: | ||

==Introduction== | ==Introduction== | ||

Recent developments in [[large language model]]s (LLM) have attracted public attention in regard to [[artificial intelligence]] (AI) development, raising many hopes among the wider public as well as healthcare professionals. After [[ChatGPT]] was launched in November 2022, producing human-like responses, it reached 100 million users in the following two months. | Recent developments in [[large language model]]s (LLM) have attracted public attention in regard to [[artificial intelligence]] (AI) development, raising many hopes among the wider public as well as healthcare professionals. After [[ChatGPT]] was launched in November 2022, producing human-like responses, it reached 100 million users in the following two months.<ref name=":0">{{Cite journal |last=Meskó |first=Bertalan |last2=Topol |first2=Eric J. |date=2023-07-06 |title=The imperative for regulatory oversight of large language models (or generative AI) in healthcare |url=https://www.nature.com/articles/s41746-023-00873-0 |journal=npj Digital Medicine |language=en |volume=6 |issue=1 |pages=120 |doi=10.1038/s41746-023-00873-0 |issn=2398-6352 |pmc=PMC10326069 |pmid=37414860}}</ref> Many suggestions for its potential applications in healthcare have appeared on social media. These have ranged from using AI to write outpatient clinic letters to insurance companies, thereby saving time for the practicing physician, to offering advice to physicians on how to diagnose a patient.<ref>{{Cite web |last=Johnson, K. 
|date=24 April 2023 |title=ChatGPT Can Help Doctors–and Hurt Patients |work=Wired |url=https://www.wired.com/story/chatgpt-can-help-doctors-and-hurt-patients/ |accessdate=05 July 2023}}</ref> An AI-enabled, chatbot-based symptom checker can be used as a self-triaging and patient monitoring tool, or AI can be used for translating and explaining medical notes or making diagnoses in a patient-friendly way.<ref name=":1">{{Cite journal |last=Dave |first=Tirth |last2=Athaluri |first2=Sai Anirudh |last3=Singh |first3=Satyam |date=2023-05-04 |title=ChatGPT in medicine: an overview of its applications, advantages, limitations, future prospects, and ethical considerations |url=https://www.frontiersin.org/articles/10.3389/frai.2023.1169595/full |journal=Frontiers in Artificial Intelligence |volume=6 |pages=1169595 |doi=10.3389/frai.2023.1169595 |issn=2624-8212 |pmc=PMC10192861 |pmid=37215063}}</ref> Therefore, the introduction of ChatGPT represented a potential benefit not only for healthcare professionals but also for patients themselves, particularly with the improved version of GPT-4. In addition to ChatGPT, various other LLMs are at different stages of development, for example, BioGPT (Massachusetts Institute of Technology, Boston, MA, USA), LaMDA (Google, Mountain View, CA, USA), Sparrow (DeepMind, London, UK), Pangu Alpha (Huawei, Shenzhen, China), OPT-IML (Meta, Menlo Park, CA, USA), and Megatron-Turing NLG (Nvidia, Santa Clara, CA, USA).<ref name=":2">{{Cite journal |last=Li |first=Hanzhou |last2=Moon |first2=John T |last3=Purkayastha |first3=Saptarshi |last4=Celi |first4=Leo Anthony |last5=Trivedi |first5=Hari |last6=Gichoya |first6=Judy W |date=2023-06 |title=Ethics of large language models in medicine and medical research |url=https://linkinghub.elsevier.com/retrieve/pii/S2589750023000833 |journal=The Lancet Digital Health |language=en |volume=5 |issue=6 |pages=e333–e335 |doi=10.1016/S2589-7500(23)00083-3}}</ref> | ||

However, despite the wealth of potential applications for LLMs, including cost-saving and time-saving benefits that can be used to increase productivity, there has been widespread acknowledgement that they must be used wisely. | However, despite the wealth of potential applications for LLMs, including cost-saving and time-saving benefits that can be used to increase productivity, there has been widespread acknowledgement that they must be used wisely.<ref name=":1" /> This call for critical awareness mostly relates to underlying ethical issues such as transparency, accountability, and fairness.<ref name=":3">{{Cite journal |last=De Angelis |first=Luigi |last2=Baglivo |first2=Francesco |last3=Arzilli |first3=Guglielmo |last4=Privitera |first4=Gaetano Pierpaolo |last5=Ferragina |first5=Paolo |last6=Tozzi |first6=Alberto Eugenio |last7=Rizzo |first7=Caterina |date=2023-04-25 |title=ChatGPT and the rise of large language models: the new AI-driven infodemic threat in public health |url=https://www.frontiersin.org/articles/10.3389/fpubh.2023.1166120/full |journal=Frontiers in Public Health |volume=11 |pages=1166120 |doi=10.3389/fpubh.2023.1166120 |issn=2296-2565 |pmc=PMC10166793 |pmid=37181697}}</ref> Critical thinking is essential for physicians to avoid relying blindly on the recommendations of AI algorithms, without applying clinical reasoning or reviewing current best practices, which could compromise the ethical principles of beneficence and non-maleficence.<ref>{{Cite journal |last=Beltrami |first=Eric J. 
|last2=Grant-Kels |first2=Jane Margaret |date=2023-03 |title=Consulting ChatGPT: Ethical dilemmas in language model artificial intelligence |url=https://linkinghub.elsevier.com/retrieve/pii/S019096222300364X |journal=Journal of the American Academy of Dermatology |language=en |pages=S019096222300364X |doi=10.1016/j.jaad.2023.02.052}}</ref> Moreover, when LLMs are used in the healthcare context, [[Protected health information|sensitive health information]] fed into the algorithmic black box might be met with a lack of transparency about how commercial companies will use or store such [[information]]. In other words, such information might be made available to company employees or potential hackers.<ref name=":2" /> In addition, from a [[public health]] perspective, using ChatGPT could potentially lead to an "AI-driven infodemic," producing a vast volume of scientific articles, fake news, and misinformation.<ref name=":3" /> Therefore, all of these challenges<ref>{{Cite journal |last=Minssen |first=Timo |last2=Vayena |first2=Effy |last3=Cohen |first3=I. Glenn |date=2023-07-25 |title=The Challenges for Regulating Medical Use of ChatGPT and Other Large Language Models |url=https://jamanetwork.com/journals/jama/fullarticle/2807167 |journal=JAMA |language=en |volume=330 |issue=4 |pages=315 |doi=10.1001/jama.2023.9651 |issn=0098-7484}}</ref> necessitate further regulation of LLMs in healthcare in order to minimize the potential harms and foster trust in AI among patients and healthcare providers.<ref name=":0" /> | ||

Interestingly, healthcare professionals have demonstrated openness and readiness to adopt generative AI, mostly because they are excessively burdened by administrative tasks | Interestingly, healthcare professionals have demonstrated openness and readiness to adopt generative AI, mostly because they are excessively burdened by administrative tasks<ref>{{Cite web |last=Brumfiel, G. |date=05 April 2023 |title=Doctors are drowning in paperwork. Some companies claim AI can help |work=Health Shots |url=https://www.npr.org/sections/health-shots/2023/04/05/1167993888/chatgpt-medicine-artificial-intelligence-healthcare |publisher=NPR |accessdate=06 July 2023}}</ref> and are desperately seeking a practical solution. Several medical specializations have been identified as benefiting from the use of medical AI, including general practitioners<ref name=":4">{{Cite journal |last=Buck |first=Christoph |last2=Doctor |first2=Eileen |last3=Hennrich |first3=Jasmin |last4=Jöhnk |first4=Jan |last5=Eymann |first5=Torsten |date=2022-01-27 |title=General Practitioners’ Attitudes Toward Artificial Intelligence–Enabled Systems: Interview Study |url=https://www.jmir.org/2022/1/e28916 |journal=Journal of Medical Internet Research |language=en |volume=24 |issue=1 |pages=e28916 |doi=10.2196/28916 |issn=1438-8871 |pmc=PMC8832268 |pmid=35084342}}</ref>, nephrologists<ref name=":5">{{Cite journal |last=Samhammer |first=David |last2=Roller |first2=Roland |last3=Hummel |first3=Patrik |last4=Osmanodja |first4=Bilgin |last5=Burchardt |first5=Aljoscha |last6=Mayrdorfer |first6=Manuel |last7=Duettmann |first7=Wiebke |last8=Dabrock |first8=Peter |date=2022-12-20 |title=“Nothing works without the doctor:” Physicians’ perception of clinical decision-making and artificial intelligence |url=https://www.frontiersin.org/articles/10.3389/fmed.2022.1016366/full |journal=Frontiers in Medicine |volume=9 |pages=1016366 |doi=10.3389/fmed.2022.1016366 |issn=2296-858X |pmc=PMC9807757 |pmid=36606050}}</ref>, nuclear 
medicine practitioners<ref name=":6">{{Cite journal |last=Alberts |first=Ian L. |last2=Mercolli |first2=Lorenzo |last3=Pyka |first3=Thomas |last4=Prenosil |first4=George |last5=Shi |first5=Kuangyu |last6=Rominger |first6=Axel |last7=Afshar-Oromieh |first7=Ali |date=2023-05 |title=Large language models (LLM) and ChatGPT: what will the impact on nuclear medicine be? |url=https://link.springer.com/10.1007/s00259-023-06172-w |journal=European Journal of Nuclear Medicine and Molecular Imaging |language=en |volume=50 |issue=6 |pages=1549–1552 |doi=10.1007/s00259-023-06172-w |issn=1619-7070 |pmc=PMC9995718 |pmid=36892666}}</ref>, and [[Pathology|pathologists]]<ref name=":7">{{Cite journal |last=Drogt |first=Jojanneke |last2=Milota |first2=Megan |last3=Vos |first3=Shoko |last4=Bredenoord |first4=Annelien |last5=Jongsma |first5=Karin |date=2022-11 |title=Integrating artificial intelligence in pathology: a qualitative interview study of users' experiences and expectations |url=https://linkinghub.elsevier.com/retrieve/pii/S0893395222002204 |journal=Modern Pathology |language=en |volume=35 |issue=11 |pages=1540–1550 |doi=10.1038/s41379-022-01123-6 |pmc=PMC9596368 |pmid=35927490}}</ref>, with the technology reportedly having a direct impact on physicians’ roles, responsibilities, and competencies.<ref name=":7" /><ref name=":8">{{Cite journal |last=Tanaka |first=Masashi |last2=Matsumura |first2=Shinji |last3=Bito |first3=Seiji |date=2023-05-18 |title=Roles and Competencies of Doctors in Artificial Intelligence Implementation: Qualitative Analysis Through Physician Interviews |url=https://formative.jmir.org/2023/1/e46020 |journal=JMIR Formative Research |language=en |volume=7 |pages=e46020 |doi=10.2196/46020 |issn=2561-326X |pmc=PMC10236283 |pmid=37200074}}</ref><ref name=":9">{{Cite journal |last=Russell |first=Regina G. |last2=Lovett Novak |first2=Laurie |last3=Patel |first3=Mehool |last4=Garvey |first4=Kim V. 
|last5=Craig |first5=Kelly Jean Thomas |last6=Jackson |first6=Gretchen P. |last7=Moore |first7=Don |last8=Miller |first8=Bonnie M. |date=2023-03 |title=Competencies for the Use of Artificial Intelligence–Based Tools by Health Care Professionals |url=https://journals.lww.com/10.1097/ACM.0000000000004963 |journal=Academic Medicine |language=en |volume=98 |issue=3 |pages=348–356 |doi=10.1097/ACM.0000000000004963 |issn=1040-2446}}</ref> Although the above-mentioned potential has been recognized, various studies have noted that the implementation of medical AI would bring about certain challenges<ref name=":10">{{Cite journal |last=Petersson |first=Lena |last2=Larsson |first2=Ingrid |last3=Nygren |first3=Jens M. |last4=Nilsen |first4=Per |last5=Neher |first5=Margit |last6=Reed |first6=Julie E. |last7=Tyskbo |first7=Daniel |last8=Svedberg |first8=Petra |date=2022-12 |title=Challenges to implementing artificial intelligence in healthcare: a qualitative interview study with healthcare leaders in Sweden |url=https://bmchealthservres.biomedcentral.com/articles/10.1186/s12913-022-08215-8 |journal=BMC Health Services Research |language=en |volume=22 |issue=1 |pages=850 |doi=10.1186/s12913-022-08215-8 |issn=1472-6963 |pmc=PMC9250210 |pmid=35778736}}</ref> and barriers<ref name=":11">{{Cite journal |last=Strohm |first=Lea |last2=Hehakaya |first2=Charisma |last3=Ranschaert |first3=Erik R. |last4=Boon |first4=Wouter P. C. |last5=Moors |first5=Ellen H. M. 
|date=2020-10 |title=Implementation of artificial intelligence (AI) applications in radiology: hindering and facilitating factors |url=https://link.springer.com/10.1007/s00330-020-06946-y |journal=European Radiology |language=en |volume=30 |issue=10 |pages=5525–5532 |doi=10.1007/s00330-020-06946-y |issn=0938-7994 |pmc=PMC7476917 |pmid=32458173}}</ref>, such as physicians’ trust in AI, user-friendliness<ref name=":12">{{Cite journal |last=Van Cauwenberge |first=Daan |last2=Van Biesen |first2=Wim |last3=Decruyenaere |first3=Johan |last4=Leune |first4=Tamara |last5=Sterckx |first5=Sigrid |date=2022-12 |title=“Many roads lead to Rome and the Artificial Intelligence only shows me one road”: an interview study on physician attitudes regarding the implementation of computerised clinical decision support systems |url=https://bmcmedethics.biomedcentral.com/articles/10.1186/s12910-022-00787-8 |journal=BMC Medical Ethics |language=en |volume=23 |issue=1 |pages=50 |doi=10.1186/s12910-022-00787-8 |issn=1472-6939 |pmc=PMC9077861 |pmid=35524301}}</ref>, or tensions between the human-centric model and the technology-centric model, that is, upskilling and deskilling<ref>{{Cite journal |last=Aquino |first=Yves Saint James |last2=Rogers |first2=Wendy A. |last3=Braunack-Mayer |first3=Annette |last4=Frazer |first4=Helen |last5=Win |first5=Khin Than |last6=Houssami |first6=Nehmat |last7=Degeling |first7=Christopher |last8=Semsarian |first8=Christopher |last9=Carter |first9=Stacy M. |date=2023-01 |title=Utopia versus dystopia: Professional perspectives on the impact of healthcare artificial intelligence on clinical roles and skills |url=https://linkinghub.elsevier.com/retrieve/pii/S1386505622002179 |journal=International Journal of Medical Informatics |language=en |volume=169 |pages=104903 |doi=10.1016/j.ijmedinf.2022.104903}}</ref>, which will further affect the (non-)acceptance of AI-based tools.<ref name=":12" /> | ||

===Aims=== | ===Aims=== | ||

| Line 48: | Line 41: | ||

==Methods== | ==Methods== | ||

This study was conducted from June to December 2021 as a multi-stakeholder (''n'' = 75) qualitative study. It employs an anticipatory ethics approach, an innovative form of ethical reasoning that is applied to the analysis of potential mid-term to long-term implications and outcomes of technological innovation | This study was conducted from June to December 2021 as a multi-stakeholder (''n'' = 75) qualitative study. It employs an anticipatory ethics approach, an innovative form of ethical reasoning that is applied to the analysis of potential mid-term to long-term implications and outcomes of technological innovation<ref>{{Cite journal |last=York |first=Emily |last2=Conley |first2=Shannon N. |date=2020-12 |title=Creative Anticipatory Ethical Reasoning with Scenario Analysis and Design Fiction |url=https://link.springer.com/10.1007/s11948-020-00253-x |journal=Science and Engineering Ethics |language=en |volume=26 |issue=6 |pages=2985–3016 |doi=10.1007/s11948-020-00253-x |issn=1353-3452}}</ref>, and sociology of expectations, focusing on the role of expectations in shaping scientific and technological change.<ref>{{Cite journal |last=Borup |first=Mads |last2=Brown |first2=Nik |last3=Konrad |first3=Kornelia |last4=Van Lente |first4=Harro |date=2006-07 |title=The sociology of expectations in science and technology |url=http://www.tandfonline.com/doi/abs/10.1080/09537320600777002 |journal=Technology Analysis & Strategic Management |language=en |volume=18 |issue=3-4 |pages=285–298 |doi=10.1080/09537320600777002 |issn=0953-7325}}</ref><ref>{{Cite journal |last=Brown |first=Nik |last2=Michael |first2=Mike |date=2003-03 |title=A Sociology of Expectations: Retrospecting Prospects and Prospecting Retrospects |url=http://www.tandfonline.com/doi/abs/10.1080/0953732032000046024 |journal=Technology Analysis & Strategic Management |language=en |volume=15 |issue=1 |pages=3–18 |doi=10.1080/0953732032000046024 |issn=0953-7325}}</ref> These are the theoretical frameworks underpinning 
the design of the qualitative study, in which the questions were followed by two scenarios set in 2030 and 2023 to stimulate discussions. Although both referred to the digital health context, the first scenario focused on the use of an AI-based virtual assistant, while the second focused on self-monitoring devices. This article focuses only on the first scenario (see Appendix I), as it was embedded in the clinical setting and depicts future care provision and the transformation of healthcare. The study follows the consolidated criteria for reporting qualitative research (COREQ) guidelines<ref>{{Cite journal |last=Tong |first=A. |last2=Sainsbury |first2=P. |last3=Craig |first3=J. |date=2007-09-16 |title=Consolidated criteria for reporting qualitative research (COREQ): a 32-item checklist for interviews and focus groups |url=https://academic.oup.com/intqhc/article-lookup/doi/10.1093/intqhc/mzm042 |journal=International Journal for Quality in Health Care |language=en |volume=19 |issue=6 |pages=349–357 |doi=10.1093/intqhc/mzm042 |issn=1353-4505}}</ref> (see Appendix II). It was approved by the Catholic University of Croatia Ethics Committee (no. 498-03-01-04/2-19-02). | ||

===Participants and recruitment=== | ===Participants and recruitment=== | ||

| Line 68: | Line 61: | ||

===Data collection and analysis=== | ===Data collection and analysis=== | ||

Semi-structured interviews were conducted both in-person (at locations convenient for the participants or at the research group's work office) and online, using the Zoom platform, by researchers experienced in qualitative research. Only the participant and the researcher attended the interviews. The initial interview guide was based on the authors’ previous desk research on recognized ethical, legal, and social issues in the development and deployment of AI in medicine. | Semi-structured interviews were conducted both in-person (at locations convenient for the participants or at the research group's work office) and online, using the Zoom platform, by researchers experienced in qualitative research. Only the participant and the researcher attended the interviews. The initial interview guide was based on the authors’ previous desk research on recognized ethical, legal, and social issues in the development and deployment of AI in medicine.<ref name=":13">{{Cite journal |last=Čartolovni |first=Anto |last2=Tomičić |first2=Ana |last3=Lazić Mosler |first3=Elvira |date=2022-05 |title=Ethical, legal, and social considerations of AI-based medical decision-support tools: A scoping review |url=https://linkinghub.elsevier.com/retrieve/pii/S1386505622000521 |journal=International Journal of Medical Informatics |language=en |volume=161 |pages=104738 |doi=10.1016/j.ijmedinf.2022.104738}}</ref> It was inspired by similar studies<ref name=":14">{{Cite journal |last=Mabillard |first=Vincent |last2=Demartines |first2=Nicolas |last3=Joliat |first3=Gaëtan-Romain |date=2022-10-19 |title=How Can Reasoned Transparency Enhance Co-Creation in Healthcare and Remedy the Pitfalls of Digitization in Doctor-Patient Relationships? 
|url=https://pubmed.ncbi.nlm.nih.gov/33590744 |journal=International Journal of Health Policy and Management |volume=11 |issue=10 |pages=1986–1990 |doi=10.34172/ijhpm.2020.263 |issn=2322-5939 |pmc=9808292 |pmid=33590744}}</ref><ref name=":15">{{Cite journal |last=Sauerbrei |first=Aurelia |last2=Kerasidou |first2=Angeliki |last3=Lucivero |first3=Federica |last4=Hallowell |first4=Nina |date=2023-04-20 |title=The impact of artificial intelligence on the person-centred, doctor-patient relationship: some problems and solutions |url=https://bmcmedinformdecismak.biomedcentral.com/articles/10.1186/s12911-023-02162-y |journal=BMC Medical Informatics and Decision Making |language=en |volume=23 |issue=1 |pages=73 |doi=10.1186/s12911-023-02162-y |issn=1472-6947 |pmc=PMC10116477 |pmid=37081503}}</ref><ref name=":16">{{Cite journal |last=Carter |first=Stacy M. |last2=Rogers |first2=Wendy |last3=Win |first3=Khin Than |last4=Frazer |first4=Helen |last5=Richards |first5=Bernadette |last6=Houssami |first6=Nehmat |date=2020-02 |title=The ethical, legal and social implications of using artificial intelligence systems in breast cancer care |url=https://linkinghub.elsevier.com/retrieve/pii/S0960977619305648 |journal=The Breast |language=en |volume=49 |pages=25–32 |doi=10.1016/j.breast.2019.10.001 |pmc=PMC7375671 |pmid=31677530}}</ref> and was pilot-tested on a group of 23 stakeholders. Later, the interview guide was adapted as the study continued to take account of emerging themes until data saturation was reached. All interviews were recorded using a portable audio recorder and later transcribed; the average length of interviews was 47 minutes. Transcripts were not provided to participants for comments or corrections. The transcribed interviews were entered into the NVivo qualitative data analysis software. Researchers familiarized themselves with the material by reading the transcripts and taking notes to gain deeper insights into the data. 
Next, a thematic analysis was conducted.<ref name=":17">{{Cite journal |last=Holzinger |first=Andreas |last2=Langs |first2=Georg |last3=Denk |first3=Helmut |last4=Zatloukal |first4=Kurt |last5=Müller |first5=Heimo |date=2019 |title=Causability and explainability of artificial intelligence in medicine |url=https://pubmed.ncbi.nlm.nih.gov/32089788 |journal=Wiley Interdisciplinary Reviews. Data Mining and Knowledge Discovery |volume=9 |issue=4 |pages=e1312 |doi=10.1002/widm.1312 |issn=1942-4787 |pmc=7017860 |pmid=32089788}}</ref> Following that, an open coding process was initiated for the interviews (''n'' = 11). Based on the initial codes, the researchers agreed on thematic categories<ref>{{Cite journal |last=Burnard |first=Philip |date=1991-12 |title=A method of analysing interview transcripts in qualitative research |url=https://linkinghub.elsevier.com/retrieve/pii/026069179190009Y |journal=Nurse Education Today |language=en |volume=11 |issue=6 |pages=461–466 |doi=10.1016/0260-6917(91)90009-Y}}</ref>, leading to the development of the final codebook, which was then used to code the remaining interviews. Finally, the researchers combined and discussed themes for comparison and reached a consensus on how to define and use them. All interviews were analyzed in the original language (Croatian), and the quotes presented in this article have been translated into English. | ||

==Results== | ==Results== | ||

| Line 80: | Line 73: | ||

| colspan="6" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 1.''' Participants’ socio-demographic characteristics. | | colspan="6" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 1.''' Participants’ socio-demographic characteristics. | ||

|- | |- | ||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px; | ! colspan="2" style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Socio-demographic characteristics | ||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Patients | ! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Patients | ||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Physicians | ! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Physicians | ||

| Line 86: | Line 79: | ||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Σ | ! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Σ | ||

|- | |- | ||

| style="background-color:white; padding-left:10px; padding-right:10px; | | rowspan="3" style="background-color:white; padding-left:10px; padding-right:10px;" |Gender | ||

| style="background-color:white; padding-left:10px; padding-right:10px;" |M | | style="background-color:white; padding-left:10px; padding-right:10px;" |M | ||

| style="background-color:white; padding-left:10px; padding-right:10px;" |5 | | style="background-color:white; padding-left:10px; padding-right:10px;" |5 | ||

| Line 105: | Line 98: | ||

| style="background-color:white; padding-left:10px; padding-right:10px;" |38 | | style="background-color:white; padding-left:10px; padding-right:10px;" |38 | ||

|- | |- | ||

| style="background-color:white; padding-left:10px; padding-right:10px; | | rowspan="7" style="background-color:white; padding-left:10px; padding-right:10px;" |Age | ||

| style="background-color:white; padding-left:10px; padding-right:10px;" |18–24 | | style="background-color:white; padding-left:10px; padding-right:10px;" |18–24 | ||

| style="background-color:white; padding-left:10px; padding-right:10px;" |2 | | style="background-color:white; padding-left:10px; padding-right:10px;" |2 | ||

| Line 148: | Line 141: | ||

| style="background-color:white; padding-left:10px; padding-right:10px;" |38 | | style="background-color:white; padding-left:10px; padding-right:10px;" |38 | ||

|- | |- | ||

| style="background-color:white; padding-left:10px; padding-right:10px; | | rowspan="4" style="background-color:white; padding-left:10px; padding-right:10px;" |Location | ||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Urban center | | style="background-color:white; padding-left:10px; padding-right:10px;" |Urban center | ||

| style="background-color:white; padding-left:10px; padding-right:10px;" |8 | | style="background-color:white; padding-left:10px; padding-right:10px;" |8 | ||

| Line 173: | Line 166: | ||

| style="background-color:white; padding-left:10px; padding-right:10px;" |38 | | style="background-color:white; padding-left:10px; padding-right:10px;" |38 | ||

|- | |- | ||

| style="background-color:white; padding-left:10px; padding-right:10px; | | rowspan="2" style="background-color:white; padding-left:10px; padding-right:10px;" |Regular use of technology for health monitoring | ||

| style="background-color:white; padding-left:10px; padding-right:10px;" |Yes | | style="background-color:white; padding-left:10px; padding-right:10px;" |Yes | ||

| style="background-color:white; padding-left:10px; padding-right:10px;" |5 | | style="background-color:white; padding-left:10px; padding-right:10px;" |5 | ||

| Line 210: | Line 203: | ||

<blockquote>I think the most important thing for me is that we communicate about everything. To talk. To trust what he tells me. (Patient 5) | <blockquote>I think the most important thing for me is that we communicate about everything. To talk. To trust what he tells me. (Patient 5) | ||

It's important that he{{Efn|In Croatian, the noun physician (liječnik) is masculine; therefore, the patient refers to the physician as a male.}} listens to me and doesn't jump to conclusions before I fully explain the issue. (Patient 9) | It's important that he{{Efn|In Croatian, the noun physician (liječnik) is masculine; therefore, the patient refers to the physician as a male.}} listens to me and doesn't jump to conclusions before I fully explain the issue. (Patient 9) | ||

It is crucial that he listen to me and that I listen to him. That's really the most important thing because if he does not listen to me, if it only gets heard in one ear, coming here is a waste of time. (Patient 12)</blockquote> | It is crucial that he listen to me and that I listen to him. That's really the most important thing because if he does not listen to me, if it only gets heard in one ear, coming here is a waste of time. (Patient 12)</blockquote> | ||

| Line 218: | Line 212: | ||

The most crucial aspect is establishing easy communication and trust in what we do. (Physician 12) | The most crucial aspect is establishing easy communication and trust in what we do. (Physician 12) | ||

Actually, it's always about honesty. Knowing that, when patients speak, they tell the exact truth and don't deceive. That's an essential factor for me. Another important factor is the patient's capacity to communicate clearly. To correctly interpret their symptoms and condition of health. To convey a comprehensive picture to me. (Physician 11)</blockquote> | Actually, it's always about honesty. Knowing that, when patients speak, they tell the exact truth and don't deceive. That's an essential factor for me. Another important factor is the patient's capacity to communicate clearly. To correctly interpret their symptoms and condition of health. To convey a comprehensive picture to me. (Physician 11)</blockquote> | ||

| Line 245: | Line 240: | ||

Today, they are mostly on the computer, and they have to type everything, so they have very little time to dedicate to us patients. (Patient 1)<br /><br /> | Today, they are mostly on the computer, and they have to type everything, so they have very little time to dedicate to us patients. (Patient 1)<br /><br /> | ||

Somehow, since computers were introduced in healthcare, a visit to one physician, a neurologist, for example, would be like this: I spent 5 minutes with him. Out of those 5 minutes, he would talk to me for a whole minute, and then spend 4 minutes typing on his computer. (Patient 13) | Somehow, since computers were introduced in healthcare, a visit to one physician, a neurologist, for example, would be like this: I spent 5 minutes with him. Out of those 5 minutes, he would talk to me for a whole minute, and then spend 4 minutes typing on his computer. (Patient 13) | ||

I want to be polite, look at him, and talk to him, but on the other hand, you see this “click, click”, and if I don't click, I can't proceed. And then he says, “Why are you constantly looking at that [computer]?” – I mean, it's impolite, right? But I can't continue working because I have to type this and move on to the next… (Hospital manager 4)</blockquote> | I want to be polite, look at him, and talk to him, but on the other hand, you see this “click, click”, and if I don't click, I can't proceed. And then he says, “Why are you constantly looking at that [computer]?” – I mean, it's impolite, right? But I can't continue working because I have to type this and move on to the next… (Hospital manager 4)</blockquote> | ||

| Line 263: | Line 259: | ||

It can be an excellent tool for speeding up the diagnostic process, increasing accuracy, and making work easier. (Physician 2)<br /><br /> | It can be an excellent tool for speeding up the diagnostic process, increasing accuracy, and making work easier. (Physician 2)<br /><br /> | ||

Many of our routine tasks, which consume our daily time, can be replaced. (Physician 5) | Many of our routine tasks, which consume our daily time, can be replaced. (Physician 5) | ||

Physicians don't have time, and the routine tasks that require lower levels of care could be performed by artificial intelligence as well… (Physician 6)</blockquote> | Physicians don't have time, and the routine tasks that require lower levels of care could be performed by artificial intelligence as well… (Physician 6)</blockquote> | ||


<blockquote>Firstly, medicine would become significantly more humane, as people who truly need medical help would receive it in its full scope. Physicians don't have time, and routine tasks that require lower levels of care could be performed by artificial intelligence, so I think everyone would be satisfied; there would be no queues, everyone would receive services quickly, and, as I mentioned, what is essential, and what we can do less and less, is the time spent with the physicians, which would then truly be dedicated to the patient in a way that we cannot do now. (Physician 6)

For example, one of the most advantageous aspects is that it frees up more time for doctors to spend with patients and reduces the basically pointless standing around time. (Physician 1)

So, suppose you already have an algorithm that, for example, saves you maybe five minutes per patient, and you are not overwhelmed with work. In that case, you can spend those five minutes on the relationship between you and the patient, which is greatly mutually beneficial. (Physician 2)</blockquote>


The physicians reported that AI alone cannot perform the job sufficiently well and that collaborative cooperation will always be essential. According to them, AI will never have the skills that a physician possesses, particularly when it comes to visual observations and recognizing emotions that are inherent to humans. In addition to medical knowledge, experience and intuition often play a crucial role in guiding a physician's thinking.

<blockquote>When you see a patient, when they walk through the door, you know what's wrong with them. Not because you've seen many like them and so on. By their appearance, by their behavior, you know. You ask them two questions, and that's it. You understand. But artificial intelligence would have to go step by step, excluding similar things. So what might be straightforward for us, would require AI to ask 24 questions before arriving at the same conclusion we can get from two questions. (Physician 12)<br /><br />

Sometimes the entire field of medicine is on one side, but your gut feeling tells you something else, and in the end it turns out you are right. (Hospital manager 4)</blockquote>


Excessive digitalization reduces personal contact between people, and that, in turn, reduces communication and connection, and so on. (Patient 4)<br /><br />

Physicians like to have a conversation with patients. Not just give a diagnosis and be done with it. They prefer to talk to patients and explain exactly what they need to do to maintain such a health condition. So, I believe that through digitalization and all those things, they won't be able to do that. (Patient 14)

Well, probably, the relationship with the physician on a personal level will become less frequent. Currently, many patients can connect with a physician and develop a personal approach over time… I think that will be less and less, you know, colder… (Patient 9)</blockquote>


<blockquote>When we receive a diagnosis, it usually contains many Latin terms that we don't understand. (Patient 13)

It's so easy to get lost in all that data nowadays. And you can often come across incorrect information and so on. (Physician 12)

There's a study that actually proved that people who have access to such solutions tend to think they are smart, and they believe they are right, even if the application provides them with completely wrong answers. So, they don't even engage their brains to see that it's utter nonsense. As soon as they see it came from a smartphone or someone said it, they think it must be true. So, that's also a danger – false authority, knowledge and, consequently, wrong decisions. (Hospital manager 11)</blockquote>


==Discussion==

This is the first qualitative study to combine a multi-stakeholder approach and an anticipatory ethics approach to analyze the ethical, legal, and social issues surrounding the implementation of AI-based tools in healthcare by focusing on the micro-level of healthcare decision-making and not only on groups such as healthcare professionals/workers<ref name=":4" /><ref name=":5" /><ref name=":7" /><ref name=":8" /><ref name=":11" /><ref name=":12" />, healthcare leaders<ref name=":10" /> or patients, family members, and healthcare professionals.<ref name=":18">{{Cite journal |last=Amann |first=Julia |last2=Vayena |first2=Effy |last3=Ormond |first3=Kelly E. |last4=Frey |first4=Dietmar |last5=Madai |first5=Vince I. |last6=Blasimme |first6=Alessandro |date=2023-01-11 |editor-last=Canzan |editor-first=Federica |title=Expectations and attitudes towards medical artificial intelligence: A qualitative study in the field of stroke |url=https://dx.plos.org/10.1371/journal.pone.0279088 |journal=PLOS ONE |language=en |volume=18 |issue=1 |pages=e0279088 |doi=10.1371/journal.pone.0279088 |issn=1932-6203 |pmc=PMC9833517 |pmid=36630325}}</ref> Interestingly, the findings have highlighted the impact of AI on: (1) the fundamental aspects of the patient–physician relationship and its underlying core values as well as the need for a synergistic dynamic between the physician and AI; (2) alleviating workload and reducing the administrative burden by saving time and bringing the patient to the center of the caring process; and (3) the potential loss of a holistic approach by neglecting humanness in healthcare.

===The fundamental aspects of the patient–physician relationship and its underlying core values require a synergistic dynamic between physicians and AI===

One of the themes that emerged in the results was the potential transformation of patient–physician relationships, a concern which has already been noted in the literature<ref name=":13" /> and other qualitative studies.<ref name=":6" /><ref name=":7" /><ref name=":12" /> All of the participants emphasized the importance of the patient–physician relationship and the need to preserve its core values, which are based on trust and honesty, achieved through open and sincere communication. In the realm of mental health, several studies<ref>{{Cite journal |last=Fulmer |first=Russell |last2=Joerin |first2=Angela |last3=Gentile |first3=Breanna |last4=Lakerink |first4=Lysanne |last5=Rauws |first5=Michiel |date=2018-12-13 |title=Using Psychological Artificial Intelligence (Tess) to Relieve Symptoms of Depression and Anxiety: Randomized Controlled Trial |url=http://mental.jmir.org/2018/4/e64/ |journal=JMIR Mental Health |language=en |volume=5 |issue=4 |pages=e64 |doi=10.2196/mental.9782 |issn=2368-7959 |pmc=PMC6315222 |pmid=30545815}}</ref><ref>{{Cite journal |last=Szalai |first=Judit |date=2021-06 |title=The potential use of artificial intelligence in the therapy of borderline personality disorder |url=https://onlinelibrary.wiley.com/doi/10.1111/jep.13530 |journal=Journal of Evaluation in Clinical Practice |language=en |volume=27 |issue=3 |pages=491–496 |doi=10.1111/jep.13530 |issn=1356-1294}}</ref><ref>{{Cite book |date=2021-08-27 |editor-last=Trachsel |editor-first=Manuel |editor2-last=Gaab |editor2-first=Jens |editor3-last=Biller-Andorno |editor3-first=Nikola |editor4-last=Tekin |editor4-first=Şerife |editor5-last=Sadler |editor5-first=John Z.
|title=Oxford Handbook of Psychotherapy Ethics |url=https://academic.oup.com/edited-volume/35471 |language=en |edition=1 |publisher=Oxford University Press |doi=10.1093/oxfordhb/9780198817338.001.0001 |isbn=978-0-19-881733-8}}</ref> have endorsed the use of AI in an assistive capacity as playing a positive role in improving openness and communication while avoiding potential complications in interpersonal relationships, since patients in a mental health context may be more willing to disclose personal information to AI than to physicians.

Although the majority of the participants in this study had a positive outlook on the potential for AI to improve the patient–physician relationship, the physicians feared that implementing such technology could reduce physician–patient interactions and erode the established trust in the patient–physician relationship.<ref>{{Cite journal |last=Char |first=Danton S. |last2=Shah |first2=Nigam H. |last3=Magnus |first3=David |date=2018-03-15 |title=Implementing Machine Learning in Health Care — Addressing Ethical Challenges |url=http://www.nejm.org/doi/10.1056/NEJMp1714229 |journal=New England Journal of Medicine |language=en |volume=378 |issue=11 |pages=981–983 |doi=10.1056/NEJMp1714229 |issn=0028-4793 |pmc=PMC5962261 |pmid=29539284}}</ref> In this relationship, as perceived by the respondents, the AI cannot replace the physician's skills, visual observations, and emotional recognition of the patients, nor the experience and intuition that guides the physician's decision-making. Moreover, substituting humans with AI in healthcare would not be possible because healthcare relies on relationships, empathy, and human warmth.<ref name=":19">{{Cite journal |last=Nagy, M.; Sisk, B. |date=2020-05-01 |title=How Will Artificial Intelligence Affect Patient-Clinician Relationships? |url=https://journalofethics.ama-assn.org/article/how-will-artificial-intelligence-affect-patient-clinician-relationships/2020-05 |journal=AMA Journal of Ethics |language=en |volume=22 |issue=5 |pages=E395–400 |doi=10.1001/amajethics.2020.395 |issn=2376-6980}}</ref> Therefore, patients, physicians, and hospital managers view the implementation of AI in healthcare as a necessary synergy between physicians and AI, with AI providing technical support, and physicians providing a human-specific approach in the form of a partnership.<ref>{{Cite journal |last=Verghese |first=Abraham |last2=Shah |first2=Nigam H. |last3=Harrington |first3=Robert A. 
|date=2018-01-02 |title=What This Computer Needs Is a Physician: Humanism and Artificial Intelligence |url=http://jama.jamanetwork.com/article.aspx?doi=10.1001/jama.2017.19198 |journal=JAMA |language=en |volume=319 |issue=1 |pages=19 |doi=10.1001/jama.2017.19198 |issn=0098-7484}}</ref> Thus, the AI can become the physician's right hand<ref name=":18" />, augmenting the physician's existing capabilities.<ref>{{Cite journal |last=Crigger |first=Elliott |last2=Reinbold |first2=Karen |last3=Hanson |first3=Chelsea |last4=Kao |first4=Audiey |last5=Blake |first5=Kathleen |last6=Irons |first6=Mira |date=2022-02 |title=Trustworthy Augmented Intelligence in Health Care |url=https://link.springer.com/10.1007/s10916-021-01790-z |journal=Journal of Medical Systems |language=en |volume=46 |issue=2 |pages=12 |doi=10.1007/s10916-021-01790-z |issn=0148-5598 |pmc=PMC8755670 |pmid=35020064}}</ref> Ideally, this supportive and augmenting role of AI-based tools within the patient–physician relationship would be followed up using models of shared decision-making.<ref name=":9" />

It is important to note that AI-based tools were perceived only as potentially empowering physicians<ref>{{Citation |last=Bohr |first=Adam |last2=Memarzadeh |first2=Kaveh |date=2020 |title=The rise of artificial intelligence in healthcare applications |url=https://linkinghub.elsevier.com/retrieve/pii/B9780128184387000022 |work=Artificial Intelligence in Healthcare |language=en |publisher=Elsevier |pages=25–60 |doi=10.1016/b978-0-12-818438-7.00002-2 |isbn=978-0-12-818438-7 |pmc=PMC7325854 |accessdate=2024-03-08}}</ref> in particular. Hospital managers argued that future development would need to be geared towards assisting physicians rather than providing a tool for patients’ (self-)diagnosis, which might have a negative effect on younger generations in particular, who are more inclined towards the use of new technology. Some parallels can be drawn with the rise of ChatGPT and its potentially risky contribution to the "AI-driven infodemic."<ref name=":3" />

===Alleviating workload and reducing the administrative burden by saving time and bringing the patient to the center of the caring process===

Moreover, the findings show that all stakeholder groups recognized the potential of AI to improve the existing patient–physician relationship, given the current state of medicine and healthcare, which is confronted with excessive workload and administrative burden. In particular, physicians view AI as an opportunity to replace or automate certain tasks<ref name=":8" />, such as the manual input of data, which often reduces the patient consultation to a meeting with the screen/monitor, involving limited physical contact with the patient. Furthermore, the patients anticipated that the implementation of AI might minimize waiting times and administration in healthcare. However, the perception of AI-based tools being a solution to the persisting issues in healthcare reveals that, among our respondents, AI is seen as a panacea. Specific tasks being taken over by AI-based tools will allow more time for physicians to spend with patients or for professional growth.<ref name=":18" /> However, it could be argued that this extra time might also be used to see more patients each day, which in the end might have the opposite effect to our respondents' expectations. Recent reports have implied that, with the implementation of AI, productivity might increase, and greater efficiency of care delivery will allow healthcare systems to provide more and better care to more people.<ref>{{Cite web |last=Spatharou, A.; Hieronimus, S.; Jenkins, J. |date=10 March 2020 |title=Transforming healthcare with AI: The impact on the workforce and organizations |work=McKinsey & Company |url=https://www.mckinsey.com/industries/healthcare/our-insights/transforming-healthcare-with-ai |accessdate=06 November 2023}}</ref> The recent AI economic model for diagnosis and treatment<ref>{{Cite journal |last=Khanna |first=Narendra N. |last2=Maindarkar |first2=Mahesh A.
|last3=Viswanathan |first3=Vijay |last4=Fernandes |first4=Jose Fernandes E |last5=Paul |first5=Sudip |last6=Bhagawati |first6=Mrinalini |last7=Ahluwalia |first7=Puneet |last8=Ruzsa |first8=Zoltan |last9=Sharma |first9=Aditya |last10=Kolluri |first10=Raghu |last11=Singh |first11=Inder M. |date=2022-12-09 |title=Economics of Artificial Intelligence in Healthcare: Diagnosis vs. Treatment |url=https://www.mdpi.com/2227-9032/10/12/2493 |journal=Healthcare |language=en |volume=10 |issue=12 |pages=2493 |doi=10.3390/healthcare10122493 |issn=2227-9032 |pmc=PMC9777836 |pmid=36554017}}</ref> demonstrated that AI-related reductions in time for diagnosis and treatment could potentially lead to a threefold increase in patients per day in the next 10 years.

The respondents did not perceive that the delegation of certain tasks to AI would result in AI replacing physicians, nor the physicians’ role being threatened, because their role is not only to provide a diagnosis but to fully engage with the patients, offering consolation, consultations, and more. Sezgin<ref>{{Cite journal |last=Sezgin |first=Emre |date=2023-01 |title=Artificial intelligence in healthcare: Complementing, not replacing, doctors and healthcare providers |url=http://journals.sagepub.com/doi/10.1177/20552076231186520 |journal=DIGITAL HEALTH |language=en |volume=9 |pages=20552076231186520 |doi=10.1177/20552076231186520 |issn=2055-2076 |pmc=PMC10328041 |pmid=37426593}}</ref> provides concrete instances of the ways in which AI enhances healthcare without taking the place of physicians. For example, AI-assisted [[Clinical decision support system|decision support systems]] work with [[magnetic resonance imaging]] and ultrasound equipment in helping physicians with diagnosis, or enhancing speech recognition in dictation devices that record radiologists’ notes. Nagy and Sisk<ref name=":19" /> argue that although AI-based tools might reduce the burden of administrative tasks, they might also increase the interpersonal demands of patient care. 
Therefore, AI-based tools have the potential to place the patient at the center of the caring process, safeguarding the patients’ autonomy and assisting them in making informed decisions that align with their values.<ref>{{Cite journal |last=Quinn |first=Thomas P |last2=Senadeera |first2=Manisha |last3=Jacobs |first3=Stephan |last4=Coghlan |first4=Simon |last5=Le |first5=Vuong |date=2021-03-18 |title=Trust and medical AI: the challenges we face and the expertise needed to overcome them |url=https://academic.oup.com/jamia/article/28/4/890/6042213 |journal=Journal of the American Medical Informatics Association |language=en |volume=28 |issue=4 |pages=890–894 |doi=10.1093/jamia/ocaa268 |issn=1527-974X |pmc=PMC7973477 |pmid=33340404}}</ref>

===The potential loss of a holistic approach by neglecting humanness in healthcare===

The essential aspects of the patient–physician relationship, such as effective communication and personal interaction, are already under threat due to excessive administrative tasks, physician workload, and technology that hinders direct patient interaction. Indeed, physicians endorse the need for synergy between AI and physicians; they consider the physical contact between physicians and patients as something that must be preserved, and which could be threatened by the implementation of AI. This is due to the perception that interaction between patients and physicians will likely decrease, leading to alienation, and excessive digitalization could further reduce communication and connection within patient–physician relationships. Sparrow and Hatherley<ref>{{Cite journal |last=Sparrow |first=Robert |last2=Hatherley |first2=Joshua |date=2020-01 |title=High Hopes for “Deep Medicine”? AI, Economics, and the Future of Care |url=https://onlinelibrary.wiley.com/doi/10.1002/hast.1079 |journal=Hastings Center Report |language=en |volume=50 |issue=1 |pages=14–17 |doi=10.1002/hast.1079 |issn=0093-0334}}</ref> emphasize the paradox in expectations of AI: on the one hand, it is predicted to improve the physician's efficiency but, on the other, once the economics of healthcare are taken into consideration, it might erode the empathic and compassionate nature of the relationship between patients and physicians, because the physician's increased efficiency could translate into a larger number of patient consultations each day.

In contrast, some studies<ref name=":5" /><ref name=":9" /> have reported that AI-based tools represent an opportunity to enhance the humanistic aspects of the patient–physician relationship by requiring enhanced communication skills in explaining to patients the outputs of AI-based tools that might influence their care. Explaining how a particular decision has been made is the first step in building a trusting relationship between the physician, patient, and AI<ref name=":14" />, which might lead towards a more productive patient–physician relationship by increasing the transparency of decision-making.<ref name=":15" /> The lack of explainability might make it problematic for physicians<ref name=":16" /> to take responsibility for decisions involving AI systems, and the participants in this study emphasized that physicians should retain ultimate responsibility. The ability of a human expert to explain and reverse-engineer AI decision-making processes is still necessary, even in situations in which AI tools can supplement physicians and, in certain circumstances, even significantly influence the decision-making process in the future.<ref name=":17" /> Therefore, keeping humans in the loop with regard to the inclusion of AI in healthcare will contribute to preserving the humane aspects of healthcare. Otherwise, the holistic elements of care might be lost and the dehumanization of the healthcare system<ref name=":18" /> would render AI a severe disadvantage, rather than a significant benefit.

==Strengths and limitations==

Caution should be taken when interpreting the results of the study, as there are some limitations. The sample size of the participants was not calculated using power analysis; instead, the data saturation approach was taken within each specific category of key stakeholders. Therefore, the findings cannot be generalized to the stakeholder groups as a whole. As participants were recruited using purposive sampling and the snowballing method, a degree of sample bias is acknowledged because some of the individuals who were keen on and familiar with AI technology expressed their willingness to participate in the study; these people might have had higher medical and digital literacy levels compared to the average members of the included stakeholder groups in Croatia. Another limitation is the timeframe of the research, because it was conducted during the [[COVID-19]] [[pandemic]], when meetings between patients and physicians were reduced to a minimum. As a result, participants may have been more aware of the impact of AI on the patient–physician relationship.

==Conclusions==

This multi-stakeholder qualitative study, focusing on the micro-level of healthcare decision-making, sheds new light on the impact of AI on healthcare and the future of patient–physician relationships. Following the recent introduction of ChatGPT and its impact on public awareness of the potential benefits of AI in healthcare, it would be interesting for future research endeavors to conduct a follow-up study to determine how the participants’ aspirations and expectations of AI in healthcare have changed. The results of the current study highlight the need to use a critical awareness approach to the implementation of AI in healthcare by applying critical thinking and reasoning, rather than simply relying upon the recommendation of the algorithm. It is important not to neglect clinical reasoning and consideration of best clinical practices, while avoiding a negative impact on the existing patient–physician relationship by preserving its core values, such as trust and honesty situated in open and sincere communication. The implementation of AI should not be allowed to cause the dehumanization and deterioration of healthcare but should help to bring the patient to the center of the healthcare focus. Therefore, preservation of the trust-based relationship between patient and physician is urged, by emphasizing a human-centric model involving both the patient and the public even at the design stage of AI-based tool development.

==Acknowledgements==

We would like to thank our former research group member Ana Tomičić, PhD, who also participated in conducting and transcribing the interviews, as well as our former student research group members Josipa Blašković and Ana Posarić, for their assistance with the transcription of interviews. Furthermore, we want to express our immense gratitude to two anonymous reviewers who provided us with detailed, valuable, and constructive feedback for improving the manuscript. We also wish to acknowledge that the credit for the proposed future research avenue of a follow-up study following the introduction of ChatGPT goes to one of our two reviewers.

===Funding===

The authors disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by the Hrvatska Zaklada za Znanost HrZZ (Croatian Science Foundation) (grant number UIP-2019-04-3212).

===Ethical approval===

The ethics committee of the Catholic University of Croatia approved this study (number: 498-03-01-04/2-19-02).

===Conflict of interest===

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

==Supplementary material==

*[https://journals.sagepub.com/doi/suppl/10.1177/20552076231220833/suppl_file/sj-docx-1-dhj-10.1177_20552076231220833.docx Supplementary material 1] (.docx)

==Footnotes==

Latest revision as of 00:18, 8 March 2024

| Full article title | Critical analysis of the impact of AI on the patient–physician relationship: A multi-stakeholder qualitative study |
|---|---|
| Journal | Digital Health |
| Author(s) | Čartolovni, Anto; Malešević, Anamaria; Poslon, Luka |
| Author affiliation(s) | Catholic University of Croatia |
| Primary contact | Email: anto dot cartolovni at unicath dot hr |
| Year published | 2023 |
| Volume and issue | 9 |
| Article # | 231220833 |
| DOI | 10.1177/20552076231220833 |
| ISSN | 2055-2076 |
| Distribution license | Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International |
| Website | https://journals.sagepub.com/doi/10.1177/20552076231220833 |
| Download | https://journals.sagepub.com/doi/reader/10.1177/20552076231220833 (PDF) |

Abstract

Objective: This qualitative study aims to present the aspirations, expectations, and critical analysis of the potential for artificial intelligence (AI) to transform the patient–physician relationship, according to multi-stakeholder insight.

Methods: This study was conducted from June to December 2021, using an anticipatory ethics approach and sociology of expectations as the theoretical frameworks. It focused mainly on three groups of stakeholders, namely physicians (n = 12), patients (n = 15), and healthcare managers (n = 11), all of whom are directly related to the adoption of AI in medicine (n = 38).

Results: Of the 38 interviewees, 40% were patients (15/38), 31% were physicians (12/38), and 29% were health managers (11/38). The findings highlight the following: (1) the impact of AI on fundamental aspects of the patient–physician relationship and the underlying importance of a synergistic relationship between the physician and AI; (2) the potential for AI to alleviate workload and reduce administrative burden by saving time and putting the patient at the center of the caring process; and (3) the potential risk to the holistic approach by neglecting humanness in healthcare.

Conclusions: This multi-stakeholder qualitative study, which focused on the micro-level of healthcare decision-making, sheds new light on the impact of AI on healthcare and the potential transformation of the patient–physician relationship. The results of the current study highlight the need to adopt a critical awareness approach to the implementation of AI in healthcare by applying critical thinking and reasoning. It is important not to rely solely upon the recommendations of AI while neglecting clinical reasoning and physicians’ knowledge of best clinical practices. Instead, it is vital that the core values of the existing patient–physician relationship—such as trust and honesty, conveyed through open and sincere communication—are preserved.

Keywords: artificial intelligence, patient-physician relationship, ethics, bioethics, qualitative research, multi-stakeholder approach

Introduction

Recent developments in large language models (LLMs) have attracted public attention in regard to artificial intelligence (AI) development, raising many hopes among the wider public as well as healthcare professionals. After ChatGPT was launched in November 2022, producing human-like responses, it reached 100 million users in the following two months.[1] Many suggestions for its potential applications in healthcare have appeared on social media. These have ranged from using AI to write outpatient clinic letters to insurance companies, thereby saving time for the practicing physician, to offering advice to physicians on how to diagnose a patient.[2] An AI-enabled chatbot-based symptom checker can be used as a self-triaging and patient monitoring tool, or AI can be used for translating and explaining medical notes or making diagnoses in a patient-friendly way.[3] Therefore, the introduction of ChatGPT represented a potential benefit not only for healthcare professionals but also for patients themselves, particularly with the improved version of GPT-4. In addition to ChatGPT, various other LLMs are at different stages of development, for example, BioGPT (Massachusetts Institute of Technology, Boston, MA, USA), LaMDA (Google, Mountain View, CA, USA), Sparrow (Deepmind AI, London, UK), Pangu Alpha (Huawei, Shenzhen, China), OPT-IML (Meta, Menlo Park, CA, USA), and Megatron-Turing NLG (Nvidia, Santa Clara, CA, USA).[4]

However, despite the wealth of potential applications for LLMs, including cost-saving and time-saving benefits that can be used to increase productivity, there has been widespread acknowledgement that they must be used wisely.[3] Therefore, the critical awareness approach mostly relates to underlying ethical issues such as transparency, accountability, and fairness.[5] Critical thinking is essential for physicians to avoid blindly relying only on the recommendations of AI algorithms, without applying clinical reasoning or reviewing current best practices, which could lead to compromising the ethical principles of beneficence and non-maleficence.[6] Moreover, when LLMs are used in the healthcare context, sensitive health information fed into the algorithmic black box might be met with a lack of transparency in terms of the ways in which the commercial companies will use or store such information. In other words, such information might be made available to company employees or potential hackers.[4] In addition, from a public health perspective, using ChatGPT could potentially lead to an "AI-driven infodemic," producing a vast amount of scientific articles, fake news, and misinformation.[5] Therefore, all of these challenges[7] necessitate further regulation of LLMs in healthcare in order to minimize the potential harms and foster trust in AI among patients and healthcare providers.[1]

Interestingly, healthcare professionals have demonstrated openness and readiness to adopt generative AI, mostly because they are excessively burdened by administrative tasks[8] and are desperately seeking a practical solution. Several groups of medical specialists have been identified as benefiting from the use of medical AI, including general practitioners[9], nephrologists[10], nuclear medicine practitioners[11], and pathologists[12], with the technology reportedly having a direct impact on physicians’ roles, responsibilities, and competencies.[12][13][14] Although the above-mentioned potential has been recognized, various studies have noted that the implementation of medical AI would bring about certain challenges[15] and barriers[16], such as physicians’ trust in the AI, user-friendliness[17], or tensions between the human-centric model and technology-centric model, that is, upskilling and deskilling[18], which will further impact the (non-)acceptance of AI-based tools.[17]

Aims

This study seeks to present the aspirations, fears, expectations, and critical analysis of the ability of AI to transform healthcare. Therefore, this qualitative study aims to provide multi-stakeholder insights, with a particular focus on the perspectives of patients, healthcare professionals, and managers regarding the current state of healthcare, the ways in which AI should be implemented, the expectations of AI, the synergistic effect between physicians and AI, and its impact on the patient–physician relationship. These results will provide some clarification regarding questions that have been raised about openness towards embracing AI, and critical awareness of AI's potential limitations in clinical practice.

Methods

This study was conducted from June to December 2021 as a multi-stakeholder (n = 75) qualitative study. It employed an anticipatory ethics approach, an innovative form of ethical reasoning that is applied to the analysis of potential mid-term to long-term implications and outcomes of technological innovation[19], and the sociology of expectations, which focuses on the role of expectations in shaping scientific and technological change.[20][21] These are the theoretical frameworks underpinning the design of the qualitative study, in which the questions were followed by two scenarios set in 2030 and 2023 to stimulate discussions. Although both referred to the digital health context, the first scenario focused on the use of an AI-based virtual assistant, while the second focused on self-monitoring devices. This article focuses only on the first scenario (see Appendix I), as it was embedded in the clinical setting and depicts future care provision and the transformation of healthcare. The study follows the consolidated criteria for reporting qualitative research (COREQ) guidelines[22] (see Appendix II). Furthermore, it was approved by the Catholic University of Croatia Ethics Committee (no. 498-03-01-04/2-19-02).

Participants and recruitment

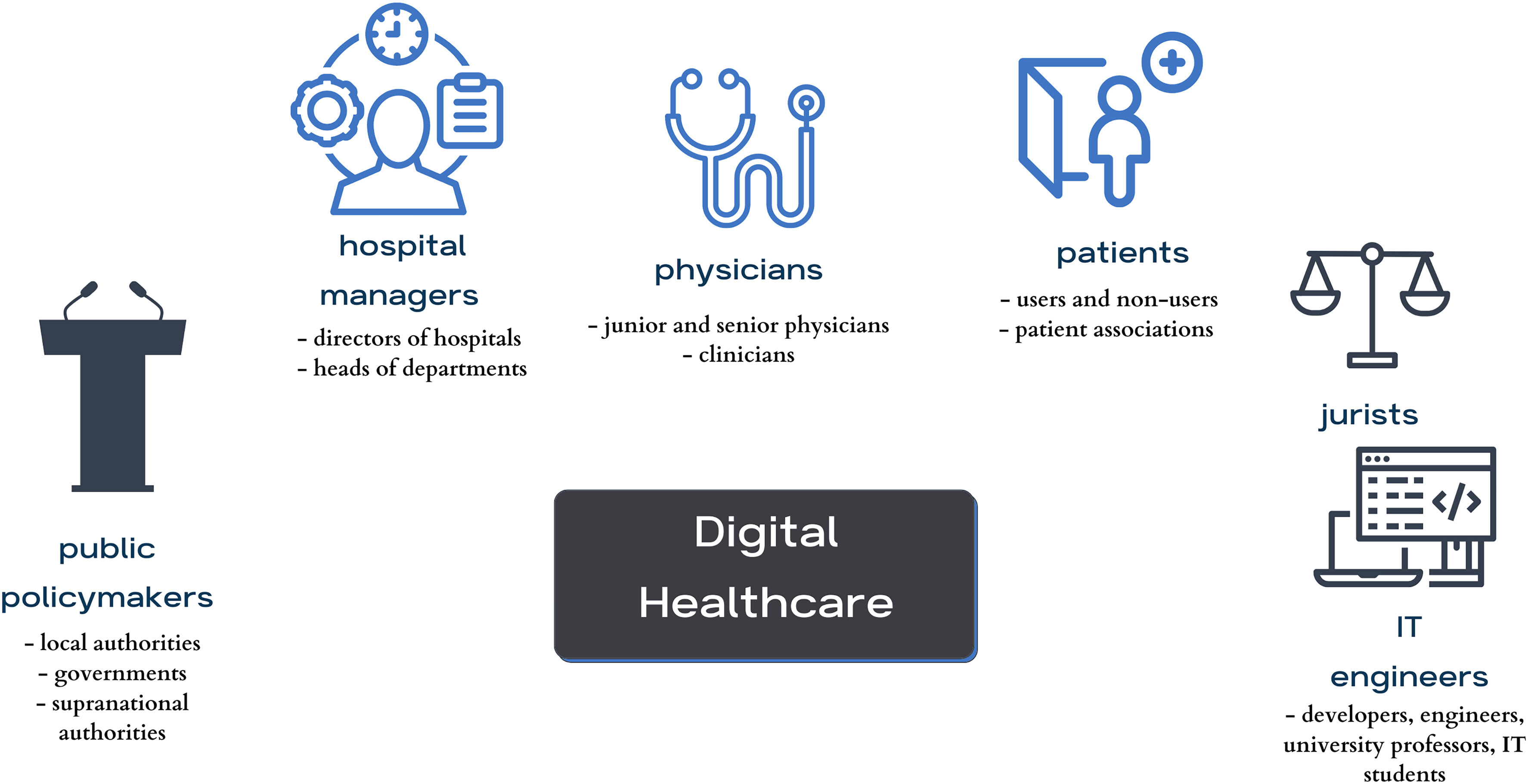

A purposeful random sampling method was employed. Participants were included if they belonged to specific key stakeholder groups (physicians, patients, or hospital managers), while people who were under 18 years of age or who did not fall into any of the specified key stakeholder groups were excluded. The participants were recruited using the snowballing technique until data saturation was reached, respecting and ensuring data heterogeneity and aiming for maximum variation in variables such as stakeholder category, age, gender, and location. Participants (n = 75) were identified as stakeholders in the healthcare context: patients, physicians, IT engineers, jurists, hospital managers, and policymakers (Figure 1). Initially, an email was sent to invite participation in the research. Some of the invitees did not respond to the email, though no one provided reasons for declining. Of those who agreed to participate, some opted to postpone the interviews due to other commitments, and some interviews were ultimately not conducted.


Given the recent introduction of ChatGPT and the aim outlined above, it was decided to focus mainly on the three groups that were directly affected by the adoption of AI in medicine (n = 38): physicians (n = 12), patients (n = 15), and healthcare managers (n = 11). All participation was voluntary and, prior to the interview, participants received all of the information they needed to provide informed consent.

Data collection and analysis

Semi-structured interviews were conducted both in-person (at locations convenient for the participants or at the research group's work office) and online, using the Zoom platform, by researchers experienced in qualitative research. Only the participant and the researcher attended the interviews. The initial interview guide was based on the authors’ previous desk research on recognized ethical, legal, and social issues in the development and deployment of AI in medicine.[23] It was inspired by similar studies[24][25][26] and was pilot-tested on a group of 23 stakeholders. Later, the interview guide was adapted as the study continued to take account of emerging themes until data saturation was reached. All interviews were recorded using a portable audio recorder and later transcribed; the average length of interviews was 47 minutes. Transcripts were not provided to participants for comments or corrections. The transcribed interviews were entered into the NVivo qualitative data analysis software. Researchers familiarized themselves with the material by reading the transcripts and taking notes to gain deeper insights into the data. Next, a thematic analysis was conducted.[27] Following that, an open coding process was initiated for the interviews (n = 11). Based on the initial codes, the researchers agreed on thematic categories[28], leading to the development of the final codebook, which was then used to code the remaining interviews. Finally, the researchers combined and discussed themes for comparison and reached a consensus on how to define and use them. All interviews were analyzed in the original language (Croatian), and the quotes presented in this article have been translated into English.

Results

Participant demographics

This study focuses on 38 interviews conducted with patients (40% of the sample; 15/38), physicians (31% of the sample; 12/38), and health managers (29% of the sample; 11/38). In terms of gender, 53% of the participants were female (20/38), while 47% (18/38) were male. The participants’ ages ranged from the 18 to 24 age group to the 65 and older category. Regarding the geographical distribution, most respondents (74%; 28/38) hailed from urban centers, nine (23%; 9/38) were from the urban periphery, and one resided in a rural periphery (Table 1). A minority of patients (33%; 5/15) regularly used technology for health monitoring, such as applications and smart devices (e.g., smartwatches), in their daily routines.
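
The shares above can be rechecked from the reported counts. The following is a minimal Python sketch that assumes only the counts stated in the text; the names `counts`, `share`, and `shares` are illustrative and do not come from the study.

```python
# Counts as reported in the text; all names here are illustrative.
TOTAL = 38

counts = {
    "patients": 15,
    "physicians": 12,
    "health managers": 11,
    "female": 20,
    "male": 18,
    "urban center": 28,
    "urban periphery": 9,
    "rural periphery": 1,
}


def share(count, total=TOTAL):
    """Return count/total as a percentage rounded to one decimal place."""
    return round(100 * count / total, 1)


# Percentage share of the 38 interviewees for each reported group.
shares = {group: share(n) for group, n in counts.items()}

for group, pct in shares.items():
    print(f"{group}: {counts[group]}/{TOTAL} = {pct}%")
```

At one decimal place, the computed shares sit within about one percentage point of the whole-number figures given in the text; any such difference comes down to rounding. The same helper covers the patient subgroup, for example `share(5, 15)` for the patients who regularly used health-monitoring technology.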


Thematic analysis

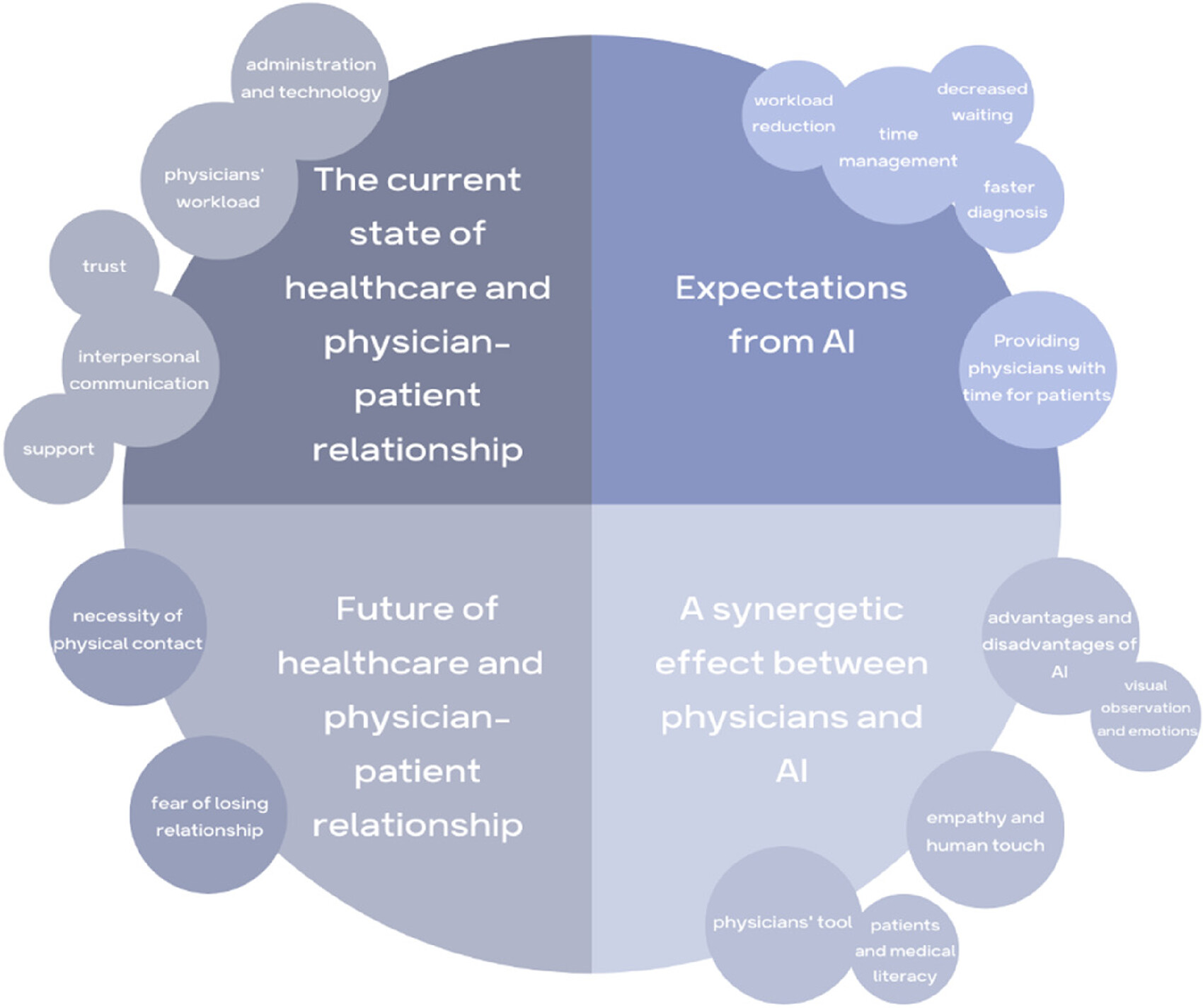

Four themes, each with subthemes (Figure 2), were identified: (1) the current state of healthcare and the patient–physician relationship; (2) expectations of AI; (3) a synergistic effect between physicians and AI; and (4) the future of healthcare and the patient–physician relationship.


The current state of healthcare and the patient–physician relationship

Research participants reflected on the current state of healthcare, discussing both positive and negative aspects. Their responses encompassed a wide range of topics related to healthcare, including health policies, infrastructure, physicians’ qualifications, patients’ medical literacy, weak hospital management, and financial losses. While each of these themes is intriguing for analysis, this section will focus on the current state of the patient–physician relationship, and specifically those aspects highlighted by the participants as being the essential, positive, or negative aspects of this relationship.

Many participants emphasized trust, honesty, and effective interpersonal communication as fundamental aspects of the patient–physician relationship. Patients stressed the importance of being heard by their physicians and actively engaging in a shared decision-making process.

I think the most important thing for me is that we communicate about everything. To talk. To trust what he tells me. (Patient 5)

It's important that he[a] listens to me and doesn't jump to conclusions before I fully explain the issue. (Patient 9)

It is crucial that he listen to me and that I listen to him. That's really the most important thing because if he does not listen to me, if it only gets heard in one ear, coming here is a waste of time. (Patient 12)

Physicians also emphasized the importance of patients having trust in them, identifying openness and honesty as directly enabling the identification of the fastest and optimal treatment approach. Furthermore, they highlighted the significance of patients’ ability to articulate their condition effectively, enabling them to convey their symptoms clearly to the physician.

When they have trust in me, it becomes much easier for me to treat them. (Physician 3)

I have always strived, both in direct and indirect interactions at work, to listen to the patient, guide them, and provide advice in good faith, with the simple aim of resolving any issues. (Physician 4)

The most crucial aspect is establishing easy communication and trust in what we do. (Physician 12)

Actually, it's always about honesty. Knowing that, when patients speak, they tell the exact truth and don't deceive. That's an essential factor for me. Another important factor is the patient's capacity to communicate clearly. To correctly interpret their symptoms and condition of health. To convey a comprehensive picture to me. (Physician 11)

Some physicians emphasized that patients often come to them specifically to have a conversation. In other words, patients sometimes approach physicians not only for health issues but also for support and simply to be heard.

Many people come to the physician just to vent. They come to vent, and then we find some psychological component in a good percentage of them. I believe it's important for them to sit down with the physician, have a chat. (Physician 11)

Some patients reported that they have developed a friendly relationship with their physician due to long-term treatment.

The friendship that is built over the years is essential because I have been with this physician for twenty years, and I simply feel like… When I come to her, it's like talking to a friend – we chat a little, laugh, and then I start talking about my [health] problems. (Patient 11)

A friendly patient–physician relationship must be developed. (Patient 5)

Patients also expressed dissatisfaction that they sometimes do not receive enough care and attention from physicians. However, they were aware that the reason for this is often the physicians’ overwhelming workload and the limited time they have available.

It feels selfish to ask because I know they have a lot of work to do, but I don't like being just a number and a piece of paper in a drawer. (Patient 10)

You feel like they want to get rid of you. To get it done as quickly as possible, and that's it. (Patient 1)

Some participants pointed out that the paperwork is a problem. According to them, physicians are more focused on paperwork than on the individuals sitting in front of them.

I'd prefer if the physician didn't just look at the papers but lifted their head, talked to me, gave me a look, and conducted an examination if needed, which is equally important, because lately, it often seems to be reduced to just paperwork. (Patient 15)

Physicians also expressed dissatisfaction with the fact that a part of their consultation time with patients is spent on tasks that could be done differently or replaced by other methods.

Suppose I spend time measuring their blood pressure at every consultation, explaining to them that I'm referring them for certain tests, and prescribing medications every time. In that case, I have less time for purposeful [laughs] procedures that could really impact treatment outcomes, right? (Physician 9)

One aspect that physicians highlighted as being time-consuming was the administrative tasks undertaken during patient consultations. Similarly, manual data collection and entering data into the system often mean that the physician spends the consultation looking at a screen, with limited actual contact with the patient in front of them.