Difference between revisions of "Journal:Establishing reliable research data management by integrating measurement devices utilizing intelligent digital twins"

Shawndouglas (talk | contribs) (Saving and adding more.) |

Shawndouglas (talk | contribs) (Saving and adding more.) |

||

| Line 46: | Line 46: | ||

==Related work== | ==Related work== | ||

In order to better situate the present work in the state of the art, the following subsections first show the foundations of RDM, then the developments in the field of DTs. For both focal points, requirements for the development of the later introduced architecture are elaborated, which provides a basis for discussion at the end. | |||

===Research data management=== | |||

The motivating force for reliable RDM should not be the product per se, but rather the necessity to build a body of knowledge enabling the subsequent integration and reuse of data and knowledge by the research community through a reliable RDM process. [3] Therefore, the primary objective of RDM is to capture data in order to pave the way for new scientific knowledge in the long term. | |||

To bridge the gap from simple [[information]] to actual knowledge generation in order to bring greater value to researchers, data are the fundamental resource that enables the integration of the physical world with the virtual world, and finally, the interaction with each other. [13] The DT as an innovative concept of Industry 4.0 enables the convergence of the physical world with the virtual world through its definition-given bilateral data exchange. Data from physical reality are seamlessly transferred into virtual reality, allowing developed applications and services to influence the behavior and impact on the physical reality. Data are the underlying structure that enables the DT; as such, having good data management practices in place provides the realization of the concept. [1] | |||

Specifically, in the context of the ongoing advances in innovative technologies, data have evolved from being merely static in nature to being a continuous stream of information. [14] In practice, the data generated in research activities are commonly stored in a decentralized manner on the computers of individual researchers or on local data mediums. [5] A recent study showed that only 12 percent of research data is stored in reliable repositories accessible by others. The far greater part, the so-called “shadow data,” remains in the hands of the researchers, resulting in the loss of non-reproducible data sets, devoid of the possibility of extracting further knowledge from this data. [4] In addition, the [[Backup|backed-up]] data may become inconsistent and lose significance without the entire measurement series being available. According to Schadt ''et al.''[15], the most efficient method currently available for transmitting large amounts of data to collaborative partners entails copying the data to a sufficiently large storage drive, which is then sent to the intended recipient. This observation can also be confirmed within CeMOS, where this practice of data transfer prevails. Not only is this method inefficient and a barrier to data sharing, but it can also become a security issue when dealing with sensitive data. With such an abundance of data flows, large amounts of data need to be processed and reliably stored, causing RDM to gain momentum within the researcher’s community. [14] | |||

Based on the increasing awareness and the initiated ambition towards a reformation of publishing and communication systems in research, the international coalition of Wilkinson ''et al.'' [3] proposed the FAIR Data Principles in 2016. These principles are intended to serve as a guide for those seeking to improve the reusability of their data assets, according to which data are expected to be findable, accessible, interoperable, and reusable (FAIR) throughout the data lifecycle. The FAIR principles are briefly outlined below within the context of the technical requirements, as modeled by Wilkinson ''et al.''[3]: | |||

* '''Findable''': Data are described with extensive [[metadata]], which are given a globally unique and persistent identifier and are stored in a searchable resource. | |||

* '''Accessible''': Metadata are retrievable by their individual indicators through a standardized protocol, which is publicly free and universally implementable, as well as enabling an authentication procedure. The metadata must remain accessible even if the data are no longer available. | |||

* '''Interoperable''': (Meta)-data utilize a formal, broadly applicable language and follow FAIR principles; moreover, references exist between (meta)-data. | |||

* '''Reusable''': (Meta)-data are characterized by relevant attributes and released on the basis of clear data usage licenses. The origin of the (meta)-data is clearly referenced. In addition, (meta)-data comply with domain-relevant community standards. | |||

While the FAIR principles define the core foundation for a reliable RDM, there is also a need to ensure that the necessary scientific infrastructure is in place to support RDM. [16] In addition to the FAIR criteria, the concept of a data management plan (DMP) has a significant impact on the success of any RDM effort. The DMP is a comprehensive document that details the management of a research project’s data throughout its entire lifecycle. [17] A standard DMP in fact does not exist, as it must be individually tailored to the requirements of the respective research project. This requires an extensive understanding of the individual research project and an awareness of the complexity and project-specific research data. The actual implementation of a DMP often creates additional work for researchers, such as data preparation or documentation. [16] With the aim of providing researchers with a useful instrument, a number of web-based collaborative tools for creating DMPs has since emerged, such as DMPTool, DMPonline, and Research Data Management Organizer (RDMO). [18] | |||

In addition to the benefits already mentioned, the use of a research data infrastructure facilitates the visibility of scientists’ research as well as identifying new collaboration partners in industry, research, or funding bodies. [5,16] In the meantime, funding bodies in particular have recognized the necessity of effective RDM, making it a prerequisite for the submission of research proposals. [16,17] | |||

===Digital twins=== | |||

The first pioneering principles for twinning systems can be dated back to training and simulation facilities of the National Aeronautics and Space Administration (NASA). In 1970, these facilities gained particular prominence during the thirteenth mission of the Apollo lunar landing program. Using a full-scale simulation environment of the command and lunar landing capsule, NASA engineers on Earth mirrored the condition of the seriously damaged spacecraft and tested all necessary operations for a successful return of the astronauts. All the possibilities could thus be simulated and validated before executing the real protocol to avoid the potential fatal outcome of a mishandling. [19,20] The actual paradigm of a virtual representation of physical entities was initiated later in 2002. After the first introduction, Michael Grieves further developed his product life cycle (PLC) model, which was later given the term "digital twin" by NASA engineer John Vickers. The mirrored systems approach was popularized in 2010 when it was incorporated into NASA’s technical road map. [21,22,23] | |||

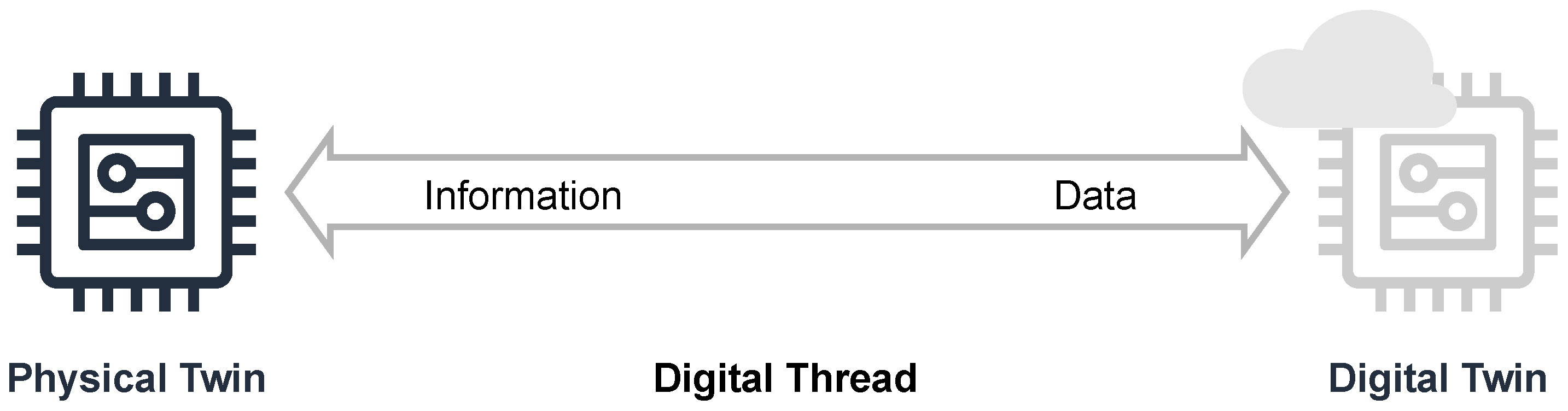

The fundamental concept can be divided into the duality of the physical and virtual world. According to Figure 1, the physical world or space contains tangible components, i.e., machines, apparatuses, production assets, measurement devices, or even physical processes, the so-called PTs. In this context, the illustration shows a stylized device of arbitrary complexity on the left-hand side. On the right side, its virtual counterpart is shown in the virtual world or space. The coexistence of both is ensured by the bilateral stream of data and information, which is introduced as a digital thread. All raw data accumulated from the physical world are sent by the PT to its DT, which aggregates them and provides accessibility. Vice versa, by processing these data, the DT provides the PT with refined analytical information. Each PT is allocated to precisely one DT. One of the goals is to transfer work activities from the physical world to the virtual world so that efficiency and resources are preserved. [23] Systems with a multitude of devices especially require flexible approaches for orchestration. Processes and devices must be able to be varied, rescheduled, and reconfigured. [24] Twin technologies as enablers for this, providing the greatest possible degree of freedom. | |||

[[File:Fig1 Lehmann Sensors23 23-1.png|700px]] | |||

{{clear}} | |||

{| | |||

| style="vertical-align:top;" | | |||

{| border="0" cellpadding="5" cellspacing="0" width="700px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 1.''' Concept of digital twins according to Grieves. [23]</blockquote> | |||

|- | |||

|} | |||

|} | |||

==References== | ==References== | ||

Revision as of 16:56, 12 September 2023

| Full article title | Establishing reliable research data management by integrating measurement devices utilizing intelligent digital twins |
|---|---|
| Journal | Sensors |
| Author(s) | Lehmann, Joel; Schorz, Stefan; Rache, Alessa; Häußermann, Tim; Rädle, Matthias; Reichwald, Julian |
| Author affiliation(s) | Mannheim University of Applied Sciences |
| Primary contact | Email: j dot lehmann at hs dash mannheim dot de |
| Year published | 2023 |
| Volume and issue | 23(1) |
| Article # | 468 |
| DOI | 10.3390/s23010468 |
| ISSN | 1424-8220 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://www.mdpi.com/1424-8220/23/1/468 |
| Download | https://www.mdpi.com/1424-8220/23/1/468/pdf (PDF) |

This article should be considered a work in progress and incomplete. Consider this article incomplete until this notice is removed.

Abstract

One of the main topics within research activities is the management of research data. Large amounts of data acquired by heterogeneous scientific devices, sensor systems, measuring equipment, and experimental setups have to be processed and ideally managed by FAIR (findable, accessible, interoperable, and reusable) data management approaches in order to preserve their intrinsic value to researchers throughout the entire data lifecycle. The symbiosis of heterogeneous measuring devices, FAIR principles, and digital twin technologies is considered to be ideally suited to realize the foundation of reliable, sustainable, and open research data management. This paper contributes a novel architectural approach for gathering and managing research data aligned with the FAIR principles. A reference implementation as well as a subsequent proof of concept is given, leveraging the utilization of digital twins to overcome common data management issues at equipment-intense research institutes. To facilitate implementation, a top-level knowledge graph has been developed to convey metadata from research devices along with the produced data. In addition, a reactive digital twin implementation of a specific measurement device was devised to facilitate reconfigurability and minimized design effort.

Keywords: cyber–physical system, sensor data, research data management, FAIR, digital twin, research 4.0, knowledge graph, ontology

Introduction

Initiated through the ongoing efforts of digitization, one of the new fields of activity within research concerns the management of research data. New technologies and the related increase in computing power can now generate large amounts of data, providing new paths to scientific knowledge. [1] Research is increasingly adopting toolsets and techniques raised by Industry 4.0 while gearing itself up for Research 4.0. [2] The requirement for reliable research data management (RDM) can be met by FAIR data management principles, which indicate that data must be findable, accessible, interoperable, and reusable through the entire data lifecycle in order to provide value to researchers. [3] In practice, however, implementation often fails due to the high heterogeneity of hardware and software, as well as outdated or decentralized data backup mechanisms. [4,5] This experience can be confirmed by the work at the Center for Mass Spectrometry and Optical Spectroscopy (CeMOS), a research institute at the Mannheim University of Applied Sciences which employs approximately 80 interdisciplinary scientific staff. In the various fields within the institute’s research landscape, including medical technology, biotechnology, artificial intelligence (AI), and digital transformation, a wide variety of hardware and software is required to collect and process the data that are generated, which poses a significant challenge in initial efforts to achieve holistic data integration.

To cater to the respective disciplines, researchers of the institute develop experimental equipment such as middle infrared (MIR) scanners for the rapid detection and imaging of biochemical substances in medical tissue sections, multimodal imaging systems generating hyperspectral images of tissue slices, or photometrical measurement devices for detection of particle concentration. Nevertheless, they also use non-customizable equipment such as mass spectrometers, microscopes, and cell imagers for their experiments. These appliances provide great benefits for further development within the respective research disciplines, which is why the data are of immense value and must be brought together accordingly in a reliable RDM system.

Research practice shows that the step into the digital world seems to be associated with obstacles. As an innovative technology, the digital twin (DT) can be seen as a secure data source, as it mirrors a physical device (also called a physical twin or PT) into the digital world through a bilateral communication stream. [6] DTs are key actors for the implementation of Industry 4.0 prospects. [7] Consequently, additional reconfigurability of hardware and software of the digitally imaged devices becomes a reality. The data mapped by the DT thus enable the bridge to the digital world and hence to the digital use and management of the data. [1] Depending on the domain and use case, industry and research are creating new types of standardization-independent DTs. In most cases, only a certain part of the twin’s life cycle is reflected. Only when utilized over the entire life cycle of the physical entity does the DT become a powerful tool of digitization. [8,9] With the development of semantic modeling, hardware, and communication technology, there are more degrees of freedom to leverage the semantic representation of DTs, improving their usability. [10] For the internal interconnection in particular, the referencing of knowledge correlations distinguishes intelligent DTs. [11] The analysis of relevant literature reveals a research gap in the combination of both approaches (RDM and DTs), which the authors intend to address with this work.

In this paper, a centralized solution-based approach for data processing and storage is chosen, which is in contrast to the decentralized practice in RDM. Common problems of data management include research data that are distributed locally and decentrally, missing access authorizations, and missing experimental references, which is why the results become unusable over long periods of time. The resulting replication of data is followed by inconsistencies and interoperability issues. [12] Furthermore, these circumstances were also determined by empirical surveys at the authors’ institute. Therefore, a holistic infrastructure for data management is introduced, starting with the collection of the measurement series of the physical devices, up to the final reliable reusability of the data. Relevant requirements for a sustainable RDM leveraged by intelligent DTs are elaborated based on the related work. Enhancing RDM with DT paradigms can further extend its efficiency. This forms the basis for an architectural concept for reliable data integration into the infrastructure with the DTs of the fully mapped physical devices.

Due to the broad spectrum and interdisciplinarity of the institution, myriad data of different origins, forms, and quantities are created. The generic concept of DT allows evaluation units to be created agnostically from their specific use cases. Not only do the physical measuring devices and apparatuses benefit in the form of flexible reconfiguration through the possibilities of providing their virtual representation with intelligent functions, but also directly through the great variety of harmonized data structures and interfaces made possible by DTs. The bidirectional communication stream between the twins enables the physical devices to be directly influenced. Accordingly, parameterization of the physical device takes place dynamically using the DT, instead of statically using firmware as is usually the case. In addition, due to the real-time data transmission and the seamless integration of the DT, an immediate and reliable response to outliers is possible. Both data management and DTs as disruptive technology are mutual enablers in terms of their realization. [1] Therefore, the designed infrastructure is based on the interacting functionality of both technologies to leverage their synergies providing sustainable and reliable data management. In order to substantiate the feasibility and practicability, a demo implementation of a measuring device within the realized infrastructure is carried out using a photometrical measuring device developed at the institute. This also forms the basis for the proof of concept and the evaluation of the overall system.
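The idea of parameterizing a device dynamically through its DT, and of reacting immediately to outliers, can be illustrated with a minimal Python sketch. All names here (`DeviceConfig`, `Device`, `Twin`, the `upper_limit` threshold, and the "halve the sampling interval" reaction) are hypothetical and chosen only for illustration; they are not taken from the authors' implementation.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class DeviceConfig:
    """Hypothetical runtime parameters that would otherwise live in firmware."""
    sampling_interval_s: float = 1.0
    upper_limit: float = 100.0


class Device:
    """Stand-in for a physical measuring device (assumption, not a real API)."""

    def __init__(self) -> None:
        self.config = DeviceConfig()

    def apply(self, config: DeviceConfig) -> None:
        # In a real setup this would be a message sent to the hardware.
        self.config = config


class Twin:
    """Digital counterpart that reconfigures the device and watches for outliers."""

    def __init__(self, device: Device) -> None:
        self.device = device
        self.outliers: list[float] = []

    def reconfigure(self, **changes: float) -> None:
        # Build a new configuration and push it to the device at runtime.
        self.device.apply(replace(self.device.config, **changes))

    def on_measurement(self, value: float) -> bool:
        """Return True if the value was treated as an outlier."""
        if value > self.device.config.upper_limit:
            self.outliers.append(value)
            # Illustrative reaction: sample twice as often after an outlier.
            faster = self.device.config.sampling_interval_s / 2
            self.reconfigure(sampling_interval_s=faster)
            return True
        return False
```

In this sketch the device never changes its own parameters; every change flows through the twin, which mirrors the description of the DT as the place where configuration and reaction logic reside.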

As main contributions, the paper (1) presents a new type of approach for dealing with large amounts of research data according to FAIR principles; (2) identifies the need for the use of DTs to break down barriers for the digital transformation in research institutes in order to arm them for Research 4.0; (3) elaborates a high-level knowledge graph that addresses the pending issues of interoperability and meta-representation of experimental data and associated devices; (4) devises an implementation variant for reactive DTs as a basis for later proactive realizations going beyond DTs as pure, passive state representations; and (5) works out a design approach that is highly reconfigurable, using the example of a photometer, which opens up completely new possibilities with less development effort in hardware and software engineering by using the DT rather than the physical device itself.

This paper is organized as follows. The next section points out the state of the art and the related work in terms of RDM and DTs. Both subsections derive architectural requirements, which serve to evolve an architecture for sustainable and reliable RDM. Next, specifically picked use cases of the authors’ institute are outlined, followed by their implementation and subsequent proof of concept and evaluation. Finally, after a discussion that relates the predefined requirements with each other and the implemented infrastructure, the work will be concluded and future challenges will be prospected.

Related work

In order to better situate the present work in the state of the art, the following subsections first show the foundations of RDM, then the developments in the field of DTs. For both focal points, requirements for the development of the later introduced architecture are elaborated, which provides a basis for discussion at the end.

Research data management

The motivating force for reliable RDM should not be the product per se, but rather the necessity to build a body of knowledge enabling the subsequent integration and reuse of data and knowledge by the research community through a reliable RDM process. [3] Therefore, the primary objective of RDM is to capture data in order to pave the way for new scientific knowledge in the long term.

To bridge the gap from simple information to actual knowledge generation in order to bring greater value to researchers, data are the fundamental resource that enables the integration of the physical world with the virtual world, and finally, the interaction with each other. [13] The DT as an innovative concept of Industry 4.0 enables the convergence of the physical world with the virtual world through its definition-given bilateral data exchange. Data from physical reality are seamlessly transferred into virtual reality, allowing developed applications and services to influence the behavior and impact on the physical reality. Data are the underlying structure that enables the DT; as such, having good data management practices in place provides the realization of the concept. [1]

Specifically, in the context of the ongoing advances in innovative technologies, data have evolved from being merely static in nature to being a continuous stream of information. [14] In practice, the data generated in research activities are commonly stored in a decentralized manner on the computers of individual researchers or on local data media. [5] A recent study showed that only 12 percent of research data are stored in reliable repositories accessible by others. The far greater part, the so-called “shadow data,” remains in the hands of the researchers, resulting in the loss of non-reproducible data sets and of the possibility of extracting further knowledge from these data. [4] In addition, the backed-up data may become inconsistent and lose significance without the entire measurement series being available. According to Schadt et al. [15], the most efficient method currently available for transmitting large amounts of data to collaborative partners entails copying the data to a sufficiently large storage drive, which is then sent to the intended recipient. This observation can also be confirmed within CeMOS, where this practice of data transfer prevails. Not only is this method inefficient and a barrier to data sharing, but it can also become a security issue when dealing with sensitive data. With such an abundance of data flows, large amounts of data need to be processed and reliably stored, causing RDM to gain momentum within the research community. [14]

Based on the increasing awareness and the initiated ambition towards a reformation of publishing and communication systems in research, the international coalition of Wilkinson et al. [3] proposed the FAIR Data Principles in 2016. These principles are intended to serve as a guide for those seeking to improve the reusability of their data assets, according to which data are expected to be findable, accessible, interoperable, and reusable (FAIR) throughout the data lifecycle. The FAIR principles are briefly outlined below within the context of the technical requirements, as modeled by Wilkinson et al. [3]:

- Findable: Data are described with extensive metadata, which are given a globally unique and persistent identifier and are stored in a searchable resource.

- Accessible: Metadata are retrievable by their individual identifiers through a standardized protocol, which is open, free, and universally implementable, and which allows for an authentication procedure where necessary. The metadata must remain accessible even if the data are no longer available.

- Interoperable: (Meta)-data utilize a formal, broadly applicable language and use vocabularies that themselves follow the FAIR principles; moreover, references exist between (meta)-data.

- Reusable: (Meta)-data are characterized by relevant attributes and released on the basis of clear data usage licenses. The origin of the (meta)-data is clearly referenced. In addition, (meta)-data comply with domain-relevant community standards.
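To make the four criteria more tangible, the following Python sketch shows one way a metadata record and a small in-memory catalog could reflect them. It is only an illustration under stated assumptions: the class names `MetadataRecord` and `MetadataCatalog`, all field names, and the use of a UUID-based URN as the persistent identifier are hypothetical and not prescribed by Wilkinson et al. or by the authors.

```python
import uuid
from dataclasses import asdict, dataclass, field


@dataclass
class MetadataRecord:
    """Hypothetical metadata record; field names are illustrative only."""
    title: str
    license: str                      # Reusable: clear usage license
    provenance: str                   # Reusable: origin of the data
    # Findable: globally unique identifier (a UUID URN is an assumption here)
    identifier: str = field(default_factory=lambda: f"urn:uuid:{uuid.uuid4()}")
    # Interoperable: references to other (meta)data by identifier
    related: list[str] = field(default_factory=list)
    data_available: bool = True


class MetadataCatalog:
    """Searchable in-memory resource standing in for a real repository."""

    def __init__(self) -> None:
        self._records: dict[str, MetadataRecord] = {}

    def register(self, record: MetadataRecord) -> str:
        self._records[record.identifier] = record
        return record.identifier

    def get(self, identifier: str) -> dict:
        # Accessible: metadata are retrieved by identifier.
        return asdict(self._records[identifier])

    def search(self, term: str) -> list[str]:
        # Findable: simple case-insensitive title search.
        term = term.lower()
        return [r.identifier for r in self._records.values()
                if term in r.title.lower()]

    def retire_data(self, identifier: str) -> None:
        # Accessible: the metadata stay even when the data are gone.
        self._records[identifier].data_available = False
```

A real repository would expose `get` and `search` over a standardized protocol such as HTTP; the sketch deliberately keeps everything in memory so that the mapping between criterion and code stays visible.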

While the FAIR principles define the core foundation for a reliable RDM, there is also a need to ensure that the necessary scientific infrastructure is in place to support RDM. [16] In addition to the FAIR criteria, the concept of a data management plan (DMP) has a significant impact on the success of any RDM effort. The DMP is a comprehensive document that details the management of a research project’s data throughout its entire lifecycle. [17] A standard DMP in fact does not exist, as it must be individually tailored to the requirements of the respective research project. This requires an extensive understanding of the individual research project and an awareness of the complexity of the project-specific research data. The actual implementation of a DMP often creates additional work for researchers, such as data preparation or documentation. [16] With the aim of providing researchers with a useful instrument, a number of web-based collaborative tools for creating DMPs have since emerged, such as DMPTool, DMPonline, and Research Data Management Organizer (RDMO). [18]

In addition to the benefits already mentioned, the use of a research data infrastructure facilitates the visibility of scientists’ research as well as identifying new collaboration partners in industry, research, or funding bodies. [5,16] In the meantime, funding bodies in particular have recognized the necessity of effective RDM, making it a prerequisite for the submission of research proposals. [16,17]

Digital twins

The first pioneering principles for twinning systems can be dated back to training and simulation facilities of the National Aeronautics and Space Administration (NASA). In 1970, these facilities gained particular prominence during the thirteenth mission of the Apollo lunar landing program. Using a full-scale simulation environment of the command and lunar landing capsule, NASA engineers on Earth mirrored the condition of the seriously damaged spacecraft and tested all necessary operations for a successful return of the astronauts. All the possibilities could thus be simulated and validated before executing the real protocol to avoid the potentially fatal outcome of a mishandling. [19,20] The actual paradigm of a virtual representation of physical entities was initiated later in 2002. After the first introduction, Michael Grieves further developed his product life cycle (PLC) model, which was later given the term "digital twin" by NASA engineer John Vickers. The mirrored systems approach was popularized in 2010 when it was incorporated into NASA’s technical road map. [21,22,23]

The fundamental concept can be divided into the duality of the physical and virtual world. According to Figure 1, the physical world or space contains tangible components, i.e., machines, apparatuses, production assets, measurement devices, or even physical processes, the so-called PTs. In this context, the illustration shows a stylized device of arbitrary complexity on the left-hand side. On the right-hand side, its virtual counterpart is shown in the virtual world or space. The coexistence of both is ensured by the bilateral stream of data and information, which is introduced as a digital thread. All raw data accumulated from the physical world are sent by the PT to its DT, which aggregates them and provides accessibility. Vice versa, by processing these data, the DT provides the PT with refined analytical information. Each PT is allocated to precisely one DT. One of the goals is to transfer work activities from the physical world to the virtual world so that efficiency and resources are preserved. [23] Systems with a multitude of devices especially require flexible approaches for orchestration. Processes and devices must be able to be varied, rescheduled, and reconfigured. [24] Twin technologies act as enablers for this, providing the greatest possible degree of freedom.
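The bilateral data stream described above can be sketched in a few lines of Python. This is a simplified illustration, not the architecture of the paper: the classes `DigitalTwin` and `PhysicalTwin`, the moving-average "refined information," and the direct method call standing in for the digital thread are all assumptions made for the example.

```python
from statistics import mean


class DigitalTwin:
    """Aggregates raw data and returns refined information (illustrative)."""

    def __init__(self, window: int = 5) -> None:
        self.window = window
        self.history: list[float] = []

    def ingest(self, raw_value: float) -> dict:
        # PT -> DT: store the raw measurement so it stays accessible.
        self.history.append(raw_value)
        recent = self.history[-self.window:]
        # DT -> PT: refined analytical information derived from the raw data.
        return {"moving_average": mean(recent), "samples": len(self.history)}


class PhysicalTwin:
    """Stand-in for a device; it is bound to exactly one DigitalTwin."""

    def __init__(self, name: str, twin: DigitalTwin) -> None:
        self.name = name
        self.twin = twin
        self.last_feedback: dict | None = None

    def measure(self, raw_value: float) -> dict:
        # The method call plays the role of the digital thread in this sketch.
        self.last_feedback = self.twin.ingest(raw_value)
        return self.last_feedback
```

In a deployed system the two directions of the thread would typically be separate, asynchronous message channels; the synchronous call here only keeps the round trip easy to follow.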

|

References

Notes

This presentation is faithful to the original, with only a few minor changes to presentation. In some cases important information was missing from the references, and that information was added.