

| Full article title | Health data privacy through homomorphic encryption and distributed ledger computing: An ethical-legal qualitative expert assessment study |
|---|---|
| Journal | BMC Medical Ethics |
| Author(s) | Scheibner, James; Ienca, Marcello; Vayena, Effy |
| Author affiliation(s) | ETH Zürich, Flinders University, Swiss Federal Institute of Technology Lausanne |
| Primary contact | Email: effy dot vayena at hest dot ethz dot ch |
| Year published | 2022 |
| Volume and issue | 23 |
| Article # | 121 |
| DOI | 10.1186/s12910-022-00852-2 |
| ISSN | 1472-6939 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://bmcmedethics.biomedcentral.com/articles/10.1186/s12910-022-00852-2 |
| Download | https://bmcmedethics.biomedcentral.com/counter/pdf/10.1186/s12910-022-00852-2.pdf (PDF) |

Abstract

Background: Increasingly, hospitals and research institutes are developing technical solutions for sharing patient data in a privacy-preserving manner. Two of these technical solutions are homomorphic encryption and distributed ledger technology. Homomorphic encryption allows computations to be performed on data without the data ever being decrypted. Therefore, homomorphic encryption represents a potential solution for conducting feasibility studies on cohorts of sensitive patient data stored in distributed locations. Distributed ledger technology provides a permanent record of all transfers and processing of patient data, allowing data custodians to audit access. A significant portion of the current literature has examined how these technologies might comply with data protection and research ethics frameworks. In the Swiss context, these instruments include the Federal Act on Data Protection and the Human Research Act. There are also institutional frameworks that govern the processing of health-related and genetic data at different universities and hospitals. Given Switzerland’s geographical proximity to European Union (EU) member states, the General Data Protection Regulation (GDPR) may impose additional obligations.

Methods: To conduct this assessment, we carried out a series of qualitative interviews with key stakeholders at Swiss hospitals and research institutions. These included legal and clinical data management staff, as well as clinical and research ethics experts. These interviews were carried out with two series of vignettes that focused on data discovery using homomorphic encryption and data erasure from a distributed ledger platform.

Results: For our first set of vignettes, interviewees were prepared to allow data discovery requests if patients had provided general consent or ethics committee approval, depending on the types of data made available. Our interviewees highlighted the importance of protecting against the risk of re-identification given different types of data. For our second set, there was disagreement amongst interviewees on whether they would delete patient data locally, or delete data linked to a ledger with cryptographic hashes. Our interviewees were also willing to delete data locally or on the ledger, subject to local legislation.

Conclusion: Our findings can help guide the deployment of these technologies, as well as determine ethics and legal requirements for such technologies.

Keywords: data protection, privacy preserving technologies, qualitative research, vignettes, interviews, distributed ledger technology, homomorphic encryption

Introduction

Advanced technological solutions are increasingly used to resolve privacy and security challenges with clinical and research data sharing.[1][2] The legal assessment of these technologies so far has focused on compliance. Significant attention has been paid to the modernized General Data Protection Regulation (GDPR) of the European Union (EU) and its impacts. In particular, the GDPR restricts the use and transfer of sensitive personal data, including genetic-, medical-, and health-related data. Further, under the GDPR, data custodians and controllers must take steps to ensure the auditability of personal data, including patient data. Specifically, the GDPR’s provisions guarantee the right to access information about processing (particularly automated processing) and allow data subjects to monitor the use of their data.[3] These rights exist alongside requirements for data custodians and controllers to keep records of how they have processed personal data.[4] The GDPR also introduces a right of erasure, which allows an individual to request that a particular data controller or data custodian delete their data.

At the same time, the increased use of big data techniques in health research and personalized medicine has led to a surge in the volume of data collected by hospitals and healthcare institutions.[1] Therefore, guaranteeing patient privacy, particularly for data shared between hospitals and healthcare institutions, represents a significant technical and organizational challenge. Under the GDPR’s relative approach to anonymization, whether data counts as anonymized depends on both the data itself and the environment in which it is shared. In addition, it is currently unclear whether the GDPR permits general or “broad” consent for a research project.[5] A further issue concerns how patient data might be used for research. On the one hand, patients are broadly supportive of their data being used for research purposes or for improving the quality of healthcare. On the other hand, patients have concerns about data privacy, as well as about their data being misused or handled incorrectly.[6]

In response to these challenges, several technological solutions have emerged to aid compliance with data protection legislation.[7] Two examples of these with differing objectives are homomorphic encryption (HE) and distributed ledger technology (DLT). HE can allow single data custodians to share aggregated query results without sharing the underlying data used to answer the query.[8][9] For example, HE can be particularly useful for performing queries on data which must remain confidential, such as trade secrets.[10] In addition, as we discuss in this paper, HE can be useful for researchers who wish to conduct data discovery or feasibility studies on patient records.[11] A feasibility study is a piece of research conducted before a main research project to determine whether it is possible to conduct a larger, structured research project. Feasibility studies can be used to assess the number of patients required for a main study, as well as response rates and strategies to improve participation.[12] A challenge with conducting feasibility studies is that datasets may be held by separate data custodians at multiple locations (such as multiple hospitals). To conduct a feasibility study, the data custodian at each location would need to guarantee data security before transferring the data. Although necessary, this process can be time-consuming, particularly due to the need for bespoke governance arrangements.[13] However, HE can allow data discovery queries to be performed on patient records without the data being transferred.[11] Because the underlying data is never transferred, the use of HE, combined with adequate organizational controls, could satisfy both the GDPR’s definition of anonymized data and its standard for state-of-the-art encryption measures.[2][7]
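The aggregation step behind such a feasibility query can be sketched with the textbook Paillier cryptosystem, which is additively homomorphic: multiplying two ciphertexts yields an encryption of the sum of their plaintexts, so per-hospital counts can be combined without decrypting any of them. This is a minimal sketch under stated assumptions, not the scheme used by MedCo or any other platform: the primes are toy values far too small for real use, the hospital names and local counts are hypothetical, and a single querier holds the private key (so, unlike a deployment with collective or distributed keys, that querier could also decrypt each individual count).

```python
import math
import secrets
from functools import reduce

# Toy key material: two Mersenne primes (2**31 - 1 and 2**61 - 1).
# Insecure; chosen only so the example runs instantly.
P, Q = 2**31 - 1, 2**61 - 1


def keygen(p: int = P, q: int = Q):
    n = p * q
    lam = (p - 1) * (q - 1)   # phi(n); usable because gcd(n, lam) == 1 here
    mu = pow(lam, -1, n)      # modular inverse (Python 3.8+)
    return n, (lam, mu, n)    # (public key, private key)


def encrypt(n: int, m: int) -> int:
    n2 = n * n
    while True:  # random r in [1, n - 1] coprime to n
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    # Generator g = n + 1, so g**m mod n**2 equals 1 + m*n mod n**2.
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(priv, c: int) -> int:
    lam, mu, n = priv
    x = pow(c, lam, n * n)
    return ((x - 1) // n) * mu % n


def add_encrypted(n: int, c1: int, c2: int) -> int:
    # Multiplying ciphertexts adds the underlying plaintexts.
    return (c1 * c2) % (n * n)


pub, priv = keygen()
# Hypothetical per-hospital counts of patients matching a feasibility query.
local_counts = {"hospital_a": 42, "hospital_b": 17, "hospital_c": 8}
ciphertexts = [encrypt(pub, count) for count in local_counts.values()]
encrypted_total = reduce(lambda a, b: add_encrypted(pub, a, b), ciphertexts)
print(decrypt(priv, encrypted_total))  # 67
```

Only the encrypted total needs to leave the aggregation step; in this toy the individual ciphertexts are still decryptable by the key holder, which is the gap that collective key schemes are designed to close.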

By contrast, DLT is not designed to guarantee privacy, but rather to increase transparency and trust. DLT attempts to achieve this objective by offering each agent in a processing network a copy of a chain of content. This chain of content, known as the ledger, is append-only: existing entries are read-only, and each new entry, including each record of access, is time-stamped. Accordingly, this ledger provides all agents with a record of when access to data occurs.[14] To prevent tampering, many ledgers require a consensus mechanism, such as a cryptographic “proof of work” algorithm, to be satisfied before new records can be added.[15]
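The tamper-evidence property described above can be illustrated with a minimal single-process sketch: each entry stores the hash of the previous entry, a timestamp, and a toy proof-of-work nonce, so altering any earlier entry invalidates the chain. This is an illustration under loud assumptions, not a real DLT: there is no network, no replication across agents, and no consensus among nodes, and the `Entry` and `Ledger` names, the payload fields, and the difficulty value are all hypothetical.

```python
import hashlib
import json
import time
from dataclasses import dataclass

GENESIS_HASH = "0" * 64


@dataclass
class Entry:
    index: int
    timestamp: float
    payload: dict  # hypothetical schema, e.g. who queried which dataset
    prev_hash: str
    nonce: int = 0
    digest: str = ""

    def compute_hash(self) -> str:
        # Serialize every field except the stored digest itself.
        body = json.dumps(
            [self.index, self.timestamp, self.payload, self.prev_hash, self.nonce],
            sort_keys=True,
        )
        return hashlib.sha256(body.encode("utf-8")).hexdigest()


class Ledger:
    def __init__(self, difficulty: int = 2):
        self.difficulty = difficulty  # required number of leading hex zeros
        self.entries = []             # append-only list of Entry objects

    def append(self, payload: dict) -> Entry:
        prev = self.entries[-1].digest if self.entries else GENESIS_HASH
        entry = Entry(len(self.entries), time.time(), payload, prev)
        # Toy proof of work: increment the nonce until the hash meets the target.
        target = "0" * self.difficulty
        entry.digest = entry.compute_hash()
        while not entry.digest.startswith(target):
            entry.nonce += 1
            entry.digest = entry.compute_hash()
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        # Recompute every hash and check each link to the previous entry.
        prev = GENESIS_HASH
        target = "0" * self.difficulty
        for entry in self.entries:
            if entry.prev_hash != prev:
                return False
            if entry.compute_hash() != entry.digest or not entry.digest.startswith(target):
                return False
            prev = entry.digest
        return True


ledger = Ledger()
ledger.append({"actor": "researcher_1", "action": "feasibility_query"})
ledger.append({"actor": "researcher_2", "action": "feasibility_query"})
print(ledger.verify())  # True
ledger.entries[0].payload["actor"] = "someone_else"  # rewrite history
print(ledger.verify())  # False
```

In a distributed setting, every agent holding a copy could run the same verification, which is what lets the ledger serve as a shared audit record.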

Perhaps the most famous implementation of DLT is blockchain, which is designed to record agents transacting with digital assets.[16] However, proof of work algorithms were initially employed to certify the authenticity of emails and block spam messages.[17] Further, an algorithm similar to that employed in many blockchain implementations was initially used to record the order in which digital documents were created.[14][18] These examples demonstrate that DLT may have uses beyond its current implementations.[18]

Accordingly, some scholars have proposed using DLT-style implementations to create an auditable record of access to patient data.[19][20] Proponents of this approach argue that access to an auditable record could help increase patient trust in the security of their data.[21][22] Further, DLT could be coupled with advanced privacy-enhancing technologies to enable auditing for feasibility studies and ensure that only authorized entities can access patient records.[20]

Nevertheless, there are ongoing questions as to the degree to which HE and DLT can be used to store and process personal data whilst remaining GDPR-compliant.[7][23] In particular, the read-only nature of the ledger underpinning DLT might conflict with the right of erasure contained in the GDPR. The relationship between data protection law and these novel technologies is further complicated when considering data transfer outside the EU.[24][25] Beyond these legal considerations, there are deeper normative issues regarding the relationship between individual privacy and the benefits flowing from information exchange. These issues are particularly pronounced when dealing with healthcare, medical, or biometric data, where patients are forced into an increasingly active role in how their data is used.[26]

Accordingly, the purpose of this paper is to assess the degree to which novel privacy-enhancing technologies can assist key data custodian stakeholders in complying with regulations. For this paper, we focus on Switzerland as a case study. Switzerland is not an EU member state and is therefore not required to implement the GDPR into its national data protection law (the Datenschutzgesetz, or Federal Act on Data Protection [FADP]). However, because of Switzerland’s proximity to EU member states, there is significant data transfer between Swiss and EU data custodians.[27] The ongoing transfer of data between Switzerland and the EU therefore requires the FADP to offer adequate protection, as assessed under Article 45 of the GDPR.[28][29] Because the FADP dates from 1992, the Swiss Federal Parliament adopted a revised FADP in September 2020. Expected to come into effect in 2022, the revised FADP is designed to achieve congruence with the GDPR.[30]

Switzerland also has separate legislation concerning the processing of health-related and human data for medical research: the Human Research Act (HRA) and the Human Research Ordinance (HRO). These instruments impose additional obligations beyond the FADP for the processing of health-related data for scientific research purposes.[29][31] Crucially, the HRA permits the reuse of health-related data for future secondary research, subject to consent and ethics approval. The HRA also treats genetic and non-genetic data differently, a form of genetic exceptionalism.[32] Specifically, anonymized genetic data requires that the patient “not object” to secondary use, whilst anonymized non-genetic data can be transferred without consent. Under the HRA, research with coded health-related data can be conducted with general consent, whilst coded genetic data requires consent for a specific research project.[1] Recent studies indicate that most health research projects in Switzerland use coded data.[33] Coded data is considered analogous to pseudonymized data under the GDPR.[1][34]
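The four combinations described in this paragraph can be summarized as a lookup table. This sketch encodes only the simplified summary given above; it omits ethics approval and every other condition in the HRA and HRO, the enum and function names are hypothetical, and the table is not a restatement of the statute.

```python
from enum import Enum


class DataType(Enum):
    GENETIC = "genetic"
    NON_GENETIC = "non-genetic"


class Form(Enum):
    ANONYMIZED = "anonymized"
    CODED = "coded"  # roughly analogous to pseudonymized data under the GDPR


# Consent needed for secondary research use, per the simplified summary above.
CONSENT_REQUIRED = {
    (DataType.NON_GENETIC, Form.ANONYMIZED): "none",
    (DataType.GENETIC, Form.ANONYMIZED): "patient has not objected",
    (DataType.NON_GENETIC, Form.CODED): "general consent",
    (DataType.GENETIC, Form.CODED): "specific consent for the project",
}


def consent_required(data_type: DataType, form: Form) -> str:
    return CONSENT_REQUIRED[(data_type, form)]


for (dtype, form), rule in CONSENT_REQUIRED.items():
    print(f"{form.value:>10} {dtype.value:<11} -> {rule}")
```

Laid out this way, the genetic exceptionalism is visible as the asymmetry between the two rows for each data form.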

Finally, Switzerland is a federal state, with different cantonal legislation for data protection and health-related data. This federal structure has the potential to undermine nationwide strategies for interoperable data sharing. Therefore, the Swiss Personalised Health Network (SPHN) was established to encourage interoperable patient data sharing. In addition to technical infrastructure, the SPHN has provided a governance framework to standardize the ethical processing of health-related data by different hospitals.[31]

In this paper we describe a series of five vignettes designed to test how key stakeholders perceive using HE and DLT for healthcare data management. The first three vignettes focus on governing data transfer with several different types of datasets using HE. These include both genetic and non-genetic data, to capture the genetic exceptionalism under the Swiss regulatory framework. The latter two vignettes focus on erasure requests for records of data stored using DLT. We gave these vignettes to several key stakeholders in Swiss hospitals, healthcare institutions, and research institutions to answer. We then transcribed and coded those answers. We offer recommendations in this paper regarding the use of these technologies for healthcare management.

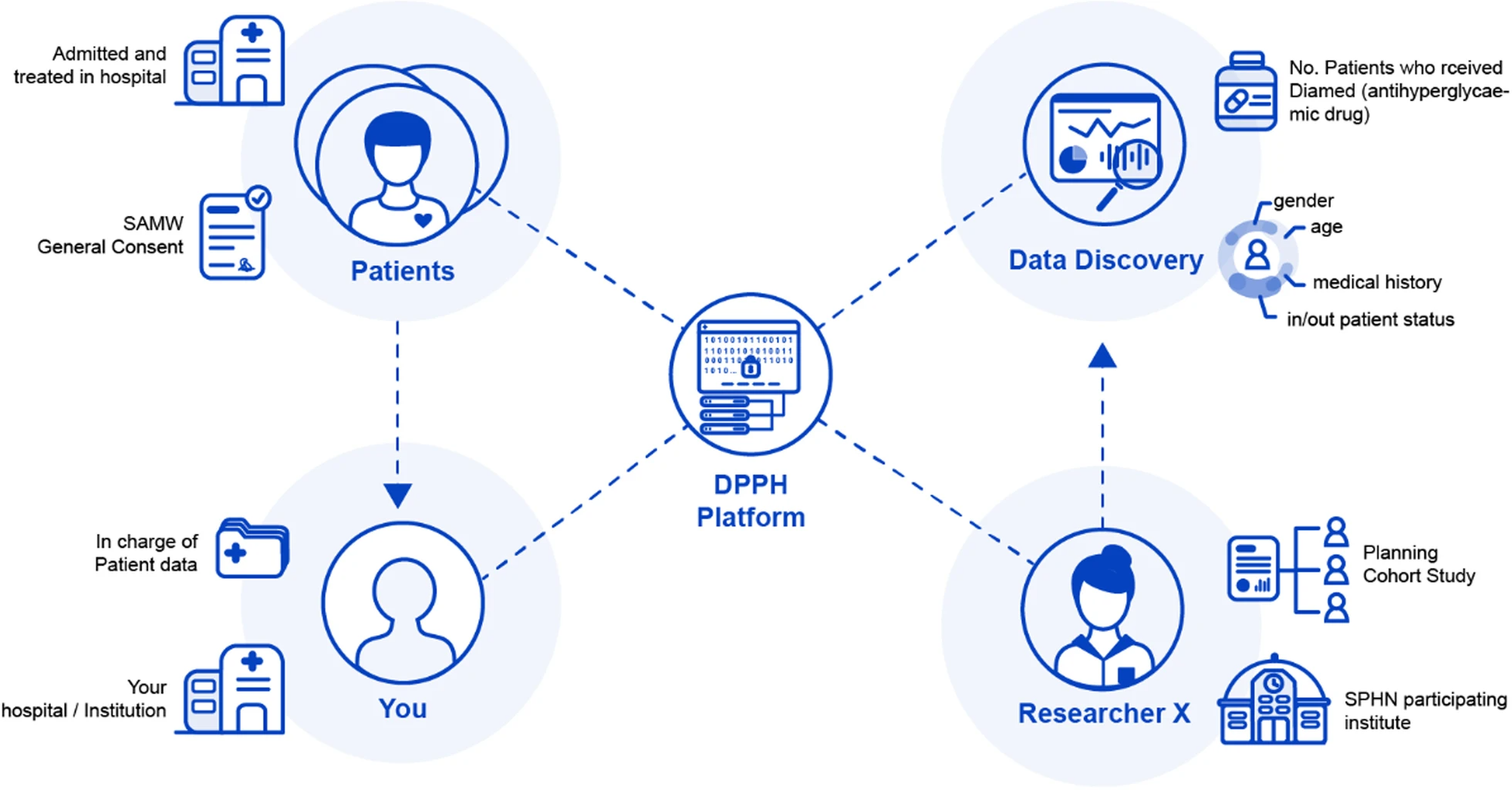

Our paper is the product of an ethico-legal assessment of HE and DLT, conducted as part of the Data Protection and Personalised Health (DPPH) project. The DPPH project is an SPHN-Personalized Health and Related Technologies (PHRT) driver project designed to assess the use of these technologies for multisite data sharing. Specifically, the platform MedCo, which is the main technical output from the DPPH project, uses HE for data feasibility requests on datasets stored locally at Swiss hospitals.[11] Access to this data will be monitored using distributed ledger technology to guarantee auditability. Therefore, the purpose of this study is to empirically assess the degree to which these technologies can help bridge the gap between these multiple layers of regulation.

Methods

To explore the impact of these technologies on regulation governing health data sharing, we conducted an interview study with expert participants in the Swiss health data sharing landscape. Our interviewees were drawn from legal practitioners, ethics practitioners, and clinical data managers working at Swiss hospitals, research institutes, and governance centers. We developed an interview study protocol built around vignettes, with one set of vignettes for each of the two technologies described above. Vignettes are a useful tool for both qualitative and quantitative research, and for research with both small and large samples. In qualitative research, they allow for both structured data collection and comparison of responses.[35][36] Further, vignettes can prompt interviewees to explain the reasons for their responses more comprehensively than open-ended questions.[37] We chose vignettes for this study because we wanted to assess not only perspectives on specific technologies but also how various stakeholders would apply these principles in practice.[38] Our findings can help assess the degree to which these technologies can help bridge the gap between the different layers of the regulatory framework described above.

Part A of our vignette study contained three scenarios concerning a data discovery or feasibility request on patient data. These scenarios were designed using example data discovery protocols from the DPPH project.[11] The first scenario pertained to a data discovery request on non-genetic patient data to build a cohort of patients who had received an anti-diabetic drug. The second scenario pertained to a data discovery request on genetic patient data to build a cohort of patients with biomarkers relevant to skin cutaneous melanoma. The third scenario pertained to a data discovery request performed on a larger set of melanoma-related biomarkers. However, unlike the other two scenarios, this scenario concerned a request from a non-SPHN-affiliated commercial research institute conducting drug discovery on an anti-melanoma drug. For each scenario, participants were asked whether they would accept the request with general consent from patients, request specific informed consent, or refer the matter to an ethics committee. Alternatively, participants could indicate another method of handling the request. For each scenario, participants were also asked whether they would permit the feasibility request if general consent had not been sought from patients. The Swiss Academy of Medical Sciences general consent form was used as the template for all the vignettes in our interview guide.

Part B of our vignette study contained two additional scenarios. The fourth overall scenario concerned a data erasure request for patient data from the second scenario of Part A that had been included as part of a cohort. In this scenario, the patient was concerned about an adverse finding regarding their visa status.[39] The fifth scenario was identical to the fourth scenario, except that the patient’s data had already been included in a cohort that was sufficiently large for research and sent for publication. Both the background and the scenario stated that personally identifying data was not stored as part of the ledger. Instead, the locally available data was linked via a cryptographic hash to the corresponding record in the local ledger. This hash could be deleted, breaking the link between the data and the ledger and making the patient’s data unavailable for further research.
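The linking arrangement in these scenarios can be sketched as follows: the ledger stores only a hash, and the hospital’s local store keeps the data together with the secret needed to recompute that hash. The per-record random salt is an assumption added here (an unsalted hash of a short patient identifier could be recomputed by anyone who guesses the identifier), and the class, method, and identifier names are hypothetical. Whether breaking the link in this way amounts to erasure under the GDPR or the FADP is the question the scenarios put to interviewees.

```python
import hashlib
import secrets


class LocalStore:
    """Hypothetical hospital-side store; the shared ledger only ever sees hashes."""

    def __init__(self):
        self._rows = {}  # record_id -> (salt, data)

    def add(self, record_id: str, data: dict) -> str:
        salt = secrets.token_bytes(16)
        self._rows[record_id] = (salt, data)
        return self._hash(salt, record_id)  # value to publish on the ledger

    @staticmethod
    def _hash(salt: bytes, record_id: str) -> str:
        return hashlib.sha256(salt + record_id.encode("utf-8")).hexdigest()

    def resolve(self, ledger_hash: str):
        # Return the local data a ledger entry points to, or None if unlinked.
        for record_id, (salt, data) in self._rows.items():
            if self._hash(salt, record_id) == ledger_hash:
                return data
        return None

    def erase(self, record_id: str) -> None:
        # Deleting the row deletes the salt too, so the hash on the ledger
        # can no longer be recomputed or matched to this record.
        self._rows.pop(record_id, None)


store = LocalStore()
ledger_hashes = []  # stands in for the append-only ledger
ledger_hashes.append(store.add("patient-0001", {"cohort": "melanoma"}))
print(store.resolve(ledger_hashes[0]))  # {'cohort': 'melanoma'}
store.erase("patient-0001")
print(store.resolve(ledger_hashes[0]))  # None: the ledger entry remains, the link is gone
```

The ledger itself is never modified; only the local side changes, which is why this design sidesteps the conflict between an append-only record and a deletion request rather than resolving it.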

In addition, for each of our scenarios, we coupled the qualitative answers with a set of Likert-like scale questions for the four ethical processing factors from the SPHN Ethical Framework for Responsible Data Processing in Personalized Health.[31] These four ethical factors were privacy, data fairness, respect for persons, and accountability (see Fig. 1). The purpose of this was to assess the degree to which interviewees rated the importance of competing ethical factors in research. (The vignettes and questionnaires used in this project are contained in Additional File 1.)


An initial list of interviewees was compiled by examining the web pages of relevant institutions in Switzerland to identify appropriate subject matter experts. We targeted clinical data management experts, data protection experts, clinical ethics advisors, in-house legal counsel, external legal advisors affiliated with institutions, and health policy experts. These interviewees work at institutions across Switzerland’s linguistic and geographic regions. Specifically, these institutions included the five major university hospitals in Switzerland, among them Geneva University Hospitals (Hôpitaux universitaires de Genève, or HUG), the University Hospital of Bern (Universitätsspital Bern, or Inselspital), Lausanne University Hospital (Centre Hospitalier Universitaire Vaudois, or CHUV), and the University Hospital of Zürich (Universitätsspital Zürich, or USZ), as well as various universities and research institutions. We also drew interviewees from institutions involved in ethics or legal governance for healthcare. Interview invitations were sent to participants who had a publicly available email address, and reminders were sent after two weeks. If an invitee thought another person was a more appropriate fit for the research project, we asked them to nominate that person or other potential interviewees.

Ethics approval for this project was obtained from the ETH (Eidgenössische Technische Hochschule) Zürich ethics review committee (2019-N-69). Interviewees who agreed to participate were first asked to sign a consent form and were then asked again to provide verbal consent at the start of the interview. We also sought approval to perform chain sampling, such that at the end of each interview we asked our interviewees to recommend other potential interviewees. Some interviews were conducted with more than one interviewee as part of a group. Where interviewees requested this, we obliged on the grounds that results would be more consistent if multiple experts from the same institution agreed on answers. Five potential interviewees opted out of this study because they were concerned that they lacked the expertise to participate. All interviews were recorded with two devices: one provided by the ETH Zürich audio visual department, and one owned by the first author. The interviews were conducted either in person or via voice-over-IP (VoIP) software such as Skype or Zoom. On average, each interview took approximately 42 minutes to complete.

The interviews were transcribed and then coded for themes by the first author using a combined inductive and deductive approach. The inductive coding process involved the first author reading through each transcript, familiarizing themselves with the interviewee’s answers, and identifying the interviewee’s justifications. For example, if the interviewee wanted an ethics committee to review a data discovery request because of existing policy, this answer was labelled as "policy consistency." The first author then generated initial codes around the interview questions and answers. These included whether the interviewee would release the data without further consent, seek informed consent from patients, or refer the matter to an ethics committee. These initial codes were then used to generate further codes, such as reasons for an interviewee’s decision or the interviewee’s understanding of technical terms. For example, these could include why the interviewee would refer the matter to an ethics committee for examination, or what the interviewee understood by anonymized data.[40] Once these initial codes were applied, the first author grouped them into themes for each vignette. This grouping aided the analysis of the results according to the scenario and the circumstances.[41] This qualitative data analysis was complemented with numerical data analysis to measure how many interviewees were willing to accept data transfer requests under the scenarios depicted in our vignettes.
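The numerical side of this analysis, counting coded decisions per scenario and grouping reason codes into themes, can be sketched as below. The coded answers, decision labels, and code-to-theme mapping are invented for illustration and are not the study’s data; only the code label "policy consistency" comes from the text above.

```python
from collections import Counter, defaultdict

# Hypothetical coded answers: (session, scenario, decision code, reason codes).
CODED_ANSWERS = [
    ("S01", 1, "accept_general_consent", ["aggregated data"]),
    ("S02", 1, "refer_ethics_committee", ["policy consistency"]),
    ("S03", 1, "accept_general_consent", ["aggregated data", "re-identification risk"]),
    ("S01", 2, "require_specific_consent", ["re-identification risk"]),
]

# Hypothetical mapping from reason codes to broader themes.
THEMES = {
    "aggregated data": "nature of the data",
    "re-identification risk": "nature of the data",
    "policy consistency": "institutional governance",
}


def decisions_by_scenario(answers):
    # Count how many sessions chose each decision, per scenario.
    tallies = defaultdict(Counter)
    for _session, scenario, decision, _reasons in answers:
        tallies[scenario][decision] += 1
    return tallies


def themes_by_scenario(answers):
    # Count how often each theme is invoked, per scenario.
    grouped = defaultdict(Counter)
    for _session, scenario, _decision, reasons in answers:
        for reason in reasons:
            grouped[scenario][THEMES[reason]] += 1
    return grouped


decisions = decisions_by_scenario(CODED_ANSWERS)
themes = themes_by_scenario(CODED_ANSWERS)
print(decisions[1]["accept_general_consent"])  # 2
print(themes[1]["nature of the data"])  # 3
```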

Results

Overall, 13 interview sessions with 16 interviewees were conducted between September 2019 and September 2020. Data collection was extended due to a lack of immediate uptake from participants (in part due to the COVID-19 pandemic), and to achieve theoretical saturation.[42] Previous research has indicated that as few as 12 interviews can be sufficient to achieve thematic saturation.[43] Accordingly, the interviews included in this study were sufficient to achieve theoretical saturation.

Demographic details for each of the interviewees are included below in Table 1.


Part A

First scenario—Data discovery on non-genetic health data

For the first scenario (demonstrated by Additional File 1: Figure S1), interviewee groups were prepared to accept the request if general consent had been obtained from the patients (n = 11) or would refer the matter to an ethics committee for further approval (n = 5). However, the reasons for approving the request differed between the interviewee groups. Four interviewee groups pointed out that the data being requested in the circumstances was aggregated data. Because this data was aggregated, and not personal data, it could be shared without the need for further informed consent. However, these interviewee groups also highlighted that they would inspect the request to see what types of data were being shared before permitting it. They justified this answer on the grounds that there was a separate risk of re-identification from repeated queries. Another interviewee who was prepared to refer the request to an ethics committee highlighted how inpatient/outpatient dates could be used to single out one or more records. One interviewee who said that they would accept the request without informed consent mentioned that they wanted to ensure that, from a technical perspective, only records for which general consent had been obtained were available.
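The risk of re-identification from repeated queries, and the consent filter one interviewee described, can be illustrated with a small differencing attack: two aggregate counts that differ only in excluding one admission date reveal the treatment of the patient admitted on that date. The records, field layout, and suppression threshold below are hypothetical, and minimum-count suppression is only a partial mitigation (it does not block every combination of overlapping queries); it is not presented as the safeguard used by any particular platform.

```python
from typing import Callable, NamedTuple, Optional


class Record(NamedTuple):
    patient_id: str
    general_consent: bool
    on_antidiabetic: bool
    admitted_on: str  # ISO date


# Hypothetical records held by one hospital.
RECORDS = [
    Record("p1", True, True, "2019-03-01"),
    Record("p2", True, True, "2019-03-02"),
    Record("p3", True, False, "2019-03-02"),
    Record("p4", False, True, "2019-03-05"),
    Record("p5", True, True, "2019-03-07"),
]

MIN_CELL = 3  # hypothetical threshold: smaller counts are suppressed


def count(predicate: Callable[[Record], bool], min_cell: int = MIN_CELL) -> Optional[int]:
    # Records without general consent are never visible to queries.
    n = sum(1 for r in RECORDS if r.general_consent and predicate(r))
    return n if n >= min_cell else None


def on_drug(r: Record) -> bool:
    return r.on_antidiabetic


def on_drug_not_march_7(r: Record) -> bool:
    return r.on_antidiabetic and r.admitted_on != "2019-03-07"


# Without suppression, the difference singles out the patient admitted on 2019-03-07.
leak = count(on_drug, min_cell=0) - count(on_drug_not_march_7, min_cell=0)
print(leak)  # 1
# With suppression, the second query is refused, so this difference cannot be taken.
print(count(on_drug), count(on_drug_not_march_7))  # 3 None
```

Note that patient `p4` never contributes to any count because the record lacks general consent, mirroring the technical filter the interviewee asked for.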

For the second scenario (demonstrated by Additional File 1: Figure S2), some interviewees did not distinguish between genetic and non-genetic health-related data. Therefore, they would still have agreed to make this data accessible if general consent had been obtained from the patients. The most common justification for this reasoning was that general consent would permit access to both anonymized genetic and anonymized non-genetic health-related data. This conclusion is consistent with Articles 32 and 33 of the HRA. Article 32, Paragraph 2 provides that further use can be made of genetic data if informed consent has been sought from the patient beforehand. Likewise, Article 33, Paragraph 2 permits further use of non-genetic data if informed consent has been sought from the patient beforehand. Several interviewee groups (n = 6) expressed doubt as to whether genetic data could ever be considered anonymized data due to the high potential for re-identification. However, two interviewee groups were prepared to permit access to genetic data with general consent only. Their justification for this decision was that the mutation in the scenario was relatively common, and as such the data could not be used to identify individuals. Conversely, another interviewee who would have permitted the request on non-genetic data with general consent would not have permitted access to genetic data without specific consent from the patient. We will return to this comment when addressing the legal and ethical considerations arising from this paper.

For the third scenario (demonstrated by Additional File 1: Figure S3), all interviewees were willing to provide access to a commercial institute, provided this organization agreed to be bound by the SPHN principles. The same concern regarding checking the types of genetic data requested and the potential for re-identification remained for this scenario. Further, interviewees disagreed on how the relationship between the data custodian and the requesting commercial institute should best be handled. On the one hand, four interviewees highlighted how, in their experience, patients were willing to support or even participate in research. Despite this willingness, these interviewees argued that if patients were not made aware that their data might be used for commercial research, it was questionable whether informed consent had been obtained in the circumstances. On the other hand, two other interviewee groups highlighted that it was the legal responsibility of the institute sharing the data to ensure compliance with data protection and research ethics laws. Accordingly, these interviewees argued that they would ensure the requesting institution had signed appropriate data transfer and data use agreements before sending the data.

In a similar fashion, another interviewee mentioned that for this scenario, they would refer the request to an ethics committee to ensure that the appropriate contractual mechanisms were in place to transfer data. Further, this interviewee mentioned that pharmaceutical companies often contacted their institution to run feasibility studies and determine whether there were enough patients. Finally, several interviewee groups (n = 5) mentioned the reputation of the requesting institution, as well as where it was located, as a factor in permitting feasibility requests. This decision was justified on the grounds that Switzerland has assessed several jurisdictions (such as EU member states) as offering adequate data protection laws. In these cases, these interviewees would be prepared to send data to institutions or private companies in these jurisdictions. However, for jurisdictions without such an adequacy assessment, our interviewees explained that they would exercise greater caution in permitting access.

Regarding these three scenarios...

A consistent theme that emerged amongst interviewees across all three scenarios was guaranteeing that the requesting researcher or institution needed the data for specified, explicit, and legitimate purposes. Irrespective of the type of data, interviewees mentioned that it was important to guarantee that the data requested matched the purpose stated by the requesting institute. This perspective is consistent with Article 5 of the GDPR, which states that personal data shall be collected for specified, explicit, and legitimate purposes and not further processed in a manner that is incompatible with those initial purposes. This principle is known as "purpose limitation."[5] The practical effect of purpose limitation is that data must be collected for a particular research or statistical processing purpose with the explicit consent of research subjects.[44] Alternatively, there may be limited circumstances where data can be collected for research purposes without explicit and informed consent. These alternatives are discussed in further detail in the Discussion section.

Another recurring theme across all scenarios was the question of general consent and the appropriate information to be included in this form. Several interviewees raised concerns about the form that was supplied during the interview sessions. These interviewees felt that patients should be given the option to opt out of having both their encoded and their anonymized data used for research purposes. If patients could only opt out of having their encoded data, but not their anonymized data, used for research purposes, these interviewees reasoned that this would impinge on patient rights. One interviewee voiced concern about whether patients would have the capacity to understand the technology used to access and store patient data. According to this interviewee, patients might distrust the DPPH platform if they believed it was used to access their data while bypassing the oversight of a research ethics committee. A further point of concern was the proliferation of institution-specific general consent forms (n = 8).

Several interviewees mentioned that they would be uncertain about reusing data from another institution if that institution used a different type of consent form. Further, one interviewee mentioned that general consent forms had only recently been introduced into their hospital. As a result, the number of patient records for which general consent for research use had been sought was relatively small. The lack of a uniform general or prospective consent form is frequently reported as an issue in both Switzerland and other jurisdictions. However, several interviewees noted that a potential solution to these problems existed via Article 34 of the HRA. With ethics committee approval, this provision permits data to be reused for research without consent if obtaining consent would be impossible or disproportionately difficult, no documented refusal exists, and the interests of research outweigh those of the individuals concerned. We will discuss strategies for dealing with the proliferation and variety of consent forms in the Discussion section.

Another point of concern related to expertise with respect to the scenarios. As mentioned previously, five experts who were contacted to be interviewed declined to participate on the grounds that they lacked the technical expertise to answer the questions. This concern reflects a broader issue where decision-making institutions, such as institutional review boards, might lack the educational and practical background to adequately assess the risks of computational science research. This lack of experience is not an individual failing but rather an artifact of the shortage of experienced researchers available to provide this expertise to ethics committees.[45]

Figure 2 summarizes the relationship between the different actors who are involved in data discovery requests on the DPPH platform.


Part B

Scenario four—Erasure request before publication

For the fourth scenario (demonstrated by Additional File 1: Figure S4), a consistent theme was the competing ethical considerations at play. On one hand, most of our interviewees at universities and research institutes explained that it was important to respect the wishes of patients and guarantee their privacy. In particular, the scenario concerned a patient wishing to delete their data for reasons relating to the validity of their residency permit. In addition, under Article 5 of the FADP, controllers are obliged to delete any information about a data subject that is incorrect or incomplete, granting a limited right of erasure.[46] On the other hand, interviewees from hospitals noted they had legal and institutional responsibilities to guarantee the completeness of data. These legal obligations include being able to conduct audits on patient data, as well as cantonal legislative requirements not to erase any patient data. Finally, some interviewees mentioned that an erasure request would be a relatively rare occurrence. This observation reflects similar perspectives regarding erasure requests under the GDPR.[47]

From an ethical perspective, the need to ensure that data used in research was openly available was also an important consideration. This dilemma highlights some of the ethical conflicts associated with deleting patient data. The interviewees who were not prepared to delete local data noted that the concern of the patient in this scenario did not relate to the erasure of data for treatment purposes. Maintaining patient data is important for ensuring ongoing quality of care and auditing. Further, deleting research data raises ethical concerns about wasting both the data and research participants' time.[48] However, emergent models of patient data ownership are increasingly challenging the idea that healthcare professionals rather than patients control data.[49] For this research project, interviewees also highlighted that under the current Federal Act on Data Protection, data can only be processed for a scientific publication if it has been anonymized. Therefore, these interviewees were prepared for aggregate (and therefore anonymized) data to be included in a publication, provided that no future research could be conducted with the patient’s data. The patient’s data would either not be deleted, or would be sealed so that it remained accessible for clinical treatment but not for research.

Scenario five—Erasure request after publication

With specific regard to the fifth scenario (demonstrated by Additional File 1: Figure S5), our interviewees highlighted different sources of potential authority to justify processing the patient’s request. One interviewee pointed out that the general consent form in the scenario guaranteed that patient data would not be available for new research projects if the patient withdrew their consent. According to this interviewee, this wording would be wide enough to encompass the patient’s request. Another interviewee highlighted the fact that Article 10 of the Human Research Ordinance requires data to be anonymized once it has been evaluated. Further, because data must be anonymized before it is included in a scientific publication, these interviewees believed that no personally identifying data could lawfully be included as part of a publication. Nevertheless, one of these interviewees conceded that from an ethical perspective, determining whether to delete data would depend on the size of the study. For example, a study with 10 patients might be significantly more affected by an erasure request than a study with thousands of patients. Therefore, this interviewee explained that they would attempt to use a “technical consensus” in determining whether to erase data.

Likert scores for all five scenarios

The aggregate values for the Likert scores are displayed in Table 2. It should be noted that one interviewee group was not prepared to offer scores for the scenarios.


Discussion

To structure the discussion, we divide this section into legal and ethical issues.

Legal issues

In practice, the distinction between genetic and non-genetic data under the HRA was not reflected in the answers given by interviewees. Instead, interviewees adopted a more contextual approach for determining when genetic or non-genetic data would be sensitive personal data. This approach confirms what the authors have written in previous studies regarding orthogonal risks to privacy from processing aggregate data.[1][2] These orthogonal risks include circumstances where an attacker possesses other information that can be used to identify individual records or to conduct inference attacks on aggregated data. These risks can be accentuated when dealing with genomic data, which can be used to identify individuals even more precisely.[50] Likewise, with big data and machine learning techniques proliferating in social sciences research, it can be difficult to determine whether research protocols fall within the purview of ethics committees.[51] Accordingly, data encrypted using advanced privacy-enhancing technologies such as HE will not be anonymized data where the entity holding that data possesses a means to decrypt it. This approach is analogous to the treatment of pseudonymized data and encryption keys under the GDPR.[7] When such data is handed to a third party without the means to decrypt it, the data will be anonymized data. However, depending on the data that has been released, there may still be an orthogonal risk of singling out one or more records. Further, the fact that privacy and respect for persons received the highest scores on our Likert scale indicates the importance interviewees placed on guaranteeing participant privacy.
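To make the idea of an orthogonal risk concrete, the following minimal sketch shows a classic differencing attack on an interface that only ever returns counts. The cohort, its fields, and the attacker's side knowledge are entirely hypothetical and are not drawn from the DPPH platform or MedCo; the point is only that two individually harmless aggregate answers can reveal one patient's attribute when combined with outside information.

```python
# Hypothetical toy cohort: (patient_id, age, ward_status, carries_mutation)
cohort = [
    ("p1", 34, "inpatient", False),
    ("p2", 51, "outpatient", True),
    ("p3", 67, "inpatient", True),
    ("p4", 45, "outpatient", False),
]


def count(predicate):
    """Aggregate-only interface: returns a count, never individual rows."""
    return sum(1 for row in cohort if predicate(row))


# Side knowledge assumed for illustration: the target is the only inpatient over 60.
q1 = count(lambda r: r[3])  # all mutation carriers
q2 = count(lambda r: r[3] and not (r[2] == "inpatient" and r[1] > 60))  # carriers, target excluded

# If the two counts differ by one, the excluded target must be a carrier.
target_has_mutation = (q1 - q2) == 1
print(q1, q2, target_has_mutation)
```

Neither query names the target, yet the difference between them singles out one record. This is why the status of released aggregate data can depend on what else a requester already knows, rather than on the genetic/non-genetic distinction alone.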

There are several strategies that could be used separately or in concert to resolve this problem and reduce the risk of re-identification. The first is to combine data discovery requests (and accompanying privacy-enhancing technologies) with role-based access control. This would allow data custodians to verify that the requesting clinician, researcher, or institution had been approved for access. Role-based access control could also be used to prevent repeated requests that might be used to re-identify an individual. The second is to adopt a more contextual approach for determining when data was encoded or anonymized, beyond the distinction between genetic and non-genetic data under Swiss legislation.[49] For example, one group of interviewees mentioned that whole genome sequencing data would carry significantly fewer risks than germline or aggregate results about the number of single mutations. Another interviewee also mentioned that certain types of non-genetic data, such as inpatient and outpatient status, could be used to re-identify patients. This contextual approach could be combined with role-based access control to decrease the risk of patients being re-identified. Likewise, as interviewees suggested, patients could be given more control to prevent the upload of potentially sensitive data. Finally, from an organizational perspective, an ethics review committee could establish a protocol for determining when the risk of re-identification is high enough that a feasibility request should be referred for ethics review. It should be noted that a mechanism for a "jurisdictional request" already exists for an ethics committee to determine whether a particular project should undergo ethics approval.[52] A version of this "jurisdictional request" could be made to a specialist in computer science or statistics to reassess the potential for re-identification.
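The first strategy, role-based access control combined with limits on repeated requests, can be sketched as follows. This is a minimal illustration under stated assumptions, not a description of any deployed platform: the approved roles, the per-requester query budget, the small-count suppression threshold, and the class name `FeasibilityGatekeeper` are all hypothetical choices made for the example.

```python
from dataclasses import dataclass, field

# Hypothetical set of roles that a data custodian has certified for feasibility queries.
APPROVED_ROLES = {"clinician", "researcher"}


@dataclass
class FeasibilityGatekeeper:
    """Toy gatekeeper: role-based access control plus limits on repeated requests."""
    max_queries: int = 3            # assumed per-requester query budget
    min_reportable_count: int = 5   # assumed threshold below which counts are suppressed
    history: dict = field(default_factory=dict)  # requester -> set of past query criteria

    def authorize(self, requester: str, role: str, criteria: frozenset) -> bool:
        if role not in APPROVED_ROLES:
            return False  # requester's role has not been approved for access
        past = self.history.setdefault(requester, set())
        if criteria in past:
            return False  # identical request already answered
        if len(past) >= self.max_queries:
            return False  # budget exhausted; refer to the custodian or ethics committee
        past.add(criteria)
        return True

    def release(self, count: int) -> str:
        # Report small cohorts only as a bound, so single records are not exposed.
        if count >= self.min_reportable_count:
            return str(count)
        return f"<{self.min_reportable_count}"
```

Checking only for exact duplicates is deliberately crude: a determined requester could still issue overlapping queries, so in practice such a gatekeeper would more likely complement, rather than replace, the contextual risk assessment and ethics referral described above.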

Another important consideration raised by some interviewees was the status of the different entities responsible for processing. Three interviewees asked us to clarify which role they were meant to occupy in the scenario before giving their answers. They justified this request on the grounds that the responsibilities of data custodians such as hospitals and of requesting entities such as universities and private research companies differ under data protection law. Specifically, data custodians should be treated as data controllers under the GDPR and FADP. However, the authors have previously assessed requesting institutes and companies as joint controllers who are equally responsible for compliance when using advanced privacy-enhancing technologies.[2] Therefore, it is important that the contractual responsibilities of each processing entity are clarified prior to processing. One interviewee mentioned the BioMedIT Network, the output of another SPHN driver project. The purpose of BioMedIT is to create a platform for collaborative data analysis without compromising data privacy.[53] This interviewee mentioned that queries could be performed on data using the BioMedIT infrastructure. A BioMedIT Network node would be treated as a data processor under data protection law, rather than a controller, as the operators of this node are appointed to process data on behalf of the controllers. However, all these details would need to be clarified in contractual terms between the entities responsible for processing data. In addition to clarifying the terms governing data processing, this contract would ensure an appropriate physical and organizational separation of encryption keys to prevent re-identification.[2]

A final legal issue that needs to be clarified concerns the terms of general consent forms. As mentioned previously, several interviewees noted that there had been a proliferation of general consent forms. This problem is well recognized within the Swiss context, and studies have been dedicated to developing a nationwide integrated framework.[54] Therefore, interviewees were concerned that a general consent that was recognized and valid for one hospital would not be valid for another. Further, one interviewee mentioned that a general consent form should allow a patient to opt out not only of having their data encoded, but also of having their data anonymized. This distinction is important; once a patient’s data is anonymized or aggregated, it cannot be traced back to them. Therefore, the patient loses the ability to exercise their rights with respect to their own data.[55] Another interviewee from a university hospital noted their institution had developed a general consent form that allowed opting out of further use for both encoded and anonymized data. Although this consent form went beyond the legal requirements, it nevertheless offered the patient more control over their data compared to other general consent forms. Accordingly, amending existing consent forms to offer patients more control over their data, even once it has been anonymized, could be an important strategy to guarantee social license. This discussion dovetails into the ethical discussion of general consent forms below.

Ethical issues

General and specific consent forms are also relevant from an ethical perspective. One interviewee, who declined to give specific scores on the Likert scales, argued that general consent forms could be used strategically by researchers. The effect of this use would be to limit the liability or the ongoing responsibility of the research team, whilst maximizing reuse of the data. Likewise, this interviewee believed that patients would see advanced privacy-enhancing technologies as a method for researchers to reduce their ethical responsibilities. Although such technologies are primarily designed to reduce the risk of data breaches, patient trust and social license are essential to reusing patient data for research purposes.[56] Accordingly, failing to ensure that advanced privacy-enhancing technologies have sufficient social license could undermine the willingness of patients to permit their data to be processed using these technologies. Another interviewee mentioned that this public trust could be enhanced with a general consent form that would allow the patient to seek further information about the research projects their data is used for. This general consent form should highlight whether a patient’s data might be used for commercial research purposes. Alternatively, other researchers have focused on the concept of meta consent. Holm and Ploug describe a meta consent model in which patients can specify the type of consent they give, the types of data it covers, and the projects for which this data can be used. First, patients can specify whether they grant specific consent to a particular research project, or broad consent for multiple research projects. Secondly, patients can consent to different types of data being used for research purposes (including patient records and linked data). Thirdly, patients can consent to their data being used for non-commercial and commercial purposes.[57] Ploug and Holm have subsequently presented a proof-of-concept mobile application that can be used to record consent.[58] Accordingly, a similar approach should be adopted with the use of advanced privacy-enhancing technologies and distributed ledger technologies. For privacy-enhancing technologies, patients should have the option to indicate whether they would be willing to let their data be used for feasibility studies. The EU has recognized the need for a uniform consent model to encourage the secondary use of data, and accordingly the European Commission proposed a new Data Governance Act in 2020.[59] This new act is discussed in further detail in the next section, which addresses European-wide strategies for secondary uses of data.
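The three dimensions of Holm and Ploug's meta consent model, together with the feasibility-study option suggested above, can be represented as a small per-patient record. The sketch below is a hypothetical data structure for illustration only: the field names, the `Scope` values, and the decision order in `permits` are our assumptions, not the design of Ploug and Holm's application or of any consent system in use.

```python
from dataclasses import dataclass, field
from enum import Enum


class Scope(Enum):
    NONE = "none"          # this data type may not be used for research
    SPECIFIC = "specific"  # each project needs the patient's explicit approval
    BROAD = "broad"        # any approved research project may use it


@dataclass
class MetaConsent:
    """Hypothetical per-patient meta consent record (illustrative field names)."""
    scope_by_data_type: dict = field(default_factory=dict)  # e.g. {"patient_record": Scope.BROAD}
    allow_commercial: bool = False
    allow_feasibility: bool = False  # assumed extra option for privacy-enhancing technologies

    def permits(self, data_type: str, commercial: bool, feasibility: bool,
                approved_for_project: bool = False) -> bool:
        scope = self.scope_by_data_type.get(data_type, Scope.NONE)
        if scope is Scope.NONE:
            return False  # data type not released at all
        if commercial and not self.allow_commercial:
            return False  # commercial use refused
        if feasibility and not self.allow_feasibility:
            return False  # patient opted out of feasibility queries
        if scope is Scope.SPECIFIC:
            return approved_for_project  # patient must have approved this project
        return True  # broad consent covers the request


consent = MetaConsent(scope_by_data_type={"patient_record": Scope.BROAD,
                                          "linked_data": Scope.SPECIFIC})
print(consent.permits("patient_record", commercial=False, feasibility=False))
```

Defaulting every unlisted data type and every optional use to "not permitted" reflects the opt-in character of meta consent; a real system would also need to record when and how each choice was made so that custodians can audit it.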

Connected to ethical considerations regarding consent are questions of both practitioner and patient education. As mentioned previously, five experts contacted as interviewees declined on the grounds that they lacked knowledge about advanced privacy-enhancing technologies or DLT. Therefore, both researchers and decision-making bodies, such as research ethics committees, should receive ongoing training about computational technologies and data-driven research. This training would help researchers and decision-making bodies develop a consistent understanding of terms such as anonymization and balance the competing ethical considerations that might arise from the use of these technologies.[45][60] Similarly, one interviewee questioned whether a patient could give explicit and informed consent to having their data processed using this technology. Moreover, as another interviewee explained, it might be difficult to explain HE and DLT to a patient in a comprehensible fashion. Accordingly, this interviewee suggested that, in addition to allowing participants to find further information about the research their data is used for, the general consent form should include a concise summary of these technologies. Further, the first interviewee above mentioned that ongoing public education and awareness campaigns could be used to help encourage acceptance of advanced privacy-enhancing technologies. One limitation of this paper is its focus on expert interviews, a point raised by many interviewee groups. Future studies could provide vignette scenarios to patients to examine how they would respond to these requests and in what circumstances they would accept their data being uploaded. Likewise, this paper focused on interviews with legal experts, who do not necessarily have subject matter expertise on advanced privacy-enhancing technologies. Future studies could replicate these questions for computer scientists, biostatisticians, and data scientists handling health data. However, these questions would need to be slightly modified to provide greater context for ethical and legal concepts, given that potential interviewees may not have subject matter expertise in these fields.

Applicability outside of Switzerland

One challenge that needs to be addressed with this project is the question of compatibility with both national and supranational legislation outside of Switzerland. Although the HRA explicitly recognizes the potential for general consent forms to be used for research, the lawfulness of general consent under the GDPR is unclear. Article 9(1) of the GDPR prima facie prohibits the processing of special categories of data, including health-related and genetic data. However, Article 9(2)(a) lifts this prohibition if free, informed, and explicit consent is obtained from data subjects. Recital 33 of the GDPR provides that data subjects should be able to give their consent to certain areas of scientific research. Implicitly, this Recital could support the use of general consent.[61] However, the former Article 29 Working Party subsequently held that Recital 33 cannot be used to dispense with the requirement for a well-defined research purpose. Instead, Recital 33 only allows the goals of research to be described in more general rather than specific terms.[62] Although not denying researchers the ability to rely on general consent under the GDPR, these guidelines significantly reduce the scope of broad consent. Further, Article 9(4) permits member states to impose additional conditions on the processing of genetic and health-related data. Therefore, the boundaries of valid consent may vary between member states and may need to be determined on a case-by-case basis.

As mentioned previously, one development that may aid secondary uses of medical data across borders is the European Commission proposal for a Data Governance Act.[59] The purpose of this Act is to create a framework to encourage reuse of public sector data for commercial and "altruistic purposes," including scientific research. The Data Governance Act does not mandate reuse of public sector data, including data subject to intellectual property protections or highly confidential data. In this context, "public sector data" includes both personal data as governed under the GDPR and non-personal data. However, Article 22 of the proposed Data Governance Act allows the European Commission to create implementing acts for a "European data altruism consent form" to allow for uniform consent across the EU. This consent form must be modular so that it can be customized for different sectors and purposes. Further, data subjects must have the right to consent to, and withdraw consent from, the processing of their data for specific purposes. The Data Governance Act has not yet entered into force, and the current draft could still undergo significant revisions. However, the Data Governance Act could act as a mechanism to standardize general consent between different EU member states, ameliorating the challenges with cross-border transfers of data. The Data Governance Act could also empower data subjects to exercise greater control over how their data is used for research.[63]

With respect to erasure and the GDPR’s right of erasure under Article 17, the drafters of the GDPR recommended that personal data not be stored in any blockchain ledger. If data must be stored in a DLT platform, that storage should be coupled with adequate access control mechanisms.[64] However, Article 17 paragraph 3(c) creates an exception for reasons of public interest in the area of public health. In addition, under paragraph 3(d), the right to be forgotten cannot be exercised where personal data is processed for archiving, scientific research, or statistical purposes, insofar as erasure is likely to render impossible or seriously impair the objectives of that processing. Although the interpretation of this exception is uncertain, it offers a relatively broad scope for researchers to continue to process data despite erasure requests.[55] Nevertheless, it is important to consider not only the legal but also the ethical consequences of refusing erasure requests. In this respect, the decentralized ledger implementation used in MedCo allows links to locally stored data to be erased, thereby allowing GDPR erasure requests to be honored.

Conclusions

In this paper, we described an interview study on the use of HE and DLT for processing patient data. This interview study was conducted with experts from Swiss hospitals and research institutes, and it included legal and clinical data management staff, along with clinical and legal ethicists. We presented these stakeholders with five vignettes in two sets: feasibility or data discovery requests, and data erasure requests. With respect to the first set of requests, most of our interviewees were prepared to permit processing, provided that general consent had been obtained from patients to do so. Accordingly, advanced privacy-enhancing technologies have the potential to fill the regulatory gaps that exist under current data protection laws in Switzerland. However, our interviewees also highlighted the importance of assessing the risk of re-identification from data released as part of a feasibility request. In addition, our interviewees identified that existing consent practices may not be sufficient to explain the technical complexity of advanced privacy-enhancing technologies. Depending on the canton where interviewees were located and on cantonal or institutional retention requirements, interviewees were willing to delete links to data in a distributed ledger or the locally stored data itself. However, our interviewees also expressed concern regarding potential consent issues arising from technological complexity.

As such, this study demonstrates that a holistic approach needs to be taken to introducing HE and DLT as a mechanism for patient data management. It is important to recognize that social license and public trust from patients and physicians are as important as legal compliance. Specifically, general consent forms should be amended to offer patients the opportunity to opt out of having their data anonymized using advanced privacy-enhancing technologies. Further, patients should have the opportunity to indicate which types of their data they wish to be made available for data discovery. Finally, a public education campaign should be targeted at explaining how these technologies work to give patients the opportunity to understand how their data will be processed. This education campaign will help support the social license required for these initiatives. Future research should address how patients might respond to the use of these technologies to process their data.

Supplementary information

- Additional File 1: Interview questionnaire and vignettes

Abbreviations, acronyms, and initialisms

- DLT: distributed ledger technology

- DPPH: Data Protection and Personalised Health

- EU: European Union

- FADP: Federal Act on Data Protection

- GDPR: General Data Protection Regulation

- HE: homomorphic encryption

- HRA: Human Research Act

- HRO: Human Research Ordinance

- PHRT: Personalized Health and Related Technologies

- SPHN: Swiss Personalised Health Network

Acknowledgements

We would like to thank Joanna Sleigh for her assistance in designing the diagrams for this paper, and Anna Jobin and Julia Amann for their help in designing the research protocol.

Contributions

Authors JS, MI, and EV contributed to conceptualizing the study design. JS and MI tested the protocol. JS carried out data collection via interviews. JS and MI performed the analysis of the qualitative data and wrote the first draft of the manuscript. All authors contributed to revising this draft, as well as reading and approving the submitted version. All authors read and approved the final manuscript.

Funding

Open-access funding provided by Swiss Federal Institute of Technology Zurich. This research was supported by the Personalized Health and Related Technologies Program, Grant 2017-201; project: Data Protection and Personalized Health. The funding bodies did not take part in designing this research and writing the manuscript.

Ethics approval and consent to participate

All methods were conducted in accordance with ethics approval and informed consent was obtained from all participants. Ethics approval was obtained for this project with the ETH Zürich ethics review committee (Ethics Approval 2019-N-69). Interviewees who agreed to participate in our research project were first asked to sign a consent form and were then asked again to provide verbal consent to being recorded at the start of the interview. As per the study protocol, all personal data were coded and identifying details removed.

Availability of data and materials

The datasets generated and analyzed during the current study are not publicly available due to the low number of interviewees and high potential for re-identification; however, they are available from the author Ienca on reasonable request.

Competing interests

The authors declare they have no competing interests.

References

- ↑ 1.0 1.1 1.2 1.3 1.4 Scheibner, James; Ienca, Marcello; Kechagia, Sotiria; Troncoso-Pastoriza, Juan Ramon; Raisaro, Jean Louis; Hubaux, Jean-Pierre; Fellay, Jacques; Vayena, Effy (25 July 2020). "Data protection and ethics requirements for multisite research with health data: a comparative examination of legislative governance frameworks and the role of data protection technologies†". Journal of Law and the Biosciences 7 (1): lsaa010. doi:10.1093/jlb/lsaa010. ISSN 2053-9711. PMC 7381977. PMID 32733683. https://academic.oup.com/jlb/article/doi/10.1093/jlb/lsaa010/5825716.

- ↑ 2.0 2.1 2.2 2.3 2.4 Scheibner, James; Raisaro, Jean Louis; Troncoso-Pastoriza, Juan Ramón; Ienca, Marcello; Fellay, Jacques; Vayena, Effy; Hubaux, Jean-Pierre (25 February 2021). "Revolutionizing Medical Data Sharing Using Advanced Privacy-Enhancing Technologies: Technical, Legal, and Ethical Synthesis". Journal of Medical Internet Research 23 (2): e25120. doi:10.2196/25120. ISSN 1438-8871. PMC 7952236. PMID 33629963. http://www.jmir.org/2021/2/e25120/.

- ↑ Edwards, Lilian; Veale, Michael (1 May 2018). "Enslaving the Algorithm: From a “Right to an Explanation” to a “Right to Better Decisions”?". IEEE Security & Privacy 16 (3): 46–54. doi:10.1109/MSP.2018.2701152. ISSN 1540-7993. https://ieeexplore.ieee.org/document/8395080/.

- ↑ Conley, E.; Pocs, M. (2018). "GDPR Compliance Challenges for Interoperable Health Information Exchanges (HIEs) and Trustworthy Research Environments (TREs)". European Journal for Biomedical Informatics 14 (3): 48–61. https://www.ejbi.org/abstract/gdpr-compliance-challenges-for-interoperable-health-information-exchanges-hies-and-trustworthy-research-environments-tre-4619.html.

- ↑ 5.0 5.1 Quinn, Paul; Quinn, Liam (1 October 2018). "Big genetic data and its big data protection challenges". Computer Law & Security Review 34 (5): 1000–1018. doi:10.1016/j.clsr.2018.05.028. https://linkinghub.elsevier.com/retrieve/pii/S0267364918300827.

- ↑ Brall, Caroline; Berlin, Claudia; Zwahlen, Marcel; Ormond, Kelly E.; Egger, Matthias; Vayena, Effy (1 April 2021). Kerasidou, Angeliki, ed. "Public willingness to participate in personalized health research and biobanking: A large-scale Swiss survey". PLOS ONE 16 (4): e0249141. doi:10.1371/journal.pone.0249141. ISSN 1932-6203. PMC 8016315. PMID 33793624. https://dx.plos.org/10.1371/journal.pone.0249141.

- ↑ 7.0 7.1 7.2 7.3 Spindler, G.; Schmechel, P. (2016). "Personal Data and Encryption in the European General Data Protection Regulation". Journal of Intellectual Property, Information Technology and E-Commerce Law 7 (2): 44408. https://www.jipitec.eu/issues/jipitec-7-2-2016/4440.

- ↑ Cramer, Ronald; Damgård, Ivan Bjerre; Nielsen, Jesper Buus (30 June 2015). Secure Multiparty Computation and Secret Sharing (1 ed.). Cambridge University Press. doi:10.1017/cbo9781107337756. ISBN 978-1-107-33775-6. https://www.cambridge.org/core/product/identifier/9781107337756/type/book.

- ↑ Chillotti, Ilaria; Gama, Nicolas; Georgieva, Mariya; Izabachène, Malika (2016), Cheon, Jung Hee; Takagi, Tsuyoshi, eds., "Faster Fully Homomorphic Encryption: Bootstrapping in Less Than 0.1 Seconds", Advances in Cryptology – ASIACRYPT 2016 (Berlin, Heidelberg: Springer Berlin Heidelberg) 10031: 3–33, doi:10.1007/978-3-662-53887-6_1, ISBN 978-3-662-53886-9, http://link.springer.com/10.1007/978-3-662-53887-6_1. Retrieved 2023-02-04

- ↑ Wiebe, Andreas; Schur, Nico (1 October 2019). "Protection of trade secrets in a data-driven, networked environment – Is the update already out-dated?" (in en). Journal of Intellectual Property Law & Practice 14 (10): 814–821. doi:10.1093/jiplp/jpz119. ISSN 1747-1532. https://academic.oup.com/jiplp/article/14/10/814/5572178.

- ↑ 11.0 11.1 11.2 11.3 Raisaro, Jean Louis; Troncoso-Pastoriza, Juan Ramon; Misbach, Mickael; Sousa, Joao Sa; Pradervand, Sylvain; Missiaglia, Edoardo; Michielin, Olivier; Ford, Bryan et al. (1 July 2019). "MedCo: Enabling Secure and Privacy-Preserving Exploration of Distributed Clinical and Genomic Data". IEEE/ACM Transactions on Computational Biology and Bioinformatics 16 (4): 1328–1341. doi:10.1109/TCBB.2018.2854776. ISSN 1545-5963. https://ieeexplore.ieee.org/document/8410926/.

- ↑ Arain, Mubashir; Campbell, Michael J.; Cooper, Cindy L.; Lancaster, Gillian A. (1 December 2010). "What is a pilot or feasibility study? A review of current practice and editorial policy" (in en). BMC Medical Research Methodology 10 (1): 67. doi:10.1186/1471-2288-10-67. ISSN 1471-2288. PMC2912920. PMID 20637084. https://bmcmedresmethodol.biomedcentral.com/articles/10.1186/1471-2288-10-67.

- ↑ Brondani, M.A. (2018). "A critical review of protocols for conventional microwave oven use for denture disinfection". Community Dental Health 35: 228–234. doi:10.1922/CDH_4372Brondani07. ISSN 2515-1746. https://doi.org/10.1922/CDH_4372Brondani07.

- ↑ 14.0 14.1 Haber, Stuart; Stornetta, W. Scott (1991), Menezes, Alfred J.; Vanstone, Scott A., eds., "How to Time-Stamp a Digital Document" (in en), Advances in Cryptology – CRYPTO’ 90 (Berlin, Heidelberg: Springer Berlin Heidelberg) 537: 437–455, doi:10.1007/3-540-38424-3_32, ISBN 978-3-540-54508-8, http://link.springer.com/10.1007/3-540-38424-3_32. Retrieved 2023-02-04

- ↑ Ølnes, Svein; Ubacht, Jolien; Janssen, Marijn (1 September 2017). "Blockchain in government: Benefits and implications of distributed ledger technology for information sharing" (in en). Government Information Quarterly 34 (3): 355–364. doi:10.1016/j.giq.2017.09.007. https://linkinghub.elsevier.com/retrieve/pii/S0740624X17303155.

- ↑ Herian, Robert (1 July 2018). "The Politics of Blockchain" (in en). Law and Critique 29 (2): 129–131. doi:10.1007/s10978-018-9223-1. ISSN 0957-8536. http://link.springer.com/10.1007/s10978-018-9223-1.

- ↑ Dwork, Cynthia; Naor, Moni (1993), Brickell, Ernest F., ed., "Pricing via Processing or Combatting Junk Mail" (in en), Advances in Cryptology — CRYPTO’ 92 (Berlin, Heidelberg: Springer Berlin Heidelberg) 740: 139–147, doi:10.1007/3-540-48071-4_10, ISBN 978-3-540-57340-1, http://link.springer.com/10.1007/3-540-48071-4_10. Retrieved 2023-02-04

- ↑ 18.0 18.1 Herian, Robert (1 September 2017). "Blockchain and the (re)imagining of trusts jurisprudence" (in en). Strategic Change 26 (5): 453–460. doi:10.1002/jsc.2145. https://onlinelibrary.wiley.com/doi/10.1002/jsc.2145.

- ↑ Azaria, Asaph; Ekblaw, Ariel; Vieira, Thiago; Lippman, Andrew (1 August 2016). "MedRec: Using Blockchain for Medical Data Access and Permission Management". 2016 2nd International Conference on Open and Big Data (OBD) (Vienna, Austria: IEEE): 25–30. doi:10.1109/OBD.2016.11. ISBN 978-1-5090-4054-4. http://ieeexplore.ieee.org/document/7573685/.

- ↑ 20.0 20.1 Troncoso-Pastoriza, J.R.; Raisaro, J.L.; Gasser, L. et al. (March 2019). "MedChain: Accountable and Auditable Data Sharing in Distributed Medical Scenarios". Proceedings of the 2019 AMIA Informatics Summit. https://bford.info/pub/dec/medchain/.

- ↑ Casino, Fran; Dasaklis, Thomas K.; Patsakis, Constantinos (1 March 2019). "A systematic literature review of blockchain-based applications: Current status, classification and open issues" (in en). Telematics and Informatics 36: 55–81. doi:10.1016/j.tele.2018.11.006. https://linkinghub.elsevier.com/retrieve/pii/S0736585318306324.

- ↑ Munn, Luke; Hristova, Tsvetelina; Magee, Liam (1 January 2019). "Clouded data: Privacy and the promise of encryption" (in en). Big Data & Society 6 (1): 205395171984878. doi:10.1177/2053951719848781. ISSN 2053-9517. http://journals.sagepub.com/doi/10.1177/2053951719848781.

- ↑ Berberich, M.; Steiner, M. (2016). "Practitioner's Corner ∙ Blockchain Technology and the GDPR – How to Reconcile Privacy and Distributed Ledgers?". European Data Protection Law Review 2 (3): 422–426. doi:10.21552/EDPL/2016/3/21. http://edpl.lexxion.eu/article/EDPL/2016/3/21.

- ↑ Bentzen, Heidi Beate; Castro, Rosa; Fears, Robin; Griffin, George; ter Meulen, Volker; Ursin, Giske (1 August 2021). "Remove obstacles to sharing health data with researchers outside of the European Union" (in en). Nature Medicine 27 (8): 1329–1333. doi:10.1038/s41591-021-01460-0. ISSN 1078-8956. PMC8329618. PMID 34345050. https://www.nature.com/articles/s41591-021-01460-0.

- ↑ Dove, Edward S. (2018). "The EU General Data Protection Regulation: Implications for International Scientific Research in the Digital Era" (in en). Journal of Law, Medicine & Ethics 46 (4): 1013–1030. doi:10.1177/1073110518822003. ISSN 1073-1105. https://www.cambridge.org/core/product/identifier/S1073110500021380/type/journal_article.

- ↑ Epstein, Charlotte (1 June 2016). "Surveillance, Privacy and the Making of the Modern Subject: Habeas what kind of Corpus?" (in en). Body & Society 22 (2): 28–57. doi:10.1177/1357034X15625339. ISSN 1357-034X. http://journals.sagepub.com/doi/10.1177/1357034X15625339.

- ↑ Métille, S. (2013). "Swiss Information Privacy Law and the Transborder Flow of Personal Data". Journal of International Commercial Law and Technology 8 (1): 71–80. https://ssrn.com/abstract=2203003.

- ↑ Wagner, Julian (1 November 2018). "The transfer of personal data to third countries under the GDPR: when does a recipient country provide an adequate level of protection?" (in en). International Data Privacy Law 8 (4): 318–337. doi:10.1093/idpl/ipy008. ISSN 2044-3994. https://academic.oup.com/idpl/article/8/4/318/5047863.

- ↑ 29.0 29.1 Martani, Andrea; Egli, Philipp; Widmer, Michael; Elger, Bernice (1 September 2020). "Data protection and biomedical research in Switzerland: setting the record straight". Swiss Medical Weekly 150 (35–36): w20332. doi:10.4414/smw.2020.20332. ISSN 1424-3997. https://smw.ch/index.php/smw/article/view/2857.

- ↑ Naqib, M. (8 March 2019). "Update on the revision of the Swiss Federal Act on Data Protection". PwC. https://www.pwc.ch/en/insights/fs/swiss-federal-act-on-data-protection-revision.html.

- ↑ 31.0 31.1 31.2 Meier-Abt, Peter J.; Lawrence, Adrien K.; Selter, Liselotte; Vayena, Effy; Schwede, Torsten (2 January 2018). The Swiss approach to precision medicine (in en). 8 pp. doi:10.3929/ETHZ-B-000274911. http://hdl.handle.net/20.500.11850/274911.

- ↑ Martani, Andrea; Geneviève, Lester Darryl; Pauli-Magnus, Christiane; McLennan, Stuart; Elger, Bernice Simone (20 December 2019). "Regulating the Secondary Use of Data for Research: Arguments Against Genetic Exceptionalism". Frontiers in Genetics 10: 1254. doi:10.3389/fgene.2019.01254. ISSN 1664-8021. PMC6951399. PMID 31956328. https://www.frontiersin.org/article/10.3389/fgene.2019.01254/full.

- ↑ Driessen, Susanne; Gervasoni, Pietro (18 January 2021). "Research projects in human genetics in Switzerland: analysis of research protocols submitted to cantonal ethics committees in 2018". Swiss Medical Weekly 151 (03–04): w20403. doi:10.4414/smw.2021.20403. ISSN 1424-3997. https://smw.ch/index.php/smw/article/view/2944.

- ↑ Driessen, Susanne; Gervasoni, Pietro (7 July 2021). "Response to comment on: Research projects in human genetics in Switzerland: analysis of research protocols submitted to Cantonal Ethics Commissions in 2018". Swiss Medical Weekly 151 (25–26): w20518. doi:10.4414/smw.2021.20518. ISSN 1424-3997. https://smw.ch/index.php/smw/article/view/3022.

- ↑ Hemminki, Elina; Virtanen, Jorma I.; Veerus, Piret (1 December 2014). "Varying ethics rules in clinical research and routine patient care – research ethics committee chairpersons’ views in Finland" (in en). Health Research Policy and Systems 12 (1): 15. doi:10.1186/1478-4505-12-15. ISSN 1478-4505. PMC3987656. PMID 24666735. https://health-policy-systems.biomedcentral.com/articles/10.1186/1478-4505-12-15.

- ↑ Whiddett, Dick; Hunter, Inga; McDonald, Barry; Norris, Tony; Waldon, John (1 August 2016). "Consent and widespread access to personal health information for the delivery of care: a large scale telephone survey of consumers' attitudes using vignettes in New Zealand" (in en). BMJ Open 6 (8): e011640. doi:10.1136/bmjopen-2016-011640. ISSN 2044-6055. PMC5013334. PMID 27554103. https://bmjopen.bmj.com/lookup/doi/10.1136/bmjopen-2016-011640.

- ↑ Törrönen, Jukka (25 July 2018). "Using vignettes in qualitative interviews as clues, microcosms or provokers" (in en). Qualitative Research Journal 18 (3): 276–286. doi:10.1108/QRJ-D-17-00055. ISSN 1443-9883. https://www.emerald.com/insight/content/doi/10.1108/QRJ-D-17-00055/full/html.

- ↑ Nicolini, Davide (1 April 2009). "Articulating Practice through the Interview to the Double" (in en). Management Learning 40 (2): 195–212. doi:10.1177/1350507608101230. ISSN 1350-5076. http://journals.sagepub.com/doi/10.1177/1350507608101230.

- ↑ Schweikart, S.J. (2019). "Should Immigration Status Information Be Considered Protected Health Information?" (in en). AMA Journal of Ethics 21 (1): E32–37. doi:10.1001/amajethics.2019.32. ISSN 2376-6980. https://journalofethics.ama-assn.org/article/should-immigration-status-information-be-considered-protected-health-information/2019-01.

- ↑ McCradden, Melissa D.; Baba, Ami; Saha, Ashirbani; Ahmad, Sidra; Boparai, Kanwar; Fadaiefard, Pantea; Cusimano, Michael D. (1 January 2020). "Ethical concerns around use of artificial intelligence in health care research from the perspective of patients with meningioma, caregivers and health care providers: a qualitative study" (in en). CMAJ Open 8 (1): E90–E95. doi:10.9778/cmajo.20190151. ISSN 2291-0026. PMC7028163. PMID 32071143. http://cmajopen.ca/lookup/doi/10.9778/cmajo.20190151.

- ↑ Braun, Virginia; Clarke, Victoria (1 January 2006). "Using thematic analysis in psychology" (in en). Qualitative Research in Psychology 3 (2): 77–101. doi:10.1191/1478088706qp063oa. ISSN 1478-0887. http://www.tandfonline.com/doi/abs/10.1191/1478088706qp063oa.

- ↑ Morse, Janice M. (1 May 2015). "“Data Were Saturated . . . ”" (in en). Qualitative Health Research 25 (5): 587–588. doi:10.1177/1049732315576699. ISSN 1049-7323. http://journals.sagepub.com/doi/10.1177/1049732315576699.

- ↑ Guest, Greg; Bunce, Arwen; Johnson, Laura (1 February 2006). "How Many Interviews Are Enough?: An Experiment with Data Saturation and Variability" (in en). Field Methods 18 (1): 59–82. doi:10.1177/1525822X05279903. ISSN 1525-822X. http://journals.sagepub.com/doi/10.1177/1525822X05279903.

- ↑ ETH Zurich; Vayena, E.; Ienca, M.; Scheibner, J. et al. (July 2019). "How the General Data Protection Regulation changes the rules for scientific research". European Parliament. https://www.europarl.europa.eu/thinktank/en/document/EPRS_STU(2019)634447.

- ↑ 45.0 45.1 Ferretti, Agata; Ienca, Marcello; Hurst, Samia; Vayena, Effy (1 September 2020). "Big Data, Biomedical Research, and Ethics Review: New Challenges for IRBs" (in en). Ethics & Human Research 42 (5): 17–28. doi:10.1002/eahr.500065. ISSN 2578-2355. PMC7814666. PMID 32937036. https://onlinelibrary.wiley.com/doi/10.1002/eahr.500065.

- ↑ Voss, W.G.; Castets-Renard, C. (2015). "Proposal for an international taxonomy on the various forms of the right to be forgotten: A study on the convergence of norms". Colorado Technology Law Journal 14 (2): 281–344. Archived from the original on 30 June 2016. https://web.archive.org/web/20160630045122/http://ctlj.colorado.edu/?page_id=399.

- ↑ Francis, Bridget (1 October 2020). "General Data Protection Regulation (GDPR) and Data Protection Act 2018: What does this mean for clinicians?" (in en). Archives of Disease in Childhood – Education & Practice Edition 105 (5): 298–299. doi:10.1136/archdischild-2018-316057. ISSN 1743-0585. https://ep.bmj.com/lookup/doi/10.1136/archdischild-2018-316057.

- ↑ Politou, Eugenia; Alepis, Efthimios; Patsakis, Constantinos (1 January 2018). "Forgetting personal data and revoking consent under the GDPR: Challenges and proposed solutions" (in en). Journal of Cybersecurity 4 (1). doi:10.1093/cybsec/tyy001. ISSN 2057-2085. https://academic.oup.com/cybersecurity/article/doi/10.1093/cybsec/tyy001/4954056.

- ↑ 49.0 49.1 Martani, Andrea; Geneviève, Lester Darryl; Elger, Bernice; Wangmo, Tenzin (1 April 2021). "'It’s not something you can take in your hands'. Swiss experts’ perspectives on health data ownership: an interview-based study" (in en). BMJ Open 11 (4): e045717. doi:10.1136/bmjopen-2020-045717. ISSN 2044-6055. PMC8039276. https://bmjopen.bmj.com/lookup/doi/10.1136/bmjopen-2020-045717.

- ↑ Gymrek, Melissa; McGuire, Amy L.; Golan, David; Halperin, Eran; Erlich, Yaniv (18 January 2013). "Identifying Personal Genomes by Surname Inference" (in en). Science 339 (6117): 321–324. doi:10.1126/science.1229566. ISSN 0036-8075. https://www.science.org/doi/10.1126/science.1229566.

- ↑ Favaretto, Maddalena; De Clercq, Eva; Briel, Matthias; Elger, Bernice Simone (1 October 2020). "Working Through Ethics Review of Big Data Research Projects: An Investigation into the Experiences of Swiss and American Researchers" (in en). Journal of Empirical Research on Human Research Ethics 15 (4): 339–354. doi:10.1177/1556264620935223. ISSN 1556-2646. http://journals.sagepub.com/doi/10.1177/1556264620935223.

- ↑ Gloy, Viktoria; McLennan, Stuart; Rinderknecht, Matthias; Ley, Bettina; Meier, Brigitte; Driessen, Susanne; Gervasoni, Pietro; Hirschel, Bernard et al. (12 August 2020). "Uncertainties about the need for ethics approval in Switzerland: a mixed-methods study". Swiss Medical Weekly 150 (33–34): w20318. doi:10.4414/smw.2020.20318. ISSN 1424-3997. https://smw.ch/index.php/smw/article/view/2852.

- ↑ Coman Schmid, Diana; Crameri, Katrin; Oesterle, Sabine; Rinn, Bernd; Sengstag, Thierry; Stockinger, Heinz; BioMedIT Network Team (2020). "SPHN – The BioMedIT Network: A Secure IT Platform for Research with Sensitive Human Data". Digital Personalized Health and Medicine: 1170–1174. doi:10.3233/SHTI200348. https://ebooks.iospress.nl/doi/10.3233/SHTI200348.

- ↑ Maurer, Julia; Saccilotto, Ramon; Pauli-Magnus, Christiane (8 September 2018). "E-general consent: development and implementation of a nationwide harmonised interactive electronic general consent" (in en). Swiss Medical Informatics. doi:10.4414/smi.34.00412. ISSN 2296-0406. https://doi.emh.ch/smi.34.00412.

- ↑ 55.0 55.1 Pormeister, Kärt (1 May 2017). "Genetic data and the research exemption: is the GDPR going too far?" (in en). International Data Privacy Law 7 (2): 137–146. doi:10.1093/idpl/ipx006. ISSN 2044-3994. https://academic.oup.com/idpl/article-lookup/doi/10.1093/idpl/ipx006.