
Revision as of 20:47, 9 September 2019

| Full article title | An integrated data analytics platform |
|---|---|
| Journal | Frontiers in Marine Science |
| Author(s) | Armstrong, Edward M.; Bourassa, Mark A.; Cram, Thomas A.; DeBellis, Maya; Elya, Jocelyn; Greguska III, Frank R.; Huang, Thomas; Jacob, Joseph C.; Ji, Zaihua; Jiang, Yongyao; Li, Yun; Quach, Nga; McGibbney, Lewis; Smith, Shawn; Tsontos, Vardis M.; Wilson, Brian; Worley, Steven J.; Yang, Chaowei; Yam, Elizabeth |
| Author affiliation(s) | NASA Jet Propulsion Laboratory, Center for Ocean-Atmospheric Prediction Studies, National Center for Atmospheric Research, George Mason University |
| Primary contact | Email: thomas dot huang at jpl dot nasa dot gov |
| Year published | 2019 |
| Volume and issue | 6 |
| Page(s) | 354 |
| DOI | 10.3389/fmars.2019.00354 |
| ISSN | 2296-7745 |
| Distribution license | Creative Commons Attribution 4.0 International |
| Website | https://www.frontiersin.org/articles/10.3389/fmars.2019.00354/full |
| Download | https://www.frontiersin.org/articles/10.3389/fmars.2019.00354/pdf (PDF) |

This article should not be considered complete until this message box has been removed. This is a work in progress.

Abstract

A scientific integrated data analytics platform (IDAP) is an environment that enables the confluence of resources for scientific investigation. It harmonizes data, tools, and computational resources to enable the research community to focus on the investigation rather than spending time on security, data preparation, management, etc. OceanWorks is a National Aeronautics and Space Administration (NASA) technology integration project to establish a cloud-based integrated ocean science data analytics platform for managing ocean science research data at NASA’s Physical Oceanography Distributed Active Archive Center (PO.DAAC). The platform focuses on advancement and maturity by bringing together several NASA open-source, big data projects for parallel analytics, anomaly detection, in situ-to-satellite data matching, quality-screened data subsetting, search relevancy, and data discovery. Our communities are relying on data available through distributed data centers to conduct their research. In typical investigations, scientists would (1) search for data, (2) evaluate the relevance of that data, (3) download it, and (4) then apply algorithms to identify trends, anomalies, or other attributes of the data. Such a workflow cannot scale if the research involves a massive amount of data or multi-variate measurements. With the upcoming NASA Surface Water and Ocean Topography (SWOT) mission expected to produce over 20 petabytes (PB) of observational data during its three-year nominal mission, the volume of data will challenge all existing earth science data archival, distribution, and analysis paradigms. This paper discusses how OceanWorks enhances the analysis of physical ocean data where the computation is done on an elastic cloud platform next to the archive to deliver fast, web-accessible services for working with oceanographic measurements.

Keywords: big data, cloud computing, ocean science, data analysis, matchup, anomaly detection, open source

Introduction

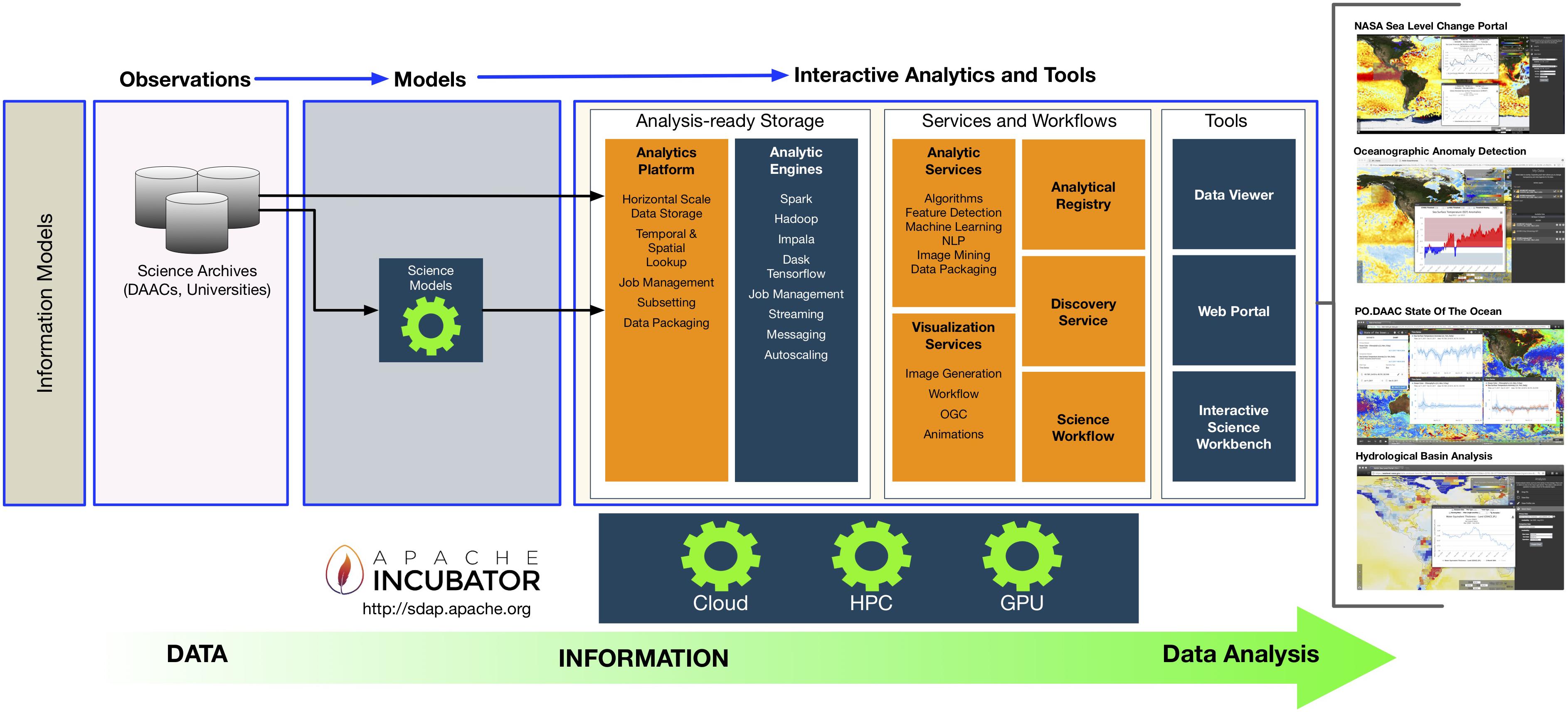

With increasing global temperature, warming of the ocean, and melting of ice sheets and glaciers, numerous impacts can be observed. These range from anomalous ocean temperatures and changing circulation patterns, to more frequent extreme weather events and more intense tropical cyclones, to sea level rise and storm surge affecting coastlines, and with them drastic changes and shifts in marine ecosystems. To date, investigating these phenomena has required researchers to work with a disjointed collection of tools for search, reprojection, visualization, subsetting, and statistical analysis. Researchers find themselves having to convert nomenclature between these tools, including something as mundane as dataset names and the representation of geospatial coordinates. At times they must also transform the data into a more common representation in order to correlate measurements collected from different instruments. An integrated data analytics platform (IDAP) (Figure 1) may help tackle these data wrangling, management, and analysis challenges so researchers can focus on their investigation.


In recent years, NASA’s Advanced Information Systems Technology (AIST) and Advancing Collaborating Connections for Earth System Science (ACCESS) programs have invested in developing new technologies targeting big ocean data on cloud computing platforms. Their goal is to address some of the challenges of managing oceanographic big data by leveraging modern computing infrastructure and horizontal-scale software methodologies. Rather than developing a single ocean data analysis application, we have developed a data service platform to enable many analytic applications and lay the foundation for community-driven oceanography research.

OceanWorks[1] is a NASA AIST project to mature NASA’s recent investments through integrated technologies and to provide the oceanographic community with a range of useful and advanced data manipulation and analytics capabilities. As an IDAP, OceanWorks harmonizes data, tools, and computational resources to enable oceanographers to focus on the investigation rather than spending time on security, data preparation, management, etc. Oceanographers have become increasingly frustrated with the growing number of research tool silos and their lack of coherence. A user might use one tool to search for datasets and then have to manually translate the dataset name, time, and spatial extents to satisfy the nomenclature of yet another tool (e.g., a subsetting tool). To address this frustration, OceanWorks was developed to implement an IDAP for oceanographers. This platform is designed to be extensible and promote community contribution by providing an integrated collection of features, including:

- data analysis;

- data-intensive anomaly detection;

- distributed in situ-to-satellite data matching;

- search relevancy;

- quality-screened data subsetting; and

- upload-and-execute custom parallel analytic algorithms.

In 2017, the OceanWorks project team donated all of the project’s source code to the Apache Software Foundation and established the official Science Data Analytics Platform (SDAP) project for community-driven development of the cloud-based data access and analysis platform. Today, OceanWorks remains in active development, now through the open-source paradigm.

OceanWorks components

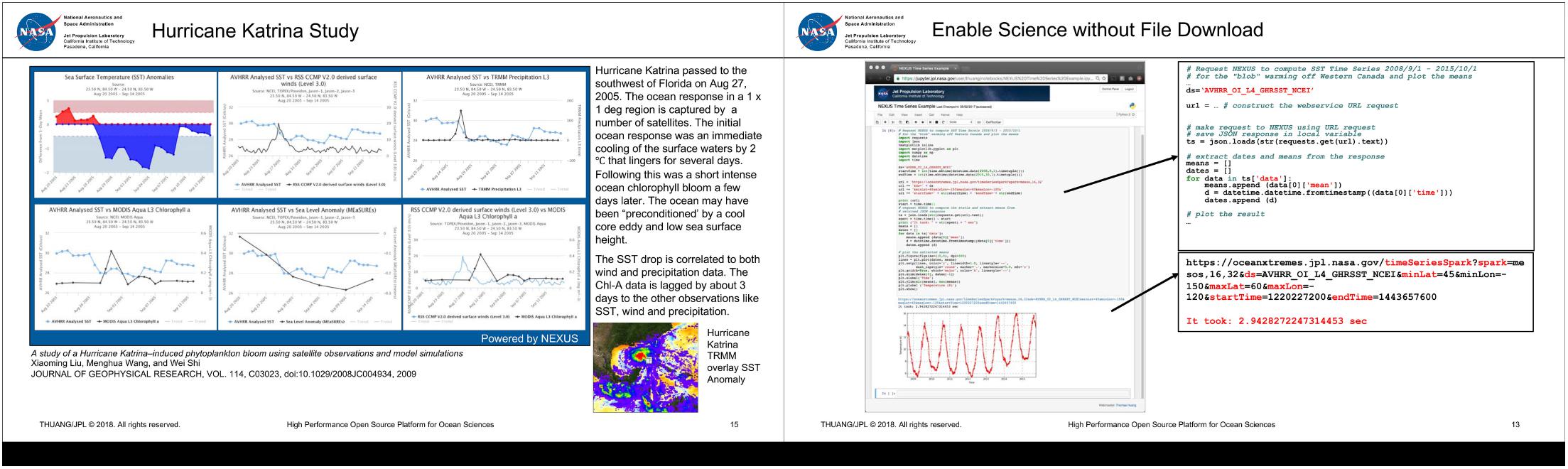

OceanWorks is an orchestration of several NASA big data technologies as a coherent web service platform. Rather than focus on one science application, this web service platform enables various types of applications. Figure 2 shows how OceanWorks can be used to facilitate on-the-fly analysis of Hurricane Katrina[2] and how Jupyter Notebook can interact with OceanWorks to analyze the "Blob" in the northeast Pacific.[3] This section discusses some of the key components of OceanWorks.


Data analytics

We have been developing analytics solutions around common file packaging standards such as netCDF and HDF. We advocate the Climate and Forecast (CF) metadata convention and the Attribute Convention for Dataset Discovery (ACDD) to promote interoperability and improve our searches. Yet there has been very little progress in tackling our current big data analytic challenges, which include working with petabyte-scale data and quickly looking up the most relevant data for a given research question. While the current method of subsetting and analyzing one daily global observational file at a time is the most straightforward, it is an unsustainable approach for analyzing petabytes of data. The common bottleneck is in working with large collections of files. Since these are global files, researchers find themselves having to move (or copy) more data than they need for their regional analysis. Web service solutions such as OPeNDAP and THREDDS provide a web service API to work with these data, but their implementations still involve iterating through large collections of files.

OceanWorks’ analytics engine is called NEXUS.[4] It takes a different approach to storing and analyzing large collections of geospatial, array-based data by breaking the netCDF/HDF file data into data tiles and storing them in a cloud-scale data management system. With each data tile having its own geospatial index, a regional subset operation only requires the retrieval of the relevant tiles into the analytic engine. Our recent benchmark shows NEXUS can compute an area-averaged time series hundreds of times faster than a traditional file-based approach.[5] The traditional file-based approach typically involves subsetting a large collection of time-based granule files before applying analysis to the subsetted data, so much of its run time is spent on file manipulation.
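The tile-and-index idea can be illustrated with a small sketch. This is not the NEXUS implementation: the `Tile` record, the `select_tiles` helper, and the 45-degree tile size are hypothetical names and values chosen for illustration, and a linear scan stands in for whatever spatial index the real data store provides. It only shows why a regional query needs to touch a handful of tiles rather than whole global files.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Tile:
    """Hypothetical tile record: a bounding box plus a key into the tile store."""
    tile_id: str
    min_lat: float
    max_lat: float
    min_lon: float
    max_lon: float


def intersects(tile: Tile, min_lat: float, max_lat: float,
               min_lon: float, max_lon: float) -> bool:
    """Return True when the tile's bounding box overlaps the query box."""
    return (tile.min_lat <= max_lat and tile.max_lat >= min_lat and
            tile.min_lon <= max_lon and tile.max_lon >= min_lon)


def select_tiles(index: List[Tile], min_lat: float, max_lat: float,
                 min_lon: float, max_lon: float) -> List[str]:
    """Return the ids of tiles needed for a regional subset (linear scan for clarity)."""
    return [t.tile_id for t in index
            if intersects(t, min_lat, max_lat, min_lon, max_lon)]


# A global 4 x 8 grid of 45-degree tiles standing in for one tiled granule.
index = [
    Tile(f"tile_{r}_{c}", -90 + 45 * r, -45 + 45 * r, -180 + 45 * c, -135 + 45 * c)
    for r in range(4) for c in range(8)
]

# Query box roughly covering part of the northeast Pacific.
selected = select_tiles(index, 30.0, 60.0, -160.0, -120.0)
print(f"{len(selected)} of {len(index)} tiles needed: {selected}")
```

In this toy grid only 4 of the 32 tiles overlap the query box, so the remaining data never has to be read or moved.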

OceanWorks enables advanced analytics that scale across the full spectrum of available computing hardware, from an ordinary laptop or desktop computer, to a multi-node, server-class cluster, to a private or public cloud. The architectural drivers are:

- both REST and Python API interfaces to the analytics;

- in-memory map-reduce style of computation;

- horizontal scaling, such that computational resources can be added or removed on demand;

- rapid access to data tiles that form natural spatio-temporal partition boundaries for parallelization;

- computations performed close to the data store to minimize network traffic; and

- container-based deployment.
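As a rough illustration of the in-memory map-reduce style applied to tiles, the sketch below computes an area-averaged time series. It is a single-process stand-in, not OceanWorks code: the tile dictionaries, the function names, and the cosine-of-latitude area weighting are assumptions made for the example. The map step reduces each tile to a small partial result, and the reduce step merges partials by timestamp, which is what lets tiles be processed independently and in parallel.

```python
import math
from functools import reduce


def map_tile(tile):
    """Map step: collapse one tile to (timestamp, weighted sum, total weight)."""
    weighted_sum = 0.0
    total_weight = 0.0
    for lat, row in zip(tile["lats"], tile["values"]):
        # Assumed area weight for a regular latitude/longitude grid.
        weight = math.cos(math.radians(lat))
        for value in row:
            if value is not None:  # None marks a missing cell (e.g., land or cloud)
                weighted_sum += weight * value
                total_weight += weight
    return tile["time"], weighted_sum, total_weight


def reduce_partials(acc, partial):
    """Reduce step: merge one partial result into the per-timestamp totals."""
    time, weighted_sum, total_weight = partial
    prev_sum, prev_weight = acc.get(time, (0.0, 0.0))
    acc[time] = (prev_sum + weighted_sum, prev_weight + total_weight)
    return acc


def area_averaged_time_series(tiles):
    """Return {timestamp: area-weighted mean} over all tiles in the query region."""
    totals = reduce(reduce_partials, map(map_tile, tiles), {})
    return {t: s / w for t, (s, w) in sorted(totals.items()) if w > 0}


# Hypothetical tiles: two cover the region on the first day, one on the second.
tiles = [
    {"time": "2015-01-01", "lats": [0.0], "values": [[20.0, 22.0]]},
    {"time": "2015-01-01", "lats": [60.0], "values": [[10.0, None]]},
    {"time": "2015-01-02", "lats": [0.0], "values": [[24.0, 26.0]]},
]
series = area_averaged_time_series(tiles)
print(series)
```

Because each partial result is just a pair of sums, the merge is associative, so the same functions could in principle be handed to a distributed map-reduce framework without changing the arithmetic.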

The REST and Python APIs enable OceanWorks to be easily plugged into a variety of web-based user interfaces, each tuned to particular domains. Calls to OceanWorks from Jupyter Notebook enable interactive, cloud-scale, science-grade analytics.

Built-in analytics are provided for the following algorithms:

1. Area-averaged time series to compute statistics (e.g., mean, minimum, maximum, standard deviation) of a single variable or two variables being compared; can optionally apply seasonal or low-pass filters to the result

2. Time-averaged map to produce a geospatial map that averages gridded measurements over time at each grid coordinate within a user-defined spatio-temporal bounding box

3. Correlation map to compute the correlation coefficient at each grid coordinate within a user-specified spatio-temporal bounding box for two identically gridded datasets

4. Climatological map to compute monthly climatology for a user-specified month and year range

5. Daily difference average to subtract a dataset from its climatology, then, for each timestamp, average the pixel-by-pixel differences within a user-specified spatio-temporal bounding box

6. In situ matching to discover in situ measurements that correspond to a gridded satellite measurement
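To make the arithmetic behind a few of these algorithms concrete, here is a minimal pure-Python sketch of a time-averaged map, a correlation map, and a daily difference average. The function names and the nested-list data layout (time, then row, then column) are assumptions for illustration only, and the sketch ignores missing values, area weighting, and the parallel tile processing that the platform relies on.

```python
import math
from statistics import fmean


def time_averaged_map(stack):
    """Average a time-ordered list of 2-D grids at each grid coordinate."""
    rows, cols = len(stack[0]), len(stack[0][0])
    return [[fmean(grid[i][j] for grid in stack) for j in range(cols)]
            for i in range(rows)]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length series."""
    mx, my = fmean(xs), fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx > 0 and sy > 0 else float("nan")


def correlation_map(stack_a, stack_b):
    """Correlate two identically gridded stacks at each grid coordinate."""
    rows, cols = len(stack_a[0]), len(stack_a[0][0])
    return [[pearson([g[i][j] for g in stack_a], [g[i][j] for g in stack_b])
             for j in range(cols)] for i in range(rows)]


def daily_difference_average(stack, months, climatology):
    """For each time step, average (value - monthly climatology) over the grid."""
    averages = []
    for grid, month in zip(stack, months):
        clim = climatology[month]
        diffs = [v - c for row, clim_row in zip(grid, clim)
                 for v, c in zip(row, clim_row)]
        averages.append(fmean(diffs))
    return averages


# Toy data: three time steps on a 1 x 2 grid.
sst = [[[1.0, 2.0]], [[2.0, 4.0]], [[3.0, 6.0]]]
other = [[[2.0, 6.0]], [[4.0, 4.0]], [[6.0, 2.0]]]
months = [1, 1, 2]
climatology = {1: [[1.0, 2.0]], 2: [[2.0, 5.0]]}

mean_map = time_averaged_map(sst)
corr_map = correlation_map(sst, other)
anomalies = daily_difference_average(sst, months, climatology)
print(mean_map, corr_map, anomalies)
```

In the toy data the first grid cell of the two stacks rises together and the second moves in opposite directions, so the correlation map comes out close to +1 and -1 respectively.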

Additionally, authenticated or trusted users may inject their own custom algorithm code for execution within OceanWorks. An API is provided to pass the custom code as either a single or multi-line string or as a Python file or module.

In situ-to-satellite matching

Comparison of measurements from different ocean observing systems is a frequently used method to assess the quality and accuracy of measurements. The matching (or collocation) and evaluation of in situ and satellite measurements is a particularly valuable method because the physical characteristics of the observing systems are so different, and therefore the errors related to instrumentation and sampling are not convoluted. The satellite community tends to use collocated in situ measurements to develop, improve, calibrate, and validate the integrity of retrieval algorithms (e.g., the 2003 work of Bourassa et al.[6]). The in situ observational community uses collocated satellite data to assess the quality of extreme or suspicious values and to add spatial context to the often sparse point values. In both of these research realms there are many more detailed use cases, e.g., near real-time decision support of field programs, planning exercises for future observing system deployments, and development of integrated in situ and satellite, globally gridded analysis products that are useful for stand-alone research and for model initialization and boundary conditions.

There are several major data challenges related to successful in situ and satellite data collocation research. Disparate data volume and variety is the primary challenge. Individual satellite collections are typically large in volume, have relatively homogeneous sampling, are derived from a single platform, are composed of a consistent set of parameters, and are represented as scan lines, swaths, or globally gridded fields. In situ observations typically bring the variety challenge into the problem. They are often replete with heterogeneous observing platforms (ships, drifting and stationary buoys, gliders, etc.), instrumentation types and sampling methods, highly varying sampling rates, and sparse spatio-temporal coverage over the global ocean. Another major challenge for collocation-based research is logistical. The archives of in situ and satellite data are often distributed at different centers, have a variety of access methods that need to be understood and applied, and have different data formats and quality control information. Additionally, these types of data can dynamically extend over time (adding data to the time series) or have completely new versions with critical data quality improvements. The OceanWorks match-up service[7] resolves these major challenges and many other secondary challenges.
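The core of a match-up can be sketched as a search for in situ observations that fall within a distance and time tolerance of each satellite measurement. The sketch below is an assumption-laden illustration rather than the OceanWorks match-up service: the record layout, the `match_up` function, and the 25 km and one-hour tolerances are hypothetical, and a brute-force double loop replaces the distributed, indexed search a production service would need.

```python
import math
from datetime import datetime, timedelta

EARTH_RADIUS_KM = 6371.0  # mean Earth radius used by the haversine formula


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def match_up(satellite, in_situ, max_km, max_dt):
    """Pair each satellite point with in situ points inside both tolerances."""
    matches = []
    for sat in satellite:
        for obs in in_situ:
            close_in_time = abs(sat["time"] - obs["time"]) <= max_dt
            if close_in_time and haversine_km(
                    sat["lat"], sat["lon"], obs["lat"], obs["lon"]) <= max_km:
                matches.append((sat["id"], obs["id"]))
    return matches


# Hypothetical records: one satellite pixel and three in situ observations.
satellite = [
    {"id": "sat-1", "lat": 30.0, "lon": -140.0, "time": datetime(2015, 6, 1, 12, 0)},
]
in_situ = [
    {"id": "buoy-A", "lat": 30.05, "lon": -140.05, "time": datetime(2015, 6, 1, 12, 30)},
    {"id": "ship-B", "lat": 30.0, "lon": -140.0, "time": datetime(2015, 6, 2, 12, 0)},
    {"id": "glider-C", "lat": 32.0, "lon": -140.0, "time": datetime(2015, 6, 1, 12, 10)},
]

matches = match_up(satellite, in_situ, max_km=25.0, max_dt=timedelta(hours=1))
print(matches)
```

Here only the buoy satisfies both tolerances: the ship observation is a day late and the glider is roughly 220 km away. Choosing the tolerances is a scientific decision that depends on the variable and platform, which is one reason a match-up service benefits from exposing them as parameters.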

References

1. Huang, T.; Armstrong, E.M.; Greguska, F.R. et al. (2018). "High Performance Open-Source Big Ocean Science Platform (OD51A-07)". 2018 Ocean Sciences Meeting. https://agu.confex.com/agu/os18/meetingapp.cgi/Paper/314599.

2. Liu, X.; Wang, M.; Shi, W. (2009). "A study of a Hurricane Katrina–induced phytoplankton bloom using satellite observations and model simulations". Journal of Geophysical Research Oceans 114 (C3): C03023. doi:10.1029/2008JC004934.

3. Cavole, L.M.; Demko, A.M.; Diner, R.E. et al. (2016). "Biological Impacts of the 2013–2015 Warm-Water Anomaly in the Northeast Pacific: Winners, Losers, and the Future". Oceanography 29 (2): 273–85. doi:10.5670/oceanog.2016.32.

4. Huang, T.; Armstrong, E.; Chang, G. et al. (2015). "Emerging Big Data Technologies for Geoscience - NEXUS: The Deep Data Platform". 2016 Federation of Earth Science Information Partners Winter Meeting. http://commons.esipfed.org/node/8810.

5. Jacob, J.C.; Greguska III, F.R.; Huang, T. et al. (2017). "Design Patterns to Achieve 300x Speedup for Oceanographic Analytics in the Cloud". 2017 American Geophysical Union Fall Meeting. https://ui.adsabs.harvard.edu/abs/2017AGUFMIN23F..06J/abstract.

6. Bourassa, M.A.; Legler, D.M.; O'Brien, J.J. et al. (2003). "SeaWinds validation with research vessels". Journal of Geophysical Research Oceans 108 (C2): 3019. doi:10.1029/2001JC001028.

7. Smith, S.R.; Elya, J.L.; Bourassa, M.A. et al. (2018). "Integrating the Distributed Oceanographic Match-Up Service into OceanWorks (OD44A-2773)". 2018 Ocean Sciences Meeting. https://agu.confex.com/agu/os18/meetingapp.cgi/Paper/311722.

Notes

This presentation is faithful to the original, with only a few minor changes to presentation, grammar, and punctuation for improved readability. In some cases important information was missing from the references, and that information was added. The singular footnote was turned into an inline link.