Template:Article of the week

Shawndouglas (Updated article of the week text)


<div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 | <div style="float: left; margin: 0.5em 0.9em 0.4em 0em;">[[File:Fig1 Čartolovni DigitalHealth2023 9.jpeg|240px]]</div> | ||

'''"[[Journal: | '''"[[Journal:Critical analysis of the impact of AI on the patient–physician relationship: A multi-stakeholder qualitative study|Critical analysis of the impact of AI on the patient–physician relationship: A multi-stakeholder qualitative study]]"''' | ||

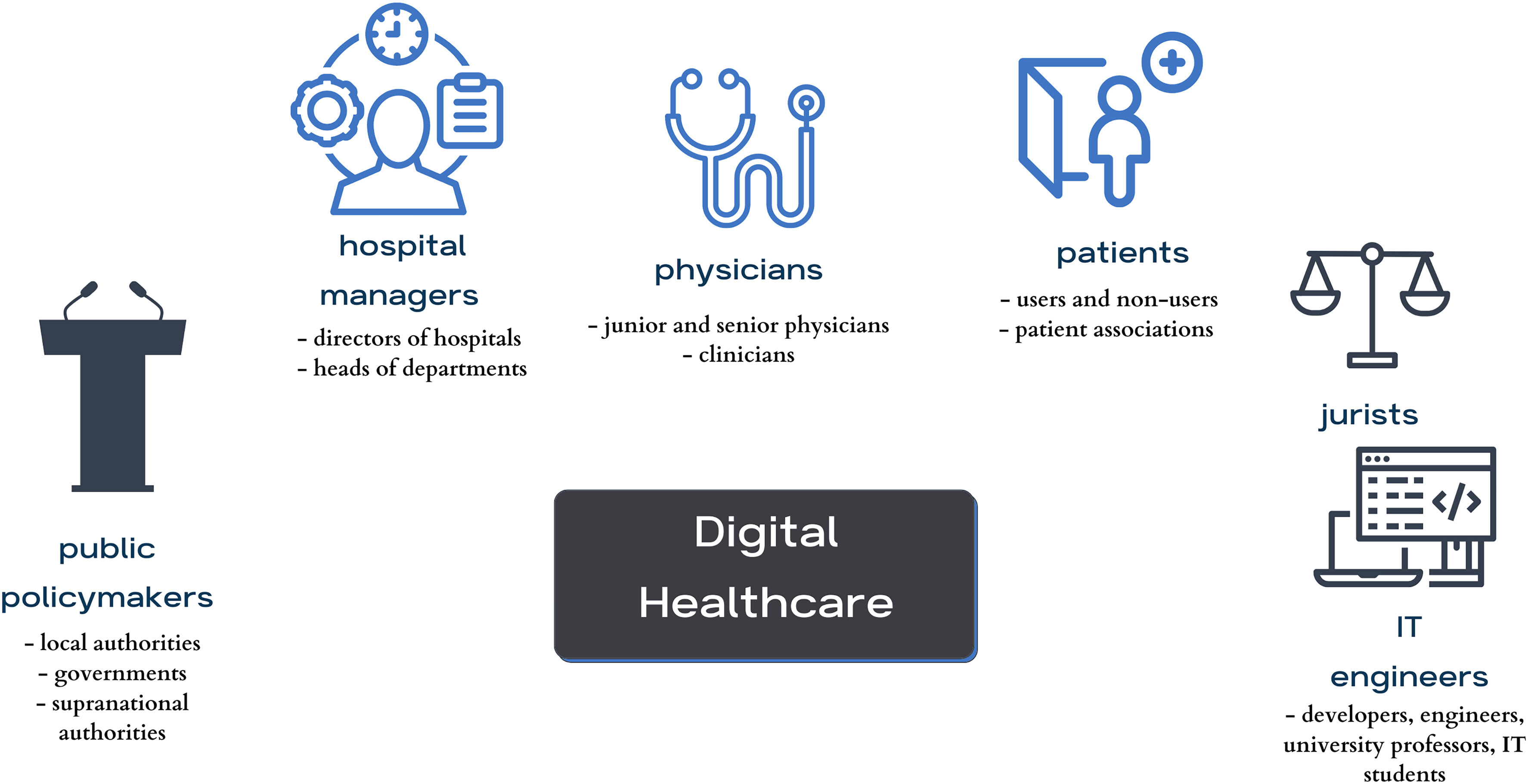

Revision as of 15:48, 26 May 2024

This qualitative study aims to present stakeholders' aspirations and expectations regarding the potential of artificial intelligence (AI) to transform the patient–physician relationship, together with a critical analysis of that potential, drawing on insights from multiple stakeholder groups. The study was conducted from June to December 2021, using an anticipatory ethics approach and the sociology of expectations as its theoretical frameworks. It focused mainly on three groups of stakeholders directly involved in the adoption of AI in medicine: physicians (n = 12), patients (n = 15), and healthcare managers (n = 11), for a total of 38 interviewees. Patients thus made up 40% of the interview sample (15/38), physicians 31% (12/38), and healthcare managers 29% (11/38) ... (Full article...)

Recently featured:

- Judgements of research co-created by generative AI: Experimental evidence

- Geochemical biodegraded oil classification using a machine learning approach

- Knowledge of internal quality control for laboratory tests among laboratory personnel working in a biochemistry department of a tertiary care center: A descriptive cross-sectional study