Difference between revisions of "Journal:Histopathology image classification: Highlighting the gap between manual analysis and AI automation"

Shawndouglas (talk | contribs) (Saving and adding more.) |

Shawndouglas (talk | contribs) (Saving and adding more.) |

||

| Line 108: | Line 108: | ||

{| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="100%" | {| class="wikitable" border="1" cellpadding="5" cellspacing="0" width="100%" | ||

|- | |- | ||

| colspan="3" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 2.''' The number of H&E images in the training and test sets used in the study. | | colspan="3" style="background-color:white; padding-left:10px; padding-right:10px;" |'''Table 2.''' The number of H&E images in the training and test sets used in the study. ADI - adipose; BACK - background; DEB - debris; LYM - lymphocytes; MUC - mucus; MUS - smooth muscle; NORM - normal colonic mucosa; STR - cancer-associated stroma; TUM - colorectal adenocarcinoma epithelium. | ||

|- | |- | ||

! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Classes | ! style="background-color:#e2e2e2; padding-left:10px; padding-right:10px;" |Classes | ||

| Line 155: | Line 155: | ||

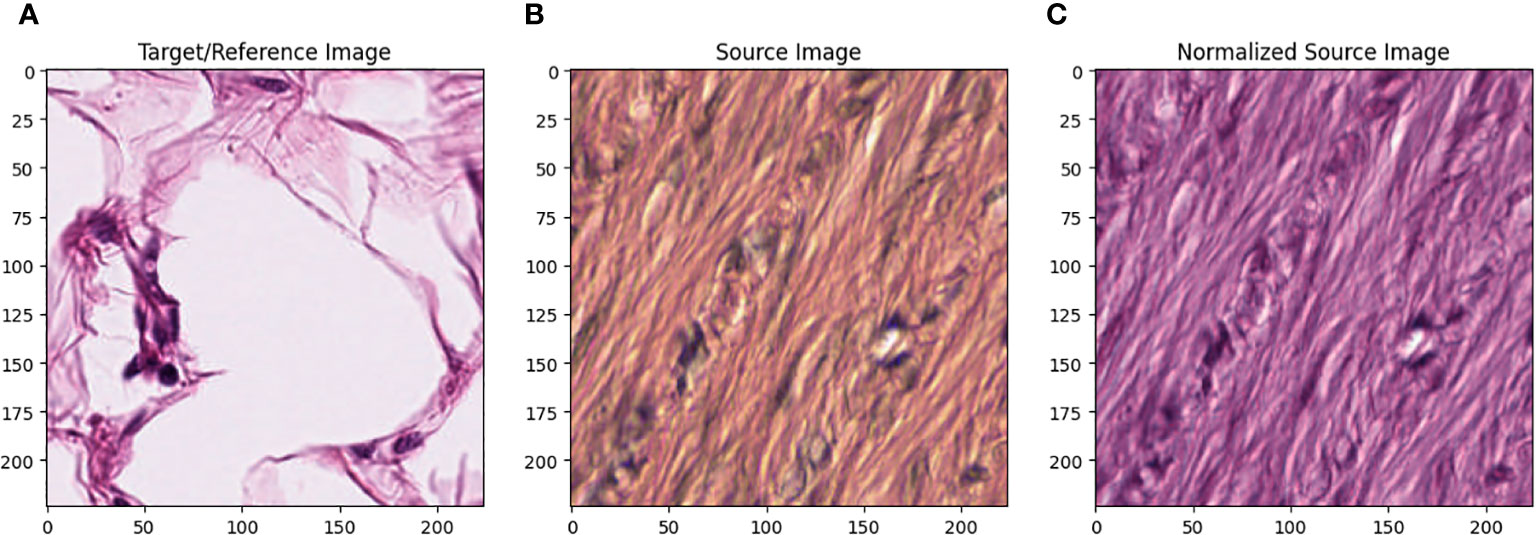

All images in the training set were normalized using the Macenko method. [22] Figure 1 describes the effect of Macenko normalization on sample images. The torchstain library [23], which supports a PyTorch-based approach, is available for color normalization of the image using the Macenko method. | All images in the training set were normalized using the Macenko method. [22] Figure 1 describes the effect of Macenko normalization on sample images. The torchstain library [23], which supports a PyTorch-based approach, is available for color normalization of the image using the Macenko method. | ||

[[File:Fig1 Dogan FrontOnc2024 13.jpg|1100px]] | |||

{{clear}} | |||

{| | |||

| style="vertical-align:top;" | | |||

{| border="0" cellpadding="5" cellspacing="0" width="1100px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 1.''' '''(A)''' Target/reference image, '''(B)''' source image, and '''(C)''' normalized source image.</blockquote> | |||

|- | |||

|} | |||

|} | |||

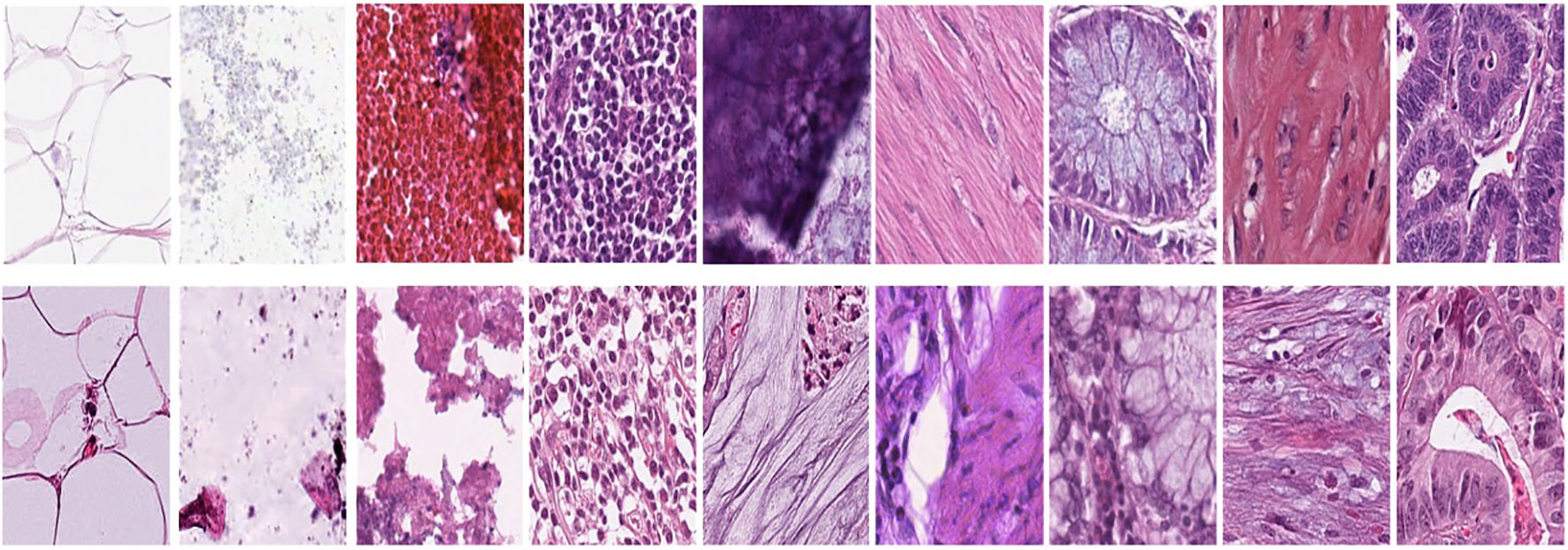

Figure 1A represents this method’s target/reference image, while Figure 1B represents the source images. Macenko normalization aims to make the color distribution of the source images compatible with the target image. In the example shown in the figure, the result of the normalization process applied on the source images (Figure 1B), taking the target image (Figure 1A) as a reference, allows us to obtain a more consistent and similar color profile by reducing color mismatches, as seen in Figure 1C. This will make obtaining more reliable results in machine learning or image analytics applications possible. Normalization was performed on the dataset on which the model was trained, and applying this normalization to the test set can increase the model’s generalization ability. However, the test set represents real-world setups and consists of images routinely obtained in the pathology department. Therefore, since these images wanted to train a clinically meaningful model with different color conditions, they were not applied to the normalization test set. In this way, we also investigated the effect of applying color normalization on classifying different types of tissues. The original data set—shown in the first row of Figure 2—from nine different tissue samples has substantially different color stains; however, the second row of Figure 2 shows their normalized versions. These images are transformed to the same average intensity level. | |||

[[File:Fig2 Dogan FrontOnc2024 13.jpg|1100px]] | |||

{{clear}} | |||

{| | |||

| style="vertical-align:top;" | | |||

{| border="0" cellpadding="5" cellspacing="0" width="1100px" | |||

|- | |||

| style="background-color:white; padding-left:10px; padding-right:10px;" |<blockquote>'''Figure 2.''' First row represents Adipose (ADI), background (BACK), debris (DEB), lymphocytes (LYM), mucus (MUC), smooth muscle (MUS), normal colonic mucosa (NORM), cancer-associated stroma (STR), and colorectal adenocarcinoma epithelium (TUM). The second row data set was obtained by applying normalization to the same tissue examples in the first row.</blockquote> | |||

|- | |||

|} | |||

|} | |||

===Manual analysis algorithm=== | |||

Revision as of 21:03, 20 May 2024

| Full article title | Histopathology image classification: Highlighting the gap between manual analysis and AI automation |

|---|---|

| Journal | Frontiers in Oncology |

| Author(s) | Doğan, Refika S.; Yılmaz, Bülent |

| Author affiliation(s) | Abdullah Gül University, Gulf University for Science and Technology |

| Primary contact | refikasultan dot dogan at agu dot edu dot tr |

| Editors | Pagador, J. Blas |

| Year published | 2024 |

| Volume and issue | 13 |

| Article # | 1325271 |

| DOI | 10.3389/fonc.2023.1325271 |

| ISSN | 2234-943X |

| Distribution license | Creative Commons Attribution 4.0 International |

| Website | https://www.frontiersin.org/journals/oncology/articles/10.3389/fonc.2023.1325271/full |

| Download | https://www.frontiersin.org/journals/oncology/articles/10.3389/fonc.2023.1325271/pdf (PDF) |

This article should be considered a work in progress and incomplete. Consider this article incomplete until this notice is removed.

Abstract

The field of histopathological image analysis has evolved significantly with the advent of digital pathology, leading to the development of automated models capable of classifying tissues and structures within diverse pathological images. Artificial intelligence (AI) algorithms, such as convolutional neural networks (CNNs), have shown remarkable capabilities in pathology image analysis tasks, including tumor identification, metastasis detection, and patient prognosis assessment. However, traditional manual analysis methods have generally shown low accuracy in diagnosing colorectal cancer using histopathological images.

This study investigates the use of AI in image classification and image analytics on histopathological images, alongside the histogram of oriented gradients method. The study develops an AI-based architecture for classifying histopathological images, aiming to achieve high performance with less complexity through specific parameters and layers. We examine the complex task of histopathological image classification, focusing explicitly on categorizing nine distinct tissue types. Our research used open-source, multi-centered image datasets comprising 100,000 non-overlapping images from 86 patients for training and 7,180 non-overlapping images from 50 patients for testing. The study compares two distinct approaches to automating tissue classification: training AI-based algorithms and building manual machine learning (ML) models. The research comprises two primary classification tasks: binary classification, which distinguishes between normal and tumor tissues, and multi-class classification, which covers nine tissue types: adipose, background, debris, stroma, lymphocytes, mucus, smooth muscle, normal colon mucosa, and tumor.

Our findings show that AI-based systems can achieve 0.91 and 0.97 accuracy in binary and multi-class classifications, respectively. In comparison, histogram of oriented gradients features combined with a random forest classifier achieved accuracy rates of 0.75 and 0.44 in binary and multi-class classifications, respectively. Our AI-based methods are generalizable, allowing them to be integrated into histopathology diagnostics procedures and improve diagnostic accuracy and efficiency. The CNN model outperforms existing ML techniques, demonstrating its potential to improve the precision and effectiveness of histopathology image analysis. This research emphasizes the importance of maintaining data consistency and applying normalization methods during the data preparation stage for analysis. It particularly highlights the potential of AI to assess histopathological images.

Keywords: data science, image processing, artificial intelligence, histopathology images, colon cancer

Introduction

Histopathological image analysis is a fundamental method for diagnosing and screening cancer, especially in disorders affecting the digestive system. Pathologists physically and visually examine complex images, which often have resolutions of up to 100,000 x 100,000 pixels. Pathological image analysis has long depended on this manual approach, which is known to be time-consuming and labor-intensive. New approaches are needed to increase the efficiency and accuracy of pathological image analysis. Digital pathology has seen significant progress to date. Digitization of high-resolution histopathology images allows comprehensive analysis using complex computational methods. As a result, interest has grown significantly in medical image analysis for creating automatic models that can precisely categorize relevant tissues and structures in various clinical images. Early research in this area focused on predicting the malignancy of colon lesions and distinguishing between malignant and normal tissue by extracting features from microscopic images. Esgiar et al. [1] analyzed 44 healthy and 58 cancerous features obtained from microscope images; their analysis, based on occurrence matrices, reached a rate of 90 percent. These first steps form the basis for more complex procedures that integrate rapid image processing techniques and the functions of visualization software. Digital pathology has recently emerged as a widespread diagnostic tool, primarily through artificial intelligence (AI) algorithms. [2, 3] It has demonstrated impressive capability in processing pathology images in an advanced manner. [4, 5] These advanced techniques are used regularly for tumor identification, metastasis detection, and patient prognosis assessment. They aim to automate the segmentation of pathological images, generate predictions, and draw relevant observations from this complex visual data. [6, 7]

Convolutional neural networks (CNNs) have received significant attention among the various machine learning (ML) techniques in AI research. Following the application of deep learning in earlier biological research, ML has been widely accepted and used. [8–10] CNNs distinguish themselves from other ML methods through their high accuracy, generalization capacity, and computational efficiency. Each patient’s histopathology images, taken from hematoxylin-eosin (H&E) stained tissue slides, contain important quantitative data. Notably, Kather et al. [11] explored the potential of CNN-based approaches to predict disease progression directly from the available H&E images. In a retrospective study, their findings underscored the ability of CNNs to assess the human tumor microenvironment and predict outcomes based on the analysis of histopathological images. This result shows the potential of such AI-based methodologies in medical image analysis, offering new avenues for efficient and objective diagnostic and prognostic assessments.

On the other hand, manual analysis methods for classifying H&E images and predicting disease outcomes are also available in the literature. Compared to AI-based algorithms, traditional manual analysis generally performs worse. The literature highlights that traditional methods such as local binary pattern (LBP) and Haralick features perform poorly. [12, 13] These studies emphasized that deep learning is more effective than traditional ML methods in diagnosing colorectal cancer from histopathology images. The reported accuracy is 0.76 for LBP and 0.75 for Haralick. Because these two methods showed low accuracy in the literature, we adopted a different approach and carried out this study with the histogram of oriented gradients (HoG) method. Unlike other studies in the literature, this study performs the analysis using HoG features for the first time. This choice offers an alternative perspective to both traditional methods and deep learning studies, and the results obtained with HoG features on a specific dataset make a new contribution to the literature.
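To make the idea behind HoG features concrete, the following is a minimal sketch in plain Python, not the implementation used in the study. The function name `hog_descriptor`, the parameters `cell_size` and `n_bins`, and the toy image are all hypothetical, and the sketch simplifies the usual formulation by using hard bin assignment and a single global L2 normalization rather than overlapping block normalization.

```python
import math


def hog_descriptor(image, cell_size=4, n_bins=9):
    """Simplified HoG for a 2-D grayscale image given as a list of rows.

    Simplifications (assumptions of this sketch): hard bin assignment and a
    single global L2 normalization instead of overlapping block normalization.
    """
    height, width = len(image), len(image[0])
    cells_y, cells_x = height // cell_size, width // cell_size
    bin_width = 180.0 / n_bins
    hist = [[[0.0] * n_bins for _ in range(cells_x)] for _ in range(cells_y)]

    for y in range(cells_y * cell_size):
        for x in range(cells_x * cell_size):
            # Centered differences, clamping indices at the image border.
            gx = image[y][min(x + 1, width - 1)] - image[y][max(x - 1, 0)]
            gy = image[min(y + 1, height - 1)][x] - image[max(y - 1, 0)][x]
            magnitude = math.hypot(gx, gy)
            # Unsigned orientation in [0, 180) degrees.
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            b = min(int(angle // bin_width), n_bins - 1)
            hist[y // cell_size][x // cell_size][b] += magnitude

    # Flatten the cell histograms and L2-normalize the whole descriptor.
    flat = [v for row in hist for cell in row for v in cell]
    norm = math.sqrt(sum(v * v for v in flat)) or 1.0
    return [v / norm for v in flat]


# Toy 8x8 image with a vertical edge: all gradient energy is horizontal.
toy = [[0] * 4 + [255] * 4 for _ in range(8)]
features = hog_descriptor(toy)
print(len(features))  # 2 x 2 cells x 9 bins = 36 values
```

In a manual pipeline of the kind discussed here, a fixed-length vector like `features` would be computed per image and passed to a conventional classifier; the cell size and bin count shown are illustrative defaults only.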

Table 1 provides an overview of manual analysis and AI-based studies from various literature sources. Jiang [14] achieved a high accuracy of 0.89 using InceptionV3 Multi-Scale Gradients and generative adversarial network (GAN) for classifying colorectal cancer histopathological images. Kather et al. [6] reported an accuracy of 0.87 using texture-based approaches, decision trees, and support vector machines (SVMs) to analyze multiple tissue classes in colorectal cancer histology. Other studies include Popovici et al. [15], with 0.84 using VGG-f (MatConvNet library) for the prediction of molecular subtypes; Xu [16], with 0.84 using a CNN for the classification of stromal and epithelial regions in histopathology images; and Mossotto [17], with 0.83 using an optimized SVM for the classification of inflammatory bowel disease. Tsai [19] reported an accuracy of 0.80 with a CNN for detecting pneumonia in chest X-rays. These results show that AI-based classification studies generally achieve high accuracy. These studies primarily concern AI methods for analyzing histopathological images, with a particular focus on CNNs. These networks have demonstrated high precision across a wide range of medical applications, including cancer diagnosis and screening. CNNs provide substantial benefits over conventional approaches, owing to their ability to handle and evaluate intricate histological data. They also excel at detecting patterns, textures, and structures in high-resolution images, thereby complementing or, in certain instances, even substituting for the manual review performed by pathologists. The promise of these AI-based techniques to change the field of medical image processing is well acknowledged.


Materials and methods

Dataset

Our research was based on two separate datasets, selected and prepared for use as our training and testing sets. The training dataset (NCT-CRC-HE-100K) was compiled from the pathology archives of the NCT Biobank (National Center for Tumor Diseases, Germany) and includes records from 86 patients. The testing dataset (NCT-VAL-HE-7K) was generated by the University Medical Center Mannheim (UMM), Germany [11, 21], and includes data from 50 patients. Both datasets are open source, and their images were derived from formalin-fixed paraffin-embedded colorectal cancer tissues. The training dataset consists of 100,000 high-resolution H&E (hematoxylin and eosin) images.

The testing dataset consists of 7,180 non-overlapping sub-images. Each of these sub-images measures 0.5 microns in thickness and has dimensions of 224x224 pixels. The dataset includes nine distinct tissue textures, covering a broad spectrum from adipose tissue to lymphocytes, mucus, and cancer epithelial cells. Table 2 presents the distribution of images within the test and training datasets by tissue class. For instance, the training dataset contains 14,317 samples in the colorectal cancer tissue class, while the testing dataset contains 1,233 samples for this class. These statistics show the data distribution and the relative size of each class within the study, and they form the foundation for our subsequent analyses and model development.

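Table 2. The number of H&E images in the training and test sets used in the study. ADI - adipose; BACK - background; DEB - debris; LYM - lymphocytes; MUC - mucus; MUS - smooth muscle; NORM - normal colonic mucosa; STR - cancer-associated stroma; TUM - colorectal adenocarcinoma epithelium.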
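As a quick check on the relative class sizes, the counts quoted in the text for the colorectal cancer tissue class can be turned into shares of each dataset. This is a small worked example using only the numbers stated above; the helper name `share` is hypothetical.

```python
# Counts quoted in the text above.
TRAIN_TOTAL, TEST_TOTAL = 100_000, 7_180
TUM_TRAIN, TUM_TEST = 14_317, 1_233


def share(count, total):
    """Return a class's share of a dataset as a percentage."""
    return 100.0 * count / total


print(f"TUM share of training set: {share(TUM_TRAIN, TRAIN_TOTAL):.1f}%")  # 14.3%
print(f"TUM share of test set: {share(TUM_TEST, TEST_TOTAL):.1f}%")        # 17.2%
```

With nine classes, a perfectly balanced split would give each class about 11.1 percent, so this class is somewhat over-represented in both sets.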

All images in the training set were normalized using the Macenko method. [22] Figure 1 illustrates the effect of Macenko normalization on sample images. The torchstain library [23], which supports a PyTorch-based approach, is available for color normalization of images using the Macenko method.

Figure 1. (A) Target/reference image, (B) source image, and (C) normalized source image.

Figure 1A represents this method’s target/reference image, while Figure 1B represents the source images. Macenko normalization aims to make the color distribution of the source images compatible with that of the target image. In the example shown in the figure, normalizing the source images (Figure 1B) with the target image (Figure 1A) as a reference yields a more consistent color profile by reducing color mismatches, as seen in Figure 1C. This makes it possible to obtain more reliable results in machine learning or image analytics applications. Normalization was performed on the dataset on which the model was trained, and applying this normalization to the test set could increase the model’s generalization ability. However, the test set represents real-world setups and consists of images routinely obtained in the pathology department. Therefore, because we wanted to train a clinically meaningful model that handles different color conditions, normalization was not applied to the test set. In this way, we also investigated the effect of color normalization on classifying different types of tissues. The original images from nine different tissue samples, shown in the first row of Figure 2, have substantially different color stains, while the second row of Figure 2 shows their normalized versions. These images are transformed to the same average intensity level.

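Figure 2. First row represents adipose (ADI), background (BACK), debris (DEB), lymphocytes (LYM), mucus (MUC), smooth muscle (MUS), normal colonic mucosa (NORM), cancer-associated stroma (STR), and colorectal adenocarcinoma epithelium (TUM). The second row data set was obtained by applying normalization to the same tissue examples in the first row.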
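Macenko normalization operates on optical density rather than raw RGB values. The sketch below covers only the first steps of that approach, namely the conversion to optical density and the removal of near-white background pixels; the later steps (estimating stain vectors and rescaling stain concentrations against the reference image) are omitted. The background intensity `IO`, the threshold `beta`, the function names, and the sample pixel values are assumptions chosen for illustration, and this is not the torchstain implementation.

```python
import math

IO = 240  # assumed transmitted-light (background) intensity; illustrative only


def rgb_to_od(pixel, io=IO):
    """Convert an 8-bit RGB pixel to optical density (Beer-Lambert law)."""
    # The +1 offset avoids log(0) for fully dark channels.
    return tuple(-math.log((c + 1) / io) for c in pixel)


def od_to_rgb(od, io=IO):
    """Invert rgb_to_od, clipping to the valid 8-bit range."""
    return tuple(min(255, max(0, round(io * math.exp(-d) - 1))) for d in od)


def is_tissue(pixel, beta=0.15, io=IO):
    """Keep pixels whose optical density reaches a threshold in every channel.

    beta is an assumed threshold; near-white background pixels fall below it.
    """
    return all(d >= beta for d in rgb_to_od(pixel, io))


background = (238, 238, 238)  # near-white glass
stained = (150, 60, 140)      # hypothetical purple-pink H&E pixel

print(is_tissue(background), is_tissue(stained))
print(od_to_rgb(rgb_to_od(stained)))
```

Working in optical density is what lets stain contributions be treated as approximately additive, which is why the stain-separation steps that follow in the full method are linear operations.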

Manual analysis algorithm

References

Notes

This presentation is faithful to the original, with only a few minor changes to presentation, though grammar and word usage was substantially updated for improved readability. In some cases important information was missing from the references, and that information was added.